Writing a blog is different than writing a referee’s report. I diarize certain points for the blog as I notice them. The function of these notes is to be topical and somewhat interesting. Martin Juckes has been trying to answer some questions and, to avoid strewing comments over multiple threads, I’d like to use this thread to deal with all further specific comments about replicating Juckes et al 2006. People can still comment on Juckes et al in a general way in other threads, but if you post on this thread, it had better be a precise question or comment or I’m going to delete it, even if it’s something that I’d otherwise let pass. OK?

I’ll try to add in a list of outstanding issues as I’ve noticed them to date. I’ll continue to diarize some issues as I get to them.

Note: In order to reduce noise levels, I am going to act as a type of chairman of this thread. If you wish to comment on this thread, please do so at the thread Your Comments on Juckes Omnibus. If there’s something that you post up that I feel should be transferred here for Juckes to reply to, I’ll do so. We ourselves can chat about this thread over there, but let’s leave this thread for Martin Juckes to respond to, if he so chooses.

List in Progress:

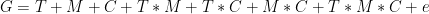

1. Calculation of SI Figure 1. How does one get from the mbh, mbhx, std and cen series to what’s illustrated in SI Figure 1? This is resolved. As discussed elsewhere, Juckes used an unreported re-scaling procedure using rms instead of standard deviation. This raises other questions which will be dealt with in turn.

2. Signal "enhancement" by removing Sargasso Sea from proxy roster

3. Signal "enhancement" by removing Indigirka from proxy roster.

4. Continued presence of false statement in online submission about code availability.

5. Removal of Tsoulmajavri series

…. to be continued as I collate other points

220 Comments

Here’s a carryforward from http://www.climateaudit.org/?p=893

#18. Martin, the reason why I’ve tried to get code from people is simply because it’s impossible to read people’s minds. Here are more missing details – this is on just one diagram and this still doesn’t work.

Here’s your SI Figure 1.

Here’s what I’ve got based on what you’ve said so far, the best that I can understand it. First, I divided all series by the standard deviation during the period 1400-1980; then I centered all series on their mean in the period 1856-1980.
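The two-step rescaling described here can be sketched as follows. This is an illustration only, written in Python rather than the R used for the scripts discussed in this thread. The function name `scale_then_center`, the toy series, and the use of the sample standard deviation (n-1 denominator) are all assumptions, not anything taken from the archived scripts.

```python
from statistics import mean, stdev

def scale_then_center(years, values,
                      scale_period=(1400, 1980),
                      center_period=(1856, 1980)):
    """Divide a series by its SD over scale_period, then subtract
    its mean over center_period (hypothetical helper)."""
    lo, hi = scale_period
    # Sample SD (n-1 denominator) over the scaling period: an assumption.
    sd = stdev(v for y, v in zip(years, values) if lo <= y <= hi)
    scaled = [v / sd for v in values]
    lo, hi = center_period
    center = mean(s for y, s in zip(years, scaled) if lo <= y <= hi)
    return [s - center for s in scaled]

# Toy series (not real proxy data): a weak trend plus alternating noise.
years = list(range(1400, 1981))
values = [0.001 * (y - 1400) + (0.3 if y % 2 else -0.3) for y in years]
rescaled = scale_then_center(years, values)
```

Because subtracting a constant does not change the standard deviation, the order of the two steps does not affect the shape of the result, only its offset.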

I’ve posted up my script for this diagram here – scroll to the end. Feel free to annotate the script to show how you got your diagram. The real mystery is that none of the series in your diagram have a similar shape to the archived "CEN" series – and all the scaling and centering in the world isn’t going to change this.

Here’s my guess as to what you’ve done and it’s only a guess:

1. What you’ve labeled as the CEN series looks like it’s actually the STD series.

2. What you’ve labeled as the STD series looks like the MBH series.

3. You don’t actually show a version of the CEN series, which lacks an uptick of the type shown by all the series in Figure 1. What you’ve labeled as the mbh/archived series also looks like it might be the MBH series scaled differently than in (2), but this is just a guess.

#Comment by Martin Juckes

Try normalising by the rms.

posted 6 November 2006 @ 3:12 pm

#

Comment by Steve McIntyre

#36. Martin, this is getting very annoying. I said that I divided by the standard deviation over the period 1400-1980. You said to try “normalising by the rms”. I am baffled as to how this makes any difference. Wikipedia for example says: “The standard deviation is the root mean square (RMS) deviation of the values from their arithmetic mean.” Dividing by the standard deviation is, as far as I’m concerned, “normalising by the rms”.

Also this has nothing to do with the seeming mis-labeling of the series in your graphic. None of the series in your graphic has a closing endpoint lower than the peak around 1600 in some series. While in the CEN series, the closing point is below the peak around 1600. This feature is not going to change with scaling and centering. Similarly if you compare the appearance of the series, the statements below seem to be correct. Please comment.

Here’s my guess as to what you’ve done and it’s only a guess:

1. What you’ve labeled as the CEN series looks like it’s actually the STD series.

2. What you’ve labeled as the STD series looks like the MBH series.

3. You don’t actually show a version of the CEN series, which lacks an uptick of the type shown by all the series in Figure 1. What you’ve labeled as the mbh/archived series also looks like it might be the MBH series scaled differently than in (2), but this is just a guess.

posted 6 November 2006 @ 3:33 pm
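One reading under which the two instructions differ: if "rms" means the root mean square of the values themselves, rather than of their deviations from the mean, then it equals the standard deviation only for a series whose mean is zero over the scaling period. The sketch below assumes that reading; the thread does not spell out the exact procedure used for SI Figure 1, and the toy numbers are invented.

```python
from math import sqrt
from statistics import mean, pstdev

def rms(values):
    """Root mean square of the raw values (no mean removed)."""
    return sqrt(mean(v * v for v in values))

# Toy series with a non-zero mean (not real proxy data).
series = [1.0, 2.0, 3.0, 4.0]
sd = pstdev(series)          # population SD: RMS deviation from the mean
raw_rms = rms(series)        # RMS of the values themselves

# The two are linked by rms^2 = sd^2 + mean^2, so they agree
# only when the mean is zero.
centered = [v - mean(series) for v in series]
centered_rms = rms(centered)
```

For a series such as a tree ring chronology centered near 1, the mean term dominates, so dividing by this kind of rms gives a very different scale from dividing by the standard deviation.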

Martin’s statement is, of course, false. Here’s a plot of the Sargasso Sea series taken from Moberg’s Supplementary Information.

The original data is at ftp://ftp.ncdc.noaa.gov/pub/data/paleo/contributions_by_author/keigwin1996/fig4bdata and to show this more clearly, here is a re-plot:

… on another thread I wrote

Dear Martin,

Also, please quit saying you’ve answered questions when you haven’t. I said:

You said:

NO. IT. IS. NOT. ANSWERED. ELSEWHERE. PLEASE. ANSWER. THE. QUESTION. ABOUT. INDIGIRKA.

I really hate to be petty about this, but handwaving and saying “I answered that elsewhere” WHEN YOU HAVEN’T just doesn’t cut it.

What about Indigirka? What about Sargasso? Both of them fit your criteria, and you didn’t use them. Why not?

w.

(crossposted from Team Euro Code)

Indigirka: Indigirka is a Moberg series whose use in a 10-series composite with the Sargasso Sea yields an elevated MWP-modern relationship, as pointed out here

Willis inquired about this as follows:

I then provided, for reference, the plot of the Indigirka series from the Moberg SI here:

together with a re-plot from digital data obtained from Mitrie coauthor Moberg here.

In response to Willis’ query, Juckes stated cryptically:

The Moberg Corrigendum is familiar to readers of Climateaudit, as the complaint originating the Corrigendum and various responses have already been discussed here and elsewhere at CA. The Corrigendum itself stated:

OK, Martin, your reason for not using Indigirka is not substantive. A new millennial series should be welcomed and is much more useful for verification than re-cycling the same snooped series.

I’ve transferred some comments over to another thread.

In order to reduce noise levels, I am going to act as a type of chairman of this thread. If you wish to comment about matters on this thread, please do so at the thread Your Comments on Juckes Omnibus. If there’s something that you post up that I feel should be transferred here for Juckes to reply to, I’ll do so. We ourselves can chat about this thread over there, but let’s leave this thread for Martin Juckes to respond to, if he so chooses.

Re #3: The Sargasso Sea data in ftp://ftp.ncdc.noaa.gov/pub/data/paleo/contributions_by_author/keigwin1996/fig4bdata extends to “25 Calndr YBp”. It is the convention in paleoclimate studies to specify dates relative to 1950, so 25 Calndr YBp equates to 1925. As noted by another contributor, this represents the centre of a 50 year bin, so the latest data is the 1900-1950 bin. The convention is, unfortunately, not stated in the data file. I have checked with Lloyd Keigwin and he confirms that 1925 is the appropriate end date, but with the caveat that there is considerable uncertainty in the dating because of possible contamination by atom bomb test debris (the “1950” sample has foraminifera from a range of years, mainly before the tests but possibly with some material mixed in from later).
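The date arithmetic in this paragraph is simple enough to write down. A minimal sketch, assuming the "before present" reference year of 1950 and the 50-year bin width stated above; the function names are hypothetical.

```python
BP_REFERENCE_YEAR = 1950  # "present" in the BP convention described above

def bp_to_calendar_year(years_bp):
    """Convert an age in years BP to a calendar year AD."""
    return BP_REFERENCE_YEAR - years_bp

def bin_edges(center_year, bin_width):
    """Return (start, end) of a bin given its center and width."""
    half = bin_width / 2
    return (center_year - half, center_year + half)

# The latest Sargasso Sea sample is labeled 25 years BP and is
# described as the center of a 50-year bin.
latest_center = bp_to_calendar_year(25)
latest_bin = bin_edges(latest_center, 50)
```

Here `latest_center` is 1925 and `latest_bin` is the 1900-1950 interval, matching the end date given in the comment.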

Concerning the Indigirka data, the key phrase is “they are unpublished data”.

Concerning the figure 1 of the supplement: figure 2 of the supplement is as figure 1 but allowing for padding of data as used by Mann et al. and by McIntyre and McKitrick emulations of Mann et al. Figure 1 of the supplement follows the convention of the Juckes et al. manuscript in omitting series which do not cover the full period. My apologies for the incompleteness of the supplementary data which does not fully specify the variable naming conventions of the PCs stored there. It looks as though you are plotting the data of our supplement figure 2.

Concerning the data used in your 2005 Energy and Environment paper, the file you directed me to (proxy.MBH.txt) does not load into R. Given my inexperience with R there could be many reasons. Could you just clarify: is this the file you used for the reconstructions in your 2005 Energy and Environment paper, or are there modifications which need to be made?

Thanks for pointing out the errors in http://home.badc.rl.ac.uk/mjuckes/mitrie_files/pdfs/comment_ee_figure2.pdf, which have now been corrected.

#7. It is an ascii file, which you can read using read.table. I can’t load R “tab” objects to either website due to website protocols that I can’t control.

Martin, when you say in respect of Indigirka, the “key phrase” is “unpublished data”, I have difficulty following your reasoning. Could you provide an objective definition of what you and coauthor Moberg mean by “unpublished data”? Moberg stated that the series was discussed in the literature. Moberg et al 2005 cited the following article:

Sidorova, O. V., Naurzbaev, M. M. Response of Larix cajanderi to climatic changes at the Upper Timberline and in the Indigirka River Valley [in Russian]. Lesovedenie 2, 73—75 (2002).

Do you merely mean that the data is “unarchived”? I’m sure that you don’t mean that – otherwise, you’d be losing a number of other series that you are relying on.

You can’t mean that the data is unavailable. I have a copy of the data and the sensitivity using the Indigirka data is readily done – indeed, I’ve already done it.

So help us along a little – what is your definition of “published data”?

Martin, in your CVM calculations, as far as I can tell, you divide series by their standard deviations over the period 1000-1980. Do you re-scale by dividing by rms in other circumstances other than SI Figures 1 and 2? If so, on what other occasions? Why do you divide by rms in SI Figures 1 and 2 if you don’t divide by rms in the CVM calculations?
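For readers following the scaling question, here is a rough sketch of a composite-plus-variance-matching (CVM) calculation as that term is generally understood: standardize each proxy, average them, then rescale the composite so that its mean and variance match the instrumental series over the calibration period. This is not Juckes' code. The function name, the population standard deviation, the full-length standardization period, and the toy numbers are all assumptions.

```python
from statistics import mean, pstdev

def cvm(proxies, temperature, calib):
    """Composite of standardized proxies, rescaled to match the mean and
    SD of `temperature` over the slice `calib` (hypothetical helper).
    Proxies are assumed to be oriented positively with temperature."""
    standardized = []
    for p in proxies:
        m, s = mean(p), pstdev(p)   # standardize over the full series length
        standardized.append([(v - m) / s for v in p])
    composite = [mean(col) for col in zip(*standardized)]
    comp_cal = composite[calib]
    scale = pstdev(temperature) / pstdev(comp_cal)   # variance matching
    offset = mean(temperature) - scale * mean(comp_cal)
    return [scale * c + offset for c in composite]

# Toy data (invented): two proxies of length 6, calibration on the last 3 points.
proxies = [[0.1, 0.4, 0.2, 0.8, 0.9, 1.2],
           [1.0, 1.5, 1.1, 2.0, 2.4, 2.6]]
temperature = [-0.2, 0.0, 0.3]
calib = slice(3, 6)
recon = cvm(proxies, temperature, calib)
```

Swapping the per-proxy divisor from the standard deviation to an rms of the raw values changes the relative weight of each proxy in the composite, which is why the choice of divisor matters.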

Martin, I am able to accurately replicate the following two MBH CVM versions from proxy versions archived at mitrie_proxies_v01.nc

7 mr_mbh_1000_cvm_nht_01.02.001

8 mr_mbh_1000_cvm_nht_01.02.001_ff

I am running into various degrees of discrepancy in trying to replicate the following versions, which I presume pertains to locating the precise PC series that you used in each case. Would you please identify the references of the PC series used in each of these reconstructions:

9 mr_mbh_1000_cvm_nht_01.02.001_pc

13 mr_mbhx_1000_cvm_nht_01.02.001_cen

14 mr_mbhx_1000_cvm_nht_01.02.001_mbh

15 mr_mbhx_1000_cvm_nht_01.02.001_mbhx

16 mr_mbhx_1000_cvm_nht_01.02.001_std

Steve

When the questions get too difficult Martin will stop replying. They always do.

Martin Juckes writes:

Hello Stephen, sorry to be slow to answer some questions, I didn’t realise your commitment to keep things on one thread would evaporate so quickly.

First, to return to a number of issues that have been raised over the past few days:

Could you provide me with:

c:/climate/data/mann/pc.tab

c:/climate/data/mann/eof.ordermann.tab

c:/climate/data/mann/tpca-eigenvals.tab

c:/climate/data/mann/weights1.tab

c:/climate/data/mann/gridpoints.tab

c:/climate/data/mann/gridcell.stdev.ordermann.tab

c:/climate/data/mann/mask.edited.tab

c:/climate/data/mann/nhmann.tab

c:/climate/data/mann/treepc.byregion.count.SI.2004.txt

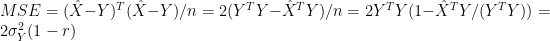

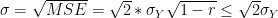

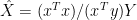

c:/climate/data/mann/treepc.byregion.directory.SI.2004.txt

These are all required by the code for your Energy and Environment (2005) paper, which you kindly provided last week.

Secondly: I notice that on page 888 you are having trouble interpreting mr_mbh_1000_cvm_nht_01.02.001_pc: This figure could be interpreted as a coding error or as an illustration of the pitfalls of combining the use of proxy PCs and the composite approach. The problem is the arbitrary sign of the PCs. If this is not adjusted, the composite is likely to be meaningless because of the arbitrariness; if it is adjusted, estimates of significance can be compromised. Some such reconstructions (using adjusted PCs) are included in the discussion for comparison purposes, but for the main conclusions we avoid proxy PCs so as to avoid this problem. The curve you show on page 888 has an unadjusted PC, so it is basically meaningless.
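The sign ambiguity can be made concrete. A principal component and its negative are equally valid, so before a PC goes into a composite it has to be oriented somehow. One possible rule, shown here purely as an assumption since the comment does not say how the adjusted PCs were oriented, is to flip the PC when it correlates negatively with a target series. All names and numbers below are hypothetical.

```python
from statistics import mean

def correlation(x, y):
    """Pearson correlation of two equal-length series."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

def orient(pc, target):
    """Return pc, flipped if it correlates negatively with target."""
    return list(pc) if correlation(pc, target) >= 0 else [-v for v in pc]

# Toy data (invented): the same PC with both possible signs.
pc = [0.5, 0.2, -0.1, -0.6]
pc_flipped = [-v for v in pc]
target = [0.1, 0.3, 0.6, 0.9]

oriented_a = orient(pc, target)
oriented_b = orient(pc_flipped, target)
```

Both inputs end up with the same orientation, which removes the arbitrariness. Note that a rule like this uses the target data to choose the sign, which is one way significance estimates could be affected, as the comment says.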

Thirdly: you ask on page 894 about which proxies are used for each reconstruction: in the netcdf file containing the reconstructions, each reconstruction has an attribute “proxy_list” which lists the proxies.

Fourthly: Are you suggesting that any data which is in your possession should be considered as published? It is an interesting idea, and would certainly cut down all the hassle of peer review etc. But seriously, if you can stop posting extended discussion of your problems coming to grips with trivia long enough to say anything serious, do you have any authoritative information about the Indigirka data in your possession which would justify its use as a proxy? If so I think it would be really useful if you could write it up and get it published.

Fifthly: Concerning your efforts to reproduce figure 1 of the supplement: your ability to create endless confusion out of a simple problem is amazing. The scaling of the curves in supplement figure 1 was not described in great detail because it’s irrelevant, the scaling used in the composites is described because it’s relevant.

posted 8 November 2006 @ 11:53 am

#13. You complained about wanting to comment on only one thread and I set up one to accommodate.

1) My original intent in providing code was to show calculations. That’s what I usually use other people’s code for. I’ve read some of Mann’s Fortran code (e.g. it shows that he calculated the verification r2 statistic), but I haven’t attempted to run it. It wouldn’t run anyway because it calls to various directories that are inconsistent with published data. I hadn’t thought originally in terms of turnkey code, although I have no particular problem with that and am prepared to export things from my machine to accommodate that. The R-objects mentioned contain Mann’s information: pc – collation of Mann’s PCs; eof – Mann’s EOFs; tpca-eigenvals – eigenvalues; weights1 – proxy weights; gridpoints – the 1082 gridcells used; gridcell.stdev – standard deviations of gridcell temperatures; mask.edited – the sparse subset; nhmann – Mann’s spliced reconstruction; treepc count and directory – the number of PCs from each network used in each calculation step, and likewise the directory. I think that I’ve got a collation script online and will check. BTW, as you know, the code for the E and E paper was not archived “last week”, but in March 2006.

2)

Again, a simple question one more time: which PC versions did you use in this series? Can you list the PC series from the netCDF directory please.

3) I consulted the list of proxies in the netCDF file, but you are unresponsive to the question. The proxy list simply lists PC series but does not say which PC version is used. There are mbh, mbhx, mbhl, std, cen; sometimes flipped, sometimes not. I just want to know which series was used (and if you re-oriented the series, which series were re-oriented). Afterwards, we may agree that the results are meaningless, but for now, can you simply say which PC versions you used – it’s a simple question really.

4) I asked a simple question: “what is your definition of “published data”?” Again, it’s a simple question. I didn’t suggest that “any data which is in your possession should be considered as published”. This sort of petty debating point is quite tiresome. You’re the one who’s de-selected the data because you said that it was “unpublished data”. Once again, what’s your definition?

5)

I beg to differ. I simply tried to replicate the curves. Had you provided any sort of reasonable description of what you did (which might have required a justification of what you did), then I would have had no difficulty replicating what you did. Scaling is an important issue in paleoclimate and is never “irrelevant”.

#13(1) Martin, a script to collate the various Mann objects, pc.tab, eof.tab,… was originally archived in October 2003 and an updated version (reflecting some newer information) was archived in April 2006 at http://www.climate2003.com/scripts/MM03/read.mann.txt. If you execute this script, this should give you the required R-tables in the appropriate directory.

I have trouble benchmarking some of these scripts from my computer as I’ve been blocked from Mann’s FTP. I can test them on versions that I downloaded pre-blocking or that have been sent to me in the past.

Dave Dardinger asked the obvious question about bristlecones.

Martin Juckes replied:

You’ve probably noticed by now that Juckes, like other members of the Team, virtually never quotes me word-for-word, but always re-states things. Our position with respect to bristlecones was that the specialist who had collected the samples stated that their anomalous growth in the 20th century was not related to temperature or climatic factors. Graybill (who collected the majority of bristlecone/foxtail sites, and said that he tried to get as many strip bark samples as possible) in Graybill and Idso 1993 postulated CO2 fertilization, following up on an earlier article Lamarche et al 1984, which posited this about Sheep Mountain, the #1 site in the Mannian PC1. (The et al included Fritts, Graybill and Rose; Lamarche, Fritts and Graybill are collectively dendro luminaries.) Hughes and Funkhouser 2003 said that the growth spurt was a “mystery”. Biondi et al 1999 noted the problem. A caveat in respect to CO2 fertilization was given in IPCC 2AR.

In MM05 (EE), we reviewed other factors that could have contributed to the 20th century growth spurt – fertilization by airborne phosphates or nitrates, sheep grazing at high altitudes in the 19th century – associated elsewhere with increased growth of trees due to elimination of underbrush. We adopted no position on CO2 fertilization particularly. Our position was that, if bristlecones/foxtails were to be essential to reconstruction of world climate history, the proponents of this method needed to eliminate these other factors and hadn’t done so.

Arguably the main innovation of MBH98-99 was its adoption of bristlecone/foxtails into temperature reconstructions. Previous reconstructions (e.g. Bradley and Jones 1993) had avoided bristlecones. MBH threw caution to the winds. Their use of bristlecones/foxtails has been followed in virtually every subsequent study: Crowley and Lowery 2000 (twice); Esper et al 2002 (twice); Osborn and Briffa 2006 (twice); Hegerl et al 2006 (twice). Juckes et al 2006 raised this to a new level using FOUR different bristlecone/foxtail series in his 18 series-union (which also includes two versions of Tornetrask and Yamal, among other stereotypes.)

At this point, it is not just me arguing against use of bristlecones. Bristlecones were an important issue, considered by both the NAS Panel and Wegman. The NAS Panel said that strip-bark samples (which include virtually all bristlecone and foxtail samples) should be avoided in temperature reconstructions.

Juckes fails to even mention the NAS Panel or to discuss this finding.

#9, 13(4). I asked Juckes to provide the definition of “published data” that he relied on in de-selecting the Indigirka series. Instead of providing the definition, he made the following verbal joust which failed to answer the question:

Pursuing the matter, I sent the following email to Juckes coauthor Anders Moberg, who also was the lead author of Moberg et al, asking him for clarification of the observation in the Corrigendum as follows:

Moberg replied:

In other words, “unpublished data” in this context is simply that the data has not been publicly “archived”. As noted in the Corrigendum, although the data had not been publicly archived, the data was available merely by emailing Moberg (which I’d done.) For the Hockey Team in particular, this seems like a pretty thin pretext for de-selection.

Steve M, you may wish to ask Dr. Juckes, expert in plant biology, whether the tree-ring response model used for “temperature” reconstruction might be mis-specified by excluding 2-,3-, and 4-way interactions among T,P,C,N. Pile all that positive synergy onto T (mis-attribution) and you’re going to get a drastically inflated temperature response.

It’s not just about C fertilization. It’s about plants in their total environment: temp, precip, CO2, Nitrogen. Until I see this model refuted by credible data from a credible physiologist, I’m sticking to it. Not sure why the Rob Wilsons and bristlecone ecologists won’t comment. It’s not an unlikely hypothesis.

re #14: 1) Thanks for the file. Sure, I’m not expecting to run it without modification, but the code is so heavily dependent on these input tables there is not much hope of a clear interpretation without those tables. (I’m currently using the version you put on the web last week, following our earlier exchange on this issue).

2, 3) It would have saved a little time if you had mentioned that you had already looked at the lists in the files. New versions are in http://home.badc.rl.ac.uk/mjuckes/mitrie_files/data/ with corrected proxy_list attributes.

4) I see below that you eventually worked out for yourself that I’d quoted this from Nature. See below.

5) Given your habit of constantly quoting out of context and spreading false information I think this is an unlikely claim. You claim that you had trouble “extracting” this information, but you didn’t, if you remember, approach me for the information.

#16: So you are arguing that Bristlecones should be omitted on the basis of an analysis of correlations with climate data? Shame there aren’t any cherry tree series.

#17: OK, it’s not just any data in your possession, but any time series that is available electronically? Even by your standards, McIntyre, this is ridiculous.

I’ve got some other work to do for a few days, I’ll check your site again sometime next week.

Re #19 But what about #18?

re: #19 Dr. Juckes

Obviously you’re not a politician. A quote like this demands the obvious. Could you be so kind as to provide us a list of such false information? I might add that I find the “quoting out of context” part pretty unlikely except on an occasional and unintended basis. Steve normally quotes directly huge chunks of material when he’s dealing with what others have said. Afterwards he might summarize briefly, but I don’t think you’ll find much context which is missing on this site if you want to look for it.

In 14(5), I stated:

To which, Juckes replied:

Note that even when Juckes purports to quote me, he uses a word “extract” that I didn’t use. Not much turns on it, but it’s all too typical. Now what exactly is “unlikely” about my claim? That I tried to replicate the curves? Obviously I tried to replicate the curves. There’s plenty of evidence of this on the blog. That if Juckes had provided a “reasonable explanation” of what he did, e.g. rms normalization, I would have had no trouble replicating the curves? Again I don’t believe that this is an “unlikely claim” or “spreading false information”. That “scaling is an important issue in paleoclimate”? Again I believe that this is true and Juckes’ article seems to say the same thing. One hardly knows where to begin in responding to such intemperate comments with such little substance.

Hello McIntyre,

Re “extract”: your exact words were “We finally extracted enough information from Martin Juckes to be able to replicate SI Figure 1.”.

Re #18: No one disputes that there are other factors. E.g., Koerner et al (2003) say: “Experimental data further suggest that situations under which CO2-enrichment exerts sustained stimulations of structural carbon incorporation are early regrowth (at least in warm climates) and deep shade.” The data used in our study are selected from sites where temperature is expected to be a growth limiting factor.

Re #22: I’m afraid it’s not at all obvious. You grabbed a definition of standard deviation from wikipedia, for instance, “The standard deviation is the root mean square (RMS) deviation of the values from their arithmetic mean” (on page 894). We were discussing RMS. Rather than click on the wikipedia link to the standard definition of RMS, which was in the text you quoted, you diverted attention with a lot of irrelevant stuff about electric currents.

Have you contacted Energy and Environment about the corrigendum for your article yet?

I’ve been looking at the Graybill and Idso (1993) paper again — it doesn’t appear to be reproducible in the McIntyrean sense: i.e. the data is not archived completely (the tree ring data is readily available, but I haven’t been able to get hold of the information about which trees are “strip-bark”).

Martin Juckes has not answered any outstanding questions.

As to his two observations: mea culpa. I did in one comment in a thread use the word “extract” – sorry about that.

Martin, you made no mention of rms in your article. I am unaware of any prior use of rms in paleoclimate reconstructions. So it’s pretty insolent for you to expect anyone to read your mind. I looked for a definition and located one where I could.

You have quite astonishingly failed to acknowledge the perverse flipping of the MBH PC in your “evaluation”. Figure 2 attempted to illustrate this perverse flipping. In doing so, as you pointed out, I made an illustration with the dimensionless series having a common center of 1 (tree ring chronologies.) I have included script for the revised figure in my updated script – which you did not mention in your little Comment. As you observe, this is the only figure or calculation in which this particular form of calculation is used. The revised figure in the Supplementary Information demonstrates that the perverse flipping is actually stronger when a centered PC calculation is done. I will in due course send a note to EE saying that the effect is stronger than indicated in Figure 2, but have not done so yet. I’m sure that you will also note that the perverse effect is even stronger than we indicated.

At this point, the problem for Juckes is not merely Graybill and Idso 1993, but the recommendations of the NAS Panel that strip-bark bristlecones and foxtails not be used in temperature reconstructions. Juckes astonishingly does not even discuss the NAS Panel. The issue is not simply whether bristlecones have experienced CO2 fertilization, but as the NAS panel observes (and as discussed in MM05 EE) whether other forms of fertilization, non climatic response or nonlinear climatic response have affected bristlecones. Martin, again, you have not discussed these crucial items in your “evaluation” of past reconstructions.

I agree that one cannot determine from Graybill’s information which cores are strip bark and which ones are not. Graybill said that he sought out strip bark cores. If you don’t know which ones are strip bark and which ones aren’t, and you want to comply with the NAS recommendation, it makes the Graybill data sets unusable. Too bad. But if the reconstruction is “robust”, that shouldn’t bother you. A robust reconstruction would not be affected by the presence/absence of bristlecones, would it? That’s obviously one of the things that an “evaluation” of millennial reconstructions would deal with – isn’t it??

There are many defects in how dendro people record information. For example, they don’t record altitudes. I’d like to see altitude information from the Polar Urals site – maybe your coauthor Briffa could forward that to me. BTW can you obtain identifications of the sites used in Briffa et al 2001? I’ve been trying for several years without success. While you’re at it, could you also get the actual unspliced MBH reconstruction for the AD1400 step?

Dear Martin:

Thank you for continuing to post here. Rather than look for errors in our analysis, however, it would be much more valuable if you concentrated on answering questions about the errors in your analysis.

In particular, since you were tasked with analyzing previous reconstructions, and since the NAS Panel has recommended that strip-bark species not be used, why did you use them and not even discuss the issue?

Also, the sensitivity of so-called “robust” proxy reconstructions to the presence or absence of a couple of hockeystick-shaped individual proxies is very well established. Why do you not address this question in your paper?

Next, you have not commented on the fact that you have the same series in your reconstruction under different names.

You have not said what your criteria were for picking one of two series in an area (e.g., Polar Urals).

Finally, why have you singled Steve M. out for comment about data and methods availability, when some of your co-authors are among the worst offenders in the field?

Yes, there are occasional errors in the analyses made here, and you are welcome to point them out. But it doesn’t do your reputation, or the reputation of your paper, any good to only discuss our errors. There are gaping holes in your paper, and unless you’d like your analysis to be eventually consigned to the trash bin of history, you need to address them. These are real, solid, scientifically based questions about your work, and they will not go away simply because you prefer to discuss the mote in our eye …

Again, thank you for continuing the discussion. It is very informative, both for what you say, and for what you don’t say.

w.

Dear Dr. Juckes,

Thank you for your response to my #18. You say:

While that may be the intent of the experimenters, and while it may generally be true, it is certainly not true for all your data sources, and more to the point it is certainly not true for the bristlecone pines, which dominate the reconstruction. My question #18 was not a general question about all trees; it was specifically focused on the bcps.

Would you care to reconsider your answer, knowing now that I’m talking about the bcps?

Martin, one of the usual practices in millennial paleoclimate is to calibrate a model on one period and reserve a verification period (e.g. 1902-1980 and 1856-1901 in MBH). It seems surprising that you did not follow this practice. When I did common calibration-verification tests on a variety of reconstructions with a common calibration period of 1902-1980, I typically observed high calibration r2, a failed calibration Durbin-Watson, a “high” RE statistic and a failed verification r2 statistic. Did you carry out tests on a calibration-verification basis? If so, why didn’t you report the results? If you didn’t carry out such tests, why not?
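For readers unfamiliar with the statistics named here, the sketch below gives their textbook definitions: squared Pearson correlation (r2), the reduction of error (RE) statistic measured against the calibration-period mean, and the Durbin-Watson statistic on residuals. It is not the script used for the tests described above, and the toy numbers are invented to show how a "high" RE can coexist with a verification r2 near zero.

```python
from statistics import mean

def r_squared(obs, est):
    """Squared Pearson correlation between observed and estimated values."""
    mo, me = mean(obs), mean(est)
    sxy = sum((o - mo) * (e - me) for o, e in zip(obs, est))
    sxx = sum((o - mo) ** 2 for o in obs)
    syy = sum((e - me) ** 2 for e in est)
    return sxy * sxy / (sxx * syy)

def reduction_of_error(obs_ver, est_ver, calib_mean):
    """RE = 1 - SSE / SS about the calibration-period mean."""
    sse = sum((o - e) ** 2 for o, e in zip(obs_ver, est_ver))
    ref = sum((o - calib_mean) ** 2 for o in obs_ver)
    return 1 - sse / ref

def durbin_watson(residuals):
    """Sum of squared successive differences over sum of squares."""
    num = sum((b - a) ** 2 for a, b in zip(residuals, residuals[1:]))
    den = sum(r * r for r in residuals)
    return num / den

# Toy verification period (invented): the estimate captures the shift in
# mean level relative to the calibration mean (0.0) but none of the
# year-to-year variation.
obs_ver = [-0.5, -0.3, -0.4, -0.6]
est_ver = [-0.4, -0.5, -0.4, -0.5]
re_stat = reduction_of_error(obs_ver, est_ver, calib_mean=0.0)
r2_ver = r_squared(obs_ver, est_ver)
dw_alternating = durbin_watson([1.0, -1.0, 1.0, -1.0])
```

In this toy case RE rewards getting the mean level right, while r2 only rewards tracking the variation within the period, which is why the two can disagree.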

re #24: Could you explain what you mean by “perverse flipping”?

Do you accept everything the NAS report says?

The robustness tests, like the choice of proxies in the first place, must be based on prior information.

True, quality of data archiving is still a problem. Do you know of anyone who has evaluated a correlation between anomalous growth in strip-bark bristlecone pine and CO2 and done a significance test?

Re #25: The use of bristlecone pines is referred to in the paper (it’s not possible to remove specifically strip-bark data, because the archive data does not record which trees had this property, so the only option would be to eliminate all bristlecone pines).

Re removing “hockeystick shaped” profiles: I don’t do as you suggest because it would violate the basis of the analysis: the proxies are chosen on the basis of prior information, not what the time series look like.

The Tornetraesk series used by Esper et al 2002 is not the same as the Fennoscandia series used by Jones et al 1999, and Mann et al 1998, 1999, but I’ll look into the effect of leaving out the Fennoscandia series (sticking to the rule of taking the first used).

Did you miss my previous answer?

What do you mean by gaping holes?

Re 26: The answer applies to bristlecones as it does to others. The selection of proxies is based on what was expected, not on what the series look like. The statistical terminology is a little confusing here: if new results were obtained showing independent experimental confirmation of a CO2 fertilization effect in bristlecones that would be reason for excluding them, but analyses based on what the time series look like, while they are clearly useful for other purposes, cannot be used to exclude data from an analysis of this kind.

Re 27: Using a longer calibration period gives more reliable results. As we are using proxies with long auto-correlation periods it would not be possible to get a meaningful accuracy estimate from a verification period of the kind you suggest.

#28. I’m leaving now for Cornell and will be out of pocket for a while. I’ll comment briefly on the NAS Report. No, I do not rely on everything, but you need to consider what they say. By and large, specific comments are more reliable than general comments. Their recommendation not to use strip-bark trees is specific. It is also consistent with previous literature including Biondi et al 1999 and even a comment in IPCC 2AR. The use of bristlecones as a proxy is what needs to be justified, not “avoiding” their use. If you are “evaluating” reconstructions – a premise that seems increasingly doubtful – then evaluating bristlecone impact is surely much more on the agenda than many peripheral topics that you’ve spent time on.

BTW, Mann et al 2000 claimed that their reconstruction was “robust” to the exclusion of all dendroclimatic indicators. If that’s true – and it’s a claim well worth “evaluating” – then you should be able to forego using Graybill sites because you are unable to determine which were strip bark. You seem very reluctant to do this evaluation. I wonder why. If you’re wondering, you can see Mann’s calculations of the impact on his NOAMER PC network in his BACKTO_1400/CENSORED directory. I’m sure that you, like Mann, know the answer. Also that you, like Mann, don’t want to say it.

I’ll pick up on your other points in a couple of days.

Re: #28

Yet the people who sampled them, Graybill and Idso, specifically mentioned the growth as anomalous and having nothing to do with temperature. Why do you take it upon yourself to include bristlecone pine proxies which come practically stamped with the legend “NOT TO BE USED AS TEMPERATURE PROXIES”? It doesn’t matter whether or not they correlate with CO2 or anything else – the fact remains that they do not correlate with the temperature regimes they grew in. These non-temperature proxies are then given weight by the statistical methods used and voilà, Hockey Sticks!

re: #28 Dr. Juckes,

It appears you’re using a different meaning for the word “reliable” than I’m aware of. To rely on something is to know that it can be trusted. But if you have no way to verify that something is correct, then how can you trust it?

Also, it would seem from the wording of your sentence concerning auto-correlation periods that you’re admitting that the degrees of freedom are sufficiently low that saving an appreciable part of the instrumental data for verification reduces the significance of the results too much. In that case, I’m wondering how much trust could ever be placed in such reconstructions? Any comments?
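To put a rough number on the degrees-of-freedom point, here is a small sketch using the textbook AR(1) approximation for the effective number of independent values, n_eff = n(1 - r1)/(1 + r1). The function name and the example autocorrelation values are assumptions chosen for illustration; this is not a calculation taken from Juckes et al.

```python
def effective_sample_size(n, lag1_autocorr):
    """Approximate number of independent values in n samples of an AR(1) series."""
    if not -1.0 < lag1_autocorr < 1.0:
        raise ValueError("lag-1 autocorrelation must lie strictly between -1 and 1")
    return n * (1.0 - lag1_autocorr) / (1.0 + lag1_autocorr)

# A 46-year verification window such as 1856-1901, at increasing persistence
for r1 in (0.0, 0.5, 0.9):
    print(r1, round(effective_sample_size(46, r1), 1))
```

Under this approximation, 46 years at a lag-1 autocorrelation of 0.5 are worth about 15 independent values, and at 0.9 only about 2.4, which is the sense in which a short verification period carries little information for highly persistent series.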

Is there any evidence in the literature that such a priori criteria were used in any of the initial multi-proxy reconstructions? In particular, has it been documented that all the proxies which passed such a preliminary screening were then used in the reconstruction? And later, when people like your group then select which proxies from earlier reconstructions to use, how do you renew the virginity of the selection process? Steve M and others have asked. I have failed to notice an answer.

Folks, would you please post comments to Martin on the Your Comments thread. I’ll pay attention to it and transfer from time to time.

Maybe I am missing something, Dr. Juckes, but it sure seems to me that any study whose results hinge on the deliberate ad hoc selection of only a few trees (without any real justification, even) must be questioned. I can get about any curve I want with this methodology. Dendroclimatologists seem to have a totally different idea of what the scientific method is.

If the statistical terminology is a little confusing I would be happy to clarify. What exactly is the source of confusion? There are two previous comments where I describe the problem in some detail. A search on “bristlecone misspecification bender” will direct you to those comments. #7 in “New CPD paper on reconstructions”, for example. Or #33 in “Rob Wilson on bristlecones”. (I would provide you with direct links myself, but the mouse-over pointer to comment numbers is currently not available in the new look CA.) If those comments are unclear, I can clarify further.

Note: It’s not about CO2. It’s about positive synergy between multiple factors (temp, precip, possibly others, such as CO2, N) being neglected in a linear additive temperature reconstruction model. It’s quite simple when you think about it.

Please look seriously into the matter. It is a critical weak point in your paper. If it is not addressed, I shall take you to task.

Thanks again for your participation here.

Juckes, on #34 your attention is kindly requested.

Please note that your attempt to phrase the bcp 20th c. uptick debate in terms of a yes/no CO2 fertilization question ignores the possibility of interactions among multiple variables. i.e. If G=f(T,P,C,N) is the growth model, then perhaps the main effect of C is insignificant, but the interaction terms involving C (i.e. C*T, C*P, C*N) are significant.

You must be careful not to oversimplify the alternatives to the G=C model. Steve M has never argued that that is the correct model, just that it is an undeniable possibility. You may or may not be able to refute that model; but what you need to do is refute the full interaction model. I would be most interested in hearing the opinion of your co-authors on this matter.
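A toy illustration of the point, using entirely synthetic data: the coefficients, the noise level and the choice of a single C*T interaction are hypothetical, picked only to show how a growth model can have a negligible main effect of C while the interaction term matters a great deal.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 500
T = rng.normal(size=n)  # standardized temperature (synthetic)
P = rng.normal(size=n)  # standardized precipitation (synthetic)
C = rng.normal(size=n)  # standardized CO2 (synthetic)

# Hypothetical growth model: no main effect of C, only a C*T interaction
G = 1.0 * T + 0.5 * P + 0.8 * C * T + rng.normal(scale=0.1, size=n)

def fit(columns, y):
    """Ordinary least squares with an intercept; returns coefficients and R^2."""
    X = np.column_stack([np.ones(len(y))] + columns)
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    return coef, float(1.0 - resid.var() / y.var())

additive_coef, additive_r2 = fit([T, P, C], G)        # linear additive model
full_coef, full_r2 = fit([T, P, C, C * T], G)         # adds the interaction

print("additive: C coefficient", additive_coef[3], "R^2", additive_r2)
print("full:     C*T coefficient", full_coef[4], "R^2", full_r2)
```

In the additive fit the coefficient on C comes out near zero, so a yes/no test of "C fertilization" would find nothing, yet the additive model leaves a large share of the variance unexplained that the interaction model picks up.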

[Aside: Note the parallels with the nature vs nurture debate (genetics, G vs environment, E). This is a false dichotomy given the importance of the GxE interaction for many hereditary/dietary medical conditions.]

#35: It is obvious that there are interactions, and it is obvious that proxies are not thermometers. By averaging the proxies with a range of different non-temperature signals we expect the signal to “noise” ratio to be improved, where “noise” refers to all the non-temperature contributions. If anyone can show a quantifiable contribution from other factors, that would have to be taken into account. Because of the large uncertainties in the data it is important to verify the result. Our reconstruction produces not only the 20th century trend, but also the timing of the rapid warming from 1915 to 1940. The detrended series has significant correlation with the detrended temperature.

re #29: Using your code, I can show that the sensitivity you describe only exists when you use your own “arbitrary” normalisation for the calculation of the proxy PCs (ranging from a standard deviation of 0.0432 for wy023x to 0.581 for nm025). Why do you use this normalisation? Is the effective elimination of much of the data intentional?

I have run the code you provide for McIntyre and McKitrick (2003) (http://climate2003.com/scripts/MM03/redo.mann.txt). The comments suggest that it is intended to reproduce figures 6 and 7 of that paper, but what it actually produces is significantly different: the curves which are labelled as “corrections” come out flatter than the Mann et al. hockey stick. Any idea why? The plots generated by your code are here: http://home.badc.rl.ac.uk/mjuckes/tmp/fig6_mm2003_recalc.eps and here: http://home.badc.rl.ac.uk/mjuckes/tmp/fig7_mm2003_recalc.eps. The code I used is in the same directory, with a few edits to use local files because I cannot access the climate2003 site with R, even though downloading stuff by hand is no problem.

re my previous post: If you click on the 2nd of the two links in my previous post, you get an error because I put a full-stop after the link. It should be: http://home.badc.rl.ac.uk/mjuckes/tmp/fig7_mm2003_recalc.eps

Re 36: Dr. Juckes, what is your data model? That is, can you give a statistical characterization of the signal and of the noise, and demonstrate that your calculations enhance the signal? How did you arrive at your statistical characterization of signal and noise?

Re 38: The data model is described in the appendices of the manuscript.

Re #36 (1)

Dear Dr. Juckes,

First, I’m glad you agree that the interactions between temp & precip are “obvious”. And presumably you understand how this model applies specifically in the case of 20th c. treeline conifers in the Sierra Nevada (Graumlich 1991, Salzer & Kipfmueller 2005)? Second, I’m glad your reconstruction “fits” with observed instrumental data during the 20th c. However that is not my concern. My concern is (1) the degree to which this “fit” is actually an overfit to a sample, and (2) the degree to which overfitting during the modern era leads to important biases during previous times, such as, say, the MWP.

Shall I go on, or is it clear enough now where I am going with this?

bender, here’s an interesting URL to Stine on the Y1K megadrought http://www.yosemite.org/naturenotes/paleodrought2.htm. Lloyd and Graumlich discuss this. One point that I wish to emphasize and re-emphasize: our position is not that the anomalous 20th century bristlecone growth is demonstrated to be caused by CO2 fertilization. That’s outside anything that we are in a position to argue. We merely observed that specialists in the area said that it was, while noting other specialists drawing attention to other potential fertilization (nitrate), and asserted that it was the obligation of people relying on this proxy to exclude fertilization, rather than the other way around. The obligation to show a linear relationship to temperature also requires exclusion of interaction relationships, as bender has also emphasized, and which relevant specialists have asserted to exist for foxtails.

Re #41

That link did not work for me, but it sure looks enticing.

I agree with your view, Steve M: the onus is on the protagonist – the dendroclimatologist aspiring to publish – to prove that these nonlinear interactions – which some describe as “obvious” – are not fatal to the use of a linear additive reconstruction model. I suspect there are very good reasons why smart people like Graumlich (1991) not only avoid linear additive models, but openly publish their skepticism about them.

Re #42 My bad. Must remove “.” from the link. Works fine.

Wow, that is very interesting. Thanks.

Thought: If it takes, on average, several hundred years for a bcp to succumb to a drought, then the annual mortality rate (which would be compounded over those 300 years to get the cumulative mortality rate) would not need to be very different between “geomorphically droughted” and non-droughted trees in order to select out nearly 100% of the former. Fractions of a percent difference would be plenty.

In other words, very, very weak differential mortality ought to be sufficient to seriously bias the MWP tree-ring record. Which means it is entirely possible that the “negative responders” of the MWP were indeed selected out of the modern-day population that is available for sampling.
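The compounding argument can be sketched in a few lines. The annual mortality rates below are hypothetical numbers chosen only to show the arithmetic; they are not measured rates for bristlecones or foxtails.

```python
def surviving_fraction(annual_mortality, years):
    """Fraction of a cohort still alive after compounding a constant annual mortality."""
    return (1.0 - annual_mortality) ** years

def relative_survival(m_stressed, m_baseline, years):
    """Survival of the stressed group relative to the baseline group."""
    return surviving_fraction(m_stressed, years) / surviving_fraction(m_baseline, years)

# Hypothetical rates: 0.1% per year baseline, 0.6% per year for droughted sites
for years in (300, 1000):
    print(years, relative_survival(0.006, 0.001, years))
```

With these assumed rates, a difference of half a percentage point per year leaves the droughted group at under 1% of the baseline group's survival after 1000 years, so the sampled modern population would be drawn almost entirely from the non-droughted sites.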

So when you see those ancient stems/stumps protruding out of the lake pictured in that link, that’s what you are seeing: the result of 1000 years of differential survivorship, the valley-slope samples long-gone. And if you could compare chronologies from those lake-bottom trees to the valley-slope trees that were lost during the MWP double megadrought, you’d likely get your nonlinear MWP back! So maybe it WAS 1°, 2°, or even 3°C warmer then than now?

bender, did you read Miller et al 2006 discussed here http://www.climateaudit.org/?p=585 or was that before you started reading here? Miller et al provide a very convincing analysis reaching those conclusions. Ironically Lloyd and Graumlich 1997 – which Juckes cites as authority for the two foxtail series used in his Union even though they are not mentioned in that article – discusses changing treelines and the role of drought rather than temperature in causing treeline changes in the MWP. Andrew Bunn’s thesis has an interesting analysis of the T-P niche occupied by foxtails based on a survey of a large number of gridcells – Bunn seems like a gem among dendro people – and reported that foxtails have a niche only in a very narrow precipitation interval centered on 120 mm in a cold environment.

As to mortality, obviously both foxtails and especially bcps are very hardy – hence their longevity. BCPs compete with big sagebrush and thus must be tolerant of aridity. It is this overlap of precipitation and temperature in this location that makes it particularly hard to isolate temperature contributions and a remarkably poor candidate as a temperature proxy for anyone other than data miners and data snoopers.

I had read it, but not all that carefully.

I have been avoiding all of the treeline recon literature because treeline dynamics and millennial-scale reconstructions are too far outside my line of work to bother much with it. Now I realize it is impossible to divorce treeline dynamics from ring width response dynamics. As climate changes, treeline changes, the limiting climatic factor changes, and the nature of the climatic response changes. “Obvious” to anyone who’s thought about it. Then again, where’s the validated model if it’s so damn obvious?

Dr. Juckes chastises me, saying that “trees are not thermometers”, as though it was silly of me to suggest that I might know something (e.g. about model mis-specification under nonlinear interactions) that they do not. Of course I know that trees are not thermometers. That’s precisely why I am not trying to make unsupportable claims in the literature about modern day temperatures and temperature trends being “unprecedented”. There’s too much complexity and uncertainty to make that claim with any kind of confidence.

Re 46: It’s good that we agree on something. I’m sorry that you find the statement “trees are not thermometers” offensive. Do you think that trees are unique in having a non-linear response to temperature, or even that the non-linear response is the same in all the tree-ring chronologies used in our study? I don’t think our study makes claims about “unprecedented” trends either. Our reconstruction has substantial upward trends in the 17th century. Our reconstruction is considerably more variable than those of Mann et al. (1998, 1999), and much more variable than that produced by the code McIntyre archived for McIntyre and McKitrick (2003) (see #36). What we do say is that our results support the IPCC conclusion about the temperatures of the last decades of the 20th century.

Actually, I thought your use of language was much more measured and reasonable than MBHx. In fact I almost said as much one day. The problem, Dr. Juckes, is that the damage of faulty language has already been done. Any time a warmer wants to say the current warming trend is “unprecedented”, they merely point to MBHx. If a skeptic argues that the trends and levels reported in those papers are not credible, the warmer will point to Juckes et al. and say it shows the same thing. “Moving on” with “independent” evidence. The fact is you don’t need to use the word “unprecedented” because Dr. Mann has already done so. So don’t be offended. It’s nothing personal.

I don’t necessarily find the statement “trees are not thermometers” offensive. What I find offensive is the context in which the statement is embedded, i.e. your writing. Your latest contribution, for example. You ask:

The answer is, umm, obviously, “no”. But I suppose this is a rhetorical question. Seems to me it’s a device, designed to dodge the substantive issue of the bcps.

But I could be wrong. So prove to me that the other proxies are not window-dressing. Prove to me that the bcp’s are not driving the show. Prove to me that all these reconstructions are not highly DEPENDENT on the bcps. Dependence. Not “independence”. (It occurs to me that “the team has a dependency problem”. Can you cure my skepticism on that one?)

Maybe after discussing this we can talk about the MWP and HONEST temperature reconstruction? (That is, unless YOU are offended by the topic of “trees as thermometers”.)

Re 48: If you accept that all proxies have problems, why are you singling out the bristlecone pines?

re 48: Our paper wasn’t written for a target audience of heating appliances: what do you mean by “a warmer”?

#50 Martin

I appreciate your attempt at humour. It’s always welcome and appreciated on this blog. Back to the serious stuff. You still haven’t answered my question. Why, as head of the Atmospheric Science Group at RAL, are you conducting proxy temperature reconstruction studies? Given your last reply on another thread, what is your contribution to the development of the UK’s climate computer models, which by your own admission is funded by NCAR (not your paleoclimatology studies)? I’ve also previously asked you whether or not in your day job you have any dealings with Isaac Held and Brian Soden. As you are an ‘atmospheric scientist’, it is not unreasonable, I think, to expect you to.

KevinUK

#51

And Martin

As an atmospheric scientist and also paleoclimatologist supporting the Euro HT, I wondered whether you fancied a change of job in the coming new year? I’ve heard here that they are looking to build up a climate research team at Exeter University headed up by Peter (“Day of the Triffids”) Cox. Looks right up your street. Failing that, given your relationship with the NERC, it looks like the Centre for Ecology and Hydrology will be looking for a new Director come April 2007. If you get the job then at least I’ll know that my ‘green’ taxes will be going to a good cause, and if you do, make sure you give my regards to my old mates at Winfrith.

KevinUK

Martin Juckes:

Just to clarify that point about RMS (I haven’t a copy of the paper in my possession) – are you saying that the RMS calculated was RMS error? And if so, what did the paper refer to (RMS?)

Re #50

Dr Juckes asks “what do you mean by a ‘warmer'”? A warmer is the shorthand opposite of a “cooler”.

What’s ironic is that CA is not populated by a bunch of “denialists”. Data suggest we are populated by a bunch of lukewarmers! Here is a tally so far of my public opinion survey:

Q: On the A in AGW: What proportion of the 20th century warming trend do you think is attributable to human-caused greenhouse effects? What’s your estimate for the uncertainty, ±, on this parameter, A?

| poster | date | A | ± | range |
|---|---|---|---|---|
| BrooksHurd | November 22nd, 2006 at 9:07pm | 0.3 | 0.1 | [0.2,0.4] |
| pat | November 23rd, 2006 at 12:56pm | 0.2 | | |
| Reid | November 23rd, 2006 at 10:27pm | 0.02 | 0.01 | [0.01,0.03] |
| WillisE | November 23rd, 2006 at 11:12 pm | 0.3 | 0.25 | [-0.1,0.4] |
| bruce | November 24th, 2006 at 1:21 am | 0.05 | 0.2 | [-0.15,0.25] |
| ChrisH | November 24th, 2006 at 2:14am | 0.3 | 0.3 | [0,0.6] |
| beng | November 24th, 2006 at 10:43am | 0.2 | 0.1 | [0.1,0.3] |

The mean for “A”, so far, is 0.2.

Ans: According to CA, 20% of 20th c. warming is due to human GHG sources.
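For anyone who wants to check the tally or update it as more guesstimates come in, the arithmetic is a plain unweighted mean of the seven A values posted so far. The dictionary layout is just a convenience for this sketch.

```python
from statistics import mean

# A values as posted in the tally above
estimates = {
    "BrooksHurd": 0.3,
    "pat": 0.2,
    "Reid": 0.02,
    "WillisE": 0.3,
    "bruce": 0.05,
    "ChrisH": 0.3,
    "beng": 0.2,
}

mean_a = mean(list(estimates.values()))
print(round(mean_a, 3))  # unweighted mean of the seven estimates
```

The unweighted mean rounds to 0.2, matching the figure quoted; no weighting by each poster's stated uncertainty is applied here.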

So, to Dr Juckes’ question what is a “warmer”, I would half-reply: “a person who believes A is much greater than 0.2”.

Keep those guesstimates coming in. At some point I’ll ask the same question over at RC. Then we can fully reply to Dr Juckes’ thought-provoking query.

Care to play our guessing game, Dr Juckes?

They are different versions but they are from the same site, Tornetrask. Your “rule” – using the most obsolete – is a ludicrous rule. Can you provide a methodological reference for the selection of that rule?

Re 51: First a correction: I don’t know if I typed “NCAR” (which is a US research centre), but if I did, it should have been “NCAS” (which is in the UK). I have occasional interaction with Isaac Held on the topic of baroclinic equilibration (the question as to how the level of storminess in midlatitudes responds to changes in the large scale temperature gradient).

Re 53: RMS is not referred to or used at any point in the paper. It is used to normalise curves in two figures in the supplement.
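Since the distinction between the two normalisations keeps coming up, here is a minimal sketch of the difference. The function names and the sample series are hypothetical. The two measures are linked by rms² = std² + mean², so they agree only for a series with zero mean over the reference period.

```python
import numpy as np

def rms(x):
    """Root mean square: square root of the mean of the squared values."""
    return float(np.sqrt(np.mean(np.square(x))))

def std(x):
    """Population standard deviation: RMS of the deviations from the mean."""
    return float(np.sqrt(np.mean(np.square(x - np.mean(x)))))

series = np.array([1.0, 2.0, 3.0, 4.0])      # made-up series with a non-zero mean
print(rms(series), std(series))               # differ because the mean is 2.5
centred = series - series.mean()
print(rms(centred), std(centred))             # coincide once the mean is removed
```

Scaling a curve by its RMS rather than its standard deviation therefore shrinks series that sit far from zero, which is why the choice needs to be reported for a figure to be replicated.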

Re 54, 55: I wouldn’t like to guess. This issue is not addressed directly by any of the work I’ve done. I can’t see any reason to disagree with the IPCC 2001 statement that more than half the recent warming is anthropogenic.

Re 56: Could you publish your demonstration of obsolescence?

Re #57:

Mind stating your reasons for agreeing with the statement?

#58

To be fair to Dr. Juckes, he didn’t say he agreed with the IPCC’s statement – merely that he would not disagree with the statement. Those are two different assertions. His answer, taken at face value, was that it was outside his particular expertise so any response would be a guess.

Dr. Juckes:

Regarding the selection of which series to use when more than one is available from a single site, you have chosen to use the “first used”. Another possible choice might be to use the “last used”. To your knowledge, is there any reason why the former choice would be better than the latter, or would a reconstruction using the latter choice be equally valid?

Really? Maybe I’m misinterpreting his statement, but when I eliminate the double negatives, it changes to “I have every reason to agree with the IPCC 2001 statement that more than half the recent warming is anthropogenic.”

Sorry, my last post was in response to #59. I hate the inability to edit posts.

#57 Martin

Thank you for answering part of my question and for correcting your original error (repeated by myself) that NCAR should have in fact been NCAS. Now could you answer my full question? Why, as head of the Atmospheric Science Group in RAL, are you conducting paleoclimatology studies, specifically the evaluation of proxy temperature reconstruction studies? As I hope you’ll appreciate, as a UK taxpayer I am concerned about how my taxes are being spent (I’m sure you are as well). I’m therefore puzzled as to why RAL, and specifically the Atmospheric Science Group of RAL, are being funded to conduct paleoclimate studies. I suspect that I know the answer but I’d like you to confirm it. Steve in another post has alluded to the fact that, when giving his presentation at KNMI (where you will be presenting in December), he spent some time with Nanne Webber, who referred to you as ‘the Euro team’s statistics expert’. Are you? I understand that you are an Oxford University maths graduate, but did your degrees include maths with stats? As a UK taxpayer, I have no objection to funding an independent study of the methodologies used by paleoclimatologists within the UK. However I don’t think that RAL is the organisation to do this, and certainly not someone who is the head of the Atmospheric Science Group of RAL.

I would instead have preferred if such a study had been done by someone with proven eminent stats credentials who is independent of the climatology field, which you clearly are not. Please note that this contrasts significantly with the US situation in which Edward Wegman was given this task. I am also disturbed by the fact that, as you are a member of the Green Party, it would be difficult for you to carry out such studies impartially.

KevinUK

# 61 / 62

I do not believe the IPCC is a big conspiracy, but I do believe that scientists doing their niche work in climatology have ‘faith’ of a sort that scientific methods, ‘skill’, integrity, and peer review of their community mean that the IPCC assertions are more likely correct than not. [Which is why revelations Re: Mann are so shocking to many…] This ‘faith’ in our common man is not unique to science. Society couldn’t progress without it. I apply this thinking to Dr. Juckes, without questioning his motives or honesty, when interpreting his assertion.

‘I can’t see any reason to disagree’ with my granting him the benefit of the doubt when reading his statement: 1) He has ‘faith’ that other skilled, peer-reviewed scientists have figured everything out about as well as possible, 2) He believes IPCC has completely and correctly synthesized the current state of understanding on climate, and 3) Nobody has placed a hot, smoking gun in front of his face that is so compelling it would lead him to question the other niche science on his own, 4) So he has no reason to question the IPCC assertions. I may be being more generous to Dr. Juckes than I should, but I’d rather keep him in the lion’s den w/ Steve M. and company than chase him out.

Re:#63

KevinUK,

I’m disappointed to see such a post here on ClimateAudit. Surely all that matters is the scientific correctness of a work, not petty bureaucratic jurisdictional issues, or a scientist’s political party affiliation! Do we really want to introduce various litmus tests on who is “allowed” to perform scientific work, or on who is “allowed” to collaborate with whom?

Taxpayer issues, of course, should be directed to the relevant government(s).

#65, Armand

And in due course, once Martin has answered my questions, they will be.

I make no apologies to anyone for the fact that I consider AGW to be politically inspired and funded in the UK. This is a matter of record. As a UK taxpayer I have a right to ask these questions no matter how uncomfortable this may make some people feel on this blog. If the AGW debate only involved the science then I wouldn’t be concerned but, as many here know and I hope you yourself will at least acknowledge, AGW is a highly politicised arena in the UK. In the US they have had the good sense to reject the Kyoto Protocol; in the UK I face the prospect of having to pay further taxes to pay for yet more paleoclimatology studies, and for faster supercomputers for the Hadley Centre to run their computer models on, and more taxes so that yet more academics can set up more climate research centres as part of the Tyndall centre etc etc. I’m sorry but NO! Definitely NO! And definitely not until the data, methodologies, computer source code etc on which these alarmist claims of future dangerous climate change are based have been released so that truly independent people like Steve and Ross can analyse them and, as I suspect, as they have done with MBH98 etc, demonstrate that they do not stand up to scrutiny.

KevinUK

Re: #66

Of course you can post here anything that Steve will tolerate. I don’t see this as an appropriate forum for taxpayer issues, but I suppose we can agree to disagree on that.

I am pleased to see that you didn’t disagree with anything in my first paragraph, which described my main concerns. It would have been sad to see CA go down the RC road of guilt-by-association.

OK. let’s leave this. Kevin, you’ve made your point; you know that you won’t get an answer.

#68 Steve

I have a great deal of respect for you and Ross. Consequently I will respect your advice and now drop this issue. However I did promise at the start of the ‘Potential academic misconduct…’ thread that I would write to Martin’s funder BCAS if he did not withdraw his slur against you from his CoPD report. As yet he has not agreed to do this and as I now have enough information to support my letter, I will now desist.

KevinUK

I have completed the first draft of the compendium of problems found in the MITRIE paper. It is available as a zipped Word file here, and as a PDF file here.

I invite everyone who is interested to read it and comment on it. I have tried to be as clear as possible, but I am not 99.98% sure that I have achieved this goal, or that I have fairly represented the arguments made by others on this site.

This should likely be a new thread, so we can collect all of the comments in one place and I can modify the document accordingly.

My great thanks to everyone for their past (and hopefully future) work on this review project.

Best to all,

w.

RE #70. Willis. A sterling effort. Some brief comments from an initial read.

1. It would be helpful to use an outline heading numbering system, and add page numbers, to facilitate reference and comments.

2. There are some missing words that reduce the power of your message.

3. In your discussion on the relationship between tree ring thickness, you introduce the idea that growth is a function of other factors besides temperature (clearly true) but you miss the opportunity to address the point that there is an inverse quadratic relationship between temperature and growth (ie growth is subdued at low temperatures, increases as optimum conditions prevail, and then reduces again with higher temperatures).

Willis,

Didn’t get through it all, but here are a few notes:

I don’t see any requirement that temperature proxies are selected for Juckes’ study. If the study only requires that the proxies extend from 1000AD to 1980AD, then couldn’t a proxy of the percent of pirates in the population also suffice? Surely the proxies should be screened to verify that they actually reflect the local temperature for a calibration period before being included in the study. To me, this is the foremost glaring error.

I think your point about over-weighting certain regions will not resonate with the tele-connection believers (actually, if one believes in tele-connections then global or hemispheric coverage should not be important at all). The retort will be that you don’t understand something about SNR, etc… etc… I think there are many more fruitful arguments to make other than hemispheric/global coverage.

RE#72 Nanny,

I agree, the over-weighting of certain regions is not worth arguing. However I do think that the lack of correlation to local grid temperatures and the resulting residuals are worth talking about. How does one make white noise from the residuals?

Also, although there might be a valid argument for climate tele-connection, there is no known reason to believe in ‘plant telepathy’, which would be required, given the lack of local grid temperature correlation.

Willis: I think you dropped a word here:

Also, David Black, a published specialist (Science) in G. bulloides, has pointedly [??????] the use of G. bulloides off Venezuela as a proxy for temperature, as he considers it a proxy for trade wind strength.

Bruce, thank you for your quick comments.

Done.

Let me know which ones.

Excellent point. I have added a section to detail this problem.

Many thanks,

w.

Cliff Huston, I appreciate your contribution.

While this is an interesting point, it opens up the whole question of data-snooping. If we pick only proxies with a decent correlation to local gridcell temperatures, what is to say that this correlation is not purely by chance? How can we provide adequate error analysis if we are using a selected group of proxies? I am interested in everyone’s comments about this question, as I do not know the answers.
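One way to get a feel for the data-snooping question is a small simulation: generate red-noise series that contain no temperature signal at all, keep only those that happen to correlate with a trending "temperature" over the calibration window, and see what the average of the survivors looks like. Everything below is synthetic; the series count, the 0.5 screening threshold and the use of random walks as stand-in proxies are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(42)
n_years, n_series = 79, 1000   # e.g. a 1902-1980 calibration window

# Synthetic trending "temperature" with noise
temp = np.linspace(0.0, 1.0, n_years) + rng.normal(scale=0.2, size=n_years)

# Stand-in proxies: random walks that contain no temperature signal
proxies = np.cumsum(rng.normal(size=(n_series, n_years)), axis=1)

def corr_with(target, series):
    """Pearson correlation of each row of `series` with `target`."""
    t = target - target.mean()
    s = series - series.mean(axis=1, keepdims=True)
    return (s @ t) / np.sqrt((s ** 2).sum(axis=1) * (t ** 2).sum())

r = corr_with(temp, proxies)
passed = proxies[r > 0.5]                 # screen on calibration correlation
composite = passed.mean(axis=0)           # average the survivors
composite_r = float(np.corrcoef(temp, composite)[0, 1])

print("series passing the screen:", passed.shape[0], "of", n_series)
print("composite correlation with temperature:", composite_r)
```

The composite of the screened series correlates with the target by construction, even though no series carries any signal, which is why significance levels and error bars computed after screening need a benchmark that includes the screening step.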

While global/hemispheric coverage is not required, it throws off the results if we use multiple proxies from one area. While it is not a major point, it is a valid point.

My best,

w.

Cliff, you say:

Thanks, I’ve fixed it.

w.

New versions of the MITRIE review posted incorporating the above comments:

Word

PDF

w.

#75 Willis,

That was nanny (#72) – but you know that by now.

I agree that global/hemispheric coverage is a valid point, but grid cell correlation/residuals and plant telepathy sum to a major logical error. Given that your ‘short’ comment can’t go on for ever, I was suggesting (in #73) that your minor point should be replaced with that (IMHO) major point.

Hi Willis,

It’s a good start, but it needs some organisation (note also that the formatting is all over the place in the most recent version).

I suggest it needs to be organised as a series of themes (e.g. problems with proxy selection, problems with the model, etc.). These should probably be ordered as they are encountered in the Juckes paper. Each section should start with an introduction providing the context, the discussion, and a conclusion stating how the discussion is problematic to the paper’s findings.

As it stands, your critique comes across as a series of nit-picks, requiring informed knowledge to relate the criticisms to the substance of the paper.

Finally, you should strip out some of the irrelevant parts (e.g. Mann and the calculation of R2) and anything that talks about “motive”. Also, the slur on SM re code archiving requires only a sentence, noting that it is inaccurate. In general, I suggest you make the critique as dispassionate as possible.

Fantastic that you’re doing it, BTW!

New versions of the MITRIE review posted incorporating a new section 119.1 regarding correlation and teleconnection (thanks, Cliff), and additions to section 57 covering the “U” shaped response of plants to temperature (thanks, bruce):

Word

PDF

w.

Good work, Willis! Some comments:

I would remove 133.

138 is the important one. 1) A1: ‘Unfortunately, the error characteristics of the proxy data are not sufficiently well quantified to make the choice clear.’ Why are they not quantified?

2) There are a lot of publications that discuss the calibration problem (Brown, Sundberg, Krutchkoff, etc.). The equations in A1 and A2 (before the scaling step) can be found in those publications. CVM is not mentioned. The performance properties of the calibration estimators have been extensively investigated in the literature, but no references can be found in the appendix.

James, your comments are good. You say:

I like the “themes” idea, but I don’t think it can be organized in that particular way, because the themes are not separated in the original paper. However, I will write an introduction to each section along the lines of what you suggest. I’ll clean up the formatting on the final version.

Hmmm … I don’t think any of these are “nit-picks”, and I am assuming that the authors will have the required “informed knowledge” to relate them to the paper. Perhaps you could be more specific about the examples.

Their paper is supposed to be an “intercomparison and evaluation” of prior millennial reconstructions. How can the abysmally low R^2 of Mann’s paper, and his attempts to hide it, be irrelevant to that?

On the other hand, I do agree about “motive”. Since I did not use that word in the document, perhaps you could list the parts you find objectionable.

Finally, the slur on SM is a very important point. They are accusing him of doing what some of the co-authors have done. This is untrue, unwarranted, unethical, and perhaps actionable, as they are trying to destroy Steve’s credibility. The claim they are making is “How can you believe Steve M. when he says that researcher X is hiding data, when he’s doing it himself?” I find this to be appalling scientific misconduct, and have no wish to make it into a single sentence. I want that part out of their final report, it has absolutely no bearing on the subject. Or, if they want to leave it in, they need to be accurate about what happened, and include the misdeeds of their co-authors as well.

I brought this point up on CoP, and they just blew it off, so I’m repeating it in (figurative) bold type in the review.

You’re more than welcome.

w.

Hi Willis, thanks for your post. I’ll post a substantive reply tomorrow.

Willis,

I neglected this in my earlier posts, but I very much appreciate the work you are doing on this document.

Thanks!

PS: To have a focused review document, I would suggest that you use Juckes section titles (with page ref.) for your major section headers and use your headers below that for sub-subjects that relate to the Juckes section. The authors will know what you are addressing (whatever the organization), but it will make the document more effective if the editors and other readers can follow.

Thanks Willis! Here are some of my suggestions (I’ll comment more if needed, just let me know):

74. The real problem here is that the assumptions on error e are not specified in the paper. It is said in the paper that the proxy noise is “independent between proxies” (i.e., spatially white) but nothing is said about temporal structure (i.e., autocorrelation).

a) Since the noise structure is not specified, it cannot be evaluated whether the model is realistic. I.e., we cannot say that, e.g., the model specified in 78. is better/worse than the model in 78., as the submodel of 78. could be written as 75. with certain assumptions on the error e in 75. So one really needs to specify the error structure in Juckes’ model.

b) All claims about “optimality” of any statistical procedure depend on the specified model. If the model is not specified, one cannot claim any optimality and/or compare different methods. This is crucial for the CVM method on p. 1028, which is claimed to be superior to the standard inverse regression (LS) solution on the same page. One simply cannot make this kind of claim without clarifying i) the model and ii) in which sense the optimality is claimed. Furthermore, a proof of the claimed optimality (or a reference for it) is completely lacking in the manuscript.

78. Hmmm… I would vote for the multiplicative model, but 78. is ok too.

115.-118. The reason why “benchmark.txt” (random walk) outperforms CVM and the reason why Juckes’ simulations (AR(m), AR(1)) perform so poorly (Table 3) is the flipping. In order for CVM to work, it needs a) a linear proxy model and b) positive correlation between temperature and the proxies. Now, apparently in Juckes’ simulations he did not make sure that his simulated proxies correlate positively with the temperature. If about half of the proxies correlate negatively and half correlate positively, they “cancel” each other, and the correlation is bad. In the INV method, flipping is not needed as the inverse regression takes care of that automatically (which also means that one cannot argue that flipping should not be performed in CVM).

Related to this (and to PC flipping) is that in the Union CVM reconstruction the Chesapeake series (negative correlation with the NH temperature) was flipped. This was not reported in the text and the flipping seems to be also missing from the code. The Union-CVM reconstruction with unflipped Chesapeake series reduces considerably the performance of the CVM-Union. Moreover, the fact that this series was flipped, indicates that the authors realize the need of adjusting the series.
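The cancellation argument can be illustrated with a toy simulation. This is a sketch under my own assumptions, not a reproduction of Juckes' Table 3: temperature is white noise, each synthetic proxy is plus or minus temperature plus independent white noise, the composite is a plain unweighted average, and the flip uses the sign of the correlation over the whole series rather than a separate calibration period.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def simulate(n_years=200, n_proxies=10, noise_sd=1.0, seed=2):
    """Synthetic temperature plus proxies, half of which respond negatively."""
    rng = random.Random(seed)
    temp = [rng.gauss(0.0, 1.0) for _ in range(n_years)]
    proxies = [
        # Even-numbered proxies respond positively, odd-numbered negatively.
        [(1.0 if i % 2 == 0 else -1.0) * t + rng.gauss(0.0, noise_sd) for t in temp]
        for i in range(n_proxies)
    ]
    return temp, proxies


def composite(proxies):
    """Unweighted average across proxies, year by year."""
    return [sum(year) / len(year) for year in zip(*proxies)]


def flip_negative(proxies, temp):
    """Multiply by -1 any proxy that correlates negatively with temperature."""
    return [p if pearson(p, temp) >= 0 else [-v for v in p] for p in proxies]


temp, proxies = simulate()
r_unflipped = pearson(composite(proxies), temp)
r_flipped = pearson(composite(flip_negative(proxies, temp)), temp)
print(f"composite vs temperature, unflipped: r = {r_unflipped:+.2f}")
print(f"composite vs temperature, flipped:   r = {r_flipped:+.2f}")
```

With equal numbers of positive and negative responders the signal drops out of the unflipped average entirely and only noise is left, while the flipped composite recovers most of it.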

I’ll post here, if something else comes to my mind.

Willis: great review. I can’t think of anything you left out.

Thanks much to Willis E and all those posters making review comments on Willis E’s critique of the Union Reconstruction. I find that such efforts help me (and no doubt other laypeople reading at this site) better comprehend the underlying principles involved and summarize some important discussions that have occurred at this blog (and summaries are something I think this blog can use more of).

I think the best case scenario for presenting this critique as part of the pre-publication review process is that it can provide some information, viewpoints and approaches that might not otherwise be presented and perhaps make any of the unaware a little more aware.

Call me cynical, but at this point, I judge that a scrupulously polished and totally non-controversial review with the POV expressed by Willis E (and many at CA) would not pass acknowledgment muster with the publishing powers that be. The aim should be to present information that can be recollected in a future and less controversial time and in such a way that an immediate dismissal cannot be rationalized and a thorough reading is encouraged. I think Willis E has accomplished that, but I say that as a civilian and not an in–goal defender of the Hockey Team, be it the NA or EU version.

Jean S, thanks greatly for your substantive comment. I am much more interested in the substance of the review rather than the form, style or layout of the paper, although both are important.

You say:

Yes. For the CVM method to work, the noise needs to be spatially and temporally white. If it is not, then there is no guarantee that the noise terms will cancel in the CVM averaging process. It also strikes me that for the method to work, in addition to the autocorrelation question you raise above, the variance of the noise needs to be constant over time. It seems like we should be able to determine if that is the case for the selected proxies, although I’m not sure exactly how. Comments?
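On the "not sure exactly how" part, two crude diagnostics one could run on a residual series are the lag-1 autocorrelation (near zero for temporally white noise) and the ratio of the variance in the second half of the record to the first half (near one if the variance is constant). The sketch below applies them to synthetic series only; in practice the residuals would have to come from a proxy minus its fitted temperature signal, and these are informal checks, not formal hypothesis tests.

```python
import random


def lag1_autocorr(x):
    """Sample lag-1 autocorrelation of a series."""
    n = len(x)
    m = sum(x) / n
    num = sum((x[t] - m) * (x[t - 1] - m) for t in range(1, n))
    den = sum((v - m) ** 2 for v in x)
    return num / den


def variance(x):
    """Unbiased sample variance."""
    m = sum(x) / len(x)
    return sum((v - m) ** 2 for v in x) / (len(x) - 1)


def half_variance_ratio(x):
    """Variance of the second half of the series divided by that of the first half."""
    half = len(x) // 2
    return variance(x[half:]) / variance(x[:half])


rng = random.Random(3)
n = 500

# Three synthetic "residual" series (all hypothetical test cases):
white = [rng.gauss(0.0, 1.0) for _ in range(n)]                    # white, constant variance
red = [rng.gauss(0.0, 1.0)]
for _ in range(n - 1):
    red.append(0.8 * red[-1] + rng.gauss(0.0, 1.0))                # AR(1), autocorrelated
growing = [rng.gauss(0.0, 1.0 + 2.0 * t / n) for t in range(n)]    # variance grows with time

for name, series in [("white", white), ("red", red), ("growing", growing)]:
    print(f"{name:8s} lag-1 r = {lag1_autocorr(series):+.2f}, "
          f"variance ratio = {half_variance_ratio(series):.2f}")
```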

Yes. They base their optimality claim on a comparison between INV and CVM results, not on theory.

Upon reflection, it seems to me that neither [equation missing], nor [equation missing], is actually the case. Chuine quotes Pisek’s photosynthetic activity curve as being of the form:

which is not captured in either model

Therefore, it seems to me that the actual model should be something on the order of:

or

or somesuch.

Your comments on this greatly appreciated.

Excellent. This is a most clear and cogent analysis of the issue with the flipping of proxies. CVM requires that there be a positive correlation in order to work. I will revise that section of the review.

Good news. There are a number of people who contribute here whose work I always look forward to reading, and you are definitely on that list.

w.

Aren’t all these “T”‘s in your equation “Growing Season Daytime T” (Tgsd)? I think that adds a further complication to the equation.

Worth noting that my multiplicative growth model was, by design, a first-order statistical model. Not because growth is a first order process, but because you have to keep the number of terms low in a statistical model if you have limited degrees of freedom available for estimating the various effects. Fact is rarely do the higher-order quadratic and cubic terms add much to a growth model’s significance. That’s what parsimony buys you.

Hi Willis

I guess what I mean here is that it’s important to emphasise the substantive parts (ghost of TCO here). I’ve always felt that Steve M’s work was muddied somewhat in earlier years by the variety of statistical horrors he uncovered in MBH. I would put most effort into the really important parts, relating them to Juckes’ conclusions.

I think maybe just a sentence describing how the MBH reconstruction fails R2, as reported by W&A, which is not discussed in the Juckes et al review, would do. I don’t think what Mann might have done is relevant to the Juckes paper.

I can’t find the example I was thinking of; it may have disappeared between the first and second version.

I agree with you, Willis, but for the CoP post I think it should be kept to an incorrect statement (and an incorrect citation, for that matter).

#88

If the noise cancels completely in the averaging process, then CVM and the classical calibration estimator become equal, because then [equation missing]. But if any noise is left, it will make CVM underestimate the temperature variations. I’ve been trying to understand where that square root term in line 13 in A2 comes from. And I think I’m almost there. See (1), they used small-disturbance asymptotic approximations to obtain the asymptotic bias of the classical calibration estimator. If Y is the temperature to be estimated, and the calibration data (x and y vectors) are zero mean, the bias can be expressed as:

Note that the bias approaches zero as the calibration data (size N) increases. The bias is relative to temperature, so some kind of scaling might help, rewriting the bias:

So we can try to get an unbiased estimator by using

Now just forget some betas and N from the correction, and remember to take square root as well, and you have CVM!

I think we’d better leave this out from the report, just FYI 🙂 I’m not even completely serious. But anyone interested should read the reference:

Ref1: Srivastava and Singh (1989) Small-Disturbance Asymptotic Theory for Linear-Calibration Estimators. Technometrics, August 1989, Vol. 31, No. 3
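The first two claims in #88 (the two estimators coincide when the noise cancels, and CVM shrinks the temperature signal when it does not) can be checked numerically. This is a minimal sketch under my own assumptions: CVM is taken to mean "scale the composite so that its standard deviation matches temperature", the classical estimator regresses the composite on temperature and inverts, the composite is simply temperature plus white noise, and both estimators are calibrated and evaluated on the same sample. It does not attempt the bias formulas from Ref1.

```python
import math
import random


def mean(x):
    return sum(x) / len(x)


def sd(x):
    m = mean(x)
    return math.sqrt(sum((v - m) ** 2 for v in x) / (len(x) - 1))


def slope(y, x):
    """OLS slope of y regressed on x."""
    mx, my = mean(x), mean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)


def cvm_estimate(comp, temp):
    """Variance matching: rescale the composite to the standard deviation of temperature."""
    k = sd(temp) / sd(comp)
    mc, mt = mean(comp), mean(temp)
    return [mt + k * (c - mc) for c in comp]


def classical_estimate(comp, temp):
    """Classical calibration: regress composite on temperature, then invert."""
    beta = slope(comp, temp)
    mc, mt = mean(comp), mean(temp)
    return [mt + (c - mc) / beta for c in comp]


def signal_slopes(noise_sd, n=2000, seed=4):
    """Slope of each estimate on true temperature (1.0 means no shrinkage of the signal)."""
    rng = random.Random(seed)
    temp = [rng.gauss(0.0, 1.0) for _ in range(n)]
    comp = [t + rng.gauss(0.0, noise_sd) for t in temp]  # composite = signal + leftover noise
    return (slope(cvm_estimate(comp, temp), temp),
            slope(classical_estimate(comp, temp), temp))


for noise_sd in (0.0, 1.0):
    cvm, classical = signal_slopes(noise_sd)
    print(f"noise sd {noise_sd}: CVM slope = {cvm:.3f}, classical slope = {classical:.3f}")
```

In this in-sample setup the CVM slope is just the sample correlation between composite and temperature, so any leftover noise pulls it below one, while the classical slope is one by construction (at the price of a noisier estimate).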

Willis, when is this review due?

Re: #93

“Open Discussion” on the website lasts until Dec 21st. I hope Willis will keep his compilation open through this week (thanks for your major efforts, Willis!).

I think there is one extra N in my equations. But no worries, when the authors respond to the review, we’ll get detailed derivation of CVM (or a reference to a paper that includes it). Or they find out that there is an error in their derivation, and the paper will be corrected accordingly or withdrawn. That’s how science works.

Steve M., I’ve been shooting to complete it by the 15th. Keep them cards and letters coming …

w.

For paragraph 78,

The paper assumes that the growth function G() is linear in average T. In fact the growth function is a complex nonlinear function (apologies for no LaTeX – I’m a MathML guy).

G() = integral over growing season of g(T, C, M) dt

Where G() is the annual ring growth, g() is the growth function and T, C, M are instantaneous values.
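To see why a nonlinear g() breaks the "linear in average T" assumption, here is a small numerical sketch. The response curve is purely hypothetical (an inverted parabola peaking at 20 degrees, zero beyond 15 degrees either side), C and M are held fixed and dropped, and the integral is approximated by a daily sum over a 100-day season.

```python
def growth_rate(temp_c, t_opt=20.0, width=15.0):
    """Hypothetical inverted-U response: peaks at t_opt, zero beyond t_opt +/- width."""
    return max(0.0, 1.0 - ((temp_c - t_opt) / width) ** 2)


def annual_growth(daily_temps, dt_days=1.0):
    """Riemann-sum approximation of the integral of g(T) dt over the growing season."""
    return sum(growth_rate(t) for t in daily_temps) * dt_days


def mean(x):
    return sum(x) / len(x)


# Three made-up 100-day growing seasons (degrees C):
steady = [20.0] * 100          # constant, at the optimum
swinging = [10.0, 30.0] * 50   # same mean as `steady`, large day-to-day swings
warm = [26.0] * 100            # constant, warmer than the optimum

for name, season in [("steady", steady), ("swinging", swinging), ("warm", warm)]:
    print(f"{name:9s} mean T = {mean(season):5.1f}  G = {annual_growth(season):6.1f}")
```

Two seasons with the same average T give different G, and a warmer season can give less growth than a cooler one, so with a response of this shape annual growth cannot be a linear function of average T alone.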