Relatively up-to-date radiosonde data is available from the Hadley Center; the tropical (20N-20S) series is here. RATPAC and Angell are not up to date. The tropical troposphere has been a source of disputes recently, but I haven't seen any discussion of up-to-date radiosonde data. (Note: Luboš has a current discussion on radiosondes.)

You will recall the diagram illustrating a hot spot around 200 hPa in the tropical troposphere. Here's a diagram from realclimate, the first figure in their post entitled “Tropical Troposphere Trends”.

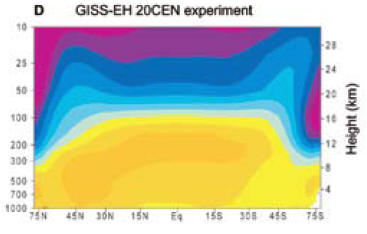

Figure 1. Graphic from realclimate said to show the effect of doubled CO2 using GISS Model E.

Here’s a simple plot of tropical 200 hPa radiosonde data to March 2008 from Hadley Center.

Only one month in the entire history of the radiosonde record since its commencement in January 1958 had 200 hPa and 150 hPa anomalies below -1.2 deg C. It was March 2008. The trend since January 1979, the start of satellite records, is -0.025 deg C/decade. Yeah, I know that it's just one month, but it's still a “record”. It would be interesting to calculate the odds of a negative record on the hypothesis of (say) a positive trend of 0.1 deg C/decade. (Note that these data sets are highly autocorrelated: in an ARMA(1,1) model both coefficients are significant, with an AR1 coefficient of over 0.9, something that reduces the “significance” of any trend quite noticeably.)
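One way to put rough numbers on the last two points is sketched below. It is a minimal Python/NumPy sketch, not the calculation behind the figures in this post. It swaps in a simpler AR(1) effective-sample-size correction for trend significance (an approximation to the ARMA(1,1) treatment mentioned above), and it estimates by Monte Carlo how often a series with a built-in positive trend plus ARMA(1,1) noise would set its record low in its final month. The function names are hypothetical, and the ARMA parameters in the usage lines are placeholders, not values fitted to the HadAT data.

```python
import numpy as np

MONTHS_PER_DECADE = 120.0


def ols_trend_per_decade(y):
    """OLS slope of a monthly series, converted to units per decade."""
    t = np.arange(len(y), dtype=float)
    slope, _intercept = np.polyfit(t, y, 1)
    return slope * MONTHS_PER_DECADE


def ar1_adjusted_t(y):
    """Trend t-statistic with an AR(1) effective-sample-size correction.

    n_eff = n * (1 - r1) / (1 + r1), where r1 is the lag-1 autocorrelation
    of the regression residuals. Returns (t_stat, r1, n_eff).
    """
    y = np.asarray(y, dtype=float)
    n = len(y)
    t = np.arange(n, dtype=float)
    slope, intercept = np.polyfit(t, y, 1)
    resid = y - (intercept + slope * t)
    r1 = np.corrcoef(resid[:-1], resid[1:])[0, 1]
    n_eff = n * (1.0 - r1) / (1.0 + r1)
    if n_eff <= 2:
        raise ValueError("too few effective degrees of freedom")
    s2 = np.sum(resid ** 2) / (n_eff - 2.0)  # residual variance with n_eff - 2 dof
    se = np.sqrt(s2 / np.sum((t - t.mean()) ** 2))
    return slope / se, r1, n_eff


def simulate_arma11(n, phi, theta, sigma, rng, burn_in=200):
    """x[i] = phi * x[i-1] + e[i] + theta * e[i-1], with Gaussian e."""
    e = rng.normal(0.0, sigma, n + burn_in)
    x = np.zeros(n + burn_in)
    for i in range(1, n + burn_in):
        x[i] = phi * x[i - 1] + e[i] + theta * e[i - 1]
    return x[burn_in:]  # discard the burn-in so the start-up value does not matter


def prob_last_month_is_record_low(n, trend_per_decade, phi, theta, sigma,
                                  n_sims=2000, seed=0):
    """Monte Carlo share of simulated series whose minimum falls in the final month."""
    rng = np.random.default_rng(seed)
    ramp = trend_per_decade * np.arange(n) / MONTHS_PER_DECADE
    hits = 0
    for _ in range(n_sims):
        y = ramp + simulate_arma11(n, phi, theta, sigma, rng)
        hits += int(np.argmin(y) == n - 1)
    return hits / n_sims


if __name__ == "__main__":
    # 603 months = January 1958 to March 2008. phi, theta and sigma are
    # hypothetical placeholders, not fitted to the radiosonde series.
    p = prob_last_month_is_record_low(603, 0.1, phi=0.9, theta=-0.3, sigma=0.2, n_sims=500)
    print(f"share of simulations ending in a record low: {p:.3f}")
```

With a fitted ARMA(1,1) model, the same loop would answer the question in the text more directly; the AR(1) correction is the simpler, commonly used alternative.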

Note: As observed below, the GISS graphic shows the effect of doubled CO2, while the increase in CO2 levels from 1960 to date has been 20%, and about 15% since the start of satellite records in 1979. On the basis of a logarithmic impact, the first 20% increase accounts for about 26% of the doubled-CO2 impact, and the 15% increase since 1979 about 20%. So it should be noticeable in either data set. In other posts on radiosonde data, I've observed that there are many issues with inhomogeneity in radiosonde data.
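The logarithmic arithmetic can be checked in a few lines. This sketch assumes, as the note does, that the effect scales with ln(C/C0); it ignores any lag between forcing and response. The function name is illustrative.

```python
import math


def fraction_of_doubling(ratio):
    """Share of the doubled-CO2 effect for a concentration ratio C/C0,
    assuming the effect scales with ln(C/C0)."""
    return math.log(ratio) / math.log(2.0)


if __name__ == "__main__":
    for label, ratio in [("1960 to date (+20%)", 1.20), ("1979 to date (+15%)", 1.15)]:
        print(f"{label}: {fraction_of_doubling(ratio):.0%} of the doubled-CO2 effect")
    # prints 26% for the 1960 case and 20% for the 1979 case
```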

More: CCSP 1-1 and HadAT Radiosonde Data

The U.S. Climate Change Science Assessment Report 1-1, a report to which Douglass et al 2007 were in part responding, contained graphics illustrating both HadAT radiosonde data trends from 1979-99 and GISS projections over the same period, so it's interesting to compare their results to the updated information here.

First, here is a graphic from the CCSP report, which, inter alia, shows their calculations of HadAT radiosonde trends for 1979-99, followed by my calculation of the same trends for 1979-March 2008. It's interesting that the HadAT pattern hasn't really changed that much even with the incorporation of 10 more years.
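Recomputing a trend profile over two windows, as done here, amounts to fitting a line at each pressure level for each period. A minimal sketch follows, assuming monthly anomalies per level stored in a dict keyed by pressure, with the series starting in January 1958; the names and the synthetic data in the usage lines are hypothetical, not the HadAT files themselves.

```python
import numpy as np

START_YEAR = 1958  # assumed start of the monthly series (January 1958)


def month_index(year, month):
    """0-based position of (year, month) in a monthly series starting January START_YEAR."""
    return (year - START_YEAR) * 12 + (month - 1)


def trend_profile(anoms_by_level, start, end):
    """OLS trend in deg C/decade at each pressure level over months [start, end).

    Missing months (NaN) are skipped rather than filled.
    """
    profile = {}
    for level, series in anoms_by_level.items():
        y = np.asarray(series[start:end], dtype=float)
        t = np.arange(len(y), dtype=float)
        ok = np.isfinite(y)
        slope, _ = np.polyfit(t[ok], y[ok], 1)
        profile[level] = slope * 120.0  # per month -> per decade
    return profile


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = month_index(2008, 3) + 1  # January 1958 through March 2008
    # Synthetic stand-in data: warming at 850 hPa, cooling at 100 hPa (illustrative only).
    fake = {850: 0.001 * np.arange(n) + rng.normal(0, 0.2, n),
            100: -0.003 * np.arange(n) + rng.normal(0, 0.4, n)}
    for label, end in [("1979-1999", month_index(2000, 1)), ("1979-Mar 2008", n)]:
        print(label, trend_profile(fake, month_index(1979, 1), end))
```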

Top – Original caption: Figure 5.1: Vertical profiles of global-mean atmospheric temperature change over 1979 to 1999. Surface temperature changes are also shown. Results are from two different radiosonde data sets (HadAT2 and RATPAC; see Chapter 3) and from single forcing and combined forcing experiments performed with the Parallel Climate Model (PCM; Washington et al., 2000). PCM results for each forcing experiment are averages over four different realizations of that experiment. All trends were calculated with monthly mean anomaly data. Bottom: HadAT trends.

The CCSP report stated:

The pattern of temperature change estimated from HadAT2 radiosonde data is broadly similar, although the transition height between stratospheric cooling and tropospheric warming is noticeably lower than in the model simulations (Figure 5.7E). Another noticeable difference is that the HadAT2 data show a relative lack of warming in the tropical troposphere, where all four models simulate maximum warming. This particular aspect of the observed temperature-change pattern is very sensitive to data adjustments (Sherwood et al., 2005; Randel and Wu, 2006).

Below are their illustrations of GISS model projections 1979-99 compared to HadAT actuals. I'm not in a position to comment authoritatively on these graphics, but here are a couple of points that I find interesting. As I understand it, the top of the tropical tropopause is ∼18.7 km (70 mb). The GISS model shows warming right up to ~16 km (100 mb), both in the doubled and 20th century graphics, with cooling in the “blue” color code up to 25 km (25 mb). HadAT radiosonde data shows warming up to only about 12 km (250 mb), “blue” cooling from 12 km (250 mb) to 16 km (100 mb) and “purple” cooling from about 16 km (100 mb) to 25 km (25 mb) and higher.

The actual locus where additional CO2 has an immediate impact is at altitude, as more CO2 causes radiation to space to occur at a higher and colder altitude according to the Houghton heuristic cartoon. Getting the sign wrong in the 12-16 km band is definitely a bit inconvenient and is not mere nit-picking, and you can see why they are looking so hard at the observations to see if there's some important inhomogeneity.

Karl, T. R., Susan J. Hassol, Christopher D. Miller, and William L. Murray. 2006. Temperature Trends in the Lower Atmosphere: Steps for Understanding and Reconciling Differences. Synthesis and Assessment Product. Climate Change Science Program and the Subcommittee on Global Change Research. http://www.climatescience.gov/Library/sap/sap1-1/finalreport/sap1-1-final-all.pdf.

152 Comments

Typo in the second paragraph: “hop spot”

Actually, that was not a typo. The Modelers KNOW it is there, yet, every time someone looks for it, it has HOPPED somewhere else!!

I blame Lubos and his wormholes!!

Lemme see, the ground temp from -30 to 30 ranges from 1-2.2c. The hop spot is 3-14.6c. This data adjustment, and its rationale, will be a story for the grand kids!!

Steve this negative trend only appears in obsolete data sets and is very misleading. You should be using RAOBCORE 3.7 at the very least if you want to be taken seriously.

jeez, you claim Steve is using “obsolete data sets” – what part of “to March 2008” do you not understand?

Carl, I think jeez has omitted his /irony tag. I think RAOBCORE is only up to version 1.4, although it looks like they may need a new version with some additional adjustments to the data.

The Real Climate link claims that rising temps in the tropical troposphere are not a signature of GHG-based warming, but of warming from any cause. Obviously from the charts in Steve M's posting, this tropical trop warming is not happening. But is the underlying point true? Is it true that tropical trop warming will happen if it warms, from any cause including GHGs? This was the point of McKitrick's T3 tax proposal, was it not?

[irony]29.25 years of data isn’t climate. It’s simply weather noise. It takes 30 years data to be considered climate[/irony]

Better Carl?

I am beginning to be wary of commenting on these web pages as I just keep on exposing my naivety, but reading realclimate's explanation of this data I am reminded of econometric explanations of inconsistencies between observed and model predictions of economic events. I soon came to the unacademic opinion of such explanations that “…these people are making it up as they go along.” I concluded that the reason for the inconsistencies was because there were genuine inconsistencies and hence there were real problems with the models.

In short, and ignoring high-level statistical manipulation of the data, is it correct that there are inconsistencies between the radiosonde measurements and model predictions? Because if it is, and CA is correct in stating: “…The trend since January 1979, the start of satellite records, is -0.025 deg C/decade,” then realclimate must be making it up as they go along.

Yes … I feel much better now … was just having a senior moment 🙂

The models are not wrong, there is simply no warming in the tropics at the surface:

http://junkscience.com/MSU_Temps/UAHMSUTrop.html

Martin J asked:

RealClimate’s defense against this is to point out that the models give a very wide range of predictions, including some models that predict trivial-to-no warming in the troposphere. Thus, since the range of model predictions overlaps the radiosonde data, they conclude there is no discrepancy between the two. See here for RealClimate’s response.

I took the Hadley Centre radiosonde data 1958 to 2008 as referenced, and sourced a monthly Nino 3.4 dataset.

There is a clear relationship between “200hPa T anomaly” and “Nino 3.4”, especially if a lag of three months is incorporated in “200hPa T anomaly”. (I tested one- to five-month lags.)

with no lag – correlation 0.56

with 3 month lag – correlation 0.71

Applying a 5 month moving average to both elements:

with no lag – correlation 0.63

with 3 month lag – correlation 0.80
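The lag and smoothing tests described above are straightforward to reproduce. This minimal NumPy sketch assumes both series are aligned monthly arrays with no gaps; the function names are hypothetical, and the usage lines use synthetic data rather than the HadAT and Nino 3.4 series, so they will not reproduce the correlations quoted here. Note that smoothing raises the serial correlation of both series, so a higher r after a 5-month moving average is not by itself stronger evidence.

```python
import numpy as np


def running_mean(x, window):
    """Running mean over `window` months (mode='valid', so the output is window - 1 shorter)."""
    return np.convolve(np.asarray(x, dtype=float), np.ones(window) / window, mode="valid")


def lagged_corr(driver, response, lag):
    """Pearson r between driver at month t and response at month t + lag (lag >= 0)."""
    driver = np.asarray(driver, dtype=float)
    response = np.asarray(response, dtype=float)
    if lag > 0:
        driver, response = driver[:-lag], response[lag:]
    return np.corrcoef(driver, response)[0, 1]


def best_lag(driver, response, lags=range(0, 6), window=None):
    """Lag with the highest correlation; optionally smooth both series identically first."""
    if window is not None:
        driver, response = running_mean(driver, window), running_mean(response, window)
    scores = {lag: lagged_corr(driver, response, lag) for lag in lags}
    return max(scores, key=scores.get), scores


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    nino = rng.normal(0, 1, 600)                     # synthetic stand-in for monthly Nino 3.4
    t200 = np.roll(nino, 3) + rng.normal(0, 1, 600)  # stand-in response lagging by 3 months
    # (np.roll wraps the first 3 values around; harmless for this illustration)
    for window in (None, 5):
        lag, scores = best_lag(nino, t200, window=window)
        print(window, lag, {k: round(v, 2) for k, v in scores.items()})
```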

A strong dip into negative territory is therefore not unexpected (assuming we have appropriately identified causation) following a significant La Nina.

Interestingly “year” also correlates positively – correlation 0.33

Combining “year” and nino3.4 as predictors in a MLR “explains” about 70% of the variance of the “200hPa T anomaly”. At 95% confidence levels, there is a 0.3c to 0.4c increase in T at 200 hPa for the 50 years 1958 to 2008, based on the “year” coefficient.
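A regression of this kind can be sketched with NumPy's least squares. The names are hypothetical and the usage data are synthetic. The confidence interval uses the plain OLS standard error; given the strong autocorrelation noted in the post, the true interval is likely wider than this sketch reports.

```python
import numpy as np


def mlr_year_nino(t200, nino34, years):
    """OLS fit of t200 = b0 + b1 * (year - mean year) + b2 * nino34.

    Returns (coefficients, r_squared, standard_error_of_b1). The standard error
    assumes independent residuals, which monthly radiosonde data are not.
    """
    t200 = np.asarray(t200, dtype=float)
    years = np.asarray(years, dtype=float)
    # Centring the year keeps the design matrix well conditioned; b1 is unchanged.
    X = np.column_stack([np.ones(len(years)), years - years.mean(),
                         np.asarray(nino34, dtype=float)])
    beta, *_ = np.linalg.lstsq(X, t200, rcond=None)
    resid = t200 - X @ beta
    ss_res = float(resid @ resid)
    ss_tot = float(np.sum((t200 - t200.mean()) ** 2))
    n, k = X.shape
    cov = (ss_res / (n - k)) * np.linalg.inv(X.T @ X)
    return beta, 1.0 - ss_res / ss_tot, float(np.sqrt(cov[1, 1]))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    years = 1958 + np.arange(600) / 12.0   # decimal years, monthly
    nino = rng.normal(0, 1, 600)           # synthetic stand-in for Nino 3.4
    t200 = 0.007 * (years - 1958) + 0.3 * nino + rng.normal(0, 0.3, 600)
    beta, r2, se = mlr_year_nino(t200, nino, years)
    span = 50.0  # years
    lo, hi = (beta[1] - 1.96 * se) * span, (beta[1] + 1.96 * se) * span
    print(f"R^2 = {r2:.2f}; 50-year change {beta[1] * span:.2f} C (95% CI {lo:.2f} to {hi:.2f})")
```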

“If the pictures are very similar despite the different forcings that implies that the pattern really has nothing to do with greenhouse gas changes, but is a more fundamental response to warming (however caused).”

What if the observed warming is caused by land use changes and data adjustments? What do the models say about that type of “warming”?

It is astonishing to see science in the face of totally contradictory information being completely mum about the whole thing. It is truly an “emperor has no clothes” moment. Anybody with half a brain has got to be stunned. It seems like science is like the deer caught in the headlights, or maybe the dead person twitching is a better analogy. While the arms keep moving (the press) seeming to act as if the theory is alive, the brain is dead. There is no possibility of life and yet the nerves keep firing autonomously, and people might think the person is still alive (the press and the general public), but the doctor looking at the brain scans realizes it's over.

RealClimate's answer to this “miss” is that the error bars are too small, and that 50 years of data showing no trend is not long enough, nor are our instruments accurate enough, to see the “warming” that has to be occurring. The other explanation is that winds appear to be picking up in this part of the atmosphere and that this hides the warming that is there. Personally I find both explanations “grasping at straws”, sort of like the dead person analogy above when the relative says: “I saw him open his eyes.” He's alive. He's alive. I'm sorry to have to relate this news. No. It's dead. The last theory that realclimate has proposed for this problem is that some of the AGW theories don't have to have warming in this part. It's not any of the models that the IPCC used or that are constantly run at NASA and other places, but there are theories of AGW that can live without this “hot spot”. It all seems so much like grasping at straws.

It is so funny to me that as the science has collapsed the politicians are being asked to make ever more religious statements about CO2 and the “warming.” They are asked constantly now: “Do you believe in global warming?” and, as if they need to say it or appear to not have “religion” or to be blasphemous, they need to say “Yes. I do with all my heart believe in this global warming. I do. I do.” It's become a religious invocation at this point. A requirement to be a decent human being to say that it is warming. Essentially we are being told there is heat in the system being generated by CO2 but we can't show you where it is. It's hidden. Is this like Jesus hiding from us too? He is in “heaven”. Where is the CO2 heat? Is it in “eco-heaven”, and will it come to us on “eco judgement day”?

I feel I am watching a long slow video of a car accident and being unable to take my eyes off the collision and subsequent damage.

If you look at these charts above you see that 1998 was indeed an anomalous year. The El Nino that year was clearly a monstrous event, a 100-year El Nino. It created the backdrop that allowed this global warming hysteria to take hold. Without that event it would have been much harder to argue that the globe was warming. It made the hockey stick come to life. There is still the indisputable warming at the North Pole, but several very good scientific reports have shown that this has been because of a combination of two factors: 1) the NAO (North Atlantic Oscillation), which shifts energy between the equator and the North Pole in the Atlantic over a 40-year period, and 2) increasing soot in the snow at the North Pole from industrialization and “aerosols”, which reduces the snow's albedo so that it absorbs more solar radiation.

#14. I wouldn’t make triumphalist claims like this for a variety of reasons. It’s pretty hard to detect a 0.1 deg C/decade trend from noisy and autocorrelated data like this even when it’s there. Also, it’s hard to put a whole lot of faith in the radiosonde time series as there are many issues with homogeneity. And the likelihood is that there will be a fairly rapid recovery from this particular downspike.

I intentionally refrained from drawing any moral from this time series. Probably all that one can say is that, had it gone the other way, we would have heard about it endlessly.

Also in terms of stock market analogies, MBH98-99 was bought virtually at the top of the temperature bull market in 1998.

Can someone tell me if any of the AGW predictions have been found correct? To me it seems that they haven't, but I don't have a thorough enough understanding of the subject. I read realclimate when such things happen and they seem to say, “Yes, just what we thought,” or “It fits the theory” when there are no predictions, or “The data is wrong.” I say this because, as a sceptic from the very beginning, I see us going down a black hole of public spending and taxes, and none of us seem to be able to roll back the tide of political belief that global warming is man-made.

Gerry Morrow

Steve: Serious people believe that it is an issue. There's a lot of promotion and hype, but that doesn't mean that, underneath it all, there isn't a problem. No one's shown that it's not an issue. The hardest part for someone trying to understand the issue from first principles is locating a clear A-to-B exposition of how doubled CO2 produces a problem, and I'm afraid that no one's been able to give such a reference to me; the excuse is that such an exposition is too “routine” for climate scientists. That's the first attitude that has to change.

Martin,

There are several types of modeling. One type is an engineering model that needs to be predictively accurate. Another type is a science model that uses theory to explain what we see. As much as theorists are trying to come up with “the” perfect theory, and as experimenters are trying to make precise measurements, we are actually highly suspicious of ‘perfection’ from both. Like Newton's laws of gravity, a really, really good theory will explain most of what we see. But even the smallest of aberrations can lead to even better theories, such as Einstein's. As scientists vie to be the one who understands the world best, our initial theories often only get us in the right ball park. Real science is supposed to have an intense competition of theories whose survivors provide the best understanding and predictive power of the world around us. Science without this extremely skeptical competition goes nowhere. And sometimes, good predictive theories prove dead wrong when we become capable of exploring and measuring even better.

There are also a wide range of fields, some of which more easily lend themselves to this form of investigation. These are often called the “hard” sciences. Human behaviour hasn’t been one of them so far. Quite often, knowledge of economic theory will actually change behaviour in a way that invalidates the theory. Or the rules get changed in the middle of the game. Or happenstance messes things up.

There are also fields, even in “hard” science, that are so complex that, even with detailed knowledge and deep understanding, we just aren't able to hand off to the engineers predictively useful models that enable technological advances. We actually made successful fission reactors before we made fission bombs, but though we quickly were able to follow up with fusion bombs, half a century after the H-bomb we still don't have fusion reactors. And the Sun makes it look so easy.

Climate science is an amalgam of many different branches of the “hard” sciences, quite a few of which are as difficult as MagnetoHydroDynamics has been for the fusion scientists. This makes it an odd mixture of being a new science where scientists should be competing fiercely for the basic understanding, yet being constrained to use the already existing, extremely complex understandings of the older, better understood sciences it has encompassed. Look just at weather. It is so complex that it needs the best supercomputers to give us basic predictive powers. And yet, though they say they have power to predict 10 days ahead, how often do they get it right 3 days in advance, or even that morning?

But some scientists have also discovered that they can raise money by scaring people. And that they can use those scares to influence the results of politics. Both of these undertakings require that they not seem wrong. There is nothing like having egg on one's face in the world of politics. Those scientists become activists, as trustable in their science as [snip – phrase strictly forbidden here]. Note, I don't say right or wrong, I just say biased and untrustable.

The remaining scientists, all of whom would rather be competing in the fray of ideas, find themselves pressured and trapped into conformity. The very tools they have built to protect themselves from bias, and to weed out the biased and untrustable, have been turned against anyone who dares contradict. Worse, they were fooled into going along at the beginning. “I want to be trusted as an expert in my field, so I will trust others as expert in theirs. But that guy just absorbed my field and many others. He claims a broader expertise. I can't know if he is really using my field's theories right. If I speak up, I can be made to look foolish by his referring to someone else's field. Safer to be quiet and go along.” But going along and being quiet made the activists look right and gave them power.

Getting back to economics, Steve comes from a field that directly influences the value of investments. Snake oil salesmen existed long before. And the guy who lost money on a stupid mistake thinks he has been tricked. Good Ole Boy systems of trust and review have proven too weak to catch stupid mistakes, let alone weed out the real snake oil salesmen. So higher standards of proof are required. This protects the prover from jail, as well as protecting the investor from fraud. And when Steve looked into this field, he found gross failures that would never make it through court in his field. And when he pointed this out, he was attacked through those same mechanisms that are being used to cow critics, whether or not they are scientific. Long ago, I tried to defend those mechanisms of scientific peer review, and Steve blistered me in response. I have since come to see that he was right in doing so.

The theory could still have value. But it has been misused. We have seen nature come out of a little ice age without help from man's fires. We have also seen Multi-Decadal Oscillations, and a very strong recent El Nino / La Nina peak. On the upswing of these events, the activists denied the existence of natural variability. And now that we are seeing downsides, they grab onto natural variability to claim things are worse than they seem. A real scientist would try to separate out the smooth underlying warming that is supposed to be happening from the natural variability. That makes it difficult to see. If he is successful, he advances our understanding so that it explains more of what we see. If not, he needs to go back to the drawing board. But it may also be that the data is not good enough, or has not been good for long enough, to be able to make any conclusions at all. In which case, the data takers need to go back to the drawing board, and the rest of us need to be more patient.

But the activists can’t let any of that happen. We must act on their politics right away. Skeptics are put down as deniers. The data is sacrosanct, and every correction makes things look worse. Thus true science is killed.

I usually prefer it when government does not meddle, but we need our governments to say that they will not give money to scientists that will not follow procedures that can stand up in court. And, that they will refuse to take advice unless proper procedures have been followed, data has been made available for open review, and that it has been successfully defended through a period of tough scientific scrutiny.

Steve

I make the following comments on the radiosonde data.

1. The diagram from realclimate showing the “hot spot” in the tropics is obviously for one of the models (GISS).

2. I have looked at the up-to-date radiosonde tropical data from the Hadley Centre, which is updated about every 3 to 6 months, and have computed the temperature trends for 1979-2004. The changes appear to be small relative to the trend values that we published [Douglass et al. 2007], as the table below shows. In particular, the trend at 200 hPa for 1979-2004 is -0.045 deg/decade, whereas from the older data set it was -0.032 deg/decade. Still no evidence for the “hot spot” in the updated data.

Trends (m°C/decade, i.e. thousandths of a degree C per decade), 1979-2004, from HadAT2 tropical (20N-20S) radiosonde data, as published in Douglass et al. (2007) and from up-to-date data [June 8, 2008]:

| Pressure (hPa) | Douglass et al. (2007) | Updated data |
|---|---|---|
| 850 | 61 | 56 |
| 700 | 28 | 17 |
| 500 | 14 | 5 |
| 300 | 75 | 66 |
| 200 | -32 | -45 |
| 150 | -147 | -169 |
| 100 | -431 | -439 |
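A quick check of the "changes are small" statement: converting the two columns of the table above to deg C/decade and differencing them shows every level moving down slightly, by at most 0.022 deg C/decade (at 150 hPa). The dictionary and function names are illustrative only.

```python
# Transcribed from the table above: trends in m°C/decade, 1979-2004, HadAT2 tropical (20N-20S).
DOUGLASS_2007 = {850: 61, 700: 28, 500: 14, 300: 75, 200: -32, 150: -147, 100: -431}
UPDATED_2008 = {850: 56, 700: 17, 500: 5, 300: 66, 200: -45, 150: -169, 100: -439}


def trend_changes(old, new):
    """Per-level change (new - old), converted from m°C/decade to deg C/decade."""
    return {p: (new[p] - old[p]) / 1000.0 for p in old}


if __name__ == "__main__":
    for p, d in trend_changes(DOUGLASS_2007, UPDATED_2008).items():
        print(f"{p:>4} hPa: {UPDATED_2008[p] / 1000:+.3f} deg C/decade (change {d:+.3f})")
```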

3. RATPAC radiosondes? There has been no update of this data since we published our paper. Again, just as with the Hadley radiosondes, this data does not show a “hot spot”.

4. The Thorne paper in Nature Geoscience online (May 25, 2008) presents a new comparison of the radiosondes with the models.[Fig 1]

a. His plot of Hadley and RATPAC trends are very close to what Douglass et al. published. The conclusions in 2. and 3. above are unchanged.

b. Thorne shows new radiosonde trends from Haimberger [RICH and RAOBCORE 1.4]. We discussed the structural problems of these data in the addendum to our paper and explained why we did not use them [posted as comment #21 in the Leopold in the Sky with Diamonds thread].

c. The main point of Thorne's article is the new paper by Allen and Sherwood in the same Geoscience issue, in which they produce trend values from an analysis based upon wind data. We have commented on some of the problems in the previous Sherwood paper. In addition, Roger Pielke Sr. has some very strong comments on these two papers in his blog, Climate Science, of June 2, 2008, which I encourage you to read.

I finally figured out that the graphic Steve showed was the 100-year forecast for 2 x CO2, and compared it against the 50-year trend of a roughly 20% increase in CO2, so I'm not sure that's an entirely fair comparison.

What does a 25% increase look like – albeit on 100 year time scale?

Increases on the order of 0.8 to 1.2C (shocking scaling on this diagram) over 100 years compare reasonably well with 0.3 to 0.4C over 50 years.

However – I agree with Steve that there probably isn’t the level of quality in the data to actually prove anything here (especially with simple multiple linear regressions).

Darn it, I can't see that image

It is really not credible but the IPCC records are changing as they go.

The IPCC troposphere figures can be found in http://www.ipcc.ch/pdf/assessment-report/ar4/wg1/ar4-wg1-chapter9.pdf, figure 9.1.

When I first copied them to a web page I have (in Greek), Figure 9.1C was called the “CO2 signature”, but that was from a wiki page reference, so about a month or two ago I went and copied the real thing from the IPCC site. It then stated next to figure C, where it drew the eye: Greenhouse including CO2, with an arrow showing the hot spot and a CO2 label on the figure. Today I chased the original link to put it up here, and the attention is taken away from plot C, though the caption still says: well-mixed greenhouse gases. CO2 seems to have become a dirty word (for how long?).

Really.

So, in the IPCC reports the hot spot appears only with the inclusion of CO2, though they are slowly hiding it. Creeping back corrections again.

The IPCC.ch site does not have a search that I can see, so it is extremely hard to chase a specific datum that one remembers from reading all those 800 pages of the physics report (which I have read; my hackles have not settled yet).

I am sure the models can be twiddled to give us no hot spot in the tropics, but I would like to see what the temperature predictions for the next hundred years will be in that case. I strongly suspect they will be normal.

Re: #16, Steve's comment

It is fairly routine to find about 1.25C of climate sensitivity, but no-one has been able to show more than that (including Phil, who is now rather quiet).

Anything more than that is arm-waving re a mythical positive feedback from water vapor. All there is beyond arm-waving is a questionable interpretation of Pinatubo's effect.

Steve: Pat, try not to go a bridge too far. Serious people believe that there is an important feedback from water vapor. That’s the $64 question and unfortunately you won’t be able to find a detailed A-to-B exposition of these effects in IPCC AR4. That doesn’t mean that they are “mythical”. I also don’t mean to suggest that there aren’t issues, but let’s not overstate things here either.

Re: #6

Fred, I have had this question after reading Gavin Schmidt’s exposition for the tropical atmospheric warming at RC comparing a 2XCO2 warming via GHG forcing and an equivalent amount of warming via solar forcing. Schmidt’s zonal and vertical color maps look much the same in both cases of forcing with the obvious exception of the stratosphere that shows significantly more cooling in the case of GHG forcing.

Looking at similar maps from AR4 for the current state of our climate, one could be led to judge that the effect of GHG forcing to date would give a signature of warming in the tropical troposphere that would be different from solar-forced warming. I have reviewed the general literature on this subject and not found any definitive explanations.

The general case is that, according to the climate models, warming in general will be enhanced in the tropical troposphere, and this is probably the region where temperature trends can be most accurately measured; I think this is what Ross McKitrick's general argument states. Nevertheless, we need Ross or someone to explain and source how the tropical troposphere is a good indicator for CO2/GHG forcing, since the McKitrick T3 tax was to be placed on CO2/GHG emissions.

Re the ‘hot spot’:

That image is for calculated radiated warming, and is not trend data. A similar hot spot shows up at 200 mb in the detailed IR calculations of Clough and Iacono (1995). I believe it is due to the fact that radiative transfer in the high-absorption part of the CO2 15 µm band is upward from below the tropopause and downward from above it, so there is an accumulation of radiated energy there.

Steve: I think that you’ve grabbed the wrong end of the stick. To my knowledge, the image shows trend data (or equivalently temperature change) and has nothing to do with Clough and Iacono 1995 – which is a very interesting article though.

Re: #12

Joshua, I am not clear what you mean by 200 hPa anomaly. If you are referencing the 200 hPa anomaly alone, that would not be telling in the present discussion, as it is the ratio of temperature trends in the troposphere to those at the surface that is being considered. I was under the impression that the tropospheric temperature fairly well followed the surface temperature “noise” on an annual basis.

I also am assuming you are referring to the tropical troposphere.
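The distinction drawn in this reply, between a single level's anomaly and the ratio of tropospheric to surface trends, can be made concrete. This minimal sketch (hypothetical names, synthetic data) computes two ratios: the ratio of long-term OLS trends, and a regression of detrended tropospheric anomalies on detrended surface anomalies, which measures how closely the troposphere tracks surface "noise" month to month. The 1.4 in the usage lines is arbitrary. The trend ratio becomes unstable when the surface trend is close to zero.

```python
import numpy as np


def ols_slope(y):
    """OLS slope of a series against its index."""
    t = np.arange(len(y), dtype=float)
    return np.polyfit(t, np.asarray(y, dtype=float), 1)[0]


def trend_ratio(tropo, surface):
    """Long-term amplification: tropospheric trend divided by surface trend."""
    return ols_slope(tropo) / ols_slope(surface)


def interannual_scaling(tropo, surface):
    """Short-term amplification: slope of detrended tropospheric anomalies
    regressed on detrended surface anomalies."""
    def detrend(y):
        y = np.asarray(y, dtype=float)
        t = np.arange(len(y), dtype=float)
        return y - np.polyval(np.polyfit(t, y, 1), t)

    s, a = detrend(surface), detrend(tropo)
    # Detrended residuals have zero mean, so a through-origin fit is the OLS slope.
    return float(s @ a / (s @ s))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    surface = 0.001 * np.arange(360) + rng.normal(0, 0.1, 360)  # synthetic surface anomalies
    tropo = 1.4 * surface + rng.normal(0, 0.05, 360)            # synthetic tropospheric anomalies
    print(round(trend_ratio(tropo, surface), 2), round(interannual_scaling(tropo, surface), 2))
```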

The satellite data shows some atmospheric warming at lower altitudes (northern hemisphere only for some reason) and some cooling in the high stratosphere (although volcanoes seem to be the trigger for changes in the base temperature levels in the stratosphere.)

These kinds of temperature changes are predicted in the climate models, so at least part of the climate model predictions seem to be holding up.

The real problem is only a few of the model predictions are showing up consistently in the observations. It seems to me the models need to be more consistent before we start building 100-year-out economies, planning to shut-down our power generating facilities and legislating large carbon cap and trade taxation systems.

So we just need a model that deals with the inhomogeneity and finds the warming.

Re#11, if there is a weather and/or climatic event, rest assured there is a model that demonstrates it is consistent with AGW.

My comments about the Sherwood-Allen story, with links to Pielke Sr etc.:

http://motls.blogspot.com/2008/06/sherwood-allen-and-radiosondes.html

Best wishes, Lubos

Steve,

In #21 reply, you mentioned the effects of water feedback as the $64 question. Do you know if they apply it to calculations involving the effects of changes in TSI?

As I understand it, the increased water comes indirectly as a result of an increase in heat, that causes yet more heat, which feeds back on itself a few times, and ends up supposedly quadrupling the total temperature increase. If true, that should apply to any increase in heat, even from an increase from our major heat source, the sun. Instead, I have the impression that they have ruled out the contributions from changes in TSI without including the same feedback mechanisms that they apply to CO2.
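The "feeds back on itself a few times" picture in this comment is a geometric series. A minimal sketch, assuming a single constant feedback fraction f that acts the same way on any initial warming: a quadrupling corresponds to f = 0.75, since 1/(1 - 0.75) = 4. The sketch only formalizes the premise of the question; it does not show how the models actually treat solar versus CO2 forcing, and the function name and dT0 value are hypothetical.

```python
def feedback_gain(f, rounds=None):
    """Total warming per unit of no-feedback warming for feedback fraction f.

    Each round of feedback adds f times the previous increment, so the total is
    the geometric series 1 + f + f**2 + ..., which converges to 1 / (1 - f).
    """
    if not 0.0 <= f < 1.0:
        raise ValueError("this simple sketch needs 0 <= f < 1")
    if rounds is None:
        return 1.0 / (1.0 - f)  # limit of infinitely many rounds
    return sum(f ** k for k in range(rounds + 1))


if __name__ == "__main__":
    dT0 = 1.0  # hypothetical no-feedback warming in deg C, from any heat source
    for rounds in (1, 2, 5, None):
        print(rounds, round(dT0 * feedback_gain(0.75, rounds), 3))
```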

Thanks in advance for checking on this.

Re: Michael Smith (#11) and James Bailey (#17): Thank you for your comments, especially James's thorough overview. The conclusion I draw from what you are saying is that the issue here is one of professional/academic standards, and that perhaps the standards one finds in some quarters of the climate debate are not those one would expect from a rigorous scientific discipline.

While Steve McIntyre's cautious attitude toward pronouncements is undoubtedly the correct path, in my humble opinion this really is starting to feel more like this:

#17. From the mining business, I am also well aware that snake oil salesmen are sometimes right. Murray Pezim had the Hemlo gold mine and Robert Friedland had Voisey's Bay. I bought stock in the penny mine that eventually became Hemlo very early on and sold way, way too early because I didn't trust the promoter. My mistake. I would have made a lot of money back when it went a lot further than today. So I'm always conscious that just proving that someone is a snake oil salesman is not the end of the story.

In a way, I’ve been interested in cases where you could have made better decisions with proper due diligence. At Bre-X, the promoters didn’t retain drill core at site – a universal practice – saying that they had some assaying method that required all the core. That should have scared the wits out of the mining analysts as there have been many no-see-um gold scams. Any mining analyst should have walked away from the project as soon as he couldn’t see the data.

Now some climate scientists seem to resist data archiving more out of orneriness than anything else. I would like to see that sort of attitude stamped out and for “good” climate scientists to take the lead in reading the riot act to their ornery cousins.

Part of the problem is that these folks have led such insulated lives that they don’t really understand how bad this looks to people who live lives in the trenches.

I’ve added a section to the head post, noting that the U.S. CCSP 1-1 showed both the HadAT trends and the GISS projections for 1979-99. I’ve excerpted these with a discussion.

I agree with #23.

Y-scale is pressure (atmosphere levels)

X-scale is latitude

Color code is the temperature plotted into the X/Y space.

There is no reference to time at all thus how can this be construed as trend data? Looks like a one-shot reference to a doubling of CO2 perhaps?

However, I get Steve’s point. If one removes the specific temps from the color code and leaves the colors to mean a general trend, then the hot spot area should be warming more than anywhere else.

re: Snake Oil

Even a broken clock is right twice a day…

23 Steve’s comment

According to RC:

It’s the calculated change in temperature with doubled CO2, but that is quite different from the observed trend over time.

#33, 35. The realclimate graphic is from their post entitled Tropical Troposphere Trends, a point that I added to the text for clarification. The temperature change for CO2 doubling by altitude has a direct linear relationship to the modeled temperature trend by altitude. It’s not “quite different”. The color coding conveys the same information, which is probably why Hansen’s original bulldog used the graphic in the first place. Pat, you’re splitting hairs here and there’s no hair to split.
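The linear relationship can be made concrete with the head post's own arithmetic: if forcing scales with the logarithm of CO2 concentration and the response scales linearly with forcing, a partial increase produces a fixed share of the doubled-CO2 pattern. A minimal sketch under those two assumptions; `delta_2x` is a hypothetical placeholder, not a value read from the GISS figure.

```python
import math

def fraction_of_doubling(c_now, c_ref):
    """Share of the doubled-CO2 response implied by a concentration ratio,
    assuming forcing is proportional to ln(C / C0)."""
    return math.log(c_now / c_ref) / math.log(2.0)

def scaled_response(delta_2x, c_now, c_ref):
    """Scale a doubled-CO2 temperature change linearly by the forcing share."""
    return delta_2x * fraction_of_doubling(c_now, c_ref)

# Increases quoted in the head post: about 20% since 1960, about 15% since 1979.
for label, ratio in [("since 1960", 1.20), ("since 1979", 1.15)]:
    print(f"{label}: {fraction_of_doubling(ratio, 1.0):.1%} of the 2xCO2 response")

delta_2x = 4.0  # hypothetical doubled-CO2 warming at some level, deg C (illustration only)
print(f"scaled change since 1979: {scaled_response(delta_2x, 1.15, 1.0):.2f} deg C")
```

The two shares come out at about 26.3% and 20.2%, which is where the head post's 26% and 20% come from.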

Here’s the Hovmoeller diagram of tropical 200mb temperature anomalies since January 2007:

Time is the y-axis while latitude is the x-axis. Time moves downward.

The end of the last La Nina is evident in the warm colors of early 2007.

The coolness of March 2008 is evident in the blue. April was somewhat warmer but we waddled back in a cool direction in May and June.

Isn’t the real story here that the stratosphere is cooling, as shown in the graphs? I believe that is a solid prediction of AGW and that most other explanations of the observed surface global warming aren’t compatible with this observation.

#39. Stratospheric cooling is definitely consistent with increased CO2. But the “real story” is also that there should be warming in the upper tropical troposphere, which supposedly gets passed down to the surface. So the lack of such warming in the upper troposphere isn’t just a nit.

#39 Stratospheric cooling can also be caused by ozone depletion, not just AGW.

From my I-wonder-about-that file is this illustration from Gettelman:

What I wonder is how the tropical upper troposphere functions in that 14km to 17km layer where radiative cooling is near-zero. I think of it as the “dead zone”, cooling-wise.

Radiational cooling of the tropical upper troposphere is key to the idea of Hadley-Walker cells but does the top 3km of the troposphere participate? If it doesn’t participate then how does the air moving into and out of the layer behave, and why? Does the dead zone represent a sort of radiational “reserve cooling capacity” in that if it warms then it begins to remove heat via radiation?

Perhaps the behavior of this dead zone is confounding things.

#39: the stratosphere is cooling?

Not according to the RSS data since 1994

David Smith

It seems to me that the top leg of the cell is due to the blockage of natural convection by the temperature inversion which starts at the tropopause. Warm air is rising and has nowhere to go but sideways, i.e., horizontally, when it gets to the tropopause. If that is the case, the top 3 km is certainly participating.

39 (Erik Ramberg): Not really. Cooling in the upper stratosphere just means you have altered the GHE (something everybody agrees has happened) it does not preclude the possibility that most surface warming is due to some other mechanism(s).

43 (Ross): That would be the lower stratosphere. Ozone depletion dominates there, so the cooling stopped because, well, the depletion has stopped, apparently. Which isn’t supposed to be possible either…

#19 (and others):

IPCC AR4 Figure 9.1 shows a 100-year hindcast, i.e. what ought to be visible already if the atmosphere responds to forcings as in the models. Five major categories of forcing are modeled individually; only the GHG forcing produces the warming bullseye in the tropical troposphere, and it is large enough to dominate all the others, so the “total” panel looks like the GHG-only panel.

CCSP page 25 has the same diagram, but it’s a hindcast covering 1958-1999, i.e. the balloon era. Again, it’s what should be showing already.

IPCC Report Fig 10.7 shows 3 time intervals: early, middle and late 21st century. All stages show the tropical troposphere pattern (see them online at http://ipcc-wg1.ucar.edu/wg1/Report/suppl/Ch10/Ch10_indiv-maps.html), and the text leaves no wiggle room:

In other words, the models show the tropical tropospheric pattern on all relevant time scales, it is unique to GHG’s and there is no GHG-induced warming story that does not involve comparatively strong tropical tropospheric warming.

Therefore the observed lack of such a pattern in the data is a prima facie argument for, at most, low GHG sensitivity in the actual climate system. The CCSP report (page 11) says as much when discussing the model/data discrepancy:

(emphasis added)

43 Ross McK

I believe that trend of -0.3 deg per decade is about the same as the trend quoted for Douglass et al for the troposphere at around 13 km. What altitude/band is the RSS data from in the stratosphere?

From Allen and Sherwood’s abstract: “We derive estimates of temperature trends for the upper troposphere to the lower stratosphere since 1970. Over the period of observations, we find a maximum warming trend of 0.65 +/- 0.47 K per decade near the 200 hPa pressure level, below the tropical tropopause.”

0.47 represents 72% of 0.65 no? I wonder if Allen and Sherwood would appreciate betting their pension plan on a financial adviser’s recommendation offering that kind of uncertainty…
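The arithmetic checks out. A quick sketch that also gives the interval endpoints, treating ±0.47 as a symmetric interval around the central estimate (the abstract as quoted does not say whether it is one or two standard errors):

```python
# Central estimate and quoted uncertainty from the Allen and Sherwood abstract, K/decade.
central, half_width = 0.65, 0.47

relative = half_width / central
low, high = central - half_width, central + half_width
print(f"relative uncertainty: {relative:.0%}")
print(f"interval: {low:.2f} to {high:.2f} K/decade")
```

This prints 72% and an interval of 0.18 to 1.12 K/decade.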

And the cherry on the cake is: “Warming patterns are consistent with model predictions except for small discrepancies close to the tropopause. Our findings are inconsistent with the trends derived from radiosonde temperature datasets and from NCEP reanalyses of temperature and wind fields. The agreement with models increases confidence in current model-based predictions of future climate change.”

As far as models are concerned, I preferred Yves Saint Laurent’s…

Pat, RSS shows the weighting function here. TLS draws from 10 km to 30 km, with the mean at around 16 km. I expect the weights vary by latitude, since the troposphere is shallower over the poles.

I only mention this because people look at the TLS linear trend and say, “Ah yes, a cooling trend.” But the linear trend is obviously a poor fit. It’s not there in the data, it’s drawn in by the researcher who wants to see a pattern. What you have in the data are 3 flat line segments interrupted by volcanic perturbations. After each one the line segment steps down and ticks along flat until the next volcano. For the last 15 years there has been no interruption, and no trend down or up.
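One way to frame Ross's point is to compare a straight line with a piecewise-constant (step) model fitted to the same series. The sketch below uses a synthetic series, not the RSS TLS data. The break months are hypothetical stand-ins for volcanic episodes and are assumed known in advance; a fair comparison on real data would fix them from the eruption dates beforehand and penalise the step model for its extra parameter.

```python
import random

def linear_fit_rss(y):
    """OLS straight line of y against its index; returns (slope, residual sum of squares)."""
    n = len(y)
    xbar = (n - 1) / 2
    ybar = sum(y) / n
    sxx = sum((i - xbar) ** 2 for i in range(n))
    sxy = sum((i - xbar) * (v - ybar) for i, v in enumerate(y))
    slope = sxy / sxx
    intercept = ybar - slope * xbar
    rss = sum((v - (intercept + slope * i)) ** 2 for i, v in enumerate(y))
    return slope, rss

def step_fit_rss(y, breaks):
    """Piecewise-constant fit: each segment between break indices gets its own mean."""
    edges = [0] + list(breaks) + [len(y)]
    rss = 0.0
    for start, end in zip(edges[:-1], edges[1:]):
        seg = y[start:end]
        mean = sum(seg) / len(seg)
        rss += sum((v - mean) ** 2 for v in seg)
    return rss

# Synthetic monthly series: three flat levels stepping down 0.3 K at two
# hypothetical break months, plus white noise with a 0.1 K standard deviation.
random.seed(1)
breaks = (48, 160)
levels = [0.0, -0.3, -0.6]
y = [levels[sum(i >= b for b in breaks)] + random.gauss(0.0, 0.1) for i in range(360)]

slope, rss_line = linear_fit_rss(y)
rss_step = step_fit_rss(y, breaks)
print(f"linear trend: {slope * 120:+.3f} K/decade, RSS {rss_line:.2f}")
print(f"step model:   RSS {rss_step:.2f}")
```

The straight line reports a clear negative "trend" even though every segment is flat, while the step model leaves a noticeably smaller residual.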

The ozone depletion angle is another interesting kettle of fish, since there never was any significant ozone loss over the tropics; it was a winter/spring event over the NH mid-latitudes and otherwise an event over the Antarctic. See the WMO assessment at http://www.wmo.int/pages/prog/arep/gaw/ozone_2006/ozone_asst_report.html.

I don’t know if the absence of ozone depletion over the tropics matters, except that it seems to me, as a very naive guess, that it removes that angle as a possible route to explain away the lack of warming in the troposphere.

49 (MV) I know I’m naive, but are they really saying that their results don’t match real data but do match the models, increasing the confidence in the models? Am I crazy or are they?

============================================

That statement, Mr. McIntyre, speaks volumes. You see, we have been told since 1989 or so that global warming was increasing in magnitude and that we would see increasing amounts of warming with every passing year. School kids are still fed “the hockey stick”. At a recent book fair at my children’s school there were three “global warming” titles on prominent display.

If we are now quibbling over the sign of 0.1 degree of change, that in and of itself is proof that they were wrong. If they were correct, the change should be unmistakable, increasing in magnitude with every passing year, and the signal by now should be clear in every single data stream … ground or satellite-based.

That we are even having the argument, with conflicting data, means that whether they were wrong is no longer really in question. Now they are simply trying to jockey for position on how wrong they were.

12 (Joshua)

That is not surprising. The current negative anomaly at 200mb is historic in that it equals the record low (for that level) in HadAT2 data of January 1972. The series begins in 1958. At 150mb a new record was set, a clear 0.3 K lower than any previous figure.

Consider the diagram at the bottom. It shows the range of temperature anomalies recorded at each level since 1958. It is plain that the atmosphere is heated by solar radiation on its passage to the surface of the Earth.

Consider the Hovmoller diagrams. It is apparent that the highest temperatures at 200mb are generated over the Indian Ocean and the Maritime continent where the warmest ocean generates the highest relative humidity. The lowest temperatures are experienced near Peru where the coolest ocean is located and also over mountainous areas both of which generate little evaporation.

When temperatures rise at 200mb, an important layer of cirrus cloud that constitutes the most important element of the Earth’s albedo in the tropics simply evaporates.

You will notice that the warm anomaly occurs most strongly in Southern Hemisphere summer when the Earth is closest to the sun and irradiance is 7% greater than in July. Over solar cycle 23 the pulses in irradiance that warm this layer have very frequently occurred in Southern Hemisphere summer (see Svalgaard 4 #308 on this blog). However, as the cycle has run its course these pulses of irradiance often amounting to as much as 0.2% increase over the space of a year or less (about double that for the cycle as a whole) have gradually petered out and have sometimes occurred in mid year when they tend to be less effective in raising temperatures at 200mb over the warmest oceans. As a result these oceans have become cloudy and cooled.
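The roughly 7% figure can be checked from the orbital eccentricity alone, since irradiance falls off as the inverse square of the Sun-Earth distance. A sketch assuming a present-day eccentricity of about 0.0167:

```python
# Approximate present-day orbital eccentricity of the Earth (assumed value).
ECCENTRICITY = 0.0167

def irradiance_ratio(e):
    """Top-of-atmosphere irradiance at perihelion divided by that at aphelion.
    Irradiance scales as 1/r**2, with r = a(1 - e) at perihelion
    and r = a(1 + e) at aphelion, so the semi-major axis a cancels."""
    return ((1 + e) / (1 - e)) ** 2

ratio = irradiance_ratio(ECCENTRICITY)
print(f"perihelion/aphelion irradiance: {ratio:.4f} ({ratio - 1:.1%} higher)")
```

This gives a ratio of about 1.069, i.e. close to 7% more irradiance at perihelion in early January than at aphelion in early July.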

My conclusion: The effects of the solar cycle on Earth’s atmosphere and its oceans are written in ENSO typography. It is the sun that is responsible for ENSO. ENSO is the major dynamic that is responsible for cooling and warming processes in the tropical oceans. When the tropical ocean cools, Canada gets very cold in winter and it rains in Iowa in midsummer.

Erl Happ #53

I suspect that your hypothesis has a number of hurdles to overcome.

Firstly, Nino 3.4 SSTs lead upper atmospheric response by about 3 months. Therefore causation could reasonably be linked to SSTs, rather than variance in cirriform cloud.

Secondly, warmer SSTs should lead to greater convection, heating the mid and upper levels through latent heat release. There may be more cirriform cloud aloft due to this excess convection – warmer temperatures aloft do not automatically mean less cirrus.

Thirdly, El Nino / La Nina are deep ocean responses, rather than shallow events such as the Indian Ocean Dipole. It is harder to theorise how variations in solar irradiance alone can account for such a large response.

54 (Joshua)

There is no mention of a three month lag in this document

http://pubs.giss.nasa.gov/abstracts/2007/Zerefos_etal.html

Zerefos et al. 2007

Zerefos, C.S., K. Eleftheratos, P. Zanis, D.S. Balis, and G. Tselioudis, 2007: Search for man-made cirrus contrails over Southeast Asia. Terr. Atmos. Ocean. Sci., 18, 459-474

Nor is a lag apparent when one looks at the 200mb data by comparison with that at lower levels.

Re:

Latent heat release is at the much lower level where precipitation occurs. Convection tends to cool all levels above this point, including the 200mb level, through to the stratosphere. Cooling is via decompression. This is clearly apparent in Hovmoller diagrams of the 1997-8 El Nino event. Apparent also is a very low contribution to ozone heating in the stratosphere over the warmest tropical ocean at all times of the year. Nor is this warming at 200mb due to Outgoing Long Wave Radiation, because it is very low in the mix. By contrast, OLR is dominant in the mix of cooling processes over cold waters like those adjacent to Peru, and the signal is clearly apparent in the stratosphere. That cool zone on the right-hand side of the Hovmoller diagram becomes an anomalously warm zone.

As the cycle of heating proceeds in the warmest parts of the ocean (due to the loss of cirrus), the relative humidity of the air eventually recovers and the cirrus re-appears. Hence La Nina. And this can occur despite increasing sunspot activity. It’s the Earth’s natural thermostat in action.

#47

This is just unbelievable. How can these people write such nonsense?

50 Ross

Thanks. You are right about the power of the added trend-line. It fooled me until I took a closer look.

IIRC, Brown et al with the Hadley group wrote off the ozone depletion hypothesis in 2000, probably for the reasons you mention.

Tom, the parenthetical insertion in the sentence you quoted should one day be the basis for a long treatise on early 21st century scientific method. Maybe it could be called “Harry Potter and the Echo Chamber of Secrets.”

Chapter 1: By what magic the “realism” of observational data sets came to be judged based on how well they validated a model, rather than the other way around.

You may find relevant the following new study, too:

Ryoo, J.-M., Darryn W. Waugh, and Andrew Gettelman, 2008. Variability of subtropical upper tropospheric humidity, Atmospheric Chemistry and Physics Vol. 8, No 10, pp. 2643-2655, May 20, 2008, online http://www.atmos-chem-phys.net/8/2643/2008/acp-8-2643-2008.pdf

I wonder about (fear) the root cause of the downturn.

Ross 58:

This behavior may be less unusual than most of you think. In a historical context some time in the future, this may be treated simply as a Kuhnian crisis point–perhaps it will become THE classic textbook example.

The only thing unusual about this particular scientific period is how much this particular “Paradigm” is also tied up in politics and economics.

For those unfamiliar with Kuhnian paradigm theory, here is an example reminiscent of the current climate science community’s resistance to change.

http://www.climateaudit.org/?p=3161#comment-258838

Jeez – it is, in terms of a financial analogy, October 1929. Except, unlike then, among the “mainstream” market participants, there is no panic, in fact, they “invest” as if the downturn is only “profit taking” – when in fact the fundamentals have taken a dive.

50 (Ross): Someone in one of Roger Pielke Sr’s classes made this regarding stratosphere behavior:

Is it just me, or does that 200 hPa time series look like a random walk?
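A quick way to look at this is to estimate the lag-1 autoregressive coefficient: a random walk has a coefficient of 1, while the head post reports an AR1 coefficient above 0.9 for these series. The sketch below runs the estimator on two synthetic series (a stationary AR(1) with 0.9 and a random walk), not on the HadAT data; the seeds and lengths are arbitrary. Because 0.9 and 1.0 can be hard to tell apart in a short record, a formal check would use a unit-root test such as the augmented Dickey-Fuller test (for example `adfuller` in statsmodels).

```python
import random

def ar1_coefficient(y):
    """OLS estimate of phi in (y[t] - mean) = phi * (y[t-1] - mean) + noise."""
    mean = sum(y) / len(y)
    d = [v - mean for v in y]
    num = sum(a * b for a, b in zip(d[1:], d[:-1]))
    den = sum(a * a for a in d[:-1])
    return num / den

def simulate_ar1(phi, n, sigma=1.0, seed=0):
    """Generate y[t] = phi * y[t-1] + white noise; phi = 1 gives a random walk."""
    rng = random.Random(seed)
    y, prev = [], 0.0
    for _ in range(n):
        prev = phi * prev + rng.gauss(0.0, sigma)
        y.append(prev)
    return y

n = 600  # roughly 50 years of monthly values
est_ar = ar1_coefficient(simulate_ar1(0.9, n, seed=1))
est_rw = ar1_coefficient(simulate_ar1(1.0, n, seed=2))
print(f"stationary AR(1), phi = 0.9: estimated {est_ar:.3f}")
print(f"random walk,      phi = 1.0: estimated {est_rw:.3f}")
```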

I hear all the time in ads that gold has risen spectacularly and might double again. This to me is a fairly clear sign that now is not the time to buy gold. But that doesn’t mean it’s not time to buy gold.

3 jeez:

You’re obviously insane, somebody who actually knew what they were talking about would suggest RAXBCLRE 17.000000000058

11 Michael Smith:

And of course if my model ensemble has a mean of 14 C, then the offset from year 1880 to the offset from year 2007 on a linear trend being +.6 or so must mean it’s getting warmer.

16 Steve’s response to Gerry:

I think it’s really a matter of “Even if the trend reflects a .7 rise in global temperatures, is a 15 or 16 centigrade world any worse off than a 12 or 13 centigrade world?”

23 Kenneth Fritsch:

What makes me doubt that as something serious to contemplate? Gavin. Exposition. RC. Hmm… Well, Gavin is smart and capable, I think, but seems to be working backwards from a conclusion. My challenge to anyone would be: is it possible the sampling we’re doing to derive the anomaly is vastly understating a rise in energy levels? Show that first if you’re worried about things. As I mentioned on the BB, if you really care about the environment, or about humanity, and you think carbon dioxide is the primary issue, you should be working to sequester it now and reduce the warming, so you can then release it and offset the drop in energy levels that goes along with an ice age…

29 James Bailey

More like the warmer air holds more water vapor, which helps lift the 99+% of the air that doesn’t absorb or emit IR into higher volumes at lower temperatures. Unless it hits a point where it decides to rain, and then all bets are off, no?

Re: #47

Ross, I would agree with your takeaway from the AR4 reports, but it contradicts what Gavin Schmidt has shown at RC with regard to 2X CO2 forcing and an equivalent temperature increase from solar forcing in the tropical troposphere, with respect to the pattern being unique to GHG forcing. I have reread all the accounts of the expected pattern evolving in the tropical troposphere and I have never seen it clearly and succinctly stated that it was unique to GHG forcing.

Part of this arises, of course, because few models look at solar forcing or any other non-GHG forcings that have reached, or are predicted to reach, the same levels of temperature increase as those attributed to GHG forcing. Can you categorically state that Gavin Schmidt is wrong or misleading in this case?

Re: #66

Sam, you seem to have a penchant for drawing me into discussions we are not supposed to have at CA and particularly in a non-unthreaded thread. So listen up and read fast.

There is no lack of ideas on what could be done theoretically, nor any lack of people who say they really care about the environment, but unfortunately none of that will have much effect on the real and practical world. That reversible CO2 sequestering is a nice touch, however, in that it will kill two birds with one stone.

If you can get people to act now on preventing something that could happen 10 to 30 thousand years from now, you might be able to convince them of the looming global problems with huge unfunded government liabilities that will happen a lot sooner.

Re #68: Kenneth, I believe that you are indeed correct and Ross is indeed wrong. The amplification of temperature fluctuations on a range of timescales is a general consequence of moist adiabatic lapse rate theory…namely, the fact that a rising saturated parcel of air that is initially at a higher temperature will cool more slowly as it rises than one initially at a lower temperature (because the one at higher temperature holds more water…which condenses out and releases heat as the parcel rises), so the temperature difference between them will become magnified as they go up in the troposphere.
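The mechanism described here can be illustrated by lifting two saturated parcels that start 1 K apart and tracking their temperature difference aloft. A minimal sketch of a simplified pseudo-adiabat, under stated assumptions: constant latent heat, no ice phase, no virtual-temperature correction, Bolton's (1980) saturation vapour pressure fit, and simple Euler steps of 1 hPa. The starting temperatures are hypothetical.

```python
import math

# Standard textbook constants, SI units.
RD = 287.04    # gas constant for dry air, J/(kg K)
CPD = 1005.7   # specific heat of dry air at constant pressure, J/(kg K)
LV = 2.501e6   # latent heat of vaporisation, J/kg (held constant: a simplification)
EPS = 0.622    # ratio of dry-air to water-vapour gas constants

def saturation_vapour_pressure(t_k):
    """Bolton (1980) fit over liquid water; kelvin in, pascals out."""
    t_c = t_k - 273.15
    return 611.2 * math.exp(17.67 * t_c / (t_c + 243.5))

def saturation_mixing_ratio(t_k, p_pa):
    es = saturation_vapour_pressure(t_k)
    return EPS * es / (p_pa - es)

def moist_dt_dp(t_k, p_pa):
    """Pseudo-adiabatic dT/dp (K per Pa) for a saturated parcel.
    With zero mixing ratio this reduces to the dry adiabat, RD*T/(CPD*p)."""
    r = saturation_mixing_ratio(t_k, p_pa)
    numerator = RD * t_k + LV * r
    denominator = p_pa * (CPD + LV ** 2 * r * EPS / (RD * t_k ** 2))
    return numerator / denominator

def lift_parcel(t_start, p_start=100000.0, p_end=20000.0, dp=-100.0):
    """Euler-integrate a saturated parcel from p_start up to p_end (both in Pa)."""
    t, p = t_start, p_start
    while p > p_end:
        t += moist_dt_dp(t, p) * dp
        p += dp
    return t

cool = lift_parcel(300.0)  # hypothetical saturated parcel at 1000 hPa, 300 K
warm = lift_parcel(301.0)  # the same parcel, 1 K warmer
print(f"at 200 hPa: {cool:.1f} K vs {warm:.1f} K, difference {warm - cool:.2f} K")
```

The difference at 200 hPa comes out larger than the 1 K imposed at the bottom, because the warmer parcel condenses more water and cools more slowly. This only illustrates the amplification argument; by itself it says nothing about whether the observed or the modelled trends are right.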

This is noted in the Santer et al. paper [http://www.sciencemag.org/cgi/content/abstract/sci;309/5740/1551]…And, it is also the main reason why many scientists believe that the data for the tropical trends is wrong and the models are right: In particular, the data for the amplification of fluctuations in the tropical atmosphere is in very good agreement with the models over a range of time scales extending from months to a few years. It is only for decadal or longer trends that the experimental data deviates from the expectations of moist adiabatic lapse rate theory. It is hard to understand what would cause the data and theory to agree so well on the shorter timescales and break down on the longer timescales. And, those longer timescales just happen to also be the ones for which the data itself is quite suspect…since neither the satellite nor radiosonde data sets were designed to be accurate for slow trends over a relatively long period of time.

At any rate, even if the models are wrong and the data right, this does not specifically argue against the hypothesis that greenhouse gases are the cause of the warming…although it would of course give us less confidence in the models, period.

Erl Happ (#55)

WRT the 3-month lag – I’ve pointed you to the data – you are welcome to confirm my results – it’s 15 minutes’ work with a spreadsheet.
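The spreadsheet check amounts to correlating the upper-air series with the SST index shifted by 0, 1, 2, ... months and seeing where the correlation peaks. A sketch of that calculation; both series here are synthetic, built with a 3-month lag by construction, so it only demonstrates the method. The real Nino 3.4 and 200 hPa series would need to be substituted, and their strong autocorrelation will broaden the peak considerably.

```python
import math
import random

def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)

def lagged_correlations(driver, response, max_lag):
    """Correlation of response[t] with driver[t - lag] for lag = 0..max_lag steps."""
    out = {}
    for lag in range(max_lag + 1):
        out[lag] = pearson(driver[: len(driver) - lag], response[lag:])
    return out

rng = random.Random(42)
n = 360
# Synthetic stand-in for monthly Nino 3.4 anomalies (white noise, for clarity).
nino34 = [rng.gauss(0.0, 1.0) for _ in range(n)]
# Synthetic 200 hPa response that follows the index 3 months later, plus noise.
t200 = [(nino34[t - 3] if t >= 3 else 0.0) + rng.gauss(0.0, 0.5) for t in range(n)]

corrs = lagged_correlations(nino34, t200, max_lag=6)
best = max(corrs, key=corrs.get)
for lag, r in corrs.items():
    print(f"lag {lag} months: r = {r:+.2f}")
print(f"peak correlation at lag {best} months")
```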

WRT a decrease in cirrus during El Nino over SE Asia – that paper confirms exactly what is to be expected. El Nino shifts the dominant convection zone over towards the equatorial Pacific – with a consequent decrease over SE Asia.

Latent heat release occurs lower in the atmosphere due to condensation/freezing. Parcel buoyancy transfers this heat into the upper levels – with mixing processes shedding heat through the layers – until neutral buoyancy is achieved – potentially beneath the tropopause.

Convective processes warm the upper atmosphere – not cool it. This is basic Met.

Your Hovmoller diagram in #53 confirms this (right-hand diagram: 250 hPa temperature, 10 North to 10 South). Note the cool temperatures near 120W at the end of 2007 and the beginning of 2008 – nicely mapping over the strong La Nina and subsequent decrease in convection.

I should just clarify that the statement in my previous post that “the amplification of temperature fluctuations on a range of timescales is a general consequence of moist adiabatic lapse rate theory” applies, I believe, specifically to the tropical atmosphere. I am not clear exactly what the story is when you look at trends over the whole globe, but at any rate, the tropical trends seem to be the ones where the data and models are in disagreement.

71 (Joshua)

Thanks for the explanation, which makes sense to me (though I am not a meteorologist). In relation to cirrus cloud, do we agree that there is a marked reduction over the Maritime continent during tropical warming events and an increase in convection and cirrus formation east of the Date Line? Should we not expect a warming at 200hPa east of the Date Line from convective processes, and not over the Maritime continent and the Indian Ocean? I am pointing to a marked warming over the latter, as is seen in the left Hovmoller.

In terms of correlations, there is obviously a shifting of convective centres during tropical warming events. But, and this is important, for the entire tropical ocean to warm up, all oceans at the same time, albedo must in general be less. Shifting cloud from one part to another is a small part of the big picture. The Nino 3.4 region of the Pacific Ocean is not really representative of the whole, and certainly not representative of those parts where the heat is being gained by exposure to greater solar radiation. It lies squarely under the zone of enhanced cloud. The correlation between 12-month sunspot data and 200hPa temperatures is stronger when the sunspot window leads by one month than when it leads by two months or covers the same period. The lag is short, and the sunspot activity precedes.

Part of the problem here may be that the response in temperatures in particular locations, both at the surface and at 200hPa, is driven by circulatory influences within the ocean and the atmosphere. However, it seems to me that the origin of the temperature anomaly over the Maritime continent and the Indian Ocean at 200hPa is direct solar warming of the atmosphere, as is apparent in the lowest figure at #53.

Sam wrote in 67:

Yes, they are trying to have it both ways. The ensemble is regarded as uncontestable with respect to the change in its average over time, but when that average is compared to observations that clearly do not match, then the ensemble’s range is invoked to explain the apparent discrepancy.

Or, to put it another way, the discrepancies that exist between models is invoked to refute any claimed discrepancy between models and observations.

Re: #74 (Michael Smith): I think you are incorrect in your claim of inconsistency. That the IPCC belief in the accuracy of their predicted warming is closer to the standard deviation than the standard error follows from comparing their statement about the range of equilibrium climate sensitivity to that of the models.

In particular, the IPCC says that the equilibrium climate sensitivity is likely to fall in the range of 2.0 to 4.5 C, where by “likely” they mean an estimated 66%-90% probability. Hence, this corresponds to a statement made with somewhere between 1-sigma and ~1.65-sigma certainty.

Now let’s look at the equilibrium climate sensitivity of the 19 models for which such sensitivity is listed in Table 8.2 of Chapter 8 of the AR4 report (Working Group 1). Here are the numbers I get using this: the average sensitivity is 3.21 C with a standard deviation of 0.69 C. Hence, we would get a 1-sigma result of 3.21 C +- 0.16 C or a 1.65-sigma result of 3.21 C +- 0.26 C if we assume that the standard error (0.69 C / √19 ≈ 0.16 C) was the correct thing to use for the uncertainty. This is clearly much smaller than the range that the IPCC quotes for an uncertainty that is somewhere between 1-sigma and ~1.65-sigma.

However, if we assume that the standard deviation is a better measure, then the 1-sigma result is 3.21 C +- 0.69 C and a 1.65-sigma result is 3.21 C +- 1.14 C. So, clearly the IPCC statement of the equilibrium climate sensitivity being likely (66-90% chance) to lie between 2 C and 4.5 C is much closer to what one gets if one assumes the standard deviation, not the standard error, provides a reasonable estimation of uncertainty. (In fact, the standard deviation is still small relative to the IPCC error estimate if you assume it is a 1-sigma result…but is pretty much right on the nose if you assume it is a 1.65-sigma result. This may be a coincidence, since I don’t think the IPCC explicitly came to this estimate by just looking at the spread in the models…I think they relied more on estimates derived from studies that look at the best estimates of climate sensitivity one gets from current climate or past climatic events.)
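The intervals above can be reproduced from the three summary numbers quoted (mean 3.21 C, standard deviation 0.69 C, 19 models). A sketch that assumes a normal distribution and uses exact z-values for 66% and 90% central intervals rather than the rounded 1 and 1.65:

```python
import math
from statistics import NormalDist

# Summary figures quoted in the comment for the 19 AR4 WG1 Table 8.2 models.
mean_ecs, sd_ecs, n_models = 3.21, 0.69, 19
se_ecs = sd_ecs / math.sqrt(n_models)  # standard error of the ensemble mean

# Two-sided z-values for central intervals holding 66% and 90% of a normal distribution.
z66 = NormalDist().inv_cdf(0.5 + 0.66 / 2)
z90 = NormalDist().inv_cdf(0.5 + 0.90 / 2)

for name, spread in [("standard error", se_ecs), ("standard deviation", sd_ecs)]:
    for z in (z66, z90):
        lo, hi = mean_ecs - z * spread, mean_ecs + z * spread
        print(f"{name:18s} z = {z:.2f}: {lo:.2f} to {hi:.2f} C")
```

With the standard deviation and the 90% z-value the interval is roughly 2.07 to 4.35 C, close to the IPCC's 2.0 to 4.5 C; with the standard error it shrinks to roughly 2.95 to 3.47 C.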

So, your implication that the IPCC invokes uncertainty in the model predictions in a different way when making predictions than when comparing to experimental data seems to be without any actual foundation.

This, by the way, is just an estimate of the errors in regards to the forced response of the models. I.e., it doesn’t even include the additional issue that taking an ensemble average over different models or the same model with slightly different initial conditions will mean one averages over the unforced variability which will not be averaged over in the real world. This fact then makes it DOUBLY wrong to claim that the correct measure to use in comparing the models and the real world is the average (over several models and/or several runs of the same model with perturbed initial conditions) with the standard error used as the measure of uncertainty.

Please see also:

Horváth, Ákos, and Brian J. Soden, 2008. Lagrangian Diagnostics of Tropical Deep Convection and Its Effect upon Upper-Tropospheric Humidity. Journal of Climate Vol. 21, No 5, pp. 1013-1028, March 2008

Abstract

This study combines geostationary water vapor imagery with optical cloud property retrievals and microwave sea surface observations in order to investigate, in a Lagrangian framework, (i) the importance of cirrus anvil sublimation on tropical upper-tropospheric humidity and (ii) the sea surface temperature dependence of deep convective development. Although an Eulerian analysis shows a strong spatial correlation of ∼0.8 between monthly mean cirrus ice water path and upper-tropospheric humidity, the Lagrangian analysis indicates no causal link between these quantities. The maximum upper-tropospheric humidity occurs ∼5 h after peak convection, closely synchronized with the maximum cirrus ice water path, and lagging behind it by no more than 1.0 h. Considering that the characteristic e-folding decay time of cirrus ice water is determined to be ∼4 h, this short time lag does not allow for significant sublimative moistening. Furthermore, a tendency analysis reveals that cirrus decay and growth, in terms of both cloud cover and integrated ice content, is accompanied by the drying and moistening of the upper troposphere, respectively, a result opposite that expected if cirrus ice were a primary water vapor source. In addition, it is found that an ∼2°C rise in sea surface temperature results in a measurable increase in the frequency, spatial extent, and water content of deep convective cores. The larger storms over warmer oceans are also associated with slightly larger anvils than their counterparts over colder oceans; however, anvil area per unit cumulus area, that is, cirrus detrainment efficiency, decreases as SST increases.

Somewhat related:

I regularly watch the TLT Decadal Trend at RSS/MSU (http://www.ssmi.com/msu/msu_data_description.html#msu_decadal_trends)

For the past 4 months, the decadal trend has dropped at least 0.1 deg per month. It seems to me that this cannot be happening without some prior period adjustments, but none are disclosed.

Can anyone offer an explanation?
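One rough check on whether new months alone could move the trend that much: for a record about 30 years long, a single appended month has little leverage on an ordinary least-squares trend. A sketch with a hypothetical, noise-free record; the 0.15 K/decade slope and the 352-month length are placeholders, not RSS values, and it assumes the displayed decadal trend is a plain OLS fit over the full record.

```python
def ols_slope(y):
    """Least-squares slope of y against its index, in units of y per step."""
    n = len(y)
    xbar = (n - 1) / 2
    ybar = sum(y) / n
    sxy = sum((i - xbar) * (v - ybar) for i, v in enumerate(y))
    sxx = sum((i - xbar) ** 2 for i in range(n))
    return sxy / sxx

# Hypothetical record: 352 months (roughly Jan 1979 to Apr 2008) lying exactly on a
# 0.15 K/decade line, so any change in slope comes from the appended month alone.
months = 352
per_month = 0.15 / 120
record = [per_month * i for i in range(months)]

before = ols_slope(record) * 120
# Append one month that sits a full 1 K below the line.
after = ols_slope(record + [per_month * months - 1.0]) * 120
print(f"trend before: {before:.4f} K/decade, after: {after:.4f} K/decade")
print(f"change from one anomalous month: {after - before:+.4f} K/decade")
```

Even a month 1 K off the line moves the trend by only about 0.006 K/decade, so if the displayed figure is a plain full-record OLS trend, drops of the size described would point to revised earlier data or a different trend definition.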

Joel and Kenneth, My assertion that the pattern is unique to GHG’s refers to the models. Perhaps I should qualify every sentence with “according to the models”. I am certainly not presenting a theory of the atmosphere, or trying to argue that latent heat doesn’t affect lapse rates. I’m reporting what is shown in the hindcast diagrams in the IPCC and CCSP reports, which those groups of authors thought important enough to show prominently.

Those figures show that–according to GCMs–only the historical GHG changes have produced a differentially-strong warming in the tropical troposphere. In particular, solar changes over the post-1891 interval and post-1958 interval show up as a diffuse, slight warming everywhere, without a tropical tropospheric amplification. From those figures, it is accurate to say that (the models assume) increased GHG levels plus standard sensitivity assumptions implies a strong tropical tropospheric trend. It is also accurate to say that since none of the other forcings shows that pattern, the observation of such a pattern in the data would only be attributable to GHG’s.

It might be possible to generate other pictures using GCMs with slightly different assumptions, but the IPCC and CCSP didn’t do so, or at least didn’t show other pictures.

It might also be theoretically true that if, in the future, there is an exceptionally large increase in solar flux, we might expect to observe a strong tropical tropospheric warming in response. But the models seem to say that in response to observed historical flux changes, we do not expect to observe such a pattern. And as I recall, one of the new conclusions of the IPCC report was that the sun has much lower effect overall than had previously been thought. I don’t assert or dispute that: I just note that the IPCC drew that conclusion.

So looking ahead, with the expectation of diminished solar output and the IPCC view that the sun doesn’t do much to the climate anyway, with reference to the hindcast experiments, if we observe a strong warming of the tropical troposphere it would likely be due to GHG’s. And since all the Figure 10.7 runs show increasing GHG’s lead to a strong tropical troposphere trend, if GHG’s warm the atmosphere it has to show up over the tropics. And, since we’re into an “A therefore B” situation, we can say “not B therefore not A”. If there is no warming in the tropical troposphere… you can finish the sentence.

Once again, I am trying to summarize the plain meaning of the IPCC Report and the CCSP report, I am not offering a rival theory of the atmosphere. If Gavin or anyone else has a different take on things, that’s fine, I would not be surprised to hear that modelers have “moved on” from the AR4 already. But I fail to see how the IPCC and CCSP reports could be interpreted as saying there isn’t a unique connection between GHG accumulation and the warming of tropical troposphere. One implies, and is implied by, the other, in the models under historical forcings.

Joel #75

I was referring to the pro-AGW people at places like RealClimate, not the IPCC. (See the link to the RealClimate article in comment 11)

When, on the one hand, someone tells the public that “the science is settled” and “the debate is over” — while on the other hand, they explain the tropical troposphere observations by invoking the variation of an ensemble that includes models that predict trivial or no warming — then I would say they are, indeed, invoking uncertainty in “different ways” in those two cases.

Regarding the IPCC, I would say that when they truncate data series that are inconveniently diverging from a trend line on a graph, they, too, have found a “different way” to invoke uncertainty.

Of course, I am speaking not as a statistician or a scientist, but only as a layman trying to evaluate the evidence.

Re: #78

Ross McKitrick, thanks very much for spelling out your views and observations on tropical tropospheric warming. Since they are in essential agreement with what I have taken away from my reading on the matter, I feel better that I did not miss something, and a whole lot less frustrated.

Conceptually, I like your T3 tax as throwing down a gauntlet to those who might have other agendas in the matter of AGW mitigation. As a practical political matter, though, I could see the idea abused and modified beyond recognition. Surely there would be those who would instead want to use the long-term stratospheric cooling as a basis for taxing CO2 emissions (although that trend may have had some problems recently, as your graph above indicated). There would be those who would want to spend money to get the tropical tropospheric instrumental results “in line” with the climate models. The latest effort by Sherwood and Allen, noted by Lubos Motl, which correlates winds with atmospheric temperatures, relies on the same models against which the derived data are eventually compared; it stands as an example of that approach. Finally, based on past experience, I somehow doubt that the tax would remain revenue-neutral for very long.

Re: #75

One must be careful here to differentiate between an uncertainty that is statistically derived and one that the authors of the AR4 reports assigned by what I call a “show of hands”. The AR4 authors were supposed to retain a documented trail of how they determined the uncertainty limits, but since that trail has not been published or provided on request, I call it a show of hands. Joel Shore, if you can document that the authors handled this uncertainty call by way of a statistical calculation, I will gladly retract my show of hands.

Also, the fact that the IPCC is merely making a statement about the uncertainty of the range of climate model results seems to me to have no bearing on the merits or utility of using an ensemble average with a standard deviation or with a standard error of the mean. One can make the necessary assumptions and adjustments (like using a mean +/- a climate bias or chaotic content factor) in both cases and judge the uncertainty for oneself.
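The choice between the two bands matters in practice. Below is a minimal sketch with made-up trend values. The standard deviation describes how widely individual models scatter. The standard error of the mean shrinks with the number of models and describes how well the ensemble average itself is pinned down. As a result, the same observation can fall inside one band and outside the other. The per-model trends and the “observed” value are hypothetical and are not taken from any report.

```python
import statistics

# Hypothetical per-model trends (deg C/decade), for illustration only.
model_trends = [0.12, 0.18, 0.21, 0.25, 0.27, 0.30, 0.33, 0.38]
observed = 0.10  # hypothetical observed trend

mean = statistics.mean(model_trends)
sd = statistics.stdev(model_trends)    # sample standard deviation: spread of individual models
sem = sd / len(model_trends) ** 0.5    # standard error of the ensemble mean


def within(value, centre, half_width):
    """True if value lies inside centre +/- half_width."""
    return abs(value - centre) <= half_width


print(f"ensemble mean {mean:.3f}, 2*SD {2 * sd:.3f}, 2*SEM {2 * sem:.3f}")
print("observed inside mean +/- 2 SD: ", within(observed, mean, 2 * sd))
print("observed inside mean +/- 2 SEM:", within(observed, mean, 2 * sem))
```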

Kenneth Fritch 68

I have reread all the accounts of the expected pattern evolving in the tropical troposphere and I have never seen it clearly and succinctly stated that it was unique to GHG forcing.

See the link I gave in #21 here: Figure 9.1 on the official IPCC web page has the hot spot only for CO2, which it now calls “well mixed greenhouse gases”. In the early plots it was called the “CO2 signature”.

I think that “misleading” is the word. Models that show no tropospheric hot spot would also lack an excessive temperature rise. See also #47 in this thread, where the CCSP report is quoted as stating:

The whole “cloud of models” way of plotting things in the IPCC reports obscures the fact that there is a random walk among models, some fitting some parameters and others fitting others.

Joel

One thing is certain: one shouldn’t adjust suspect data to fit an assumed model; one should get better data. And if the model ensemble is coming out with estimates that largely confirm the initial assumption (surprise, surprise) about climate sensitivity, then we need to know how reliable that sensitivity calculation is. From what I understand, there are three ways of estimating climate sensitivity:

1. model output, which is clearly circular reasoning;

2. ice cores (more specifically Vostok), i.e. guesswork about relative feedbacks and even more circular reasoning;

3. 20th-century trends from data with a TOBS adjustment and sporadic, disputable UHI adjustments. The calculation assumes, with little justification, that natural variation accounts for only a small fraction of the trend, and it relies on the somewhat dubious idea that we should have a flat trend in the absence of man.
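Method 3 can be made concrete with one common energy-budget form: sensitivity per doubling = F2x * dT / (dF - dQ). Here dT is the observed warming, dF is the forcing change, and dQ is the change in heat uptake. This minimal sketch assumes F2x = 3.7 W/m² and uses hypothetical inputs. It is not presented as how any IPCC estimate was actually made. It shows how the answer scales down as more of the observed warming is attributed to unforced natural variation.

```python
F_2X = 3.7  # W/m^2, an approximate forcing for doubled CO2 (assumed value)


def energy_budget_sensitivity(delta_t, delta_f, delta_q, natural_fraction=0.0):
    """Warming per CO2 doubling (deg C) from a simple energy-budget relation.

    delta_t: observed warming (deg C)
    delta_f: change in forcing (W/m^2)
    delta_q: change in heat uptake, e.g. by the ocean (W/m^2)
    natural_fraction: share of delta_t attributed to unforced natural variation
    """
    if delta_f <= delta_q:
        raise ValueError("forcing change must exceed heat uptake change")
    forced_warming = delta_t * (1.0 - natural_fraction)
    return F_2X * forced_warming / (delta_f - delta_q)


# Hypothetical inputs for illustration only (not taken from any dataset or report).
for frac in (0.0, 0.2, 0.4):
    s = energy_budget_sensitivity(delta_t=0.8, delta_f=2.0, delta_q=0.6, natural_fraction=frac)
    print(f"natural fraction {frac:.1f}: about {s:.2f} deg C per doubling")
```

The formula is linear in the attributed warming, so the assumed natural fraction feeds straight through to the sensitivity.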

Isn’t it true that the main reason we adjust the data is that we expect warming? Isn’t it true that the lower estimate for IPCC climate sensitivity is all that the physics actually supports, and that higher values have been guesstimated based on pessimistic scenarios of positive feedback? Maybe it’s time we made a more realistic calculation of climate sensitivity based on empirical data rather than biased opinion. Then the models and the observational data would be that much closer to agreement.

If enhanced warming in the tropical troposphere is caused by increased specific humidity (I can’t think of any other reason), then any source of surface warming should show the same effect. As Judith Curry stated, though, the models do not deal well with deep convection. Deep convection almost certainly causes inhomogeneity in humidity, both spatially and temporally. One suspects the parameterizations used in the models do not deal with this correctly. Another possibility is that this is a sign that Gerald Browning is correct that the non-physical parameters used to force the models to converge cause non-physical results.

CMIP PDF

The anomaly trend is not inconsistent with the CMIP model output.

😀

Then there’s also always this

Re #78: Ross, unfortunately, appending “according to the models” to your claim won’t settle the issue, as I still believe that you are incorrect. The problem is that you are misinterpreting Fig. 9.1 of the IPCC AR4 report, and the corresponding figure in the CCSP report. In particular, you are incorrect to say that these show that the solar forcing does not result in tropical amplification. The correct statement is that the figure does not allow you to determine the tropical amplification factor for solar forcing with any accuracy.

The problem is that the solar forcing is too weak and the contour intervals too broad to reach any conclusion. Look at IPCC Figure 9.1(a) showing the results from the solar forcing. What it shows is that the hindcast temperature rise at the surface is somewhere between 0 and 0.2 C (indicated by the slightly darker, greener yellow color) while the rise in the upper part of the troposphere is somewhere between 0.2 and 0.4 C (lighter yellow color). This is compatible with an amplification factor ranging anywhere from just over 1 (e.g., if the actual values were, say 0.18 C near the surface and 0.22 C in the upper troposphere) to basically infinity (e.g., the factor would be 19 if, say, the actual rise was 0.02 C near the surface and 0.38 C in the upper troposphere)! In other words, you simply can’t reach any firm conclusion on the amplification factor because the contour intervals were not designed to distinguish it. [Another potential problem is that when you have such a small forcing, variability and noise play a larger role, although it is really unclear from the figure whether this is the case or not. One would simply need a finer contour interval to resolve things.]

If you look at Fig. 9.1(c), the analogous results for the greenhouse gas forcing show that the temperature rise at the surface is somewhere around 0.4 C (indicated by being very near the boundary between the lighter yellow color and the orangy yellow color, seen most clearly if you magnify the figure quite a bit), while the rise in the upper part of the troposphere is somewhere between 0.8 and 1.0 C (reddish yellow color). This is compatible with an amplification factor of about 2 to 2.5. So, in this case, the forcing is large enough to give a much better estimate of the amplification factor. It is not that the amplification factor is necessarily larger in this case; it is simply better determined.
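The contour-band reasoning in the last two paragraphs amounts to interval arithmetic on a ratio. Here is a minimal sketch. The band edges are the values read off Fig. 9.1 in those paragraphs. Treating the greenhouse panel's surface value of "around 0.4 C" as a single point is a simplifying assumption.

```python
def amplification_range(surface_band, upper_band):
    """Bounds on (upper-troposphere warming) / (surface warming) given two contour bands.

    Each band is (low, high) in deg C, as read off a contour plot.
    """
    s_lo, s_hi = surface_band
    u_lo, u_hi = upper_band
    lowest = u_lo / s_hi
    # If the surface band reaches zero, the ratio has no finite upper bound.
    highest = float("inf") if s_lo <= 0 else u_hi / s_lo
    return lowest, highest


# Solar panel (a): surface 0 to 0.2 C, upper troposphere 0.2 to 0.4 C.
print("solar:", amplification_range((0.0, 0.2), (0.2, 0.4)))
# Greenhouse panel (c): surface ~0.4 C (treated as a point), upper troposphere 0.8 to 1.0 C.
print("GHG:  ", amplification_range((0.4, 0.4), (0.8, 1.0)))
# The two illustrative solar readings from the text: 0.22/0.18 and 0.38/0.02.
print(round(0.22 / 0.18, 2), round(0.38 / 0.02, 2))
```

The wide solar bound comes from the surface band touching zero, not from anything in the model physics.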

To make a fair comparison, one really needs to look at the effect that is produced by a solar forcing that causes a similar temperature change to that of the greenhouse forcing. That is done here: http://www.realclimate.org/index.php/archives/2007/12/tropical-troposphere-trends/ and the GISS model clearly shows that the amplification occurs for both warming mechanisms. As I noted previously, this is not at all surprising, since the amplification is also predicted by the models (and seen in the real world) for temperature fluctuations on shorter timescales that have nothing to do with greenhouse gas forcings, as discussed in Santer et al. It is a general consequence of basic physics (the moist adiabatic lapse rate) and has nothing to do with any specific forcing mechanism.
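The claim that amplification follows from the moist adiabatic lapse rate, whatever the forcing, can be illustrated with a simple calculation. A saturated parcel is lifted from the surface to 200 hPa for two surface temperatures 1 K apart. This is a minimal sketch under stated assumptions:

- it uses the standard pseudo-adiabatic lapse-rate formula with constant latent heat;
- saturation vapour pressure comes from Bolton's fit over liquid water;
- condensate loading and ice are ignored;
- the integration uses simple Euler steps in pressure.

It is a textbook parcel calculation, not a GCM. Surface temperature is its only input, so no forcing mechanism enters at all.

```python
import math

G = 9.81        # m/s^2
RD = 287.05     # J/(kg K), gas constant for dry air
CPD = 1005.0    # J/(kg K), specific heat of dry air at constant pressure
LV = 2.501e6    # J/kg, latent heat of vaporization (held constant here)
EPS = 0.622     # ratio of gas constants, dry air / water vapour


def saturation_vapor_pressure_hpa(t_k):
    """Bolton (1980) approximation over liquid water, in hPa."""
    t_c = t_k - 273.15
    return 6.112 * math.exp(17.67 * t_c / (t_c + 243.5))


def moist_lapse_rate(t_k, p_hpa):
    """Saturated (pseudo-)adiabatic lapse rate in K/m, neglecting condensate loading and ice."""
    es = saturation_vapor_pressure_hpa(t_k)
    r = EPS * es / (p_hpa - es)  # saturation mixing ratio, kg/kg
    num = G * (1.0 + LV * r / (RD * t_k))
    den = CPD + LV ** 2 * r * EPS / (RD * t_k ** 2)
    return num / den


def temperature_aloft(t_surface_k, p_top_hpa=200.0, p_surface_hpa=1000.0, steps=4000):
    """Follow a saturated adiabat upward from the surface using Euler steps in pressure."""
    t, p = t_surface_k, p_surface_hpa
    dp = (p_surface_hpa - p_top_hpa) / steps
    for _ in range(steps):
        # Hydrostatic balance gives dT/dp = Gamma_m * Rd * T / (g * p).
        dtdp = moist_lapse_rate(t, p) * RD * t / (G * p)
        t -= dtdp * dp  # pressure falls by dp, so temperature falls
        p -= dp
    return t


def amplification(t_surface_k, warming=1.0, p_top_hpa=200.0):
    """Warming at p_top_hpa divided by the imposed surface warming."""
    base = temperature_aloft(t_surface_k, p_top_hpa)
    warm = temperature_aloft(t_surface_k + warming, p_top_hpa)
    return (warm - base) / warming


print(f"lapse rate near a 300 K surface: {1000 * moist_lapse_rate(300.0, 1000.0):.2f} K/km")
print(f"200 hPa warming per 1 K of surface warming: {amplification(300.0):.2f}")
```

A warmer surface holds more water vapour, so more latent heat is released on ascent and the lapse rate flattens. That is why the warming grows with height.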

“The problem is that you are misinterpreting Fig. 9.1 of the IPCC AR4 report, and the corresponding figure in the CCSP report.”

WIRM

What the IPCC Really Meant. Which is kinda like ‘Fake but Accurate’.

A normal human being looking at those figures would conclude exactly what Ross concluded. That’s the point. Is that why the figures are there? Don’t know. Don’t care. The IPCC could have explained it ‘better’ but it did not. They may for their next report but that’s not the point.

Syl: The IPCC was not using those figures to discuss tropical amplification, so they were not designed to give a very good “read” on what tropical amplification would be for the different forcings. I don’t think that you can blame the IPCC for every way in which people use (and misinterpret) their figures for things that they didn’t really design them to be used for. [Since the CCSP report dealt more directly with such issues, I suppose one could argue that they should have produced a better figure…but it looks like they used a figure from the literature. At any rate, hindsight is always best in determining how people will misinterpret what you show them…People are pretty creative in that way!]

That IPCC stuff isn’t statistical on 2X CO2 = blah blah blah degrees. It’s a guess; science by vote, science by consensus, science by cherry-picking references and developing political scenarios.

And no, it’s not temperature, it’s an anomaly bounced off a model ensemble that’s right about smack dab in the middle of the ensemble. (Surprise, surprise, surprise.)

Joel #86; It’s not at all surprising the models tweaked to look like some physical process look like that physical process. The trouble is getting the sign and the quantity correct, which they suck at. 14 +/- 2.5? Come on, they’ve gotta do better than that, talking about .6 within that context.

Joel, I am happy to accept your point that a strong enough solar forcing, in principle, would yield tropical tropospheric amplification comparable to what models say was caused by GHGs. I have no reason to dispute that. But is that relevant for explaining historical changes? I don’t think you are seriously proposing that, as an empirical matter, solar changes explain half the historical temperature changes. (You’re not one of those denialists, are you?) The AR4 rules out a strong solar effect on climate. See Figure SPM2, where the historical solar forcing is asserted to be a tenth that of GHGs. The bullet point on page 5 of the SPM says their (already low) estimate of the solar influence has now been cut in half. On that basis, how could anyone use historical forcings in IPCC models and get a solar change that yields a temperature effect equivalent to that of GHGs? You must be referring to a GCM experiment in which the sun goes nova and, as a result, there is some tropical amplification.

For the present purpose, if we are in an interval in which solar output is steady or decreasing and GHG levels are increasing, the potential for tropical amplification from solar brightening is moot. We are in a natural experiment that will discriminate between hypotheses. So far, increased GHGs in the atmosphere since 1979 + little increase in solar flux + little tropical tropospheric warming = low GHG sensitivity.
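Calling the warming since 1979 "little" is a statement about a trend and its uncertainty, and radiosonde anomalies are strongly autocorrelated. Here is a minimal sketch with two stated assumptions. First, it uses an AR(1) noise model, which is simpler than an ARMA(1,1) fit. Second, it applies the common effective-sample-size correction n_eff = n(1 - r1)/(1 + r1), where r1 is the lag-1 autocorrelation of the regression residuals. The input is a synthetic monthly series generated by a hypothetical helper, not real radiosonde data.

```python
import random
import statistics


def ols_fit(y, dt=1.0):
    """Least-squares slope and intercept of y against t = 0, dt, 2*dt, ..."""
    t = [i * dt for i in range(len(y))]
    t_mean, y_mean = statistics.mean(t), statistics.mean(y)
    sxx = sum((ti - t_mean) ** 2 for ti in t)
    slope = sum((ti - t_mean) * (yi - y_mean) for ti, yi in zip(t, y)) / sxx
    return slope, y_mean - slope * t_mean


def trend_with_ar1_se(y, dt=1.0):
    """OLS slope plus a standard error inflated for lag-1 autocorrelation of residuals."""
    slope, intercept = ols_fit(y, dt)
    t = [i * dt for i in range(len(y))]
    resid = [yi - (intercept + slope * ti) for ti, yi in zip(t, y)]
    ss = sum(e * e for e in resid)
    r1 = sum(a * b for a, b in zip(resid[:-1], resid[1:])) / ss
    n = len(y)
    n_eff = n * (1 - r1) / (1 + r1)  # effective number of independent samples
    if n_eff <= 2:
        raise ValueError("too few effective samples for a trend error estimate")
    t_mean = statistics.mean(t)
    sxx = sum((ti - t_mean) ** 2 for ti in t)
    se = (ss / (n_eff - 2) / sxx) ** 0.5
    return slope, se, r1, n_eff


def synthetic_ar1_series(n, trend_per_step, phi, sigma, seed=0):
    """Hypothetical test series: linear trend plus AR(1) noise (not real radiosonde data)."""
    rng = random.Random(seed)
    noise, out = 0.0, []
    for i in range(n):
        noise = phi * noise + rng.gauss(0.0, sigma)
        out.append(trend_per_step * i + noise)
    return out


# 30 years of monthly values with a built-in trend of 0.1 deg C/decade.
monthly = synthetic_ar1_series(n=360, trend_per_step=0.1 / 120, phi=0.9, sigma=0.15)
slope, se, r1, n_eff = trend_with_ar1_se(monthly, dt=1 / 120)  # dt in decades
print(f"trend {slope:.3f} +/- {2 * se:.3f} deg C/decade (2 SE), r1 {r1:.2f}, n_eff {n_eff:.0f}")
```

With strong autocorrelation, the effective sample size falls far below the number of months, which widens the trend's error bars.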

Joel Shore,

But for the lapse rate to decrease, the specific humidity as well as the temperature must increase, i.e. relative humidity must remain more or less constant, which is roughly what I said in #84 above. If the radiosonde and satellite measurements are correct, however, then specific humidity at altitude is not increasing with temperature, and the parameterizations that cause the models to predict such an increase are wrong. If that is true, then out goes the water vapor ‘positive feedback’, and the climate sensitivity to a doubling of well-mixed GHGs is at or below the low end of the IPCC range.
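The link between constant relative humidity and rising specific humidity comes from Clausius-Clapeyron. Saturation vapour pressure rises steeply with temperature. If relative humidity is held fixed, specific humidity must rise by several percent per kelvin. If specific humidity is held fixed instead, relative humidity falls. This is a minimal sketch that assumes Bolton's saturation vapour pressure fit over liquid water. It uses a hypothetical upper-tropospheric parcel (400 hPa, 250 K, 50% relative humidity), not values from any dataset.

```python
import math


def saturation_vapor_pressure_hpa(t_k):
    """Bolton (1980) approximation over liquid water, in hPa."""
    t_c = t_k - 273.15
    return 6.112 * math.exp(17.67 * t_c / (t_c + 243.5))


def specific_humidity(t_k, p_hpa, rh):
    """Specific humidity (kg/kg) for relative humidity rh (0-1) at temperature t_k, pressure p_hpa."""
    e = rh * saturation_vapor_pressure_hpa(t_k)
    return 0.622 * e / (p_hpa - 0.378 * e)


def relative_humidity(t_k, p_hpa, q):
    """Relative humidity (0-1) implied by specific humidity q; inverse of specific_humidity."""
    e = q * p_hpa / (0.622 + 0.378 * q)
    return e / saturation_vapor_pressure_hpa(t_k)


# Hypothetical upper-tropospheric parcel.
p, t, rh = 400.0, 250.0, 0.5
q_now = specific_humidity(t, p, rh)
q_same_rh = specific_humidity(t + 1.0, p, rh)
print(f"q rises {100 * (q_same_rh / q_now - 1):.1f}% per K if RH stays at {rh:.0%}")
print(f"RH falls to {relative_humidity(t + 1.0, p, q_now):.1%} if q stays fixed")
```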

Ross (#90): We seem to be essentially in agreement now. The apparent discrepancy between the models and some of the observational datasets regarding the magnification of the warming in the tropical atmosphere is still a mystery. However, what I wanted to make clear is that it is not a direct contradiction of the hypothesis that greenhouse gases are causing the warming. It is a contradiction with much more basic physics…and a contradiction that only exists on these very long timescales where the data is rather suspect, making it seem quite likely to me that the problem is with the data, although obviously there is no way to know that for sure.