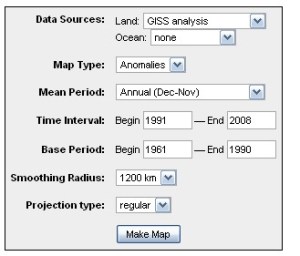

Jean S has written to me with another installment in our ongoing series of GISS conundrums. The puzzle starts with plotting the annual (Dec-Nov) GISS 1200 km anomaly map for the period 1991-2008 (here using the 1961-1990 reference period). As you can see, there is a Gavinesque red spot off the coast of Ecuador. Radio buttons generate plots at GISS here.


Figure 1. 1991-2008 1200 km anomaly

From the 250 km smooth (shown below), Jean S noted that the red spot could be pinned down to the Galapagos (more on this below.)


Figure 2. 1991-2008 Anomaly (250 km smooth).

Here’s Jean S’ conundrum. If you make precisely the same graphic, changing only to the Nov-Oct annual option, the red spot disappears. ???!!???


The data is at San Cristobal, Ecuador (Galapagos.)

Hansen et al 1999 stated:

there has been a real reduction since the 1960s in the number of stations making and reporting measurements.

I’ve observed on many occasions that, in my view, there has been no “real reduction” in the number of stations “making” measurements. On previous occasions, we’ve offered to help NASA locate data from Wellington NZ and Dawson, Canada (and other locations where NASA has been unable to locate data from stations that report data on the internet). Galapagos is another such situation. NASA only has measurements for 10% of the months since 1991, with long and puzzling gaps between measurements. It’s sort of like the intermittent correspondence between Bronze Age emperors in Egypt or Assyria and Hatti, where years might pass between messages. Readers may reasonably differ as to whether Hansen would be more aptly compared to Hattusilis III or Ramesses II, to pick two of the more prominent such correspondents. I presume that the temperature measurements from the Galapagos arrived at NASA in sealed envelopes after a similarly perilous journey.

Here is a plot of the anomaly data. You see the sparseness of the data after 1991. However, the royal messengers managed to get data for the big 1998 El Nino through to royal headquarters. Communications have been spotty since then, with only four measurements getting through enemy lines.

Figure 3. San Cristobal, Anomaly.

Here is the underlying data as downloaded from GISS without taking an anomaly. It seems like a pretty thin set of data to justify a Gavinesque red spot.

48 Comments

It strikes me as just plain absurd that fewer measurements of anything would be made after 1958.

snip

I blame the Finches.

Perhaps the temperature takers are cold blooded and can only stand to go out to read the temp during El Nino years…

My mom and dad were there on a cruise last spring. They said the weather was beautiful. Maybe we can just fill in the missing points with B’s for beautiful. Just for record-keeping purposes, today here in NYC is nice but a little chilly (NBALC).

It is just so devious the way you PLOT the DATA, as if that were a meaningful thing to do… If it is really the end of the world, can’t we free up a few people to plot out the stations and look at them? Nahhhh…

The famous tortoises of Galapagos must be delivering the data.

On a more serious note, the shuffling in and out of anomalies from an ever-changing set of stations is what invalidates the global compilations not only of GISS, but also of Hadley Centre. These inconsistencies become even more onerous over longer stretches of time. Their century-long results agree with compilations from a fixed set of stations with intact records only in the higher-frequency yearly variations. What is produced at the lowest frequencies, which determine the apparent “trend,” is largely an artifact of station choice (urban sites dominate throughout much of the globe) and “homogenization” method.

GISS admittedly adjusts world data quite extensively, and they are kind enough to show how much they add to the record. The adjustment steadily increases the global trend from as far back as 1850 until about 1980, when the adjustments level off, i.e., the adjustment to the global average more or less stops increasing.

Now, those adjustments might be well justified; I simply do not know what they do or how good their arguments for them may be. Coincidentally, though, the absence of increasing adjustment after 1980 is almost perfectly timed with the arrival of the first satellite measurements in 1979. The satellites are generally believed to outperform ground-based methods, so GISS could probably not keep raising the earth’s proposed temperature as they pleased after 1979.

What I hear Steve saying is that, even though Hansen says stations have been dropping out since the 1960s, Steve and others are still able to find inconvenient non-warming stations that steadily report daily data on the internet. So Hansen’s statement that stations are becoming scarcer may be questionable.

To Steve:

1) Are GISS intentionally or by fault leaving out non-warming stations?

2) Are GISS in your opinion selecting for stations that show a warming trend?

3) Did the satellites prevent GISS from continuing this selection?

Any trend can be created from cherry-picking – is that the real problem?

Steve: GISS uses GHCN data. I do not know whether Galapagos is an “inconvenient non-warming” station. With all the people involved in climate research, it annoys me that they can’t collect the stations that are in the 1991 inventory, but please do not go a bridge too far in imputing motives or malice, when a lack of initiative may well be involved.

Re: Sune (#8),

I’m glad you also noticed the adjustment “coincidence” circa 1980.

Re: John S. (#11),

I wonder whether anyone has a complete replica of GISS. It seems odd that the adjustments all of a sudden stop increasing just when satellites first appear. Has anyone got a reasonable answer for why GISS stops adding to the global trend after 1979-1980?

snip – blog policy prohibits discussion of motives

Re: Sune (#38),

Alas, any reasonable answer would be snipped.

There are lots of “anomalies” in these anomaly diagrams.

Another is Australia. On the 250 km plot there are a couple of brownish spots (0.5 to 1 range) in the center of the continent.

On the 1200 km plot, the entire western half is “normal” (-0.2 to 0.2), and the eastern half is slightly high (0.2 to 0.5).

That’s some smoothing (or smearing) there. How wide is Australia, anyway? It looks like just a couple of measurements were used for the whole continent.

Re: henry (#8),

Careful, Henry. The land area of Australia is not all that much smaller than that of the lower 48 US states, and the number of weather stations that have been started up is not so different either: over 1,000. It’s what the folks in the USA and GB do with our data that concerns me. For example, does the USA look hotter than Australia on the later maps because more of its weather stations have gone UHI?

Re: Geoff Sherrington (#22),

I understand about the land area and number of stations.

But if you look at Australia on the 250 km map, it’s surrounded by a ring of white (-0.2 to 0.2). If that ring is extended INWARD for the 1200 km map, then any interior stations would be swamped by that signal.

It’s almost like the anomalies of the continent are measured using the coastal stations, rather than the central stations.
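To see how a coastal ring can swamp an interior station, here is a simplified sketch of radius-limited smoothing. It assumes the linear distance weighting GISS describes for its gridding (a station’s weight falls from 1 at the grid point to 0 at the smoothing radius) and ignores everything else in the GISS procedure. The helper names `great_circle_km` and `smoothed_anomaly` are hypothetical, and the station positions and anomalies are invented to mimic a warm interior station ringed by near-normal ones.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; spherical-Earth approximation

def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance in km between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def smoothed_anomaly(point, stations, radius_km):
    """Distance-weighted mean anomaly at point = (lat, lon).

    Each station (lat, lon, anomaly) gets weight 1 - d/radius_km, clipped at 0,
    so stations beyond the radius are ignored. Returns None if none contribute.
    """
    num = den = 0.0
    for lat, lon, anomaly in stations:
        w = max(0.0, 1.0 - great_circle_km(point[0], point[1], lat, lon) / radius_km)
        num += w * anomaly
        den += w
    return num / den if den > 0 else None

# Hypothetical layout: one warm interior station ringed by four near-normal
# stations roughly 800-900 km away (not real Australian sites or values).
interior = (-24.0, 134.0)
stations = [
    (-24.0, 134.0, 0.8),   # interior
    (-24.0, 142.0, 0.0),   # east
    (-24.0, 126.0, 0.0),   # west
    (-16.0, 134.0, 0.0),   # north
    (-32.0, 134.0, 0.0),   # south
]
for radius in (250.0, 1200.0):
    print(radius, round(smoothed_anomaly(interior, stations, radius), 2))
```

With these made-up numbers the grid point on the interior station reads 0.8 at 250 km but drops to roughly 0.37 at 1200 km, because the ring stations now carry weight.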

1) Are GISS intentionally or by fault leaving out non-warming stations?

2) Are GISS in your opinion selecting for stations that show a warming trend?

—

Most of it appears to be due to lazy collection methods. GISS gets their data from a NASA group or contractor, I’m not sure which. That source has not bothered keeping up with modern reporting systems.

WUWT has a link to a video regarding recent record temperatures that were “set” in Hawaii. A meteorologist explains at the end of the video that the ASOS hardware installed at airports to measure temperature was never intended to provide the type of data it is now asked to provide. Apparently the hardware has an accuracy of +/-2 degrees when measuring temperature. In addition, the equipment in Hawaii was out of calibration by 2 degrees on the high side, resulting in a string of record highs. WUWT indicates that a number of these ASOS stations, all located at airports, contribute to the climate record.

The Dec-Nov map also has a red blotch in an empty part of the mid-Atlantic, east of the Georgia/Florida border and centered around 29N 57W. It must be a teleconnection to the Galapagos, since the only land within 1200 km is Bermuda at 32N 64W, about 750 km away.
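The 750 km figure can be checked with the standard haversine great-circle formula. This sketch assumes a spherical Earth of mean radius 6371 km (an approximation) and enters west longitudes as negative numbers; `haversine_km` is a hypothetical helper name.

```python
import math

def haversine_km(lat1, lon1, lat2, lon2, radius_km=6371.0):
    """Great-circle distance in km on a sphere; north and east are positive degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * radius_km * math.asin(math.sqrt(a))

# Blotch centre (29N 57W) to Bermuda as given above (32N 64W); west is negative.
d = haversine_km(29.0, -57.0, 32.0, -64.0)
print(f"{d:.0f} km; inside 1200 km: {d < 1200}; inside 250 km: {d < 250}")
```

This puts Bermuda at about 750 km from the blotch centre: inside a 1200 km radius, well outside 250 km.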

Re: Bob Koss (#14),

There’s an NDBC buoy out there.

Re: John S. (#16), you’re right. There is a buoy in the area. But if GISS were using it, I would expect it to be in their station inventory. It’s not. The closest buoy is located at 33.54N 50.30W, but it doesn’t seem to fit the bill. NDBC buoy map.

Here is the GISS anomaly data for that Atlantic area.

| Lon | Lat | Anomaly (°C) |
|---|---|---|
| -55.00 | 27.00 | 1.1093 |
| -59.00 | 29.00 | 1.1080 |
| -57.00 | 29.00 | 1.1098 |
| -55.00 | 29.00 | 1.1115 |
| -53.00 | 29.00 | 1.1152 |
| -51.00 | 29.00 | 1.1152 |
| -61.00 | 31.00 | 1.1077 |
| -59.00 | 31.00 | 1.1090 |
| -57.00 | 31.00 | 1.1105 |
| -55.00 | 31.00 | 1.1119 |
| -53.00 | 31.00 | 1.1152 |
| -51.00 | 31.00 | 1.1152 |
| -53.00 | 33.00 | 1.1150 |
| -51.00 | 33.00 | 1.1150 |

The buoy is in the last grid cell above. If it were being used, the adjacent cells shown below would have valid values due to smoothing. So it’s not used.

| Lon | Lat | Anomaly (°C) |
|---|---|---|
| -49.00 | 33.00 | 9999.0000 |
| -47.00 | 33.00 | 9999.0000 |

PS

GISS does have some ships in their inventory, but none of them qualify either.
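Bob’s argument rests on finding which 2° cell the buoy falls in and checking its neighbours for the 9999 missing-value code. A small sketch of that check follows. It assumes, from the odd-degree cell centres in the listing, that cell edges fall on even degrees; `cell_center` and `lookup` are hypothetical helpers, and only a few rows of the listing are loaded.

```python
import math

MISSING = 9999.0  # empty-cell code, as in the gridded listing above

def cell_center(value_deg, size_deg=2.0):
    """Centre of the cell containing value_deg, assuming edges on multiples of size_deg."""
    return size_deg * math.floor(value_deg / size_deg) + size_deg / 2

def lookup(grid, lon, lat):
    """Anomaly for the cell containing (lon, lat), or None if absent or flagged missing."""
    value = grid.get((cell_center(lon), cell_center(lat)))
    return None if value is None or value == MISSING else value

# A few rows from the listing, keyed by (lon, lat) cell centre.
grid = {
    (-53.0, 33.0): 1.1150,
    (-51.0, 33.0): 1.1150,
    (-49.0, 33.0): MISSING,
    (-47.0, 33.0): MISSING,
}

buoy_lon, buoy_lat = -50.30, 33.54
print("buoy cell:", cell_center(buoy_lon), cell_center(buoy_lat))
print("buoy cell value:", lookup(grid, buoy_lon, buoy_lat))
print("east neighbour:", lookup(grid, -49.0, 33.0))  # empty in the listing
```

If the buoy fed the analysis, smoothing would spread a value into the eastern neighbour instead of leaving it empty.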

I would say this looks like more of the really sloppy work we’ve come to expect. Didn’t Gavin estimate that elementary quality control would require a minimum of $500,000 a year (to make sure that temperature data wasn’t a repeat of the month before)? How much would it cost to have someone locate “missing” data? 10 million?

Steve, not only does the red spot disappear, but there is also a huge drop-off in the Zonal Mean at very high latitudes (about 80-90 degrees North), eliminating the huge orange glow from the Arctic.

Interesting: The red spot appears to be primarily related to the Dec-Nov timeframe, as opposed to the Nov-Oct. Specifically, the Nov-Oct timeframe eliminates the red spot, even at the 1200km smoothing radius.

In contrast, the orange glow in the Arctic appears to be primarily related to using a 1200km smoothing radius, as opposed to a 250km smoothing radius.

Here’s the November 2008 anomaly map as presented by GISS in December 2008:

And here is the November 2008 map as presented by GISS today:

Some data has been added since December, making the map more complete; no surprise there. What did mildly surprise me was that this late-arriving data, presumably from more remote regions, was “warm” enough to raise the global anomaly from the original 0.48C to a revised 0.52C. I wish I had other initial vs. revised maps to see whether this example is representative.

Re: David Smith (#18), if you look closely you will see they added another 2 degrees of latitude to the area they are covering. Now the S. Pole color extends to grid cell 81S; previously it only went to 83S. That’s understandable with the 1200 km smoothing (10 degrees of latitude is about 1111 km), but they run the 250 km smoothing out to the same distance. So, in that case, it seems they smooth the temperature to 250 km and then use whatever the temperature at 250 km happens to be to fill the grid the rest of the way out to 1111 km.

I downloaded some maps at the end of 2008 that look at temperatures from a few of the past years if you’re really interested in seeing them. I also have the anomaly datasets that go along with them. I have your email, just let me know if you want them.

Heh. I just looked at the Jan 2007 map downloaded in 2008 and the anomaly color at the S. Pole is different from the current color they show for that period. Their constant adjustments reduced the anomaly 0.02C causing a color change.

Re: Bob Koss (#19), Thanks, Bob, please do e-mail those to me.

Re: David Smith (#26), just sent them.

Maybe we should send Anthony Watts to go take a photo of the remaining weather station on the island.

>For example, McIntyre got a lot wrong in his meanderings on Mann et al 2008 PNAS reconstruction. There was no excuse as he had a detailed SI and full source code to work from.

From Tamino’s blog.

Re: MikeN (#21),

Where can I find this Tamino quote and the reasoning behind it?

I’m aware of Mann’s entirely inadequate response, based in part on data made public only after Steve’s letter was submitted.

Is there some other basis for this? I can’t find the Tamino quote by googling for it.

Re: Jason (#40),

There is no basis for the assertion that I “got a lot wrong” in my analysis of Mann’s PNAS paper. Ross and I published a comment on Mann et al 2008 at PNAS and every point in our Comment stands up, including the assertion that Mann used the Tiljander data upside down. Mann’s Reply to our comment, on the other hand, is absurd.

In the case of Mann et al 2008, he provided a lot of data and code. Most of the code is horrendous, but we were able to wade through the CPS portion eventually. The situation was quite different from MBH98, where Mann provided code only after a request from the House Energy and Commerce Committee. Even with code in hand, it’s not as easy as all that to decode what was done in a first pass. The commenting and documentation of the methodology are extremely poor and the code is very hard to follow. I’m sure that somewhere in the various threads I made a misstep or two in trying to decode obscure Mannian methodology. I post on such matters so that interested CA readers can chip into the detective work, and I welcome their contributions. There are many highly qualified and able CA readers who have figured out obscure Mannian methods that eluded me in a first pass; that’s one of the strengths of the site. Indeed, the obscurities and problems are so severe that other sites (particularly Jeff Id’s) are working on similar problems in a very cooperative way with CA.

Our efforts at the time were restricted to trying to understand the variations to CPS methodology in Mann 2008. We didn’t even try to sort out the RegEM code at the time. With the work on Steig et al, the RegEM diagnosis is far more accessible than it was before.

Re: Jason (#40),

I’d like to see that one too. The code is basically replicated with plenty of questions. Keeping the conversation PC, Tammie makes a few mistakes himself… ‘got a lot wrong’ could use some explanation.

Re: Jason (#40), Re: jeff Id (#42),

It doesn’t appear to be something Tamino said, but Deep Climate commenting on Tamino’s site. Comment link:

http://tamino.wordpress.com/2009/05/22/its-going-to-get-worse/#comment-32115

DC references a CA post where Steve was investigating a hunch, which Ryan O solved; Steve then acknowledged Ryan’s observations in the head post. DC takes Steve to task for getting his initial hunch wrong.

What DC doesn’t seem to “get” is that an important part of science is looking into ideas and hunches, weighing up the evidence and then changing your mind if the evidence requires it. This concept of objectivity, and following the evidence, is entirely alien to the likes of DC, Tamino, RealClimate, and they don’t recognise or understand the importance of it (even when Ian Jolliffe drops by and spells it out to them in crystal clear baby steps).

Re: Spence_UK (#43),

Obviously my familiarity with proxy data and methods is much greater than my familiarity with the data in other areas – a caveat that I’ve expressed on many occasions. The link in question has nothing to do with Mann 2008 or other proxy studies. I remain mystified by exactly what I got wrong about Mann 2008 – does DC think that the Tiljander proxies were used upside-up 🙂 Mann’s making code available enabled us to determine that he used these proxies upside-down, but it doesn’t excuse him doing so in the first instance. And yet Mann’s use of upside-down proxies doesn’t seem to warrant notice in Team world.

Steve,

This is quite odd: using the larger smoothing radius should decrease variation around the trend surface, while the smaller one should emphasize those variations. (I assume that the maps are derived from fitting a trend surface to the station data, so lack of data in the more recent period is neither here nor there; it should have zero effect.)

Is the data used to produce the maps easily accessible ? (I might have to be counselled on this, and then does that mean legal counsel, or a shrink) 🙂

This stuff is no different to geostats analysis of ore grades in a mine, where the smoothing radius is empirically determined from the variogram computed from the data.

I wonder if there is an error in the coding of the software used to generate the maps reproduced above?
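For readers unfamiliar with the geostatistics analogy above: the classical (Matheron) empirical semivariogram averages half the squared difference between pairs of observations, grouped by separation distance, and the distance at which it levels off (the “range”) is the usual empirical guide to a search or smoothing radius. This is a minimal sketch with planar coordinates; applying it to station data would need great-circle distances and a choice of time period, both left out. `empirical_semivariogram` is a hypothetical name and the demo points are invented.

```python
import math
from collections import defaultdict

def empirical_semivariogram(points, bin_width):
    """Classical estimator: gamma(h) = sum((z_i - z_j)^2) / (2 * N(h)).

    points: list of (x, y, z), with x and y in the same units as bin_width.
    Pairs are binned by separation into [k*w, (k+1)*w).
    Returns {bin_centre: (gamma, pair_count)} for non-empty bins.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for i in range(len(points)):
        xi, yi, zi = points[i]
        for j in range(i + 1, len(points)):
            xj, yj, zj = points[j]
            b = int(math.hypot(xi - xj, yi - yj) // bin_width)
            sums[b] += (zi - zj) ** 2
            counts[b] += 1
    return {(b + 0.5) * bin_width: (sums[b] / (2 * counts[b]), counts[b])
            for b in sorted(counts)}

# Invented data: values that rise linearly along a transect.
pts = [(0.0, 0.0, 0.0), (1.0, 0.0, 1.0), (2.0, 0.0, 2.0), (3.0, 0.0, 3.0)]
for h, (gamma, n_pairs) in empirical_semivariogram(pts, 1.0).items():
    print(h, gamma, n_pairs)
```

For a pure linear trend the semivariogram keeps rising (as h²/2) and never reaches a sill, which is why a trend is normally removed before the range is estimated.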

#23

I just realised that we are dealing with data that vary not only spatially (lat/long) but also in a 4th dimension, time. It gets worse as well: the data are 3D in a GIS frame (lat/long/elevation), then temp makes it 4D, and then time makes it 5D.

There is no theoretical basis for computing a 5D trend surface viewed in 2D from a 5D dataset. Escher (I am related) would have a problem drawing this in 2D.

Given the domination by the post modernists in academia, this comes as no surprise.

#24

(Still getting used to the Apple system)

You cannot derive a 2D trend surface etc. from a 5D dataset. (You can, but it’s physically meaningless. Imaginatively, another matter.)

Apologies.

I had noted in post #39 at http://www.climateaudit.org/?p=6106 that I saw large differences between the GISS 1200 km and GISS 250 km smoothed temperature anomaly series for the global zone 20S to 20N, extracted from the KNMI web site http://climexp.knmi.nl/selectfield_obs.cgi?someone@somewhere, when I differenced these 2 series from the UAH and RSS T2LT and T2 troposphere temperature anomaly series. The 1200 km GISS series gave results more like the land and ocean HadCru3 series. All series were monthly and ran from 1979 through April 2009.

I thought that this thread may be the appropriate place to post the results I obtained when directly comparing the GISS 250km and 1200 km series. The results are listed below and include a difference graph. The R script is included. The differences trend is significantly different from 0 and, in my view, surprisingly large. The SE for the trend slope was adjusted with the Nychka method from Santer et al (2008) and 95% CIs were calculated and reported below. The combined output of the acf and pacf functions strongly indicated that the regression residuals were limited to AR1. I have to ask the question: Should the difference in smoothing radius make a significant difference in trends? And which smooth should be more correct? And why?

GISS 1200 km – GISS 250 km for the global zone 20S to 20N for the period 1979-April 2009 with data series extracted from the KNMI web site (trend is in degrees C per decade):

Trend slope = 0.02082; SE of Trend Slope = 0.00173; n = 364; AR1 of residuals = 0.317; Adj R^2 = 0.284; Adjusted 95% CIs = 0.016 to 0.0255.
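The autocorrelation adjustment described above can be sketched as follows. This assumes the effective-sample-size form used in Santer et al. (2008), n_e = n(1 - r1)/(1 + r1), with the OLS standard error inflated by sqrt((n - 2)/(n_e - 2)); Nychka’s version adds a further small-sample correction that is omitted here, and a normal 1.96 critical value stands in for the t quantile. It is not the R script mentioned above, and `ar1_adjusted_ci` is a hypothetical name.

```python
import math

def ar1_adjusted_ci(slope, se, n, r1, z=1.96):
    """Widen an OLS trend's standard error for lag-1 autocorrelated residuals.

    n_e = n (1 - r1) / (1 + r1); SE is scaled by sqrt((n - 2) / (n_e - 2)).
    Uses a normal critical value z rather than a t quantile with n_e - 2 df.
    """
    n_eff = n * (1 - r1) / (1 + r1)
    se_adj = se * math.sqrt((n - 2) / (n_eff - 2))
    half_width = z * se_adj
    return n_eff, se_adj, (slope - half_width, slope + half_width)

# Inputs are the values reported above for GISS 1200 km minus GISS 250 km.
n_eff, se_adj, (lo, hi) = ar1_adjusted_ci(slope=0.02082, se=0.00173, n=364, r1=0.317)
print(f"n_eff={n_eff:.1f}  adjusted SE={se_adj:.5f}  95% CI={lo:.4f} to {hi:.4f}")
```

With the reported inputs this gives an effective sample size of about 189 and an interval of about 0.0161 to 0.0255, in line with the adjusted CIs quoted.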

Re: Kenneth Fritsch (#29),

While I think that Bob Koss’ detective work was commendable and that my interjection of the post on trend differences between GISS 1200 km and GISS 250 km for 20S to 20N for 1979-2009 could be considered butting in, I continue to wonder why there should be a statistically significant difference between these two GISS trends, amounting on average to about 0.2 degrees C per century.

Is it true that the short term “hot” anomaly caught by Jean S is an artifact of the map presentation and is not used in the data series?

Galapagos conundrum solved.

It seems the year 1997 is the only year in Jean’s time interval that has sufficient data to create an anomaly.

The reason the anomaly for the Galapagos only appears in the Dec-Nov map is because that period has 7 months while the Nov-Oct period only has 6 months of data. They are only requiring any 7 valid months per year instead of requiring 3 valid quarters to create an anomaly. The anomaly was 2.7 for the Galapagos.

Here is a graph of the time period in question.

Here is pseudo-code for Jan-Dec 1997; it would be adjusted for the Dec-Nov and Nov-Oct years, which cross calendar years.

Unfortunately it doesn’t look like it is going to format properly.

```
target.year = 1997
first.year = 1951
last.year = target.year - 1
anomaly = 0
anomalycount = 0

For month = 1 to 12
    if target.year.month <> NA
        anomaly = anomaly + (target.year.month - average(first.year.month; last.year.month))
        anomalycount = anomalycount + 1
    end if
next

if anomalycount > 6 then
    yearlyanomaly = anomaly/anomalycount
else
    discard target
end if
```
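A runnable Python version of the pseudo-code above, as a sketch: the record is a hypothetical dict keyed by (year, month) with missing months simply absent, and months with no baseline history are skipped (the pseudo-code does not say what happens then). Only the calendar-year case is handled; a Dec-Nov or Nov-Oct year would need the month window shifted across the year boundary. `yearly_anomaly` is a hypothetical name and the demo data are invented, not the San Cristobal record.

```python
from statistics import mean

def yearly_anomaly(record, target_year, first_year=1951, min_months=7):
    """Mean monthly anomaly for target_year, or None if fewer than min_months qualify.

    record: dict mapping (year, month) -> monthly mean temperature; missing months are absent.
    Each month's baseline is its mean over first_year .. target_year - 1, as in the pseudo-code.
    """
    total = 0.0
    count = 0
    for month in range(1, 13):
        value = record.get((target_year, month))
        if value is None:
            continue  # month missing in the target year
        history = [record[(y, month)] for y in range(first_year, target_year)
                   if (y, month) in record]
        if not history:
            continue  # no baseline for this calendar month
        total += value - mean(history)
        count += 1
    # "anomalycount > 6" in the pseudo-code, i.e. at least 7 valid months
    return total / count if count >= min_months else None

# Invented example: a flat 20.0 C baseline, then 1997 with seven months at 22.7 C.
record = {(y, m): 20.0 for y in range(1951, 1997) for m in range(1, 13)}
record.update({(1997, m): 22.7 for m in range(1, 8)})
print(yearly_anomaly(record, 1997))
del record[(1997, 7)]  # drop to six months: no annual value
print(yearly_anomaly(record, 1997))
```

Dropping a single month flips the result from an annual anomaly to nothing, which is the behaviour behind the Dec-Nov versus Nov-Oct difference discussed here.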

Re: Bob Koss (#30),

Nicely done!

Re: Bob Koss (#30), Re: Pete D (#32),

Yes, 1997 is the only year that is included. You can verify that by setting the time interval to single years, only 1997 gives a value. So the whole large red spot in Steve’s first figure is created with a questionable single year anomaly calculation for a single station… I guess that’s called “robust” in some circles 😉

But here’s the real mystery: according to GISS (station) rules, there is enough data (after 1990) to calculate an annual anomaly only if you consider the period 1997/Mar-1998/Feb. Then there are three seasons (out of four) that have two (out of three) monthly values present (hence enough to determine the seasonal anomaly). None of the Dec-Nov or Jan-Dec periods fulfill these conditions. Bob’s theory is a possible explanation, but shouldn’t that then be disclosed somewhere? Instead of saying:

BTW, you can use that red spot and the San Cristobal station data (the station is about 1000 km from another GISS station, so only San Cristobal is included in the 250 km smoothing) to figure out various things about the GISS map algorithm. For instance, I was able to determine that a trend is calculated if 2/3 of the annual values are present. This agrees with the explanation (GT=greater than):

However, as we saw already, for reporting a mean a single anomaly is enough…
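The GISS station rules described here can be sketched as window checks: a season counts if at least 2 of its 3 months are present, a year counts if at least 3 of its 4 seasons do, and a trend is computed only when about two-thirds of the annual values exist. The helper names are hypothetical, whether the 2/3 boundary is inclusive is an assumption, and the month availability in the demo is invented, not the real San Cristobal record.

```python
def season_ok(available, months):
    """A seasonal mean is formed only if at least 2 of its 3 months have data."""
    return sum(1 for ym in months if ym in available) >= 2

def annual_ok(available, start_year, start_month):
    """True if at least 3 of the 4 three-month seasons in the 12-month window
    starting at (start_year, start_month) qualify; start_month=12 gives Dec-Nov."""
    months = []
    year, month = start_year, start_month
    for _ in range(12):
        months.append((year, month))
        month += 1
        if month > 12:
            year, month = year + 1, 1
    seasons = [months[i:i + 3] for i in range(0, 12, 3)]
    return sum(1 for s in seasons if season_ok(available, s)) >= 3

def trend_if_covered(years, annual_values):
    """OLS slope over non-missing annual values, or None if fewer than 2/3 are present.
    The inclusive boundary (exactly 2/3 passes) is an assumption."""
    pairs = [(y, v) for y, v in zip(years, annual_values) if v is not None]
    if len(pairs) < 2 or 3 * len(pairs) < 2 * len(years):
        return None
    xbar = sum(y for y, _ in pairs) / len(pairs)
    vbar = sum(v for _, v in pairs) / len(pairs)
    sxy = sum((y - xbar) * (v - vbar) for y, v in pairs)
    sxx = sum((y - xbar) ** 2 for y, _ in pairs)
    return sxy / sxx

# Invented availability (NOT the real station record): months with data.
available = {(1997, 3), (1997, 4), (1997, 6), (1997, 7), (1997, 9), (1998, 1), (1998, 2)}
print("Dec 1996-Nov 1997 qualifies:", annual_ok(available, 1996, 12))
print("Mar 1997-Feb 1998 qualifies:", annual_ok(available, 1997, 3))
```

In this invented case only the Mar-Feb window passes, because it picks up a usable Dec-Jan-Feb season that the Dec-Nov window misses.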

Re: Jean S (#36), they don’t seem to be following this rule when doing the maps.

If you look at my pseudo-code you will see I only computed the average monthly mean up to the year before 1997, then subtracted it from the 1997 value. My anomaly of 2.7 matches the anomaly provided in the gridded data that accompanies the map (search for 2.7007). When I instead used the average monthly mean for the whole record, I came out with an anomaly of 2.61.

I see my angle brackets were stripped out by the software. The line should read “If target.year.month (does not equal) NA”.

If you follow the NASA link and re-create the first map (“Annual D-N 1991-2008” etc.), and then change the period to Nov-Oct, most of the area around the Galapagos changes from red to grey. Grey means no data, so where did the data go? Or, to put it another way, where did the data in the D-N map come from? Changing from D-N to N-O shifts only one month of input data, yet that is enough to create this “anomaly”. Hmm.

Obviously I’ve missed something; surely you couldn’t create a 2 to 4 degree C anomaly over a 17-year period from one month’s data?

Re: Pete D (#32), read Re: Bob Koss (#30).

1997 was the only year between 1991 and 2008 with sufficient months of data to create a yearly anomaly (7 required), and then only if the Dec-Nov period is used. So that single yearly anomaly was used to represent the entire 1991-2008 period.

The Nov-Oct period was a month shy on data. So no years qualified.

Bob Koss: (#34)

Bob, very interesting! Do you think this issue permeates the entire spectrum of GISS data processing and/or post-processing algorithms (that is, that the Galapagos anomalous anomalies are not an isolated instance)? Having examined GISTEMP, I’m not surprised that these kinds of bizarre behaviors are observed…

Here’s the quote which was apparently from Dr. Deep Climate (who clipped my post for pointing out NOAA lowess treatment of end data):

He’s right that I misinterpreted the meaning of the paper but it wasn’t terribly clear. The funny thing about this is that the point Ryan makes about including data in modeled information prior to verification is the same accusation Mann and Steig had against Ryan in his improved reconstruction. The difference is again that Mann and Steig did it despite repeated clarifications that using the unmodified satellite data in Ryan’s verification produced the same result — they could not admit it and closed the thread.

No more discussion.

Sorry, I just realized how far OT this is. Please snip this if it’s too off topic. Apparently, I need a nap.

DC is not up to the task of criticizing the Mann 08 autopsy. He or she may be in the future because DC studies hard, but not yet.

I’m going to redo the work I did before on M08 now that I can write R code. The redo was prompted by criticism on RC and elsewhere from people who claim the previous work I did was proven wrong. I have no idea what they are talking about, as I don’t recall any criticism of it whatsoever. SteveM and Dr. Deep above are a separate issue; again, I have no idea what Deep is talking about. It therefore seems important to have a clear record.

I started with this post by cleaning up and heavily commenting a CPS signal search demonstration in R.

I made it turnkey and put a comment on nearly every line to make it as clear as possible.