The 2009 Climate Dynamics paper “Unprecedented low twentieth century winter sea ice extent in the Western Nordic Seas since A.D. 1200” by M. Macias Fauria, A. Grinsted, et al. discussed already on the thread Svalbard’s Lost Decades pre-smooths its data with a 5-year cubic spline before running its regressions.

There’s been a lot of discussion of smoothing here on CA, especially as it relates to endpoints. However, splines remain something of a novelty here.

A cubic spline is simply a piecewise cubic function, with discontinuities in its third derivative at selected “knot points,” but continuous lower order derivatives. This curve happens to approximate the shapes taken by a mechanical spline, a flexible drafting tool, and minimizes the energy required to force the mechanical spline through selected values at the knot points. The “5-year” spline used by Macias Fauria et al presumably has knot points spaced 5 years apart.

While splines can generate nice smooth pictures, they have no magic statistical properties, and have some special problems of their own. Before performing statistical analysis on spline smoothed data, William Briggs’ article, “Do not smooth time series, you hockey puck!” should be required reading. His admonition,

Unless the data is measured with error, you never, ever, for no reason, under no threat, SMOOTH the series! And if for some bizarre reason you do smooth it, you absolutely on pain of death do NOT use the smoothed series as input for other analyses!

is as valid as ever.

A function y(t) that is a spline function of time t with knots at t = k1, k2, … is simply a linear combination of the functions 1, t, t^2, t^3, max(t-k1,0)^3, max(t-k2,0)^3, … Given data on n+1 values y(0), y(1), … y(n), the coefficients on these functions may be found by a least squares regression. The smoothed values z(t) are just the predicted values from this regression, and these in turn are simply weighted averages of the y(t) observations.

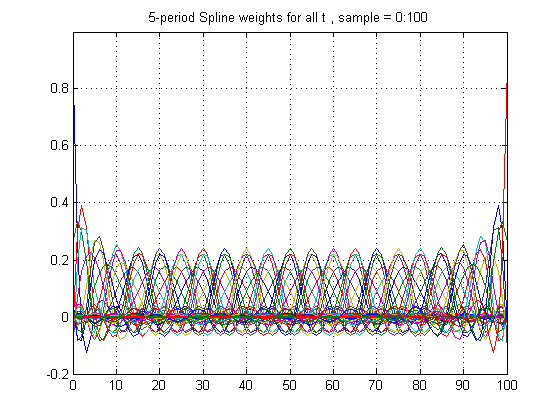

Figure 1 below shows the weight each z(t) places on each y(t’) when n = 100 so that the sample runs from 0 to 100, with knots every 5 years at k1 = 5, k2 = 10, etc:

Figure 1

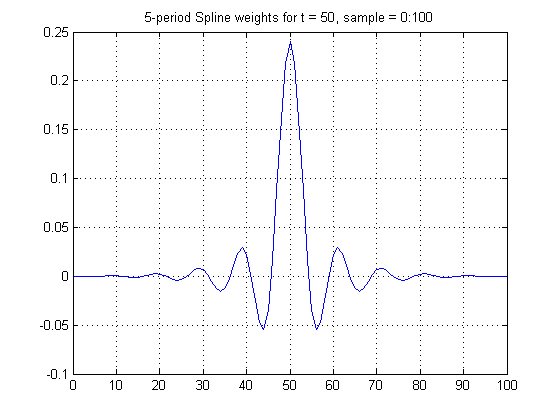

Figure 2 below shows the weights for z(50). Since t = 50 is centrally located between 0 and 100, these weights are precisely symmetrical. However, unlike a simple rectangular 5-year centered filter, the weights extend far in both directions, so that spline smoothing can induce serial correlation at leads and lags far in excess of 5.

Figure 2

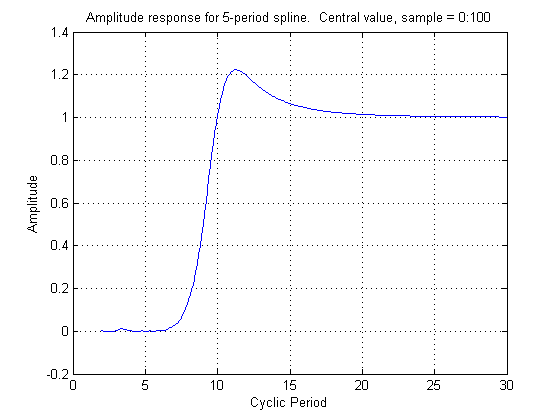

Figure 3 below shows the frequency response function for the weights of Figure 2. The amplitude is near 0 for cycles with periods under 5.7 years and is .50 for 9.0 year cycles. Unlike the frequency response functions we saw in the discussion of Rahmstorf’s smoother, comments #34, 37, 178 and 203, however, there is actually magnification of some frequencies above unity, reaching a peak of 1.22 at 11.2 years.

Figure 3

Figure 1 shows that the weights when t is between knot points are somewhat flatter than when t is right at a knot point. The frequency response between knots is therefore somewhat longer than at knot points, so that there is no unambiguous frequency response, even far from the end points.

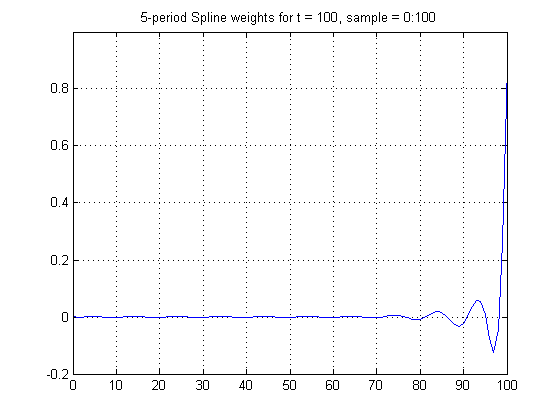

Figure 1 also shows that the weights for z(t) behave very differently as t approaches the end points. Figure 4 below shows these weights for the very last point, t = 100. Clearly they are highly skewed.

Figure 4

Because of the skewness of the weights for t = 100, the frequency response is a complex-valued function, shown in Figure 5 below. The overall frequency response is given by the magnitude of this function, shown in red. The magnitude is above 0.6 for all periods above the Nyquist period of 2, and amplifies by a factor of about 1.4 at 6-8 year periods, a very strange frequency response indeed. Furthermore, when the complex part is non-zero, there is also a phase shift.

Figure 5

Although a cubic spline produces values for z(t) for all values of t, the ones near the end of the observed sample are particularly noisy and erratic unless some additional restriction or restrictions (like the zero second derivative of a “natural” spline) are imposed.

187 Comments

I’m baffled; if you are anyway going to fit the data using least squares, or the like, why on earth bother to smooth or filter it first? There’s no legitimate advantage. I say again, this sort of stuff wouldn’t pass muster in a final year undergraduate research project.

I was wondering about that. And on a related note, I was also wondering if wavelet analysis was really the best tool in the box for some of these problems, or if it was just the newest, coolest toy that you just have to use if you want to be one of the cool kids.

It seems like there’s an unstated belief that high frequency components are all noise, and low frequency are all data, and these filters simply remove error from underlying physical phenomena, leaving pure, perfect physical data. At least that’s my sense of how these filters are being used.

What effect would it have on their conclusions if this step were left out? Is there a reason given in the paper for this seemingly extraneous filter, and if so, is it valid?

The goal in “climate science” appears to be to make their data visually appealing before analysis. An interesting approach for sure, but can it really be called science?

As a practical matter, McCulloch is going to have to re-run the computations on the original data with no smoothing, or different filters altogether, for him to impact the ideas of the people he’s trying to reach.

The statistics involved in climate studies become every more elaborate nonsense…

Although I have to say, Briggs is a real buzz kill! No smoothing at all? Where’s the fun in that? 🙂

No, no, I get what’s wrong with it. It’s constraining but it makes sense.

What’s even more funny, is that any cubic spline, particularly piecewise, can’t be used for prediction

Nick

Re: Nick (#7),

Neither can any other kind of curve-fit that isn’t based on a physical model.

This is what caused the real estate bubble; people who thought that positive exponentials can increase unbounded. It’s a most amazing thing in retrospect, how many “smart” and powerful people threw so much capital at something so obviously flawed.

And they still don’t seem to have learned their lesson. They’re still throwing astronomical resources at unsustainable goals based on simple-minded extrapolation.

Smoothing functions do have their uses: presenting noisy information in graphic form and getting some insight into the behaviour of random processes.

The problem that occurs when smoothing is done before doing regressions is that the end result understates the error inherent in the estimates of the parameters and predicted values and severely overstates the effectiveness of the regression procedure. Apparent correlations are magnified, Rsquares are higher, but it is no longer possible to carry out proper hypothesis testing without determining the adjustments which may be needed.

It is exactly this exaggeration that people who do not understand the drawbacks find so attractive: “Look at how great the results we get are; the p-values are real small” – without realizing that in fact they have violated the assumptions under which those results are interpreted.

In some cases, the correct error bounds can be calculated for such procedures, but it should be understood that they are invariably larger than those when the procedure is done correctly on the unsmoothed data.

Re: RomanM (#9), Macias et al explain why they used the 5-year spline smoothing. They believed their ice core data did not have reliable annual time resolution:

And they were aware of the resulting autocorrelation, and took fairly elaborate corrective measures:

Re: Nick Stokes (#16),

The problem here is that there are two effects going on. The underlying data may be autocorrelated to start with. This is then complicated further by the smoothing procedure involving cubic polynomials with varying coefficients along the sequence. To think that it may be corrected by AR methods and Monte Carlo white noise could be a bit of a stretch. I think a theoretical solution would be necessary.

Out of curiosity, is anyone here aware of specific properties of cubic splines that could particularly useful for correcting dating errors? I can’t think of any such offhand.

Re: romanm (#17), No smoothing technique can correct dating errors, or lack of measurement resolution. The best you can do is to acknowledge it, and reduce associated noise.

Smoothing with cubic splines is widely used in all kinds of scientific activity. This paper by the statistician Grace Wahba sets out some of its attractive properties.

The way Macias et al handle autocorrelation after smoothing corrects for the effect of all correlation in the smoothed time series, not just that which was introduced.

Re: Nick Stokes (#20),

Hmmm, this does look interesting. Thanks Nick.

From mr. BNriggs;

Oh, how simple life is.

This is the biggest frustration I have, anmong many others. It is something I find hard to extend to non-techies, be they not scientist or engineer.

RomanM.

When one is designing and building a radio communication system, smoothing the incoming signal at the receiver will not produce a lower Bit Error Rate. One must filter out the noise. To do that, one must know what that noise is. In general, one doesn’t, and it is a fool’s errand to attempt to do so.

I reckon the same applies to noise in natural signals.

BTW Has anyone done an investigation of the Signal-to-Noise Ratio of the “global temperature”? I guess not as we don’t know the signal but we see a lot of noise.

Re: Robert Wood (#11), Bingo.

RE Nick, #7,

You can extrapolate the last segment of a cubic spline, but being cubic, it’s headed fast either for plus infinity or minus infinity, and hence probably meaningless. The standard errors on such an extrapolation also blow up cubically with the distance extrapolated, and usually indicate the meaningless of the extrapolation. And the noisiness of the smoother as you approach the endpoints.

Since Macias Fauria et al are running smoothed sea ice on smoothed proxies, the terminal end of their projection (where the modern “unprecedented” values occur) is subject to noise both from smoothing the proxies and from the effect of the terminal smoothed SI series on the regression coefficients.

Re: Hu McCulloch (#12),

A lot of people don’t see that as a problem. This is what’s so pernicious about the hockey stick and other similar graphs; if I were to take 100 people at random, and ask them what it means, 90+ would say that it’s going to go up forever, and fast.

Re: Hu McCulloch (#12),

I agree that using a cubic for extrapolation is a bad idea, but the coefficients of the cubic give you an estimate of the first, second and third derivative which you could use for extrapolation if you had a suitable model.

Climate science seems to involve way too much unjustified curve fitting.

When one fits a curve, one makes assumptions about what is data and what is simple, random, measurement noise.

Most of the wiggles we see in climate data have a physical basis, I think — in other words, they are rarely random measurement noise to be “smoothed or filtered out”.

I do not understand statistics, a closed book, but I do understand a little about pcm data.

I suspect that most of statistics is not applicable to sequence related data, domain error and yet in climatic every man and his dog do so, what I call bucket people. (yup, we collected 5 buckets that month) Problem is often the use of common average where it is not applicable. (worst is moving average, worst possible filter)

Splines are cosmetic. Smoothing is not a term from signal processing.

The Briggs point about not using smoothed data (totally agree) is unfortunately somewhat pointless: go on then, when is the last time you were given raw instrument readings? I wish…

You can however legally decimate and then use the new dataset, provided nyquist and shannon are honoured. Both points are often violated.

The Shannon issue is rarely recognised. One part of it is there is a trade between time and resolution, ie. reduce the sample rate and the number of digits necessary rises. The ultimate example being the 1 bit data converter: starts very fast at one bit and ends at 20 or more bits much slower, perhaps counter intuitive. The data represents exactly the same data shape *within* nyquist and shannon limits.

When a one sample a year climatic data set is given, truncating to few digits is bad. Common practice.

Figure 2 in the article is very similar to the sinc() function, perhaps it is, which is the fourier transform of a step, the brick wall low pass filter. This is usually windowed, hence the impulse response is curtailed to finite. sinc has interesting properties, including to do with interpolation.

http://www.dsprelated.com/dspbooks/mdft/Sinc_Function.html

What happens if you convolve windowed sinc with a time series? An abrupt low pass filter.

Seems to me spline is a joke version of proper low pass filtering. Why not do that instead?

This is filtering. (spot the mistakes… stated periods are inverted, noise breaking through filter floor, whilst I was developing the filter routines)

Note too that is end corrected, obviously very wrong… who said it cannot be done? Just recognise the limitations and don’t push too hard, is conditional. (actually related to the filter impulse response, not filter length)

Is this useful or misleading? That is a call everyone has to make, there is no right nor wrong.

“Quote; “Rules are for the guidance of wise men and the obedience of fools.”

http://en.wikipedia.org/wiki/Douglas_Bader

A fun one… you have few data points yet want to produce a plot. You could oversample for display. This adds no actual data but does produce many points for plotting. A twist here folks: if the data is valid PCM data it must be put through a reconstruction filter before usage. This is what ought to be done with data rather than allowing graphing software to jaggy line. Few data though are valid pcm.

Note that this mentions 2D as well, images.

http://en.wikipedia.org/wiki/Reconstruction_filter

For anyone who’s curious, the Cook and Peters paper is here.

RE Nick Stokes #16,

The correction for autocorrelation in the Macias Fauria paper is discussed a further length in Comment 76 of the Svalbard thread.

Excellent point. I think the reason that fossil fuel depletion models, such as peak oil, work so well is that they are based on well-founded physical principles. Thus, all the curve fits that come out of the models lead to extrapolations that work well as projections. Unfortunately, climate change is not as simple as the bean counting that goes into oil depletion calculations.

Thanks, Hu M, for a more detailed discussion of the some of the potential problems of the paper in question.

Re: Nick Stokes (#20),

Nick, you seem quite sure of your diagnosis here – and without the inputted data. Are you a statistician – and a mind-reader?

Regardless, this is all good stuff from a learning standpoint.

Re: Kenneth Fritsch (#22), Kenneth, I’m a mathematician who has done a lot of statistics, and I read text. They have described their method in detail, following the quote I gave. It is a conventional identification and correction of autocorrelation, and makes no distinction between autocorrelation originally present and that introduced by smoothing.

Re: Nick Stokes (#23),

Hi Nick, have you given peters and cook a read. I’m a bit dubious of a method developed for tree rings being applied to this problem. The referenced paper has some interesting notes about end point problems and proceedures. Over my head, but it might be worth a read since they rely on the method

Re: steven mosher (#24), Yes, I did read it. It’s written in a tree-ring context, but it’s a straight presentation of regular spline mathematics. The issues in time series smoothing are much the same for any data. The program was developed for tree rings, but the method is general.

The endpoint issues are similar to those that Hu is noting (in more detail). A spline smoothed point depends on data at considerable distances. So extending the range has effects beyond the extended region, as their Fig 4 indicates. They have an impulse response in Fig 2, corresponding to Hu’s Fig 2, although they have an extra parameter p which determines how “stiff” the spline is.

Re: Nick Stokes (#25),

I was wondering if the conditions presented by tree rings were met in the new application. I recall that discussion happening around page 50 or so. It might be good to present what those conditions were.. sorry off to bed.. no time to be clearer

Re: steven mosher (#27), The only issue I could see there concerned the number of data points, which is not special to tree rings. The lengths of the series are roughly comparable. Maybe more tomorrow … 🙂

Something incomplete in the discussion so far. If the process (see ice extent, tree ring wdith, etc.) is autocorrelated (and stationary) prior to smoothing, then that is a feature that can be used to make forecasts of future states. Autocorrelation is not some hideous property that needs to be eliminated before any conclusions can be made. One needs to distinguish between first order autocorrelations arising from nonstationary trend vs. first order (and higher) autocorrelations arising from the process itself. Detrend the series. Then the first type of autocorrelation is a non-issue. The question here is whether recent low values of sea ice extent in 2007 and 2008 tell us anything about what we can expect in the near future (2009, 2010, …). If the process is naturally cyclic (higher order negative autocorrelations) you could expect some short-term rebounding before the declining trend resumes. If not, then there should be no such rebounding; the series should bounce randomly around a declining trend.

Smoothing splines in this case are unhelpfully amibiguous in their treatment of trend vs. cyclicity vs. noise.

Just for the record, here’s the MATLAB that generated the above graphs, plus a couple more for t = 45 and t = 47.

% SplineResponseFn

% For cubic spline with knots every 5 periods.

t = (0:100)’;

n = 101;

X(:,1) = ones(n,1);

X(:,2) = t;

X(:,3) = t.^2;

X(:,4) = t.^3;

j = 5;

for k = 5:5:95;

tk = (t-k).*(t>k);

X(:,j) = tk.^3;

j = j+1;

end

% This spline basis is ill-conditioned in single precision (real*4),

% but is usually OK in now-standard double precision (real*8).

% The “B-spline” basis is better conditioned, but is mathematically

% equivalent, and unnecessarily complicated in most cases.

W = X*inv(X’*X)*X’;

% If y is 101×1 vector of observations, spline model is

% y = X*beta + e, and vector z of smoothed values is

% z = X*inv(X’*X)*X’*y = W*y

% Plot selected weights:

figure

plot(t,W);

title (‘5-period Spline weights for all t , sample = 0:100′)

ylim([-.2 1])

grid on

figure

w50 = W(51,:)’;

plot(t,w50)

title (‘5-period Spline weights for t = 50, sample = 0:100′)

grid on

figure

w47 = W(48,:)’;

plot(t,w47)

title (‘5-period Spline weights for t = 47, sample = 0:100′)

grid on

figure

w45 = W(46,:)’;

plot(t,w45);

title (‘5-period Spline weights for t = 45, sample = 0:100′)

grid on

figure

w100 = W(101,:)’;

plot(t,w100)

title (‘5-period Spline weights for t = 100, sample = 0:100′)

ylim([-.2 1])

grid on

% Compute Response functions:

p = 2:.1:30;

p = p’;

np = length(p);

response = zeros(np,1);

r47 = zeros(np,1);

r100 = zeros(np,1);

for i = 1:np

c = cos(2*pi*(t-50)/p(i));

response(i) = w50’*c;

e47 = exp(1i*2*pi*(t-47)/p(i)); % 1i generates sqrt(-1)

r47(i) = w47’*e47;

e100 = exp(1i*2*pi*(t-100)/p(i));

r100(i) = w100’*e100;

end

% Plot Response functions:

figure

plot(p,response);

title (‘Amplitude response for 5-period spline. Central value, sample = 0:100’)

xlabel (‘Cyclic Period’)

ylabel(‘Amplitude’)

grid on

figure

plot(p,[real(r47) imag(r47) abs(r47)]);

title (‘Amplitude response for 5-period spline. Value for t = 47, sample 0:100’)

xlabel (‘Cyclic Period’)

ylabel(‘Amplitude’)

legend(‘real’, ‘imaginary’, ‘magnitude’)

grid on

figure

plot(p,[real(r100) imag(r100) abs(r100)]);

title (‘Amplitude response for 5-period spline. Value for t = 100, sample 0:100’)

xlabel (‘Cyclic Period’)

ylabel(‘Amplitude’)

legend(‘real’, ‘imaginary’, ‘magnitude’)

grid on

Re: Hu McCulloch (#31),

just for fun, I transliterated part of the matlab for the R-users among us. The function hu.splwt calculates the weight matrix when the predictor sequence and the knot locations are input:

Hu’s first two graphs are drawn and the smoothed sequence can be calculated.

R has several libraries for dealing with splines and spline smoothing. One of them is the package splines. A function specifically for spline regression smoothing is sreg in the package fields.

I had two essential points and questions about the paper in question. The first has been talked about here, but I think I need the answers to be a more detailed.

The first point was about how to correct for smoothing routines those statistical processes that use degrees of freedom in the calculations. As a layperson who has played around with smoothing, I know those processes can increase the auto correlations found in a time series. My question at this point is whether a simple adjustment for auto correlation to the degrees of freedom is sufficient or is a further adjustment required for, say in the case of a moving average, using the average of n data points.

The second was asked and replied to by Hu M at the other thread here on the paper in question. The authors use a lag 1 and lag 2 for each of the explanatory variables for sea ice extent in their model would seem to be susceptible to both over fitting their model and data snooping as the values of the variables and the number of them would appear to me not to be made apparent by the authors a prior reasoning. As Hu M notes after running the data through a 5 year cubic spline the gain sought by the authors is not so apparent – but then I would conjecture: why do it?

I could see cases where a study such as the one under discussion would not have combined the data for the two explanatory variables but rather have used a comparison of both to confirm one against the other. Again, as layperson with regards to statistics and the science involved in relating SST to ice extent and tree rings and O18 to SST and ice extent, I have to look for explanations within the paper that demonstrate that the authors understood a prior those relationships. I was only able to find generalities that well could have been made after the fact, and not any detailed information that would imply a prior knowledge of the details of their finished model.

Finally, my meanderings here have led me to an OT question about a model selection process such as AIC, that the authors of this paper used, I believe, for modeling the auto correlation but not the final model selection, and how this evaluation would affect the degrees of freedom. A step wise selection process using a statistical null hypothesis test would, in my mind, require an adjustment such as a Bonferroni correction, but from what I have read an AIC evaluation/rating of models that compares all the model alternatives simultaneously would not require the same amount of correction. Of course, the AIC selection process cannot be used in hypothesis testing and thus is not directly related. I can also see that providing a number of alternative choices for the model in one step is different than doing a step wise test, but is not the AIC process almost as prone to data snooping as a step wise process given that a prior reasoning is missing or poorly understood?

Finally, Bender, I think anyone who recognizes the auto correlations of the stock market, unemployment rates and inflation rates and the problems that that relationship avoids (most of the time) on planning on a day to day and month to month basis does not find auto correlation to be a bogey man. It is simply a fact of life that needs to be considered in our decisions and certainly compensated for when determining the significance with hypothesis testing.

I’m curious. What is the mean value for each of the spline weights. This would give some kind of estimate of the average first second and third derivatives. Looking at your figures, the spline seems to be a good filter for the intermediate points but not such a good filter for the end points. Of course, you could always translate the piecewise sections if you truely wanted to use it as a filter.

Is not it true that, while data manipulation using cubic and bicubic splines have logical and straight forward practical utilizations in algorithms for such processes as photo interpolation and lens making (as I recall for a bicubic spline), when these tools are borrowed for other fields their applications can be abused? The arguments for me are not whether these splines are useful, but where they are being applied and how.

RE Nick Stokes, #26,

I’m not familiar with the Peters and Cook article Steve Mosher and Nick are discussing, but they are probably using “Smoothing Splines” rather than the fixed knot splines that I assume Macias Fauria et al are using for “spline smoothing”.

“Smoothing Splines” were developed by Reinsch, “Smoothing Spline Functions,” Numerische Mathematik, 1967, 117-83. These are spline functions that place a potential knot point at every data point. By itself, this leaves the function underdetermined since it has two more parameters than observations. It also would ordinarily be exceptionally erratic between observations. But in order to tame it down and make it determinate, a penalty consisting of a “stiffness parameter” times the integrated squared second derivative is added to the sum of squared residuals. As I recall, this loss function can then be minimized using quadratic programming. The third derivative of the resulting function has discontinuities at only a few of the knot points, and which ones these are depends on the data. Cross validation is a popular way to select the stiffness parameter.

These “Smoothing Splines” happen to be popular for dendrocalibration of 14C dating (not to be confused with dendroclimatology). The Stuiver and Kra Calibration Issue of Radiocarbon, for example, has several charts of treering age versus conventional radiocarbon age, along with smoothing spline approximations. The smoothed curves are then used to adjust conventional 14C dates for natural variations in the 14C content of the atmosphere. This seems like a reasonable application of them.

BTW, the variations in 14C measured by the dendrocalibration people (as well as a Be isotope that occurs in ice cores) are thought to be an indicator of solar activity, and therefore, indirectly, a potential proxy for temperature, to the extent this is induced by solar activity. A few people have done this, but it deserves to be further investigated.

Since Macias Fauria et al are using “5-year splines” for smoothing rather than “smoothing splines”, I assume they are just placing a knot every 5 years and estimating by least squares, rather than using the more involved “smoothing spline” procedure.

Hu McCulloch, thanks for the response. That was interesting 🙂

I think that smoothing is bad but averaging/accumulating can be good.

Let me explain. Smoothing is bound to introduce autocorrelation and make correction for this more fraught. And a linear regression routine doesn’t want to see smooth data, it wants to see Gaussian data with non-smooth errors (because they are supposed to be uncorrelated for the simple analysis which most mathematicians can understand and believe in).

However, if autocorrelation over short periods is suspected, and if the resolution of the data is doubtful, then simply making the bucket bigger, i.e. (non-moving) averaging over a number of time periods, can be helpful. The averaged data should have lower autocorrelation, and one deliberately avoids trying to resolve the time domain to unrealistic accuracy.

Keep It Simple Stupid!

Rich.

Re: See – owe to Rich (#39),

Well, for regression you can always whiten the data but perhaps that defeats the purpose of smoothing. I think maximum likely hood is preferable to regression anyway and we can include the noise correlation introduced by smoothing in a likelihood function. I’m not sure the effects of smoothing if a maximum likely hood approach is used but perhaps in some cases it could reduce numeric error.

It turns out that the covariance matrix of the fitted spline values has exactly the same formula as the weight matrix when the errors have unit variance. The square root of the main diagonal of this matrix gives the standard errors, plotted below:

Because the beginning and ending values are not tied down on both sides, they have much higher standard errors than the interior values. The confidence intervals of the fitted values therefore naturally “trumpet” out at the ends.

A regression run with a spline-smoothed dependent variable must therefore use Weighted Least Squares to take into account the greater variance at the ends, in addition to adjusting for the serial correlation generated by the smoothing.

The greater noise at the ends also means that a spline smoother has a good chance of producing a Hockey Stick purely by chance.

Re: Hu McCulloch (#41), Thank you for another good post. Very interesting.

On another note, I’ve been thinking a bit about maximimum likelyhood. I presume your error at the end points makes the white noise assumption. I’m thinking about what might be a good way to express the variance in a likelyhood function for a smoothed domain.

In this post:

http://www.climateaudit.org/phpBB3/viewtopic.php?f=5&t=763&p=14937#p14937

I discuss the measurement covariance as a result of model error. I’m thinking that the covariance in the smoothed domain should have three parts, the covariance, introduced by transforming white noise in your raw data domain, into the transformed (A.K.A smoothed) domain. The variance introduced by model uncertainty, and the variance caused by white noise in the transformed domain.

Therefore I think that the variance in the likelihood function should have two parameters for white noise, and the rest of the parameters should be based upon the statistics of the model parameters.

Re: Hu McCulloch (#41),

For the information of the other readers, the covariance matrix of the fitted values is the weight matrix, W, multiplied by the transpose of W. You were certainly aware of this because the square root of the diagonal of this product is in fact what you have graphed in the comment.

What I find strange is the saw tooth shape of the curve with the highest local uncertainties at the knot points,except for 5 and 95 where the maximum is at 6 and 94.

All of this agonizing over smoothing misses a critical point. This is that we can determine experimentally the exact amount of end errors in any given dataset using any given smoothing. I wrote a paper to GRL pointing this out in re: Mann’s bogus end pinning strategy … they said I was too mean to poor Mr. Mann. I probably was, pisses me off when someone acts like a statistician and they don’t have a clue. But I digress.

In any case, what one has to do is to create a host of shorter datasets. These are made by shortening the dataset to each possible length greater that the width of the smoothing. Then apply the smooth to the shortened dataset, and get the smoothed value for the end point. Finally, compare the end point value to the value you get for that point with the complete dataset. This gives the error for that end point.

This lets us measure the average end point error of any given smoothing, and allows us to pick the smoothing that results in the least error. It also lets us put accurate error bands on the end point of the dataset.

Not sure if I’ve explained this clearly, but I assure you that you can compare any number of smoothings for any given dataset and pick the best one.

My best to all,

w.

Re: Willis Eschenbach (#43),

“All of this agonizing over smoothing misses a critical point. This is that we can determine experimentally the exact amount of end errors in any given dataset using any given smoothing.”

We can’t determine the error but we can estimate the error based on the curve we used to fit the data. Of course the parameters of this curve will also have uncertainty. So really it comes down to what is the objective function we are trying to minimize in the estimation problem. Is it weighted mean squared error, maximum likelihood or something else.

The rest of your post makes since but I’m not sure if it is optimal. However, I think it is a good sanity check to see how well, any smoothing/estimation technique works on subsets of the data. I believe I’ve seen Steve do this a few times.

Re: John Creighton (#44), what we are comparing is the error of the end points compared to the final smooth when we have all available data. This (I believe) can be determined experimentally. This, as you point out, is not the total error, merely the end point error.

hu the paper is linked above. I don’t believe the application to ice core data is as straight forward as nick implies.

Nice article, Dr Hu. Thank you.

This is not important, but I have been trying to think of other fields where the ends of a data stream are of more interest than the body. I guess this follows from mining work, where one is more interested in where the drill has entered, transitted and left the deposit than what it did in getting there and after it passed through. Financial forecasting is of course one as it noted above. The other newness that I am encountering here is the desirability to rid the data stream of anomalous values. In mining & exploration, it is the anomalous point values that can be most attractive against a monotonous background, so I’ve had to adjust thinking.

The main way this seems to express itself is that effects that are supposed to be global often have regional texture. Our BOM today announced we might have the warmest winter on record in Australia, to which the Kiwis replied that they are having one of their coldest.

So I’m more comfortable staying away from end points, though wiser for your explanation of this variation.

A smoothed sea of numbers never made a skilled mariner.

– Spliny the Elder

Wikipedia on smoothing time series.

http://en.wikipedia.org/wiki/Time_series

Re: bugs (#49),

May I suggest that you first read what is on a web page before you link to it. The Wiki article gives NO information on smoothing whatsoever on that page. The only use of the word “smooth” on that page is in the descriptive text on a small graph used as a pictorial illustration: “Time series: random data plus trend, with best-fit line and different smoothings”.

RE Steve Mosher #45, the Cook and Peters 1981 paper linked by MJW at #19 and discussed above by Steve and Nick applies the 1967 Reinsch smoothing splines to treering width data. As noted in #37 above, this is a somewhat different approach to splines than the fixed knot (every 5 years) approach used by Macias Fauria et al on the Svalbard data.

As C&P note, the advantage of either type of spline over a polynomial is that whereas the shape of a polynomial over one small interval determines its shape everywhere and vice-versa, the shape of a spline in one inter-knot interval is pretty much independent of its shape a few intervals away. This allows you to fit a locally smooth curve without imposing a global shape.

In either case, you have to impose some smoothness by deciding on either the number of fixed knots to use, or the value of the “stiffness parameter”. C&P provide the general guideline,

I’ve been fitting splines to bond yield curve data and thinking about this problem for a long time (see my 1971 J. Business and 1975 J. Finance papers and US Real Term Structure webpage). It seems to me now, in the spirit of C&P’s remark, that the universal objective is to find a smooth function, subject to appropriate end conditions, such that the errors are serially uncorrelated “white noise.” Too little flexibility causes positive first order (and higher) serial correlation, while too much flexibility can actually cause negative first order serial correlation.

The obvious solution, then, is simply to increase the flexibility by just enough to eliminate evidence of first order serial correlation in the residuals, as evidenced, eg, by the Durbin-Watson statistic. No one has ever tried this, to the best of my knowledge, but it would be straightforward to apply. The only issue I can see is whether to stop when the serial correlation is no longer significantly different from 0 by the DW stat, or when the Moment-Ratio estimate of the first order serial correlation is actually zero.

As for the end conditions, an unrestricted spline often goes berserk at the ends, which is why, in the case of yield curve fitting, I now impose a “natural” (0 second derivative) restriction at the long end of the log discount function, which permits the discount function itself to be extrapolated to infinity as a simple exponential decay. I don’t know what would be appropriate for treering or ice core data, but since the Reinsch/C&P smoothing spline penalizes the integrated squared second derivative somewhat, it will gravitate toward a reduced (if not 0) second derivative at the ends. The first derivative could be doing anything, however, which may or may not be appropriate.

I would like to see some critical discussion of the limitations of Briggs’ criticism of “smoothing.” No doubt the criticism touches on a propensity to misuse, or even abuse, various smoothing methods, especially as devices for inferring future rates of change in time series. This owes, not only to the end point issues we often see, as are being discussed here, but also to the discarding of data relevant to any distribution of errors in inferring future rates of change. Simply put, the smoothing of data makes it look like there is a lot less variability in the series, thus overstating confidence intervals, correlation coefficients, and so forth. Having recognized all that, are there any circumstances where smoothing yields valuable insight or information into the properties of time series? I’d appreciate it if the critics of smoothing would address this question.

I ask this because I have been exploring the use of Hodrick-Prescott filtering for the investigation of natural climate variability, and believe that it has some interesting usefulness in that role. I say “filtering” rather than “smoothing,” because I think it is the more appropriate term, but the end result is often indistinguishable, and as soon as some see the “smooth” result we get with this technique they dredge up Briggs’ criticism. But is it applicable? I will proffer a simple example, if Hu, or anyone else, is willing to engage me in further discussion. But first, some definitions.

Arguably, a time series can be decomposed into various components. Here let us say those are T, for a long term linear time trend, C, for a cyclical pattern in which the trend speeds up and slows down over shorter periods of time, and n for an irregular pattern we treat as “noise.” While the Hodrick-Prescott filter was not originally intended for this purpose, it easily lends itself to decomposing time series this way. Here is an example:

Now what we are looking at is the data is outputted with the stat program I used (gretl), so it is depicted in the way economists typically use the Hodrick-Prescott filter. In particular, their focus is on the “Cyclical component” in the bottom panel. But as I’m using it here as a method of decomposing a time series into T, C, and n, the bottom pane is n, the “noise” around the C depicted by the red Hodrick-Prescott “smooth” in the upper panel. I could show that the cycles we see in the upper panel are the same as what are revealed by traditional methods of frequency-domain and wavelet analysis.

Okay, so what? How is this on topic? Well, suppose we want to use this information to say something about the future, or recent past. It so happens that, more or less by design given the way the Hodrick-Prescott filter works, a linear fit through either the original data, or through the smoothed data, yields the same coefficients. But of course the standard errors of the latter are much reduced, making our measures of statistical significance appear much greater than they really are. That is, we cannot extrapolate from this data and simply ignore the historical volatility present in the data (here represented in the bottom panel). That would be a misuse, or an abuse, of the smoothing process. And that is what I see as one of the great shortcomings of all the various kinds of smoothing we see coming from climate studies these days. They appear to impart a greater degree of certainty to the results than are warranted. They are in fact discarding relevant data, and losing degrees of freedom in the process, and this can never result in greater certainty about what the underlying data truly signifies.

But that is not the same thing as saying that the smoothing has no utility or valid purpose. Here it is shown to reveal cycles that can be corroborated by other means, and is thus useful as a descriptive technique for studying the dimensions of natural climate variability. Yet, because of Briggs’ dicta, many will now refuse to even consider that the technique could have any merit.

Re: Basil (#53),

“But that is not the same thing as saying that the smoothing has no utility or valid purpose. Here it is shown to reveal cycles that can be corroborated by other means, and is thus useful as a descriptive technique for studying the dimensions of natural climate variability. Yet, because of Briggs’ dicta, many will now refuse to even consider that the technique could have any merit.”

I disagree with Briggs. If it is the case that in the original signal the errors are not statistically independent and we have a method to filter out these errors, then I think that a mean squared error fit can be improved by first filtering out these errors. Of course all this really does is whiten the data. So a high pass filter would whiten a signal that has low frequency noise.

I suppose in some cases a low pass filter could also whiten the signal. For instance, perhaps the high frequency noise is very narrow bandwidth, or the noise is such that the power of the noise increases with frequency. If we believe the noise is already white then there relay is not much point in smoothing the signal.

RE Roman #50,

But since W = X*inv(X’*X)*X’, W = W’ and W*W’ = W back again!

For y = X*beta + e, the OLS estimate of beta is betahat = inv(X’*X)*X’*y. The fitted value of z is then z = X*betahat = W*y, where W is as above.

But the OLS covariance of betahat is inv(X’*X) times the variance of the errors, which I am normalizing to unity. The variance of Z = X*betahat is then the “sandwich” matrix X*Cov(betahat)*X’ = X*inv(X’*X)*X’ = W again! So the variances are just the peaks of the individual curves in my Figure 1 above, and the se’s in #41 are just the square roots of these.

Since the weights get increasingly skewed as the end points are approached (compare Figures 2 and 4 in post), the peaks are not necessarily the midpoints, as you note for t = 5 and 95. The “sawteeth” in #41 are more than I would have expected, but I think they are correct.

Re: Hu McCulloch (#54),

I figured this out while driving to the golf course after posting my comment this morning. Without going through the details of the math as you did, from the symmetry of W, I realized that W*W’ = W2. The latter was just a second application of the regression to the predicted values of the first regression which which would give exactly the same result for the new predicted values (Imagine fitting a straight line to the predicted values of a regression – you get the same straight line which they are already in). Hence W*W = W.

I also played on R with the what happens to the standard errors when the smoothing is applied to an AR(1) sequence. For lag1 correlation .5 or larger, the standard errors of the endpoints at 0 and 100 exceed 1 so that the smoothed values become noisier than the actual end values.

Nick and others.

Here we see the purpose of the smooth or spline. To minimize small dating errors. I’ll make a couple points.

The splining that Peters and Cook discuss is designed to remove NON climatic signals in tree ring width. So,

my first question would be: can we determine that the dating errors result from non climatic signals, or are

the dating errors correlated with changes in climatic signals. Further, can we determine that smoothing, in fact,

minimizes the effects of dating errors? Since the ice record contains some annually determined points (volcanoic activity) shouldnt the splining process be constrained by this? What happens to the analysis if you allow for dating error and report that? If we have two or more annually resolved reference points can we characterize the dating errors?

Ok – I’m baffled here.

I admit I’m rusty on maths so I could be missing a lot of the arguments – apologies if this is the case – but isn’t Brigg’s point still valid? “The data is the data”? If I’ve understood correctly he is saying this specifically with reference to time series data (including proxy data) at identified discrete measurement points.

In the case of temperature records that could include a hot day in history during a cold spell. A day when a hot temperature was recorded is a valid manifestation of a climatic event. Why throw this information away by smoothing? Inherent in the discussion so far is the view that these “odd” events are in some way “errors” or “noise” corrupting a signal. IMO they are all information (“signal”) not noise. If later investigation of specific measurements shows that (say) instrument malfunction was responsible then perhaps an informed decision can be taken that certain measurements are noise, but to just put a spline through points seems totally arbitrary.

My recollection of splines is as described in Hu’s head post: a mathematical representation of a least energy curve with a shape dependent on stiffness. In the case of end points stiffness is absent and hence external constraints need to be applied to determine end conditions in addition to location. If we assume for a certain sampling rate no conditions other than location (“value”) are imposed, the spline’s shape will be determined by its stiffness. (I’ll set aside considerations of spline stiffness but for data modelling I’d be interested to know how this stiffness choice could/should be made – I didn’t see reference to this in the headline paper but may have missed it). Now lets say the sampling rate is halved. In the example laid out by Hu IMO this would be equivalent to choosing data points at interval k=10. Now the same stiffness spline with the same dataset could take up a completely different shape and, as a result, I fail to see how it can be considered representative of the information in the data.

Apologies if I’m missing the maths that shows how this is not a problem. In the case of dendro or ice core data where there is some natural smoothing (smearing?) of the data from uncertainty of the time value of the measurements perhaps this goes some way to justify a mathematical smoothing? I looked at the papers referenced up thread but Wahba seems to be based on the stated assumption that the spline is being used to approximate a “smooth” (single variate?) function g(.) and Cook and Peters is dendro specific. I do recognise the headline paper is discussing ice extent but IMO this is not defined by a single variable.

Coming on to Basil’s point which seems to relate more to Hodrick-Prestcott filtering, again I’m baffled. What new information has been revealed in the figures presented? Is the red C line offering any useful data? How is the difference between this and the original (reconstructed?) ersst data useful?

Apologies if the above comments make it obvious I have missed the essence of the arguments or repeat points already well understood. My rusty knowledge of splines is from a drafting point of view and the idea of using a pinboard and wire to model, and interrogate, recorded data is (perhaps obviously) beyond me!

Re: curious (#58),

I am wondering about this too. Why so much effort in smoothing out the data? Why not show everything complete with all the anomalies?

Re: David85 (#60),

The smoothing is used to minimize dating errors. If you look at the ice core dating literature ( i just skimmed it)

You will see that there are various methods for dating ice cores. At some depths in some places you get annual resolution. As you go deeper ( ice compresses, flows, etc) then you get less resolution, years smear together.

So, you take a chunk of ice, you crush it, you analyze the trapped gases. What year does it come from?

at some points you know this +-2 years, other points +-10 years, and at some points you might know the year

exactly ( volcano deposits). What I would like to see is a synthetic test that demonstrates that splining is the best method for reducing small dating errors. I see that assumed, but I don’t see any analytic support for that. In the referenced article splining is used to remove non climatic signals in tree ring widths. Not to adjust a dating methodology. The splining is a standardization technique as far as I can tell ( like standardizing for growth patterns that are not related to climate). In any case I don’t much support for using that method on handling the dating errors problem. I’m not saying it’s wrong or there is a better way, just that this is an interesting problem that romanm and Hu and others can probably chew on for a long time. Anyways. neat problem.

Re: steven mosher (#67),

I think it depends. In one since smoothing may reduce this kind of error but in another since it accentuates it. If the problem is that the data is a blended version of the temperature, then you may want a filter that is somewhat inverse to this (In a way this is opposite to smoothing), however, if the time blending is random then then trying to naively find an inverse filter could just increase the noise.

If the blending is uniform (like a linear filter) then the inversion problem is one like. There is the actual temperature plus white noise (White noise A), and this gets smoothed by the ice to produce a smoothed temperature record plus smoothed white noise (Comes from White noise A), plus additional white noise (White Noise B).

Now, we don’t want to find exactly the inverse filter to whiten the data because this will accentuate white noise B even if it does whiten the correlated noise produced by the ice. However, the filter should still be somewhat inverse, but perhaps contain a smoothing term for real high frequency noise.

Therefor if we want to recover the temperature recorded I contended that in some circumstances we may want to do the opposite of smoothing (depends how you define smoothing) and depending on how the proxy sample/s is related to the actual temperature data.

Re: John Creighton (#68),

Sorry to be a word Nazi, but you’re using “since” when you should be using “sense”…

Example: “How long has it been since your last AGW hysteria?”

Example: “I sense that you’re becoming more hysterical.”

Re Basil, #53,

Cogley and Nason (“Effects of the Hodrick-Prescott Filter…” J Economic Dynamics and Control) debunked the Hodrick-Prescott Filter back in 1993, showing that it can extract meaningless “business cycles” from a random walk. The average duration of the bogus cycles is determined by the “tuning parameter” that the user selects. Unfortunately, the HP filter is almost universally used in economic studies.

Actually, Briggs did start off with the qualification, “Unless the data is measured with error…” If the explanatory variable(s) in a regression contain measurement error, OLS will give biased results. Total Least Square will correct for this, but it is cumbersome and requires knowing the variance of the measurement errors (which cannot be inferred from the regression alone.) In such a situation, there might be a case for reducing the error in the explanatory variables by first smoothing them.

But the dependent variable is entirely different — its measurement error just adds into the regression error, and OLS does its thing with it. Smoothing the dependent variable will generate serial correlation in the errors where none was present before, while at worst smoothing the independent variables will just erase their signal.

I’ll be away for a few days and unable to answer further questions until Saturday.

Re: Hu McCulloch (#59), Hu,

Thank you for replying. I’m disappointed, though, that you fell back on a criticism that doesn’t apply here. Even though you may not be around to respond for a few days, I’ll go ahead and post my reaction to your reply now, while it is fresh on my mind, and perhaps we can pick it up again later.

First of all, showing that HP can extract “cycles” from a random walk no more “debunks” it than showing that you can fit a linear regression through a random walk debunks linear regression. All tools have appropriate uses, and limitations on appropriate use. Just because HP can be used inappropriately doesn’t mean that the tool is per se useless. Having spent a considerable amount of time recently studying its usefulness, I think you are probably right to deplore the way it is so widely used by economists (of which I am one, so I can say this, along with Hu, with perhaps more authority than one could who is not an economist). But I explicitly stated in my comment that I was not using the HP filter the way economists have traditionally used it. So until you deal with HP on the terms I’ve presented, rather than on the terms with which you traditionally associate it, I do not think you’ve fairly responded to my comment.

So, for the sake of further clarification, especially for anyone monitoring this exchange, let me repeat myself. In the image I posted in #53, when an economist uses HP filtering, they focus on the “cycles” they think they see in the bottom pane of the diagram. That is why, in the bottom pane, the gretl software which generated this image says “Cylical component of …” But I’m saying “No, let’s look at this differently.” I’m saying “Let’s look at the bottom pane for what it really is, which is simply a plot of residuals about the resulting smooth in the upper pane.

So if we look at it this way, what are we looking at? One way of describing what we are looking at is that the resulting smooth, in the upper pane, is a kind of local regression, with the degree of locality controlled by the value of lambda. As we increase lambda, we lose the local focus, and in the limit we simply end up with a linear regression. But I would also note that no matter what value of lambda is chosen, it doesn’t alter the “long term trend” in the data. That is, if you fit a linear regression through the smooth, it has the same slope and constant as a linear regression through the unfiltered data. So the smooth is just representing oscillations around the long term linear trend.

My contention is that the oscillations observed this way — again, the oscillations in the upper pane, not the so-called cycles in the lower pane — are, when applied to time series climate data, real representations or depictions of “natural climate variability.” In my original comment I wrote:

So rather than being any kind of spurious result, HP smoothing here is just another way of depicting natural climate variation in time series climate data. I suppose someone might wonder, then, why bother? If it is just another way of looking at what we can already see using spectral analysis, or wavelet transforms, what more does it add to our understanding of the underlying data? Well, unlike spectral analysis, it shows the oscillations (low frequency changes in rate of change) in the time domain exactly as they occurred. In this, it overlaps wavelet analysis. But it also presents the amplitude of the natural climate variability in a more natural, intuitive way, IMO.

In any case, whatever the merits of the approach, as opposed to traditional spectral analysis or wavelet transforms, my point here is that this is a case where smoothing may have its uses. That said, I would certainly cede your points, or Briggs, about being cautious how the smoothed data is used in any further regression analysis. For now, I’m simply contending that this makes use of the Hodrick-Prescott filter in a particularly useful way as a descriptive statistic or technique.

Actually, I might want to go a little further here, at least for purposes of discussion. All of the hoopla over smoothing comes about because of someone wanting to say that their smoothing shows that recent trends (in climate data) have significantly increased, thus constituting some kind of proof of AGW. The image I posted in my original comment shows how “heroic” such claims must be. This use of smoothing discards data that should not be discarded. We should have a “rule” that says that any smoothing technique should be depicted along the lines of the image I posted, where in the bottom pane we have the data that has been “discarded.” I.e., what are the residuals about the smooth? In any inferential or forecasting use of the data, those “residuals” are relevant and cannot be ignored. Looking at the data in the particular case I chose to represent in the image I posted, the data in the bottom pane is implicitly evidence of the standard error of the range of natural climate variability. It is huge. Any claim to have found a recent “statistically significant” rise in temperatures (or whatever other metric of climate change we’re considering) which has discarded, or ignored, this evidence of volatility in the range of natural climate variation should, indeed, be immediately suspect.

David85 – I don’t know but I think Hu’s papers referenced in comment 52 will be worth a look (I only saw them after posting). As far as the headline paper goes table 1 shows the improvement in r and r^2 provided by using the 5 year smoothed data. Unfortunately my maths is too patchy to know if this has been addressed in the discussions above. FWIW to my mind a spline is a useful presentation tool and I don’t see how it provides new insights to time series data. Happy to be enlightened though!

Please excuse that I’m not up to date with the right tech words. It’s about the carrying forward of errors, including near end points. My personal origins are in geostatistics where the semivariogram attempts to discern the separation of points that cease to have predictive value on each other. Let’s work in time domain, using temperature.

We take a temperature at noon today. One minute later it is likely to be much the same. Predictive power is good. If we use the same value, the bias is likely to be small.

What will be the temperature at midnight? No sun at most places outside one Pole, so likely to be cooler. Hard to predict the midnight temperature from the noon temperature. Bias likely to be large.

Proxy, we estimate the midnight temperature from midnight the night before. There will be some bias error. We can try to improve this by assuming stationarity and saying “let’s average the midnight temp on this date for the last 20 years and use that”. A different mixture of bias and imprecision will arise, from some different mechanisms.

We can also construct a model curve that might be like a sine curve, for each day of the year, from a long term data set. This gives a different set of bias and precision in the estimate of midnight temp. There are many days when a sine curve is a really poor shape to use.

As we increase the time elapsed between an actual reading and a forecast reading, both bias and precision worsen. Introduce autocorrelation corrections and concepts and it worsens again. Our predictive confidence can be poor at random time separations, but can improve at certain times, like 24 hours later, 365 days later, then into Milankovitch cycles etc. So there is really no single bias estimator that covers all bases.

At some time interval, or by using some statistical method, we can estimate a duration beyond which a measured temp becomes unreliable in the estimation of missing temps or the forecasting of future temps.

I am having problems with the way that authors are confusing bias with precision; with the coupling of temperatures that have no forecasting ability on each other; and this in steady periods, with a far bigger problem at the ends of smoothed data sets.

Would it be out of court to suggest that in reality, the actual “global” temperature has not changed within properly constructed error bounds within the last century? I keep encountering incomplete estimates of errors, even when dealing with past “settled” data.

So much has been written on CA, but I cannot recall an account of the proper accumulation and carrying forward of errors through known mechanisms and their comparison with measurement where possible. So often I see error bounds used as if they were bands of relatively fixed width that flop around, almost symmetrically, like limpet fish on a shark, about whichever new mean value has resulted from an adjustment to method.

In essence, there has been much discussion on statistical error estimation, especially when different stats approaches are used; but seemingly less discussion on real life error estimation, with the hope that large numbers of observations will cure bias. They won’t.

Steve,

Your copy of the graphic is truncated because the original is actually TWO images side by side:

and

I suspect because the second one (the animation with blinking “we are here” dot) is updated regularly and the first one is (should be) unchanging.

RomanM: Three things wrong with this comment:

1. It’s my graphic not Steve’s.

2. This is posted in the wrong thread. It belongs in the NASA: Sea Level Update thread.

3. This was known and a good way to copy the comment was addressed by Anthony Watts , in comment 31 of the Sea Level thread 5 days ago.

The curve you’re fitting to is your physical model. If it’s just one that seems to fit, you’ve created a physical model based on no understanding at all.

Of course there’s a real difference between curve-fitting and model-based estimators. A model-based estimator is going to come up with a current state estimate (and hence predictive capability) based on a physical model based on your best understanding of the physics.

I’m wondering if the smoothing functions being used currently represent current best understanding of the physics?

There’s always the possibility that there are multiple, important observables that aren’t being captured by “global mean temperature”, or whatever the input metric is called these days. That would be a problem, of course.

Statistical fiddling is a convenient smokescreen obscuring the fact that current understanding of the earth’s climate is too primitive to support the assertions of the alarmists. Arguments about the appropriateness of Kopfschmerz’s algorithm vs. Katzenjammer’s lead us to forget that 3-month forecasts are useless for planning a picnic; therefore 3-decade forecasts ought to be suspect in driving laws that plan the world’s energy economy.

“Mathematics may be compared to a mill of exquisite workmanship, which grinds you stuff of any degree of fineness; but, nevertheless, what you get out depends on what you put in; and as the grandest mill in the world will not extract wheat flour from peascods, so pages of formulæ will not get a definite result out of loose data.”

~Thomas Henry Huxley, 1825-1895, Quarterly Journal of the Geological Society of London 25: 38, 1869.

Which is saying “garbage in, garbage out” in elegant 19th century English.

I didn’t have much to add. It’s an interesting post Hu. I’ve read it several times now, and found the comments as interesting as the post. I knew the frequency response would be weird but not that weird. It makes sense though.

Completely off topic. I’ve done a post on CPS distortions based on various signal/noise ratios if people are interested in seeing more Mann08.

This concept of adjusting for dating errors by splining bothers me. It seems to rest on the assumption that the datum itself is perfectly accurate (in the y-coordinate), the only uncertainty is the time. Do we have a good reason to assume that? And I’m not questioning the precision of the isotope analysis, but aren’t we also building a half dozen other assumptions into the proxy relationships?

There have been a number of comments about never smoothing data made on this thread and I think that some context and clarification might be useful.

Statistical procedures can be viewed as coming in two basic flavors: descriptive and inferential. Descriptive statistics deals with the principles of summarizing and presenting information in data. In this context, smoothing can be a useful procedure to present features of the observed data that might be of interest. Trends in particularly noisy data can be emphasized. When used as an exploratory technique, appropriately chosen smoothing techniques can give insight into the behavior of the data. There is room for misuse, particularly, as we have seen in the past, in the behavior of the smoothed curves at the endpoint which are the result of the choices made in generating the result. However, it should always be remembered that in this situation what you are viewing is the data and not necessarily properties of the mechanism that generated it.

The second flavor has to do with making statements about the generating mechanism from which the data came. This includes error bounds, confidence intervals and tests of hypotheses. In this context, many problems can arise if smoothing becomes a part of the procedures which are normally used. The assumptions under which these procedures have been derived are invariably violated by the smoothing process and the stated results are invalidated. It is possible to correct these results for some but not all types of smoothing. As in Briggs’ admonition, it is extremely easy to be misled ( and to mislead others). This is also the reason that I cringe anytime I read “First, we smoothed the data and then we …”. Unfortunately, it is usually also an indicator of a high level of statistical naivety of the authors.

My advice to a researcher would be that smoothing can be used for the descriptive aspect of a study. Pick a smoothing method which has known positive properties for the type of data on which it will be used. Understand the parameters of the procedure and what effect they have on the process itself. Do not over-interpret by inferring that what you see can reliably be ascribed to the mechanism that generated the data – you can’t form and properly test hypotheses from the same data set. If you really want to smooth AND do inference, get professional help from someone who understands what is going on.

Re: romanm (#71),

Or you can read and post here and get some professional advice free of charge – or at least for the price of a tip every now and then. Of course, my view is from some one seeking a general understanding and not one who is on the verge of publishing a paper.

Re: romanm (#71),

Either that or they are signal processing folks that are filtering/smoothing data in search of an a priori quasi-known waveform. 🙂

It is interesting, the parallels between statistical analysis and statistical signal processing. The tools are essentially identical – other than some terminology and mathematical convention differences of course. The assumptions, however, and often conclusions, are not.

Mark

Re: Mark T (#73), Correct. That’s actually something that has happened in the past 30 years; in ’79 statistics and signal processing had almost nothing to do with each other; now, they’re almost the same toolkit. But what you also say is equally true; even though the tools are the same, the objectives and constraints are different.

The dangerous thing about these tools in the hands of the Team is that they treat their data reconstruction problem like signal processing problems, which, as you say, implies some a priori knowledge of the expected actual signal. This can easily lead to a self-fulfilling prophesy if you’re implicitly assuming the outcome in what is supposed to be a scientific investigation.

Re: romanm (#71),

I think you offer a reasoned perspective.

Re: romanm (#71),

The unfortunate issues I see with this very clear and reasoned separation of when to smooth occurs in electronics fairly often. As an exercise I often try to think of the most extreme examples and see if a paradigm still fits. In this case electronics can provide a lot of real world extreme cases that have noise on the signal of a known frequency and behavior (e.g 60hz or other frequencies).

The noise which is fully separable from the often heavily oversampled long term signal. While it’s pleasant and comforting to have such strong statements from Briggs, it’s hard for me to justify not smoothing data prior to processing when the source and behavior of the noise is known and separable from the signal.

In climatology this is often not the case, especially in unverified proxy data. So I wonder if Hu, Briggs or Roman would agree to filtering prior to other statistical processing when the noise is of known characteristics and/or of very different frequencies from the signal? It’s a slippery slope for sure but maybe it’s correct to walk the line.

Re: Jeff Id (#80),

Pulling a signal with known characteristics from noise is what your TV and radio do every day. Digital ATSC tuners for high definition TV are improving by leaps and bounds with each new generation in sensitivity and resistance to things like multipath distortion. A problem with temperature reconstruction from proxies is that the known signal, the instrumental temperature record, is likely nowhere near as precise and unbiased as assumed.

Re: DeWitt Payne (#81),

Ditto to the ditto power.

However, I see no harm in trying to extract a signal and then using that to make predictions. For example, Basil

did some work with the HP filter and temp data and solar/lunar cycles. No harm in that, except to count as science he would have to make a prediction from the signal he “discovered” Apply HP to a random walk and as was shown above you will find cycles. Now turn that into a model to predict things and you’ll fail or have very little power. In electronics

you have the benefit of being able to build a circuit and test it with new live signals. You typically know the characteristics

of the signal ( roughly lets say in some electronic warefare or signal intercept application). In climate science people are running a SETI type operation. They assume there is some life out there and try to rule out random stuff and believe that if they find a signal it must result from some intelligent process.. or something like that.

Re: steven mosher (#86),

Isn’t that a little more like ID? The signal proves that there’s intelligence which proves that there’s a signal?

Re: steven mosher (#86),

Well, we (Anthony and I) did make a prediction. We fit a sinusoidal model to the cycles, and came up with this:

Of course, curve fitting and extrapolation, without an underlying hypothesis, is just numerology, not science. The phase reversals, and long term patterns here, may be evidence in support of Katya Georgieva’s (and her colleagues’) theory of a solar north-south hemispheric asymmetry influencing long term trends in zonal versus meridional terrestrial atmospheric circulation. It will take another two decades to know for sure, but the projection in the above figure implies a moderation of temperature increases, and even net cooling, over the next couple of decades.

One thing I might add to Steven’s comment about needing a “prediction” for something to be “science,” is that the predition, combined with a plausible hypothesis, is sufficient to validate the methodology. The economists among us will be familiar with Friedman’s philosophy of the method of positive economics and its implications for the trade off between models and “reality.” Models all involve some degree of abstraction from what we call “reality.” But if the abstractions yield testable hypotheses, and useful predictions, then “the end justifies the means.” This is why I’ve never understood the effort to distinguish the IPCC GCM based “scenarios” from “predictions.” That is a distinction without a difference. For each set of assumptions used to generate a scenario, the result is a prediction that tests the validity of the assumptions. If GCM’s are not intended to produce testable predictions, they have no place in the endeavor we call science.

In any event, the relevance of the preceding paragraph to the discussion is that if “smoothing” yields a representation of the data that can be plausibly be related to testable (or falsifiable) hypotheses, then that is sufficient to validate the use of smoothing. There is no scientific justification for rejecting smoothing a priori. That does not mean that we should not be cognizant of the “negatives” associated with smoothing, especially in light of the propensity of some to use smoothing as a tool for cloaking confirmation bias. Thus I applaud Hu, Steve, William Briggs, the Jeffs, and others, for exploring the limitations of the various smoothing methods (include principle components here as a smoothing method) that are being used to allege support for various flavors of the theory of AGW. But in a sense, it doesn’t matter. If the proponents of AGW are wrong, and in their smoothing of this and that they are merely fooling themselves, their predictions will fail, and will in time be discarded. In that sense, science has an internal logic of its own that cannot be defeated. But meanwhile, we see the so-called scientific, peer-reviewed, literature filled with works of questionable value. Auditing and critiquing the methodology of these works is part of the scientific endeavor, and if this helps to hasten their demise so that we do not have to wait decades to see the predictions fail, then bring it on.

But let’s stop criticizing smoothing per se.

Re: Basil (#89),

Thanks for posting that Basil. I would say I have to agree with you ( as an instrumentalist) that all that is REQUIRED

is a testable hypothesis. So, for example, if you predict that the temperature will follow such a pattern that is a testable hypothesis. Ideally, of course, one wants to be able to connect the model to other models and put words and “physical” laws on it, but if the model works that’s enough ( I think epicycles worked ). However, you also need to recognize that I may still prefer a GCM to your model. Primarily because a GCM ( however flawed) will also say something about precipitation, and ice, and el nino, and the list goes on. Your model, even if it were superior on predicting temps would not tell me anything about hemispherical patterns or sea level or… you get the idea. So there is something to be said for a model of the climate that works from accepted physical models. WRT the word smithing that the IPCC does with prediction versus scenario. I think it is just prophylactic PR. The scenarios of course lay out a whole raft of assumed profiles, none of which will actually be met.

Re: steven mosher (#106),

The problem is you have no way of knowing if the GCM is saying the RIGHT things about precip, ice, etc. Just because it may get it right sometimes, it could be totally by chance. There’s just no way to know.

Re: Jeff Id (#80),

I think you’ve got it pretty much right. All methodologies are subject to possible misuse. This is why when teaching statistics, it is always important to spell out the assumptions made in deriving a particular procedure. The end user learns not only how to carry out a particular analysis, but also when not to do so (or to properly adapt the method to incorporate the type of data being analyzed).

I concur with your assessment that there can be situations where smoothing can (and should be done). The specific cases I have seen come from the areas of biomedical engineering and kinesiology. In one case, measurements were done on nerve impulses over a period of time under different conditions with the intent of differentiating beteen the conditions. In another, video of ice skaters accelerating from a standing start was digitized to examine the knee flex angles. Both of these cases involved the “heavily oversampled long term signal” you refer to with various types of noise. Smoothing the data before analyzing the signal does not present a particualr problem to me despite Briggs’ slightly arrogant dictum.

it is pretty clear that proxy data does not have the same information properties nor is the sampling as frequent as the examples mentioned above. The choice for the type of smoother is often dictated by “signal” properties (e.g. 40 year smoothing) which may not apply to the time series at hand. Not only does this induce changes in the correlation structure, but it may also induce bias in the values of the “actual” climate parameters being estimated.

Walking the line carefully is important. Stating all of your assumptions and verifying independently the form of the relationship between proxies and the climate “signal” under study would be a good starting point.

(I can’t believe I actaully agreed with everything you said… 😉 )