As a simple exercise, I quickly revisited the ever-changing Hansen adjustments, a topic commented on acidly by E.M. Smith (Chiefio) in many posts. Also see his interesting comments in the thread on a guest post at Anthony’s, which revisited the race between 1934 and 1998, an issue first raised at Climate Audit in 2007 in connection with Hansen’s Y2K error.

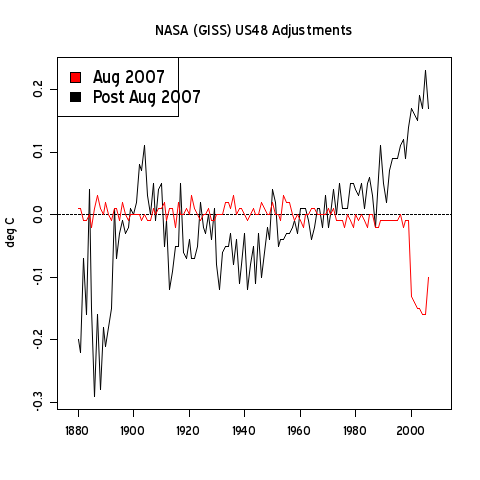

As CA readers recall, Hansen’s Y2K error resulted in a reduction of US temperatures after 2000 relative to earlier values. The change from previous values is shown in red in the graphic below; the figure also shows (black) the remarkable rewriting of past history since August 2007 – a rewriting that has increased 2000-6 values relative to the 1930s by about 0.3 deg C.
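The size of a revision like this can be checked by differencing two archived vintages of the same series. The following is a minimal sketch, assuming each vintage is available as a year-to-anomaly mapping; the function names and the toy numbers are hypothetical and are not GISS values.

```python
from statistics import mean

def epoch_gap(series, early=(1930, 1939), late=(2000, 2006)):
    """Mean anomaly over the late window minus mean over the early window."""
    early_vals = [v for y, v in series.items() if early[0] <= y <= early[1]]
    late_vals = [v for y, v in series.items() if late[0] <= y <= late[1]]
    return mean(late_vals) - mean(early_vals)

def revision(old_vintage, new_vintage):
    """How much the late-minus-early gap changed between two vintages."""
    return epoch_gap(new_vintage) - epoch_gap(old_vintage)

# Toy vintages (made-up numbers): the newer one cools the 1930s by 0.1
# and warms 2000-2006 by 0.2.
old = {**{y: 0.5 for y in range(1930, 1940)}, **{y: 0.6 for y in range(2000, 2007)}}
new = {**{y: 0.4 for y in range(1930, 1940)}, **{y: 0.8 for y in range(2000, 2007)}}
print(round(revision(old, new), 2))
```

The calculation only works if earlier vintages have been saved, which is why archiving each version matters.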

This impacts comparisons made in 2007 between GISS and CRN1-2 stations. At the time, it was noted that GISS adjustments for UHI resulted in the GISS US temperature anomaly having quite a bit in common with a TOBS average from Anthony’s CRN1-2 stations.

Critics of Anthony’s surfacestations.org project commented on this rather smugly – too smugly given the large differences with NOAA and CRU versions in the US and the incoherence of Hansen’s adjustments outside the US. The post-2007 adjustments to GISS adjustments change this.

The increased trend in the GISS US statistic comes at the expense of reconciliation with CRN1-2 stations: the trends no longer cohere.

In the past, Hansen said that he was too busy to joust with jesters – see here. At the time, I observed:

presumably he’s too busy adjusting to have time for jousting. We by contrast have lots of time to jest with adjusters.

Little did we appreciate that Hansen’s new adjustments were not in jest.

Update Dec 26 – Hansen’s new article on GISTEMP – Hansen et al 2010 here updates Hansen et al 1999, 2001. Section 4 contains a discussion of US adjustments under different systems, each purporting to show that UHI doesn’t matter. Later in section 9, there is a section on US adjustments, with a brief whining mention of the Y2K adjustment and the following graphic purporting to show that the change to USHCN v2 had negligible impact.

It is entirely possible that the change in GISS US since August 2007 is primarily due to the replacement of USHCN v1 methodology (TOBS and that sort of thing that we discussed in the past) with Menne’s changepoint methodology used in USHCN v2.

Menne’s methodology is another homemade statistical method developed by climate scientists introduced without peer review in the statistical literature. As a result, its properties are poorly known.

As I mentioned some time ago, my impression is that it smears stations together so that, if there are bad stations in the network, they influence good stations. Jones used the Menne method in Jones et al 2008, his most recent attempt to show that UHI doesn’t “matter.”

My guess is that it will be very hard to construct circumstances under which UHI will matter after data has been Menne-transformed. And that tests of the various night lights scenario on data after it has been Menne-transformed will not tell you very much. This is just a surmise as I haven’t waded through Menne code. (I requested it a number of years ago, but was unsuccessful until 2009.)

It’s too bad that the Menne adjustment methodology wasn’t published in statistical literature where its properties might have been analysed by now. It’s a worthwhile topic still.

234 Comments

In the UK, this has been the coldest December, and possibly the coldest month in my 40+ years. I have no data to back this up.

No doubt Hansen already has the data to prove me wrong

Perhaps the strongest commentary on Hansen’s work is to plot the serial history of the adjustments, as you did for the latest.

They don’t exactly make it easy to compare; changes are unannounced and not archived (to my knowledge).

Like the plaintive lyric (sung by Bill Withers?),

“How long, has this been goin’ on…?”

I hold the Chiefio in high regard, but I haven’t yet grasped his point about temperature being an intensive (vs. extensive) variable. In that jargon, a system can be split and each portion will have the same temperature. So, that view is clearly incompatible with ‘averaging’ temperatures, especially with air of different properties.

Is he saying that GAT cannot be computed legitimately?

In your absence, Steve Mosher put up links to some AGU talks. In the Palmer talk, he had one slide showing non-anomalized model runs. Wow, the spread was impressive. It also raised the question of discarded model runs, discarded for ‘unphysical’ behaviors. I hope some of that will whet your appetite for disconfiguration of the various mechanogodzilla that we are supposed to fear like the hapless inhabitants of coastal Japan…

Nice to have you back in the hemisphere with us…

RR

Here’s mosher’s link to 82 climate talks;

http://sms.cam.ac.uk/collection/870907

Here’s Dr. Palmer in debate mode;

Chaired Debate: Is Building Bigger and ‘Better’ Climate Change Models a Poor Investment?

http://sms.cam.ac.uk/media/1082469

and a bonus talk, humorous after just watching the original ‘TRON’ last night with my kids;

Mike Hulme: How do Climate Models Gain and Exercise Authority?

http://sms.cam.ac.uk/media/1083388

Thanks to Steve Mc for hosting us all.

RR

RuhRoh – The song is written and performed by Ace lead singer Paul Carrack. He would later work with Squeeze and sing their biggest hit “Tempted”, and also be one of the main VOX in Mike and the Mechanics. Fantastic voice on that guy, and he’s a crackerjack keyboard player / multi-instrumentalist. What a talent!

So scientists just cannot win in your books

If they do not correct data you consider wrong then they are just despicable

If they correct data then that too is despicable unless it reduces GW

The impression you give is that no data should be changed unless it has been Oked by your so called audit site.

I believe that Hadley/CRU have sea surface data to adjust in the 1940’s. I also believe that this is downwards.

I assume you will decry this as cheating?

Why do you continue to IMPLY invalid data manipulation and then wait for your acolytes to follow on with real claims of cheating?

Perhaps you should help out wuwt and clean up some of the more ludicrous claims there? Leif Svalgaard must feel very lonely as he tries to defend real science from the pseudo science bloggers there!

http://data.giss.nasa.gov./gistemp/updates/

Oh, TFP: If the adjustments were explained and otherwise documented, I’m sure that the response would be much more trusting. Undocumented adjustments made by a demonstrated extremist/advocate can’t qualify as scientific. You obviously feel very strongly about Hansen’s methods – why don’t YOU ask for an explanation – I promise to study it objectively. Like that will ever happen.

Odd that the “adjustments” always (almost always?) serve to make matters worse, ie to increase the magnitude of “observed” warming.

It is in the nature of things that unbiased adjustments would usually be some up, some down, and in the long run not make that much difference.

Not in regard to AGW.

thefordprefect

“The impression you give is that no data should be changed unless it has been Oked by your so called audit site.”

Well that would be a good start, but possibly should be generalized to “no data should be changed without the adjustments being notified and explained in detail, with the rationale explained.” This detail should be available to all, including the very competent auditors who frequent this site.

snip

Re: thefordprefect (Dec 26 13:52), well Ford, perhaps if the adjustments were documented and the reason for such changes documented and, most importantly, the changed and unchanged data kept in separate, clearly marked and documented files, there would not be such a kerfuffle.

You see, whilst you shout about these people being in a “can’t win” situation, it is in fact very easy to please everyone – keep the raw data and create new files for the adjusted data. Let any- and everyone see both files. Show the justification for the adjustments. Stop saying “trust me” without doing such things. EARN the trust with openness and transparency. Sure, it’s a lot of work and everyone is busy, but that’s even more reason to do it – people make mistakes, especially when they are rushed.

thefordprefect

How can one not criticise the lack of good science regarding temperature adjustments?

Here is part of an email Phil Jones wrote me on 28 March 2006 –

“I would suggest you look at NZ temperatures. … What is clear over this region is that the SSTs around islands (be they NZ or more of the atoll type) is that the air temps over decadal timescales should agree with SSTs. This agreement between SSTs and air temperatures also works for Britain and Ireland. Australia is larger, but most of the longer records are around the coasts.

So, NZ or Australian air temperatures before about 1910 can’t both be right. As the two are quite close, one must be wrong. As NZ used the Stevenson screens from their development about 1870, I would believe NZ. NZ temps agree well with the SSTs and circulation influences.”

Stress ” … can’t both be right … one must be wrong.”

Can you point me to the resolution of the peer-reviewed, published, settled science behind this?

Of interest to Jones’ last sentence is the Dec 2010 review of the NZ temp record by the Australian BOM, including –

“…. a markedly large warming occurred through the period 1940-1960. These higher frequency variations can be related to fluctuations in the prevailing north-south airflow across New Zealand. …. Temperatures are higher in years with stronger northerly flow (more positive values of the Index), and lower in years with stronger southerly flow (more negative values of the Index). One would expect this, since southerly flow transports cool air from the Southern Oceans up over New Zealand. The unusually steep warming in the 1940-1960 period is paralleled by an unusually large increase in northerly flow during this same period. On a longer timeframe, there has been a trend towards less northerly flow (more southerly) since about 1960. However, New Zealand temperatures have continued to increase over this time, albeit at a reduced rate compared with earlier in the 20th century. This is consistent with a warming of the whole region of the southwest Pacific within which New Zealand is situated.”

Report-on-the-Review-of-NIWAas-Seven-Station-Temperature-Series.pdf

Readers need to look at the graphs to see how convincing this is.

Do you not see the irony whereby the BOM, charged with the task to determine if New Zealand was warming, concludes that it was because this is consistent with a warming of the whole region, established in a manner similar to that being examined?

Unfortunately, there are very many unscientific inconsistencies in the global record. GISS is not alone.

I made an error. The URL starting niwa.co.nz does not contain the Australian BOM review. It has a one page letter from the BOM. The rest is apparently NZ material written with the knowledge of the BOM, which in turn notes in its one-pager that

“The review does not constitute a reanalysis of the New Zealand ‘seven station’ temperature record. Such a reanalysis would be required to independently determine the sensitivity of, for example, New Zealand temperature trends to the choice of the underlying network, or the analysis methodology. Such a task would require full access to the raw and modified temperature data and metadata, and would be a major scientific undertaking. As such, the review will constrain itself to comment on the appropriateness of the methods used to undertake the ‘seven station’ temperature analysis, in accordance with the level of the information supplied”.

Re: thefordprefect (Dec 26 13:52),

“I believe that Hadley/CRU have sea surface data to adjust in the 1940’s. I also believe that this is downwards.

I assume you will decry this as cheating?”

I believe the adjustment will be upwards. Up or down is Not the issue ford. NOT the issue. The issue is twofold

Will the paper that describes the adjustment

a. supply the data before and after

b. supply THE CODE that does the adjustment

c. properly credit the people who brought the issue to the forefront

d. carry forward in a properly documented way the UNCERTAINTY due to adjustment. It is not enough to correct a record for inhomogeneous collection procedures. Every adjustment carries with it an error of prediction, but these errors are never accounted for or displayed.
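Point d can be illustrated with a minimal sketch of carrying an adjustment’s uncertainty forward. It assumes an additive adjustment whose error is independent of the measurement error, so the variances add; the function name and the numbers are hypothetical.

```python
import math

def adjusted_with_uncertainty(raw, raw_sigma, adj, adj_sigma):
    """Apply an additive adjustment and carry its standard error along.

    Assumes the measurement error and the adjustment error are independent,
    so their variances add.
    """
    value = raw + adj
    sigma = math.sqrt(raw_sigma ** 2 + adj_sigma ** 2)
    return value, sigma

# Made-up reading: 14.2 +/- 0.3, adjusted by -0.5 +/- 0.4.
value, sigma = adjusted_with_uncertainty(raw=14.2, raw_sigma=0.3, adj=-0.5, adj_sigma=0.4)
print(f"{value:.1f} +/- {sigma:.1f}")
```

Under these assumptions the adjusted value is never more certain than the raw one. If the errors were correlated, the simple sum of variances would no longer hold.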

If Brohan 2010 learns the lessons of the past they will supply all the data and all the code. If not, then, people will make charges. A wise man would forestall that by doing what a scientist should do.

How long will it take the “climate science” team to understand the lessons that have been learnt by all other scientific disciplines, i.e. that the hardest thing any scientist faces is to remove the influence of the biases that we all have?

TFP – don’t generalize about ‘scientists’. Many climate scientists (so called) are reluctant to supply raw data and algorithms. Here is a typical/leading climate scientist’s response to a request for information: “Why should I give you my data when you just want to find something wrong with it?”. This is not the typical scientist’s attitude, training, inclination, or behavior.

Well said.

Surely many main stream scientists would deem sceptics doing them a favour by finding fault in their data so that this could put them back on the right track sooner, saving further embarrassment.

From another angle, wouldn’t allowing sceptics to concede finding no fault in the data and the sampling method/code be the ultimate thumbs up on the AGW course from the sceptic camp? Unless there was and still is genuine doubt/concern even to the warmists and fear led to denial.

Who then is the denier?

Actually, that is a “main stream scientist[‘s]” attitude by and large. But what’s important is that in OTHER sciences, that attitude is mitigated by the knowledge that the data WILL be examined. There WILL be “peers” out there scrutinizing conclusions, calculations and data. That in turn often elicits a paranoid and OCD pattern of behaviour where every bit of data is preserved, every transformation documented – in lab science that is what notebooks are for and why they are kept in ink. They know that some critic will be looking to shoot them down – what really drives science is the chance to turn to a colleague and say, “see, I said you were wrong.” The watchword is “document, document, document!” That way ideally, if you flubbed it, you can catch it before some graduate student sees a window of opportunity to initiate a Kuhnian paradigm shift by relieving themselves on the head of the giant upon whose shoulders they are perched.

Yes.

In the middle ages, we have church review.

From the 80s, we have peer review.

Before long it became buddy review.

But there is hope, we now have blog review.

What next?

fp;

Given the two following data points –

1) the adjustments and their justifications are undocumented, often even hidden,

2) they are overwhelmingly favorable to the thesis of the adjuster

– more than suspicion is warranted. snip

Are these adjusted (but referred to as unadjusted) temperatures the input into GISTEMP?

GIStemp takes as input GHCN, USHCN and some Antarctic data sets.

The “adjusting” is done to the GHCN and USHCN.

Therefore the “unadjusted adjusted” temperatures are the input to GIStemp.

It is a little more complicated than that as NOAA / NCDC make an “unadjusted” version (that has adjustments for some things in it) and an “adjusted” version that has even more adjustments in it. You need to look at their data set descriptions to find out exactly which adjustments are in each AND do a lot of digging.

For example, the USHCN may have a data item deemed “wrong” (too far from its ‘neighbors’) replaced by an “average of ‘nearby’ ASOS stations”. This is done silently (as far as subsequent users of the data can see). The simple fact that an AVERAGE is used will suppress the range of the resultant synthetic-data point. That the daily data min-max are then used to construct a mean will allow that range suppression to have import… Oh, and a FIXED number of degrees is used for the “out of range” filter. As cold excursions have more “range” downward than hot excursions have range upwards, this will preferentially bias to removal of cold readings and replacement with an average… (As “heat rises” that limits hot extreme events, but “cold pools” so has no such mitigation…)
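The mechanism described here can be illustrated with a toy simulation. It assumes a cold-skewed distribution of readings and a symmetric fixed threshold, both chosen purely for illustration; it is not the actual USHCN quality-control code, and `range_filter` is a hypothetical helper.

```python
import random
from statistics import mean

def range_filter(readings, reference, threshold):
    """Replace any reading further than `threshold` from `reference` with `reference`."""
    return [reference if abs(r - reference) > threshold else r for r in readings]

random.seed(0)
reference = 0.0  # stand-in for the "average of nearby stations"
# Hypothetical cold-skewed anomalies: mean near zero, long tail on the cold side only.
readings = [1.0 - random.expovariate(1.0) for _ in range(10_000)]

filtered = range_filter(readings, reference, threshold=2.0)
print(f"mean before: {mean(readings):+.3f}, after: {mean(filtered):+.3f}")
print(f"min before:  {min(readings):+.2f}, after: {min(filtered):+.2f}")
```

In this toy setup only cold readings ever exceed the threshold, so the filtered mean is warmer and the cold tail is truncated. Whether real station data are skewed enough for this to matter is an empirical question the sketch does not answer.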

So I guess the answer is “It depends on WHICH adjustment” as some are in the input to GIStemp and some are not.

I probably ought to add that USHCN has a different adjustment history than GHCN and STEP0 of GIStemp merges those two histories for the USA grid/boxes…

While it is desirable to have the most consistent measurement records possible, it is even more important to have a credible record, especially if important public policy is involved.

It is not clear that the current administrators of these records are conscious of that responsibility.

As is, the adjustments over the past century appear comparable to the magnitude of the warming detected. That seriously raises the importance of those adjustments. At a minimum, these need to be backtested, by comparing old vs new procedures for a long enough period to be reasonably confident that the adjustments are in fact appropriate.

The plots above are for US48 – a very insignificant area of the total globe. Whatever the adjustments to the above figures are they make very little difference to the global average.

“The plots above are for US48 – a very insignificant area of the total globe. Whatever the adjustments to the above figures are they make very little difference to the global average.”

I don’t have nearly the expertise of others here, but this type of observation always puzzles me. First, points are trivialized by saying whatever area in question is insignificant to the area of the globe. Second, if it is “very insignificant” as you point out, why make the adjustments in the first place? Maybe a bunch of “insignificant” become significant at some point?

So how much should the adjustment be for the 75% of the Globe that we know that the data is at best ‘poor’ or at worst ‘non-existent’?

If I strip away all the meandering and snark, the only content left is that Hansen’s adjustment has changed and you don’t like the direction. You don’t supply any mathematical rationale for why the revised adjustment is incorrect. Nor what the total impact on the trend itself is.

Scientist,

Rather than complaining yourself, how about talking to Hansen or Jones or the IPCC… and having them do a complete paper explaining not just what is being done but the REASONING AND RESEARCH TRAIL SUPPORTING what they have done and are doing!!

Scientist,

One of Hansen’s adjustments was to increase all data points after a particular year and to decrease all data points prior to that same year. Hansen did this on a sliding scale so that the magnitude of the adjustments increased positively going forward from that year and decreased going negatively from that year.

As an engineer, I can accept that data might need to be adjusted from time to time for some reason. I would expect that such an adjustment would statistically cancel out and not contribute to (or create) a trend.

I checked the data myself by comparing pre-adjustment yearly average temperatures with post-adjustment yearly averages. I loaded it into a spreadsheet and subtracted one set from the other. When I saw the results that I described above, I thought that I had made a mistake in my spreadsheet. I checked and re-checked the spreadsheet several times, until I was certain that I had not erred.
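The spreadsheet comparison described above can be sketched in a few lines: subtract the pre-adjustment series from the post-adjustment series and fit a straight line to the differences. The data below are made up to mimic an adjustment that pivots around one year; the function names are hypothetical.

```python
def ols_slope(xs, ys):
    """Least-squares slope of ys against xs."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return sxy / sxx

def adjustment_trend(pre, post):
    """Slope (per year) of post-minus-pre over the years both versions share."""
    years = sorted(set(pre) & set(post))
    diffs = [post[y] - pre[y] for y in years]
    return ols_slope(years, diffs)

# Made-up example: adjustments that pivot around 1960 at 0.002 deg per year.
years = range(1900, 2001)
pre = {y: 10.0 for y in years}
post = {y: 10.0 + 0.002 * (y - 1960) for y in years}
print(f"{adjustment_trend(pre, post) * 100:.2f} deg per century")
```

A constant offset between the two versions gives a slope of zero, so a non-zero slope in the difference series is the part of the trend contributed by the adjustment itself.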

Perhaps you can enlighten me as to what sort of an error would need corrected by such an adjustment. I am at a loss to explain this.

Brooks, do you have a link to your results? It sounds like a powerful representation of the adjustments made so far.

David,

I do not have it on this computer. I believe that the Excel file is on my Terabyte drive. I am now several thousand miles from it. I’ll check after the 1st of the year.

Your statement is dripping with irony. Someone who made the adjustments should be showing us the mathematical rationale for why the adjustments are *correct*. Then we might be able to tell you if there’s any for why they are not.

scientist

You invert the burden of proof! It is up to Hansen to show that any adjustments are correct. In the absence of any rationale from NASA, the suggestion that sceptics supply a mathematical rationale is ludicrous

thefordprefect said:

“The plots above are for US48 – a very insignificant area of the total globe.”

It depends on the number of data points used in the calculation of the global average. With the African continent being a non-trivial area, it may be that it contributes only a trivial number of points to the calculations compared to the number used from North America, mainly the USA.

Continental USA Trivial? Not really.

The data is applied to a grid and weighted by area.

Each missing data point can be ‘filled in’ from up to 1000 km away.

The data goes to the next step where it is “homogenized” against data up to 1000 km away (that may include ‘synthetic data’ from the first step).

The data then goes to a UHI correction that can use data up to 1200 km away (IIRC, it might be 1000 km at this step.) So at this point it may be using data that started out 3200 km away.

THEN it calculates the Grid Box anomaly… and each grid box may be “filled in” with a transformed data item from up to 1200 km away…. that may be based on “stuff” from 3200 km away…

So, take a 4400 km distance from ANY border of the USA. Remember to include Alaska and Hawaii and Puerto Rico and Guam and…

That’s the potential “reach” of USA data.
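The arithmetic behind the 3200 km and 4400 km figures is a worst-case sum of the radii quoted in this comment. The sketch below only reproduces that sum; whether the GIStemp steps really chain together this way is a separate question that the code does not settle.

```python
# Maximum search radius claimed for each step, in km. The figures are taken
# from the comment above, which flags the UHI radius as uncertain.
steps_km = {
    "infill of missing data": 1000,
    "homogenization": 1000,
    "UHI correction": 1200,
    "grid-box infill": 1200,
}

reach = 0
cumulative = []
for name, radius in steps_km.items():
    reach += radius
    cumulative.append(reach)
    print(f"{name:<24} +{radius:>5} km  cumulative {reach:>5} km")
```

The total is an upper bound that assumes every step borrows from its maximum distance in the same direction.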

When I complain about the “Serial Application of The Reference Station Method” or “The Recursive Application of The Reference Station Method” this is the impact I’m talking about.

The published paper that shows a 1200 km correspondence of “trend” does not justify being a “Serial Adjuster” or using a “Serial RSM”.

Oh, and as the thermometer count drops from year 1990 to 2010 (from 7280 thermometers to 1260 or so) the degree to which GIStemp must “reach further” at each step increases… Station drops DO matter.

This is plainly not true, since if it was there would be no empty cells at all. Missing data are not filled in from 1000 miles away – that is only done locally. The UHI correction for non-rural sites is irrelevant for the regional trends. And whether the reach changes as the number of stations drops depends absolutely on the distribution of the remaining stations – every independent analysis has clearly shown that it doesn’t matter at all (Zeke, CCC, etc.). The CCC code is easy to install and run – why don’t you actually use it to demonstrate any of your claims?

“every independent analysis has clearly shown that it doesn’t matter at all”

Independent of what??

Re: E.M.Smith (Dec 27 17:03), unfortunately i can select 100-200 stations at random and see no effect. drops do not matter, except to the final uncertainty. And no I dont use stations 1200km away to adjust. I can get the same answer with a 5 degree bin, 3 degree bin or 1 degree bin. I hazard I could even go lower than that. and it doesnt matter how many stations you have per grid.

i’ve even tested with 1 station (randomly chosen) per grid.

Aren’t airports great???

Actually you can get the same answer if you remove all airports as well.

Steven,

as a previous poster already suggested, doesn’t that lead you to start questioning the data if slicing and dicing makes no difference?? That no matter what you do you obtain the same trend??

We aren’t talking about a climate controlled biodome after all!!!

If you leave all the stations with the trend you don’t want out of your collection to begin with, what happens? 😉

Andrew

Bad Andy,

It’s less trouble to simply draw a graph that suits your purpose. Nobody will ask you for the raw data; if they do, you just refuse.

If you get the same answer, no matter what you do, then the data is unbelievably “perfect” /

unbelievably, being the key word.

Actually no, what it means is this. Over a substantially long enough period the distribution of the trends of all stations is approximately normal.

For example the mean would be something like .8C and the full distribution would go from zero to 1.5C. thats for 4000 stations, say.

Now, pick 100 stations from that distribution and calculate the mean trend… opps its gunna be close to .8C

It has NOTHING to do with the data being perfect and everything to do with the distribution of temperature trends.
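The sampling argument can be checked with a small simulation. It assumes, as the comment does, that station trends are roughly normally distributed around 0.8C; the standard deviation of 0.25 is a hypothetical choice made so that the simulated range resembles the 0 to 1.5C quoted.

```python
import random
from statistics import mean, stdev

random.seed(1)
# Hypothetical population: 4000 station trends, roughly normal around 0.8 C.
# A standard deviation of 0.25 puts nearly all values between about 0 and 1.5.
population = [random.gauss(0.8, 0.25) for _ in range(4000)]

# Draw 100 stations at random, many times over, and record each sample mean.
sample_means = [mean(random.sample(population, 100)) for _ in range(1000)]
print(f"population mean:        {mean(population):.2f}")
print(f"spread of sample means: {stdev(sample_means):.3f}")
```

The sample means cluster tightly around the population mean because their spread shrinks roughly as one over the square root of the sample size. This says nothing about subsets chosen non-randomly, or about whether the population of trends itself is biased.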

You then can try to see if dropping all high altitude stations matters. been there done that, no difference. You can drop all airports. no difference. You can drop all urban. nothing.

The question is is the definition people use of urban correct?

For a long long time I have been suggesting that the key to finding the UHI signal lies in having GOOD metadata. Imhoff has taken a great step in this direction. And he has shown the effect in some limited cases. I would hope that someone could duplicate his work and focus on the 7000 locations we use to measure temps. That is entirely doable. THAT should be the focus of people’s attention, not station dropouts, not Hansen’s reference method, not his UHI adjustment. Those are just distractions from the real question.

IMHO, if you get .8C from ANY 100 randomly picked stations it isn’t random.

I can go looking through the GISS station graphs and find 100 stations with ZERO warming. And some with cooling since the 1930s.

I picked a spot at random:

http://data.giss.nasa.gov/cgi-bin/gistemp/gistemp_station.py?id=425726710010&data_set=1&num_neighbors=1

http://data.giss.nasa.gov/cgi-bin/gistemp/gistemp_station.py?id=425725720110&data_set=1&num_neighbors=1

http://data.giss.nasa.gov/cgi-bin/gistemp/gistemp_station.py?id=425725720140&data_set=1&num_neighbors=1

yep

Steven,

It sounds as though you’ve made a 2-D scatterplot of temperature trends vs. (log?) population, and there is no apparent pattern. If you’re analyzing trends, it would seem more appropriate to use population change rather than population itself. That is, convert population change over the record to a logarithmic metric (% growth/year, perhaps), and plot trends vs. growth rate. [Or perhaps construct a 3-D scatterplot, trends vs. population & growth rate.]

I have no idea whether the former population figures are readily available, however.
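The suggested conversion from population change to a logarithmic growth rate can be sketched as follows. The station figures are invented placeholders, and `annual_growth_pct` is a hypothetical helper; real population histories would have to be sourced separately.

```python
import math

def annual_growth_pct(pop_start, pop_end, years):
    """Continuously compounded growth rate, in percent per year."""
    return 100.0 * math.log(pop_end / pop_start) / years

# Hypothetical stations: (population at start, population at end, trend in C/century)
stations = [
    (5_000, 5_500, 0.4),
    (20_000, 80_000, 1.1),
    (1_000_000, 1_100_000, 0.6),
]
for start, end, trend in stations:
    growth = annual_growth_pct(start, end, years=100)
    print(f"growth {growth:.2f} %/yr  trend {trend:.1f} C/century")
```

On this metric a large city that barely grew scores the same as a small town that barely grew, which is the point of plotting trends against growth rather than against population size.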

“its gunna be close to .8C”

In what decades was the .8C anomaly accumulated?

Was the .8C accumulated in the same decades?

Were those decades ones in which CO2 rose by a significant amount?

Betcha in Australia it’s going to be closer to 0.3 deg C since 1900, after the falsities are adjusted back.

Can anyone give me a reason why many truly rural coastal stations here tend to be rather level over the last 40 years, while rural inland stations say 300km or more from the sea, but at low elevations say below 300m, tend to show increases? Please don’t say that the sea moderates. The inland trends go stupid if you project them forward or back at these rates.

Has anyone a reference to an overlap comparison MMTS to mercury thermometer? There is value in looking at http://kenskingdom.wordpress.com/2010/11/22/what%e2%80%99s-the-temperature-today/

Re: Geoff Sherrington (Dec 30 07:27),

In a word – rainfall, or perhaps evaporation.

We have been – told by various media outlets especially – that the temperature increase caused the big dry. Yet there is (Australian) published science suggesting that it was other way around – that the dry caused the heat (or perhaps more accurately, a loss of evaporative cooling). Perhaps our recent experience with floods may help, ahem, settle the matter.

Neil Fisher,

It’s hard to see rainfall or evaporation giving a 40 year trend, because something has to start and end the trend. BTW, have you seen any proceedings from a climate conference Uni of Tasmania about May 2009? It mentions changes in rainfall and cloud at Macquarie Island and probably other places (pers comm BOM) but I can’t seem to find the proceedings.

Fortunately, Dr. Hansen soon approaches retirement, and will no longer be making adjustments.

I expect ‘cooler heads’ will then have more sway over what are valid adjustments.

I wouldn’t hold your breath Steve.

snip – please don’t do this sort of editorializing

You can bet that even though Hansen reaches retirement age, they’ll pass a special law to confer an Emeritus status on him.

Emeritus (def) Retired or honorably discharged from active professional duty, but retaining the title of one’s office or position.

So NASA may not have a choice. He’ll be free of “official” duties, but will still be able to be arrested at protests as a “NASA Climate Scientist”.

The engineering profession has developed the concept of published standards and standards committees to create them. A standards committee is a group of experts who are assembled either by invitation or by general request. These experts work in a defined process which usually consists of submissions for the amendment of a proposed draft standard. As a result, a standard moves from a plurality of competing ideas to a rough consensus on the topic. This blog works over many many standards that were created in this way that enable the functioning of the Internet and the WWW.

I find it quite puzzling that this is not the procedure followed in the creation of a standard as important as that which guides the adjustment of temperature records. Considering the potential effect of AGW, the current practice of relying on independent academic researchers with rare peer review and publication seems to be quite bizarre.

My suggestion is that the temperature standard be regarded as an engineering problem. Similar work in strength of materials etc is clearly regarded as engineering work. The engineering practice can then be followed in creating a transparent and clearly explained standard for the creation of temperature records. There would be no ‘hide the decline’ ‘tricks’ in such practice unless everyone was aware of what the trick was about.

Correct as usual TAG, but who is listening? Certainly not the EPA, NOAA, WMO or IPCC. They already have a system that works quite nicely, for them.

Why would they seek consensus, when they claim to have it already? Backing off from that position would be an admission of error or guilt (either works).

The changes in the GISS US temperature adjustments since August 2007 are very large (~0.3 deg C) relative to the size of the trend in the most studied and measured area of the globe. Surely that deserves to be noticed and explained regardless of the direction. The size of the change is surely very surprising regardless of the direction.

Hansen et al 2010 is a very recent publication on GISS methodology, but did not contain a reconciliation of why the new adjustments differ so remarkably from adjustments believed to be satisfactory at the time of AR4. It is surely Hansen’s job to present a mathematical rationale for why these new adjustments are correct relative to the former adjustments. Why didn’t the peer reviewers ask Hansen for such a reconciliation? At present, I don’t know whether the changes arise in modifications at GISS or at USHCN or both. If people critical of my merely noting the new adjustments can clarify this point, I’m sure that readers would appreciate such a reconciliation.

Didn’t you ask for the code? And get it? Hasn’t he written papers? Haven’t you studied how these adjustments are done in the past?

Perhaps you don’t have what you need now to understand the numbers, I don’t know, but you don’t actually say that (in the post, Steve). Capisce? What is lacking?

Have you even started to do any analysis, or is this just an initial post saying “look, a change” (along with a bunch of snark and Chiefio/Watts (uck) love)?

Oh…and do you have the mathematical and logical intuition to know what types of things would drive the adjustments to change over time (and what amounts typically)? I mean, think about it like a business problem. What are the key performance factors? Haven’t you dug into the algorithms before? Don’t you have some feel for them? Haven’t you (for years) noted that the numbers change over time, because of adjustments (that they change at all is not an “aha”, right?)

Steve: TCO, in a business situation, changes in accounting policy are not encouraged by independent auditors (to say the least) and must be presented with a detailed reconciliation to previous accounting policy. This is the responsibility of management not auditors. As you say, I’ve dug into these algorithms before and could doubtless do so again. Other people have now worked through the mire of Hansen’s code. Perhaps one of them can explain what’s going on here if Hansen is unable or unwilling to explain what he does. I have limited time and energy and surely it’s unreasonable to hold me responsible for not doing what is Hansen’s obligation.

What Peter Wilson said! Lots of trolls about with nothing of consequence to do or say. Do trolls get Christmas presents? Does Santa count them as naughty?

Re: scientist (Dec 26 19:45),

The questions Steve McI raises in the body of this post appear quite reasonable, to this only-modestly-informed outside observer. TAG’s 19:03 follow-on remarks framing the issue in engineering-standards terms also seem quite sensible.

By the same token and in the same spirit, Scientist’s critiques of Steve’s critique raise worthwhile issues. The field would be better if all such critiques were weighed and responded to, when it can be done.

And it usually can be.

The issues have become so personalized and so bitter that it’s very difficult for scientifically-literate laypeople to know where to begin. Much credit would go to the individuals who turn away from this course, and re-focus on the technical issues.

It’s up to the scientist(s) doing the analysis and writing the paper to adequately explain all features apparent in their analysis. Third parties CAN look for explanations, but it isn’t their obligation.

Steve gets to do the work he wants to do, not the work you want him to do, unless you can arrange a contract with him.

Regarding your questions, you’d soon realize how goofy they are if you read other people’s exploration of the Hansen analysis / code. Or maybe you wouldn’t – as one of my colleagues is fond of saying “Some things are obvious – they just aren’t obvious to everybody.”

I was reading the Hansen paper and came across this comment, which I thought was quite apropos, given the post and subsequent comments:

Correcting data known to be bad is extremely subject to the biases of the adjustor, since such corrections invariably rely on ad hoc adjustments that are tested to ensure they produce the ‘correct’ results (i.e. results that are believed to be correct by the adjustor).

Hansen has long since dispensed with the notion that he is an impartial scientist. He is a political activist and therefore the onus is on him to prove beyond any reasonable doubt that his biases did not affect his decisions about the adjustment algorithms he chose.

Which is just another way of delaying and obfuscating any true analysis until after the press releases.

As Ross observed long ago, calculation of a temperature index is the same sort of activity as calculating a Consumer Price Index. Ross (and I) long ago suggested that it would be far better for all sides of this debate if it were done by a governmental statistical agency rather than parties with an interest in models and active concerns about policy. That would avoid some of the criticism that has occurred in GISS’ occasional pratfalls. As I observed at the time of the Y2K issue, Hansen could readily have avoided much of the controversy by making a timely and straightforward change notice, instead of making wholesale changes without acknowledgement or notice. Hansen’s practices in respect to change notices and disclosure have improved markedly since then.

Steve, GISS is a government agency — not statistical but scientific — and concerned with the science of climate. It’s appropriate for such an agency to be doing the work.

The issue is that the agency has been captured by an activist, who has apparently crewed the agency with others who are convinced similarly. There’s no reason to think that a government statistical agency could not be similarly captured.

Jim Hansen’s views could just as well find a home in the breast of a statistician. And, for example, likely has done in Colorado.

So, I don’t see your option of a government statistical agency as a solution. The solution is adherence to professional integrity. No system, after all, is any better than the people running it.

GISS November anomaly 0.74°C

HadCru 0.38°C

Need I say more?

“Steve, GISS is a government agency …The issue is that the agency has been captured by an activist…”

Illustrating one example of why Steve is mistaken to suggest that assigning the task to a government agency is likely to improve quality. It might, for a time. But “capture” is all but inevitable when it comes to government agencies.

Naturally, it’s sensible to suspect private entities of self-serving behavior. It does not follow that the public sector should not also be suspected of it.

No more cakes and ale,

Alas, Polly wants cracker.

Climate clears table.

===========

Weather clears table

Cringing climate science set.

Wicked wet winter.

==========

Re: shewonk (Dec 26 20:24),

shewonk: then when corrections are submitted would you expect them to be implemented?

and if they were not implemented what would your judgement be.

1. immediately defend

2. immediately attack

3. suspend judgement until the corrections can be assessed.

be careful, trick question.

It is a couple of months since I last looked at GHCN/GISS/NCDC data and methods in any detail. I did look briefly at the changeover to nightlights-based adjustments here: http://diggingintheclay.wordpress.com/2010/07/10/gistemp-plus-ca-change-plus-cest-la-meme-chose/ I found it odd that there was so little change overall, yet the more deeply you look the bigger the changes (Figures 4 and 5). Since the adjustments are mediated through classification by population size (whether through nightlight estimation or the previous method) the correct assignment becomes crucial. I have not followed this up although it is something I felt needed further investigation.

Peter O’Neill (here and subsequent posts) pointed out the location errors affecting nightlights classification for many stations and now Steven Mosher has followed this up. Overall the proportion of location errors is probably small and may not have a significant effect, but the point is – this is a set of errors that shows the due diligence is not there. If you are basing your analysis on geolocation – you make sure your lat/long is error free.

Now also (IIRC) NCDC have started adjusting for UHI in the ROW as well as in the US. Something makes me think this started in November 2009 for GHCNv2, but I could be wrong. This means that the NCDC adjusted data, which is the INPUT for GIStemp has UHI (and TOBS/SHAP etc) already accounted for – using a type of homogenisation method (e.g. as described by Menne et al here slide 15). So the Hansen adjustments are providing a second tier of adjustment that should be unnecessary.

In the US, I think (and this is a surmise right now) that looking at the nightlights adjustment relative to Menne-adjusted station data is locking the barndoor after the horse has left the barn, so to speak. My impression is that the Menne adjustment will smear bright station histories into dark station histories. After the Menne adjustment, my surmise is that there won’t be much difference between bright and dark stations. The more interesting analysis would be bright vs dark before Menne adjustment – which doesn’t seem to have been presented in the Hansen analysis.

Re: Steve McIntyre (Dec 26 22:27), It’s unclear in both Hansen and Menne whether or not the homogenization is done between “like” stations WRT nightlights or urbanization. I believe (pers comm) that the homogenization does not take into account the character of the station, thus “smearing” UHI signals if they are (as I contend) high frequency affairs.

That is, since UHI doesn’t happen every day (snow, clouds, winds, rain ameliorate it) and since it is seasonal, it is likely to be a high frequency affair. Homogenization will remove or smear or filter the most extreme events. Then if you try to find UHI in a homogenized series you will be looking for a needle in a haystack.

Interesting article on stats and confirmation bias in the New Yorker

a sample:

Jennions, similarly, argues that the decline effect is largely a product of publication bias, or the tendency of scientists and scientific journals to prefer positive data over null results, which is what happens when no effect is found. The bias was first identified by the statistician Theodore Sterling, in 1959, after he noticed that ninety-seven per cent of all published psychological studies with statistically significant data found the effect they were looking for. A “significant” result is defined as any data point that would be produced by chance less than five per cent of the time. This ubiquitous test was invented in 1922 by the English mathematician Ronald Fisher, who picked five per cent as the boundary line, somewhat arbitrarily, because it made pencil and slide-rule calculations easier. Sterling saw that if ninety-seven per cent of psychology studies were proving their hypotheses, either psychologists were extraordinarily lucky or they published only the outcomes of successful experiments. In recent years, publication bias has mostly been seen as a problem for clinical trials, since pharmaceutical companies are less interested in publishing results that aren’t favorable. But it’s becoming increasingly clear that publication bias also produces major distortions in fields without large corporate incentives, such as psychology and ecology.

Read more http://www.newyorker.com/reporting/2010/12/13/101213fa_fact_lehrer#ixzz1A06jhxwf

I doubt that the proportion of location errors is small. In Sweden (19 GHCN sites) more than half have location errors larger than the 0.01 degrees postulated by Hansen. Both the two “zero nightlight” sites have very large errors (25 and 30 km) and are “zero nightlights” only because of these errors (one is misplaced into the middle of a large lake and the other in the middle of an uninhabited forest).

I suspect that a revision of the 500 zero nightlight sites might yield rather startling results.

Re: tty (Dec 27 10:04),

A “revision of the 500 zero nightlight sites” might well yield rather startling results.

For a start, without correcting the location errors, but using the replaced nightlights file Steven Mosher has referred to in place of the deprecated one, less than half of the 2928 “pitch black” stations based on the latest GISS v2.inv (i.e. that with the USHCN/Colville correction) remain “pitch black”, and 88 become not merely illuminated but urban. 31 stations which were illuminated using the deprecated dataset become pitch black using the preferred dataset. (I have not checked the number of zero nightlight sites in the US, but I assume the figure of 500 sites refers just to the US sites, whereas the 2928 sites referred to above includes also sites outside the US)

If, instead of a paper in a learned journal, Hansen’s work had been reported in a submission to an engineering standards committee, these errors would have been turned up and corrected almost immediately. We would not have been wasting time submitting comments to journals with lengthy peer review processes.

The AGW issue is too important to be left to academic scientists. These UHI and global temperature record problems are relatively simple engineering issues. With proper use of established engineering principles and methods, they could be resolved very quickly.

A simple engineering version of “peer review” would have sufficed, TAG, i.e., a preliminary design review for the software…

Mark

Re: Verity Jones (Dec 26 20:46),

Thanks verity.

The assignment of rural/urban matters MORE the smaller the difference is.

Take a simple example. If the UHI signal is 0.1C and you misplace some urban as rural and some rural as urban then it’s harder to pull that signal out. In reclassifying rural and urban, using better metadata, I’ve found that the misidentifications tend to go both directions. That means adding some rural to the urban bin and adding some urban to the rural bin.
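The dilution described above can be put in numbers with a rough Python sketch. It assumes, purely for illustration, that every truly rural station carries no UHI signal and every truly urban station carries the full signal; the function name and the 20% misfiling rates are hypothetical, not anything taken from the reclassification work itself.

```python
def observed_urban_rural_gap(true_gap, frac_rural_in_urban_bin, frac_urban_in_rural_bin):
    """Expected difference between bin means when some stations are misfiled.

    Illustrative assumption: rural stations carry zero UHI signal and urban
    stations carry `true_gap` (deg C). Both fractions are hypothetical
    contamination rates for the two bins.
    """
    # Urban bin: the misfiled rural stations pull the mean down toward zero.
    urban_bin_mean = (1.0 - frac_rural_in_urban_bin) * true_gap
    # Rural bin: the misfiled urban stations pull the mean up from zero.
    rural_bin_mean = frac_urban_in_rural_bin * true_gap
    return urban_bin_mean - rural_bin_mean


# A 0.1 C signal with 20% of each bin misfiled (made-up rates).
print(round(observed_urban_rural_gap(0.1, 0.2, 0.2), 3))
```

Under these assumptions the measured gap shrinks by the sum of the two contamination fractions, so errors in both directions compound rather than cancel.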

Peter and I have been exchanging notes, but getting completely correct locations has been difficult even WITH the WMO asking all nations to update their position data.

A big factor is the non-linear response. A rural settlement experiences UHI too. Indeed, the largest increments in UHI effect occur during the initial population increases. This is one factor which confounds primitive partition analysis like the night-lights methodology or using the current population census.

Yes, Dr. Spencer did an interesting study on that issue that is on his site.

Spencer’s work on this point is modern but not original–as he recognized in the text of his post. Oke (1973) found the population relationship to be:

Tuhi = 0.73log10(pop)

So every order of magnitude change in the population of a settlement increases the temperature by 0.73C.
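As a small worked illustration of the relationship as quoted here, the following Python sketch evaluates it for a few population changes. The function names are made up for the example, and the 0.73 coefficient is simply the figure given above, not a value checked against Oke's paper.

```python
import math

OKE_COEFF = 0.73  # deg C per factor of ten in population, as quoted above


def oke_uhi(population):
    """UHI estimate (deg C) from the log10(population) form quoted above."""
    return OKE_COEFF * math.log10(population)


def uhi_change(pop_start, pop_end):
    """Change in the UHI estimate when a settlement grows from pop_start to pop_end."""
    return oke_uhi(pop_end) - oke_uhi(pop_start)


# A village growing tenfold and a city growing tenfold get the same increment.
print(round(uhi_change(1_000, 10_000), 2))
print(round(uhi_change(100_000, 1_000_000), 2))
# Doubling adds 0.73 * log10(2), whatever the starting size.
print(round(uhi_change(5_000, 10_000), 2))
```

Only the ratio of end to start population matters in this form, which is why small settlements are not exempt from the effect.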

Zeke over at Rank Exploits posted an analysis claiming UHI doesn’t matter if the Tobs changes are accepted:

http://rankexploits.com/musings/2010/uhi-in-the-u-s-a/

The trouble with that analysis is precisely this log population effect. For instance, suppose all settlements have been growing at the same rate, say 1% per year. When you compare the trends of rural and urban stations they will be (about) the same. This is Zeke’s result, but this is not the UHI phenomenon from the literature. Indeed, it’s entirely unremarkable that rural and urban stations have the same trend if they have similar population growth rates.
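To make the equal-growth argument concrete, here is a minimal Python sketch. It assumes the log10 relationship quoted earlier holds exactly, and it uses two made-up settlements (500 people and 5 million people) both growing at 1% per year for a century.

```python
import math


def uhi_series(pop0, growth, years, coeff=0.73):
    """UHI estimate per year for steady compound growth, under T = coeff * log10(pop)."""
    return [coeff * math.log10(pop0 * (1.0 + growth) ** t) for t in range(years + 1)]


# Hypothetical settlements: only the starting populations differ.
village = uhi_series(500, 0.01, 100)
city = uhi_series(5_000_000, 0.01, 100)

village_rise = village[-1] - village[0]
city_rise = city[-1] - city[0]

# Both rises equal coeff * years * log10(1 + growth), independent of pop0.
print(round(village_rise, 3), round(city_rise, 3))
```

Under that assumption a rural-versus-urban trend comparison would show no difference even though both series carry the same UHI contamination.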

His work is basically non-responsive to the actual issue, although it does address a certain cargo-cult version of the UHI argument. It’s useful to cut away distracting arguments, but it’s important to keep in mind that Zeke’s work does not contradict Oke’s finding of a UHI contamination to the trend.

Re: Jon (Dec 28 12:06),

Oke wrote many papers after that study. log Population is a rather crude ax to swing at the problem but the best he had at the time. The study was limited and for the most part it’s recognized that the mechanism of UHI is crudely tied to population. For improved studies you can look at those that covered the US from 1900 to present (using population densities at 30 arc seconds.) You won’t find the log relationship. Similarly I looked at worldwide stations and could find no log relationship. Basically population is a proxy for UHI. It matters what the people do to the surface and where they do it.

For example, in parts of the world where you have dense populations but the people do not build high structures, you have less UHI. (UHI results from disruptions in the boundary layer caused by building height.) In places where the city is built in forests the biggest factor is deforestation, not the actual population density.

On population growth I compared sites that had no growth with sites that had high growth. For the period 1900 to 1940 I compared two sets of stations. Rural sites with no growth ( less than 15 people per sq km from start to finish) with those that went from rural to urban ( from 15 people per sq km to over 90 per sq km) The results did not indicate any significant UHI effect.

So you didn’t consider then that 1:15 is an order of magnitude and 15:150 is an order of magnitude too? Or whether the population density was uniform or bunched? Focusing on small population density areas at all is a risk factor. So your results as summarized here are not convincing, but I encourage you to reply with a link giving fuller access to your data and methods.

For instance, it would be better to take pairs of geographically similar cities of similar area where the population density around the station is ‘city-like’ i.e., approximately uniformly dense and well documented.

Roy Spencer did just this, using one of the few robust datasets, and reproduced the old 1970s result. A critical element of this is that the ISH data comes mostly from airports. So the equipment is professionally maintained, the data is sampled hourly, or every three hours on the hour (no Tobs adjustment required), the sitings are consistent and in flat areas (little concern about station histories), and the population density numbers are less noisy.

See for reference:

Could you frame your results in context?

Re: Jon (Dec 28 15:44),

Yes, I’m aware of Roy’s work. I found it unconvincing, in part because of the bad location information in the core dataset and the lack of QC on that data.

We’ve exchanged some mail on a different way of characterizing the urban landscape. I’ll wait to see what he does with the info I passed on.

If you would like to specify a hypothesis I can surely test it. Growth from 1 person to 15? How about 0 to 15? Or 0 to 100?

“For instance, it would be better to take pairs of geographically similar cities of similar area where the population density around the station is ‘city-like’ i.e., approximately uniformly dense and well documented.”

The above does not cut it as a description of a procedure.

1. Geographically similar? Are you talking about topography or ground cover surrounding the site? Define geographically similar.

2. Population density “around” the site? What does that mean? Some rural sites have no populated areas for 10s of kilometers. UHI effects are thought to extend at most 20km (for precipitation), so persistent wind direction would matter.

3. Uniformly dense? Again, density doesn’t matter as much as building height and canopy cover in the city. See for example the Portland study.

4. You also (Oke 2002) want to know about the water use around the site. That’s harder to get but there are global maps of irrigation.

With suitable definitions such a test would be possible.

The paired approach ( cce was right about this) has more chance of showing the effect. I am aware of an extensive study that uses a paired approach in a very slick way, but you’ll have to wait to hear more about that. sorry.

The base code to do anything you want is on my site. If you come up with an interesting test, I’ll code it up, or you can do it.

Being impatient, I went to your website and attempted to download your releases. Your drop.io links do not work. The service has been discontinued. Regardless, what I was aiming to extract from *your* release is an X-Y scatter plot of trend in temperature vs trend in population.

No rural/urban pre-binning please!

Jon,

If all you’re looking for is that X-Y scatterplot of log trend of population versus temperature trend, I ran some similar tests:

with various datasets. However, I think in general income tends to be a better proxy than population:

Jon —

My last comment has been stuck in moderation for a while, probably because of too many links. However, if you’re looking for an X-Y scatterplot of log trend of population versus temperature trend, I ran a few tests here:

Various pingbacks at that location should also help you find posts that use other datasets for population. Overall, I think income tends to be a better proxy than population…see some of my latest posts.

Troya:

Thanks for that. I think I have the code now–now I’m just waiting for some R dependencies to recompile.

Plotting the difference in trends vs. the difference in population trend for station pairs is an exciting step.

I’m still hoping to take one step back through and plot for each station individually, the trend in temperature versus the growth-rate of population, and work with the statistics from there.

I’m expecting the residual of a line-fit to that to be large, but I must admit I’m not comfortable planning further until I see how bad it is. It may well be necessary to work out a better proxy for predicting UHI–as others have mentioned here.
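A rough Python sketch of that per-station plot and line fit follows. Everything in it is hypothetical: the helper names, the three synthetic stations, and the built-in 0.73 log10 dependence, which is put into the fake data only so that the fit has a known answer. Real station data would of course leave a non-zero residual.

```python
import math


def ols(x, y):
    """Return (slope, intercept) of the least-squares line y = slope * x + intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def station_point(years, temps, pops):
    """One scatter point: (trend of log10 population per yr, temperature trend per yr)."""
    temp_trend, _ = ols(years, temps)
    growth_rate, _ = ols(years, [math.log10(p) for p in pops])
    return growth_rate, temp_trend


def fit_across_stations(points):
    """Fit temperature trend against growth rate; return slope, intercept, RMS residual."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    slope, intercept = ols(xs, ys)
    resid = [y - (slope * x + intercept) for x, y in zip(xs, ys)]
    rms = math.sqrt(sum(r * r for r in resid) / len(resid))
    return slope, intercept, rms


# Synthetic stations (assumption): a 0.005 C/yr background trend plus an
# exact 0.73 * log10(pop) UHI term, with three different growth rates.
years = list(range(1950, 2001, 10))
stations = []
for g in (0.0, 0.01, 0.03):
    pops = [1000.0 * (1.0 + g) ** (y - 1950) for y in years]
    temps = [10.0 + 0.005 * (y - 1950) + 0.73 * math.log10(p)
             for y, p in zip(years, pops)]
    stations.append(station_point(years, temps, pops))

slope, intercept, rms = fit_across_stations(stations)
print(round(slope, 3), round(intercept, 4), round(rms, 6))
```

In this framing the fitted slope plays the role of the log-population coefficient and the intercept is the trend of a station with no growth; the RMS residual is the number that would show how bad the proxy is.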

Re: Jon (Dec 28 22:45),

DO you mean trend in population density?

Steve asks, “DO you mean trend in population density?”

No for a couple of reasons. First, trend implies a linear-fit model. I don’t think the slope of density directly is the right independent variable; based on prior findings of a log dependence by Oke and Spencer, my first guess is that growth-rate is the more relevant proxy as that would correspond to the trend of the logarithm.

Second, past work in this area indicates the horizon of the UHI effect is on the order of 10km. That means that not just the local density matters but a radius-dependent weighted integral of the density would be more relevant.

But I also prefer a simple model to begin the investigation. So, I’d start with a constant weighting, i.e., population in that 10km area and compute the growth-rate rather than use the population density of the 1sqkm grid cell of the station. Note: this is not the same as using 10km grid cells!
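The constant-weighting idea might be sketched in Python as below. This is only one possible reading: it assumes the gridded population arrives as (lat, lon, population) tuples for cell centres, uses a plain haversine distance, and the station and cell values are invented for the example.

```python
import math

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two lat/lon points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def population_within(station, cells, radius_km=10.0):
    """Sum the population of cells whose centres lie within radius_km (constant weight)."""
    lat0, lon0 = station
    return sum(pop for lat, lon, pop in cells
               if haversine_km(lat0, lon0, lat, lon) <= radius_km)


def annual_growth_rate(pop_start, pop_end, years):
    """Compound annual growth rate between two population totals."""
    return (pop_end / pop_start) ** (1.0 / years) - 1.0


# Hypothetical station with three hypothetical 1 sq km cells around it.
station = (45.0, -93.0)
cells = [
    (45.00, -93.0, 15),      # the station's own cell
    (45.05, -93.0, 2000),    # a town roughly 5.6 km north
    (45.50, -93.0, 50000),   # a city roughly 56 km north, outside the radius
]
print(population_within(station, cells))
```

The station's own cell reports 15 people while the 10km total is dominated by the nearby town, which is the distinction from using the single grid cell.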

Of course you are correct that population is a mere proxy for the UHI effect.

You asked to understand better what it means for a pair of cities to be geographically similar.

Steve, what we are looking for are topographical not micro-site similarities. Mountain features, hills, valleys, bodies of water and surrounding land use. Conversely, Micro-site (ground cover) issues impose constant offsets to stations. They don’t affect the trend, although changing micro-site conditions could have an impact–I’m open to that issue, but I don’t think the metadata exists at present to analyze it.

You also ask a series of further questions. My concern, that you missed, is about homogeneity and noise in your proxies for UHI. Consider just a 1sqkm area. Having 15 people living in a village in one corner and the weather station in the other is different from a 1sqkm area where 15 people live in an industrial complex with a weather station in the middle or on the edge.

Focusing attention on finding rural sites is problematic. There are significant concerns about the precision of the metadata. Urban areas pose different issues–particularly with station moves but have less noisy metadata for details such as population.

You seem interested in a rural/urban binning methodology. An indicator variable (however derived) is not the right approach at this stage. The results are too skewed by the bin selection and number of stations in a well defined bin is likely to be small–this gets back to the metadata problem.

The phenomenon we’re looking for is a continuous, monotonic effect–although not necessarily linear. The place to start our analysis is, as I mentioned already, simple X-Y scatter plots of trend vs some proxy for UHI. You can look at model fitting later, first you need to get a basic feel for the data and the proxy. I suggest plotting trend versus population growth-rate as a starter. We can work on more sophisticated proxies later.

As I mentioned, I looked at your site but found lots of broken drop.io links. Please direct me to a current version of your work, and we can start discussing how to pose the investigation of this sort of topic.

Just to add some complicating factors, changes in the types of plants change the amount of respiration from vegetation. Having lived in a hot, dry location, the lawn grasses were first conventional high respiration grasses, but as development increased the water costs went up, with occasional watering bans. Almost everyone switched to low water use, drought tolerant grasses and landscaping. The net result would be that the plant respiration (a cooling factor) in the city grew until it was limited by the water supply, at which point it roughly flattened out.

There is a similar issue with irrigation over time. Different crops require different amounts of water and have different respiration rates. More importantly, irrigation methods have changed to decrease the amount of water which evaporates before it reaches the ground. This doesn’t matter as much if you’re just looking at number of acres under irrigation, but irrigation water usage per se is a little more complicated to understand.

Re: Jon (Dec 29 15:38), “Steve, what we are looking for are topographical not micro-site similarities. Mountain features, hills, valleys, bodies of water and surrounding land use. Conversely, Micro-site (ground cover) issues impose constant offsets to stations. They don’t affect the trend, although changing micro-site conditions could have an impact–I’m open to that issue, but I don’t think the metadata exists at present to analyze it.”

You still have not defined what you mean. What constitutes a mountain? a hill? a valley.

I can of course go get DEM data down to 30 meters (64000 files), but if I define a mountain as one thing and do the work, and then you say “oh no, I meant X by mountain”, then I end up trying to read your mind. So, define what you mean. After all, I will have to program something and write is.valley <- function(latlon, height) { ... } and then define with numbers what constitutes a valley. A dip of 30 meters? In all directions? 50 meters?
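To show what pinning the definition down to numbers might look like, here is a hypothetical Python sketch (not the R function referred to above). The function name, the 30 m default dip, the "three quarters of the surrounding samples" rule and the elevations are all illustrative assumptions; the point is only that each one has to be stated explicitly.

```python
def is_valley(center_elev_m, neighbor_elevs_m, min_dip_m=30.0, min_fraction=0.75):
    """Call a site a 'valley' if it sits at least min_dip_m below at least
    min_fraction of the surrounding elevation samples.

    Both thresholds are illustrative assumptions, not an accepted definition.
    """
    if not neighbor_elevs_m:
        raise ValueError("need at least one neighbouring elevation sample")
    higher = sum(1 for e in neighbor_elevs_m if e - center_elev_m >= min_dip_m)
    return higher / len(neighbor_elevs_m) >= min_fraction


# Hypothetical DEM samples (metres) in eight directions around a 200 m site.
ring = [240, 250, 235, 260, 231, 245, 238, 205]

print(is_valley(200, ring))                   # 30 m rule: 7 of 8 samples qualify
print(is_valley(200, ring, min_dip_m=50.0))   # 50 m rule: only 2 of 8 qualify
```

The same site is a valley under one threshold and not under the other, which is why the choice needs to be made before the work is done.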

"You also ask a series of further questions. My concern, that you missed, is about homogeneity and noise in your proxies for UHI. Consider just a 1sqkm area. Having 15 people living in a village in one corner and the weather station in the other is different from a 1sqkm area where 15 people live in an industrial complex with a weather station in the middle or on the edge."

1. You say it's different. On what empirical basis?

2. If it's an industrial complex, then specify ISA as well.

3. If it's industrial then specify nightlights as well.

4. Is 1 sqkm too big? Then how about 500 meters?

But we might be able to test your assumption that 15 people in a village is different than 15 people by an industrial center. What would your hypothesis be? How different? What effect size do you anticipate? On what basis? In all cases?

"Focusing attention on finding rural sites is problematic. There are significant concerns about the precision of the metadata. "

Actually it's getting better, if you care to take the time to survey all 7000 sites in Google Earth. To question the precision of the metadata one actually has to look at it and base that on an empirical investigation. I'm well aware of the precision issues since I've been looking at them for 6 months, along with others. So which metadata are you concerned about?

"Urban areas pose different issues–particularly with station moves but have less noisy metadata for details such as population."

Actually not. The urban areas can have much noisier population data. This is due to the various ways they estimate population and whether you are talking about population at night or at mid day. I suspect you haven't examined the various ways population density is estimated.

"You seem interested in a rural/urban binning methodology. An indicator variable (however derived) is not the right approach at this stage. The results are too skewed by the bin selection and number of stations in a well defined bin is likely to be small–this gets back to the metadata problem."

Actually, the results are not skewed by all bin criteria. After you try a few dozen, you will see that.

You suggested a paired approach. So I think it's a fair question to ask you how you define the pairs.

"The phenomenon we’re looking for is a continuous, monotonic effect–although not necessarily linear. The place to start our analysis is, as I mentioned already, simple X-Y scatter plots of trend vs some proxy for UHI. You can look at model fitting later, first you need to get a basic feel for the data and the proxy. I suggest plotting trend versus population growth-rate as a starter. "

Done that. Plotted trend versus all proxies for UHI. Plotted trend versus combined proxies. Plotted trend versus various binning strategies. Done that and more. Nothing earth shattering.

"As I mentioned, I looked at your site but found lots of broken drop.io links. Please direct me to a current version of your work, and we can start discussing how to pose the investigation of this sort of topic."

The base code is in the drop box on the site (not drop.io anymore) but I'm refactoring the whole lot as an OOP project and then I will do a package with vignettes.

So, spend some time coming up with a testable hypothesis.

Absent some testable hypothesis with well defined parameters, my plan is to continue to refactor the code, cause I really want to learn the R OOP stuff.

Re: Verity Jones (Dec 26 20:46),

I have only now had time to browse these comments, and, as the Peter O’Neill referred to here, I would like to leave a short note now explaining that I will not be contributing any detailed comments here on this topic at this time. I have submitted a detailed “proposal for a comment” on Hansen et al (2010) to Reviews of Geophysics, which is an “invitation only” journal, and requested an invitation to submit a comment based on that proposal. I do not wish to prejudice the chance of acceptance of that proposed comment by commenting at such length that might be taken to constitute prior publication elsewhere.

Briefly, the proposed comment deals with these location errors and their possible impact on the Gistemp analysis, and in addition quantifies and/or graphs certain aspects of the analysis which in my opinion would be of interest to any reader of Hansen et al (2010) and which, again in my opinion, should not have been left vague or unquantified in that paper.

While avoiding commenting at length for the reason above, I will nevertheless try to add some brief comments such as the one I am about to add in response to tty’s comment of Dec 27, 2010 at 10:04 AM, where such a brief comment may be of interest.

reply (to the VOG that invaded MY rectangle ;)):

OK, Steve. It sounds like I was basically right (however we express it, positive or negative). That your post just notes the change avec suspicion.

Based on reading your post, it seems at least “possible” that the change could be innocent: either just letting the algorithm operate (i.e. no change in accounting policy, but new baselines of manuf facility every 12 months for new COGS calculations, that sorta thing), or that the change was justified and even published (and accountants fix previous errors all the time; weed through the 10K and look for some of the special adjustments and such).

Thank you for notifying me that “it changed”. I reserve judgment on Hansen, other than that.

P.s. I remember a huge amount of kerfuffle to get GISS code and then you never went anywhere with it. 🙂

Re: scientist (Dec 26 22:22), Huh

The work Steve did on the code was instrumental to my understanding of what was going on in several areas. It’s best you not try to revise history.

I hope that others spend some time on the unresolved issues here, I can’t right now.

I wonder if there is adjusted data being adjusted again and again?

I also hope somebody figures out what the global temperature trend is using unadjusted raw data from just rural stations.

The reconstructions without adjustments don’t show much difference in the overall global trend.

Recent satellite UHI studies have raised the question of whether the UHI adjustments are an order of magnitude too low, i.e. the UHI effect for Providence, Rhode Island during the summer was measured by satellite to be 12 degrees C.

http://www.nasa.gov/topics/earth/features/heat-island-sprawl.html

The 12 Deg UHI noted here is surface temperature not air temperature, so it will influence how warm it feels to be there, but not how much bias the thermometers show.

Also, never forget to remember to mention the anomalization process, of looking only at trend differences after subtraction of ‘constant’ offset from baseline.

Although it seems implicitly obvious that UHI would not be a constant over the period of the trend analysis, I think it is worthwhile to routinely include this aspect in your comments.

Otherwise, perfectly reasonable insights can be categorically denigrated with a claim that ‘you don’t even understand anomalization’.

This is a general reminder for all;

Quite ironic really, that I would write this in response to Dr Loehle, a guy who wrote a book about being a scientist…

Best,

RR

RuhRoh: if you are addressing me, I don’t see your point. I was making a very technical point about the satellite measurements being surface temp, not air temp. Not sure how the anomalization process affects the UHI trend. If a city was a city in 1850 and didn’t grow, the UHI trend will be zero even though it is hotter than rural, and the anomalization process will properly account for that. The data teams like GISS and CRU seem intent on proving there is no UHI trend in growing cities rather than properly accounting for a trend. Too much hassle for them or too inconvenient, your pick, to do it right.
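A minimal sketch of the anomalization point, using made-up numbers rather than any station's data: the rural trend, the 2 deg C constant offset, the growing offset and the 1951-1980 baseline below are all assumptions chosen for illustration, and the helper names `anomalies` and `trend_per_decade` are hypothetical. It is not GISS or CRU code. It only shows that a constant UHI offset drops out once a baseline mean is subtracted, while an offset that grows over the record survives as a spurious trend.

```python
def anomalies(temps, years, base_start, base_end):
    """Subtract the baseline-period mean from every value in the series."""
    base = [t for t, y in zip(temps, years) if base_start <= y <= base_end]
    base_mean = sum(base) / len(base)
    return [t - base_mean for t in temps]


def trend_per_decade(series, years):
    """Ordinary least-squares slope, expressed in deg C per decade."""
    n = len(years)
    ybar = sum(years) / n
    sbar = sum(series) / n
    num = sum((y - ybar) * (s - sbar) for y, s in zip(years, series))
    den = sum((y - ybar) ** 2 for y in years)
    return 10.0 * num / den


years = list(range(1900, 2011))

# Hypothetical rural series: 0.05 deg C/decade background trend, no noise.
rural = [10.0 + 0.005 * (y - 1900) for y in years]
# City that was already a city in 1900: constant +2 deg C UHI offset.
static_city = [t + 2.0 for t in rural]
# Growing city: UHI offset rising linearly from 0 to 1.1 deg C.
growing_city = [t + 0.01 * (y - 1900) for t, y in zip(rural, years)]

rural_anom = anomalies(rural, years, 1951, 1980)
static_anom = anomalies(static_city, years, 1951, 1980)
growing_anom = anomalies(growing_city, years, 1951, 1980)

# Trend of (city anomaly minus rural anomaly) is the UHI contribution.
static_bias = trend_per_decade(
    [c - r for c, r in zip(static_anom, rural_anom)], years)
growing_bias = trend_per_decade(
    [c - r for c, r in zip(growing_anom, rural_anom)], years)

print(f"static-city UHI trend bias:  {static_bias:.3f} deg C/decade")
print(f"growing-city UHI trend bias: {growing_bias:.3f} deg C/decade")
```

By construction the static city contributes essentially zero trend bias and the growing city contributes about 0.1 deg C per decade, which is the distinction being drawn: it is the change in UHI over the record that matters, not its absolute size.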

Not so sure about that. Energy use would certainly have increased over time. Ultimately that energy will end up as heat added to the local environment.

“Energy use would certainly have increased over time”

OTOH insulation improved over time too. In many cities energy sources changed from coal and wood -lots of heat up the chimney- to a lot of electricity – not so much heat up the chimney.

However, the AC units, etc crank out a lot of heat to the outside in environmentally controlled buildings

Unless you assume air is never heated or cooled by the earth’s surface (a very bad assumption), air temperatures a few feet off the ground would inevitably be influenced by surface temperatures.

The nature, method, purpose, effect, documentation and archiving of these adjustments is surely a good topic for investigation by the new committee of the US House of Representatives, which it is understood will be established early in the new year.

The idea of establishing engineering standards for changes in temperature records should be part of this investigation.

It would be a great step forward if proponents of the various theories of climate could actually have a firm basis for knowledge of what the temperature is now and how it has varied in the past, at least since regular instrumental records commenced.

And what is also required is a standing team in the form of an official commission dedicated to auditing the temperature data to ensure that the (hopefully soon to be) established standards are followed to the letter.

The team should consist of a small group of engineers, statisticians and forensic accountants, whose members rotate, with a percentage retiring at regular periods.

AusieDan,

Then write to the new Science & Technology Committee like I did, stressing the need for a clean slate overhaul.

Don’t forget to cc the NSP 🙂

Scientist

As a scientist, if you carried out work 10 years ago, at the taxpayers’ expense, and made predictions based on your work, would it be ethical to change your predictions to match the real outcomes?

Not even pharmaceuticals can get away with that.

So we should only accept a geocentric universe as this is what “scientists” first proposed. Or perhaps scientists should be true sceptics and change their views on research and analysis?

snip – OT

Not at all.

However, surely the amount of rewriting in the US record since 2007 is worthy of comment.

My comments can’t seem to keep clear of the moderation queue. Is it because I link to the prior comments to the post that I link? I do so because the threading procedure can distance neighboring comments from one another, as a thread develops.

Ruhroh @ 12:42

Would you post the links to Palmer’s slides, I couldn’t locate them. Thanks

Mr Mosher brought these to our attention, at;

The link there is to the Palmer talk at AGU.

http://www.agu.org/meetings/fm10/lectures/lecture_videos/A42A.shtml

Mosher later gave this link, a collection of dozens of talks;

http://www.newton.ac.uk/programmes/CLP/seminars/index.html

It seems that Palmer is advocating stochastic methodologies, and is highlighting weaknesses in deterministic modeling to make his sale.

RR

“Another homemade statistical method invented by climate scientists introduced without peer review in the statistical literature.”

Nicely put.

From the referenced Hansen document:

[13] The GISS temperature analysis has been available for many years on the GISS Web site (http://www.giss.nasa.gov), including maps, graphs, and tables of the results.

The analysis is updated monthly using several data sets compiled by other groups from measurements at meteorological stations and satellite measurements of ocean surface temperature. Ocean data in the presatellite era are based on measurements by ships and buoys. The computer program that integrates these data sets into a global analysis is freely available on the GISS Web site.

DATA and CODE seems to be available?

McIntyre you say:

My guess is that it will be very hard to construct circumstances under which UHI will matter after data has been Menne-transformed. And that tests of the various night lights scenario on data after it has been Menne-transformed will not tell you very much. This is just a surmise as I haven’t waded through Menne code. (I requested it a number of years ago, but was unsuccessful until 2009.) It’s too bad that the Menne adjustment methodology wasn’t published in statistical literature where its properties might have been analysed by now. It’s a worthwhile topic still.

Guess????

Cannot be bothered to look at the code for over a year????

This is auditing???

If the Menne adjustment were that important should you or your acolytes not have checked it?

The CRU and GISS code has been replicated by others, with no gross errors found (some errors have been corrected – see my link in the comment posted Dec 26, 2010 at 1:52)

Steve: Digging into the Menne code is a large project and I have other interests. It is not my personal responsibility to verify the work of every climate scientist in the world.

The issue with CRU is their handling (or ignoring) of UHI. I have never suggested that even CRU’s code was anything other than a trivial averaging or that people should expect that even CRU screwed up such a simple exercise. Quite the contrary. I suggested that CRU’s secrecy was because they were embarrassed to show that they did no due diligence on station histories.

So Ford,

why didn’t you do it FOR him since you seem all fired up about it?? Why didn’t Tamino or any of the guys at RC do a decent job of analyzing it for us to take away one more of our continuing whines about the oh so pristine and perfect GISS??

How about Science of Doom?? Why hasn’t HE done an analysis of it for us?? How many of those technical types are there that are continuously sniping at Steve who are capable of doing the analysis and haven’t done it?? Or, maybe only a couple of them really ARE able to do it and they don’t WANT it done cause all it will do is open up the can of worms about justification for parameter selections and other issues like Steig’s Antarctic paper??

Come on Ford, get to work getting out that analysis for us so we can find problems with it!!!

The code is published – is it not your “turn” to provide the critique to be pulled apart by the other side? Surely this is the province of an auditor (which I am not).

Volunteer work is well, voluntary.

Mcintyre

You say – Digging into the Menne code is a large project and I have other interests. It is not my personal responsibility to verify the work of every climate scientist in the world.

You therefore have no right to “surmise” and “guess” that it is wrong unless you have the evidence.

I find it strange that you made so much noise about lack of data and “code”, whipping your followers into frothy mouth frenzy, and then when it becomes available you (and they) totally ignore it. Why?

Mcintyre

You say – I suggested that CRU’s secrecy

What is the point of this woolly statement other than to pour yet more scorn on UEA?

Check EM Smith’s site, I’m sure you know where it is. Post your questions there. He’s done a pretty thorough deconstruction.

Oh, and there’s something dribbling down your chin.

No right to surmise and guess? Really? When someone has looked into these issues for a long time and notices a big change, they have a reason for the guess. For example, many analyses at CA have shown that the homogenizations tend to adjust good stations using bad stations, especially in the ROW but even in the USA. So it is a pretty good guess. It is a “heads up” if anyone has the time to investigate. Anyone.

Every investigation into what and how begins with a surmise.

It is ALWAYS perfectly valid to wonder aloud “I wonder if it could be this?”

It’s what comes after that which matters.

Every investigation into what and how begins with a surmise.

It is ALWAYS perfectly valid to wonder aloud “I wonder if it could be this?”

Absolutely, but “I wonder if it could be this?” is not a surmise or guess. “I surmise it’s this” is very different than “I wonder if…” A surmise or guess is not a wondering or an hypothesis. It’s an inference without evidence.

I’d rephrase this just a little: What is the point of this woolly statement other than to pour yet more well-deserved scorn on UEA.

Re: thefordprefect (Dec 26 21:47), interesting, isn’t it, the difference in attitude to this and to Ross’ efforts. For Ross’ efforts, even the hint that there may be a mistake means he’s WRONG unless he shows otherwise, when he’s still WRONG – guess after guess until he grows tired of defending, at which time he’s been “proven” wrong. For The Team though, it’s RIGHT until you can not only PROVE IT, but show that “it matters” to the result, by which time they have “moved on” and “one mistake” doesn’t “matter” because there are so many other papers “proving” the result.

Yeah, I know it sounds like sour grapes or a conspiracy theory or something – yet I would urge you (and/or anyone else) to review both issues dispassionately and show me where the above is wrong.

TFP: Please note that the quote is misleading. Sure, there is a trivial inclusion of data from sats. It’s blended and merged into the small amount of data from ships, buoys, etc. that are all concocted together into the UEA CRU SST data set. By the CRU crew. THAT can OPTIONALLY be added to the GISS “land only” analysis as a last step.

The quote makes it sound like they go from first sources to make their own analysis of SSTs. They don’t. It’s just a CRU “glue on”.

So all the “Harry Readme” and Climategate “issues” adhere to the GIStemp SSTs.

Not true. Neither CRU nor ‘Harry’ does anything with SSTs. The HadSST products come from the UK Met Office, and in any case GISS uses the Reynolds (NOAA) satellite product for modern SSTs.

The problems with H2010 also run in other directions.

1. H2010 claims that station locations are accurate up to approx. 1 km. This is demonstrably false. Several corrections have been sent to GISS; we will see if they decide to publish corrections.

2. The nightlights file Hansen uses has been deprecated by the PI of the nightlights program. In their words to me, they hope no one would use it for analysis. It’s been replaced by another file. I trust they have alerted Hansen to this as I requested. We will see.

On Menne’s code: I’ve had it for some time but haven’t looked into it yet. Lots more to do before that.

Mr. Mosher;

Are you able to convey ‘your’ copy of the ‘Menne’ code to interested analysts?

After following some of your AGU links, I see you as being busy with bigger picture items than untangling of spaghetti-balls…

What are the constraints under which you obtained it?

TIA

RR

Hi RuhRoh, the code for the ‘automated pairwise bias adjustment software’ (Menne and Williams 2009), used in the U.S. HCN version 2 monthly temperature dataset, is available at ftp://ftp.ncdc.noaa.gov/pub/data/ushcn/v2/monthly/software

A Unix-type platform with the Fortran77 compiler is required to run the code. Virtualised Unix platforms are available for free download (eg. VirtualBox).

I document my experience running the PHA, if that’s what you’re referring to with Menne’s code:

I ran into a few minor difficulties compiling and running, but posted the resolutions for those. I have not been able to reproduce the official F52 dataset yet, since I need to do more digging into the settings used.

Troyca;

Nice of you to kick the tyres on that software.

What are your impressions regarding clarity and portability of the coding of the PHA algorithm? Do you see potential sensitivity to the compiler version?