In the 1980s, John Christy and Roy Spencer revolutionized the measurement of temperature data through satellite measurement of oxygen radiance in the atmosphere. This accomplishment sidestepped the intractable problems of creating (what I’ll call) a “temperature reconstruction” from surface data known to be systematically contaminated (in unknown amounts) by urbanization, land use changes, station location changes, measurement changes, station discontinuities, etc.

Also in the 1980s, Phil Jones and Jim Hansen created land temperature indices from surface data, indices that attracted widespread interest in the IPCC period. The source data for their indices came predominantly from the GHCN station data archive maintained by NOAA (which added its own index to the mix). The BEST temperature index is in this tradition, though its methodology differs somewhat from the simpler CRU methods: BEST calculates its index on “sliced” segments of longer station records (see CA here) and weights series according to “reliability”, the properties of which are poorly understood (see Jeff Id here).

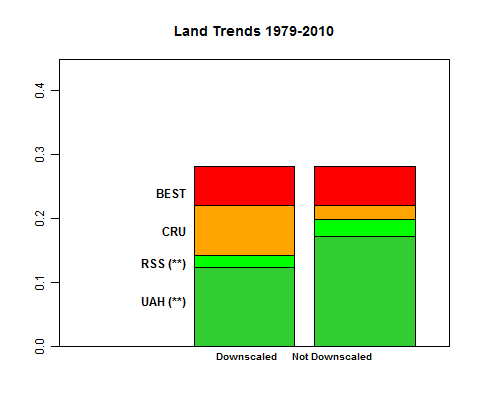

Figure 1 compares trends in the satellite period up to March 2010, the last usable date in the BEST series. (SH values in Apr 2010 come only from Antarctica and introduce a spurious low in the BEST monthly data.) Take a look; comments follow.

Figure 1. Barplot showing trends in the satellite period. (deg C/decade 1979-Mar 2010.) Left – “downscaled” to surface; right – not downscaled to surface.
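The bars in Figure 1 are linear trends in deg C/decade over January 1979 to March 2010. The fitting method is not spelled out here, so the following is only a minimal sketch assuming an ordinary least-squares fit to a gap-free monthly anomaly series; the function name `trend_per_decade` and the synthetic series are illustrative, not BEST, CRU, RSS or UAH code or data.

```python
import numpy as np

def trend_per_decade(anomalies, start_year=1979.0):
    """OLS slope of a monthly anomaly series, in deg C per decade.

    Assumes one value per month starting in January of start_year, with no
    gaps; each month is placed at its mid-point in decimal years.
    """
    y = np.asarray(anomalies, dtype=float)
    t = start_year + (np.arange(y.size) + 0.5) / 12.0
    slope_per_year = np.polyfit(t, y, 1)[0]
    return 10.0 * slope_per_year

# Synthetic check: Jan 1979 to Mar 2010 inclusive is 31 * 12 + 3 = 375 months.
rng = np.random.default_rng(0)
n = 31 * 12 + 3
t = 1979.0 + (np.arange(n) + 0.5) / 12.0
series = 0.02 * (t - 1979.0) + rng.normal(0.0, 0.1, n)  # 0.2 deg C/decade plus noise
print(round(trend_per_decade(series), 2))
```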

The BEST and CRU series run hotter than TLT satellite data (GLB Land series from RSS and UAH considered here), with the difference exacerbated when the observed satellite trends are “downscaled” to surface using the amplification factor of approximately 1.4 (which underpins the “great red spot” observed in model diagrams). An amplification factor is common ground to both Lindzen and (say) Gavin Schmidt, who agree that tropospheric trends are necessarily higher than surface trends simply through properties of the moist adiabat. In the left barplot, I’ve divided the satellite trends by 1.4 to obtain “downscaled” surface trends. In a comment below, Gavin Schmidt observes that an amplification factor is not a property of lapse rates over land. In the right barplot, I’ve accordingly shown the same information without “downscaling” (adding this to the barplot in yesterday’s post). (Note Nov 2, 2011: I’ve edited the commentary to incorporate this amendment and have placed prior commentary in this section in the comments below.)

The UAH trend over land is 0.173 deg C/decade (0.124 deg C/decade downscaled) and the RSS trend is 0.198 deg C/decade (0.142 deg C/decade downscaled). I will examine this interesting property of the Great Red Spot on another occasion but, for the purposes of this post, defer to Gavin Schmidt’s information on its properties.
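The downscaling is a single division. A minimal sketch, assuming the 1.4 factor and the two land trends quoted above; with these rounded inputs RSS comes out at about 0.141 rather than 0.142, presumably because the underlying RSS trend was not exactly 0.198.

```python
# Amplification factor (~1.4) and TLT land trends (deg C/decade, 1979 to
# Mar 2010) as quoted in the text.
AMPLIFICATION = 1.4
tlt_land_trends = {"UAH": 0.173, "RSS": 0.198}

def downscale(tlt_trend, factor=AMPLIFICATION):
    """Implied surface trend: the lower-troposphere trend divided by the
    amplification factor."""
    return tlt_trend / factor

for name, trend in tlt_land_trends.items():
    print(f"{name}: TLT {trend:.3f} -> surface {downscale(trend):.3f} deg C/decade")
```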

The simple barplot in Figure 1 clearly shows which controversies are real and which are straw men.

Christy and Spencer are two of the most prominent skeptics. Yet they are also authors of widely accepted satellite data showing warming in the past 30 years. To my knowledge, even the most adamant skydragon defers to the satellite data. BEST’s attempt to claim the territory up to and including satellite trends as unoccupied or contested Terra Nova is very misleading, since this part of the territory was already occupied by skeptics and warmists alike.

The territory in dispute (post-1979) is the farther reaches of the trend data – the difference between the satellite record and CRU, and then between CRU and the outer limits of BEST.

BEST versus CRU

On this basis, BEST (0.282 deg C/decade) runs about 0.06 deg C/decade hotter than CRU (0.22 deg C/decade). My surmise, based on my post of Oct 31, 2011, is that this results from the combined effects of slicing and “reliability” reweighting; the precise proportion is hard to assign at this point and is not relevant for present purposes.

Commenter Robert observed that CRU now runs cooler than NOAA or GISS. Over the corresponding 1979-2010 period, NOAA, also using GHCN data, has a virtually identical trend to BEST (0.283 deg C/decade). It turns out that NOAA changed its methodology earlier this year from one that was somewhat similar to CRU’s to one that uses Mennian sliced data. (I have thus far been unable to locate online information on previous NOAA versions.)

This indicates that the difference between BEST (and NOAA) versus CRU is probably more due to slicing than to reweighting.

CRU vs Satellites

CRU runs about 0.03-0.05 deg C/decade warmer than TLT satellite trends over land and about 0.08-0.10 deg C/decade warmer than downscaled satellite data in the 1979-2010 period.
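The gaps discussed in these two sections are simple differences of trends. A minimal sketch using only the rounded trends quoted in the text; with these inputs the raw CRU minus RSS gap comes out near 0.02, slightly below the stated range, presumably because the CRU figure of 0.22 is itself rounded (the unrounded values behind Figure 1 are not given here).

```python
CRU = 0.22      # CRU land trend, deg C/decade (as quoted)
BEST = 0.282    # BEST land trend, deg C/decade (as quoted)
AMPLIFICATION = 1.4
satellite = {"UAH": 0.173, "RSS": 0.198}  # TLT land trends, deg C/decade

print(f"BEST - CRU: {BEST - CRU:+.3f} deg C/decade")
for name, tlt in satellite.items():
    raw_gap = CRU - tlt                          # vs TLT as observed
    downscaled_gap = CRU - tlt / AMPLIFICATION   # vs TLT divided by 1.4
    print(f"CRU - {name}: {raw_gap:+.3f} raw, {downscaled_gap:+.3f} vs downscaled")
```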

Could this amount of increase be accounted for by urbanization and/or surface station quality problems?

In my opinion, this is entirely within the range of possibility. (This is not the same statement as saying that the difference has been proven to be due to these factors. In my opinion, no one has done a satisfactory reconciliation.) From time to time, I’ve made comparisons between “more urban” and “more rural” sites in relatively controlled situations (e.g. Hawaii, around Tucson following a predecessor local survey) and when I do the comparisons, I find noticeable differences of this order of magnitude. I’ve also done comparisons of good and bad stations from Anthony’s data set and again observe differences that would contribute to this order of magnitude. But this is not the same thing as proving the opposite.

In the past, I’ve been wary of “unsupervised” comparisons of supposedly “urban” and supposedly “rural” subpopulations in papers by Jones, Peterson, Parker and others purporting to prove that UHI doesn’t “matter”. Such papers set up two populations, one “urban” and one “rural”, purport to show that the trends for each population are similar, and claim that this “shows” that UHI is a non-factor in trends. In my examination, each of these papers has tended to founder on similar points. All too often, the two populations are very poorly stratified, with the “rural” population containing cities, sometimes even rather large ones.

The BEST urbanization paper is entirely in the tradition of prior studies by Jones, Peterson, Karl etc. They purport to identify a “very rural” population by MODIS information and show that they “get” the same answer. Unfortunately, BEST have not lived up to their commitment to transparency in this paper. Code is not available. Worse, even the classification of sites between very rural and very urban is not archived: the link in the pdf of the paper disconcertingly leads to a warning that it is unavailable (making it appear as though no one even read the final preprint before placing it online). Mosher has noted inaccuracies in their location data and observes that there are perils for inexperienced users of MODIS data. Until he has had an opportunity to examine the still-unavailable nuts and bolts of the paper, Mosher is reserving his opinion on whether its lead author, a grad student, managed to avoid these pitfalls.

Mosher, who’s studied MODIS classification of station data as carefully as anyone, observes that there are no truly “rural” (in a USHCN target sense) locations in South America: all stations come from environments that are settled to a greater or lesser degree. Under Oke’s original UHI concept, the cumulative UHI effect was, as a rule of thumb, proportional to log(population). If “urbanization” is occurring in towns and villages as well as in large cities, as it is, then the contribution of UHI increase to temperature increase will depend on the percentage change in population rather than on absolute population. If proportional increases are the same, then the rate of temperature increase will be the same in towns and villages as in cities.
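Under a log(population) rule of thumb, the UHI change depends only on the ratio of end to start population. A minimal sketch of that arithmetic; the scaling constant `k` is a hypothetical placeholder (its value, and the choice of natural versus base-10 logarithm, only rescale the result), and the populations are illustrative.

```python
import math

def uhi_increment(pop_start, pop_end, k=1.0):
    """Change in UHI implied by a rule of thumb UHI = k * log(population).

    k is a hypothetical scaling constant chosen for illustration only.
    """
    return k * (math.log(pop_end) - math.log(pop_start))

# A village growing from 500 to 1,000 and a city growing from 500,000 to
# 1,000,000 both double, so the implied UHI increase is the same.
village = uhi_increment(500, 1_000)
city = uhi_increment(500_000, 1_000_000)
print(math.isclose(village, city))
```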

If one takes the view that satellite trends provide our most accurate present knowledge of surface trends, then one has to conclude that the BEST methodological innovations (praised by realclimate) actually provide a worse estimate of surface trends than even CRU.

In my opinion, it is highly legitimate (at least as a null hypothesis) to place greatest weight on satellite data and presume that the higher trends in CRU and BEST arise from combinations of urbanization, changed land use, station quality, Mennian methodology etc.

It seems to me that there is a high onus on anyone arguing in favor of a temperature reconstruction from surface station data (be it CRU or BEST) to demonstrate why this data with all its known problems should be preferred to the satellite data. This is not done in the BEST articles.

“Temperature Reconstructions”

In discussions of proxy reconstructions, people sometimes ask: why does anyone care about proxy reconstructions in the modern period given the existence of the temperature record? The answer is that the modern period is used to calibrate the proxies. If the proxies don’t perform well in the modern period (e.g. the tree ring decline in the very large Briffa network), then the confidence, if any, that can be attached to reconstructions in pre-instrumental periods is reduced.

It seems to me that a very similar point can be made in respect to “temperature reconstructions” from somewhat flawed station records. Since 1979, we have satellite records of lower tropospheric temperatures over land that do not suffer from all the problems of surface stations. Yes, the satellite records have issues, but it seems to me that they are an order of magnitude more tractable than the surface station problems.

Continuing the analogy of proxy reconstructions, temperature reconstructions from surface station data in the satellite period (where we have benchmark data) should arguably be calibrated against satellite data. The calibration and reconstruction problem is not as difficult as trying to reconstruct past temperatures with tree rings, but neither is it trivial. And perhaps the difficulties encountered in one can shed a little light on those of the other.
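To make the proxy analogy concrete: in proxy work, calibration commonly means regressing the target on the proxy over the overlap period and applying the fitted coefficients outside it. A minimal sketch of that idea applied to a surface index, assuming a simple linear (OLS) calibration against a satellite-derived benchmark; this is not a procedure proposed in the post, and the function names and noise-free series are hypothetical.

```python
import numpy as np

def calibrate(surface, benchmark):
    """Fit benchmark ~ a + b * surface by least squares over the overlap period.

    Returns (a, b). A slope b below 1 means the surface series swings (and
    trends) more than the benchmark over the calibration period.
    """
    b, a = np.polyfit(np.asarray(surface, float), np.asarray(benchmark, float), 1)
    return a, b

def apply_calibration(surface, a, b):
    """Apply fixed calibration coefficients to any stretch of the surface
    series, including years before the benchmark begins."""
    return a + b * np.asarray(surface, float)

# Hypothetical noise-free annual series for 1979-2009: a surface index trending
# at 0.22 deg C/decade and a benchmark trending at 0.124 deg C/decade.
years = np.arange(1979, 2010)
surface = 0.1 + 0.022 * (years - 1979)
benchmark = 0.0124 * (years - 1979)

a, b = calibrate(surface, benchmark)
print(f"a = {a:.4f}, b = {b:.4f}")
```

Whether the benchmark should be the raw or the downscaled satellite series is the amplification question discussed above. With real, noisy anomalies, errors in the surface series would bias an OLS slope toward zero, so the fit would need more care than this sketch gives it.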

Viewed as a reconstruction problem, the divergence between the satellite data and the BEST temperature reconstruction from surface data certainly suggests some sort of calibration problem in the BEST methodology. (Or alternatively, BEST have to show why the satellite data is wrong.) Given the relatively poor scaling of the BEST series in the calibration period relative to satellite data, one would have to take care against a similar effect in the pre-satellite period. However, the size of the effect appears likely to have been smaller, since both temperature trends and world urbanization were lower in the pre-satellite period.

I have one great regret about BEST’s overall strategy. My own instinct is that the best way to improve the quality of temperature reconstructions from station data is to really focus on quality rather than quantity, following the practices of geophysicists who use data of uneven quality: start with the best data (according to objective standards) and work outwards, calibrating the next-best data on the best data.

They adopted the opposite strategy (a strategy equivalent to Mann’s proxy reconstructions): throw everything into the black box with no regard for quality and hope that the mess can be salvaged with software. Unfortunately, it seems to me that slicing the data actually makes the product (like NOAA’s) worse than CRU (using satellite data as a guide). It seems entirely reasonable to me that someone would attribute the difference between the higher BEST trend and satellite trends not to the accuracy of BEST with flawed data, but to known problems with surface stations and artifacts of Mennian methodology.

I don’t plan to spend much more time on it (due to other responsibilities).

[Nov 2 – there’s a good interview with Rich Muller here where Muller comes across as the straightforward person that I know. I might add that he did a really excellent and sane lecture (link) on policy implications a while ago that crosscuts most of the standard divides. ]

A Closing Editorial Comment

Finally, an editorial comment on attempts by commentators to frame BEST as a rebuttal of Climategate.

Climategate is about the Hockey Stick, not instrumental temperatures. CRUTEM is only mentioned a couple of times in passing in the Climategate emails. “Hide the decline” referred to a deception by the Team regarding a proxy reconstruction, not temperature.

In an early email, Briffa observed: “I believe that the recent warmth was probably matched about 1000 years ago.” Climategate is about Team efforts to suppress this thought, about Team efforts to promote undeserved certainty – a point clearly made in CRUTape Letters by Mosher and Fuller.

The new temperature calculations from Berkeley, whatever their merit or lack of merit, shed no light on the proxy reconstructions and do not rebut the misconduct evidenced in the Climategate emails.

[Nov 2. However, in fairness to the stated objectives of the BEST project, I should add the following.

Although I was frustrated by the co-mingling of CRUTEM and Climategate in public commentary (a misunderstanding disseminated by both Nature and Sarah Palin) and had never contested that a fairly simple average of GHCN data would yield something like CRUTEM, the fact remains that CRUTEM and Climategate have become co-mingled in much uninformed commentary.

In such circumstances, a verification by an independent third party (and BEST qualifies here) serves a very useful purpose, rather like business audits which, 99% of the time, confirm management accounts, but improve public understanding and confidence. To the extent that the co-mingling of Climategate and CRUTEM (regardless of whether it was done by Nature or Sarah Palin) has contributed to public misunderstanding of the temperature records, an independent look at these records is healthy, a point that I made in my first post and reiterate in this post. While CA readers generally understand and concede the warming in the Christy and Spencer satellite records, this is obviously not the case in elements of the wider society, and there is a useful function in ensuring that people can reach common understanding on as much as they can. ]

252 Comments

Steve,

Does ‘down-scaling’ the satellite data greatly reduce the trend? I can’t see any big difference between RSS and Hadley land-only trends.

http://www.woodfortrees.org/plot/best/from:1979/to:2009/offset:-0.2/trend/plot/crutem3vgl/from:1979/to:2009/trend/plot/rss-land/from:1979/to:2009/offset:0.26/trend/plot/uah-land/from:1979/to:2009/offset:0.4/trend

Steve: from ~0.17 to ~0.124 deg C/decade for UAH. I’ve edited to add this info.

Steve McIntyre,

Thanks for that clarification. But I thought that level of “downscaling” (1.4, based on models) was a lot less than certain (eg. the long running controversy over lack of a tropical tropospheric hot-spot), more applicable over ocean (high surface level humidity) than over land, and more applicable to the mid troposphere than the lower troposphere. I could be wrong about this, of course.

Beautiful!

And now, just the last little bit:

Somebody ought now to list all the cretins who prematurely celebrated BEST, for future reference …

Phi, you ought to provide more details. Who’s Schweingruber and where is the 1C/century CRU bias coming from?

Schweingruber : http://www.ncdc.noaa.gov/paleo/treering-wsl.html

a graph for instance there :

UAH NH Land (1979 – 2009) : http://vortex.nsstc.uah.edu/data/msu/t2lt/uahncdc.lt

CRUTEM3 NH http://www.metoffice.gov.uk/hadobs/crutem3/data/download.html.

Beautifully put. I don’t think I’ve ever seen my own opinions on the temperature records so clearly described before.

1) The only real purpose of thermometer records (whether land or sea) is to attempt to reconstruct temperatures in the pre-satellite era, and until we can resolve this divergence problem it is hard to have substantial confidence in current reconstructions.

2) Current methods of looking for UHI are flawed, as the trend is affected by urbanisation not urban-ness itself. The only comparison that makes sense is between pristine rural sites and others. (The divergence between land and sea is a strong hint, but not more than that.)

3) The situation pre-thermometers is obviously much worse.

Well then the onus is on you to do the following:

1. define what you mean by pristine.

2. provide some case studies that indicate the kinds of effects one can see in moving from “pristine” to non-pristine.

Much of the UHI argument gains traction from images of Tokyo and transects across Reno; huge UHI is a poster child of sorts (the skeptics’ version of an HS icon). However, I have found that as I offer up definitions of rural, many people move the goal posts. Now the goal post is being moved to pristine. Not even an outhouse in view. If there were as much research on the move from “pristine” to 3 sheds and a cow, and how much that influenced the record, then I would give more weight to the argument. As it stands, all the detailed work on UHI (for city planning) is focused on larger urban areas and the suburbs. Simply, there is little field research to give us an idea of the effects one sees in going from pristine to 3 sheds and a cow. I would not rule out an effect; there is simply not a wealth of research indicating the size of the effect.

Here is an idea. Take a look at the 200 or so CRN stations. If you classify those as Pristine, then it’s a small matter to find similar sites. Again, this work is long and hard and sometimes the programs run for 2 days. So, I’m not going to go in search of pristine unless someone defines what they mean.

One could of course say that no site is pristine and we can say nothing and are stuck with what Steve shows.

There is a difference in trend that can be associated with one of three options.

1. Models have issues with amplification

2. the satellite record is wrong

3. UHI is bounded by the discrepancy between the trends.

The pristine sites are the sites that do not have thermometers. Isn’t that a problem, Steve? Isn’t the onus on climate science to settle the argument over UHI effect, and haven’t they had sufficient time and resources to do it? Or at least to have made a better effort than their BEST effort? I believe that with a few bucks and a little help from a couple of grad students, you could do a significantly better job of it. No offense to you, but that is a sad commentary on the climate science.

That’s a rather assumptive definition. What you are effectively saying is that the very act of placing a thermometer alters the temperature in a measurable way. Kind of Heisenberg-like, except at a macro level. In fact, it looks to be almost untestable. There isn’t a simple onus in this matter. The UHI effect is real and has been measured many times under many conditions. But to my knowledge there is no evidence that the mere placing of a thermometer changes the temperature. That is what you are claiming. Now, we have physics that explains why pouring concrete changes the urban energy balance. We have physics that explains why building height is the principal driver, for example, of UHI. But I know of no empirical study which supports what you claim. So, since you imply that the mere placing of a thermometer changes the temperature, how do you support this claim? Code and data please. Conjecture in ink doesn’t cut it.

You took that very literally Steve. That was shorthand to point to the fact that the takers of temperature have not placed a lot of thermometers in areas that are not populated. And areas that are not populated would serve as a plausible surrogate for pristine, wouldn’t they?

As I recall 27% of GHCN stations are/were in cities of 50,000 or more. Maybe you can say what percentage of thermometers are placed in, or in close proximity to towns/cities of 5,000 or more? How about 2,000? And so on. How close to dividing populated areas from almost unpopulated areas can you get, Steve? I know that you can do a better job than BEST did, and they claim that they have this crap nailed with their negative UHI finding.

I wonder how hard the climate science community is trying to figure this out. Isn’t it important? You have allowed that UHI effect could be as much as .3C of warming? Is that chickenfeed with regards to attribution? Doesn’t this stuff add up? The alarmists look the other way on these little details, or employ some kind of misdirection to change the subject. Should we not worry about this?

Let’s assume that you give me station location data that is good to 1/100th of a degree or so. Then I can tell you the population at that location. And you will find zero-population stations; you’ll find everything from 0 people to 10s of thousands. Also, don’t trust the GHCN population data. It’s old.

Meh.. on your other questions

I think of UHI affecting every city. Not just Tokyo. And by huge amounts.

“Summer land surface temperature of cities in the Northeast were an average of 7 °C to 9 °C (13°F to 16 °F) warmer than surrounding rural areas over a three year period, the new research shows. The complex phenomenon that drives up temperatures is called the urban heat island effect.”

http://www.nasa.gov/topics/earth/features/heat-island-sprawl.html

But I think this is the key:

“The compact city of Providence, R.I., for example, has surface temperatures that are about 12.2 °C (21.9 °F) warmer than the surrounding countryside, while similarly-sized but spread-out Buffalo, N.Y., produces a heat island of only about 7.2 °C (12.9 °F), according to satellite data. Since the background ecosystems and sizes of both cities are about the same, Zhang’s analysis suggests development patterns are the critical difference.

She found that land cover maps show that about 83 percent of Providence is very or moderately densely-developed. Buffalo, in contrast, has dense development patterns across just 46 percent of the city.

Providence also has dense forested areas ringing the city, while Buffalo has a higher percentage of farmland. “This exacerbates the effect around Providence because forests tend to cool areas more than crops do,” explained Wolfe.”

If it used to be forest, and it isn’t anymore, it is not pristine and has UHI.

Bruce.

Nobody argues that Tokyo or Providence is free from UHI. Both are urban by any and all classification systems. That is not the issue, never has been the issue, and is utterly beside the point.

If there were 10,000 Tokyos in the station inventory or 10,000 cities the size of Providence, finding UHI would be so easy even you could do it.

However, a sizeable portion of stations (thousands) have NO population, while a few are like Tokyo and Providence. You can in fact go look on the web for the nice little study that “residual analysis” did on the UHI effect of large cities in GHCN (pop greater than 1 million). The problem? Those cases don’t happen with great frequency. Remove them and your mean moves a tiny bit.

You didn’t get one of the points I was making. NASA discovered that farmland has UHI compared to forests. Neither have populations of any significance.

If you read WMO/CRN guidelines for placing thermometers, they always say keep it away from trees.

“Flat and horizontal ground … No shading when the sun elevation >3 degrees.”

The siting guidelines ensure warmer.

U = urban

H = heat

I = Island

are you Goddard in disguise?

Land use changes are similar to UHI. While technically different, land use changes should not be excluded from the conversation about bias.

Ron nobody is excluding discussions of land use change. However, Bruce has a habit of changing the topic rather than following through with a discussion. I object to that. We can discuss UHI, raise issues, close on issues, suggest studies. If after that somebody wants to discuss land use changes.. I got that covered as well, but Bruce, unlike Don, is not interested in discussion. He does not read beyond the abstract and is a waste of my time.

I’m not sure why you keep ignoring NASA’s findings. They found 42 cities in the northeast with UHI and they found that farmland is warmer than forest and the UHI effect is larger when cities are surrounded by cooler forests. Same study.

The ability to measure current UHI lies with satellites, not a bunch of code massaging bad station data. Past UHI is problematic, but it won’t be solved by massaging bad data either.

the issue isn’t finding cities with UHI

the issue is finding STATIONS with UHI.

we all know that cities have UHI. the question is which STATIONS are in urban areas, which urban areas and how bad is the UHI in THOSE urban areas. Not some other areas, the actual areas where the stations are located.

Cities have UHI. some big some small. Cities do have UHI. more than 42 I can assure you.

Some stations are in some cities; some big, some small

Some stations are not in cities. anywhere between 25 and 40% depending on how we categorize sites.

The question is NOT do cities have UHI. they do. we map it. we measure it. cities have UHI

The question is which STATIONS are either IN cities or close enough to cities to ALSO have some measure of UHI, and how much UHI do THEY have. Not the 42 cities that NASA studied, but the actual stations used in the global average.

Cities have UHI. They do have it. That has never been the question, and it’s why I ignore your obvious statements of fact.

Mosher

Isn’t the problem the time line change in UHI effect. A static UHI gives an offset and possibly max min differences but the rate of change changes little with uhi or no uhi
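The point that a constant UHI offset leaves a trend unchanged while a growing one adds to it can be checked directly. A minimal sketch with hypothetical, noise-free annual series; the 0.15 deg C/decade background warming and the 0.1 deg C/decade UHI growth are illustrative values only.

```python
import numpy as np

def trend_per_decade(years, values):
    """OLS slope of annual values, in deg C per decade."""
    return 10.0 * np.polyfit(years, values, 1)[0]

years = np.arange(1979, 2010)                  # 31 annual values
rural = 0.015 * (years - 1979)                 # hypothetical background warming
static_uhi = rural + 1.0                       # constant 1.0 C urban offset
growing_uhi = rural + 0.01 * (years - 1979)    # urban offset growing 0.1 C/decade

for label, series in [("rural", rural), ("static UHI", static_uhi),
                      ("growing UHI", growing_uhi)]:
    print(f"{label:12s} {trend_per_decade(years, series):.3f} deg C/decade")
```

The first two lines print 0.150 and the third prints 0.250: the offset shifts the level, but only the growth in UHI shows up in the trend.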

Not just cities. And we know the UHI is high. As high as 12.2C.

I suspect what you are really ignoring is that measuring UHI by coming up with a new GAT like BEST won’t work. Satellites are going to be needed.

well Bruce cities have UHI. U = urban; H= heat; I = Island.

if you want to document some other kind of heat island, like a Farmer’s Field in Iowa Island, please come up with a snappy acronym. Until such time UHI is the heat island you get in cities

Yes UHI is 12.2C and Higher!! I’ve seen UHI of way more than that.

We don’t measure UHI with GATs

no GAT purports to measure UHI

The question is? How come those 12C UHI bias never show up in the GAT? Who is stealing all the UHI?

“How come those 12C UHI bias never show up in the GAT? Who is stealing all the UHI?”

Very good questions. Someday someone might figure it out. Maybe they will take the NASA research/data and compare it to temperature data and try and make some sense of it.

And playing ostrich about land use and new discoveries is not science … just because you are too literal about what the U stands for.

Perhaps your problem Steven is that you seem fixated in “Cities”. We’re talking of “Urban”, which is the U in UHI. And, FYI, urban =/= Cities. OK ? UHI is known to exist in population “centres” as low as 1-2000 people; and populations change over time.

And although maybe digressing a bit from strict UHI, land use changes are a variable that ultimately needs to be accounted for. There are undoubtedly land use changes over the past 100 years or so, all over the world.

Then again, in the end we are bound by what data we actually have. If we can define a set of sites with minimal population changes and minimal land use changes, AND the data we derive from those stations is not so different from the data for all stations, then we do need to deal with that fact. That UHI exists and can be very significant, and that it seems to vary with population (a log relationship seems generally accepted), seems incontestable, so why might we not be seeing it? Is this data already processed to remove such effects?

Ed.

Listen carefully. When you select very rural with no built pixels within 11km you get areas where the population is 0 to 5 people per sq km

And you can also look at land use.

And when you look at stations with zero population and no land use issues..

Guess what?

they are not on average much different from all the rest. Less than .1C per decade.

The world is warming.
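The “no built pixels within 11 km” screen described in this comment amounts to a distance test. A minimal sketch, assuming the built-up pixels are available as a list of (lat, lon) pixel centres (for example from a MODIS land-cover product) and using great-circle distance to those centres; this illustrates the criterion only and is not the code used by BEST or by Mosher.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    h = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))

def is_very_rural(station, built_pixels, radius_km=11.0):
    """True if no built-up pixel centre lies within radius_km of the station."""
    lat, lon = station
    return all(haversine_km(lat, lon, plat, plon) > radius_km
               for plat, plon in built_pixels)

station = (40.0, -100.0)
print(is_very_rural(station, [(40.0, -99.8)]))                    # pixel ~17 km east: True
print(is_very_rural(station, [(40.0, -99.8), (40.05, -100.0)]))   # pixel ~5.6 km north: False
```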

mosher, why are you ticked with NASA for noting that “forests tend to cool areas more than crops do”? Aren’t you interested in science and discovering new things?

Of course, John Christy noted this a few years ago (as I’ve pointed out to you several times).

“Irrigation has turned much of the San Joaquin Valley’s dry, light-colored soil dark and damp, says Dr. John Christy, director of the Earth System Science Center at The University of Alabama in Huntsville (UAH). While the valley’s light, dry desert ground couldn’t absorb or hold much heat energy, the dark, damp irrigated fields “can absorb heat like a sponge in the day and then, at night, release that heat into the atmosphere.”

Bruce. one topic at a time.

1. you have not gotten back to me with the Christy code or data.

2. i know that irrigation matters that is why I have metadata on crop land, rain fed cropland, irrigated cropland, and bluewater consumption for every damn station.

3. I also have forest data and historical changes to land use

When you have a paper I haven’t read or a data set I haven’t looked at, I will let you know.

Until such time please go bug Christy for his code and data. And don’t forget it was guys like Willis and Steve and me who bugged climate science for code and data. Do your part and get back to me when you have actual data rather than ink on paper.

get that Christy data and code yet? fetch it Bruce

Steven Mosher would be much more convincing and effective without the condescending and patronizing tone. This is without rancor. The interaction could then be about the science only.

The Providence station has been located at the State airport ten miles south of the city since the early 1950s (see: http://gallery.surfacestations.org/main.php?g2_itemId=2164). The location is densely suburban and the forested ring itself is ten miles to the west. The east is bounded by Narragansett Bay with its own climatic influence (sea breezes and the moderating effect of the water). Before the move, the station had been located at various spots in Providence since 1831, each with its own local microclimate, according to the metadata. So the UHI effect here is tangled up in more than just a growing population.

How about the actual CRN sites? We’ve got a number of years of data now. They are supposedly “pristine”.

Steve: BEST has some CRN sites in it. Unfortunately they’ve “seasonally adjusted” the data. It would be nice if they also archived their data as they commenced using it.

I have a package to get CRN data.

I can also create the metadata for CRN. Here is my suggestion. people can go look at the CRN sites and say whether they are rural or not.

then I’ll tell you how they’d be classified by Modis

Steven,

Why can’t we get the data as used by BEST? That’s what you demanded of Nicola Scafetta.

Why don’t you ask both of them.

One will say they have delivered preliminary data and plan a second release

The other will insult you.

Next brain buster.

Steven,

Your bias is showing. BEST promised transparency but have studiously avoided it. I don’t trust people who promise one thing and do another. Delivering preliminary data looks to be an excuse to put out results-oriented claims. Nicola insulted you because he thinks you are lazy. It is much easier to reproduce Nicola’s results than BEST results because the data sets are much smaller.

Steve: it is not fair to say that they have “studiously” avoided transparency. They’ve archived a lot of data and code. The archive is incomplete for a couple of papers. But at this point, I’m willing to attribute this to oversight rather than avoidance. There are lots of things in the organization of the archive that I dislike, but I’m hopeful that they will address these things and do not agree with condemnation.

Steve,

Perhaps I was a little tough on them, but when transparency is the entire reason BEST was founded I had high hopes they would take it seriously. They haven’t.

I can understand your personal loyalty to Muller and think it is commendable. But I’m certain you will understand that others will not feel the same sense of loyalty that you do. I am hopeful that Muller will correct the deficiencies in transparency and his penchant for overstating the meaning of his results – but I’m not as confident of these as I would like to be.

Studiously avoided it?

1. They shared an early release with me so I could get some work done for it in R.

2. They pre-released data and code.

3. They promise a full release.

I am on record with the NYT saying I will not be happy until everything is done in R.

Contrast that with Scafetta. If you can't see the difference, your bias is showing.

Pristine! Well, a start would be that the site is actually known to meet the requirements laid down for this type of station.

How many KNOWN sites like that exist, that is, sites that have actually been physically checked rather than guessed at?

After all, there is a reason why there is supposed to be a standard. No one would accept the use of lab equipment that failed to meet necessary standards, so why should it be different outside the lab?

This is an extremely valuable piece of writing–clear, logical and cogent. (Not saying you don’t normally write to this standard, just that this is exceptional.) I would hope you would consider trying to find wider distribution for this.

Yes, this essay is cogent and powerful.

It will be read at this web site, as well as relayed far and wide, by popularizers.

Makes for a nice companion to Matt Ridley’s recent talk which is a very clear, well-structured argument in support of his views.

“It was the BEST of times, it was the worst of times, it was the age of wisdom, it was the age of foolishness, it was the epoch of belief, it was the epoch of incredulity, it was the season of Light, it was the season of Darkness, it was the spring of hope, it was the winter of despair, we had everything before us, we had nothing before us, we were all going direct to heaven, we were all going direct the other way – in short, the period was so far like the present period, that some of its noisiest authorities insisted on its being received, for good or for evil, in the superlative degree of comparison only.”

Charles Dickens, A Tale of Two Cities

thomaswfuller

Exactly. Mosher as well, and I rarely agree with the moshpit. There can be NO “pristine”. Every site will have its own ‘local’ parameters. Pristine to me means clinically perfect. Not possible.

Yes.

For example, you can well imagine that some people would think there were problems with this:

30.54850,-87.87570

They might be forced to admit that the UHI here is less than Tokoyo, but they would never say it was a good site.

“The urban heat island effect can modify rainfall patterns. Mobile, Alabama is one example (Taylor, 1999). The city has expanded rapidly since the 1980s, replacing forests with impervious surfaces. The resulting heat island appears to intensify daily summer downpours. Sea breezes are laden with moisture and are the source of daily rainfall in the summer. Northeast Mobile has a concentration of mall parking and local annual rainfall can be 10 to 12 inches more than less paved areas. Consequently, nearby croplands receive less rain.”

(Source: Trees_Parking.pdf)

Yes, the effect is known in some cases to extend 20 km downwind of the city.

Mosh

It’s Tokyo

There are 39,000 Tokyos in the dataset; I can't be expected to spell every one of them the same.

Every Tokoyo makes me say Tokyo to myself with a broad Brummie accent. Can't help it.

It's Tokeyo.

What do they mean by “expanded rapidly”? The population of the city of Mobile has barely changed since 1970. I lived in Mobile for a time in the 80s, and I can tell you that Mobile itself has hardly grown. The surrounding suburbs, particularly those across the Bay in Baldwin County, have indeed grown rapidly since the 60s.

What do I mean by pristine? Well off the top of my head, something like

0) No station moves of any kind;

1) No man-made structures (other than the Stevenson screen, or equivalent, itself) within 20 m or ten times its own height, whichever is larger, at any point;

2) No significant change in land use over the period considered (including no change in grazing patterns, no change in forestation, and no change in nearby lake extents);

3) No change in recording method over the period considered unless there is at least 5 years overlap of the two recording methods to enable cross-calibration.

I strongly suspect there are no pristine stations by this definition that would allow us to extend the temperature record back to (say) 1900. Pristine does indeed mean clinically perfect. And it is indeed not possible.

In practical terms, it’s presumably a matter of “more pristine” and “less pristine”. The CRN stations of the 2000s are designed for climate recording and are about as good as one could hope for. I looked at the one near Tucson, and there was a noticeable difference in trend between the CRN station and Tucson airport in only a few years.

My own take on the past 30 years is that it also places a pretty hard ceiling on potential urbanization contributions. The amount of urbanization in the past 30 years has been unprecedented. The contribution in earlier periods to any warming will be less (but so is the warming.)

The larger problems in earlier periods are Anthony-type problems: undocumented changes in station quality. Because the station populations are sparse, it also becomes harder to cross-compare. Some of the high 19th-century temperatures in CRU look suspicious to me and are likely to be something in the measurement method.
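The four criteria can be written down as an explicit filter over station metadata. A minimal sketch in Python, assuming a hypothetical metadata record (`StationHistory`, `Structure` and `MethodChange` are illustrative names, not any archive's format) and reading "its own height" as the height of the structure; the thresholds are the ones in the list. Land-use change is reduced to a yes/no flag, which hides most of the difficulty of deciding what counts as significant.

```python
from dataclasses import dataclass, field


@dataclass
class Structure:
    distance_m: float  # distance from the screen, metres
    height_m: float    # height of the structure, metres


@dataclass
class MethodChange:
    overlap_years: float  # years the old and new recording methods ran side by side


@dataclass
class StationHistory:
    # Hypothetical metadata record; field names are illustrative only.
    moves: int = 0
    structures: list = field(default_factory=list)      # every Structure present at any point
    land_use_changed: bool = False
    method_changes: list = field(default_factory=list)  # MethodChange entries


def is_pristine(s: StationHistory) -> bool:
    # 0) No station moves of any kind.
    if s.moves > 0:
        return False
    # 1) No structure within 20 m or ten times its own height, whichever is larger.
    for st in s.structures:
        if st.distance_m <= max(20.0, 10.0 * st.height_m):
            return False
    # 2) No significant land-use change over the period considered.
    if s.land_use_changed:
        return False
    # 3) A change of recording method is allowed only with at least 5 years of overlap.
    return all(c.overlap_years >= 5 for c in s.method_changes)


# A 4 m shed 30 m away fails criterion 1 (exclusion radius is max(20, 40) = 40 m).
print(is_pristine(StationHistory(structures=[Structure(30.0, 4.0)])))  # False
print(is_pristine(StationHistory(structures=[Structure(50.0, 4.0)])))  # True
```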
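Comparing the trend at a CRN station with a nearby airport station comes down to fitting a least-squares trend to the difference between the two series. A minimal sketch in plain Python with made-up, noise-free data (the series are hypothetical, not the Tucson records); trends are expressed in deg C/decade as elsewhere in this post.

```python
def trend_per_decade(times_years, values):
    """Ordinary least-squares slope of values against time, in deg C per decade."""
    n = len(times_years)
    if n != len(values) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_t = sum(times_years) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times_years)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times_years, values))
    return 10.0 * sxy / sxx  # slope is per year; x10 gives per decade


def trend_difference(times_years, series_a, series_b):
    """Trend of the difference series a - b, in deg C per decade."""
    diff = [a - b for a, b in zip(series_a, series_b)]
    return trend_per_decade(times_years, diff)


# Hypothetical, noise-free monthly anomalies over five years (illustration only).
times = [2005 + m / 12 for m in range(60)]
airport = [0.05 * (t - 2005) for t in times]  # 0.5 deg C/decade
crn = [0.02 * (t - 2005) for t in times]      # 0.2 deg C/decade
print(round(trend_difference(times, airport, crn), 3))  # 0.3
```

Because least squares is linear in the data, the slope of the difference series equals the difference of the two slopes. Fitting the difference is still the usual choice, since weather shared by two nearby stations largely cancels, which narrows the uncertainty on the trend difference.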

The CRN stations are indeed as good as we’re likely to get. If we had a network of CRN stations across the world dating from 1900 we would be having a very different conversation than we are now. But we don’t, and we aren’t.

Given the limited temporal and spatial coverage of the CRN, our only hope is to use them to try to estimate how bad the other stations are, and then try to make some sort of correction for it. That would require not just making comparisons between CRN stations and others, but also making direct measurements of the effects of transitions from Stevenson screens to MMTS, and from min/max recording to fixed-time recording, and so on. But once again I agree with you (Steve McIntyre): even if we had all that sorted out, our knowledge of the detailed history of many of the stations is too patchy to have great confidence in any correction method.

Well, maybe I’ll do a post on CRN metadata.

Mc, what do you think?
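A side-by-side overlap lets the offset between two instruments (for example a Stevenson screen and an MMTS sensor) be estimated directly rather than inferred from neighbours. A minimal sketch in Python, assuming monthly readings keyed by a hypothetical "YYYY-MM" string and a constant offset between the instruments; a real transition may also change the seasonal cycle or drift over time, which a single mean offset cannot capture.

```python
from statistics import mean


def overlap_offset(old, new):
    """Mean of (new - old) over the months both instruments reported."""
    common = old.keys() & new.keys()
    if not common:
        raise ValueError("no overlapping months to calibrate on")
    return mean(new[k] - old[k] for k in common)


def splice(old, new):
    """Shift the old record onto the new instrument's scale and join the two,
    keeping the new instrument's readings wherever both exist."""
    offset = overlap_offset(old, new)
    merged = {k: v + offset for k, v in old.items()}
    merged.update(new)
    return merged


# Hypothetical monthly means; in the overlap the new sensor reads 0.4 lower.
screen = {"1990-01": 10.0, "1990-02": 11.0, "1990-03": 12.0}
mmts = {"1990-02": 10.6, "1990-03": 11.6, "1990-04": 13.0}
print(round(overlap_offset(screen, mmts), 2))  # -0.4
```

With only two overlapping months, as here, the offset is poorly constrained; a multi-year overlap averages out weather noise and exposes any seasonal dependence.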

Examples

30.54850,-87.87570

Mobile, AL, huh, Mosh? Ah, Fairhope, Alabama, suburban Mobile, and I’d call it urban 🙂 I once worked near there and lived in Mobile near the Bay.

You can call the moon urban because we left junk there

http://maps.google.com/maps?q=30.54850,-87.87570&hl=en&ll=30.547645,-87.874167&spn=0.011679,0.026157&sll=37.0625,-95.677068&sspn=43.799322,107.138672&vpsrc=6&t=h&z=16

The point is that people will shift their definition of urban as cases come up. Either that, or they won't define it. One day they will point to a station (CRN) and say, “THIS is the standard,” and another day they will point to the same station and say, “Oh wait, I call that urban.”

I looked at Google Earth before replying, Mosh 🙂. The pointer ended up in the road until you zoomed in very close. Baldwin County, AL has been the fastest or second-fastest growing county in Alabama for the last 40 years by percentage population change. The area you point to has built up even more since I left it.

Largest county population in Alabama by rank: Jefferson, Mobile, Madison, Montgomery, Shelby, Tuscaloosa, Baldwin, then it gets muddled with Morgan, Houston, Lee, Calhoun, Etowah, Lauderdale, Marshall, Limestone, Talladega, Cullman, St Clair, Walker, Autauga, Elmore, Blount, Coffee, Colbert, Dale, Dallas, and, well my memory is failing me now.

One final note on the definition of rural. I call an area with fewer than 10 people per square mile rural. Take Sumter County, AL as an example. Most of that 1000 sq mi county is rural and has been so for 60 or more years. Urban is anything more than that.

Then the BEST “very rural” stations count as rural.
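The density rule is easy to state as code, and writing it down also makes the unit explicit. A minimal sketch in Python; the function names are hypothetical, and the density values are assumed to come from whatever population grid one trusts. The unit matters when comparing thresholds: 10 people per square mile is about 3.9 people per square kilometre, a stricter cut than the 10 per sq km quoted for the nightlights classification later in the thread.

```python
SQ_KM_PER_SQ_MILE = 2.589988  # 1 square mile is about 2.589988 square kilometres


def per_sq_mile_to_per_sq_km(density_per_sq_mile):
    """Convert a population density from people/sq mi to people/sq km."""
    return density_per_sq_mile / SQ_KM_PER_SQ_MILE


def classify(density_per_sq_mile, threshold_per_sq_mile=10.0):
    """'rural' below the threshold, 'urban' otherwise."""
    return "rural" if density_per_sq_mile < threshold_per_sq_mile else "urban"


print(classify(4.0), classify(25.0))             # rural urban
print(round(per_sq_mile_to_per_sq_km(10.0), 2))  # 3.86
```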

0) No station moves of any kind;

1) No man-made structures (other than the Stevenson screen, or equivalent, itself) within 20 m or ten times its own height, whichever is larger, at any point;

You forgot trees that grow and shade the site. What is the effect if the structure is within 19 meters?

Maybe it should be 50 meters? Some field research would be nice. Got any?

2) No significant change in land use over the period considered (including no change in grazing patterns, no change in forestation, and no change in nearby lake extents)

Within what radius? For example, if there is a lake 10 km away from the site and its extent changes by 3 inches, does that count? What about 2 km away with a change of 1 mm? Grazing patterns? You will probably want to equip cows with GPS to determine that grazing patterns have not changed. No change in forestation? Order trees to stop growing? Do you mean the exact same number of trees, no change in area? How about tree type? Tree height?

The issue here is that you have no basis to assume that every change will cause a measurable change in temperature. I will contrast that with the evidence we have that large-scale changes have a measurable effect. That’s a real concern. The blanket “no changes whatsoever” is a practical impossibility; worse than that, it's undefinable, or rather you have not defined it very well.

3) No change in recording method over the period considered unless there is at least 5 years overlap of the two recording methods to enable cross-calibration.

How about triple-redundant sensors?

You can effectively define all measurements out of existence. That has never been the question. There is always an approach that results in us knowing nothing rather than knowing something with uncertainty.

Well, for “pristine” areas we’ve got pretty good coverage via the mountain glacier record. And there’s overwhelming evidence of global glacier recession.

snip – not related to the specific issue here

Steve,

I’m thinking you have the right approach. With no response to my emails yet, I’m not sure they are taking my critique seriously. Currently they have a trend which is higher than the rest and some stats which are absolutely worse than the rest. In a recent article at Nature blog, they indicated that the models and data were closer than ever. I think we know now which one was changed.

Jeff,

You may have seen it, but Judith says that Muller has assured her that they are taking critiques seriously. He also told her that the premature press blitz was to get the attention of the IPCC. Of course, the press release says they are in the IPCC AR5, and other explanations are given in the FAQs with no mention of the IPCC. So the discrepancy between what he says and what he does is not surprising.

I’m still hoping to hear something but my patience for stalling or non-response is limited.

I think that JC has been somewhat naive. Muller is playing with her emotions and her loyalty. Sad, very sad.

JC is also very pushed for time. I understand that patience may be limited but I predict it will look more like wisdom by the end of the week than it does today.

Hmm, that URL has had a climateaudit.com prefix inserted, presumably to deter spammers (and temporary?). Remove anything between the http:// and judithcurry.com and you’ll arrive where I intended. Judy’s recent comment immediately below mine is well worth taking in.

“The calibration and reconstruction problem is not as difficult as trying to reconstruct past temperatures with tree rings, but neither is it trivial”

Doesn’t the BEST heterogeneity plot of temperature rates suggest that, even with the ‘unprecedented’ warming over the last 50 years, the chance of an individual tree, stuck as it is in one location, recording a negative trend is 1:3?

How hard would it be to gather such data? I have noticed that on exceptionally cold nights (-25F around here) the temperature varies widely: it might be -15 in the city (Burlington, VT), -19 on the highway, -22 on the lightly traveled road to my house, and -25 at my garage door, 700 ft from the road.

I assume the effect is from automobiles disturbing the stratification of the air.

How hard would it be to plant solar powered satellite reporting temp stations in wilderness areas? Really, how hard?

Wilderness is not pristine according to the definition given above. Don't confuse the definitional question with the measurement issue.

Steve, you are just knocking down others thoughts and ideas on this without proposing how to tackle the problem. Is this all we are going to get? If so, I will stop wasting my time asking you about it.

No, I am trying to get you to think hard about the problem. I know that if I define rural, somebody somewhere will say, “Oh look, three sheds and a cow; I bet that cow breathes on the thermometer.” So you point to Tokoyo as a poster child, and then when you are shown a remote station located at a ranger station, you say, “Oh, that's not pristine, there's a person who reads the thermometer.”

What I can do is this. Looking at the literature from Oke on we can define certain things known to cause UHI.

Known and proven to cause it, and to cause it in a way that is measurable. I can sort through sites that don't have those issues. When I do that work, and it's brutal effin work, I know that some armchair bozo will say, “Holy crap, there's a lake 10 miles away and maybe the water level changed. That's not rural.”

So, what I’m expecting isn't much, except to think about the problem in a way where some progress can be made.

I have a proposal for Steve. It's related to CRN, which I've just finished processing.

Thank you Steve. I am not trying to give you a hard time. And I haven’t said anything about Tokyo (Bruce), or criticized the work you have reported on sorting out the rural/very rural areas, using MODIS, and cross-checking with the light thingy, and population densities. That was Tilo. And he may have a little point or two, but I believe you are capable of and are interested in doing it right, and I trust you to be honest. That is why I keep asking you. I will look forward to anything that you and Steve M. will share with us. snip – policy

I realize that, Don, sorry. There are those folks who want to insist that every station has 9C of UHI, that the world is really cooling and that, oops, we are also coming out of an LIA. Err, wait, my favorite: it's not getting warmer and increased sunshine is the cause. Err, wait, global temperature doesn't exist, but that non-existent thing was greater in the MWP. Or the temperature record is a total crock, except when it correlates with the sun.

I’ll put together a little example of what kinds of things you can know and how that might help.

“There are those folks who want to insist that every station has 9C of UHI”

Really? Can you name one? Or is this just hyperbole, again?

So I take it you think that the UHI bias is less than 9C?

Great, care to give an estimate? You can use Steve's work above to take a stab at it.

If not, then I’ll assume you think it's 0.

You’re moving the pea. You stated “There are those folks who want to insist that every station has 9C of UHI”. I asked you to name one. Instead you changed the subject.

As you stated elsewhere, it’s not whether UHI exists, it’s whether STATION UHI exists. I’ve never seen anyone state “There are those folks who want to insist that every station has 9C of UHI”.

I think Steve is talking about Bruce, but employing a little bit of hyperbole.

“I’ve never seen anyone state “There are those folks who want to insist that every station has 9C of UHI”.” That is incorrect, since we have just seen Steve say that very thing. See how it works? Anyone can be pedantic. Your do-rag suits you.

If being pedantic means expecting someone to back up their statements, then we should all be more pedantic. I suppose you agree with Mosh, then.

How about this: I’ve never seen anyone state “every station has 9C of UHI”. Better?

OK, if you think that Steve meant that literally, then you are not being pedantic. You are just being silly.

Of course it's hyperbole. The simple fact is this: if you try to have a serious discussion about UHI, an honest discussion, you will find a Bruce in every crowd. So let me ask you.

You see Steve's analysis. It is rather logical.

Satellite measures the temperature far above the surface away from UHI.

Let's stipulate that the surface (1% of it) has UHI.

The concern is that we are measuring the 1% and avoiding the 99% so that we have a bias.

Fair enough concern.

We can also note that by the time the air parcels from this 1% rise and mix with surrounding air, the effect is washed out. That means when we measure a trend miles above the surface, that trend is free of UHI.

Let’s say that trend is .18C per decade.

We note that the land trend is .2C per decade.

Note the above is just done for illustration of the logic.

What that implies is that the UHI bias could be as high as .2 – .18, or .02C per decade.

In the context of this argument, it does not make sense for Bruce or you to make comments like “Providence has a UHI of 5 or 4 or whatever.”

The debate is over methods to estimate the final and full effect of UHI on the entire record.

Yes, you will find cities and fields and all sorts of hot spots on the surface. The point is: what is the bias after collecting the samples at the surface? What is the total bias?

Simple question: do you agree with Steve's approach? Remember, it was an approach used by skeptics.

“OK, if you think that Steve meant that literally, then you are not being pedantic. You are just being silly.”

That was the reason I mentioned it. His comments in this thread have been laced with hyperbole, which means you can’t have a serious discussion. He’s the one being silly, not me.

I disagree with your premise. Certainly, even in a wilderness site, there will be factors that will change over time unrelated to climate. The big question is in a systematic bias of population growth. To get a ten year reference period far from the madding crowd, and to compare it to other stations for trend or lack of trend does not seem an overly expensive project for the value returned.

Whatever your point about uncertain data, we know that certain uncertainties are ignored in the noise and bluster. I would like to know if UHI is an issue or not.

“Throw everything into the black box with no regard for quality and hope that the mess can be salvaged with software.”

Beautiful description.

I’ve asked this before but have never been organised enough to check for any responses. All the discussion on UHI that I’ve seen is in the context of increasing population. If the theories put forward are correct, then the opposite effect should be apparent in areas of decreasing population. So can anything be learned from somewhere like Detroit, which I gather has experienced quite a decline in population?

It seems to me that a lot of factors could go into UHI, not all of which reverse with declining population. For example, Detroit built up a lot of paved roads, concrete structures, etc, etc. These things are still there, even though they are experiencing a declining population.

Also consider that somewhere in the urbanization process, asphalt streets are replaced with concrete. I noticed this driving US-287 in north-west Texas at 104-108 deg F. Blacktop outside the city, but white pavement in the towns. It might be a stretch to think the effect strong enough to create Urban Cooling, but it could mitigate other heating.

In my own lifetime I have seen interstates go from concrete to asphalt then back to concrete then back to asphalt both in and out of the cities. The materials used depend upon costs of materials at the time and/or subsidies to try out ‘new’ materials.

What gets lost, in my opinion, is that all biological organisms alter the local environment to enhance their own survival; and that the most altering organisms of all to the local environments over time are single celled plants, fungi and bacteria.

Urban heat island and other land use changes make measurable and notable changes in the local weather. We should be looking for measurable changes in the local weather attributable to changes in the atmospheric boundary layer first.

Assuming that satellite trends and/or model scaling factors are wrong, and that UHI/land-use change effects are about zero, would leave several things unexplained:

– the increasing difference between sea-surface and land-based trends. Land is warming faster, indeed, but after 150 years of warming and CO2 increase, one would expect trends to converge and not diverge as they did in recent decades.

– McKitrick et al.’s results on the correlation between socio-economic development and temperature increase.

– Pielke et al.’s results on the correlation between land-use changes and temperature increase.

Only attributing the difference from downscaled satellite trends to UHI and land-use changes would tick all the boxes, and it would even match the rough estimates of the sizes of the effects rather well.

The writing is on the wall !

Yes, Steve, this is a very fine summation. Thank you for providing clarity.

“To follow the practices of geophysicists using data of uneven quality – start with the best data (according to objective standards) and work outwards calibrating the next best data on the best data.”

This objection can be illustrated using a UHI example. BEST fits the pieces of their short or broken temperature time series according to the idea that variation between two time series is only influenced by altitude and latitude. So if a series is included in their model and it differs from those around it, then that difference has to be justified by a difference in latitude or altitude; if it is not, they assume a discontinuity that must be resolved by adjustment. Since BEST does not allow for variation by UHI, any significant difference between urban stations and nearby rural stations is a discontinuity that must be adjusted for. This does not get rid of the UHI; it simply distributes it equally across the nearby stations. The right way would have been to begin with the truly rural stations (less than 2% built) and adjust nearby temperature strings to those stations, rather than simply stirring it all together.

I’d like to comment briefly on what Steve Mosher was writing about in regards to pristine environments and the UHI.

As noted by TJA, even a pristine environment would change over time, and this would affect climate. However, absent other factors, these changes would broadly even out.

It should also be noted that there are effectively zero ‘pristine’ environments around–it’s not a real world issue.

In my opinion (just an opinion), the first cut is the deepest, in the sense that humanity’s first impacts on an environment are likely to be the most severe–wide ranging burning, extinction of grazing megafauna, etc. What happened on Easter Island probably didn’t affect climate much–but it certainly affected the environment.

But again, those effects might easily balance out, and they’ve certainly been with us through up and down cycles of temperatures. UHI is meant to be specific–whether it succeeds in that is apparently still somewhat in question. It was first measured in London a couple of centuries ago, and it is real, quantifiable and projectable.

What we are postulating in this conversation is a suburban heat island, a rural heat island and maybe even more. And it may all be real. But I don’t think the work done on this issue to date is easily relatable to those possible effects. So Steve’s point is (IMO) more pertinent to the wider discussions about UHI, its scope and effect on global temperatures (recalculated by Phil Jones in 2005 as about 0.5C, IIRC).

The effect you are discussing here, however you label it, is probably worth examining. A smaller temperature change over a larger area of landmass due to lesser effects is a reasonable hypothesis, although I sincerely wish you all good luck in figuring out how to measure it.

But I do believe it’s a different discussion altogether.

I hoped that we were past semantic quibbling over what pristine is. The world’s population increased from 2.5 billion to nearly 7 billion, from 1950 to 2010. Most of the thermometers are where the people are, not where the buffalo roam. As cities make up less than 1% of the land surface, all the more reason to be concerned about the under-representation of the more sparsely populated areas in the temperature record. I won’t believe, given what we know for sure about human impacts on the environment, that rapid population growth along with the accompanying infrastructure has not had a significant effect on the temperatures that we have been attempting to measure.

In H2010, Hansen used nightlights (pitch black) to classify the 1,200 or so USHCN stations.

He found 300. That's 25%. The population associated with those is less than 10 people per sq km, heavily skewed toward 0 people. So perhaps 25% of the stations are where the buffalo roam. BEST had 40%, but I think they need to refine their protocol somewhat to get to very rural.

Actually Steve, I set you up. The buffalo no longer roam. Virtually all of them were killed by human hunters, who themselves have since left the prairies for the big cities. But less than 10 people per sq km, heavily skewed toward nobody, is interesting. Are you suggesting that maybe 25% of the 39,000 stations in the BEST analysis would fit that description? I wonder why they didn’t go that route, instead of that rural vs. very rural BS.

This post makes several assumptions that I think should be noted.

Firstly, the assumption is made that the satellite data from UAH and RSS are free from any spurious cooling. This is not necessarily the case. In fact, a recent paper (Zou et al. 2010) has found issues with satellite data that have resulted in more cooling in the satellite record. STAR has released its analysis updated with the new corrections, and its trends are stronger at the different altitudes compared to both UAH and RSS. They are in the process of working on a TLT channel, and if the relationship between the higher altitudes and TLT (a synthetic channel) is similar to that of UAH and RSS, then the STAR analysis will produce a significantly higher trend than UAH and RSS.

Secondly (and most importantly), Steve compares only BEST with CRU in his figures and seemingly ignores the strong agreement between NOAA Land, GISS (after a land mask has been applied) and BEST, with CRU appearing as an obvious outlier. According to work JeffID has done previously, CRU’s station combination method will tend to underestimate trends. More importantly still, the CRU dataset is missing some of the regions that warmed the most over the past ten years. This is confirmed by the European Centre for Medium-Range Weather Forecasts.

http://www.metoffice.gov.uk/news/releases/archive/2009/land-warming-record

Although it is clear that BEST does provide too much confidence in their answer and how they answered the UHI question (and the AMO one) is debatable, it is not warranted to consider them as being unreasonably high when you consider their agreement with the other (Arctic-including) temperature series, and when you consider the significant uncertainties with the satellite data.

I disagree with your point about NOAA and GISS if the satellite data are as represented. If the same barplot with NOAA, for example, has a larger difference from downscaled satellite, then that simply means that the urbanization and quality issues are worse than we thought, with CRU’s lesser coverage partly offsetting the error. Saying that NOAA runs hotter than CRU is not in itself an argument against a person who places a reasonable reliance on satellite results, since, like CRU, NOAA makes no adjustment for urbanization.

I’m not arguing that one side or other has won on the urbanization contribution. I’m merely observing that someone can reasonably rely on the satellite record, and the fact that different parties have calculated warmer trends using the same contaminated GHCN data doesn't, in itself, oblige that reasonable person to change his mind.

I’m not familiar with Zou et al. Higher satellite trends would obviously reduce the difference and leave less to be attributed to urbanization. I presume that Zou’s trends remain less than those observed in the station data.

Robert, good points all around. However, I think you can agree with the methodology:

Satellite TLT trend = X

Model amplification: X = 1.4Z

Imputed ground trend Z = X/1.4

Observed land trend = Y

Y – Z ≈ 0

Deviations from zero need to be explained. That does not say what the explanation is. It just says one is needed.

At least you can agree to that. Then the fiddling begins, but all the fiddling happens within this general model of how things should be.
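The methodology is a division and a subtraction, with the amplification factor as the one free choice. A minimal sketch in Python; the trends are hypothetical numbers chosen only to show the arithmetic, and the factor is left as a parameter because its value over land is exactly what the replies dispute (1.4 from the tropical moist adiabat versus roughly 0.95 over land from the GISS model).

```python
def imputed_surface_trend(tlt_trend, amplification):
    """Z = X / amplification: the surface trend implied by a TLT trend X."""
    if amplification <= 0:
        raise ValueError("amplification factor must be positive")
    return tlt_trend / amplification


def residual(observed_land_trend, tlt_trend, amplification):
    """Y - Z, which this methodology expects to be about zero."""
    return observed_land_trend - imputed_surface_trend(tlt_trend, amplification)


# Hypothetical trends in deg C/decade, chosen only to show the arithmetic.
X, Y = 0.20, 0.25
for factor in (1.4, 1.0, 0.95):
    print(f"amplification {factor}: Y - Z = {residual(Y, X, factor):.3f}")
```

With these made-up numbers the residual falls from about 0.107 to about 0.039 deg C/decade as the factor goes from 1.4 to 0.95, so the size of the deviation needing explanation depends heavily on the factor chosen.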

I might need a reference for the model amplification factor of 1.4x. Where can I find that?

Nowhere. The expected land-only amplification of MSU-LT over SAT is close to zero (actually equivalent to a factor of ~0.95 +/- 0.07 according to the GISS model).

The ocean-only tropical amplification is related to the moist adiabat, which is not the dominant temperature structure over land since deep convection is mostly a tropical ocean phenomenon. Ocean temperatures are rising more slowly than those over land; therefore, even if tropical land tropospheric temperatures were being set by a moist adiabat over the ocean, it would still have a smaller ratio with respect to the land temperature.

I’ve just read Steve’s survey for the first time, and this seems the only important correction. I presume you are disputing the following for temperature reconstructions from land-based thermometer records:

Do Lindzen and Schmidt agree on the ~0.95 over land?

What John Christy once permitted me to publish was this:

It was presented to me that because the data were farther from the ground, the variance was less suppressed by thermal inertia, which didn't affect the long-term trend. I don’t believe the 1.2 number was model-related but rather radiosonde-based.

Thanks for this comment. I’ve amended the commentary to reflect this observation.

Thanks Gavin that helps immeasurably

Gavin,

Correct me if I’m wrong. The factor 0.95 is relative to the whole land area, so it includes Antarctica. Poleward of the 60th parallel, the expected TLT/T2M ratio is reversed. In the case of the northern hemisphere alone (where relatively little is represented beyond the 60th parallel) we can expect a factor of about 1.1. The observations (UAH/CRUTEM) give a ratio of 0.69!

Gavin, sorry that I’m late to the party, but have you seen anybody who studies the effect of the land atmospheric boundary layer on temperature measurements, and whether this can lead to an additional amplification of the surface atmospheric temperature over land?

Things get really crazy near the ground, especially in the first couple of meters. On a hot day you get perhaps a 5-7°C change in the first 10 meters off the ground (and at night you have a similar temperature inversion).

Here’s some representative data. (Source and data on request.)

Regardless, I think it’s worth noting that satellite measurements don’t (in general) measure the same quantity as surface measurements. Marine measurements are actually performed by measuring sea surface temperature, and it is assumed that this can be equated with air temperature (after anomalizing to remove what is hopefully a constant offset, though the constancy of the effect has not been established and it probably depends on meteorology).

Carrick – is “daytime” midday?

If I remember right, it was 2pm.

Robert: “Where can I find that?”

Here:

Remote Sensing 2010, 2, 2148-2169

“What Do Observational Datasets Say about Modeled Tropospheric Temperature Trends since 1979?”

John R. Christy, Benjamin Herman, Roger Pielke Sr., Philip Klotzbach, Richard T. McNider, Justin J. Hnilo, Roy W. Spencer, Thomas Chase and David Douglass

Abstract: Updated tropical lower tropospheric temperature datasets covering the period 1979–2009 are presented and assessed for accuracy based upon recent publications and several analyses conducted here. We conclude that the lower tropospheric temperature (TLT) trend over these 31 years is +0.09 ± 0.03 °C decade⁻¹. Given that the surface temperature (Tsfc) trends from three different groups agree extremely closely among themselves (~ +0.12 °C decade⁻¹) this indicates that the “scaling ratio” (SR, or ratio of atmospheric trend to surface trend: TLT/Tsfc) of the observations is ~0.8 ± 0.3. This is significantly different from the average SR calculated from the IPCC AR4 model simulations which is ~1.4. This result indicates the majority of AR4 simulations tend to portray significantly greater warming in the troposphere relative to the surface than is found in observations. The SR, as an internal, normalized metric of model behavior, largely avoids the confounding influence of short-term fluctuations such as El Niños which make direct comparison of trend magnitudes less confident, even over multi-decadal periods.
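The scaling ratio in the abstract is just one least-squares trend divided by another. The sketch below assumes ordinary least squares on monthly anomalies (the paper's exact fitting choices are not stated here) and uses synthetic, perfectly linear series with the abstract's headline trends built in, so it only illustrates the arithmetic: 0.09 / 0.12 = 0.75, consistent with the quoted ~0.8 ± 0.3.

```python
def trend_per_decade(years, values):
    """OLS slope of values against decimal years, expressed per decade."""
    n = len(years)
    x_mean = sum(years) / n
    y_mean = sum(values) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in zip(years, values))
    den = sum((x - x_mean) ** 2 for x in years)
    return 10.0 * num / den


def scaling_ratio(years, tlt, tsfc):
    """SR = TLT trend / surface trend, as defined in the abstract."""
    return trend_per_decade(years, tlt) / trend_per_decade(years, tsfc)


# Synthetic monthly series for 1979-2009 (372 months); not real data.
years = [1979 + m / 12 for m in range(372)]
tsfc = [0.012 * (y - 1979) for y in years]  # 0.12 °C/decade
tlt = [0.009 * (y - 1979) for y in years]   # 0.09 °C/decade

print(round(scaling_ratio(years, tlt, tsfc), 2))
```

With real anomalies the noise would make the ratio uncertain, which is what the ± 0.3 reflects.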

According to Gavin, the factor over land is 0.95 rather than 1.4.

Using that factor gives satellite trends of 0.182 °C/decade for UAH and 0.208 °C/decade for RSS.

If I recall correctly, the surface trends are 0.28 °C/decade for BEST, 0.24 °C/decade for GISS (land-masked using CCC), 0.28 °C/decade for NOAA and 0.22 °C/decade for CRU.

Nice:

BEST (0.28 °C/decade) - UAH (0.182) ≈ 0.10 °C/decade “budget” for possible UHI

BEST (0.28) - RSS (0.208) ≈ 0.07 °C/decade “budget” for possible UHI

CRU (0.22) - UAH (0.182) ≈ 0.04 °C/decade “budget” for possible UHI

CRU (0.22) - RSS (0.208) ≈ 0.01 °C/decade “budget” for possible UHI

So, given the uncertainties in the satellite record, in the downscaling and in the surface record, it would appear that there is room for a modest UHI bias.
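The “budget” arithmetic can be checked directly. This sketch simply takes the downscaled satellite trends (factor 0.95) and the surface trends quoted above and prints the exact differences; treating the difference as an upper bound on UHI contamination is the commenter's framing, not an established method.

```python
# Trends in °C/decade as quoted in the thread above.
satellite_downscaled = {"UAH": 0.182, "RSS": 0.208}
surface = {"BEST": 0.28, "CRU": 0.22}


def uhi_budget(surface_trend, satellite_trend):
    """Room left for a possible UHI bias: surface trend minus satellite-implied trend."""
    return surface_trend - satellite_trend


for s_name, s_trend in surface.items():
    for t_name, t_trend in satellite_downscaled.items():
        print(f"{s_name} - {t_name}: {uhi_budget(s_trend, t_trend):.3f} °C/decade")
```

Any error in the downscaling factor moves every one of these budgets by the same amount for a given satellite series.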

Not Tokyo-sized.

Not zero. Not Tokyo-sized.

Yes, any plausible UHI effect during the satellite era must be modest. If I remember correctly, Douglass, Pielke Sr. and others suggested that the discrepancy between land surface and satellite lower troposphere trends is mainly due to changes in the surface boundary layer in low-wind conditions (especially winter nights). If that is correct, then the remaining plausible UHI effect during the satellite era has to be very small.

That doesn’t prove it was similarly small before the satellite era, but it is hard to see why the situation would suddenly change when the satellites started measuring oxygen microwave emissions in 1979. I’ve never been able to get too excited about UHI effects… and still can’t.

Robert: “According to Gavin the number is 0.95 over land rather than 1.4”

Is there a source for that .95 other than Gavin?

Go get the model data and calculate it yourself. Christy’s paper used 1.1; is that source OK?

Tilo, this issue of the scaling of surface temp changes to satellite-detected tropospheric temperature changes seems to be a contentious one, as shown by Gavin’s rapid claim that Steve’s use of a downscaling factor of 1.4 is incorrect and that he should have used 1. Reading the characteristically snarky RealClimate post he links to shows that this point has indeed been much argued about, and also that one must distinguish between land-only and land-and-sea analyses, and between the ‘lower’ troposphere (20°S to 20°N) and the global troposphere.

I note that whether the factor is 1 or 1.4 is not so important concerning one of the key parts of Steve’s beautifully clear essay, i.e. it is irrelevant when trying to judge whether BEST is indeed notably better for recent periods than CRU or other such as GISS. Nevertheless, it does matter for the other argument Steve presents, which is that satellite estimates of surface temperatures should be used to calibrate surface-based measurements and to attempt to estimate the role of UHI in the latter. To do that, you need to sort out what the inferred UHI-free surface temperature trend over land is, based on satellite observations. It would be very helpful if someone could point to any references about that question.

Steve: I’ve restated the section in question to show the results without the proposed downscaling as well.
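How much the choice of factor matters for the inferred UHI-free surface trend can be seen with one division. The sketch assumes a hypothetical TLT land trend of 0.20 °C/decade (an illustration value, not a figure from any dataset) and applies the factors discussed in this thread; a factor of 1.0 corresponds to no downscaling at all.

```python
def implied_surface_trend(tlt_trend, amplification):
    """Surface-equivalent trend implied by a satellite TLT trend: TLT / amplification."""
    return tlt_trend / amplification


tlt = 0.20  # hypothetical TLT land trend, °C/decade (illustration only)
for factor in (0.95, 1.0, 1.1, 1.4):
    print(f"factor {factor}: {implied_surface_trend(tlt, factor):.3f} °C/decade")
```

A larger factor lowers the implied surface trend and so enlarges the apparent room for UHI in the surface record; that is why the factor matters for the calibration argument even though it does not affect a direct BEST versus CRU comparison.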

Another paper uses 1.1 over land and 1.6 over sea:

klotzbachetal2009.pdf

With 1.1, there would still be significantly higher land based trends.

Factors of 1.4 or 1.6, as given for the sea surface, would put the sea-surface trends significantly above the downscaled satellite trends as well (or else the models produce false factors). The size of this error would affect the global trend even more than the land error.

“completely contained within the 30-year record used here. Thus, in 19 realizations this consistent ratio was calculated. This was also demonstrated for land-only model output (R. McKitrick, personal communication, 2009) in which a 24-year record (1979–2002) of GISS-E results indicated an amplification factor of 1.25 averaged over the five runs.”

But Ross says this is unacceptable referencing, and the data he referred to wasn’t really the right data?

How does one get 1.1?

The factor of 1.1 is derived in http://pielkeclimatesci.files.wordpress.com/2010/03/r-345a.pdf with the following: “Utilizing an appropriate landmask and data provided on his [Gavin Schmidt’s] FTP site at http://www.giss.nasa.gov/staff/gschmidt/supp_data_Schmidt09.zip, we have redone our calculations and found amplification factors of 1.1 over land and 1.6 over ocean.”

However, that data was only a subset, as Gavin pointed out.

Steven Mosher,

I didn’t notice where Gavin made a point about the Klotzbach et al. paper using a subset…Although obviously I noted that his factor of 0.95 differs from Klotzbach’s 1.1. Can you clarify the “subset” reference, please?

For reference, the amplification is related to the sensitivity of the moist adiabat to increasing surface temperatures (air parcels saturated in water vapour move up because of convection where the water vapour condenses and releases heat in a predictable way). The data analysis in this paper mainly concerned the trends over land, thus a key assumption for this study appears to rest solely on a personal communication from an economics professor purporting to be the results from the GISS coupled climate model. (For people who don’t know, the GISS model is the one I help develop). This is doubly odd – first that this assumption is not properly cited (how is anyone supposed to be able to check?), and secondly, the personal communication is from someone completely unconnected with the model in question. Indeed, even McKitrick emailed me to say that he thought that the referencing was inappropriate and that the authors had apologized and agreed to correct it.

So where did this analysis come from? The data actually came from a specific set of model output that I had placed online as part of the supplemental data to Schmidt (2009) which was, in part, a critique on some earlier work by McKitrick and Michaels (2007). This dataset included trends in the model-derived synthetic MSU-LT diagnostics and surface temperatures over one specific time period and for a small subset of model grid-boxes that coincided with grid-boxes in the CRUTEM data product. However, this is decidedly not a ‘land-only’ analysis (since many met stations are on islands or areas that are in the middle of the ocean in the model), nor is it commensurate with the diagnostic used in the Klotzbach et al paper (which was based on the relationships over time of the land-only averages in both products, properly weighted for area etc.).
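The distinction Gavin draws, between a ratio computed over a selection of grid boxes and one computed from properly area-weighted land-only averages, can be sketched as follows. The cos(latitude) weighting is the standard area weight for a regular latitude-longitude grid; the four grid cells and their trends are hypothetical toy numbers, chosen only to show that letting an ocean-dominated cell into the average can move the ratio from below 1 to above 1.

```python
import math


def area_weighted_mean(values, lats_deg, mask=None):
    """cos(latitude)-weighted mean over the grid cells where mask is True."""
    if mask is None:
        mask = [True] * len(values)
    num = den = 0.0
    for value, lat, keep in zip(values, lats_deg, mask):
        if keep:
            w = math.cos(math.radians(lat))
            num += w * value
            den += w
    return num / den


def amplification_factor(tlt_trends, sfc_trends, lats_deg, mask=None):
    """Ratio of area-weighted mean trends, TLT / surface, over the masked cells."""
    return (area_weighted_mean(tlt_trends, lats_deg, mask)
            / area_weighted_mean(sfc_trends, lats_deg, mask))


# Hypothetical toy grid: trends in °C/decade; the third cell is ocean.
lats = [0.0, 30.0, 45.0, 60.0]
tlt = [0.20, 0.18, 0.40, 0.10]
sfc = [0.20, 0.20, 0.10, 0.25]
land = [True, True, False, True]

print(amplification_factor(tlt, sfc, lats, land))  # land-only
print(amplification_factor(tlt, sfc, lats))        # ocean cell included
```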

Thanks Steven, that was perfectly clear and very helpful.

I said down scaling was.. “more applicable over ocean (high surface level humidity) than over land, and more applicable to the mid troposphere than the lower troposphere.”

It seems Gavin and I agreed on something! That is at least a 3 sigma event.
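Gavin's description of the moist-adiabat mechanism can be reproduced with a textbook parcel calculation. This is an idealized sketch under stated assumptions: a saturated parcel lifted from 1000 hPa, the standard saturated-adiabatic lapse-rate formula, the Bolton (1980) saturation vapour pressure approximation, a constant latent heat, and crude 10 m Euler steps. The function names and constants are this sketch's own choices. Because a warmer saturated parcel releases more latent heat as it rises, it cools more slowly, so a 1 K surface warming becomes more than 1 K aloft. The calculation assumes saturated, convecting air, which is why its applicability over comparatively dry land is disputed in this thread.

```python
import math

G = 9.81        # gravitational acceleration, m s^-2
CP_D = 1004.0   # specific heat of dry air, J kg^-1 K^-1
R_D = 287.0     # gas constant for dry air, J kg^-1 K^-1
R_V = 461.5     # gas constant for water vapour, J kg^-1 K^-1
L_V = 2.5e6     # latent heat of vaporization, J kg^-1 (held constant here)
EPS = R_D / R_V


def saturation_vapour_pressure(t_k):
    """Bolton (1980) approximation over liquid water, in hPa."""
    t_c = t_k - 273.15
    return 6.112 * math.exp(17.67 * t_c / (t_c + 243.5))


def moist_lapse_rate(t_k, p_hpa):
    """Saturated adiabatic lapse rate in K per metre."""
    e_s = saturation_vapour_pressure(t_k)
    r_s = EPS * e_s / (p_hpa - e_s)  # saturation mixing ratio
    num = G * (1.0 + L_V * r_s / (R_D * t_k))
    den = CP_D + L_V ** 2 * r_s / (R_V * t_k ** 2)
    return num / den


def temperature_at_height(t_surface, z_top, p_surface=1000.0, dz=10.0):
    """Follow a saturated parcel from the surface up to z_top metres."""
    t, p, z = t_surface, p_surface, 0.0
    while z < z_top:
        gamma = moist_lapse_rate(t, p)
        p -= p * G / (R_D * t) * dz  # hydrostatic balance: dp/dz = -p g / (R_d T)
        t -= gamma * dz
        z += dz
    return t


# Warming at 8 km produced by a 1 K surface warming from 300 K.
amplification = temperature_at_height(301.0, 8000.0) - temperature_at_height(300.0, 8000.0)
print(round(amplification, 2))
```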

Robert,

Non-detection of UHI is NOT a sign of an accurate result. There isn’t much to debate IMHO. They didn’t detect it for the same reasons that others haven’t – bad methods. When a kid can drive a thermometer through the center of a city and find a 2C hump (old science project at WUWT), we know the effect happens because the city wasn’t there two hundred years ago. It is also visible in steps in the temp data when you plot it yourself. I’m very very skeptical about the UHI result.

Steve’s critiques about how steps are detected and corrected are also pertinent and represent a potential bias far greater than the one Roman’s station-combination methods fix.

It’s all just fun though. How well can we mash together the data? What is the right method?

I just looked at the new NOAA version of GHCN. My recollection was that NOAA used to be fairly close to CRU. However, NOAA has switched to sliced segments and its trend is now exactly the same as BEST’s. See ftp://ftp.ncdc.noaa.gov/pub/data/ghcn/blended/ghcnm-v3.pdf for information on the changeover to Mennian methods.

I can’t locate any backhistory of past NOAA versions. If anyone can locate them or has back copies, I’d appreciate it.

One of these days one of these groups is going to make a modification that actually cools the results, and I will faint.

As an illustration of Steve’s point about UHI, I posted this at Judith’s about a week ago as a highly simplified explanation:

Let’s say the year is 1950 and we are going to put a thermometer in a growing city. But the city is already there and already has a very high built density. So, let’s say that the city already has 1C of UHI effect. Over the next 60 years the city continues to grow, mostly around the perimeter. The UHI effect goes up, and by 2010 there is 1.5C of UHI effect. The thermometer was only there since 1950, so the thermometer will only see the delta UHI change from 1950 to 2010 as an anomaly. So, by that thermometer, the delta UHI effect for that period is .5C.

Now, in the same year, 1950, we put another thermometer into a medium size town. Let’s say that it has a UHI effect of .1C at the time we put the thermometer there. The town grows over the next 60 years, there is a lot of building that happens close to the thermometer, and by 2010 it has .6C of UHI effect. Again, the thermometer will not register that first .1C as anomaly. But it will register the next .5C as anomaly.

So, in 2010, what we end up with is that the urban thermometer has 1.5C total of UHI effect, and the rural thermometer has .6C of total UHI effect. But, the delta UHI for both thermometers since they were installed is .5C. It is that .5C that both of them will show as anomaly.

Now BEST comes along and decides that they will measure UHI by subtracting rural anomaly from urban anomaly. Let’s also say that there has been .3C of real warming over those 60 years. So the rural thermometer shows .8C of warming anomaly and the urban thermometer shows .8C of warming anomaly. BEST subtracts rural from urban and gets zero. Their conclusion is, “either there is no UHI or it doesn’t affect the trend”. But, as we have just seen, .5C of the .8C in the trend of both the urban station and the rural station was UHI.
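The thought experiment above reduces to a few lines of arithmetic. This sketch encodes the commenter's hypothetical numbers (it is an illustration of the scenario, not BEST's actual procedure, and the `Station` type is introduced here only for the example): each thermometer records real warming plus the UHI growth since it was installed, so differencing urban and rural anomalies cancels equal UHI growth.

```python
from dataclasses import dataclass


@dataclass
class Station:
    name: str
    uhi_at_install: float  # °C of UHI already present when the thermometer was installed
    uhi_at_end: float      # °C of UHI at the end of the record


def recorded_anomaly(station, real_warming):
    """Warming the thermometer registers: real warming plus UHI growth since installation."""
    return real_warming + (station.uhi_at_end - station.uhi_at_install)


city = Station("city", uhi_at_install=1.0, uhi_at_end=1.5)
town = Station("town", uhi_at_install=0.1, uhi_at_end=0.6)
real_warming = 0.3

urban_minus_rural = recorded_anomaly(city, real_warming) - recorded_anomaly(town, real_warming)
uhi_share = recorded_anomaly(city, real_warming) - real_warming
print(f"urban - rural: {urban_minus_rural:.2f} °C, UHI in each record: {uhi_share:.2f} °C")
```

The difference is zero even though 0.5°C of each station's 0.8°C anomaly is UHI, which is the scenario's point.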

With their results, BEST has failed to discover the pre thermometer urban UHI effect, the post thermometer urban UHI effect, the pre thermometer rural UHI effect, and the post thermometer rural UHI effect. They have also failed to discover the UHI addition to the trend in either place. In other words, their test is a total fail. Even if they did their math perfectly, ran their programs perfectly, and did their classification perfectly, their answer is still completely wrong. Why? Because the design of the test never made it possible to quantify UHI. Now, many of you may object to my scenario.

Some of you may wonder if it is reasonable to expect a small town to grow at a rate that pushes up the delta UHI as fast as a city. This is where the definitions of rural and urban come in. MODIS defines an urban area as an area that is greater than 50% built, and there must be more than 1 square kilometer of such area, contiguous. So, for example, if you have two .75 square kilometer areas that are 60% built, separated by one square kilometer that is 40% built, it’s all rural. So the urban standard is high enough that an area must be strongly urban to qualify. The rural standard is anything that is not urban, and that allows for a whole lot of building. 10 square kilometers that are 49% built are all classified as rural.

BEST then goes on and further refines the rural standard into “very rural” and “not very rural”. Unfortunately, they set no new build requirements for “very rural”. The only new requirement is that such an area be at least 10 kilometers from an area classified as urban. But a “very rural” place could still be up to 49% built.

This means that you can have towns, small cities, and even some suburbs that are classified as rural. In such areas there is still plenty of room to build, and to build close to the thermometer. In the urban areas, there is little room to build. So either structures are torn down in the city to make room for new structures, or structures are put up at the edge of the city, expanding it. The new structures being put up at the edge of the city are far from the thermometer, and while they still affect it, the further away they are, the less effect they have.
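The classification rules as the commenter describes them can be written down as a simplified, one-dimensional sketch. It is not MODIS's or BEST's actual code: cells are laid out in a row with adjacent cells touching, and the function names are hypothetical. It reproduces the commenter's examples, including a 49%-built area that ends up “very rural”.

```python
def is_urban(cells, built_threshold=0.5, min_area_km2=1.0):
    """cells: list of (area_km2, built_fraction) in a row, neighbours touching.

    Urban if some contiguous run of cells, each more than built_threshold built,
    covers more than min_area_km2 (the rule as described above).
    """
    run = 0.0
    for area, built in cells:
        if built > built_threshold:
            run += area
            if run > min_area_km2:
                return True
        else:
            run = 0.0  # a less-built cell breaks contiguity
    return False


def rural_class(urban, km_to_nearest_urban):
    """'Very rural' only requires being at least 10 km from an urban area."""
    if urban:
        return "urban"
    if km_to_nearest_urban >= 10.0:
        return "very rural"
    return "not very rural"


# Two 0.75 km² patches at 60% built separated by 1 km² at 40%: rural.
print(is_urban([(0.75, 0.6), (1.0, 0.4), (0.75, 0.6)]))
# 10 km² at 49% built, 12 km from the nearest urban area: "very rural".
print(rural_class(is_urban([(10.0, 0.49)]), 12.0))
```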