CA

CA reader Gary Wescom writes about more data quality problems with the Berkeley temperature study – see here.

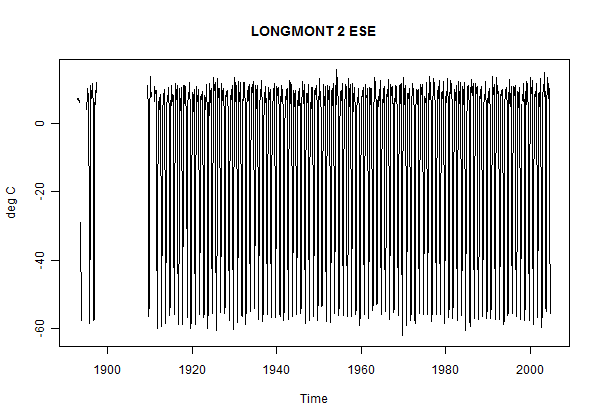

In a surprising number of records, the “seasonally adjusted” station data in the Berkeley archive contains wildly incorrect data. Gary shows a number of cases, one of which, Longmont 2ESE, outside the nest of climate scientists in Boulder CO, is said to have temperatures below minus 50 deg C in the late fall as shown below:

Figure 1. Longmont 2 ESE plotted from BEST Station Data.

This is not an isolated incident. Gary reports:

Of the 39028 sites listed in the data.txt file, arbitrarily counting only sites with 60 months of data or more, 34 had temperature blips of greater than +/- 50 degrees C, 215 greater than +/- 40 C, 592 greater than +/- 30 C, and 1404 greater than +/- 20 C. That is quite a large number of faulty temperature records, considering that this kind of error is something that is so easy to check for. A couple hours work is all it took to find these numbers.

In the engineering world, this kind of error is not acceptable. It is an indication of poor quality control. Statistical algorithms were run on the data without subsequent checks on the results. Coding errors obviously existed that would have been caught with just a cursory examination of a few site temperature plots. That the BEST team felt the quality of their work, though preliminary, was adequate for public display is disconcerting.
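As a rough illustration of how cheap this kind of check is, here is a minimal Python sketch. It is not Gary's actual procedure. It assumes the station values have already been parsed into a mapping from station ID to a list of monthly values (the real layout of data.txt is not reproduced here), it assumes the counts are cumulative (a station with a 55 C blip also counts as exceeding 40, 30 and 20), and the function name and sample data are hypothetical.

```python
from typing import Dict, List

THRESHOLDS = (50.0, 40.0, 30.0, 20.0)  # degrees C, the cut-offs quoted above
MIN_MONTHS = 60  # only count sites with at least this many monthly values


def count_blips(stations: Dict[int, List[float]]) -> Dict[float, int]:
    """Count stations whose largest absolute value exceeds each threshold."""
    counts = {t: 0 for t in THRESHOLDS}
    for values in stations.values():
        if len(values) < MIN_MONTHS:
            continue  # too short a record to be counted
        worst = max(abs(v) for v in values)
        for t in THRESHOLDS:
            if worst > t:
                counts[t] += 1
    return counts


# Hypothetical data: one clean station, one with a -55 C blip, one too short.
example = {
    1: [0.5, -0.3] * 30,
    2: [0.2] * 59 + [-55.0],
    3: [-60.0] * 10,
}
print(count_blips(example))
```

Only station 2 is flagged in this toy example: station 1 never strays far from zero and station 3 has fewer than 60 months.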

Gary also observed a strange ringing problem in the data.

I observed earlier that I had been unable to replicate the implied calculation of monthly anomalies that occurred somewhere in the BEST algorithm in several stations that I looked at (with less exotic results.) It seems likely that there is some sort of error in the BEST algorithm for calculating monthly anomalies as the problems are always in the same month. When I looked at this previously, I couldn’t see where the problem occurred. (There isn’t any master script or road map to the code and I wasn’t sufficiently interested in the issue to try to figure out where their problem occurred. That should be their responsibility.)
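For reference, the textbook way of forming monthly anomalies is to subtract each calendar month's long-term mean from the values for that month. The sketch below shows only that conventional calculation, under the assumption of a simple list of (year, month, temperature) tuples; it is not the BEST algorithm, whose details I could not trace, and the function name is hypothetical.

```python
from collections import defaultdict
from statistics import mean


def monthly_anomalies(series):
    """series: list of (year, month, temp_c). Returns (year, month, anomaly) tuples."""
    by_month = defaultdict(list)
    for _, month, temp in series:
        by_month[month].append(temp)
    # One "normal" per calendar month: the mean of all values for that month.
    normals = {m: mean(v) for m, v in by_month.items()}
    return [(y, m, t - normals[m]) for y, m, t in series]


# Hypothetical two-year record with only January and July values.
sample = [(2000, 1, -5.0), (2000, 7, 20.0), (2001, 1, -3.0), (2001, 7, 22.0)]
for row in monthly_anomalies(sample):
    print(row)
```

A fault in the normal for a single calendar month would show up as a recurring blip in that same month every year, which is the pattern seen here.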

Locations

Even though GHCN data is the common building block of all the major temperature indices, its location information is inaccurate. Peter O’Neill has spot checked a number of stations, locating numerous stations which are nowhere near their GHCN locations. Peter has notified GHCN of many of these errors. However, with the stubbornness that is all too typical of the climate “community”, GHCN’s most recent edition (Aug 2011) perpetuated the location errors (see Peter’s account here.)

Unfortunately, BEST has directly used GHCN location data, apparently without any due diligence of their own on these locations, though this has been a known problem area. In a number of cases, the incorrect locations will be classified as “very rural” under MODIS. For example, the incorrect locations of Cherbourg stations in the English Channel or Limassol in the Mediterranean will obviously not be classified as urban. In a number of cases that I looked at, BEST had duplicate versions of stations with incorrect GHCN locations. In cases where the incorrect location was classified differently than the correct location, essentially the same data would be classified as both rural and urban.

I haven’t parsed the BEST station details, but did look up some of the erroneous locations already noted by Peter and report on the first few that I looked at.

Peter observed that Kzyl-Orda, Kazakhstan has a GHCN location of 49.82N 65.50E, which is over 5 degrees away from its true location near 44.71N 65.69E. BEST station 148338 Kzyl-Orda is also at GHCN 49.82N 65.50E. Other versions (124613 and 146861) are at 44.8233N 65.530E and 44.8000N 65.500E.

Peter observed that Isola Gorgona, Italy has a GHCN location of 42.40N 9.90E, more than one degree away from its true location of 43.43N, 9.910E. BEST station 148309 (ISOLA GORGONA) has the incorrect GHCN location of 42.4N 9.9E.
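To put these offsets in kilometres, the coordinates quoted above can be run through the standard haversine formula. This is a minimal sketch assuming a spherical Earth with a mean radius of 6371 km; the function name is hypothetical.

```python
import math


def great_circle_km(lat1, lon1, lat2, lon2, radius_km=6371.0):
    """Haversine distance in km between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * radius_km * math.asin(math.sqrt(a))


# GHCN location vs. true location, using the coordinates quoted in the text.
kzyl_orda = great_circle_km(49.82, 65.50, 44.71, 65.69)
gorgona = great_circle_km(42.40, 9.90, 43.43, 9.91)
print(f"Kzyl-Orda offset:     {kzyl_orda:.0f} km")
print(f"Isola Gorgona offset: {gorgona:.0f} km")
```

The Kzyl-Orda offset comes out at well over 500 km and the Isola Gorgona offset at over 100 km, far more than enough to move a station across an urban/rural boundary.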

The same sort of errors can be observed in virtually all the stations in Peter’s listing.

I realize that the climate community is pretty stubborn about this sort of thing. (Early CA readers recall that the “rain in Maine falls mainly in the Seine” – an error stubbornly repeated in Mann et al 2007.) While BEST should have been alert to this sort of known problem, it’s hardly unreasonable for them to presume that GHCN had done some sort of quality control on station locations during the past 20 years, but this in fact was presuming too much.

These errors will affect the BEST urbanization paper (the amount of the effect is not known at present.)

46 Comments

Haven’t these people ever heard of Six Sigma?

Steve, thank you for exposing the deficiencies in the BEST paper.

It could be clever of Muller to put data out there and to treat all criticism as in effect peer review, on a global scale. This circumvents the problem of journal editors steering manuscripts to a few favorite members of the Team. Muller could harness the thoughts of critics to help him improve the study, if and provided this is what he is aiming to do.

It is a pity that the report wasn’t peer reviewed before publication because it would have been interesting to see if any of the various observations and criticisms would have been revealed, had the paper not been rejected, that is.

It’s a rather basic approach to quality check your data before you put it through any calculations, but it does seem to be something not done in the world of climate science.

Perhaps what we are seeing is that, in the rush to get these papers out, they simply did not use any mark one eyeballs on the data but instead relied on statistical techniques to cover for them. The trouble with that approach is that you simply have no idea how big the errors are or in what direction, so how can you realistically know that whatever approach you used really does allow for the errors? The word you are looking for is ‘sloppy’, and it does not give these papers the feeling of quality in any way.

We have seen these kinds of problems for a number of years. Noting such problems prompted Anthony Watts to begin his station quality review several years ago. Nice job Anthony Watts and contributors! Why should the general public believe there is any rigorous, reliable temperature station quality management? Moreover, why should the general public believe there is such a satisfactory level of certainty in any of this data or in its analysis to justify anything more than further research and efforts at quality control?

A demonstration of poor quality control is far more significant than the degree to which any particular error may affect results. Especially when the poor quality control is a hallmark of a group which routinely argues by appealing to authority.

If this is the BEST, I’d hate to see the worst.

The problem has always been, and will continue to be, that attention to the data is non-sexy. Climate scientists who use the data want to spend their time making predictions of how we will be roasted in a hundred years time. This is high reward, low risk work. They will be dead before they are proved wrong, in the meantime they can make a good career out of being Jeremiahs. Even the ones who are proved wrong, time and again, somehow still seem to keep on sucking on the public teat. Witness Paul Ehrlich.

Nest or den of climate scientists? A phrase comes to mind…

The need for a well documented comprehensive data repository remains unmet.

It’s a huge task. My hope was that BEST would do this.

1. Build a new database

2. Build a new method and test it on synthetic data

3. Do a comprehensive comparison between the new method and the old methods.

Err, that would be engineering. We would not even attempt to throw a method at UHI or AMO without getting the basics done first.

Given that BEST supposedly had access to Anthony’s surfacestations metadata they should have been able to identify location errors for at least a large number of them. Also it is extremely frustrating to think that they seem to be blissfully ignoring one of the major criticisms of the temperature studies, to wit: DATA QUALITY. Not checking for outliers?! They should (but won’t) be embarrassed by this. They can wave their hands and say it doesn’t matter but they’ve made all the work they’ve done suspect.

The USHCN locations are good to less than 100 meters accuracy. It’s the ROW where there is an issue.

The WMO has just completed a project to have every WMO station updated with coordinates down to the second.

Some countries have no incentive to respond and there is every incentive to respond inaccurately in some instances. For example, let’s take airports. Since 9/11 the FAA has removed its former database of airfield and runway locations. Basically, if you want to drop a GPS guided munition on a runway, most countries don’t want to make that job any easier. I imagine Camp David is similarly reluctant to provide coordinates.

Canadian stations have their correct lat/longs listed on Environment Canada’s website. It would be interesting to check and see the difference between GHCN locations and CHCN ones that are the same station.

Yeah, you’re right. If I had to drop a GPS guided munition I’d have to get online and check Google maps or any number of other publicly-available sources to find the exact building or runway I want to hit. This would be way more difficult than, say, getting online and finding the coordinates for a weather station that happens to be off to the side of some runway, when I probably don’t want to waste munitions taking out a Stevenson screen anyway.

Welcome to the satellite era. It is just silly to think that withholding coordinates of weather stations makes any sense anymore.

President’s bungalow at Camp David

Lat: 39.6471400 Lon: -77.4625500

Here is the satellite view

http://virtualglobetrotting.com/map/presidents-bungalow-at-camp-david/view/?service=1

Only 7 significant digits in the location. This must be evidence of the reluctance

Actually Tom if I was in security I would take your action here as a threat.

The point is this. They know you can get it if you want. They will not supply it.

So people who want to get it, have to do it themselves and leave a trail.

Hmm, speaking of physicists, maybe the Gran Sasso lab in Italy has the same location problem as Isola Gorgona? Maybe that explains the faster than light speed neutrino measurement?

Hehe

Good call!

If I had ever come across a first year trainee accountant using data in this way, I would have taken him aside and suggested that he seek employment elsewhere, perhaps in public relations, house painting or some such.

I have read of many shortcomings in the field of climate science – poor sample selection, inappropriate statistics and so forth.

But this really takes the prize.

I think house painters need to be more accurate than this. Imagine if they painted a house using the wrong lat-long…wrong house!

“It was the best of times, it was the worst of times, it was the age of wisdom, it was the age of foolishness, it was the epoch of belief, it was the epoch of incredulity, it was the season of Light, it was the season of Darkness, it was the spring of hope, it was the winter of despair, we had everything before us, we had nothing before us, we were all going direct to heaven, we were all going direct the other way – in short, the period was so far like the present period, that some of its noisiest authorities insisted on its being received, for good or for evil, in the superlative degree of comparison only.”

Charles Dickens, A Tale of Two Cities

Hmm, I am getting the impression that BEST is all sizzle and no steak. And a case could be made that the media (which ate this up) are really only about sizzle anyway.

sssssssizzzzle is sssssssexy!

Dear Steve, I am not getting this criticism. BEST is obviously not the primary source of the raw data, is it? Such problems in the raw data are well-known, have obvious reasons, and whoever wants to constructively deal with them must choose a strategy for what to do. And so did BEST, which outlined their own strategy for dealing with these problems that sometimes inevitably occur. Even if the errors can’t be uniformly caught – of course some of them won’t be – one may still get a high enough certainty that they don’t make a big impact, bigger than XY.

It doesn’t seem that you have really addressed their attitude to those errors in the data. By the way, I disagree with the proposition that the data may be safely eliminated as errors if they deviate by more than 6 sigma etc. because the distribution isn’t normal. It’s decreasing much more slowly for large temperature anomalies so 6-sigma deviations may be common, genuine signs of “extreme” temperatures, and eliminating them would bring huge errors exactly because the deviations are large.

All the best

Lubos

Lubos, two slightly different responses:

– it looks to me like there’s something wrong with BEST’s calculation of monthly normals for seasonal adjustment. I don’t believe that Longmont has a whole bunch of errors in its October data; something else is going on with their algorithm. Yes, the big anomalies will get washed out in a subsequent patch in their algorithm, but, in their shoes, I’d want to know the precise provenance of the problematic numbers and ensure that none of them were “hospital-induced”.

On the incorrect locations, this might “matter”. This data is used to compare rural and urban results. Incorrect locations may well cause urban sites to be incorrectly classified as rural or very rural, damaging their attempt at stratification. I didn’t blame BEST for relying on GHCN data, but GHCN negligence in ensuring correct location information is a longstanding issue.

Thanks for your answer, Steve!

There will probably always be errors in and uncertainties about the past data – and the location of stations and so on. For this reason, the past reconstructions won’t ever be completely accurate or reliable.

Relying on someone else means that they copy the errors; but starting from scratch risks new errors because the new methods weren’t previously tested, and so on. There’s no magic cure, I guess… Still, some methods to deal with the problems are probably better than others. Sometimes I would be happier to see a more constructive tone. What would your reconstruction from the thermometers look like, and how would it differ?

Lubos Motl

Posted Nov 7, 2011 at 5:00 PM

Fallacy of the excluded middle. The choices are not just “copy the errors” or “start from scratch” as you state.

There is also “look at the frickin’ data before and after each step to find and fix any errors”, as well as “take a look at Climate Audit and get a head start on the known errors”, among others. Not a magic cure, just the magic of hard work and paying attention to the details …

w.

Hi Lubos

On a monthly averaged basis, I am not so sure that you should get swings of more than 20 C very often – I presume that we are talking about an average or maybe mid-range average here. I know of places where the tmin and tmax can regularly oscillate by 30 C or more in a month – but the average should not be so affected. This seems to suggest a problem with the data.

Dear Steve

Sorry to trouble you with this. Dr Curry makes the following statement on her blog entitled “Pause”

“IMO, the significance of the BEST data in terms of the temperature record of the past 50 years or so is that it puts to rest the concern that Phil Jones and Jim Hansen have “cooked” the land surface temperature data. This has not been a serious concern among the people paying close attention to this issue and who actually read the journal publications and look at the actual data; but it is a concern in certain circles, and in the U.S. this concern has been raised by at least one Republican presidential candidate. The relatively small discrepancies between the BEST and the GISS and CRU data sets are of some interest; the apparent discrepancy with GISS has been resolved. Note: the CRU data set shows less warming than BEST over the past 15 years.”

My question to you is have BEST used the original temperature records lost by CRU?

Please delete if this is a stupid question and/or off topic.

Regards

Don’t know. Here’s what CRU did (I’m pretty sure.) I traced this in some Kenya data where John Christy collated original data (I don’t remember whether I posted on this.) CRU spliced WWR records from a couple of different local stations to make one “station”.

BEST has a very large file of underlying data. It’s too large for my computer and my computer is too slow to handle. With some work, I could probably work around it. It would be better (for me at least) if they organized their source data in smaller subsets so that I could spot check without having to parse the entire dataset.

Maybe it is time you installed a “data server” on your computer. A development version of Interbase is available from Embarcadero, and ODBC drivers are available — which should tie into R. I have not tested yet — but plan to do so shortly. I use Interbase for doing the Wind Energy Stats which involves millions of records — processing time for most queries is a few seconds — even when analyzing several years of data. This assumes that when you load the data you take steps to “normalize” it reasonably well.

I know other people have their favorite servers, but that one works on Windows and Linux as well as other platforms…

To do initial quality checking and fix general errors I write a program in Delphi which does the upload rather than try to fix the data after the fact. Bad records are flagged and moved to a “bad” table, or repaired if the error can be determined and a fix is accepted practice (whatever that means to you). All-in-all it is not a difficult project, just tedious.
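The check-on-load idea can be sketched as follows. This is only an illustration, and it swaps in Python with the standard-library sqlite3 module in place of Delphi and Interbase; the table layout, the function name and the plausibility bounds of -90 C to 60 C are all assumptions made for the example.

```python
import sqlite3


def load_records(conn, rows, lo=-90.0, hi=60.0):
    """Insert (station, month, raw_value) rows, routing suspect ones to a 'bad' table."""
    conn.execute("CREATE TABLE IF NOT EXISTS good (station INTEGER, month TEXT, temp_c REAL)")
    conn.execute("CREATE TABLE IF NOT EXISTS bad (station INTEGER, month TEXT, raw TEXT, reason TEXT)")
    for station, month, raw in rows:
        try:
            temp = float(raw)
        except ValueError:
            conn.execute("INSERT INTO bad VALUES (?, ?, ?, ?)", (station, month, raw, "not a number"))
            continue
        if not lo <= temp <= hi:
            # Outside the assumed plausible range: flag it, do not silently load it.
            conn.execute("INSERT INTO bad VALUES (?, ?, ?, ?)", (station, month, raw, "out of range"))
            continue
        conn.execute("INSERT INTO good VALUES (?, ?, ?)", (station, month, temp))
    conn.commit()


# Hypothetical input: one plausible value, one sentinel-like value, one unparseable value.
conn = sqlite3.connect(":memory:")
load_records(conn, [(1, "2011-10", "12.3"), (1, "2011-11", "999.9"), (2, "2011-10", "n/a")])
good_count = conn.execute("SELECT COUNT(*) FROM good").fetchone()[0]
bad_count = conn.execute("SELECT COUNT(*) FROM bad").fetchone()[0]
print(good_count, bad_count)
```

Keeping the rejected rows, with a reason attached, means the decision to repair or discard them can be reviewed later rather than being buried in the load step.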

Very quick estimate of how significant these large errors are.

Approx 850 stations +/- 30 C errors. That’s a bit over 2% of the total number of stations. So that kind of figure weighted for the number of stations affected:

+/- 0.6 deg C for the ensemble.

NOW IF those errors are zero mean and normally distributed we can probably divide by sqrt(850) and get a statistical error of +/- 0.02 C.

But that’s a big if.
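The arithmetic behind that back-of-envelope estimate can be written out as below. The sketch only reproduces the calculation as described, using the station count of 39028 from the post; it does not test the zero-mean, normally distributed assumption, and the variable names are hypothetical.

```python
import math

n_stations = 39028  # sites listed in data.txt, per the post
n_bad = 850         # approximate number of stations with +/- 30 C errors
error_c = 30.0      # size of the errors, degrees C

fraction = n_bad / n_stations          # share of stations affected
weighted = fraction * error_c          # error spread over the whole ensemble
reduced = weighted / math.sqrt(n_bad)  # if errors are zero mean and independent

print(f"fraction affected: {fraction:.1%}")
print(f"ensemble error:    +/- {weighted:.2f} C")
print(f"after averaging:   +/- {reduced:.3f} C")
```

Unrounded, the figures come out slightly above the rounded 0.6 C and 0.02 C quoted, which does not change the point.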

It does seem to clearly indicate that a lot of this has been rushed out without being checked in any real way.

Let’s be generous and accept Dr. Muller’s claim that the release date was to get inside the IPCC deadline.

Now they need to do the due diligence bit BEFORE this goes to print and hope that they don’t have too much egg on their faces.

They needn’t have worried about any deadline unless the trend was less than current estimates. Any paper that shows things are worse than expected will be shoehorned into the IPCC reports somehow. Muller must have known that, so the real reason for the haste was likely just his own desire to get his name into the public eye as the new sage of temperature-wrought doom.

Of course we all know that even wildly inaccurate data can still have errors averaged out to get an accurate overall number as long as there is enough original data and no inbuilt bias. But….you still have to prove that lack of bias. The best, perhaps only, way to prove it is by matching your recon with the satellite data…as previously mentioned by Steve McI here. Since BEST did not do this necessary check, their reconstruction does not meet the minimum standard of validation. Such trivia will not, of course, bother the IPCC doom-mongers.

Gary’s paper mentions the harmonics in the filter. This is something I’d already seen looking at the FFT of the whole dataset.

http://tinypic.com/r/254ve3a/

3m, 4m, 6m and 12m show quite significant peaks in the freq spectrum.

Note frequency scale is per century (200 means 6 months)

HadCRUT3, by comparison, only shows the smallest of glitches at 12m and something barely distinguishable from noise at 6m.
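For anyone reading the plot, the conversion between the per-century frequency scale and a period in months is just a division into 1200 months per century. A minimal sketch, with hypothetical function names:

```python
MONTHS_PER_CENTURY = 1200


def period_months(cycles_per_century: float) -> float:
    """Period in months for a frequency given in cycles per century."""
    return MONTHS_PER_CENTURY / cycles_per_century


def cycles_per_century(period_in_months: float) -> float:
    """Frequency in cycles per century for a period given in months."""
    return MONTHS_PER_CENTURY / period_in_months


# Where the 3, 4, 6 and 12 month peaks fall on the per-century axis.
for months in (3, 4, 6, 12):
    print(f"{months:>2} months -> {cycles_per_century(months):.0f} cycles per century")
```

So the 12, 6, 4 and 3 month peaks sit at 100, 200, 300 and 400 on that axis, that is, the annual cycle and its harmonics.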

So far Best, isn’t.

Strange, that image seems to have gone.

http://tinypic.com/view.php?pic=254ve3a&s=5

3m, 4m, 6m and 12m show quite significant peaks in the freq spectrum.

“outside the nest of climate scientists in Boulder CO”

I imagined a high aerie in the crisp mountain air framed in a steel blue sky where young fledgelings stood poised to fly out into the real world far from their gentle caring mother.

The fact that BEST has:

A. Come up with a negative UHI effect.

B. Published global results that only had Antarctic data at the end.

C. Published a 39,000 station reconstruction with a completely different trend in the last decade than another 2000 station reconstruction that they also published.

D. Shows the wild adjustment errors that Gary has found.

tells me that there is no sanity checking of any kind going on with any of their work.

Considering that GHCN at one time had mislocated the Oran, Algeria record in northern Argentina, the St. Paul, Alaska (Pribilof Islands) record in Saskatchewan, Canada, etc. etc., the mislocations revealed here are insignificant by comparison. The shoddiness of GHCN archiving and prevalence of urban stations in ROW makes multi-faceted vetting of EVERY record used in climatic analyses an absolute necessity. But such due diligence doesn’t generate the headlines that accompany all-too-facile conclusions about “global” trends.

Has anyone unzipped flags.txt and sources.txt in the BEST text files compilation, PreliminaryTextDataset.zip? The commentary which I have read so far seems to be based on examination of the site metadata in site_detail.txt, and site data values in data.txt, but not the further flags and sources for the individual station data records.

I ask this because I have encountered CRC failures for these two files when I extracted them using 7-Zip, and while the extracted file lengths (flags.txt: 662,530,238 and sources.txt: 3,338,241,649) match those shown by 7-Zip, the last data record in flags.txt is for Station ID 112552, and in sources.txt for 129842, both well short of the total number of station records, thus suggesting that the files should be larger still. All other files extract without error and with all station records present.

I have downloaded the zip file a second time, with the same CRC failures showing, and extracted the files in R, which does not indicate an error, but extracts files which have the same lengths as those extracted by 7-Zip. Can anyone confirm that they have extracted these two files successfully? It would help to know whether I am wasting my time if I try downloading again.

Steve: I tried to read them into R but my computer wasn’t big enough.

Yep, I have the same problem with them using WinZip.

Re: GaryW (Nov 11 10:11),

Solution: use WinRAR. WinRAR still shows the same incorrect file lengths, but extracts the full files. I presume what is happening is that both 7-Zip and WinRAR use a 32-bit integer when displaying the file length, and of course this is too small to hold a value this big. WinRAR however seems to use 64-bit integers when they are really needed, testing and extraction, but 7-Zip (or, at least, the version which I have installed – I need to check whether there has been an update which corrects this) uses 32-bit integers when testing or extracting, and gives CRC failures and truncated files for this reason. The same presumably applies to WinZip.
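The presumed wraparound is easy to illustrate. In the sketch below, the displayed length is the true length modulo 2^32, as it would be if an unsigned 32-bit field were used. This only illustrates the presumption above; I have not confirmed how either tool stores lengths, the true file sizes are not known here, and the function names are hypothetical.

```python
UINT32_MODULUS = 2 ** 32  # number of distinct values in an unsigned 32-bit field


def displayed_length(true_length: int) -> int:
    """Length as it would appear if truncated to an unsigned 32-bit field."""
    return true_length % UINT32_MODULUS


def candidate_true_lengths(displayed: int, max_wraps: int = 3):
    """True lengths that would all display as `displayed` after 1..max_wraps wraps."""
    return [displayed + k * UINT32_MODULUS for k in range(1, max_wraps + 1)]


# Displayed lengths quoted earlier in this thread.
for name, shown in (("flags.txt", 662_530_238), ("sources.txt", 3_338_241_649)):
    print(name, candidate_true_lengths(shown))
```

Note that the sources.txt figure of 3,338,241,649 is larger than 2,147,483,647, the largest signed 32-bit value, so any 32-bit field involved would have to be unsigned.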

These file sizes seem due to the “convenience” of each row in the flags and sources files having the same number of columns. As any individual data value will only have a limited number of flags and sources associated with it, adding flags and sources as two additional tab delimited entries to each row in data.txt, with the individual flags and sources separated by spaces, should combine data.txt, flags.txt and sources.txt in one file of a more reasonable size, as:

If such a combined file would be of use to others, reply to this comment, and if there are any such replies I’ll make the file available in a dropbox once I have it ready, and post another comment here with instructions.

Re: Peter O’Neill (Nov 11 18:41),

I’ve updated now to 64-bit 7-Zip version 9.20, and the problem is still there. I tried to include an image in the previous comment to show the file sizes displayed by WinRAR, and the actual sizes extracted. I’ll try again here:

and the preview does show it, but in case it does not show when posted, here are the sizes:

Yes, please. A number of people, including me, have been frustrated by this. So it would be of service. You can locate it at climateaudit.info if you like.

Re: Steve McIntyre (Nov 12 00:06),

OK. I have it ready now. The text file is almost 1 GB; zipped, it weighs in at 95 MB. Can you e-mail me an FTP upload link?

Slow burn on this end. Maybe on topic, maybe OFF.

I recently paid $70 to the National Climatic Data Center for a 1.5 MB file, with the 1925 to 2005 ACTUAL temperature data for Chaska MN.

I’m doing some statistical analysis, and using Chaska as the “control point” and “comparison” to the MSP data. (Which I have FOR FREE, courtesy of the local weatherman who went to the microfilms and retrieved the data going back to 1820, Fort Snelling, MN records.)

What is the BURN about? Can I say this without getting profane, demeaning, overly agitated? WHY THE HECK DO I HAVE TO PAY FOR WHAT MY GREAT GRAND PARENTS, GRAND PARENTS, PARENTS and MYSELF HAVE BEEN PAYING FOR ALL ALONG.

The concept that the NCDC needs $ for these files is BOGUS. These SLUGS are getting $2 Billion a year for PRODUCING VIRTUALLY NOTHING OF MERIT for our society.

I’ve figured it would be about $100,000 to get ALL THE DATA. AND, all the data would fit on an 8GB THUMB DRIVE.

I WANT ALL THE DATA AND I WANT IT FOR FREE?

Steve, anyone, CLASS ACTION LAWSUIT FRIENDS?

I don’t want “free” monthly averages. I WANT ALL THE DATA POINTS, period.

ANYONE WANT TO TILT AT SOME WINDMILLS WITH ME?

Max in Minnetonka

Max,

If you would like the data for Chaska, MN, as transcribed from the hand written records collected by the folks who actually read the thermometers, you can get it from this site for free:

http://cdiac.ornl.gov/epubs/ndp/ushcn/ushcn_map_interface.html

Choose your state and site. Select “Get Daily Data” in the pop up box on the map. Click on “Create a download file”. Select the temperature entries on the next page and click submit. That will give a file name to download. It will be in comma separated value (CSV) format.
