As mentioned yesterday, the Law Dome series has been used from time to time in IPCC multiproxy studies, with the most remarkable use occurring, needless to say, in Mann et al 2008. Despite Law Dome being very high resolution (indeed, as far as I know, the highest-resolution ice core available), and despite the DSS core having been completed in 1993 and shallow updates drilled in 1997 and 1999, there still hasn’t been a formal technical publication.

To give a rough idea of Law Dome resolution, its layer thickness between AD1000 and AD1500 averages 0.45 m, compared to 0.034 m at Vostok, 0.07 m at EPICA Dome C, 0.1 m at Siple Dome, 0.028 m at Dunde and 0.18 m at the NGRIP site, which is itself regarded as very high resolution. Law Dome is a very high accumulation, very high resolution site. There were some technical difficulties with the 19th-century section of the original DSS core, and the upper portion of the “stack” presently reported relies on the DSS97 and DSS99 cores, crossdated to the long DSS core.
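To put the numbers above on a common footing, here is a minimal Python sketch that expresses Law Dome’s layer thickness as a multiple of each other site’s, using only the AD1000-1500 averages quoted above. The dictionary and function names are illustrative, not taken from any published code. Thicker annual layers mean that a sample of fixed length spans fewer years.

```python
# Mean annual layer thickness (m) over AD1000-1500, as quoted in the post.
LAYER_THICKNESS_M = {
    "Law Dome": 0.45,
    "Vostok": 0.034,
    "EPICA Dome C": 0.07,
    "Siple Dome": 0.1,
    "Dunde": 0.028,
    "NGRIP": 0.18,
}


def relative_resolution(site: str, reference: str = "Law Dome") -> float:
    """How many times thicker the reference site's annual layers are than site's."""
    return LAYER_THICKNESS_M[reference] / LAYER_THICKNESS_M[site]


for name in LAYER_THICKNESS_M:
    if name != "Law Dome":
        print(f"Law Dome layers are {relative_resolution(name):.1f}x thicker than {name}")
```

Even against NGRIP, the comparison site regarded as very high resolution, Law Dome’s layers come out about two and a half times thicker.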

The original sampling of the DSS core was approximately 10 samples per year back to AD1304, with 0.5 m samples in earlier portions of the core. Going back from 1304 to 1000, the layer thickness (see Morgan and van Ommen 1997 Figure 6) declined from about 0.25 m to 0.18 m. So each 0.5 m sample in the original sampling would span about 2-3 years. That is not the annual resolution they have been working toward, but it is still very high resolution compared to most available proxies.
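The 2-3 year figure is just sample length divided by annual layer thickness. A sketch, assuming each 0.5 m sample sits within a stretch of uniform layer thickness (it ignores diffusion and thinning within a sample); `years_per_sample` is a hypothetical helper name:

```python
def years_per_sample(sample_length_m: float, layer_thickness_m: float) -> float:
    """Years of accumulation contained in one core sample of the given length."""
    if layer_thickness_m <= 0:
        raise ValueError("layer thickness must be positive")
    return sample_length_m / layer_thickness_m


SAMPLE_M = 0.5  # sample length used below AD1304 in the original DSS sampling
for label, thickness in [("near AD1304", 0.25), ("near AD1000", 0.18)]:
    print(f"{label}: {years_per_sample(SAMPLE_M, thickness):.1f} years per sample")
```

This gives 2.0 years per sample near AD1304 and about 2.8 near AD1000, which is where the 2-3 year range comes from.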

Version 1

The seasonally resolved O18 series from 1304-1987 (using only the somewhat problematic DSS core) was published in Morgan and van Ommen 1997 (J Glaciology). The underlying data was interpolated to monthly values; a copy was sent to Phil Jones in 1997 and was used in the SH composite of Jones et al 1998. This data was not archived. I obtained a copy of it from Phil Jones in 2004.

Phil Jones also sent this data to Canadian climate scientist David Fisher, who used it in Fisher (2002), about which more below.
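The articles don’t say how the sub-annual samples were interpolated to monthly values. A minimal sketch of the simplest option is linear interpolation of dated samples onto a monthly grid, assuming each sample has already been assigned a fractional-year age. The function name and the sample values are hypothetical, not real Law Dome data.

```python
from bisect import bisect_right


def interpolate_to_monthly(ages, values, start_year, end_year):
    """Linearly interpolate dated samples onto a monthly grid.

    ages: strictly increasing fractional-year ages, one per sample.
    values: the measured value (e.g. d18O) for each sample.
    Returns a list of (t, value) for t = start_year + m/12, m = 0, 1, ...,
    with value None where t lies outside the sampled age range.
    """
    if len(ages) != len(values) or len(ages) < 2:
        raise ValueError("need at least two samples with matching values")
    if any(b <= a for a, b in zip(ages, ages[1:])):
        raise ValueError("ages must be strictly increasing")
    n_months = round((end_year - start_year) * 12)
    out = []
    for m in range(n_months):
        t = start_year + m / 12
        if t < ages[0] or t > ages[-1]:
            out.append((t, None))  # no extrapolation beyond the samples
            continue
        # Index of the first sample later than t, clamped to the last sample.
        i = min(bisect_right(ages, t), len(ages) - 1)
        frac = (t - ages[i - 1]) / (ages[i] - ages[i - 1])
        out.append((t, values[i - 1] + frac * (values[i] - values[i - 1])))
    return out


# Hypothetical sub-annual samples (not real Law Dome values).
monthly = interpolate_to_monthly([1900.0, 1900.5, 1901.0], [-20.0, -22.0, -20.0], 1900, 1901)
print(monthly[:4])
```

Note that interpolation adds no information: the monthly values are only as good as the roughly 10 samples per year behind them.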

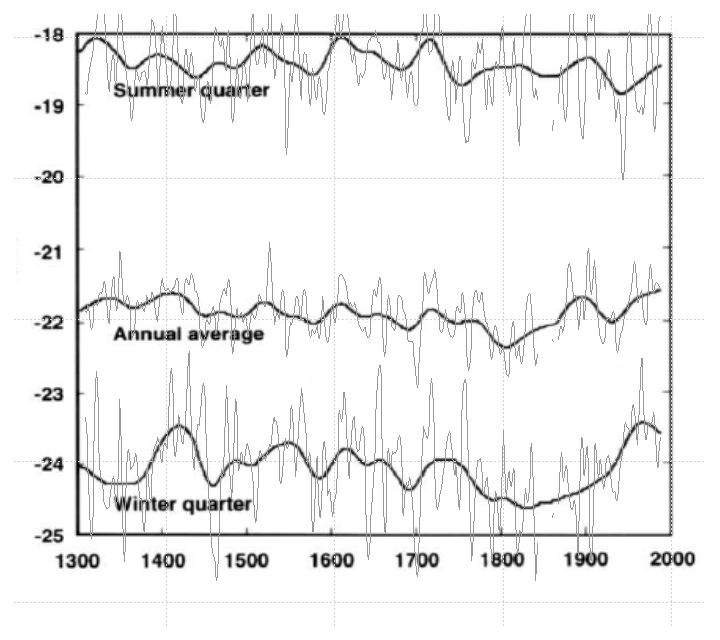

The accompanying graphic in Morgan and van Ommen 1997 showed only a VERY smoothed version of the data. I’ve overlaid this graphic with a less smoothed version of the digital data – this clearly shows that the Jones et al 1998 data is the same data used to produce the graphic in Morgan and van Ommen 1997. The gap in the 1840s is visible in the overprinted data, but has been smoothed over in the graphic from the original article. Notice also that the modern portion of this version is elevated relative to the later version plotted yesterday. (More on this below).

Figure 1. Morgan and van Ommen 1997 Figure 6 with digital data overlaid.
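It’s easy to see how a data gap can vanish from a heavily smoothed curve. A sketch, assuming (the actual filter used in Morgan and van Ommen 1997 isn’t stated) a simple centred moving average that averages whatever values are present in each window; the function name and the short series are made up for illustration:

```python
def nan_tolerant_moving_average(values, window):
    """Centred moving average that skips missing values (None) in each window.

    Any point with at least one valid value inside its window gets a smoothed
    value, so gaps shorter than the window disappear from the smoothed curve.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    out = []
    for i in range(len(values)):
        chunk = [v for v in values[max(0, i - half): i + half + 1] if v is not None]
        out.append(sum(chunk) / len(chunk) if chunk else None)
    return out


raw = [1.0, 2.0, None, None, 3.0, 4.0]  # synthetic series with a two-point gap
smoothed = nan_tolerant_moving_average(raw, 5)
print(smoothed)
```

With a 5-point window, the two missing points are filled from their neighbours; only a gap wider than the window would survive smoothing.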

Version 2

In the early 2000s, van Ommen and associates created a “stack” of shallow cores to overcome the core problems in the upper part of the original DSS core and to update the series (see, for example, Palmer et al 2001 (JGR)). By 2003, they had calculated a 2100-year series extracted at 4-year intervals. (In the upper part, this was composited from sub-annual data; I’m not sure why they wouldn’t have retained the annual information in the archive.) This data was sent to Phil Jones in 2003 and used in Mann and Jones 2003 (not illustrated) and later in Jones and Mann 2004 (where it was shown).
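Extracting a series at 4-year intervals from sub-annual data throws away the within-interval detail. A minimal sketch, assuming simple unweighted averaging into non-overlapping bins aligned to year 0 (the actual compositing method hasn’t been published); `bin_means` and the sample values are hypothetical:

```python
import math
from collections import defaultdict


def bin_means(ages, values, width_years=4, origin=0):
    """Average dated samples into fixed-width, non-overlapping time bins.

    A sample at age t falls in the bin starting at
    origin + width_years * floor((t - origin) / width_years).
    Returns {bin_start_year: mean value}, ordered by bin start.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for t, v in zip(ages, values):
        start = origin + width_years * math.floor((t - origin) / width_years)
        sums[start] += v
        counts[start] += 1
    return {start: sums[start] / counts[start] for start in sorted(sums)}


# Hypothetical sub-annual samples; all within-bin detail is lost in the output.
ages = [1900.1, 1901.6, 1903.9, 1904.2, 1907.0]
d18o = [-20.0, -22.0, -21.0, -19.0, -21.0]
print(bin_means(ages, d18o))
```

Once only the bin means are archived, the annual and seasonal signal cannot be recovered from them.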

I requested this data from van Ommen in late 2003 and followed up in Feb 2004. Van Ommen put me off, saying that the data would not be available until published (mentioning a delay of a “couple of months”). Van Ommen passed my request on to Jones (1076336623.txt), who alerted Mann to this disturbance in the universe, leading to a vituperative recommendation from Mann that I not be given access to the data. I did not get a copy of the data until over two years later, when a further inquiry to van Ommen was finally successful.

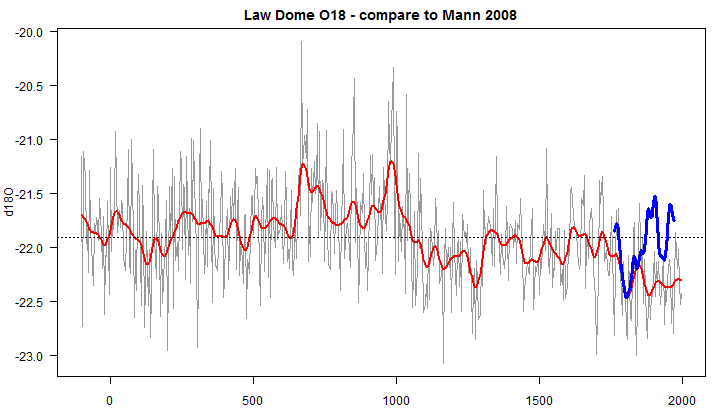

The new version extended the record back to the start of the first millennium, showing very elevated values in the 10th century. The series obviously does not accord with the expected increase of d18O values with 20th century warming. Because O18 series are so fundamental to paleoclimate, this discrepancy needs to be explained. Since the series has not yet been formally published, these differences have not been addressed in the academic literature. (My own take is not that this series is necessarily “right” as a temperature history, but that it is not right to reject it while retaining other ice core O18 series, without an explanation that rises above the uninformative “regional variations” or “regional circulation”, especially when other series possibly subject to “regional circulation” are not rejected if the error goes the “right” way.)

Figure 2. Law Dome O18. red – 2003 version; blue – 1997 version

Mann et al 2008

Fisher 2002 (Holocene) carried out a principal components analysis on a wide variety of proxies for the 210-year period 1761-1970, when all the proxies were available. Fisher created an archive of these proxies for 1761-1970, one of which was Law Dome (LAWA210.ANT). This was obviously only a fraction of the available Law Dome data, but it was the portion used in his analysis.
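Why the archived Law Dome excerpt covers only 1761-1970: a principal components analysis needs a complete matrix, so every proxy is cut to the common period. A minimal sketch of a generic PCA via SVD on standardized series, using synthetic data; this is not a reconstruction of Fisher’s exact procedure, and `common_period_pcs` is a hypothetical name.

```python
import numpy as np


def common_period_pcs(proxies, n_components=2):
    """PCA of proxy series on their common period.

    proxies: 2-D array, shape (n_years, n_proxies), with no missing values,
    i.e. already restricted to the years that every proxy covers.
    Returns (scores, fraction of variance explained) for the leading PCs.
    The sign of each PC is arbitrary, as usual for SVD-based PCA.
    """
    X = np.asarray(proxies, dtype=float)
    # Standardise each proxy so differing units don't dominate the PCs.
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    U, s, Vt = np.linalg.svd(Z, full_matrices=False)
    scores = U[:, :n_components] * s[:n_components]
    explained = s**2 / np.sum(s**2)
    return scores, explained[:n_components]


rng = np.random.default_rng(0)
years = np.arange(1761, 1971)  # 210 years, 1761-1970 inclusive
signal = np.sin(2 * np.pi * (years - 1761) / 60)
# Synthetic proxies: a shared signal plus independent noise (not real data).
proxies = np.column_stack([signal + 0.5 * rng.standard_normal(len(years)) for _ in range(5)])
scores, frac = common_period_pcs(proxies)
print(len(years), scores.shape, frac.round(2))
```

Anything a proxy records outside the common window, such as Law Dome’s earlier centuries, simply never enters the calculation.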

Even though Mann had a current and much longer version of the Law Dome O18 series that he/Jones had obtained from van Ommen, Mann et al 2008 substituted the truncated version used in the Fisher principal components analysis. The difference between the two versions is shown below.

Figure 3. Law Dome versions. red – 2003 version; blue – Mann et al 2008 version (truncation of 1997 version to 1761-1970).

It would take a while to calculate the effect of Mann’s use of an obsolete and truncated version of the Law Dome series on his SH reconstruction, but no one should assume that it didn’t and doesn’t “matter”. My guess is that this decision had a material impact.

31 Comments

Somehow it always makes today hot, and they never use the proxies which say the opposite.

So it’s not just trees that are “cherry-picked”.

How much longer are climate “scientists” going to sit on their hands on this kind of rubbish science?

And make no mistake it is rubbish. If my undergrads tried to pull a stunt like this with their 3rd year dissertations they would be failed.

Keep failing ’em Don. The day will come when ‘climate justice’ is properly defined and the global fail will be loud and clear.

Why wouldn’t a reviewer of Mann et al 2008, assuming at least one reviewer was an expert in O18 data from ice cores used as atmospheric temperature proxies, require Mann et al to also include the more recent 2003 version of the Law Dome data instead of just the 1997 data? What reason would prevent a reviewer from making that request, or, if a reviewer did make it, what possible answer from Mann could justify refusing?

For those here with knowledge of climate science peer review, does using obsolete data not get seriously challenged by reviewers?

John

For climate scientists reading this: it would help improve your field’s public credibility if you could cite publication(s) of proxy data that *don’t* support the IPCC/Hockey Team version of recent paleoclimate history.

My guess is there are very few such pubs.

But that could decimate their funding. No certain crisis, no money.

The instabilities in the Mann line regarding Law Dome isotope (not CO2 concentration) records have been resolved in Gergis et al. by their total omission. This is evolution in climate science. On what basis? I figure the isotope records have been reclassified as “climate” proxies, but separated out as not being “temperature” proxies. That is, the isotope readings show some annular stuff, but that does not reflect temperature; it reflects something else about “climate.” The Gergis paper indicated there may be a new trend in climate science of selecting a subset of “temperature proxies” from a set of determined “climate proxies.” This is my opinion, based on the tacit confession in Gergis acknowledging that certain proxies are accepted as climate-based but not as reflecting temps. I can think of no reason why this would have been mentioned except as some kind of furtive acknowledgment that certain proxies previously treated as temp. proxies are no longer treated as such, for purposes of that paper at least.

If I am right, will this trend lead to re-accepting tree rings as water budget rather than temperature proxies? BWAHAHA! Of course not. Follow the money.

The introduction of “climate proxies” into the argument will make it evident to the man in the street that the diameter of the circular argument has shrunk enough for it to be seen for what it is at a single glance – no hidden declines to introduce doubt. If Gergis gets this on the radar via AR5, we could all be grateful.

Nice work Steve, as always.

But you are forgetting that, unlike other fields of science, in paleoclimate you can choose which samples you use in order to tease out the genuine climate signal. This cannot be repeated often enough, because people keep forgetting.

Let’s call it WGA. In computing we know that as Microsoft’s “Windows Genuine Advantage”.

In IPCC world it’s “Warmists Genuine Advantage”. The IPCC version of WGA is MUCH better than the Microsoft version. Microsoft’s WGA only means you can run Windows and Office.

IPCC WGA brings grants, articles in the pal-review lit-chur-chur (did I spell that right?) and more grants, occasional fame, and did I mention the grants?

Besides, if you show Mann’s algorithms the full Law Dome O18, it would just do a Tiljander and flip it upside down.

Steve writes above: “… despite Law Dome being very high resolution (indeed, as far as I know, the highest resolution available ice core)”

I draw attention to a paper* by Jinho Ahn in the current issue of Global Biogeochemical Cycles, “Atmospheric CO2 over the last 1000 years: A high-resolution record from the West Antarctic Ice Sheet (WAIS) Divide ice core”. This core has a nominal resolution of 20 years and, interestingly, shows a methane and CO2 uptick as far back as the 17th century.

The published results relate to greenhouse gas concentrations, so they are presumably of limited interest here, but an isotope-derived temperature proxy will presumably be available in due course, if it isn’t already.

Steve: Red herring. Law Dome has monthly resolution in the same period. You need to locate accumulation data for comparison.

* Google on author, title and publication to see abstract and figures as I gather we are not permitted to quote URLs here

Have I just had my knuckles rapped – very chastening? I mentioned the WAIS alternative as it bills itself as promising the most detailed resolution in Antarctica with annual layers to 40,000 years for both ghgs and isotopes. If it is correct that Law Dome data can be resolved to monthly in recent centuries then clearly this claim is incorrect. I wonder though if “monthly” relates to the instrumental period rather than what can be interpreted from the ice cores. Presumably there is multi-year smudging of ice core retrievals through migration and closure delay anyway.

maxberan,

Here is your dilemma. You probably got excited when you saw the printing of Ahn et al in AGU (probably you have SkS alerts). The same problems continue to be repeated in every new paper with a new high-resolution technique and a new robust analysis. It is usually just a new mousetrap that does the same thing and relies on the faulty methodologies of the past. Data and story seem to have difficulty coming together. Your suggestion of Ahn et al (2012) is more of the same.

First issue, is the AGU paywall. It is an instant yellow flag on ease of access.

Second issue is the Eschenbach guideline that an article’s usefulness is inversely proportional to its number of authors. Jinho Ahn and Ed Brook have worked together to develop this new technique in the past, but coming up on the IPCC deadline, four times as many authors? Another yellow flag.

Anyhow, let’s do the exercise.

“WOW, would you look at that sharp incline in CO2 post 1800…”.

(http://www.agu.org/pubs/crossref/2012/2011GB004247.shtml …Down at the bottom are thumbnail pics, go to Figure 2)

“This *MUST* validate all of the climate scientists the deniers are picking on.”

While this story-line is cute, history says something else.

“Atmospheric CO2 and Climate on Millennial Time Scales During the Last Glacial Period.” – Jinho Ahn and Ed Brook (2008)

(http://www.ncdc.noaa.gov/paleo/pubs/ahn2008/ahn2008.html)

Here we have the typical story of historical isotopes going back 90,000 years (an extra 5,000 for CO2, of course). Notice the cute “red line” with the error bars running from 95,000 years ago to 18,000-19,000 years ago. Then we calibrate another dataset (the mauve line) for splicing and the CO2 shoots up. Why does this *ALWAYS* happen? Did the core melt or break at 18-19 kya? No, the methane appears to be continuous. I do not know. Also, check out 13-15 kya in the splice-in: 3 data points of FLAT CO2 (2,000-3,000 years of stalled CO2), this makes a 1,000 years informative, but not useful.

The final red flag is the range of the mauve line and the green dots (10-45 kya). The mauve line terminates at 10 kya with ~262 ppm CO2; the green, less noticeable dots terminate at ~290 ppm CO2. Curiously, most of the error bars are +/-5 ppm (x-bar, I presume), but anyhow.

Now we go back to Ahn et al (2012). The starting point of CO2 is 279 ppm 1,000 years ago? 10,000 years ago it was between 260 and 290 ppm. No red flags?

Prediction: Ahn et al (2012) will be the new hockey stick of AR5, and there will be a protracted effort to get hold of the data. Once the data is evaluated, the same methodology errors will be found.

Here’s the Ahn paper: WAIS, Law Dome

http://www.agu.org/pubs/crossref/2012/2011GB004247.shtml

They show CO2 concentrations at home

And show a drop (1600 or thereabouts)

But there’s an odd aspect in the abstract’s shouts:

If Law Dome CO2 correlates well to “climate”

Why not to Law Dome? Why didn’t this prime it

To show a sharp “climate” rise like CO2?

For strong “correlation” it seems quite hard to view

===|==============/ Keith DeHavelle

Why does the 1997 version have a much larger variation and different shape compared to the 2003 version in the period where they overlap? If you get differences that large just from re-sampling the core, or taking a second nearby core, doesn’t that suggest that the accuracy of the measurements is very poor, relative to the range of expected results? Therefore, even if this is a good proxy for temperature, how can this data be used to say anything about trends in temperature if the error in the measurements is so large compared to the expected trend?

It’s even worse with the “truncated” version. I assume this was derived from the 1997 version but even then, it seems to have an even more exaggerated difference compared to the 2003 version.

If what’s happening is measurement variation between different cores taken in close proximity, that suggests that an ice core record really should be based on multiple cores, with error bars derived (at least partially) from the variation between samples.

Steve: Ice cores are very expensive, so it’s easier said than done. They show some evidence that nearby cores have very similar values, but the discrepancy is a puzzle. I asked van Ommen about this by email and he attributed it to problems with the DSS core, which was early in their technology. I don’t understand the reason for these particular differences and am unaware of any technical report. Part of the problem is that there isn’t a proper publication. Van Ommen says that they’re working on it. He also said that in early 2004, but I suspect that it’s closer to reality this time.
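The suggestion above, building a record from multiple nearby cores with error bars from the between-core spread, can be sketched as follows. This assumes the cores are already crossdated onto a common time axis and treats them as independent replicates, which ignores any spatially correlated noise; `composite_with_spread` and the values are hypothetical.

```python
import statistics


def composite_with_spread(cores):
    """Combine aligned d18O series from several nearby cores.

    cores: list of equal-length lists, one per core, already on a common
    time axis. Returns (means, standard_errors) per time step, where the
    standard error is the between-core standard deviation / sqrt(n_cores).
    """
    n = len(cores)
    if n < 2:
        raise ValueError("need at least two cores to estimate spread")
    if len({len(c) for c in cores}) != 1:
        raise ValueError("cores must be on a common time axis")
    means, ses = [], []
    for values in zip(*cores):
        means.append(statistics.fmean(values))
        ses.append(statistics.stdev(values) / n ** 0.5)
    return means, ses


# Three hypothetical cores, two time steps each (not real data).
means, ses = composite_with_spread([[-21.0, -22.0], [-20.0, -22.0], [-22.0, -22.0]])
print(means, ses)
```

With only a handful of cores, the standard error is itself poorly estimated, which is part of why expensive replication matters.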

Thanks Steve. It makes sense that it could be a measurement error as the d18O values are always closer to zero for the 1997 version compared to the 2003 version, by a varying amount. This could mean contamination, incorrect instrument calibration or reading etc.

Unfortunately, that means that anyone using the 1997/truncated version of the series in their data has used invalid data.
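The observation that the 1997 values sit consistently closer to zero by a varying amount can be quantified as the difference between versions over their overlapping years. A sketch, assuming both versions have first been put on the same years (the monthly 1997 data would need averaging to the 2003 series’ 4-year steps); `version_offset` and the values below are made up for illustration:

```python
def version_offset(a, b):
    """Compare two versions of a series on their overlapping years.

    a, b: dicts mapping year -> value. Returns (mean, min, max) of a - b
    over the years present in both. A consistently positive a - b means
    version a is less negative (closer to zero) than version b.
    """
    common = sorted(set(a) & set(b))
    if not common:
        raise ValueError("series do not overlap")
    diffs = [a[y] - b[y] for y in common]
    return sum(diffs) / len(diffs), min(diffs), max(diffs)


# Hypothetical d18O values on 4-year steps (not the actual Law Dome series).
v1997 = {1900: -20.5, 1904: -21.0, 1908: -20.2}
v2003 = {1904: -21.8, 1908: -21.4, 1912: -21.9}
mean, lo, hi = version_offset(v1997, v2003)
print(f"mean {mean:+.2f}, range {lo:+.2f} to {hi:+.2f}")
```

A constant offset would suggest a calibration issue; an offset that varies in size, as described here, is harder to correct after the fact.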

On the topic of high-resolution Southern Hemisphere d18O temperature series showing the MWP, keep an eye out for new work by Prof Paul Williams (Auckland University) on speleothems from Waitomo Cave, west-central North Island of New Zealand. I got a sneak preview a few months back. Paul derives MWP temperatures similar to modern levels for this site.

Gad, Steve, this is getting like shooting fish in a barrel. Are there any recent reconstructions by the IPCC team that don’t demonstrate cherry-picking?

==================

In respect of the recent Gergis paper, there is another reason that Dr Gergis should have used Law Dome in preference to Vostok. An “Australasian temperature reconstruction” should not strictly use data from other continents. But if it does, it should use data sets as close to the continent as possible. On this basis Law Dome beats Vostok Station by over 1000 miles.

I cannot come up with any other legitimate scientific field where this type of obvious data manipulation would be tolerated.

You are absolutely right. In any important clinical trial, a prospective statistical analysis plan (SAP) has to be written and approved before the first patient is enrolled.

Re: Bob (Jun 5 12:04),

But how do you know that you will get the ‘right’ answer if you can’t choose your statistical method once you know what the data is? Surely you’re in danger of arriving at a conclusion opposite to the one you want? How do you expect climatology would advance if you were to allow such things?

Latimer, as SM has inferred, but not implied, Mann, Gergis, and others are guilty of scientific and statistical malpractice.

The discrepancy discussed by Nicholas and Steve above is a major part of the ice core debate that has been pushed aside by the activities of the IPCC. Jaworowski examined some of the severe limitations of the ice core record here:

http://www.21stcenturysciencetech.com/Articles 2007/20_1-2_CO2_Scandal.pdf

He was also scheduled to appear before Congress but in an apparent political move by VP Gore and the Democrats was not allowed to appear. His brief was put on the record, but that precludes any discussion.

http://www.warwickhughes.com/icecore/

In the 1980s and early 1990s a Canadian Committee on Climate Change was operating under the auspices of the National Museum of Canada and Environment Canada. It was shut down when EC pulled the funding because we were questioning the science of the IPCC. (I was chair when it was cancelled, so know what went on.)

At several of the annual meetings we had long discussions about the problems with ice cores, particularly with the input of one of the best glaciologists, Roy (Fritz) Koerner. Fritz was drilling in the high Arctic at that time.

http://www.telegraph.co.uk/news/obituaries/2050482/Fritz-Koerner.html

Steve is correct about the limitation of drilling cost, which is compounded by limited access and short drilling seasons. Fritz identified most of the problems, some of which I list here. It is hard to find two drilling sites close enough to each other to satisfy what classical climatology calls relative homogeneity, which is needed to distinguish local variations in climate from larger-scale changes. In ice cores this is extremely difficult because of many factors, including variation in snow depth at the time of deposition, further complicated by wind drift that redistributes the snow. Once the snow has settled, scouring occurs, especially in cold environments where wind speeds are generally high. Katabatic flow is a major factor beyond ordinary winds driven by pressure differences. As Fritz explained, this scouring can remove decades of layers as the hard, brittle, loose snow drifts across the surface.

Once the layers (ogives) are formed, infiltration of meltwater is a serious problem, but even after the ice becomes plastic at depth, usually below 50 metres, ice layers from other areas can move into the geographic site under which the core was drilled.

There are three different forms of water within any glacier. Surface water occurs every year with summer melt and can form large streams flowing across the surface, called supraglacial streams. Here are many images:

http://www.bing.com/images/search?q=supraglacial+streams&FORM=BIFD

There are englacial streams, that is water flowing through tunnels within the ice.

And subglacial streams that usually emerge at the edge of a glacier. Here are many illustrations:

http://www.bing.com/images/search?q=englacial+streams&go=&qs=n&form=QBIR&pq=englacial+streams&sc=0-8&sp=-1&sk=

If the glacial ice was static and permanently frozen without plastic flow it might be possible to achieve reasonable and comparable results from core to core. Add to this the problems Jaworowski identified with coring and contamination coupled with the problems associated with extraction of the gases then it is easy to see why the cores from sites relatively close to each other differ. Relative homogeneity is difficult if not impossible to achieve.

I’m an attorney, and although I took some advanced math, physics and engineering classes 35 years ago at Texas A&M, I struggle to follow much of the statistical analysis, programming and math posting in these threads, but I enjoy reading the discourse nonetheless. Forgive me if I fumble the terminology a bit.

It occurs to me that whenever any of the independent proxies researched and promoted by various climate scientists as reliable temperature signals is compared to the actual instrumental temperature record, there is generally a sizable lack of correlation. In fact it seems that the proxy data must always be artificially manipulated to create even the appearance of correlation with instrumental data (and that is setting aside the companion problem of whether the instrumental temperature data was manipulated, cherry-picked regionally, or just “value added” by its gatekeepers). It also appears to me, anyway, that these proxy manipulations are treated as closely guarded secrets, generally omitted from overt disclosure or discussion and instead buried in the minutiae of peer-reviewed articles and the appendices of IPCC reports, if available at all. This lack of transparency and data manipulation seems so routine that I am inclined to believe that any actual convergence between proxy-derived and instrumentally recorded temperatures is likely mere coincidence.

My question is this: Are any of the temperature chronologies based on proxy data actually reliable enough to determine temperatures over the past 500, 1000, 2000 years, with the degree of accuracy necessary to make fundamental policy decisions concerning “climate change”? Are the margins of error in these proxy studies actually many times the magnitude of the temperature variations considered to be caused by CO2 concentrations (yes, I know, an entirely different subject)?

In summary, is there reliability and scientific value in projecting past temperatures using proxy-derived data, and if so, which proxies are reliable to what degree of accuracy?

Q: Are any of the temperature chronologies based on proxy data actually reliable enough to determine temperatures over the past 500, 1000, 2000 years, with the degree of accuracy necessary to make fundamental policy decisions concerning “climate change”?

A: No.

Q: Are the margins of error in these proxy studies actually many times the magnitude of the temperature variations considered to be caused by CO2 concentrations?

A: Yes.

Q: Is there reliability and scientific value in projecting past temperatures using proxy-derived data, and if so, which proxies are reliable to what degree of accuracy?

A: No.

“My question is this: Are any of the temperature chronologies based on proxy data actually reliable enough to determine temperatures over the past 500, 1000, 2000 years, with the degree of accuracy necessary to make fundamental policy decisions concerning “climate change”?”

I’ll put my aberrant view. On the question of reliability and accuracy, I don’t know. But I don’t believe any fundamental policy decisions should depend on them.

The logic of AGW is: we are putting lots of CO2 in the atmosphere. This will hinder heat loss through IR radiation and lead to warming. We have observed warming which is consistent with this effect. If we keep putting CO2 in the air, and there is a lot of C available, then it will get a whole lot warmer.

It would be nice to know that the observed warming is absolutely due to CO2, so we look to the past (proxies) to see if anything similar has happened. But this should not affect policy. The fact that something else may have caused warming then (if true) does not alter the consequence of putting CO2 in the air.

snip – blog policy discourages efforts to argue the “big picture” in single paragraphs and on every thread. Nick made a limited response.

I’ve deleted a number of responses.

I never intended to lurch into a discussion of the “big picture” with my questions, but the use of proxy temperature data seems to dominate discussion on this blog of late. My skepticism about proxy temperature records stems from the use of these data by the “usual suspects” to proclaim with high confidence that the recent (and allegedly rapid) increase in NH surface temperatures is unprecedented in the past 2000 years. Such a claim seems necessary if the IPCC, UEA-CRU, NOAA and NASA are to convincingly project catastrophic climate change based solely on CO2 concentrations.

It seems to me that with respect to tree rings in particular, there are literally dozens of variables which one would expect to have a direct impact on tree ring width and density. Even when the samples all come from remote areas at high elevations, it is implausible to presume that all environmental factors in these regions were static for 500-2000 years.

So again, if there is little reliability and therefore little value to the science of projecting past temperatures based on seemingly conflicting proxies, why does funding continue to gush forth to those scientists, their universities and other governmental organizations dedicated to studying and defending such an unreliable and suspect line of inquiry? Please consider this a rhetorical question if it appears I’m beating a dead horse.

Nick’s response is not limited and in fact fails to answer the question. Such bias is damaging.

I have to disagree with Mr. Stokes; although the paleoclimate data is not used to directly derive estimates for attribution, natural variance, sensitivity, etc., it is directly used as support for the estimates of those parameters derived from the instrumental record and models (to boost confidence in those estimates). If you read Chapter 6 of AR4, it is full of references to how well paleoclimate reconstructions agree with model reconstructions and the instrumental record. They keep stressing the agreement because they are leaning on each other for support; the (subjective) confidence is in part based on the paleoclimate agreement. What happens if paleoclimate suggests higher global temperature regimes and faster climate shifts in the last few thousand years than currently accepted by the IPCC? The models and reconstructions would not support each other (as-is), and confidence in either (both) would have to be downgraded. Yes, the estimation of parameters based on the instrumental record would not change, but the confidence in that estimation would be affected. Any reasonable person would see the disagreement as a sign that the estimates of these parameters and our confidence in them are both flawed.

Paleoclimate is currently used to support confidence in this science and there are important questions to answer about whether this was done correctly. It does matter.

In case you had not picked it up, it is relatively easy to create an account at the Australian Antarctic Division data centre at http://data.aad.gov.au/

This opens up papers, some raw data and, because of its activity, quite a lot of references to Law Dome. There are assumptions made that are somewhat quizzical, like equating delta O18 profiles to Greenland’s to peg ages.

We do not have much ice on mainland Australia to train us, so to learn and specialise you need to head to Antarctica. Those who do so might be able to answer the question of why Law Dome has such a high accumulation. To my simple mind, that is because it is cold and close to the sea, so that the usual equations governing temperature derived from oxygen isotopes have a problem of isotope provenance and perhaps a secondary one about sublimation.

Predictably, the ice-gas age difference derived from isotopes complicated recent interpretations of temperature somewhat. It seems to need better explanation and verification of mechanisms because some aspects seem implausible to the unwashed mind.

Why is my comment still in moderation?
