People have quite reasonably asked about my connection with the surface stations article, given my puzzlement at Anthony’s announcement last week. Anthony described my last-minute involvement here.

As readers are probably aware, I haven’t taken much issue with temperature data other than pressing the field to be more transparent. The satellite data seems quite convincing to me over the past 30 years and bounds the potential impact of contamination of surface stations, a point made in a CA post on Berkeley last fall here. Prior to the satellite period, station histories are “proxies” of varying quality. Over the continental US, the UAH satellite record shows a trend of 0.29 deg C/decade (TLT) from 1979-2008, significantly higher than their GLB land trend of 0.173 deg C/decade. Over land, amplification is negligible.
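The decadal trends quoted above are least-squares slopes of a temperature series against time. Here is a minimal sketch of the conversion to deg C/decade, assuming an annual anomaly series. The values are synthetic, not the UAH record, and UAH's own processing is not shown.

```python
def trend_per_decade(years, temps):
    """OLS slope of temps on years, expressed in deg C per decade."""
    n = len(years)
    if n != len(temps) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(years) / n
    mean_y = sum(temps) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, temps))
    sxx = sum((x - mean_x) ** 2 for x in years)
    # The slope is per year; multiply by 10 to express it per decade.
    return 10.0 * sxy / sxx


# Hypothetical annual anomalies rising exactly 0.029 deg C/year (not UAH data).
years = list(range(1979, 2009))
anomalies = [0.029 * (y - 1979) for y in years]
print(round(trend_per_decade(years, anomalies), 3))  # prints 0.29
```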

Anthony had asked me long ago to help with the statistical analysis, but I hadn’t followed up. I had looked at the results in 2007, but hadn’t kept up with it subsequently.

When Anthony made his announcement of big news, I volunteered to check the announcement – presuming that it was something to do with FOIA. Mosher and I were chatting that afternoon, and each of us assigned about a 20% probability to it being something to do with the surface stations project.

Anthony sent me his draft paper. In his cover email, he said that the people who had offered to do statistical analysis hadn’t done so (each for valid reasons). So I did some analysis very quickly, which Anthony incorporated into the paper; he made me a coauthor, though my contribution was very last-minute and limited. I haven’t parsed the rest of the paper.

I hadn’t been involved in the surface stations paper until after his announcement, though I was familiar with the structure of the data from earlier studies.

I support the idea of getting the best quality metadata on stations and working outward from stations with known properties, as opposed to throwing undigested data into a hopper and hoping to get the answer. I think that breakpoints methods, whatever their merits ultimately demonstrate, need to be carefully parsed and verified against actual data with known properties (as opposed to mere simulations where you may not have thought of all the relevant confounding factors). To that extent, Anthony’s project is a real contribution, whatever the eventual results.

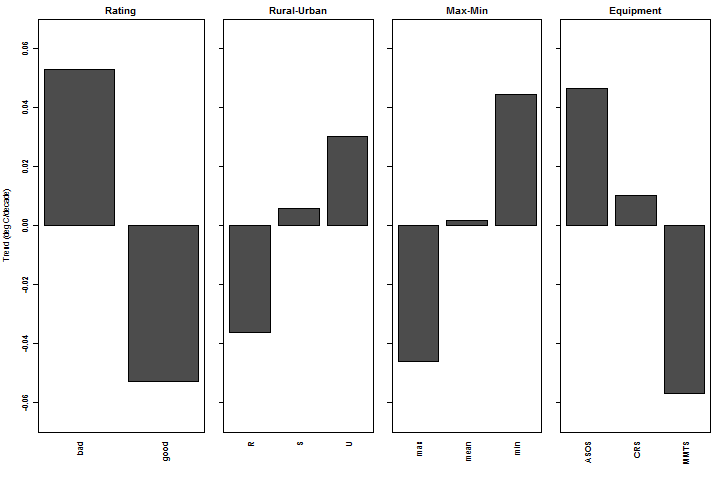

It seemed to me that random effects methodology could be applied to see the impact on trends of the various complicating factors – ratings category, urbanization class, equipment class. (Using the grid region as a separate random effect even provides an elegant way of regional accounting within the algorithm.) This yielded apparent confirmation: distinct effects, each in the expected direction, for urbanization class, for rating and for max-min.

Figure 1. Random Effects of Urbanization, Rating, Equipment, Max-Min.
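For readers unfamiliar with the approach, the core idea of a random effect can be sketched for a single grouping factor: each class's mean trend is shrunk toward the overall mean, more strongly when the class is small or noisy. This is a simplified one-factor illustration using a method-of-moments variance estimate. It is not the multi-factor analysis behind Figure 1, and the station trends are made up.

```python
from statistics import mean, variance


def shrunken_group_effects(trends_by_group):
    """One-way random-effects sketch (method of moments, not REML).

    trends_by_group maps a group label (e.g. a rating class) to a list of
    station trends in deg C/decade. Returns the grand mean and, for each
    group, its estimated effect shrunk toward zero.
    """
    groups = {g: list(v) for g, v in trends_by_group.items()}
    if len(groups) < 2 or any(len(v) < 2 for v in groups.values()):
        raise ValueError("need at least two groups with two stations each")
    grand_mean = mean(x for v in groups.values() for x in v)
    group_means = {g: mean(v) for g, v in groups.items()}
    # Pooled within-group (residual) variance.
    ss_within = sum(sum((x - group_means[g]) ** 2 for x in v) for g, v in groups.items())
    df_within = sum(len(v) - 1 for v in groups.values())
    sigma2_e = ss_within / df_within
    # Between-group variance: spread of group means minus their sampling noise, floored at 0.
    sampling_noise = mean(sigma2_e / len(v) for v in groups.values())
    sigma2_u = max(0.0, variance(list(group_means.values())) - sampling_noise)
    effects = {}
    for g, v in groups.items():
        # Shrinkage weight: near 1 for large, clean groups; 0 if no between-group variance.
        weight = sigma2_u / (sigma2_u + sigma2_e / len(v)) if sigma2_u > 0 else 0.0
        effects[g] = weight * (group_means[g] - grand_mean)
    return grand_mean, effects


# Hypothetical station trends (deg C/decade) by rating class; not the paper's data.
trends = {
    "1-2": [0.15, 0.18, 0.20, 0.17],
    "3": [0.25, 0.28, 0.26],
    "4-5": [0.30, 0.33, 0.31, 0.29],
}
grand_mean, effects = shrunken_group_effects(trends)
for group, effect in effects.items():
    print(group, round(effect, 3))
```

A full treatment would fit several crossed factors at once (for example with a mixed-model library); the one-factor version only shows where the shrinkage comes from.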

Whenever I’m working on my own material, I avoid arbitrary deadlines and like to mull things over for a few days. Unfortunately that didn’t happen in this case. There is a confounding interaction with TOBS that needs to be allowed for, as has been quickly and correctly pointed out.

When I did my own initial assessment of this a few years ago, I used TOBS versions, and I am annoyed with myself for not properly considering this factor. I should have noticed it immediately. That will teach me to keep to my practice of not rushing. Anyway, now that I’m drawn into this, I’ll have to carry out the TOBS analysis, which I’ll do in the next few days (at the expense of some interesting analysis of Esper et al.).

I have commented from time to time on US data histories in the past – e.g. here, here and here, each of which was done less hurriedly than the present analysis.

174 Comments

Hi Steve.

Any chance that the list of stations and their site ratings will be made available soon?

I posted the following at the Blackboard in the hope of seeing more discussion of the issues raised in the Watts paper:

“I see that SteveM at CA has started a thread on the subject of the Watts paper. Maybe some of these critical details can be revealed there. I would hope that a reasonable discussion could avoid the personality issues. I have questions about the use of change point algorithms that have not been answered to my satisfaction to date and I have a great deal of interest in the benchmarking of these various algorithms by testing against realistic simulated data where the truth is known. I would think that change point analysis could hypothetically be the best method of adjusting non homogeneous temperature data. Unfortunately I am aware of the limitations of these methods when working with noisy data. The key to validating any system is testing it with realistic data.”

SteveM, I agree with what you say in the passage below, but how do you use actual data, as opposed to simulated data where the truth is known, to test an adjustment process? Obviously, if we know the truth for a given part of the actual raw data, it would be best to use that data. My problem is that I am not at all certain that we can find actual data where we know the truth, and if we could, whether these data would include enough typical non-homogeneities to properly test the process.

“I think that breakpoints methods, whatever their merits ultimately demonstrate, need to be carefully parsed and verified against actual data with known properties (as opposed to mere simulations where you may not have thought of all the relevant confounding factors). To that extent, Anthony’s project is a real contribution, whatever the eventual results.”

I was very frustrated by the lack of specificity in the text of Watts’s paper about the temperature data sets he was using. I am assuming that Raw means the raw data before TOB adjustment and Adjusted means the final adjusted temperatures after TOB adjustment and then application of the Menne change point algorithm.

The links below are to the Watts paper text and figures:

watts-et-al_2012_discussion_paper_webrelease.pdf

watts-et-al-2012-figures-and-tables-final1.pdf

The consistency of the expected result is impressive.

Jeff, I believe, is now aware of this paper, in which he gets ‘mentioned’:

LskyetalPsychScienceinPressClimateConspiracy.pdf

Steve, are you aware that your name is mentioned in the same paper (smeared by implication?)

This is how it was reported:

http://www.guardian.co.uk/environment/blog/2012/jul/27/climate-sceptics-conspiracy-theorists?INTCMP=SRCH

TOBS ?

Time of OBservation

Or… Time of Observation Bias?

Hi Steve,

In my view, Figure 23 in Anthony’s paper opens an easy new way to select well-sited stations globally.

watts-et-al-2012-figures-and-tables-final1.pdf

Well-placed stations appear to have very similar trends in tmin, tmax and tmean. Poorly placed stations don’t, mostly because their tmin trends are significantly higher.

So dUHI (usually) affects tmin much more than tmax (and tmean), and we are looking for stations with small dUHI over time.

To select suitable stations, a first step would be to take only those stations with very similar trends in tmin, tmax and tmean, where “similar” preferably means similar over several time scales (to be determined).

That would include UHI-free stations and stations where UHI did not change much, which is exactly what we are looking for.

If the number of such stations is not sufficient, more stations could be added by extracting only the time spans with similar tmin, tmax and tmean trends.
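Manfred's proposed first-pass filter is easy to prototype. Here is a minimal sketch, assuming each station supplies annual tmin, tmax and tmean series over one common period. It checks a single time scale only (his suggestion was several), and the 0.05 deg C/decade tolerance and the station data are hypothetical.

```python
def ols_slope(xs, ys):
    """Least-squares slope of ys on xs (units of y per unit of x)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


def select_consistent_stations(stations, tolerance=0.05):
    """Return names of stations whose tmin, tmax and tmean trends agree.

    stations maps a name to (years, tmin, tmax, tmean). A station is kept
    when the spread of its three trends (deg C/decade) is within tolerance.
    The default tolerance is a hypothetical placeholder.
    """
    selected = []
    for name, (years, tmin, tmax, tmean) in stations.items():
        trends = [10.0 * ols_slope(years, series) for series in (tmin, tmax, tmean)]
        if max(trends) - min(trends) <= tolerance:
            selected.append(name)
    return selected


years = list(range(1979, 2009))


def make_station(min_rate, max_rate):
    # Synthetic series: tmin and tmax rise linearly; tmean is their average.
    tmin = [5.0 + min_rate * (y - 1979) for y in years]
    tmax = [15.0 + max_rate * (y - 1979) for y in years]
    tmean = [(a + b) / 2 for a, b in zip(tmin, tmax)]
    return years, tmin, tmax, tmean


stations = {
    "A": make_station(0.02, 0.02),  # similar trends: kept
    "B": make_station(0.04, 0.01),  # tmin warming faster than tmax: dropped
}
print(select_consistent_stations(stations))  # ['A']
```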

Manfred,

I have graphs presented by M.A. Vukcevic showing that the increase in average temperature is mostly caused by higher winter temperatures, while summer temperatures are fairly constant.

Example:

http://www.vukcevic.talktalk.net/CETsw.htm

But is this related to UHI (for central England)?

Manfred, what you suggest is possible, but should be performed blindly and using notarized predictions.

One would, a priori, select some metric which one believed would describe the particularities of sites.

One would then predict, from the temperature data alone, that a particular locality has, say, n=14 sites of class 1, n=25 sites of class 2, and so on.

The locations/names/coordinates and the predicted classifications are then legally sealed and stored.

One would then dispatch volunteers to assess sites, without them knowing ANYTHING about an individual site and have them perform the assessment of site quality.

This data is then collated.

Only when the predictive and recorded data-sets are complete would one compare the two.

Such a study would be very powerful.

If one were to base one’s predictive identification of site quality on the US data from the Watts study, and use it in conjunction with European, Japanese or Australian volunteers, then one would have a very good, statistically coherent study.

I am sure that many of the readers at Bishop Hill could be recruited to examine local station records and siting issues.

However, this would all depend on the predictions being made first, in secrecy, and deposited in a notarized fashion. This is how similar studies are done in the biomedical field.

I was proposing another ivory-tower algorithm instead, easy to investigate for those who have the skills to process the raw data. The first result would probably be a histogram of raw-data trends for stations with equal trends in tmin, tmax and tmean, compared with the others.

As I understand it, the main issue with Muller’s non-detection of UHI is the a priori assumption that low-UHI stations have low dUHI. Station ratings are about UHI, but only dUHI matters for temperature trends. The connection between UHI and dUHI is complicated: as the log (or square) population law shows, better-sited stations may experience more dUHI from small changes than sites that are already heavily contaminated.

Additionally, station ratings are questionable outside the ensemble verified by Anthony Watts’s surface stations project.

Comparing tmin, tmax and tmean trends fills the gap left by Muller’s poorly understood and unverified assumption, because dUHI is typically directly visible as differences among these trends.

The result would still be a lower limit for dUHI, because some awkward environmental change, or environmental change combined with weather, may affect all trends in the same way.

But it may already be good enough to detect some dUHI. The approach could then be made more complex with additional selection criteria such as station rating.

Please note: many times in the past, commenters here and at other sites have criticized every author of a climate science paper over a single detail, and assigned responsibility for an error to every author. Perhaps now it will be allowed that not every ‘author’ of a scientific paper necessarily knows every detail of every point made.

Another point. The fact that a statistician was brought in over the weekend to finish a paper does not reflect well on the entire effort. A scientific paper is not a homework assignment. I’m actually surprised Steve would put his name on such an effort. Apparently, at least one problem has arisen as a result. I have no idea how this work will shake out, but it certainly didn’t start well. Any such work should be gone over with a fine tooth comb before seeing the light of day.

MarkB:

I’ve been reading this blog since it first came online, and I have trouble thinking of any examples of what you describe. Your description may be accurate for “other sites,” but I don’t think it’s accurate for this one.

It may turn out that there are problems, but the idea behind this was to put it out into the blogosphere for trial by fire. This wasn’t peer-reviewed, and as it turns out, neither was the BEST paper. Both are subject to this pre-peer review review. I don’t think anybody is going to be surprised if problems turn up that require some revision.

ChE:

Not quite so re: the BEST paper:

Unless you are suggesting that Best didn’t pass peer review.

Thus illustrating the larger point about blog review.

“but the idea behind this was to put it out into the blogosphere for trial by fire.”

Precisely.

You will note that data for this paper is absent. That effectively means that we cannot do a proper review. We can’t audit it.

Prediction: special pleading will commence.

Re: Steven Mosher (Jul 31 13:19),

Mosh is right. You have to publish the data as well as the press release.

You cannot even begin to claim the high ground without doing so. Leave such nonsense to the stuffy academics.

Che,

It is hard to do a detailed blog review without the data they used being publicly available, but we will try to look at what we can.

Steve: Zeke, I’ll talk to Anthony about making the classifications public right now. He’s a bit sensitive from past experience, but I think that there’s a better chance of the classification being put to good use if it’s public now.

I think Anthony is within his rights to withhold his new WMO-standard classifications from all but reviewers and coauthors until this paper is accepted for publication.

But of course if he wanted to release them now that would be great! I’m dying to know what happens to Wooster and Circleville here in OH, as well as Boulder! Back in 2007, I predicted that Boulder would rise to 2 using the equivalent Leroy 1999 standards from 3 using the now-obsolete CRN standards.

But Hu.

1. Anthony has put it out for blog review and cited Muller as a precedent for this practice. That practice included providing blog reviewers with data.

2. Anthony brought Steve on board at the last minute even though he’s been working on this paper for a year. Steve has a practice, as a reviewer, of asking for data. Since we bloggers are asked to review this, we would like the data.

3. If they want to release the data with limitations, that is fine too. I will sign an NDA not to retransmit the data and not to publish any results in a journal.

4. You have to consider the possibility that Anthony and Steve could now stall for as long as they like, never release the data, and many people would consider this published paper an accepted fact.

Steve: Mosh, calm down. this is being dealt with.

Steve: Steve: Zeke, I’ll talk to Anthony about making the classifications public right now. He’s a bit sensitive from past experience, but I think that there’s a better chance of the classification being put to good use if it’s public now.

Will you continue your association with the paper if the relevant data (stn ids and siting classifications) is not made public and archived in a timely manner?

On the other hand, is it publishable in a journal if it’s completely out there on a blog page?

Release of all the classifications is of course necessary for a complete review. If Anthony is so extremely gun-shy about releasing the whole batch immediately, why not start quickly with a useful subset, such as a truly random 10% or perhaps a few states’ worth?

Well, the stakes just got raised on this one

Congressional testimony

“A new manuscript by Muller et al. 2012, using the old categorizations of Fall et al., found roughly the same thing. Now, however, Leroy 2010 has revised the categorization technique to include more details of changes near the stations. This new categorization was applied to the US stations of Fall et al., and the results, led by Anthony Watts, are much clearer now. Muller et al. 2012 did not use the new categorizations. Watts et al. demonstrate that when humans alter the immediate landscape around the thermometer stations, there is a clear warming signal due simply to those alterations, especially at night.”

Hmm. I suppose that sitting on data and not reporting adverse results, or merely taking one’s name off the paper, just got a bit dicey.

Perhaps coauthor Christy should be notified that the results he testified about were not fully baked.

GDN, You have to remember that preprints have always circulated in every field but today we have preprint servers such as arXiv which have fuzzed the standard. Publication today is essentially regarded as the publication of a peer reviewed paper, however, it is always best to consult a journal’s specific policy. This dichotomy is seen in that many people leave the original version on arXiv for people outside the paywall to read and the journals do not object much.

Muller’s papers were available online before being submitted to a journal? I was unaware of this.

General practice is to post/send out preprints at the same time as submission because having a manuscript in good enough shape to post/send out means that it is also in good enough shape to submit. There could be a few days either way in general. People are using arXiv today to establish precedent for submissions because of how long review can take.

Mr. Mosher knows my email, and has my telephone number, and mailing address, and so far he hasn’t been able to bring himself to communicate his concerns to me directly, but instead chooses these potshots everywhere.

The project was worked on for a year before we released it, and a number of people looked at it at various stages. Dr. John Christy was in fact the one who suggested we should put a note in about TOBS at the end, saying we will continue to investigate it, because he knew it would be an important consideration. I concurred. We also knew that to do it right, the TOBS comparison couldn’t simply rely on the “trust us” data from NCDC. Christy had already been through that with his study of irrigation effects in California and had to resort to the original data on B91 forms to disentangle the issue.

What we are finding so far suggests NCDC’s TOBS times (we have the master file for all stations) don’t match what the observers actually do. That’s a discrepancy that we need to resolve before we can truly measure the effect along with siting.

Mr. Mosher would do well to note this comparison.

1. When The Team gets criticized on a technical point, they typically dismiss it with a wave of the hand, saying “it doesn’t matter”. Upside down proxies, YAD061, and lat/lon conflations are good examples.

2. When we get criticized on a technical point, we stop and work on it to address the issue as best we can.

Whining won’t help #2 go any faster.

Zeke has been gracious and helpful, and for that I thank him.

– Anthony

Anthony, something you said here raises a question I’ve asked elsewhere:

Over on The Blackboard, I discussed a problem I have with your Figure 23. The first panel of it displays the location of all the compliant sites with your updated classification scheme. Table 4 of your paper says 13 previously compliant sites are no longer considered compliant. However, when I checked the 71 sites listed as Rank 1/2 on the Surface Stations website, it seemed 30 were missing from your map. I even went ahead and generated an image of your map with the location of those 71 sites marked.

If my results are right, either that figure or that table must be wrong. It’s also possible the problem goes deeper. There’s no way for me (or any other reader) to tell.

Could you tell me if I’ve messed up somewhere, or explain what’s going on if I haven’t?

Actually, I may have an answer to my own question. I think a lot of the sites that didn’t show up on that map are airports. If so, Figure 3 (23 was a typo) may actually be showing Rank 1/2 sites sans airports. It doesn’t say so, and it probably should show the airports, but it’s not a big deal. It would also explain why my visual count of locations on the map came up short.

As an aside, anyone could probably regenerate the station list from that image if they wanted. All not publishing such a list does is add a layer of tedium. It might prevent a perfect replication of the list when sites are located very close to each other, but otherwise…

“We also knew that to do it right, the TOBS comparison couldn’t simply rely on the “trust us” data from NCDC.”

But what do you propose to rely on? A complete reinterpretation of the B-91 forms? I understand that your contention is that even these can’t be relied on.

The fact is that a TOBS adjustment is required if time of observation has changed. NCDC has reports that the times did change. Adherence to stated times may be imperfect, but that doesn’t mean that the reported changes can be ignored.

I highly recommend everyone read (or re-read) this link of a post from last fall by Steve and which is also linked in the second paragraph of this post. It’s a “big picture” view of his thoughts on the subject of BEST and satellite temp data. Also very accessible for those of us who are not fully conversant with all the math and science that is discussed here.

One issue with the temperature data related to urban/suburban/rural siting is that, absent microsite bias, all three temperature records are valid. The real point is that each is only valid for the surrounding area that is uniform with the point at which the data were taken. Heat is heat, but if the site represents only 0.01% of the grid cell, then its contribution should be only 0.01%. Trying to homogenize that out makes no physical sense. That doesn’t address all the problems, but it would at least take this one out of the equation.
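The commenter's point is that each record should count in proportion to the area it represents. Here is a minimal sketch, assuming one knows (hypothetically) what fraction of a grid cell each site represents; estimating those fractions is the hard part and is not addressed here.

```python
def area_weighted_mean(readings):
    """Average site anomalies weighted by the fraction of the cell each represents.

    readings: list of (anomaly_deg_c, area_fraction) pairs. Fractions are
    normalized, so they need not sum exactly to 1.
    """
    total = sum(fraction for _, fraction in readings)
    if total <= 0:
        raise ValueError("area fractions must sum to a positive value")
    return sum(anomaly * fraction for anomaly, fraction in readings) / total


# Hypothetical cell: an urban site covering 0.01% of the cell, a rural site the rest.
cell = [(2.0, 0.0001), (0.5, 0.9999)]
print(round(area_weighted_mean(cell), 5))  # 0.50015
```

Even a large urban anomaly barely moves the cell average when its represented area is tiny, which is the intuition behind the comment.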

In keeping with the traditions of climate audit … turnkey code and data? You guys put the paper up for review, we can hardly start without the data.

A few questions.

1. Did you double check the ratings or just put the data in an algorithm?

2. Since the new site ratings seem to depend on some manual labor done using Google Earth, did you have occasion to do a spot check on the accuracy of those ratings?

To the latter point, I’ll draw your attention to just one of many comments about the accuracy of Google Earth, and suggest that an audit would definitely have to go down to the raw data there.

I hope folks kept records.

“The imagery in Google Earth is stretched based on the angle of the aerial object taking it. Further, the 3D terrain of Google Earth is low resolution, so the imagery can shift depending on the inaccuracy of the terrain. (You might want to try turning off the terrain and making comparisons). To make matters worse, Google has not described in detail how it goes about registering imagery. Human factors are probably involved in alignment and especially in stitching together images for esthetic appearance sake.

The key point is that Google Earth is NOT a GIS or survey-grade dataset. They don’t promise to be, and they discourage its use for those types of applications.”

3. Since Wickham made her station list available to you prior to submission, will you make your station list available to others?

4. Why did you stop at 2008?

5. What you say about amplification here differs with what you wrote in the paper.

6. What does a comparison with CRN show?

7. You use USHCNv2 metadata to classify rural/urban. Did you check that? Do you accept that definition of rural?

8. How were grid averages computed?

Steve: As I mentioned, I’ve been involved with this paper for only a few days. You know my personal policies. I did some limited statistical analysis, which, to my considerable annoyance, I need to revisit. As you know, I don’t have a whole lot of interest in temperature data, which is an absolute sink for time. So I’m going to either have to do the statistics from the ground up according to my standards or not touch it anymore.

Steve … re: the Google Earth imagery – since the Leroy 2010 standard appears to deal only with sinks and sources within 100m – and realistically, in many or most cases, with sinks and sources much closer than that – is any error in Google Earth of any importance?

It seems that no matter how much you warp, twist or stretch the imagery, over 100m or less you cannot create a huge error. And even if the error were on the order of 25%, it would not seem to make a significant change in the ratings formula result, with the exception of a few stations with sinks/sources right at 100m that might suffer a shift. I’d think those cases would be very few, if any?

You might think that, but that’s just the kind of thing you want to audit.

As I understand it, photos were used, and measurement tools were applied to those photos. So I would expect a complete dataset to include:

1. a copy of the photos.

2. a description of the method used.

3. a calibration test of the method.

4. the measurements produced.

Then you want to check that the rating was actually done properly. Remember, just because the rater says it’s a 3 doesn’t mean it’s a 3. Raters make mistakes.

was it blind rated? did the rater know he was re rating a site previously rated at 1? who was the rater?

Lots of things you want to know, and check, and not take people’s word for. You know, apply the same standards of scrutiny.

Recall, if you will, that Anthony requested paper copies of B-91s to check NOAA. I think Steve may also have made requests for this data. So a standard of checking has, it seems to me, been set, all the way down to the raw data if it’s available.

Why couldn’t you use the Google Maps Sat. view (or other aerial pictometry) as a cross check?

That data is not stretched or warped. Measure a handful of sites where you think an error may exist in Google Earth then do same in Google Maps Sat. view (or Terraserver or any of the similar). Seems that should give you at least a close idea.

All that said, again – considering the Leroy 2010 method uses at most a 100m distance, I just can’t see any such warping or other inaccuracy being more than 5-10 percent, if that. And I cannot imagine that a 5-10 meter error over 100 meters would cause a class change in any significant number of stations.

I don’t disagree it should be checked in interest of accuracy, but seems a low priority to me.

Steven:

As a very large critic of you and your *style*, I agree 100% with your take on using GE. (I also applaud your relentless hammering for the data and code.) Google Earth is a great program that I use all the time, but you always need to confirm with ground-truth and/or USGS topographic maps and/or aerial photography. However, this level of investigation is just a small part of data collection site evaluation.

For commercial real estate toxics due diligence, we are bound by professional standards to look at historical USGS topo maps, historical Sanborn fire insurance maps, historical city directories, historical air photos and public agency records. All of this research and subsequent analysis costs between $1,000 and $2,000 per site.

This minimum professional standard of care is very likely beyond the capability of Surface Stations crowd sourcing.

In defense of Watts’ Surface Stations (I am a huge critic of WUWT, BTW), they are attempting to do the first steps of the fundamental research that should have already been carried out in each state and county by undergrads at local colleges and universities paid for by the feds between NASA, NOAA and USGS. One year, $1M each and Bob’s your uncle.

Until individual site evaluation is conducted, all of the temperature data sets are “a pig in a poke”, no matter how much statistical lipstick is rigorously applied. It sounds like there are enough holes in the Watts, et. al. study to discredit and derail the effort of reducing uncertainty in temperature records.

Howard, your numbers and info are quite interesting and valuable. As is your grudging appearance of respect for Watts’s work ;-).

You point out just how ridiculous this whole temperature data process seems to be. Alleged experts who don’t or won’t do the comparatively tiny amount of proper due diligence to assure the quality of the temp records.

AGW research has been widely reported to receive billions in funding worldwide, and similarly huge sums in the US … yet they won’t do the simple due diligence of proper site inspection and review.

Using your numbers – at $2,000 each – we’re talking roughly $2.4 million in total for a detailed professional review of all 1,200 stations.

That seems a tiny price to pay – in fact it would seem that survey should be done every 5-10 years on all of the core reporting stations?

Steve Mc:

A big reminder of why we should be so grateful to guys like Anthony Watts and Steven Mosher.

Having mentioned three people I greatly respect I’ll add that when I read Anthony’s teaser on Friday and all the hoopla it generated, including the published ruminations of the said Steves, something told me to “Calm down,” as one aforementioned just said to another. (The only evidence you have for my calmness and even boredom at this point is that you won’t find any record of me joining the speculation game before Anthony’s press release on Sunday. Mind you, I liked the guy who ended ‘That’s the report from my gut’. But my gut was telling me that this wasn’t worth the attention. And I think that now Steve Mc may be regretting he gave it the small amount of attention he did.)

That isn’t to say that I criticise Mr Watts in the least. He was having a go at something a bit different. Worth a shot – and once problems are sorted out, who knows what the end result will be. And I also feel that Mosher’s decision months back to get pretty heavily involved in BEST was an excellent one.

I love that feeling of seeming to face multiple ways at once, don’t you? Something I feel is essential to get even close to the truth in the climate game 🙂

I agree.

It is good to have more skeptical results getting enough attention to be worth auditing here.

Is SM saying that he was duped by AW?

Is he saying that had he known what conclusion the Watts et al 2012 paper was going to draw he wouldn’t be part of the paper?

What is Mr McIntyre saying here?

Steve: I was only on the paper a short time and I overlooked an important issue, which Anthony had paid insufficient attention to. I should have known better – my bad. I’m very annoyed at myself.

Maybe it wasn’t the last minute until Friday. He let himself be provoked into early release – no crime to my mind, but it would have precluded more care.

Steve, these guys are trolling you. They are trying to manipulate your integrity to go after Anthony. No skeptic believes you contributed anything more to this paper than what was stated nor do they for a moment believe what is posted is the final product. Everything is clearly stated as preliminary and pre-publication.

“Is SM saying that he was duped by AW?”

No, he says that he simply didn’t notice that TOBS issues weren’t accounted for in the conclusion, and his totally reasonable excuse is that he was under time pressure and therefore rushed.

“Is he saying that had he known what conclusion the Watts et al 2012 paper was going to draw he wouldn’t be part of the paper?”

I understood him to be saying TOBS issues are important, so I’d assume he’d have told Anthony and tried to help him make the paper better …

Steve: quite so. it is very much my intention to ensure that these issues are recognized and properly treated.

Steve, the discussion and conclusions of Watts et al. (2012) state: “We are investigating other factors such as Time-Of-Observation changes which for the adjusted USHCNv2 is the dominant adjustment factor during 1979-2008.”

This makes this web publication even weirder to me. Anthony Watts at least seems to have ignored this problem knowingly. How did he expect to pass review while leaving such an important confounding factor out of the analysis? And it is not as if analyzing this would require a whole new study; that might have been an excuse for a less important confounder.

Mr. McIntyre (or others)

I am aware of the theory behind the TOBS, but it has struck me as an adjustment of a contrived scenario.

Background: I grew up as the son of an aeronautical engineer who had a fluid-based min-max thermometer that he religiously read when he came home at the end of each work day and during the weekends. He plotted the readings on 11×17 K&E 1x1mm graph paper, one sheet per year, stacking the sheets on top of each other, year after year, for about 35 years.

Did he always record the make the recording at 6pm? No. When he got home or after the hottest part of the day.

I am trying to figure out how some TOBS adjustment can or should be made to a min-max temperature record — provided that there was a gap between the min and max markers and the current mercury levels. A guy doesn’t do this to record bad data.

Yes, cold fronts would come through at 10pm making the low that was made at 4am and recorded at 6pm not the low of the calendar day. That low would be recorded the next day. But how can a TOBS adjust for that without recording min-max temperatures many times per day? Does that data exist? I doubt it. And why would it be a TOBS ‘adjustment’ instead of the min of several mins recorded that day?

Does the metadata exist to support blanket significant TOBS adjustments? Convince me that TOBS is not a contrived adjustment to get the answer “we want.”

Steve: allowing for a TOBS adjustment is reasonable enough. When max/min thermometers are read daily, if they are read in late afternoon near the daily maximum, a hot day can end up contributing to the maxima for two consecutive days while the cooler next day is not counted. The adjustment is made relative to theoretical midnight readings.

I understand the suspicion of these various adjustments, which often seem arbitrary, but this one is fair enough.
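The double-counting mechanism Steve describes can be made concrete with a minimal Python sketch. Everything in it is an assumption for illustration: a hypothetical hourly series built from a fixed cosine diurnal cycle peaking at 15:00 on top of per-day base temperatures with one hot day, and an idealized max/min thermometer that is reset to the current temperature at each reading. The helper names `hourly_temps` and `minmax_readings` are hypothetical, and this is not NCDC's TOB adjustment model.

```python
import math


def hourly_temps(daily_base, amplitude=6.0, peak_hour=15):
    """Hypothetical hourly series: a cosine diurnal cycle peaking at peak_hour,
    added to each day's base temperature (deg C)."""
    temps = []
    for base in daily_base:
        for h in range(24):
            temps.append(base + amplitude * math.cos(2 * math.pi * (h - peak_hour) / 24))
    return temps


def minmax_readings(temps, reset_hour):
    """Idealized max/min thermometer read and reset once a day at reset_hour.

    Each window includes both the reset reading and the next one: right after
    a reset the markers sit at the current temperature, so that value is
    carried into the next observation day.
    """
    maxes, mins = [], []
    start = reset_hour
    while start + 24 < len(temps):
        window = temps[start:start + 25]
        maxes.append(max(window))
        mins.append(min(window))
        start += 24
    return maxes, mins


# Hypothetical daily base temperatures: one hot day amid cooler ones
bases = [20, 20, 30, 20, 20, 20]
temps = hourly_temps(bases)

for reset in (0, 17):
    maxes, _ = minmax_readings(temps, reset)
    print(f"reset {reset:02d}:00  windows={len(maxes)}  mean Tmax={sum(maxes) / len(maxes):.2f}")
```

With the 17:00 reset, the reading taken on the hot afternoon leaves the max marker near the hot-day temperature, so that value also becomes the maximum for the following, cooler observation day and the mean maximum comes out higher than with a midnight reset. Showing the analogous effect on minima with a morning reset would need a cold day added to `bases`.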

If TOBS for rural stations was a problem in Anthony’s current paper, wouldn’t it have messed up his last one (Fall 2011) as well?

Mindbuilder,

Fall et al. examined raw, TOBS-adjusted, and fully adjusted data. Their primary conclusions were based on the fully adjusted data, since raw data can have lots of other confounding issues (TOBS changes, instrument changes, instrument moves, etc.) that may be correlated with urbanity or CRN rating and skew the result.

Steve,

Along with TOBs you might want to take a closer look at MMTS. I sent Anthony some data on the sensor transition dates so that you can do a before/after comparison.

Here is some past work I did on the subject, which seems to show a pretty clear cooling bias in max temps due to the MMTS transition: http://rankexploits.com/musings/2010/a-cooling-bias-due-to-mmts/

In my original look at this information (2007) here, I used TOBS data. I need to revisit this work.

Steve … the Conclusions section of the paper does note the TOBS issue is one that needs more investigation:

“We are investigating other factors such as Time-Of-Observation changes which for the adjusted USHCNv2 is the dominant adjustment factor during 1979-2008.”

Seems to make it clear that it was not overlooked – the issue was acknowledged and noted for future review?

Steve: needed to be dealt with.

The problem is that everyone who follows this in detail knows that there is a TOBS adjustment. We know why it is made and we know that the adjustment has been tested and validated. We know that every time you see a weird result with data, or something too good to be true, you check to make sure that the proper TOBS correction has been applied.

In fact we spent considerable time here at CA going over TOBS.

Anytime Anthony does work my first question is always..

Did you use TOBS?

It’s basic.

Where has it been tested and validated? Citation please. When I read the original paper on which the adjustment is based, the authors made it clear that it was neither tested nor validated against real data, but in fact was largely guesstimated. That it cools the past and warms the present by around 0.4C is enough to tell us it should have been tested somewhere. I’ll be interested in Steve’s findings. I suspect this is a minefield.

Steve:

“The satellite data seems quite convincing to me over the past 30 years and bounds the potential impact of contamination of surface stations”

Why are you so sure? Have you studied the satellite data and methodology with the same level of auditing scrutiny as you did the paleo-reconstructions, to claim this so confidently, or is it simply convenient to say so in order to dismiss the potentially “toxic” conclusion that the surface data might be “cooked”? Correct me if I am wrong, but the procedures for collecting and processing the satellite data to create a temperature record are extremely complicated, much more so than for the surface record, and both satellite records have already undergone more than one revision, all of which substantially increased the trend. What is the specific basis for your belief that the satellite data has more integrity than the surface record?

If TOBS is a serious problem then I’d say we need to go back to the old observation times, or use both. And it also seems like we should erect shelters at each location that are the same as the original ones along with the MMTS style shelters. Recording electronic sensors or digital cameras pointed at thermometers could be used in the old shelters.

Steve: “Anthony sent me his draft paper. In his cover email, he said that the people who had offered to do statistical analysis hadn’t done so (each for valid reasons). So I did some analysis very quickly, which Anthony incorporated in the paper and made me a coauthor though my contribution was very last minute and limited. I haven’t parsed the rest of the paper.”

So, you allowed your name to be added to the list of coauthors without reading the paper itself?!

Steve: If the paper is submitted anywhere, I will either sign off on the analysis or not be involved. I didn’t “allow” or not “allow” anything in respect to the discussion paper.

“I support the idea of getting the best quality metadata on stations and working outward from stations with known properties,… To that extent, Anthony’s project is a real contribution, whatever the eventual results.”

I agree on the idea, if achievable. But how does Anthony’s project contribute? The data seems to be just a photo (and maybe some Google Earth measurement) at a particular point in time (2009). The metadata needed for trend is of station history.

Steve: quite so. NCDC has pretty good station histories.

Nick, maybe Anthony’s project will get some bureaucrat to authorize a few thousand dollars for a site road trip. Reading Anthony’s reply to Revkin, it seems to me that he has a point in his argument about getting out of the office and doing some field work. The basis of the temperature trend is the stations. Does it not make sense to know how they are sited and what can affect them before you go through all of your modeling tests?

USGS and USCGDS aerial photos used for their topo maps could be a useful way to go back in time and inspect the site history.

Let’s hope they saved the film.

Nick,

You seem to think that NO current data is better than what Watts has done. How does that work?? Because you already have the answer you prefer??

I would note that better site data was needed to tie into reclassifying the stations using Leroy 2010:

CS202_Leroy.pdf

“I agree on the idea, if achievable. But how does Anthony’s project contribute? The data seems to be just a photo (and maybe some Google Earth measurement) at a particular point in time (2009). The metadata needed for trend is of station history.”

And that can be readily accomplished without leaving your desk. Do not do smiley emoticons.

I agree that Watts’ evaluations are snapshots, but if those snapshots present a different picture than the metadata, what then?

“if those snapshots present a different picture that the meta data what then?”

They don’t present a “different” picture. They present an unrelated picture. To analyse trend, you need to know about the past. It’s possible that the current photos could be used to aid interpretation of past metadata, but I can see no indication that this has been done.

Oh god, Nick, here we go again. Very obviously I am saying that if the snapshot shows a very different, or even just a different, picture than the metadata would imply for the time of the snapshot, there is a problem. And by the way, a snapshot could be used to validate metadata even further back in time than the time of the snapshot. For example, there are changes that the snapshot might show that could be tracked by other means, like when a parking lot was blacktopped or an air conditioner was installed. I am under the impression that even good metadata does not account for these microclimate changes seen in the snapshots.

Having said that, I have continued to repeat that one must know when, how, and over what time period the microclimate changed to become what is documented in the snapshot in order to fully utilize it. That is particularly true where studies use only a brief recent period, such as the last 30 years. If a really low-quality station evaluated today had already been a low-quality station 30 years ago, then we can expect no effect on the 30-year trend. Also, would a slowly evolving change be found by changepoint algorithms or metadata?

Let’s address the TOBS a different way.

Here is what we know: Someone recorded a min and a max value at a recorded time on a specific day. We will assume the record is good enough so that 4s can be told from 8s, 7s from 1s, 6s from zeros. That potential source of error is for another day.

Someone recognizes that there is a TOBS issue with the max on day B, the same day as an unusually cold min. Did that max occur before the cold front, or is it a holdover from the day before?

As I see it, we have two basic assumptions:

1. the recorder is conscientious, so that we can trust the max and min; no action necessary, or

2. the recorder is an idiot or doesn’t care about getting it right.

If 2, then we recognize that the max MIGHT be in error.

our choices are:

2A: Record the potential error value in the measurement, and thus increase the error bars of our analysis, or

2B: Fudge the number to some estimate (the TOBS adjustment) and… do what to the overall error estimate? Leave it unchanged?

Naturally, I’m in favor of 1, with 2A as a fallback if the metadata indicates sloppiness of the recorder. 2B has seemed unacceptable to me ever since high school chemistry lab some 40 years ago.

If people believe TOBS is really important, then it should primarily be seen as increasing the uncertainty of results to a point where few conclusions can be made, not as strengthening the signal.

And what is this “estimated at midnight” baloney? What on earth in the written record indicates whether the min happened at 11:50 or 00:10? Does it really make a difference to the 100-year climate record if the “day” ran from midnight to midnight or from 18:00 to 18:00? Is a six-hour time shift over a 100-year record that critical to the result? Moving the temperature from an absolute written record at 18:00 to an estimated record at midnight does nothing to improve accuracy. My skepticism is pegging off scale.

Stephen, the point has to be the consistency of the time of measurement, not precisely when it is made.

What we have is a non-robust data gathering mechanism. I’m all in favor of accepting that reality, and accepting the variance that comes with it.

Our basic problem is that weather stations were not designed to produce data for exotic statistics. They were designed to tell people what the temperature (etc.) is.

So therefore we want to acknowledge that reality in our statistics. If a signal is not detectable given real-life experience, then it is not very strong.

Stephen Rasey —

I too was once a TOBS Denialist, but I became a True Believer after a lengthy discussion on CA back in 2007:

I agree with you that there is nothing magical about midnight — 9 or 10 AM or PM is in fact the optimal time to avoid double counting of warm days or cool nights.

But switching from 5PM or so to 7AM or so, as has often been done, definitely cools the station on average.

Personally, I think it would be better to just treat this as a new station with a new offset, rather than to try to use the Karl algorithm to “adjust” it away.

Has anyone ever been able to study whether taking Tmin and Tmax daily over a month, a year, etc. adequately represents what happens to temps all through the varying 24 hour cycles? e.g., aren’t there some days and nights where many more minutes and hours are closer to Tmax or closer to Tmin? Does it all somehow average out or could there be significant distortions of the real “physical” temps because we don’t have 24 hour continuous data in the historical record? Do satellites now provide any adequate comparison for “validation” purposes? I may not be phrasing any of this right, I’m not in this field, but wondering if Tmin and Tmax can be enough for an accurate representation even if we had good enough data for those numbers?

Skiphil,

Some stations measure once a day. Others measure once per hour; the newest network (CRN) does it every 10 seconds. Different measurement systems will record different readings. It doesn’t make much difference as long as the chosen method is applied consistently at each station. The choice of method might on average be worth a couple of tenths of a degree.

Something I’ve never seen discussed is what happens if the chosen method for a station gets changed. I think that should result in a separate record being created. Although each method is self-consistent, the difference in methods may end up creating an unwanted step change in the record if only a single record continues to be maintained.

Here is an example using a USCRN record.

http://www1.ncdc.noaa.gov/pub/data/uscrn/products/monthly01/CRNM0101-AK_Barrow_4_ENE.txt

Columns F-G are Tmax, Tmin.

Column H is (Tmax+Tmin)/2

Column I is the average of 24 hourly readings ultimately derived from the averages of 10 second readings which are taken from the average of three different thermocouples.

Note that sometimes column H is higher than I and sometimes the reverse is true.
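Why column H can land on either side of column I can be sketched in a few lines of Python. The hourly values below are made up, not taken from the Barrow file, and a plain mean of 24 hourly values stands in for column I (the CRN figure is built up from 10-second averages, which this ignores). The helper names are hypothetical. A symmetric diurnal cycle gives the same answer both ways; a brief warm spike pulls (Tmax+Tmin)/2 above the hourly mean, and a brief cold dip pulls it below.

```python
import math


def midrange(hourly):
    """(Tmax + Tmin) / 2 for one day, analogous to column H."""
    return (max(hourly) + min(hourly)) / 2


def hourly_mean(hourly):
    """Plain mean of one day's hourly readings, analogous to column I."""
    return sum(hourly) / len(hourly)


# Hypothetical days (deg C), not taken from the Barrow file
symmetric = [10 + 5 * math.sin(2 * math.pi * h / 24) for h in range(24)]
spike = [10.0] * 20 + [20.0, 24.0, 20.0, 14.0]  # cool day, brief warm spike
dip = [20.0] * 22 + [10.0, 12.0]                 # mild day, brief cold dip

for name, day in (("symmetric", symmetric), ("spike", spike), ("dip", dip)):
    print(f"{name:9s}  (Tmax+Tmin)/2={midrange(day):6.2f}  hourly mean={hourly_mean(day):6.2f}")
```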

It is certainly a well thought out system. Too bad the system is only about ten years old. Also too bad is the idea they don’t include humidity readings in the data files which I know they record.

Oops!

I linked to the monthly file instead of the daily file, but the basic description is similar.

Bah!

Now that I look at the daily file I see that is where they record the humidity. Dumb of me for not checking it out first.

Using data from here, here’s some code that looks at adjusting the time of observation, simulating min/max measurements from the hourly CRN data. It’s set up to compare 5pm vs other times:

```r
# Import data, convert invalid measurements
data = read.table("CRNH0202-2011-FL_Sebring_23_SSE.txt")
data[,11:12] <- apply(data[,11:12], 2, function(x){replace(x, x==-9999, NA)})

# Setup matrices
mins = matrix(rep(0, 24*364), 24, 364)
maxs = matrix(rep(0, 24*364), 24, 364)

# For each starting hour
for (off in 1:24) {
  # get the correct local hour from the data
  hour = (data[off,5]/100) + 1  # shift 0..23 to 1..24
  # For each day
  for (day in 1:364) {
    # min is the min of the min, max is the max of the max
    # (window of 25 hourly rows: this reading through the next one, inclusive)
    start = off + ((day - 1) * 24)
    maxs[hour,day] = max(data[start:(start+24),11], na.rm=TRUE)
    mins[hour,day] = min(data[start:(start+24),12], na.rm=TRUE)
  }
}

# Convert missing days to NA
mins[which(is.infinite(mins))] = NA
maxs[which(is.infinite(maxs))] = NA

# for each hour, average daily diffs for the year
diffs = rep(0, 24)
for (hr in 1:24) {
  diff = ((mins[18,]+maxs[18,])/2) - ((mins[hr,]+maxs[hr,])/2)
  diffs[hr] = mean(diff, na.rm=TRUE)
}

# plots
plot(diffs, type='l', main='Average difference from 5pm by hour\nCRNH0202-2011-FL_Sebring_23_SSE')
plot(((mins[18,]+maxs[18,])/2) - ((mins[8,]+maxs[8,])/2), type='l',
     main='Diff in daily temp moving from 5pm to 7am\nCRNH0202-2011-FL_Sebring_23_SSE')
```

The output plots show the average difference over the year for each observation time, and the daily differences for 5pm vs 7am. The yearly average difference for CRNH0202-2011-AK_Barrow_4_ENE has a similar shape but a smaller spread.

Steve: very relevant.

Thanks Bob, and I also found this, which discusses related matters:

http://www.bishop-hill.net/blog/2011/11/4/australian-temperatures.html

That is the kind of issue I was groping toward from my layman’s perspective, that it *might* matter by more than a tenth or two if a temp record is only (Tmin + Tmax) / 2

This is maybe more about the error bars than any specific correction that could be made, but I was thinking about how temps can *sometimes* be volatile during a 24 hr period, especially as weather fronts move in or out, etc. Maybe it all averages out, but if there are any cloud cover changes, as discussed in that BH article, then there might be warming or cooling that is not about “global warming” per se (as anything related to CO2).

The point is this. If I told you that the thermometer was moved from a grassy field to under an air conditioner you would say that things changed and you would want to investigate that. If the time at which the observation was taken changes we would also want to investigate that.

We cannot pay attention to changes in observation practice in a selective manner. If we complain about changes to instruments, we have to complain about changes in time of observation. When we actually look at the EFFECT of changing time of observation, we see very clearly that it biases the answer: changing TOB changes the temperature. Attention to details like this is something that WUWT fans should appreciate. There are two approaches to TOB changes:

1. split the data, and call it two stations.

2. correct the bias.
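The two options can be compared on synthetic data in a minimal Python sketch. All of it is hypothetical: an annual series with a 0.02 °C/yr trend, a documented observing change at year 15 that introduces a -0.3 °C step, and a noise-free reference series standing in for neighbouring stations. Splitting the record (option 1) recovers the trend within each segment; estimating the step from station-minus-reference means and removing it (option 2) recovers it over the whole record. This is a simple difference-of-means correction chosen for illustration, not the Karl et al. TOB model or the Menne et al. pairwise algorithm, and the function names are hypothetical.

```python
def ols_slope(y):
    """Ordinary least-squares slope of y against its index (units per step)."""
    n = len(y)
    xbar = (n - 1) / 2
    ybar = sum(y) / n
    num = sum((i - xbar) * (v - ybar) for i, v in enumerate(y))
    den = sum((i - xbar) ** 2 for i in range(n))
    return num / den


def estimate_offset(station, reference, change_idx):
    """Step at a documented change: mean(station - reference) after minus before."""
    diff = [s - r for s, r in zip(station, reference)]
    before, after = diff[:change_idx], diff[change_idx:]
    return sum(after) / len(after) - sum(before) / len(before)


def remove_offset(station, offset, change_idx):
    """Option 2: shift the post-change segment back by the estimated step."""
    return station[:change_idx] + [v - offset for v in station[change_idx:]]


# Hypothetical annual series (deg C): 0.02/yr trend, -0.3 step at year 15
years, change = 30, 15
reference = [0.02 * t for t in range(years)]  # stands in for neighbours
station = [r - (0.3 if t >= change else 0.0) for t, r in enumerate(reference)]

raw_trend = ols_slope(station)
# Option 1: treat the two segments as separate records
split_trends = (ols_slope(station[:change]), ols_slope(station[change:]))
# Option 2: estimate the step and correct it
offset = estimate_offset(station, reference, change)
corrected_trend = ols_slope(remove_offset(station, offset, change))

print(f"raw trend       {raw_trend:+.4f} C/yr")
print(f"split trends    {split_trends[0]:+.4f}, {split_trends[1]:+.4f} C/yr")
print(f"estimated step  {offset:+.3f} C")
print(f"corrected trend {corrected_trend:+.4f} C/yr")
```

In real data the change date has to come from station metadata and the reference is itself noisy, so the estimated step carries an uncertainty that this sketch leaves out.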

Bias correction for TOB has been investigated: at John Daly’s site, here at CA, and in the literature.

Ignoring the need for a correction, pretending that observing practice matters for microsite but doesn’t matter for changing the TOB, is not best practice.

I think that Watts’ initial intentions were good, in that he kept updating the CRN ratings for stations as the team doing the work turned them in. Some participants at these blogs, including me, did some preliminary calculations, and while the results appeared to vary with who was doing the calculations, or more importantly how the calculations were being done, they were not overwhelmingly different from the adjusted results from USHCN (although I thought that with sufficient data one might be able to see significant differences). The gallery at the time was expecting some dramatic differences, or so was my perception. My point at that time was that the number of CRN 1 and CRN 2 stations was very small and that, given the noisy temperature trends amongst even closely spaced stations, seeing a statistically significant difference due to CRN rating would require either a very large difference in trends or a larger number of stations in those classifications. I even suggested grouping CRN123 versus CRN45 at that time.

I admired Watts, and particularly his team’s efforts, in going out into the field and looking at the microclimate conditions first hand. I have often thought that climate scientists, like economists, fling around data and statistics of which they do not have an intimate understanding, and the result could be garbage in, garbage out.

I was puzzled when Watts withdrew the updating of the CRN ratings, until I realized he hoped to get the data analyzed and published. He was slow in accomplishing this task, and in the meantime others published papers based on the CRN findings before Watts did his first paper. I have not been happy with the approach taken by any of these papers, including the one Watts coauthored.

Now Watts has different rating criteria that evidently give different results and obviously make this result, if it were to hold up, a publishable event. I do not understand whether the prepublication is a matter of shopping the results around or not, but I see no way in hell it can be published without the original data and code. After all, Watts is not exactly a climate scientist regular who might be given that exception.

Yeah only real climate scientists can leave out the code and data. That’s one thing we know for sure.

“but I see no way in hell it can be published without the original data and code.”

that’s because he is not a member of the Hockey Team.

What surprises me about all this is the apparent urgency that was created which seems unwarranted and is likely to lead to mistakes and omissions.

More haste less speed seems a worthwhile maxim in science especially when writing about something as slow moving as the Earth’s climate

Tonyb

It seems to me that the big deal with the paper is that a group has taken the trouble to examine the sites and analyse them using physical thinking. This seems to me to be potentially a far superior approach to the sort of adjustment flummery used heretofore. If detail needs sorting out, so be it: at least it won’t be smuggled into the literature, errors and all, by pal review.

I must say, though, that I might not have liked having my name added to a paper in a rush. (It happened to me a couple of times, and both times my new colleagues managed to get my name wrong!!)

Well, I am glad at least Mosher wants to apply the same standards to this paper as to one written by, say, Michael Mann. And I’m afraid I can’t understand how “the statistics” for anything like this can be done over a weekend. It’s troubling. I can’t understand the paper very well, there isn’t enough detail.

From the Watts paper, lines 215–226:

The USHCNv2 monthly temperature data set is described by Menne et al. (2009).

The raw and unadjusted data provided by NCDC has undergone the standard quality-control screening for errors in recording and transcription by NCDC as part of their normal ingest process but is otherwise unaltered. The intermediate (TOB) data has been adjusted for changes in time of observation such that earlier observations are consistent with current observational practice at each station. The fully adjusted data has been processed by the algorithm described by Menne et al. (2009) to remove apparent inhomogeneities where changes in the daily temperature record at a station differs significantly from neighboring stations. Unlike the unadjusted and TOB data, the adjusted data is serially complete, with missing monthly averages estimated through the use of data from neighboring stations. The USHCNv2 station temperature data in this study is identical to the data used in Fall et al. (2011), coming from the same data set.

Is it not the case that Anthony is simply using real_climate_science that would underscore a comparison of oranges to oranges thereby avoiding inconsistency from within the real_climate_science community with respect to their own accepted science (oranges) ??

If this is the case then it would seem to me that criticism surrounding (TOB) is a moot point.

I realize it was probably just miscommunication between the two of you, or maybe a last minute honour Watts thought to give you by listing you since you had pitched in, but one of the things that reassured me about the mathematics behind the paper was your involvement. It was disappointing, to say the least, to have enthusiastically touted this paper to some friends, and then see your post.

But … let that be a lesson to me. I despise self-interested cognitive biases in science, but am hardly immune.

I realize this is an interruption in what you would otherwise be doing, Steve, but I hope that you can help to tighten up the paper and salvage the value there is within it, which I hope is high. But, failing that, if it needs to be criticized, I hope you’ll do that too, with respect and rigour both.

Let the science prevail.

(That said, the conclusions of the paper make 100% intuitive sense to me and I won’t be in the least surprised to see them borne out.)

I put up some initial thoughts here: http://rankexploits.com/musings/2012/initial-thoughts-on-the-watts-et-al-draft/

If the CRN1/2 stations from Fall et al. are indeed mostly included in the new CRN1/2 pool, it does raise some concerns about how a larger effect is being found using laxer criteria.

“Watts et al. say a statistically significant signal was found in data using minimum adjustments.”

The fact that a statistically significant signal can be found in a set of data says nothing about the accuracy of that data. And any conclusion you draw from the statistical analysis is only as strong as the underlying data is accurate.

The raw data is known to have problems. If you refuse to address them, and if they’re significant, it’s garbage in, garbage out.

Watts needs to show that homogenization algorithms are wrong. You do that by analyzing the algorithms and showing where they are wrong, not by asserting that an analysis of raw, flawed data must be better just because it shows a lower trend. That’s essentially what Watts is doing.

Steve: Yes and no. I agree with your comment about the importance of addressing problems in raw data – that’s obviously been a major concern of mine with respect to bristlecones, Yamal and so on, where there are problems more serious than “tobs”. I also agree with your remark about assuming something is better because the result meets expectations. Again a criticism of mine with respect to proxy reconstructions.

I also think that the deconstruction of homogenization algorithms is a different job from presentation of the surface stations data classification and that the two jobs should be kept separate.

That was meant to be a reply to Stephen Rasey’s post below.

Wrong, because Watts is declaring that the homogenization algorithms are wrong, and pretty much stating that it’s due to a desire to show an inflated trend. He didn’t just present his surface stations data classification (hidden, as Mosher has pointed out, where’s the data?), he says they prove the homogenization algorithms are wrong.

If he didn’t go down that path, I’d agree with that. But not only has he gone down that path, but that’s his entire schtick for years, and that’s the major conclusion of his “work”.

How can you, as co-author, have missed this ???

Watts “surface stations data classification” is the result of applying Leroy (2010) siting standards to the existing readily available station data. Not a thing I can see to stop you from duplicating his work and verifying or disproving his results.

Watts identified the data used. He identified the siting standards used. He listed the process they followed. And he showed his results, including how the stations shifted in rating categories from the prior Leroy (1999) standards.

The USHCN Version 2 Serial Monthly Dataset page here:

http://www.ncdc.noaa.gov/oa/climate/research/ushcn/

… provides the 4 data sets for each set of station ratings, along with the MMTS and Cotton Region Shelter (Stevenson) site information used for Menne (2010).

The NCDC Station Histories appear to be here:

http://www.ncdc.noaa.gov/oa/climate/stationlocator.html

WMO-CIMO endorsement of Leroy(2010) standard is here:

1064_en.pdf

Leroy(2010) is here:

CS202_Leroy.pdf

Watts(2009) is here:

surfacestationsreport_spring09.pdf

And Muller’s Station data is here:

berkeley-earth-station-quality.pdf

I am a complete layman. I read the Watts report, did a little reading – mostly at blogs like here, and with 5 minutes of searching I was able to find all the above data links.

Of course, I was a fool for doing so: had I bothered to read the references, I would have found that all of this data was listed in the Watts report itself.

I believe that is all of the data required to reproduce Watts work. I even included the NCDC station history metadata in case you don’t want to do the extensive visual and/or onsite inspection Anthony and his help spent well over a year doing.

Seems to me instead of complaining about his work – if you want to refute it you should just jump in and have at it. Do the work and show where he is wrong.

Sorry dhogaza … forgot to provide you Fall (2010):

r-367.pdf

http://www.surfacestations.org/fall_etal_2011.htm

NOAA’s Climate Reference Network Site Handbook (see Sec. 2.21)

X030FullDocumentD0.pdf

Watts Surface Stations Project site master list:

http://www.surfacestations.org/USHCN_stationlist.htm

(the brief notes should provide an initial screen of suspect stations)

A. Scott: Watts “surface stations data classification” is the result of applying Leroy (2010) siting standards to the existing readily available station data. Not a thing I can see to stop you from duplicating his work and verifying or disproving his results.

One thing that would stop us from verifying his results is that he has not provided a list of the USHCN stations that he has classified, the classification that has been assigned, or the methodology used to make the assignment.

The fact that Google has aerial imagery, that Leroy 2010 explains a new classification scheme, and that USHCN provides its station data freely to the public does not somehow make Anthony Watts’ refusal to provide the station ids that he used, the Leroy 2010 station classifications that he used, or the methods used to make that classification in his paper more palatable. Hide the data; hide the code! 😆

Steve: I agree that there is little point circulating a paper without replicable data – even though this unfortunately remains a common practice in climate science. It’s not what I would have done. I’ve expressed my view on this to Anthony and am hopeful that this gets sorted out. Making the data set publicly available for statistically oriented analysts seems far more consistent with the crowdsourcing philosophy that Anthony’s successfully employed in getting the surveys done than hoarding the data like Lonnie Thompson or a real_climate_scientist.

It would have been nice if you’d spoken out on any of the occasions in which I’ve been refused data. You are entitled to criticize Anthony on this point, but it does seem opportunistic if you don’t also criticize Lonnie Thompson or David Karoly etc.

Ron Broberg (Aug 1 17:34), Re: A. Scott (Aug 1 03:34), Re Ron Broberg comment:

See: surface-stations/#comment-345602

I agree Anthony Watts and crew should be held to the same incredibly tough standards that are required to be met by everyone else in the field of climate science.

So what does that give him before he has to cough up the code the data and all the details — twenty, thirty years? …and a half dozen FOIAs defended to the teeth? Just askin…. 😉

Or we could hang on a few days or weeks and let them deal with the other important issues that have been raised… Relax….

“I also think that the deconstruction of homogenization algorithms is a different job from presentation of the surface stations data classification and that the two jobs should be kept separate.”

Seriously, if you believe it’s a different job, how can you justify Watts position that the trend based on homogenization is “spurious” and inflated by a factor of two?

If it’s properly a different job, Watts should STFU.

If you like his conclusion, he should do the work.

Really, who are you trying to fool here?

“If it’s properly a different job, Watts should STFU.”

And as co-author, you should call him on it.

(I know you’re not really a co-author, as it’s normally understood, but until you make him take your name off the paper, you are a co-author. Time to choose, are you, or not? If not, make him remove your name from the paper, publicly.)

Steve has frequently said that when a novel statistical method is introduced, there should be a paper on details of the technique that is separate from the paper using the technique. His comment above seems no different.

There is a step before RAW data, and that is how you go about collecting it.

Remember this is a problem of data collection because of problems with instruments/sites.

If you don’t get the collection right, whatever you do with the data afterwards does not matter.

Re: KnR (Aug 1 04:15), Finally somebody who agrees with me.

Can I put this on a poster?

Is TOBS bias such an issue, given that the Watts study period was 1978-2008? I note that Fig 3 in the Menne et al paper “THE UNITED STATES HISTORICAL CLIMATOLOGY NETWORK MONTHLY TEMPERATURE DATA – VERSION 2” shows that the TOBS bias trend flattened after 1990… so the impact on Watts’ study should be limited to the 80s (if there is any at all).

In any case from the Menne paper:

“The net effect of the TOB adjustments is to increase the overall trend in maximum temperatures by about 0.012°C dec-1 and in minimum temperatures by about 0.018°C dec-1 over the period 1985-2006.”

So even if we accept that this applies across the entire Watts study period, it is still only about a tenth of the trend Watts is highlighting, i.e. 0.145°C per decade.
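A quick check of that ratio, taking the draft figures quoted above at face value and (as the comment does) assuming they apply across the whole study period. The constant and function names are mine, purely for illustration:

```python
# Figures quoted above, in °C/decade. Assumption: the 1985-2006 TOB adjustment
# trends from the Menne et al draft apply across the whole Watts study period.
TOB_TREND_TMAX = 0.012
TOB_TREND_TMIN = 0.018
CITED_TREND = 0.145  # trend highlighted in the Watts draft, as quoted above


def share_of_trend(adjustment: float, total: float = CITED_TREND) -> float:
    """Return an adjustment's trend as a fraction of the total trend."""
    return adjustment / total


for label, value in (("Tmax", TOB_TREND_TMAX), ("Tmin", TOB_TREND_TMIN)):
    # Format as a whole-number percentage of the cited trend.
    print(f"{label}: {share_of_trend(value):.0%} of {CITED_TREND} °C/decade")
```

That works out to roughly 8% for maxima and 12% for minima, i.e. on the order of a tenth.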

That’s odd. In my version of the Menne et al BAMS paper, the TOBS adjustments are shown in Fig 4 and the corresponding text, starting on p 996, says:

“The net effect of the TOB adjustments is to increase the overall trend in maximum temperatures by about 0.015°C decade-1 (±0.002) and in minimum temperatures by about 0.022°C decade-1 (±0.002) during the period 1895-2007.”

Eyeballing Fig 4, over 1979-2008 the trend difference due to TOBS looks like 0.06 °C/decade.
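Rather than eyeballing, the trend difference could be computed as the least-squares trend of the adjusted-minus-raw series over 1979-2008. A minimal sketch, assuming you already have annual raw and TOB-adjusted values aligned by year; the function names and the synthetic series below are hypothetical, not taken from Menne et al:

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


def tob_trend_difference(years, raw, adjusted, start=1979, end=2008):
    """Trend (°C/decade) of adjusted minus raw over start..end inclusive.

    Assumes annual values: years, raw and adjusted are equal-length sequences.
    """
    kept = [(yr, adj - r) for yr, r, adj in zip(years, raw, adjusted)
            if start <= yr <= end]
    yrs = [yr for yr, _ in kept]
    diffs = [d for _, d in kept]
    return 10.0 * ols_slope(yrs, diffs)  # per year -> per decade


# Synthetic example (made-up numbers): the adjustment grows by 0.006 °C/yr.
years = list(range(1970, 2011))
raw = [0.02 * (yr - 1970) + 0.1 * ((yr % 3) - 1) for yr in years]
adjusted = [r + 0.006 * (yr - 1979) for yr, r in zip(years, raw)]
print(round(tob_trend_difference(years, raw, adjusted), 3))  # prints 0.06
```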

141108.pdf

Got that from the above… also didn’t realise it was different from the BAMS paper – but interestingly the trend for 1985-2006 is specified there [and this is the period of interest].

My point is that, given Anthony is only referencing the period from 1978-2008, something like 50% of the stations would ALREADY have changed TOBS (Time of Observation) from evening to morning. So these cannot be an issue. That should logically reduce this already small overall trend. See DeGaetano 2000:

http://journals.ametsoc.org/doi/pdf/10.1175/1520-0477(2000)081%3C0049%3AASCSOT%3E2.3.CO%3B2

But thinking about this a bit further, maybe there is an MMTS conversion issue in play, and not only because you go from a LiG reading to an electronic one: Menne et al suggest that most of the HCN sites were converted in the 80s. Modern base units record daily min/max temps for up to 35 days, so I would assume that this would be done on a strict daily basis (midnight to midnight).

nimbus-spec.pdf

But I’m not sure if the earlier ones did this:

http://www.crh.noaa.gov/ind/?n=mmts

So a conversion from an old-style MMTS to a newer one may introduce another TOB issue, where you go from a morning reading to a midnight one. Is this understood and accounted for? Wouldn’t this introduce a warming bias?

I wonder if there was a typo in the draft – 1985-2006 should have been 1895-2006? Otherwise it’s an odd time period to choose.

There was a significant change in Fig 4 between draft and final. The flattening post 1990 that you noted has gone away.

Here is the 1986-2006 version. Good to see that peer review works: showing an impact of TOBS changes that ignores most of the 1970-90 switchover is disingenuous at best.

But if the change in observation time was from afternoon to morning, I’m not sure the adjustment makes sense: “The net effect of the TOB adjustments is to increase the overall trend in maximum temperatures by about 0.012°C dec-1 and in minimum temperatures by about 0.018°C dec-1 over the period 1985-2006.” The bias in maximum readings is a positive bias; namely, the measurement is the maximum of the tail end of the previous day and the current day’s maximum. How can fixing that bias yield an increase in max temps?

Never mind. If they’re making the 7am measurements look like 5pm measurements, they’d need to correct the cold bias in the minimums and reintroduce the warm bias in the maximums.
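To see why the reset hour matters, here is a toy simulation. It is synthetic and rests on my own assumptions (a sinusoidal diurnal cycle peaking at 15:00 and bottoming at 03:00, plus random day-to-day offsets); it is not NCDC’s TOB adjustment method. A 5 pm reset lets a hot afternoon carry over into the next day’s maximum, and a 7 am reset lets a cold morning carry over into the next day’s minimum, relative to midnight readings:

```python
import math
import random


def synthetic_hourly(n_days, amplitude=6.0, day_sd=3.0, seed=1):
    """Hourly temperatures: a fixed diurnal cycle plus a random offset per day.

    Hypothetical model: peak at 15:00, minimum at 03:00, Gaussian day-to-day
    offsets. Real stations are messier; this only illustrates the mechanism.
    """
    rng = random.Random(seed)
    temps = []
    for _ in range(n_days):
        offset = rng.gauss(15.0, day_sd)
        for hour in range(24):
            temps.append(offset + amplitude * math.cos(2 * math.pi * (hour - 15) / 24))
    return temps


def maxmin_readings(hourly, reset_hour, days):
    """(max, min) readings from a max/min thermometer read and reset daily.

    The reading assigned to day d covers the 24 hourly samples before the
    reset at reset_hour on day d; reset_hour=24 means midnight at the end of
    day d. The sample at the reset hour itself starts the next window.
    """
    readings = []
    for d in days:
        end = 24 * d + reset_hour
        window = hourly[end - 24:end]
        readings.append((max(window), min(window)))
    return readings


def mean(values):
    return sum(values) / len(values)


N_DAYS = 365
hourly = synthetic_hourly(N_DAYS)
days = range(1, N_DAYS - 1)  # skip edge days so every window is complete

summary = {}
for label, reset_hour in (("midnight", 24), ("7 am", 7), ("5 pm", 17)):
    readings = maxmin_readings(hourly, reset_hour, days)
    summary[label] = (mean([hi for hi, _ in readings]), mean([lo for _, lo in readings]))
    print(f"{label:>8}: mean max {summary[label][0]:.2f}, mean min {summary[label][1]:.2f}")
```

Under these assumptions the 5 pm maxima come out warmer than the midnight maxima and the 7 am minima come out colder, which is the direction of bias described above; the size of the effect depends entirely on the made-up amplitude and day-to-day variability.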

That’s not odd at all; that’s what the data shows.

This is a particularly funny thread. People are worried that Anthony rushed things and that led to errors.

Yes, he did, and it did. That wasn’t the point of the timing of the release. He hasn’t submitted, so there’s no need to rush from this point. He’s doing exactly what he said: let the blogs have at it. What Steve Mc is doing here will only add to the paper. The work on station siting can stand alone without the TOBS consideration; the TOBS analysis will only give a fuller picture.

The timing was a righteous poke back at some tricks played earlier. I like it. Goose/gander.

Watts should have nailed down who is and isn’t a co-author first (and whether the co-authors stand behind the paper).

Gosh, now I’m on moderation, very cool!

Steve: Not personally. Don’t get overinflated. Some words trigger moderation.

crosspost from WUWT:

From my limited, non-technical understanding, the data is readily available publicly, with the exception of Anthony’s siting results using Leroy 2010. This includes the raw and adjusted temperature data along with the Leroy 2010 rating specs, which would allow anyone to duplicate the work themselves.

To me that seems preferable here: anyone attempting to duplicate the work should start from the beginning rather than working backward from the conclusions.

The paper notes they applied the readily available specs of Leroy 2010 to the Fall 2010 USHCNv2 data set.

They identify the data they use:

“We make use of the subset of USHCNv2 metadata from stations whose sites have been classified by Watts (2009)” and; “site rating metadata from Fall et al (2011)”.

They further narrow:

“Because some stations used in Fall et al. (2011) and Muller et al. (2012) suffered from a lack of the necessary supporting photography and/or measurement required to apply the Leroy (2010) rating system, or had undergone recent station moves, there is in a smaller set of station rating metadata (779 stations) than used in Fall et al (2011) and Muller et al. (2012), both of which used the data set containing 1007 rated stations.”

Is it correct to expect that Steven Mosher and Zeke would have access to this station data, since Watts used the same data as Muller 2012 in this regard?

They included a description of the data used, their methods (how they calculated the numbers), and their conclusions.

To me it would seem much more relevant, for those interested in replicating to follow the entire process – and see how their siting category counts came out.

And only THEN compare to Watts conclusions.

I would also be interested in seeing how the USCRN stations, which were designed per Leroy 1999 (“which was designed for site pre-selection, rather than retroactive siting evaluation and classification”) fare under a review using Leroy 2010.

Watts 2012 notes “Many USHCNv2 stations which were previously rated with the methods employed in Leroy (1999) were subsequently rated differently when the Leroy (2010) method was applied in this study”…

Again, it would be very interesting, and potentially valuable, to see if the new USCRN sees the same siting quality results using Leroy 2010.

Having personally visited the CRN site north of Seattle, and seen photos of a number of the other sites, I’d be quite surprised if any ended up below the top site ranking using Leroy 2010.

Regarding replication, it seems to me that the different aspects such as statistical analysis, checking Leroy 2010 scores, and so forth, are best done by those with expertise and interest in those areas. There’s no reason to demand that a single person or group do it all, or that it be done at the same time, or even in a certain order.

Personally, I think it will be pretty clear upon looking at 10 or 20% of the sites whether Anthony’s new Leroy 2010 scores are done correctly, and that evaluation of the statistical analysis should not wait for a re-scoring of all the sites.

Steve, you note that amplification is negligible over land in models with respect to long-term trends. This is true globally in models, but I have to wonder to what extent this effect varies (in models) from location to location. I wonder about this because a few years back I empirically estimated the amplification factor globally based on interannual temperature fluctuations. I found it to be in the model ballpark (perhaps a bit larger, actually), which implied a large warming bias in the surface data, a large cooling bias in the satellite data, some less large combination of those two, or an unknown real climatic effect on lapse rate variation that only operates on the long term and is absent from current models:

I was motivated to see if I could get a similar result for the US, so I compared USHCN data from NCDC with UAH data for the same area. Much to my surprise, the slope for twelve month smoothed and subsequently detrended data (UAH as X, USHCN as Y) indicated more variation of temperature at the surface: a slope of about 2.23 (2.32 if you don’t detrend). This leads to a very slight cooling of the surface relative to the satellite record adjusted to surface variation levels. This suggests to me that global trends are biased warm but there is not likely to be a significant bias in the US record.
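A minimal sketch of that procedure (12-month smoothing, linear detrending, then an OLS slope with UAH as X and USHCN as Y), assuming two aligned monthly anomaly series of equal length. The function names are hypothetical, this is not the code used for the numbers above, and no real data is included:

```python
import math


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


def moving_average(values, window=12):
    """Simple moving average; the output is window - 1 points shorter."""
    return [sum(values[i:i + window]) / window
            for i in range(len(values) - window + 1)]


def detrend(values):
    """Remove the least-squares linear trend against the time index."""
    t = list(range(len(values)))
    slope = ols_slope(t, values)
    intercept = sum(values) / len(values) - slope * sum(t) / len(t)
    return [v - (intercept + slope * ti) for ti, v in zip(t, values)]


def amplification_slope(satellite, surface, window=12, remove_trend=True):
    """Slope of smoothed (optionally detrended) surface on satellite anomalies."""
    x = moving_average(satellite, window)
    y = moving_average(surface, window)
    if remove_trend:
        x, y = detrend(x), detrend(y)
    return ols_slope(x, y)


# Synthetic check (made-up series): surface = 1.3 * satellite + trend + offset.
months = range(360)
sat = [math.sin(2 * math.pi * m / 40) + 0.002 * m for m in months]
sfc = [1.3 * s + 0.001 * m + 0.2 for m, s in zip(months, sat)]
print(round(amplification_slope(sat, sfc), 3))  # prints 1.3
```

Because smoothing and detrending are both linear, the made-up trend and offset drop out and the detrended slope recovers the 1.3 built into the synthetic series; with remove_trend=False, any trend difference between the two series leaks into the slope.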

If the time of observation should turn out to be a problem during the last 30 years, that would be really, really shocking.

(PS. So I learned what TOBS is in meteorology; would anybody inform me what STFU means in Canada?)

So Climate Audit has new rules. My own experience was that

1) if you are impolite

2) if you have intellectually nothing to offer

you get snipped.

Seems this guy, who is and has, is treated differently.

Steve is more tolerant towards his critics than his supporters

The phrase says more about the person who used it than it does about Steve, so leaving it in place could be a fair response.

Steve: Precisely so. I expect regular Climate Audit readers and commenters to comment politely and am disappointed when they don’t. If someone does not comply with such policies, I prefer that people do not respond to such comments.

Some, not Eli to be sure, say that Tony Watts’ rush to press release was driven to come out before John Christy’s testimony in the Senate today.

Steve: I know that it was more related to Muller. It was definitely a mistake to let the Berkeley thing get under his skin; Anthony realizes that now.

Seems like I remember a similar situation with the earlier BEST results. Strange, but I don’t recall the same some and definitely not Eli complaining vociferously about such an egregious action.

Definitely crickets …

General practice is to post/send out preprints at the same time as submission because having a manuscript in good enough shape to post/send out means that it is also in good enough shape to submit. There could be a few days either way in general. People are using arXiv today to establish precedent for submissions because of how long review can take.

As Eli recalls that is what Berkeley did, and it is quite standard. What Watts did is post a draft, and a draft full of blunders.

Eli likes to repeat himself by posting the same text multiple times…

You may not call it a “draft”, but the initial Berkeley release was rushed out filled with a generous amount of errors. Furthermore, it appears that the updated “manuscript in good enough shape to post/send out” is not quite so good enough. However, the BEST folks did not seem to be hindered from milking the media without indicating the publication status of the document.

Maybe “Eli recalls” selectively…

RomanM,

It is true that we do not know the publication status of the BEST document. We could however interpret Mosher here as implying that McKitrick’s review may have been found to be “not quite so good enough” by the editors:

http://scienceblogs.com/stoat/2012/07/30/cage-fight/

According to an update on Ross McKitrick’s web site:

Sounds to me like it has been rejected…

SOP at AGU journals now. If they think major changes are needed, they reject with a suggestion to rewrite in view of the referees’ reports. It unclogs the pipeline. BEST has updated their web page to show that one of their papers has been accepted subject to some changes in the methods paper, which is also under consideration.

Josh, you have a bad habit of making declarative sentences with assertions that may or may not be true.

This has been the polite language for rejecting non-viable statistics journal manuscripts as far back as I can remember. At that point, the paper is no longer under consideration for publication by the journal so it has indeed been rejected. Should the manuscript be rewritten, it is resubmitted as a new paper.

Your efforts in spinning facts based on concepts such as “it depends on what the exact meaning of the word rejected is” come across as comical…

If you don’t know the meaning of rejected, better go back to school and redo your English comprehension classes.

My comment is aimed at Bunny Boi

snip –

Steve- I normally don’t snip critics but you’re making an untrue factual allegation here. I did not do “much of the statistical analysis” in the paper. I did not even see the paper until Friday; I did one analysis, which unfortunately did not catch a latent problem. It would have been more appropriate to acknowledge me than to list me as a coauthor, but unfortunately I did not catch this as the grandchildren were over visiting on Saturday night and Sunday morning and I missed some emails.