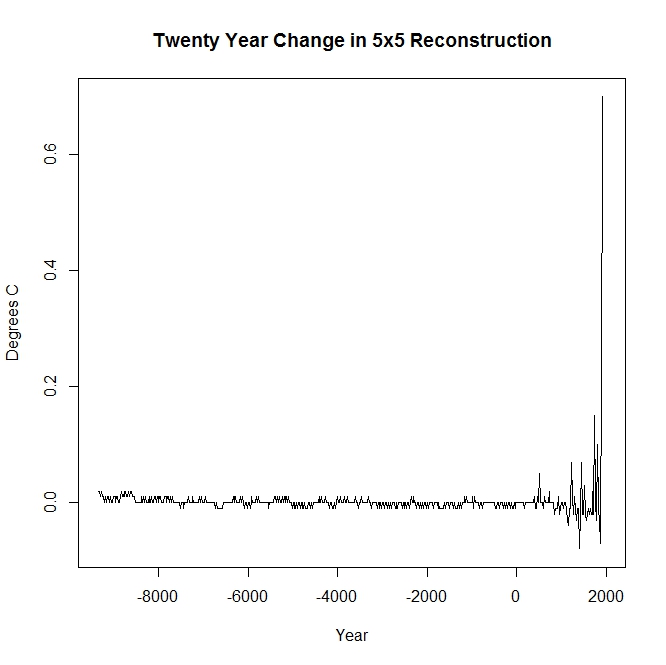

So far, the focus of the discussion of the Marcott et al paper has been on the manipulation of core dates and their effect on the uptick at the recent end of the reconstruction. Apologists such as “Racehorse” Nick have been treating the earlier portion as a given. The reconstruction shows that mean global temperature stayed pretty much constant varying from one twenty year period to the next by a maximum of .02 degrees for almost 10000 years before starting to oscillate a bit in the 6th century and then with a greater amplitude beginning about 500 years ago. The standard errors of this reconstruction range from a minimum of .09 C (can this set of proxies realistically tell us the mean Temperature more than 5 millennia back within .18 degrees with 95% confidence?) to a maximum of .28 C. So how can they achieve such precision?

The Marcott reconstruction uses a methodology generally known as Monte Carlo. In this application, they supposedly account for the uncertainty of the proxy by perturbing both the temperature indicated by each proxy value as well as the published time of observation. For each proxy sequence, the perturbed values are then made into a continuous series by “connecting the dots” with straight lines (you don’t suppose that this might smooth each series considerably?) and the results from this are recorded for the proxy at 20 year intervals. In this way, they create 1000 gridded replications of each of their 73 original proxies. This is followed up with recalculating all of the “temperatures” as anomalies over a specific 1000 period (where do you think you might see a standard error of .09?). Each of the 1000 sets of 73 gridded anomalies is then averaged to form 1000 individual “reconstructions”. The latter can be combined in various ways and from this set the uncertainty estimates will also be calculated.

The issue I would like to look at is how the temperature randomization is carried out for certain classes of proxies. From the Supplementary Information:

Uncertainty

We consider two sources of uncertainty in the paleoclimate data: proxy-to-temperature calibration (which is generally larger than proxy analytical reproducibility) and age uncertainty. We combined both types of uncertainty while generating 1000 Monte Carlo realizations of each record.

Proxy temperature calibrations were varied in normal distributions defined by their 1σ uncertainty. Added noise was not autocorrelated either temporally or spatially.

a. Mg/Ca from Planktonic Foraminifera – The form of the Mg/Ca-based temperature proxy is either exponential or linear:

Mg/Ca = (B±b)*exp((A±a)*T)

Mg/Ca =(B±b)*T – (A±a)

where T=temperature.

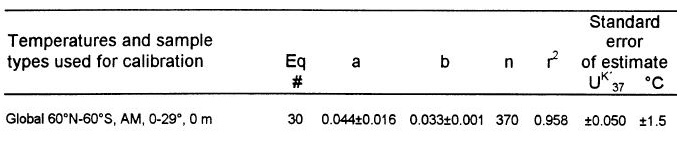

For each Mg/Ca record we applied the calibration that was used by the original authors. The uncertainty was added to the “A” and “B” coefficients (1σ “a” and “b”) following a random draw from a normal distribution.b. UK’37 from Alkenones – We applied the calibration of Müller et al. (3) and its uncertainties of slope and intercept.

UK’37 = T*(0.033 ± 0.0001) + (0.044 ± 0.016)

These two proxy types account for (19 (Mg/Ca) and 31 (UK’37)) 68% of the proxies used by Marcott et al. Any missteps in how these are processed would have a very substantial effect on the calculated reconstructions and error bounds. Both of them use the same type of temperature randomization so we will examine only the Alkenone series in detail.

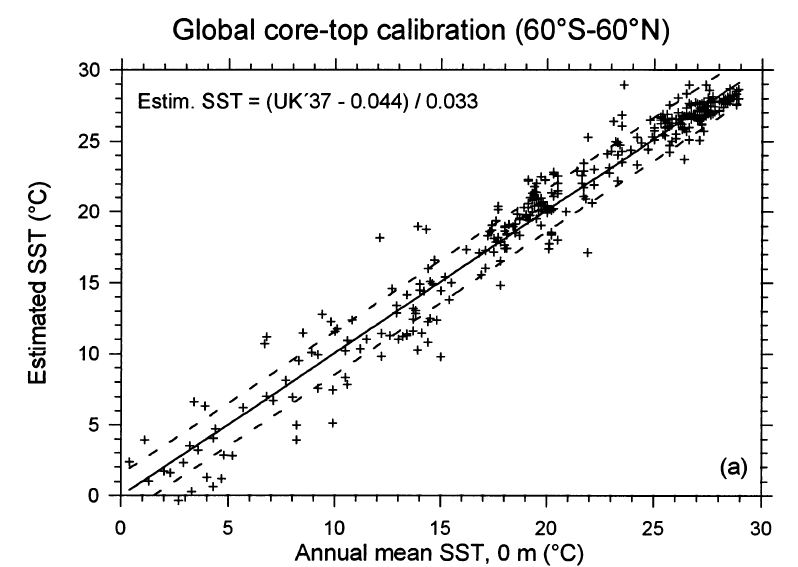

The methodology for converting proxy values to temperature comes from a (paywalled) paper: P. J. Müller, G. Kirst, G. Ruthland, I. von Storch, A. Rosell-Melé, Calibration of the alkenone 497 paleotemperature index UK’37 based on core-tops from the eastern South Atlantic and the 498 global ocean (60N-60S). Geochimica et Cosmochimica Acta 62, 1757 (1998). Some information on Alkenones can be found here.

Müller et al use simple regression to derive a single linear function for “predicting” proxy values from the sea surface temperature:

UK’37 = (0.044 ± 0.016) + (0.033 ± 0.001)* Temp

The first number in each pair of parentheses is the coefficient value, the second is the standard error of that coefficient. You may notice that the standard error for the slope of the line in the Marcott SI is in error (presumably typographical) by a factor of 10. These standard errors have been calculated from the Müller proxy fitting process and are independent of the Alkenone proxies used by Marcott (except possibly by accident if some of the same proxies have also been used by Marcott). The relatively low standard errors (particularly of the slope) are due to the large number of proxies used in deriving the equation.

According to the printed description in the SI, the equation is applied as follows to create a perturbed temperature value:

UK’37 = (0.044 + A) + (0.033 + B)* Pert(Temp)

[Update: It has been pointed by faustusnotes at Tamino’s Open mind that certain values that I had mistakenly interpreted as standard errors were instead 95% confidence limits. The changes in the calculations below reflect the fact the the correct standard deviations are approximate half of those amounts: 0.008 and 0.0005.]

where A and B are random normal variates generated from independent normal distributions with standard deviations of 0.016 0.008 and 0.001 0.0005, respectively.

Inverting the equation to solve for the perturbed temperature gives

Pert(Temp) = (UK’37 – 0.044)/(0.033 + B) – A / (0.033 + B)

If we ignore the effect of B (which in most cases would have a magnitude no greater than .003), we see that the end result is to shift the previously calculated temperature by a randomly generated normal variate with mean 0 and standard deviation equal to 0.016/0.033 = .48 0.008/0.033 = 0.24. In more than 99% of the cases this shift will be less than 3 SDs or about 1.5 0.72 degrees.

So what can be wrong with this? Well, suppose that Müller had used an even larger set of proxies for determining the calibration equation, so large that both of the coefficient standard errors became negligible. In that case, this procedure would produce an amount of temperature shift that would be virtually zero for every proxy value in every Alkenone sequence. If there was no time perturbation, we would end up with 1000 almost identical replications of each of the Alkenone time series. The error bar contribution from the Alkenones would spuriously shrink towards zero as well.

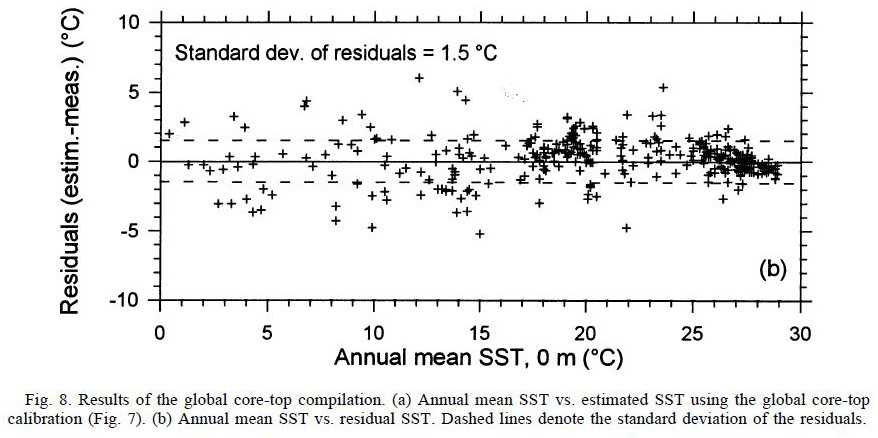

What Marcott does not seem to realize is that their perturbation methodology left out the most important uncertainty element in the entire process. The regression equation is not an exact predictor of the the proxy value. It merely represents the mean value of all proxies at a given temperature. Even if the coefficients were known exactly, the variation of the individual proxy around that mean would still produce uncertainty in its use. The randomization equation that they should be starting with is somewhat different:

UK’37 = (0.044 + A) + (0.033 + B)* Pert(Temp) + E

where E is also a random variable independent of A and B and with standard deviation of the predicted proxy equal to 0.050 obtained from the regression in Müller:

The perturbed temperature now becomes

Pert(Temp) = (UK’37 – 0.044)/(0.033 + B) – (A + E) / (0.033 + B)

and again ignoring the effect of B, the new result is equivalent to shifting the temperature by a single randomly generated normal variate with mean 0 and standard deviation given by

SD = sqrt( (0.016/0.033)2 + (0.050/0.033)2 ) = 1.59

SD = sqrt( (0.008/0.033)2 + (0.050/0.033)2 ) = 1.53

The variability of the perturbation is now three 6.24 times as large as that calculated when only the uncertainties in the equation coefficients are taken into account. Because of this, the error bars would increase substantially as well. The same problem would occur for the Mg/Ca proxies as well, although the magnitudes of the increase in variability would be different. In my opinion, this is a possible problem that needs to be addressed by the authors of the paper.

The regression plot and the residual plot from Müller give an interesting view of what the relationship looks like.

I would also like someone to tell me if the description for ice cores means what I think it means:

f. Ice core – We conservatively assumed an uncertainty of ±30% of the temperature anomaly (1σ).

If so, …

264 Comments

Ultimately we are interested in the uncertainty between the result of the calculation and the actual temperature. In statistics we infer this kind of error from the sample variation which is usually a reasonable assumption. But what happens to this assumption when you start to use a Monte Carlo method to bulk up your sample?

To give an extreme example, suppose that only one proxy measurement was input into the procedure. The Monte Carlo method would then inflate this to a respectable looking sample of 1000 data points. The variation of this respectable looking sample could then be accurately computed, but in fact it would tell us absolutely nothing about how far away we are from real temperatures. You can’t manufacture certainty out of nothing.

I confess that I have not time to read Marcott’s paper to see how this issue was addressed.

Re: Ian H (Apr 4 20:19),

Agreed. I had assumed they had used a polynomial fit of order N+1 to connect N points. But to connect them with straight lines? This results in infinite acceleration in the rate of temperature change at each of the data points. Hardly a good starting point if you are trying to improve resolution.

My initial impression was the same as Ian’s. But then I thought nothing that crazy could get published. Perhaps it could? Is this in essence what they have done?

You want to estimate the mean height of the male population of a town. You sample 73 people. Write down their heights. You then perturb each of them by generating a normal realisation with standard deviation 1sigma (As far as I can see, the value for sigma is the sample standard deviation of the 73). Now repeat this 1000 times. This gives you 1000 estimates, each based on a “sample” size 73. Take the average of your 1000 estimates as your estimator of the town’s mean male height and use the sample standard deviation of this “sample” size 1000 as your error estimate.

As Ian says, you cannot erradicate bias by generating more data points from the original ones. Also, you cannot increase precision. To see this, imagine 10 million new estimates, rather than 1000. The standard deviation of the resulting estimate would very close to that derived using the original 73, but cannot be lower. However, if you treated this as 10 million times 73 sample points of actual heights, the standard deviation of the resulting estimate would be very close to zero.

OK, there are no towns population 73 million. Assume the sampling is “with replacement”. i.e. men can be measured more than once.

I think what I am trying to say is an abstracted illustration, applied to a simple problem, of Steve’s post starting from

So what can be wrong with this?……

Nice example. However, it seems to me Marcott et al measured the height of all people – young, mature, old, men, women, from the Netherlands, Vietnam, West Africa, etc. That E term looks bigger and bigger.

Also I am pretty sure that they perturbed on the timing of the temp measures not the temp measures themselves. In the paper they say they used a 1C proxy uncertainty. This seems low compare to Muller’s estimate for Alkenones.

It sounds like they are trying to move the points on the proxies around to see if they can get then to “line up”, and then when they have the best fit between the proxies they call this the actual signal.

However, this approach ignores the obvious fact that these proxies are regional and we know that different region do not move in unison. Often when it is hot one place it is cold in another – for example the polar see-saw.

Rather than improving the resolution in this case it would just as likely tend to create nonsense, as it tried to shift the points around forward and backward in time to try and invert one proxy to match the other. This could result in high temps being shifted in one direction and low temps in the other and create trends where there were none.

My initial thoughts on this technique is that it might work with multiple proxies from a similar location to reduce error, but over widespread area it could be poorly behaved because the underlying assumption is wrong. There is nothing to say that the proxies from different regions should be expected to move in unison, have similar magnitudes, or even move in the same direction.

I’m very strongly reminded of the tree ring calibration problem. I wonder if this isn’t another example of selection bias? In effect they are using the “average” temperature of the proxies to select which proxies to use. The proxies that stray from the “average” are adjusted to match the average – in effect they are de-selected through adjustment. There appears to be a huge opportunity to introduce (amplify) error.

Would someone assist? Tamino has put in some artificial spikes to show that these would be detected using Marcott’s methods (I think) and as such spikes are not seen anywhere in Marcott’s reconstruction but are seen in the grafted temperature records for the 20th century, he concludes that it is only in the 20th century that such marked increases have occurred in an 11500 year time span. Because I’m banned from Tamino’s site for arguing and generally not showing sufficient obeisance I can’t ask if the proxies for the 20th century show this spike. I understand that there are problems using recent proxies, I don’t really know what they are but if one can artificially insert spikes in earlier periods of the time frame studied to see if they can be detected it might be informative to see if such spikes are in fact present by determining temperature in the 20th century using proxies rather than instrumental measurements. Apologies in advance for my obvious ignorance, my area of scientific expertise is biochemistry not climate science

Ian, Physicist Clive Best says he has debunked Tamino’s last post (looks plausible though I can’t judge). Also notes that Tamino has blocked him! The notorious “open mind” of Grant Foster aka Tamino strikes again….

Re: Ian (Apr 4 20:24),

Tamino and RC have been excited ever since I posted this article at their web-sites:

“as such spikes are not seen anywhere in Marcott’s reconstruction but are seen in the grafted temperature records for the 20th century, he concludes that it is only in the 20th century that such marked increases have occurred in an 11500 year time span.”

I don’t think that’s his reasoning. He says elsewhere that the proxies are particularly unable to resolve 20th Cen, because of declining numbers and end effects. But we know there was a spike (CRU), and he’s saying that a spike like that would have shown up in the earlier record.

Again, there is a spike in instrumental data but it has never been detected by a reasonable proxy. This is very annoying.

“he’s saying that a spike like that would have shown up in the earlier record”

But there was at least one such spike, though a cold one, the 8.2 KA event, and it doesn’t show up.

Nick: As I understand it, no spike equivalent to the 20th century would show up in the proxy record because of the low resolution of the proxy record, right? I seem to remember reading about a 300-year resolution, though perhaps some proxies are better than that?

Wayne,

300 year was supposed to be the upper limit – yes, many are better. But even though they might not show up well in individual proxies, when you average 73 there is a better chance. I haven’t done the calc myself.

Wayne

Source: Revkin’s interview of Jeremy Shakun at Dotearth April 1

Nick: “when you average 73 there is a better chance”.

Two comments: 1) “better chance” is no a very reliable method, and 2) averaging tends to smooth so I’d say averaging 73 items makes it less likely.

And as David L Hagen says below, there’s nothing in the data that can show anything less than 300-year-cycles. No amount of statistical processing will pull out spikes of 50 years. None.

What you have is centuries of highly-smoothed data followed by a half century of barely-smoothed (because there’s not much) data. That will make a hockey stick every time. Try it yourself.

Re: Ian (Apr 4 20:24),

Tamino has played a magician’s trick on you. He hasn’t added the spikes to the location where the proxies were created, he has drawn them on top of the proxy data. To understand this by analogy, consider this:

Adding additional planets around stars does not make them detectable to astronomers 50 years ago. These additional planets would be real spikes. However, drawing picture of planets on the old photos will certainly make them detectable! These are Tamino’s spikes. Your half blind old granny could detect them!! So, no surprise Marcott was able to do the same.

Re: Nancy Green (Apr 4 21:25),

Nancy, I like your analogies. I believe you’re exactly correct about “drawing pictures of planets on the old photos.”

Here’s approximately how I put it to Tamino in a blocked post, and also on Clive Best’s:

(I created a comment for Tamino pointing out two apparent flaws in his analysis, and suggesting workarounds. He’s not released it…)

To a person familiar with how data works it is visually obvious that Tamino’s methodology has a problem. One vs 100 vs 1000 perturbations in his method produce almost identical spike results, but with ever-smoother background data.

I believe Roman’s analysis provides a mathematical background for why Marcott AND Tamino’s methods simply don’t work. In essence, they are oversharpening the data, and in Tamino’s case he’s oversharpening an introduced digital defect.

1) Real world data would never introduce exactly identical spikes in X (time) and Y (amplitude) in all 73 proxies. Doing so introduces what is essentially a digital defect in the data… the same as an artificial dropout, or a scratch on a CD (or in Nancy’s analogy, a painted-on planet 🙂 )… When it is so perfectly aligned in the raw data, the defect will naturally survive a wide variety of processing algorithms. Yet this is what Tamino did. In the real world, different proxies will reflect climate in different ways, with at least slightly different temperature responses (assuming they are all temp proxies!) Even real thermometers don’t all produce the exact same signal.

Tamino should have had proxy-dependent variability, as well as some level of randomness, in his spike-production method.

2) Tamino’s methods are not fully explained with respect to perturbation and time-adjustment (end cap)… Steve M showed that the decisions underlying those methods and parameters are crucial to whether a spike even shows at all in modern times.

Now RomanM shows how the original Marcott method over-sharpens the data.

My final thought: IIRC, the strange thing about the “spike” in Marcott is that it is not seen in the unprocessed raw data. By whatever means, the spike is a feature of the processing methods and parameters. Yet these simulations of paleo “spikes” involve introducing raw-data spikes and determining whether the processing will eliminate the spikes. Seems like inverted tests to me!

I’m sensing two questions are important:

a) If there were temp ‘spikes’ in the past, would they be visible

b) How likely is it that processing methods and parameters will produce a spike that’s not in the original data?

Re: MrPete (Apr 4 22:06),

I’m sensing two questions are important:

a) If there were temp ‘spikes’ in the past, would they be visible

==========

As per my more detailed analogy, they would only be visible if the resolution of the proxy is sufficient to make them detectable. You can study the old photo’s of other stars all you want and you will never find a planet – unless “Tamino” has drawn one in. Yet newer, high res techniques now make it appear that planets are quite common.

A) Yes, but only if they were originally large enough to pass through the filtering process. They would be heavily attenuated from their original form. Furthermore, depending upon circumstances, they might not resemble their original form.

B) Zero, unless the processing method was designed to do so. Legitimate processing techniques, implemented correctly, should never add information, they should only make existing information easier to analyze.

Mark

It is quite easy to figure out, how “real world data” would if represented by such proxies.

With a 300 years resolution, data of +-150 years would be averaged. Temperatures from 1863 +-150 years would then contain data from the little ice age as well as from today averaged in one data point.

On top of this there are dating errors when combining different proxies, leading to a further spread of averaged data in use and a further loss of variation.

On top of that such proxies do not represent only temperature but various other related and unrelated variables which will contribute to a further loss of variation.

So their use of Monte Carlo allowed them to have smaller error bars thus giving the impression of greater confidence/accuracy. The more I hear/read the more I believe everything they did was to produce a specific result. GO TEAM!

Great stuff here Roman. Leaving the prediction error out of the Monte Carlo model? Tsk. And of course the assumptions of the Muller et al calibration model (and any associated variance attenuation) are also imported into Marcott’s reconstruction as well. Then of course we have the uncertainty of the offset alignment with modern temperatures. This could take a while.

This is the second time you have dropped a comment on this matter. You wouldn’t by any chance be planning to take a closer look at this now would you? 😉

Roman:

This is very elegantly and clearly stated.

Have you looked at Nick Stokes tool that allows you to visualize all the proxies? I am not sure technically how it relates to your analysis above but the lack of variance of the proxy anomalies particularly between roughly 5000 and 7000 BP looks very strange especially given Figure 8 that you very helpfully pasted into your post and the SE of +/- 1.5C.

I would like to see what the error bars would look like if done correctly!

Scott,

I expect that you would not see them assuming you kept the y-axis constant. 😉

Roman M,

I’m not following your logic here.

Assume no autocorrelation.

If I fit a model with residual error, E, I can generate realisations from the model by predicting mean model values and sampling from E; OR I can sample from the coefficient distributions and set E to zero. If I allow both the coefficients and the residual error to vary for each realisation then i will end up with an overestimate of the error variance. No?

Muller’s coefficients were a fit to the mean. The actual observations (actually residuals) are distributed about the mean with a SD of 1.5C. Where is that accounted for?

I don’t believe that is necessarily the case here. The model estimates are calculated from one sample and then the predictions are made on a data which are external to that sample. The error in the coefficients is independent of the E for the data to which the prediction is applied. You are dealing with two independent sources of uncertainty and both are playing a role. There is also a second step in which one has to solve for the “temperature” perturbation which does make the situation more complicated. I am willing to be convinced that you are right if you can come up with a good argument.

Either way, I don’t think that what was done by Marcott is correct.

Roman,

I commented below that I just couldn’t understand why you’re adding in a measure of Muller’s residuals (which I presume is E). I still can’t, but it seems now to me that you might be doing so because M did not include varying UK37 according to its uncertainty. It surely wouldn’t make sense to include both.

Just looking at your math, I think your first equation should actually be

U=0.044+A+(0.033+B)*(T+δT)

where U=UK37, δT=Pert(Temp)

Then to first order, we’ve created a random variable

δT=(-A-T*B)/0.033 (differs from yours by T*B)

But then you take mean of 1000. That should make the change very small, otherwise 1000 is not enough. The only thing of interest should be the mean of product terms. And here, I suspect that is very small too.

You want to add in E. The only way I can make sense of that is as a proxy for δU – uncertainty in UK37. But again, just adding that should leave no net change after mean of 1000.

Incidentally, I presume A and B are independent of time. It wouldn’t make much sense to think of the interpolation formula varying randomly from one time to the next. In that case, any effect they did have is likely to vanish after taking anomalies.

It seems to me that it’s only perturbing the age model that does anything interesting.

You are correct on the first part, but wrong on the second. Yes, the E is due to the fact that even if the regression line predicting proxies from temperature is known exactly, the observed proxies will not all fall exactly on the regression line. The uncertainty due to the fact that the regression coefficients are not known exactly contributes uncertainty independently of E. Saying that it wouldn’t make sense to account for both is statistically naive.

In fact, both are taken into account in a much less clumsy fashion than varying coefficients as done in Marcott et al when one calculates prediction intervals in regression. Müller does not overtly give enough information on the observed temperatures used in the regression (one needs the means and variance of those temperatures) to do the exact calculation and I am not sure whether I can infer them from the information given. However, if you look at the formula for the prediction interval, you will see that the end result has a lower bound of the standard deviation of E. This also addresses your point that changing the coefficients each time because those values do not appear overtly and it also deals with the fact that I was ignoring the effect of the variation of the slope coefficient in my post.

I will add an update to my post on this approach when I get a chance later today.

Nick: Obviously Roman can answer best, but I haven’t seen these particular words. There are two variances around a central value involved: 1) The variance that reflects your uncertainty in calculating a parameter of your model, and 2) the variance of the fit of the data to your model.

I believe this is the difference between the SD of our data and the SE of our slope and intercept. Even if we know, a priori what the slope and intercept are (SE’s are zero), that does not mean that the data will fall directly on the line (SD is not zero). Both sources of variability must be accounted for, but most of the time all we focus on are the SE’s.

[Roman: This is exactly what I am saying but you have expressed it in more technical terminology. I have been using simpler language so that people who do not have a sufficient statistical background can get the sense of what is wrong with the Marcott approach.

Roman: Yes, it’s best to explain things at the appropriate level for the audience and I think you’ve done well.

This kind of discussion really hurts my head, though. On the one hand, it makes me want to step through the whole chain of analysis from measurement to result, looking at the uncertainties at each step. And there is a LOT of uncertainty/variability that is not usually carried forward through that chain that should be. A LOT of assumptions are made and we end up with layer upon layer of abstraction and smoothing that is simply not reflected in the statement of the results.

So then I think, wow, the results we see reported are way overconfident. But then I hear some non-technical skeptics arguing that everything is so uncertain that we can’t possibly know anything so let’s just assume it’s all okay and stop poking around. No, that’s wrong, too.

And these two poles create a real tension that is just headache-inducing.

It feels like science is devolving into four camps: 1) scientists who gather data but then keep it close to the vest because knowledge is power, 2) scientists who are enamored with statistical methods and so take whatever data is at hand and assume if they use sophisticated-enough statistical methods they will get legitimate answers — better answers than the data would seem to support — but then 3) lay people who assume what’s “peer reviewed” or what uses “sophisticated statistical methods” must be right, and 4) lay people who are skeptical of science overall.

It’s a sad state, really. Though maybe I’m biased because the climate field is the poster child for enamored-with-statistics. (I don’t mean to imply that I’m a climate scientist, I’m not. But following this field is part of a hobby of mine so it’s over-represented in my impression of science at large.)

Steve: I think (and certainly hope) that the commentary here falls into a 5th category. The strongest commentary here applies statistical requirements to science articles that are often weakly peer reviewed.

Nick: “No, this is not regression, it’s applying a regression relation”. You need to quote what you’re replying to, since we’re all reduced to a flat thread at this depth. I assume you’re addressing my comment about errors-in-variables by saying that the x’s of which you speak (which have errors) are not being used in a regression.

I can accept that. My mistake.

(Though I’d add that it’s my impression that the original regression had errors in its x’s (time).)

Roman: You and your readers are struggling with the proper analysis of confidence interval in what an analytical chemist would call a standard curve. One can see a presentation of how an analytical chemist would do this analysis at:

Click to access 07_Calibration.pdf

The 17th, 20th and 21st slides illustrate the problem you are dealing with. If the UK’37 ratio can be measured with complete precision, you are dealing with a situation that looks like slide #21. (My guess is that the sensitivity of the alkenone proxy (95% confidence level) is understood to be about 1.1 degC, meaning that a 1.1 degC temperature change will be real 95% of the time.) Unfortunately, no measurements are ever made with complete precision. In that case you are dealing with a situation link slide #17, where the uncertainty in the calibration curve combined with the uncertainty in measuring UK’37 creates additional uncertainty (which I think you are calling E).

Frank,

“creates additional uncertainty (which I think you are calling E)”

Your slide 17 etc just deal with the algebraic consequences of the uncertainty of slope and intercept (A and B of the post). So did the prediction interval link that Roman referred me to. There is no extra E referring back to the residuals of the original regression. And I am now thoroughly convinced there should not be.

To see this, take Roman’s extreme where Muller has an infinite sample, and A and B were zero. Then your slide 17 would, correctly, be telling you that you just convert with the straight line. No extra E. The x-axis variable has uncertainty, which converts directly to that of the y variable.

I think Roman’s fallacy is the statement:

“The regression equation is not an exact predictor of the proxy value.”

Of course not, but they are not trying to predict the proxy variable. They have a reading. They are trying to predict how the proxy variable depends on T. Muller did a regression which discriminated a component that depended on T from a residual that did not. Marcott need not be concerned with the various ways in which Muller’s samples varied independently of T. He only needs the T dependence.

Nick, it seems to me that you must never have taken an elementary mathematical statistics course.

What’s the difference? They are deriving an equation which which predicts what the expected value of the proxy will be for a given temperature. As in any linear regression, Müller et al start with a linear model for the relationship between Proxy P and temperature T:

P = A + BT + E

where A and B are numeric constants. E is there from the very start to account for the simple fact that for a fixed temperature T, the measured proxy values will vary – in a scatter plot, they may appear above or below the line P = A + BT. E is assumed to be a random variable with mean 0 (which gives the line a physical meaning as the mean of all proxy values for which have an associated temperature value of T and a standard deviation of σ which describes how far the actual observed proxy value might be vertically from the line.

But A and B are not known, so the first step is to estimate them and that is what Müller did. They took 370 proxy samples along with their 370 associated temperatures and came up with estimates a, b and s for the parameters A, B and σ. The results were a = 0.044, b = 0.033, and s = 0.050. They also realized that a and b were not exactly equal to A and B, so they calculated estimates of their standard error (i.e. uncertainty) due to that pesky E term in the original model and came up with s(a) = 0.016 and s(b) = 0.001.

Now Marcott comes along with a (possibly different) set of proxies and decides that he can use this regression equation to “predict” what the temperature may have been for the conditions under which those proxies were formed. He solves for T as a function of P and gets T = (P – a)/b, thereby generating his set of “temperatures”. But, wait a minute – there was an E in that equation. What happened to it? They assumed in each case that the E was equal to 0. The effect of the variability of the proxies around the regression line has been completely lost in this process.

In order to be able to calculate error bounds for their results, they need to somehow restore this information into their data and they decide to do this by using their Monte Carlo methodology. What needs to be done is to add in a randomly generated E and see how the temperature estimate changes. However, in a very clumsy effort, they decide that perturbing the coefficients alone according to their uncertainty will do the job. It does not achieve the necessary result because it only deals with how well Müller was able to estimate the coefficients and not with the variability of predicting the proxies themselves.

This is patently false. First of all, as one can easily see from the model, E does not relate just to the “residuals of the original regression”, but to ALL of the proxies. Secondly, the prediction formula applies to proxies outside of the original study. Now look carefully at the part of the formula for the prediction interval under the square root. The initial “1” is E. Statisticians are clever people. 😉 They figured out that the distribution of the distance from a new proxy value from the calculated line is a (multiple of a) t distribution with n-2 df (368 in this case – read “normal” for that many df) with mean zero and with standard deviation equal to s*(the square root part of the formula). This takes into account E AND the uncertainties of a and b and this is the perturbation amount that should be applied to the proxy.

By the way, if you remove that “1” from the equation, you get what is needed to calculate a confidence interval for the line itself.

[Update: The link to the prediction interval is this.]

Nick Stokes (Apr 6 03:33),

I’m struggling to understand your point. In their MC perturbation analysis Marcott et al only accounted for the “model uncertainty” (Frank’s slide 13), i.e., calculated their “proxy-to-temperature uncertainty” by estimating the mean response SD. Are you saying that it is the correct approach?

Jean S,

My point? Roman says there is a big omission in Marcott’s MC which is E:

“E is also a random variable independent of A and B and with standard deviation of the predicted proxy equal to 0.050 obtained from the regression in Müller”

As far as I can see, E is effectively the residual in the original regression

UK’37 = (0.044 + A) + (0.033 + B)* Pert(Temp) + E

“Even if the coefficients were known exactly, the variation of the individual proxy around that mean would still produce uncertainty in its use.”

I do not believe those residuals need to be considered. Here’s a thought experiment. Suppose there was another independent variable s affecting UK37, not interacting with T. s normally varies with no detectable pattern, so it’s effect is included in noise. But suppose in Muller’s situation, the amplitude of s variation was unusually large, so he had to compensate by taking a lot of samples. Does Marcott have to take on an error term for that s variation?

In any case, no such reference to a third independent variable appears in Frank’s slides, as I far as I can see. There is only the uncertainty in the regression coefficients, which Narcott allowed for.

What do you think would be the value of E in the case where A and B are very small?

Nick Stokes (Apr 6 06:40),

you didn’t answer my question. I’m not interested in “thought experiments”. I gave you a link which as far as I understand is precisely dealing with the situation. Please formulate your point in terms of the link, and this might actually lead somewhere.

Jean S,

Yes, I believe mean response is the right thing for them to use. But this is a somewhat different question. Roman is talking about importing a variable from Muller’s regression.

The reason why I think the mean response is right is because of its role in the Monte Carlo. There isn’t any point in just adding variation to the observed variable, because the Monte Carlo averaging will simply remove it with no residual effect, assuming there are enough repetitions.

Nick Stokes (Apr 6 07:27),

Thanks, Nick! We now know precisely what we disagree on. And knowing your ways for many years, I do not even try to convince you of believing anything else.

Yes, that’s precisely what makes the difference between the mean and predicted responses in their perturbation analysis.

Nick –

I must be missing something here. The residual error in Muller’s sample regression has a SD of 1.5 K. That represents a lower bound on the accuracy of the temperature attributed to a given sample.

Suppose that, in digging in the muck, we didn’t find proxies but readouts from a digital thermometer (naturally it will have a date stamp such as 3456 BC ;-). Being a primitive digital thermometer, it had a relatively poor accuracy of +/-1.5 K (1-sigma). Averaging N of these readings will result in a metric T0 which ideally has a mean value of the actual temperature, but a SD of 1.5K/sqrt(N). For N=73, that means SD ~= 0.2 K. That value constitutes a lower bound on the Marcottian uncertainty. [Well, not quite — not all Marcott proxies are alkenones and I don’t know the SDs of the other proxy types.]

HaroldW

“I must be missing something here.”

Well, I guess one of us is. It’s late here, but it’s the night when we leave daylight saving, so I guess I have an extra hour. It may not make me wiser though 😦

That 1.5°S is just 30x the (Muller) UK37 se – 30 is inverse regression slope. I think here too, the primary uncertainty in T is 30x the uncertainty in their observed UK37, whatever that is.

I’m not sure what to make of the bronze age digital thermometer; it’s UK37 that is dug up. In fact I believe the real error source is not in the UK37 obs itself, but where and when it came from.

Jean S,

“And knowing your ways for many years, I do not even try to convince you of believing anything else.”

Well, I may be incorrigible, but I’m sure there are more perceptive listeners who will be interested.

Nick,

I realize that this is an argument from authority, but the odds of you being correct on a statistical issue as opposed to Jean S and Roman, is as low as Mann’s verification r2. You’re arguing here with specialists within their specialty

Nick: “The x-axis variable has uncertainty” If this is true, OLS does not work properly. You need to go to an Error-in-Variables kind of approach.

Jean S,

You are not effusive in your explanation, and I think I may see a crossed wire. I have been talking of the Monte Carlo which produced the mean curve after date variation etc. I see that you may be thinking that Marcott et al used that as their sole source of error bounds for the curve. That would indeed be wrong, but I don’t see that stated in either paper or thesis.

While I’m not in possession of the Muller et al. paper, this is speculative, but the SE in UK37 of 0.05 appears not to be uncertainty (which in this case I take as measurement uncertainty), but the spread on observed values at sites with the same SST. The SE of the estimated SST, at 1.5 K, is (as you say) 30 times that value, being just the result of solving for T from the observed UK37 values through the equation UK37 = 0.044 + 0.033*T. The meaning is still that, using the Muller best-fit coefficients, the estimated T differs from the actual by +/-1.5 K, as the second panel of figure 8 indicates.

Thinking about this some more, varying the A coefficient should have no bearing at all on the reconstruction, as the anomalization step (4500-5500BP) will negate this. Unless Marcott varied A for each sample independently, which would not seem to be consistent with its meaning as a calibration coefficient.

Wayne2,

No, this is not regression, it’s applying a regression relation.

But on the question of how M et al derive their blue CI’s; following through their Fig S2 and explanation, they aggregate in step c over the 73 proxies, and record a standard deviation. I assume that includes the between-proxy variance as well as the Monte Carlo variances, which if they were all of the same kind, would be where uncertainty in UK37 (or equivalent) values is included.

RomanM (Apr 6 08:20),

nice and very throughout explenation! But I’m afraid you are wasting your time with Nick (hopefully the explenation is useful for other people). He already stated that he believes that it is enough to account only for the errors in A and B…

Roman,

Their UK37, in your terms has a component depending on temperature (mean response) and a component that varies with other things. In compiling their stack, they account for error in the mean response through the Monte Carlo. But they include in their variance (I believe) in step c, Fig S2, the between proxy variance over their 73 proxies. Is this not covering your E? The variance in proxy values due to non-temperature effects?

Why are you still up? I thought you were going to bed! 😉

In their paper, Marcott et al keep referring to “the chronologic and calibration uncertainties estimated with our Monte Carlo simulations”. This procedure is crucial to their calculation of calibration effect on the end result. The contribution of these particular proxies that constitute 68% of all proxies used toward estimates of the error bars is very substantial. Imagine the spaghetti graph S3 in the supplement being two or more times as wide. Depending on how many replications are done, there could very well be higher variability in the reconstruction as well. In my view, correcting their work would make real substantial changes in the paper and this has to be addressed by the authors.

As an aside, there is also a slight upward bias introduced by the perturbation of the slope coefficient, but that is another issue.

Roman, Jean,

To put my argument there another way – suppose they didn’t vary dates. Suppose they didn’t vary the regression coefficients. Supposed they reduced their Fig 2 process to just step c, averaging over the 73 proxies and using the (weighted) sd to construct the CIs. Do they need your E as well?

Nick Stokes (Apr 6 09:23),

I don’t see any such step on p.8 (SI), where they explain the procedure. CIs are calculated in the step 6).

Jean S,

Yes, I think it is in step 6, They say “The mean temperature and standard deviation were then taken from the 1000 98 simulations of the global temperature stack (Fig. S2d),”. That’s the step, shown in Fig S2, where they take the area-weighted mean over the 73 proxies. I assume the sd they refer to includes the variance over those proxies.

That’s not the way I read it. Step 5 says:

To me, this indicates that 1000 reconstructions were created from the simulations in Step 5 which were then averaged to form the overall reconstruction and to calculate the standard deviation(s?). I don’t see this as referring to “variance over those proxies” in any way.

Nick Stokes (Apr 6 10:18),

what? Are you suggesting that their SD includes also proxy variance over time or over different proxies? That would be crazy, but I don’t see anything like that going on but only variance over 1000 (mislabeled 10000 in the figure as in the thesis) “stack” realizations (as it should).

And I think Nick Stokes’ fallacy is the statement :

“Argumentum ultimum verbum.”

If your model is: P = A + BT + E

then I suspect your A and B should be exact numbers.

If your model is: P = (A+/-dA) + (B+/-dB)*T

then I suspect you don’t need an E term. The curved confidence intervals around the linear regression provide a nice graphic representation of how uncertainty transfers from the temperature axis to the UK’37 axis and back. However, one needs to recognize that there may be significant experimental variability (dP) in measuring P (which potentially can be reduced by analyzing multiple samples). The uncertainty in P must be transferring to the temperature axis as shown in overhead #17). Rearranging gives

T = [(P+/-dP)/(B+/-dB) – (A+/-dA)]/(B+/-dB)

The model for the regression in Müller is P = A + BT + E. The people applying the result to their proxies have assumed that a single A and single B is used for all proxies from all locations.

Don’t confuse estimation uncertainty (which is not part of the model definition) with something like modelling the variation in the proxy-temperature relationship (which should be part of the model).

For example, I might wish to model the situation that intercept and slope might be related to physical location of the proxy site. In that case, my model equation might look like

P = (A + dA) + (B + dB)*T + E

where dA and dB are extra parameters and E = dP in your statement. The statement of a model should contain all of the relevant assumed features of the situation including any sources of randomness – your model specification should have had it written in rather then added on as an afterthought.

Jean S,

“Are you suggesting that their SD includes also proxy variance over time or over different proxies?”

Over different proxies. If you look at their cube in Fig S2, the dimensions are age, proxies and Monte Carlo reps. They collapse to 1D by averaging over proxies and Monte Carlo. It would be logical if the sd they quote is the variance associated with this total averaging process.

Anyway, I’ll try to test today using my emulation program, which basically collapses a 2D version (no MC).

Oh, Nick! You have got to be kidding! Please stop making things up.

The description of Fig. S2 states:

Where does it say “proxies” anywhere in the description? Where do you see “proxies” in the diagram?

Even if the proxies were to be included, how would that be done? Average all 1001 series together or do you envisage they did something weird and wonderful but somehow just forgot to tell us about it?

Roman, what exactly are the “datasets” if they are not the data of each individual proxy? Or are you distinguishing between the proxy (the record of d18O, etc) and the datasets (the calculated temperature based on the proxies)?

I am not distinguishing between proxy measurement and “temperature”. Each of them carries the same information because there is an exact relationship linking the two and one is reproducible from the other..

The datasets are formed by adding randomly generated quantities to each proxy and to each time with which that proxy has been linked. Although the datasets have been calculated using real proxy and time values, they do not contain the same information.

Romanm,

‘Where do you see “proxies” in the diagram?’

Vertical axis, “datasets” #1:73

Each age value is found by averaging a 73*1000 array of proxy/MC values. I don’t see why the sd quoted would not be the sd of the numbers in that array.

What are “proxy/MC” values. They are either one or the other. Once the random elements are added to the proxies, they are no longer “proxies”. They do not create 1000 NEW proxies.

The description clearly reads: “(b) Three dimensional matrix of each of the 1000 simulated datasets.”.

Did they somehow substitute “simulated data” for “real proxy data” somewhere between (a) and (b) and then put it back before the statistics were calculated in (d).

The simulated data was merged together on the horizontal level (probably quite differently) for each of the many types of reconstructions. It then makes sense that you have 1000 values for each of the 20 year periods from which the standard deviation can be calculated.

It does seem to me that it all turns on what is meant by “perturbing them with analytic uncertainties in the age model and temperature estimates” in the Real Climate Q&A

“4. Used a Monte Carlo analysis to generate 1000 realizations of each proxy record, linearly interpolated to constant time spacing, perturbing them with analytical uncertainties in the age model and temperature estimates, including inflation of age uncertainties between dated intervals. This procedure results in an unbiased assessment of the impact of such uncertainties on the final composite.”

In both cases did they include the full prediction interval or just a subset such as confidence intervals on the coefficients. Note the different language used between the two parameters “age model” and “temperature estimates”.

Anyway my feeling is I can’t tell from the descriptions and I can’t replicate to test. I do think that Nick Stokes’ suggestion somewhere above that it all comes out in the wash when we move from point estimates to regional and global estimates is a diversion – if the variance in the point estimates is understated and a function of proxy type then averaging these doesn’t fix it.

I’m also unclear why they went through the process of perturbation on temp and date separately and then interpolated a new “sample” value, and didn’t just go there directly to find a range of points in temp-time space around the original estimate.

It was done under the principle that a good way to estimate true variability of a reconstruction would be to start by doing many identical, but independent reconstructions. Then one could average these reconstructions to estimate the temperature and calculate the standard error at each point of the reconstruction to determine error bounds. This is similar to estimating the mean of a population from an average, but then needing to compare each of the observations to that average to determine how well the average may estimate that mean.

One of the reasons for the development of Monte Carlo methods was for estimating the variability of statistics for which the standard error could not be calculated theoretically because of the mathematical complexity of the statistic. It involved creating simulated samples with the same characteristics as the original sample and then calculating the statistic for each of these. The whole point of this post is that the method used by Marcott for Alkenone proxies (and likely some others) did NOT generate “temperatures” with the same characteristics as the original sample because the simulated values lacked a component of variability which made the new values considerably less variable. The end result would be a serious underestimation of the error bounds of the reconstruction.

Roman,

“What are “proxy/MC” values. They are either one or the other. Once the random elements are added to the proxies, they are no longer “proxies”.”

I think this is quibbling. They have for each age an array of 73×1000 data points. Each row of 1000 consists of perturbations of the observed values from one proxy. When you average over the 73000, and take the sd of the 73000, you incorporate essentially all of the proxy to proxy variation. And that incorporates a good measure of proxy uncertainty. If they were of exactly the same kind, it would be the obvious measure to use. And the heterogeneity doesn’t really change that.

And this relates to HAS’s point. It’s important because if that does cover proxy age value variability, and I think it does, then it would be a bad mistake to then include E as well. Double counting.

It appears that the conversation with you is pretty much done. You do not seem to be familiar with even the most basic elements of mathematical statistics and the interpretation of regression procedures.

The difference between proxy and “perturbed proxy” is not quibbling. You don’t “average over the 73000, and take the sd of the 73000” – again you don’t seem to understand the details and the details are important – and how you understand without any previous analysis or experience that “you incorporate essentially all of the proxy to proxy variation” or how “that incorporates a good measure of proxy uncertainty” is absolutely incredible. Perhaps you could explain to us the mathematics behind how this is done.

The method for perturbing of the ages is considerably different from the method for perturbing the proxy values:

How does this affect the categorical statements you are throwing around in you comments?

On several occasions, I have explained the technical and mathematical aspects of the proxy perturbations to you. You responded every time with nothing but an opinion. Bring some real evidence to substantiate what you say and then we can have something substantial to discuss.

RomanM @ 6:34 PM

I understand why Monte Carlo, just not why they ran two Monte Carlos and then combined rather than run one in temp/time space perturbing both dimensions independently – I meant this more as a curiosity rather than a substantive point.

Nick Stokes @ 6:47 PM

Of course the point remains we don’t know whether/how much it biases the best estimate if it wasn’t done, and we know it makes a difference to its PDF.

Where did you get the idea that there were two different Monte Carlo reconstructions?

The description on p. 8 of the Supplement document indicates that they did a single one combining the two perturbations as you suggest they should.

I choked on my pinot grigio when Nick accused Roman of quibbling.

Roman,

Let me put it at it’s simplest level. Suppose the MC perturbations were small. They had 1000 rows each essentially with the same proxy numbers. And they went through the same process. They would get the same result as if they had simply taken the mean and standard deviation of the individual proxies at each age (except for area weighting, which for 5×5 has small effect). And the sd would represent the proxy spread and would be a reasonable basis for a CI.

So why does the actual MC perturbation change that? Obviously it smooths, and reduces the CI’s for that reason. But it doesn’t suddenly stop the sd from expressing proxy variability.

I have now done that calc on my original recon, which did essentially average without MC. The result was an average sd of about 1°C. Obviously a lot higher than theirs, which is more like 0.2. But theirs is very substantially smoothed relative to mine.

I’d emphasise again that the issue is not whether the inclusion of proxy variability as they do it is perfect. It’s whether it is not there at all. If it is there, then simply adding in your E is not the right thing to do.

RomanM @ 9:15 PM

From the SI:

“We used a Monte-Carlo-based procedure to construct 1000 realizations of our global temperature stack. This procedure was done in several steps:

“1) We perturbed the proxy temperatures for each of the 73 datasets 1000 times (see Section 2) (Fig. S2a).

“2) We then perturbed the age models for each of the 73 records (see Section 2), also 1000 times (Fig. S2a).

“3) The first of the perturbed temperature records was then linearly interpolated onto the first of the perturbed age-models at 20 year resolution, and this was continued sequentially to form 1000 realizations of each time series that incorporated both temperature and age uncertainties (Fig. S2a).”

Step 1) “We perturbed”; Step 2) “We then perturbed”; Step 3) we put humpty-dumpty together again.

Come to that why not carry all the distributions through the global reconstructions and do the Monte Carlo at the end and pick up the rest of noise on the way?

Nick Stokes @ 9:19 PM

Call me old fashioned but I like to start with what should have been done and compromise from there. You never know what you might miss taking the short-cut (and sometime the umpire could send you back to do it properly even if only to show the short-cut didn’t matter).

If you see what I mean.

In fact I rather suspect that in this case the short-cut that you are rationalising is really the long way round if you are really interested in what is going on.

No risk in doing it right, if it doesn’t matter it will show.

HAS,

“I rather suspect that in this case the short-cut that you are rationalising”

It isn’t a short-cut. Look at the alternative that is proposed. Estimate the variability about the regression line. Create a whole lot of artificial proxies with perturbed values. Calculate the variance. Those artificial proxies would sit on the same axis of their cube.

But how to estimate the variability? Roman says import variability from Muller’s regression. But that was done with a whole different lot of proxies and circumstances.

So use the current data? From the 73 proxies estimate how they sit around some regression lines? Create artificial perturbed proxies etc?

The fact is, we have 73 proxies which do actually have the variability we’re looking for. Why not use them directly?

It isn’t rationalising either. One who proposes adding a whole lot of variance (E) has to show that it hasn’t been accounted for already.

What I am indeed assuming, btw, is that they actually did include the whole variance from the 73*1000 points per age step. Sometimes they make it sound as if they might have taken the mean over proxies and discarded that variance, which would indeed be wrong. But they are describing a fairly sophisticated algorithm, and that would be an odd thing to do.

Nick Stokes @ 1:37 AM

But the thing I don’t understand about that approach is how you get to say anything about temperatures.

Nick (1:37 AM) –

By ignoring E one is estimating a different quantity. Let me try an analogy.

Suppose you want to estimate the average height of a population. You select a group of N=73 persons at random and look up the height on their driver’s licenses. It turns out that a previous study established that when a person of known height was measured at the dept. of motor vehicles (DMV), the recorded height was on average correct, but varied with a standard deviation of Y. With some common assumptions, you’d say something like, “the average height of this group is X +/- Y/sqrt(N)”, where X is the mean of the license heights.

Now someone comes along and tells you that the yardsticks which the DMV used didn’t go through quality control, and their markings varied. What does that do to your confidence in the average height? To assess this, you create an array of 1000 random runs, in each of which you generate a perturbed height of each person (according to the actual recorded height, and variation of the yardsticks). For each run, the mean of the N perturbed heights can be calculated. The scatter of these 1000 mean values (call it Z) is an estimate of how much the sample mean varies due to the yardstick problem: before you could say definitively that the average recorded height is X, now you say “the average height, as measured by the DMV folks, is X +/- Z”. But you can’t say that the average height is X +/- Z; you still have to include the Y/sqrt(N) factor. Although you now have 73K pseudo-heights, there are only 73 measurement errors.

Just to be clear, Roman’s E is analogous to repeatability uncertainty (deviation Y), dA & dB to the yardstick uncertainty (deviation Z).

HaroldW,

I think this analogy can be developed, but it has a difference.

1. You speak of the mean of 73 individuals (X_i). But the CIs Marcott is drawing are for the mean temperature of the Holocene. For that, the error includes sampling of the proxy readings^* from a notional population. So your analogy should be estimating a population mean, from which population the 73 were sampled. So there is the familiar problem that, without Y for now, population estimated mean is mean(X_i) and has se: sd(X_i)/sqrt(72)

2. So Y and Z are a bit much. Lets just MC Y. Add N e_j’s (N=1000, e~N(0,Y)) to each X_i and average. Then the variance(over i,j)(X_i+e_j)~variance(X_i)+Y^2/N

The MC preserves the variance of X_i in the total variance.

We still have the sample variance of X_i and have added thet due to Y.

* The “population” is complicated for the proxy analysis. It’s not the population of proxies 1:73 – we don’t have a distribution model for them. It’s really the population of residuals.

Incidentally I found a non paywall copy of Huybers and Wunsch 2004, which Marcott cites as source for the random walk etc.

HaroldW,

I tried to reply, but I think it went to spam. The gist is that I think you are looking at a mean of a sample of 73, while Marcott is showing CI’s for the Holocene temperature. So there’s a sample->population mean step that’s not in your analogy. I tried to reformulate as seeking the pop mean from which the 73 were derived. Then just one of Y and Z is enough. The MC preserves the variance of the X’s and the new Y in a combined variance, as it should.

Nick (6:01 AM) –

I don’t think the sample vs. population distinction is applicable here. Yes, there is an uncertainty due to sampling 73 points in the world, vs. the much larger number available in current thermometer averages. That’s not a limitation to my analogy; Marcott et al. don’t account for sampling error in their methodology. They discuss why they think the term is negligible in section 8 of their SI.

Time span is also not an issue. Marcott et al. do not produce a single CI for the Holocene, but a CI for each time step. Marcott’s processing, once the anomalized stacks are complete at step 4, is independent for each time step. Each element of the 1000-member ensemble (from which they determine the sample deviation) is an average of (at most) 73 terms.

Roman: I believe that P = (A+/-dA) + (B+/-dB)*T OR

P = A + B*T + E can handle the uncertainty introduced by changing location because Muller devised this relationship from data acquired from many locations. It can’t handle variability introduced by things that may have changed with time (nutrients, changing species, etc.) or the experimental variability associated with measurement of P.

In my simple way of looking at it, you have to include the E unless you believe that the model exhausts the likely variables that influence what ever aspect of the Alkenones you are measuring. Muller’s model is a gross simplification of what he is measuring. Wouldn’t Marcott et al’s actual code be helpful at this point?

Re: bernie1815 (Apr 6 09:05),

you have to include E unless you believe that your values would be exactly on the regression line given you knew the coefficients A and B perfectly (and the linear model is perfect for the relationship). I don’t know how the code would help here except for checking that they actually did what they say they did.

Nick: “But they are describing a fairly sophisticated algorithm, and that would be an odd thing to do.” Yes, yes it would be. But considering how Mann, et al, have mis-used PCA and considering that it only takes a couple of lines of R (or Matlab, or …) code to do MC sampling, I’d say that this you’re over-confident on this one.

I’ve not seen much mention of something I see hiding in RomanM’s citations… I also noticed it when I went looking for an answer to a challenge introduced over at DotEarth. I’m certainly no expert so I pose this as an observation for someone else to correct or confirm:

Some are claiming that calibration is unnecessary for these proxies because they directly reflect absolute temperature, just like a thermometer (and unlike tree rings.)

I looked for any data on the uncertainties involved in the Alkenone proxies. The only thing I could find nicely matches the data in RomanM’s post above:

The source I found (An SI posted at UMass – not sure what paper this is for!) has this to say in the figure S2 caption (p3):

Am I reading this correctly, that Alkenone proxies have an inherent temperature uncertainty well over 1 ̊C? If so, how can scientists claim that no calibration is necessary?

It seems ridiculous to say that no calibration is necessary. Any time you measure a quantity in one unit and correlate it with another you have to calibrate.

And that calibration should account for any nonlinearity in the relationship.

Even the finest platinum resistance thermocouple is a calibration between measured voltage and temperature.

What I’m curious about is that any regression used to calibrate and then to predict values of Temp should have greater uncertainty as the value of Temp moves from average Temp used in the calibration period (if I remember right). Is this taken into account – it might be significant here where it appears the hindcast values are offset from the calibration values.

MrPete

“Am I reading this correctly,”

I don’t think they are saying this is the error in the calibration formula; I think its just the underlying uncertainty in UK37 multiplied by the slope factor from the formula.

Is this something we can examine via simulation?

# Generate multiplier for error value

#err = function() { runif(1,min=-1,max=1) }

err = function() { rnorm(1,mean=0,sd=1) }

# Convert UK'37 to temp

uk37temp = function(uk, Ea=err(), Eb=err(), Em=err()) {

((uk + (0.050*Em)) - (0.044 + (0.016*Ea))) / (0.033 + (0.001*Eb));

}

# 0.4351 is ODP-1019D sample 1

# No error in regression params

> sd(sapply(rep(0.4351,100000),uk37temp,Ea=0,Eb=0))

[1] 1.511062

# Marcott, no measurement error

> sd(sapply(rep(0.4351,100000),uk37temp,Em=0))

[1] 0.6042104

# All errors

> sd(sapply(rep(0.4351,100000),uk37temp))

[1] 1.634209

MrPete: The 20th and 21st overheads in the presentation I mentioned define and illustrate the sensitivity of any analytical method relying on a standard curve. It would make sense if the confidence interval you cited for alkenone proxies (+/-1.1 degC) were determined as shown for the sensitivity (from the 95% confidence intervals for the least-squares fit). Without full experimental details, it’s hard to be sure what these numbers mean. In any case, it’s impossible to measure your proxy (UK’37 ratio) with absolute accuracy. Therefore the dotted read line in overhead 21 has some thickness/uncertainty, making sensitivity is a lower limit for the true confidence interval. Careful technique and replicate samples can reduce the uncertainty in the proxy reading.

Click to access 07_Calibration.pdf

FWIW, I had an unproductive debate about some of these issues with Pat Frank after his WUWT post on dO18 proxies. He doesn’t agree with any of the above. (The y-intercept for dO18 proxies changes significantly from location to location and with glacial/interglacial because it depends on the changing O18 content of water.)

At any given slice in time they have 73 ‘measurements’ max, no matter what statistical machinations are done. Assuming that these measurements are perfectly distributed, and assuming that there is zero temporal/spatial variance in real world temps, and that the Mann stick used for alignment is a perfect proxy for temps and that the alignment with Mann is perfect, then the stddev of temp at any time slice would be the stddev of the 73 measurements assuming each had a mean of say 14 degrees and was normally distributed with a stddev of 1.5 degrees (as per the chart above). When I do the simulation I get a std of .165 or 8x the size of the Marcott shaft

So the back of the envelop sanity check shows that the shaft is nonsense…..

I think we are back where we should have finished: max 73 readings, BUT not of the same thing by the same method.

You have to be measuring the same thing by the same method to simply root the error of the combined measurements. If you have to calibrate proxy-to-proxy you have subsets that may be treated together, joined with others subsets with different error bars. The result is not even 1/8th.

And there are two errors: one of temperature and one of time. So your reading of X from #1 site may be a 100 years later than Y of #2 site.

All of this doesn’t mean you can’t do all this statistical analysis. What it means is that you have a result that is statistically correct but may have little relationship to the situation you are trying to describe. Your accuracy is greater than your mathematically derived precision.

The bar needs to be made fuzzy and wide. The center line looks good and is helpful for reading, but it doesn’t mean what it looks like and how it has been used (by Marcott).

Slightly O/T:

Jeremy Shakun responses in a Real Climate comment (#124) on core tops re-dating:

http://www.realclimate.org/index.php/archives/2013/03/response-by-marcott-et-al/comment-page-3/#comment-327407

Nick Stokes,

“I don’t think that’s his reasoning. He says elsewhere that the proxies are particularly unable to resolve 20th Cen, because of declining numbers and end effects. But we know there was a spike (CRU), and he’s saying that a spike like that would have shown up in the earlier record.”

This is being economical with the truth, to the point of being outright misrepresentation. If Nick Best’s analysis is valid he estimates a three to five-fold attenuation of amplitude of a spike over a 200 year period. (I haven’t tried to replicate with the binning used by Marcott, but this seems to make sense.) So a “spike like that” should be observable in the earlier data – true, but in a severely attenuated form. Such bumps of the correct order-of-magnitude ARE present at various times in Marcott’s results. So what is the correct scientific inference? One might conclude that no safe inference can be drawn. One might conclude that the instrumental spike is NOT likely to be unprecedented on a simple statistical test of amplitude of variation of the Marcott series. Or you could just throw science to the wind and go for the headlines?

Paul_K,

I don’t see where I’m supposed to be economical with the truth – I’m just reporting what Tamino said? Do you think the report is inaccurate?

Gentlemen,

Thank you for this posting and the many thoughtful comments. Much of the content is beyond my statistical training, but I can see that you all want to converge on a true interpretation of this matter.

I always come here for the objective review.

Thanks to all.

When I was processing the Marcott data, I found it annoying to perturb the Marcott way because there is nothing gained to assemble 1000 reconstructions, and then average them. It is simpler just to perturb each datum 1000 times into the final output data stack. I think Marcott did it this way to retain chronological isomorphism with the sediment depths — no layer should be dated earlier than an underlying layer. And while he did nominally achieve that, it is mathematically indistinguishable from just doing it all at once, especially as (as Shakun has noted) organisms can stir up the layers locally.

Anyway, episode over now, they’ve given away their “proxy uptick” although of course most of the world only remembers the dramatic press releases at the start. I’m done too. Over to Clive Best who has done the best analysis of all this that I can see, cheers.

Hah! Steve’s had pending a while a nice plot showing this.

Sorry Roman, I’ve been puzzling over this all day, but I just don’t get it (like Paul_K, I think). Where does your E come from? It seems to be the residuals in Muller’s proxies. But why do they recur here? Isn’t their variability just what is reflected in the aggregated uncertainty A and B?

You seem to be saying that they derive no benefit from the many samples studies by Muller. Each use of the relation brings back the uncertainty of each of Muller’s individual proxies. Plus A and B.

In any case, its relevance is not clear. The UK’37 relation may be uncertain, but there’s no reason to think it is variable from timestep to timestep in a given proxy in Marcott’s set. And a common value of A will disappear when anomalies are created.

Re: Nick Stokes (Apr 5 01:44),

No, the “aggregated uncertainty A and B” reflects the uncertainty in the regression line. E is the variability around the line.

And where does the uncertainty in the regression line come from?

But do you understand why that variability, presumably in Muller’s samples, should be added back in when the regression relation is used?

Nick Stokes (Apr 5 06:39),

it comes partly from the fact that Muller only had a finite number of samples so he could not derive a perfect equation. Yes I do. Nick, please re-read what Roman has written.

The uncertainty in the regression lines comes from the uncertainity of the mean values of the dependent variable. The problem is the first regression equation in the article should be E(X’37) = …. not X’37 = … If the error term is left out as was correctly pointed out by Romanm

It’s really the perturbation to the dates that produces the smoothing, not the perturbation to the temperatures. Suppose I have a sawtooth sequence of numbers 0 1 0 1 0 1 … then add 1000 zero-mean random numbers, then average, I will get pretty much the same result, 0 1 0 1 0 1… But if I perturb the dates 1000 times, moving the series sideways, and then average, that will produce a lot of smoothing.

They acknowledge this on page 8 of the SM -“age-model uncertainties are generally larger than the time step, and so effectively smooth high-frequency variability in the Monte Carlo simulations.”

“It’s really the perturbation to the dates that produces the smoothing, not the perturbation to the temperatures.”

Indeed so.

The date perturbation seems effectively equivalent to applying a low-pass FIR box filter to the data with a window width equal to the full width of the date uncertainty (ie, +/-100 years = 200 year window). This is a rather crude low-pass filter but will certainly do the job of removing any high-frequency information.

Arguably, large date errors are less likely than small date errors so a gaussian filter would be more appropriate, ie, applying a normal distribution to the error function.

Sorry, upon reading their method again, they do seem to be using a normal distribution for the error functions. If so, that means their monte carlo method is little more than a gaussian FIR filter applied to the data plus some sort of error band fuzz to account for the possible temperature error.

+/-100 year error means a 201 year window, not 200 as I said earlier but the point effectively remains the same.. they could have saved themselves some computing time and made the algorithm totally deterministic by doing the filtering this way (and it would then be more clear that this really only smoothing the data to remove any high frequency information).

It might be worth considering the uncertainties of Uk’ 37 temperature proxies.

It is actually a rather good proxy since the Uk’ 37 value seems to be directly related to the temperature of the seawater the organism once lived in, but…

The “Annual Mean SST” the Uk’ 37 is calibrated against has an appreciable uncertainty. It is not based on direct long-term measurements at the actual core site, but is an estimate probably derived from one of the gridded SST temperature datasets that are available. These are far from perfect.

There is an appreciable non-random spatial uncertainty. Pelagic organisms don’t live all their lives in one spot, and they don’t sink vertically to the bottom once they dead. This is not necessarily invariable over time. Ocean currents and winds change.

There is an appreciable temporal smear. “Organisms can stir up the layers locally” as NZ Willy notes. As a matter of fact they almost always do so, this being known as bioturbation. It is not coincidental that annual laminations are exceedingly rare in marine environments, and essentially only occur in a few permanently anoxic areas. Note that this is different from (and in addition to) the dating uncertainty for the individual data points.

“Core top” is ideally equal to “now, plus whatever smear is due to bioturbation”. This is only true if the core was collected in a way that did not destroy the unconsolidated surface layer. One hopes that this applies to all cores used for calibration.

So, no I don’t think that Uk’37 can “realistically tell us the mean Temperature more than 5 millennia back within .18 degrees with 95% confidence”. But it can realistically tell us the local SST within couple of degrees, averaged over a few centuries for an area of several thousand square kilometers somewhere relatively near the sampling point, at a time plus or minus a few centuries.