Perhaps the greatest single difference between being a “real climate scientist” and policies recommended here is that “real climate scientists” do not hesitate in excluding data ex post because it goes the “wrong” way. This practice is unequivocally condemned at Climate Audit and other critical blogs, which take the position that criteria have to be established ex ante: if you believe that treeline spruce ring widths or Arctic d18O ice core data are a climate proxy, then you can’t exclude (or downweight) data because it goes the “wrong” way.

This seems trivially obvious to anyone approaching this field for the first time and has been frequently commented on at critical blogs. However it is a real blind spot for real climate scientists and Tingley and Huybers are no exception.

Fisher’s Mount Logan ice core d18O series is a longstanding litmus test. It goes down in the latter part of the data and is not popular among multiproxy jockeys. Tingley and Huybers excluded Mt Logan from their data set, purporting to justify its exclusion as follows:

We exclude the Mount Logan series that is included in [35] because the original reference [36] indicates it is a proxy for precipitation source region and is out of phase with paleotemperature series.

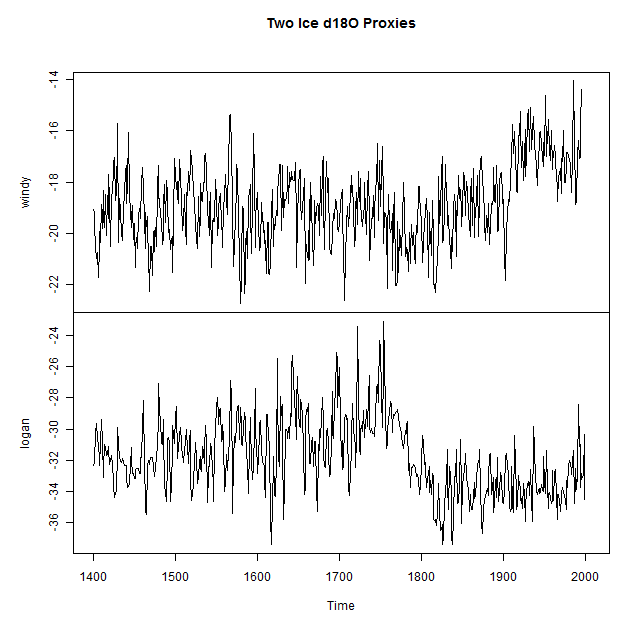

In the figure below, I show two Arctic d18O series – on the top is Windy Dome, a Thompson series from Franz Josef Land used by Tingley and Huybers and on the bottom is the Mt Logan series that they excluded.

d18O series. Top: Windy Dome, Franz Josef Land. Bottom: Mt Logan.

Windy Dome d18O goes up in the 19th and 20th centuries. The possibility that some portion of the increase might be attributable to a change in source region doesn’t seem to have crossed Tingley and Huybers’ minds. On the other hand, Mt Logan goes down, and the authors unhesitatingly attribute this to a change in precipitation source and exclude it from their network post hoc.

However, they don’t consider the bias inherent in this sort of ex post exclusion. Attributing the decrease at Mt Logan to changes in precipitation source is very plausible. But it’s equally plausible and even probable that changes in precipitation sources could also work in the opposite direction, exacerbating any increase due to temperature. Perhaps there was a change in source region for Windy Dome that contributed to its recent increase in d18O values. Exclusion of Mt Logan, without a compensating exclusion of an accentuated upward series, will impart a bias to any composite.
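The size of this bias is easy to illustrate with a toy simulation. The sketch below is not drawn from Tingley and Huybers' data: it assumes, purely for illustration, that every series shares one temperature trend (`temp_trend`) and that a source-region effect of arbitrary size (`source_sd`) is equally likely to push any given series up or down. Dropping only the series that end up going down inflates the composite trend well above the true value.

```python
import random
import statistics

def composite_trends(n_series=2000, temp_trend=0.5, source_sd=1.0, seed=0):
    """Return (mean trend of all series, mean trend after ex post exclusion).

    All parameter values are hypothetical and chosen only for illustration.
    """
    rng = random.Random(seed)
    # Each series trend = common temperature trend + a source-region effect
    # that is symmetric about zero (it can add to or subtract from the trend).
    trends = [temp_trend + rng.gauss(0.0, source_sd) for _ in range(n_series)]
    # Ex post rule: exclude any series that goes the "wrong" way (downward).
    kept = [t for t in trends if t > 0]
    return statistics.fmean(trends), statistics.fmean(kept)

full, screened = composite_trends()
print(f"true temperature trend:        0.50")
print(f"composite of all series:       {full:.2f}")
print(f"composite after exclusion:     {screened:.2f}")
```

With a symmetric contaminant, the composite of all series stays close to the true trend, while the one-sided exclusion keeps the upward-contaminated series and discards their downward counterparts.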

If scientists believe that Arctic d18O is a proxy for temperature, then they cannot exclude data after the fact because it goes the “wrong” way, as Tingley and Huybers have done here. Particularly if they have no compunction about using contaminated data that goes the “right” way.

If authors take longer to report data that goes the “wrong way”, this will also bias composites at any given time. For example, Lonnie Thompson’s Bona-Churchill series, which is near Mt Logan, also goes the wrong way. Has this contributed to the delay (now exceeding 10 years) in reporting these results?

123 Comments

Probably this has been asked before in comments, but since temperature proxy data are messy and ad hoc exclusion of data causes bias, why are inherently robust methods for averaging not always used in paleoclimatology?

Cees, you assume they are looking for the robust answer. But as the exclusion of this series proves, they are only interested in the “right” answer …

Welcome to climate “science”.

w.

It looks like the Mt. Logan series starts responding “correctly” to temperature after ~1820.

Location maps: Windy Dome and Mt. Logan.

To be fair, they’re in very different areas that might unsurprisingly have different climates. Windy Dome is on an island surrounded by the Arctic Ocean. Mt. Logan is inland, just inside Canada and about 80 km from the Gulf of Alaska.

Given the way the northern jet swings around like a drunken sailor, it would be hard to imagine Mt. Logan dO-18 as purely temperature-determined.

The Windy Dome climate is described as, “influenced greatly by the intrusion of Atlantic-derived cyclonic systems that feed moisture into the region,” which again doesn’t bode well for a dO-18 record uncontaminated by the goings and comings of storm tracks.

But one consorts with the instrumental record while the other doesn’t, and that settles that.

By the way, Steve, you forgot to append the TM tag on “real climate scientist.” 🙂

“ “real climate scientists” do not hesitate in excluding data ex post because it goes the “wrong” way”

I think what is needed here is an ex ante definition of what is the “wrong way”. Here there is a dive from about 1750 to 1820. After that, it’s pretty much rising. Why would RCS’s want to exclude that?

They wouldn’t want to exclude that. But they would need to think of a reason for excluding the pre-1820 data.

Why?

Because it doesn’t “make sense” and climate scientists only use proxies that show results which make sense.

You know that.

The anomalies over the record are negative in the 20th century.

Nick:

Absolutely.

The validity of a proxy needs to be evaluated ex ante based on geologic, climate, data collection and analytical techniques. This is done all the time in geologic studies by developing a conceptual model of a particular site, where wrong-way data could signal some other behavior that a particular “proxy” is responding to instead of the target signal. There are also sampling issues, like the compensation of varve thickness with depth due to compaction… there is also compaction and expansion that occurs when driving a core sampler. There are also signal losses that can occur during collection, preservation, transportation, preparation, and analysis. These issues are much more important than statistical methods because once a sample is compromised, or once a field effort is over, or once destructive lab methods are complete, you can’t go back and fix poor sample handling or re-take field notes or field measurements or undestroy a sample back into pristine, in situ conditions. You can always fix the statistics again and again and again.

But if it is done solely ex ante and the whole of proxies point all over the place, either (a) a reconstruction will fail skillfulness because it won’t validate well enough against the instrumental temperature record for its home region; or (b) it will make it seem like the proxy-type is not valid for temperature as a whole and not useful for temperature.

Choice B is simply not allowed in the science (as I see it) because there’s a whole bunch of members in each group that happen to verify well against the calibration period. Scientists are either going to have to come up with a way to demonstrate this as a luck/chance anomaly within an excruciatingly large population (has this been proven?) or that each of the retained members are uniquely special or uniquely specious beyond simple correlation to the calibration period.

In this case, the authors seem to be simply leaving it up to others (the original publishers of the series) to rate the validity of the members, and therefore do not have to answer to the critique of why they were included or not. So, if both are equally valid (or not) then somehow the literature must reflect that first, yes?

Salamano:

Old reports are reviewed all the time where the investigators claim their data and conclusions are good, but the details they report without evaluating critically, coupled with general conditions and principles they don’t understand, paint a picture of garbage. Conversely, good work is easily identified by experienced investigators. Industry pays scientists and engineers very well to find where the bodies are buried from clues laid in clueless reports.

One interesting thing in Marcott was that they felt they could get ~80% of the world temperature coverage with only about 20 locations. If this is generally true, it makes sense to limit proxies to the “A” list rather than churn noise and worse hoping it will cancel out.

Salamano,

I cannot disagree more. I don’t understand how you can even say that either of your “choices” is “not allowed”. This is not how science works. If some of the data supports your theory and some does not, it most likely means that you don’t understand the processes involved well enough. Just because some of the data sets correlate well with the instrumental temperature record does not prove anything. Spurious correlation is a common problem in statistics. Arrogance is not a substitute for knowledge.

Paul Penrose, “Arrogance is not a substitute for knowledge.” Fancy that! 🙂

Howard,

Until someone goes back and revisits individually accepted proxies and either publishes corrections or gets the original authors to correct them, reconstruction authors are going to be able to simply defer to them as part of their screening criteria as to why all their proxies are ‘good’ and ones left out are not. Mt. Logan is a good example of this. The reasons for including one and excluding the other can certainly be spurious. The only thing the accepted one has going for it is (a) it technically verifies against the instrument record, and (b) the original author says the one is good and the other is bad. Tingley could easily deflect and request you take it up with the other authors.

This particular work HAS been successful for a few individual proxies and/or proxy types, though certain authors keep using the data (and declaring it is proper because the results ‘validate’ better against the instrument record).

Paul,

I hear what you’re saying. However, that’s not what’s going on in a lot of climate science. Scientists are armed with the instrument record as their calibration/validation tool, and have been developing their explanations as to why one proxy is good and another bad. Unless someone publishes something to the contrary, the train has already left the building (the one that says you don’t have to include all trees/cores/varves/etc.) Where to start then? Simply dismissing the work out of hand isn’t the place.

Salamano: You seem to believe that there is a corpus of “accepted proxies” somewhere. There is not. There are individual studies in which the authors attempt to relate the geology of specific sites to climate history. Sometimes, as in the case of upside down Tiljander, the author says the data is corrupted after a certain date, and the Team uses it anyway. In other cases, the author says a proxy is reliable and the Team leaves it out. When a proxy is picked that looks “right” and then is heavily weighted either by dropout of other proxies as in Marcott or by some weighting algorithm, then it really determines the outcome. This is neither objective nor repeatable science. It would be like only picking the patients who survived to evaluate your cancer treatment.

I did indeed consider it more-or-less accepted in the Climate Science world that there exists a set of proxies that are useful for temperature reconstruction, and those off the list are deemed bad. You are saying this is not the case. I’m inclined to believe you, though I would have to think “The Team” considers anything that validates well across the instrument period as reliable, and those that don’t as not.

Why else would Dr. Mann (er Schmidt) state that: “Particular concerns with the ‘bristlecone pine’ data were addressed in the follow-up paper MBH99 but the fact remains that including these data improves the statistical validation over the 19th Century period and they therefore should be included.”

Would not a good way to publish contrarian papers be, FIRST, (a) to identify the incorrect/contaminated/erroneous proxies and have them reflected as such in the official literature (I understand work is being done on this front); and also/simultaneously (b) to address the issue of statistical validation against the instrumental record over the calibration period being non-essential to (or non-indicative of) true proxy coherence?

Just dropping proxies and adding them or whatever and then jumping to publish a reconstruction might be determined fatally flawed at the outset because of the seeming necessity to validate against the temperature record, and further to include all proxies that do so, excluding others that don’t.

There are some in the climate science community that are prepared/able to accept something like a MWP being anomalously warm compared to today http://www.realclimate.org/index.php/archives/2005/01/what-if-the-hockey-stick-were-wrong/, but I don’t see it happening via reconstruction unless/until the published science addresses the aforementioned issues.

In fact, it seems to me that the science itself is moving away from the precedentedness of today’s temperatures and toward the unprecedentedness in the ‘spike’, particularly considering the force-makeup to get it to happen. Would you not agree?

Which also reminds me… going back in time, re-reading your proxy reconstruction– I was struck by the level of “Climate Audit-ness” employed by the folks at RealClimate when it came to digesting your results:

http://www.realclimate.org/index.php/archives/2007/12/past-reconstructions/

It seems to me that there could have been a lot of this same wording/exploration applied to Marcott et al by the same folks, but wasn’t done. Tamino also took umbrage at the “auditing” of Marcott and sought to declare high ground for himself by demonstrating he could statistically improve the reconstruction without much damage to its data or results (which apparently is “science”). It looks like “science” is not uniformly offered. Ergo, the value of the crowd.

Steve: I agree that this RC post was a “Climate Audit” type post. There’s an irony in this particular post that I’ve been meaning to write about: a number of the Loehle proxies, including some of the ones with which Schmidt took particular umbrage, were used in Marcott et al. Schmidt focussed on dating issues – as I did in respect to Marcott. Schmidt’s criticisms also apply to Marcott. Marcott has the added problem of justifying their coretop redating.

Marcott’s methodology is probably more similar to Loehle and McCulloch than any other study: they limit themselves to proxies already calibrated to temperature and use simple averaging. However, unlike LM, they did not center over the entire period, short-centering over the mid-Holocene. Marcott’s mid-Holocene centering exacerbated his robustness problem at the end.

Salamano,

Gavin’s quote represents a failure to understand mathematics, which is an odd situation for someone who is a mathematician.

“Particular concerns with the ‘bristlecone pine’ data were addressed in the follow-up paper MBH99 but the fact remains that including these data improves the statistical validation over the 19th Century period and they therefore should be included.”

You have also fallen into the same trap:

“The only thing the accepted one has going for it is (a) it technically verifies against the instrument record,”

What happens when you “verify” noisy data against the instrument record and then average series together is that the spuriously matching noise is amplified in the instrument timeframe and randomly cancels before the instrument data. Gavin should know this by now, because he has been told so many times, but the reality is that this effect creates “unprecedented” variance in the instrument timeframe in comparison to history. It sounds good to say – well why would you use data which doesn’t match temperature? Mathematically and scientifically, it is not reasonable.

There is a second more subtle version of the process where people take a mass of proxies and regress them on temp. This deweights yucky proxies, as Steve’s post alludes to. The worse the fit, the lower the weight. In other words, it does the same thing.

The third method that seems to be the common practice these days is for the scientists to pre-select preferred proxies. This happens completely outside of the paper in most cases and leads Steve to make posts like this one.

Mathematically, all three of these methods are the same thing.
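A minimal sketch of the first of these (screening by correlation) shows the effect. Everything here is an assumption made for illustration: the "proxies" are independent white noise with no temperature signal at all, the "instrumental record" is a plain rising ramp over the last 100 steps, and the screening threshold `r_min` is arbitrary. It is not the procedure of any particular paper.

```python
import random

def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

def screened_composite(n_series=1000, n_years=500, n_cal=100, r_min=0.1, seed=1):
    rng = random.Random(seed)
    # Hypothetical rising "instrumental" record covering the calibration window.
    target = [i / (n_cal - 1) for i in range(n_cal)]
    # Pure white-noise "proxies": by construction they contain no signal.
    proxies = [[rng.gauss(0.0, 1.0) for _ in range(n_years)]
               for _ in range(n_series)]
    # Screening step: keep only series that correlate with the target.
    kept = [p for p in proxies if pearson(p[-n_cal:], target) > r_min]
    # Simple average of the retained series.
    composite = [sum(p[t] for p in kept) / len(kept) for t in range(n_years)]
    return composite, target, len(kept)

composite, target, n_kept = screened_composite()
n_cal = len(target)
r_cal = pearson(composite[-n_cal:], target)            # calibration window
r_pre = pearson(composite[-2 * n_cal:-n_cal], target)  # the 100 steps before it
print(f"kept {n_kept} of 1000 noise series")
print(f"composite vs target, calibration window: r = {r_cal:.2f}")
print(f"composite vs target, preceding window:   r = {r_pre:.2f}")
```

The retained noise lines up in the calibration window, so the composite tracks the ramp there, while before the window the same series are uncorrelated and average toward a flat line. Regression weighting and informal pre-selection are not simulated here.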

In other words, it is a very convenient math situation for those who are selling a result, yet it doesn’t hold up to the smell test. In addition to a lot of blog attention, there have been a number of papers published on the matter. Many here keep hoping to see some more recognition of the seriousness of this problem, but I believe an unbiased scientific understanding would shut down paleoclimate temperature publications until more suitable proxies could be found.

As Jeff well knows, one cannot take anything from Schmidt on this topic at face value. In MBH99, Mann made an arbitrary fudge to the PC1. Jean S, UC and I spent lots of time trying to figure out the fudge. Schmidt’s assertion, once you watch the pea, is that this fudge “addressed” the bristlecone issues. This is disinformation. There were many issues surrounding bristlecones and these were not addressed by the MBH99 fudge. For example, the impact of the CENSORED directory results on MBH98 was withheld.

Re: Salamano (Apr 15 08:28),

As you note, they have stated this. The problem is, that’s not good science when this is a selection criterion rather than a result. If I say it this way it should be blindingly obvious: it is not good science to select data sets that correlate with your hypothesized outcome, and exclude those that do not.

It gets more extreme than the quote you provide, although what you provide is evidence of the non-scientific nature of what we’re discussing. (If you’ve not heard about the “cherry picking” quote, you need to read CA history a bit more 🙂 )

This has been done. The most work to date has been done on strip bark BCP’s. They are specifically un-recommended for inclusion. Yet often included anyway. That’s one factor that led to the CA Almagre Adventure testing the Starbucks Hypothesis…and our interesting discoveries in Tree #31. Look at Tiljander. Again, specifically disavowed as useful in the literature. But used anyway… and even upside down!

Salamano: it helps to distinguish types of proxies vs individual sites (people use the terms interchangeably). We can say that tree rings, ice cores, lake sediment varves, alkenones…are types of proxies. There are disputes about the validity of each of these in general. Then there are individual sites such as Mt Logan or Windy Dome. Given that we have accepted the TYPE of proxy at these 2 sites, the decision to keep or reject one or the other should really be based on sound reasons, not just matching the recent temperature record or not (for reasons Jeff gave here). Maybe that particular site really did get colder recently (esp when it is an ocean core influenced by currents).

It’s this part of the quote I’m more interested in at the moment:

Which means that to some scientists they do not accept the rejection of various proxies for certain reasons if it improves the validation statistic (and therefore makes the reconstruction more robust). [these are their intimations, not mine] Jeff makes the case regarding the mathematics, which is fine, but there’s obviously some sort of paleoclimatology research/papers that affords scientists the ability to do what they do. How successful have published papers been to counter the methodology? I think the proclaimed uniqueness of the field is currently permitting them this license, yes?

Either the publishing has to be done to criticize the selection/math methodology while at the same time suggesting an improvement for the very same, or the publishing has to be done at the individual proxy/regional level. I see the successes in this latter element, but on the whole the accepted and prevailing understanding in the field still points toward saintly treemometers highly weighting a sample, etc. etc. Has anyone tried a reconstruction with the current list of “accepted” selection criteria (as in no bristlecones, no Tiljander, etc.)?

Steve: In our 2005 papers, we showed the effect of no bristlecones using MBH methods i.e. that they didn’t get a stick. This led into a long and arid debate which Mann and realclimate argued in terms of the “right” number of principal components to retain, i.e. that there was some sort of mathematical law requiring bristlecones. The NAS panel recommended that bristlecones be avoided, but Mann used them anyway in Mann et al 2008. In Mann 2008, he argued that he could “get” a Stick without bristlecones, but that recon used contaminated Tiljander; he concurrently argued that contaminated Tiljander didn’t “matter” because he could get a Stick without Tiljander (but this used bristlecones). Ross and I submitted a comment to PNAS which among other points called attention to contaminated Tiljander. Mann blustered and stonewalled the error. Everyone on skeptic blogs understood the trick, but Realclimate and the community pretended that they couldn’t understand any problem. A couple of years later, we learned that Mann had slipped an admission into the SI of a different paper that his recon with neither had no skill prior to AD1500, but he didn’t retract the original paper.

I’ve done many examples over the years showing how removal of bristlecones and/or Yamal and replacement with equally or more plausible proxies changes MWP/modern proxy relationships.

Salamano: I have in fact published a paper showing why tree ring width is not a valid proxy:

Loehle, C. 2009. A Mathematical Analysis of the Divergence Problem in Dendroclimatology. Climatic Change 94:233-245

and in a REAL journal to boot.

Craig,

I think the field of climate science is kind of like the field of economics, in which publications can exist with polar-opposite conclusions, models, policy recommendations, etc. all when it comes to looking at the same picture. The Keynesians and the Austrians and all that jazz…

Except in this particular field it seems that one ‘school’ in-a-way owns the literature and the contributions to global policy / NGOs.

Salamano,

“I think the proclaimed uniqueness of the field is currently permitting them this license, yes?”

This argument is unscientific. No scientific field gets to choose data they like and discard data they don’t based solely on its shape. Were there known physical reasons to discard data, it would be reasonably discard-able, but in this post Steve has shown one of hundreds of examples of proxies which are discarded yet have no known physical problems that are any different from another proxy. The only problem seems to be that it doesn’t look like the result they want.

The math you are being told about simply does not work and it guarantees a hockeystick shape with good temperature correlation — even from raw noise. This effect has been repeatedly demonstrated on blogs and in publication.

The main-stream guys who garner funding from this field publish describing the effect as “variance loss” or something that sounds less problematic. Like the use of the term “divergence” for MXD proxies that don’t match temp.

What you have is a small group of very well paid activists who publish and approve each other’s publications, all containing the same kinds of failures. The argument that they are “allowed” to do this based on some unknown publication is inaccurate. When you look closely you find out that we really don’t know much about historic temps and to me that is disappointing. It would be truly awesome to know what global temperatures were a thousand years ago. I hope that someday we find out but so far we don’t have anything good enough.

Re: Jeff @ 7:50am

From what I’ve seen/read, their “physical reasons” to discard data arise from simple non-validation against the temperature record. For, if they included it, the reconstruction would be less robust…against the temperature record. Everything sprouts from there, whereby conforming proxies are declared self-evident as they clearly validate while the others have the burden of proof hurdle to argue successfully against inclusion. I realize work has been done in this area, but there are still some proxies out there where authors simply defer to others who have said “it’s good” or “it’s bad” as their screening method. [I’m just pointing out the obvious: what sets one proxy apart from another, rather than nothing]

Perhaps it’s important to try to not just validate against the temperature record principally…and instead include parameters that argue for inclusion if they correctly trend up and down (note: no mention of magnitudes) when it comes to known climate experiences (RWP, MWP, and LIA) together with the instrument record for a proxy site..? This way a muted ‘stick’ (or flat line, etc.) can still be acceptable in the instrument era if it manages to ‘catch’ well the other periods.

Salamano,

You are right at the crux of the problem. If one postulates that, for example, ring width of trees close to the treeline is a proxy for temperature, then one must select all data collected from close to the treeline. One cannot just select those that are trending with modern day temperature and discard those that aren’t, using the argument that you are selecting examples that are responding to temperature. How do you know that those same trees are responding to temperature once you are beyond the calibration window, say 150 years ago? They might be like the trees you have discarded.

salamano writes:

==================

Perhaps it’s important to try to not just validate against the temperature record principally…and instead include parameters that argue for inclusion if they correctly trend up and down (note: no mention of magnitudes) when it comes to known climate experiences (RWP, MWP, and LIA) together with the instrument record for a proxy site..? This way a muted ‘stick’ (or flat line, etc.) can still be acceptable in the instrument era if it manages to ‘catch’ well the other periods.

=================

I suppose I should leave the discussion of this to others who know far more than me, but what you are describing here is data mining. That is, this is not looking for the shape of an unknown signal but verifying that a signal with a known shape is in the input data.

Re Tom:

I believe there’s a reasonable degree of cross-validating evidence out there that can vouch for ‘trends’ in temperature across various eras, though not the specific apex or valley… This would include the RWP, MWP, and the LIA … so theoretically you’re not looking for a greatly specific signal, just the ups and downs being more or less similar…

…Much like what Marcott et al was satisfied with when their reconstruction corresponded to Mann et al upon manual alignment. Except this time we’re doing it with proxies without ‘only’ hallowing the modern temperature record.

Re: Steve McIntyre (Apr 15 10:44),

Gavin’s statement is really a masterpiece of misdirection. The MBH99 “fudge” (termed the Mannkovich bodge by Hu) concerns only the MBH99 part of the reconstruction (i.e. 1000-1399AD). They did not “address” anything in the later parts, to which the MM criticism was related.

It is also funny: if you find that a “correction” is needed to your proxy in one time step, isn’t it needed in other steps too? The effect does not change whether you cut your proxy at 1000AD or at 1400AD.

Indeed, without bristlecones and Mannian PCA, it is really hard to get passing verification REs at the last steps even by Mannian standards. This fact is curiously reflected in Mann’s “addressing of the bristlecone problem”. That is, the bodge used actually decreases Mann’s verification stats (though they still pass) at step AD1000. Among those four variations (see the bodge link above), the no-bodge variation gives the highest verification RE. But then there is no “Milankovitch cooling”. On the other hand, if you “bodge” too much (and you get a steeper “Milankovitch cooling”), then RE goes negative. It is really about finding just the right balance 🙂

Jean S

And, more importantly, if the cooling is too steep then the recent temperatures are not unprecedented anymore. Hard science.

Nick Stokes:

Doesn’t that get to the essence of what Steve Mc has demonstrated over the years – that deciding what the “right” result is in advance of actually running the data guarantees that your results will reinforce your starting biases?

It is mystifying that climate science can’t see this when it is blindingly obvious to everyone else.

“Perhaps the greatest single difference between being a “real climate scientist” and policies recommended here is that “real climate scientists” do not hesitate in excluding data ex post because it goes the “wrong” way…”

Imagine the outcry if pharmaceutical companies took this realclimate approach when testing new drugs.

This sort of thing pervades current scientific research. Keep an eye on Retraction Watch to see all the research (mostly in medicine) that gets pulled almost daily. Tip of the iceberg. Thanks to sites like Climate Audit for identifying questionable science in this domain.

Nick, I always love your contributions.

Please keep digging:-)

Steve: One approach would be to see how much of the dO18 variance in the modern period can be explained by temperature records and how much can be explained by precipitation records (presumably the thickness or weight of each annual layer). The records might be detrended first. Could one legitimately set aside proxies which responded strongly to precipitation and weakly or not at all to temperature? Assume for simplicity that temperature and precipitation are not correlated.
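As a rough sketch of what that check might look like: when the two predictors are uncorrelated, the share of variance each one explains is just its squared correlation with the proxy. The data below are synthetic and every coefficient is invented for illustration (a hypothetical series that responds weakly to temperature and strongly to precipitation over about 150 annual layers); detrending is omitted because the synthetic inputs have no trend.

```python
import random

def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

def variance_shares(d18o, temp, precip):
    """Squared correlations of the proxy with each predictor.

    These add up to the joint R^2 only if temp and precip are uncorrelated,
    which is the simplifying assumption stated in the comment above.
    """
    return pearson(d18o, temp) ** 2, pearson(d18o, precip) ** 2

rng = random.Random(42)
n_layers = 150  # hypothetical number of annual layers in the modern period
temp = [rng.gauss(0.0, 1.0) for _ in range(n_layers)]
precip = [rng.gauss(0.0, 1.0) for _ in range(n_layers)]
# Invented response: weak to temperature, strong to precipitation, plus noise.
d18o = [0.3 * t + 1.0 * p + rng.gauss(0.0, 0.5) for t, p in zip(temp, precip)]

share_t, share_p = variance_shares(d18o, temp, precip)
print(f"variance explained by temperature:   {share_t:.2f}")
print(f"variance explained by precipitation: {share_p:.2f}")
```

The point of the original post still applies to any rule built on such shares: the threshold would have to be fixed ex ante and applied to every series, including the ones that go up.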

Slightly offtopic question without notice…

A recent science-by-press-release alarmist headline may be worth investigating when the paper eventually comes out in Nature Geoscience.

http://www.abc.net.au/news/2013-04-15/antarctic-melting-ten-times-faster-than-600-years-ago/4628404

But if the ice melted it won’t be there any more. So what’s in the ice core is the most recent layer that didn’t melt in any subsequent year up to the dated age of the next higher layer. You won’t know how much ice loss that represents unless you know the rate of deposition during those years. The approximate rate of deposition is not known from this source or any other, right?

How do these people know the unknowable?

What have I misunderstood?

Andrew McRae,

Absolutely logical comment. In sedimentary geology, there are unconformities and disconformities where a surface was in a state of erosion before deposition started again. The time period of lost information is seldom able to be estimated. The loss of time in the Antarctic situation creates conditions that distort the time axis and require another means of independent calibration.

The O18 method is prone to easy assumptions when conversion to temperature is made, so the temperature axis is distorted as well. Example – the core at Vostok. Over the several hundred thousand years of time claimed, the area of the floating ice sheet would have varied enough to be significant as a distance over which precipitation carriers would have to travel. Thus, a signal of isotope fraction at the evaporation point would be more or less diluted according to the distance to the precipitation point. The eventual precipitation is very small, much is lost along the way. The heavier isotopes would be lost in the long path more than the short path. It is hard to understand why people draw up simple equations linking the delta O18 and temperature.

Thanks, Geoff.

Once again I find global warming is “worse than first thought”, but worse in a totally different sense!

I wasn’t specifically questioning the dO18 function, it was just an issue of how something that disappears can leave a measurable signal (of any quantity), if not for the datable time gaps between whatever physically remains.

But yes even the d18 may be harbouring confounding factors.

Could they use C14 for dating it? So in all these ice cores could the error level on C14 be due to a lack of ice from that year? So the higher the dating uncertainty the more ice melted during the summer? But then it’s worse because you have fresher Carbon washing down into older Carbon and making the older layer seem younger than it really is proportional to how much melted. Sounds a bit messy just on the face of it.

Just found the full press release is at ANU:

http://news.anu.edu.au/2013/04/15/10-fold-increase-in-antarctic-peninsula-summer-ice-melt/

So it gets worse. They measured old refreeze layers to be thinner than recent refreeze layers, and the assumption is that they have remained perfectly preserved since they froze, and are therefore undoubtedly a signal of a monotonically increasing temperature in the Antarctic since 1400AD.

Okay the late 20th century got a bit warm, we know that from 3 other methods, we didn’t need ice cores for that. The world has been warming up since the last Ice Age, big deal. It’s this monotonically increasing temperature that smells wrong.

Andrew,

No, Carbon isotopes are generally out because you would have to use CO2 and/or methane from gas bubbles to get your carbon. Then you meet the gas age/ice age problem, where dating of the gas and the ice around it can give different ages and usually does.

e.g. this abstract from http://onlinelibrary.wiley.com/doi/10.1029/2005JD006488/abstract

[1] Gas is trapped in polar ice at depths of ∼50–120 m and is therefore significantly younger than the ice in which it is embedded. The age difference is not well constrained for slowly accumulating ice on the East Antarctic Plateau, introducing a significant uncertainty into chronologies of the oldest deep ice cores (Vostok, Dome Fuji, and Dome C). We recorrelate the gas records of Vostok and Greenland Ice Sheet Project 2 (GISP2) cores in part on the basis of new CH4 data and use these records to construct six Vostok chronologies that use different assumptions to calculate gas age–ice age differences. We then evaluate these chronologies by comparing times of climate events at Vostok with correlative events in the well-dated Byrd ice core (West Antarctica). From this evaluation we identify two leading chronologies for the Vostok core that are based on recent models of firn temperature, firn densification, and thinning of upstream ice. One chronology involves calculating gas age–ice age differences from these models. The second, new, approach involves calculating ice depths in the core that are contemporaneous with depths in the same ice core whose gas ages are well constrained. This latter approach circumvents problems associated with highly uncertain accumulation rates in the Vostok core. The uncertainty in Vostok chronologies derived by correlating into the GISP2 gas record remains about ±1 kyr, and high-precision correlations continue to be difficult.

Steve –

you have made an impressive study of possible cases of publication bias.

It would be quite a service if you were ever to write a paper summarising your findings on “delayed/truncated” proxies, setting out the scale of the problem. It would be a far more useful contribution to the literature than, eg, fantasy studies of conspiracy theories. It would also be the type of “general” paper which would immediately be accessible to scientists in other fields. This is important when it comes to promoting the type of concerns raised on sceptic blogs.

Geoff, the reason why people draw up simple linear equations that link ice delta O18 and temperature is because that is what is empirically observed. It is well known that in temperate and high latitude areas the spatial temperature gradient is linearly related to the spatial O18 gradient. This has been known since the seminal work of Dansgaard and Craig.

The key question is if you take a single site can you translate the spatial gradient in dO18 and T to the temporal domain. In many, and probably most cases, the answer is no. For example if one compares the change in dO18 of groundwater between last glacial maximum and modern recharge then the shift is much smaller than would be predicted if one uses the published empirical relationship between temperature and 18O. This is also true for high latitude ice. Detailed studies are needed for individual locations to understand the role of source regions, transport and precipitation conditions. In virtually all cases where data is used for palaeo reconstructions such information is missing.

Paul Dennis,

Thank you for your comment. As one trained early at the analytical chemistry bench, I have trouble accepting the assumptions made in cases like this. The difference was that we were accountable for our results and customers would leave us if we did not perform to the standards we advertised.

I sense that the climate community needs to self regulate promptly, or risk an external, authoritarian regulator, which can be more onerous than the choice to self-regulate while the option is open.

A similarity is that both the climate community and I wore white coats, but for different reasons.

Geoff, I don’t disagree with you. However you made the statement “It is hard to understand why people draw up simple equations linking the delta O18 and temperature.” I gave you the reason why people do so. The relationship between spatial temperature distribution and average annual dO18 in precipitation is remarkably strong.

On a site by site basis our understanding of d18O variation is limited. On the one hand there has been comparatively little work done on linking synoptic weather patterns with d18O etc. On the other there is a lot of data that shows monthly d18O correlating with local monthly temperatures.

Tropical and sub-tropical sites are more problematic and rain amount effects dominate.

My position is that until we understand the response of an individual site then it’s unwise to use it as a proxy. In point of fact there are very few proxies that we have a good a priori understanding of what the response is to temperature, or where the system is not under-determined. About the only one I can think of is clumped isotopes (D47) in carbonate minerals. Here the ordering of 13C and 18O in the lattice is a function of temperature alone. However, it is extraordinarily difficult to measure and the best precisions available are still only giving +/- 2 degrees C which is not a lot of use for Holocene temperature reconstructions.

Sorry if this is old news, but what you’re saying implies all ice cores everywhere are invalid as proxies for temperature.

That’s a pretty big deal but at the same time I can see how it is justified.

So (for example) for measuring Antarctic ice mass trends over the last 5 years, ice cores are out because they can’t be calibrated, GRACE is only roughly measuring something 2 degrees of separation from the quantity in question, and that just leaves laser altimetry remaining.

Does this mean ICESat is they only way to determine recent Antarctic ice loss/gain?

Andrew, I didn’t say that all ice cores are invalid as proxies. When working on the Gomez glacier on the Antarctic Peninsula we found there is a good correlation between local temperature and annual average ice d18O. This is likely to be true for other Antarctic cores, though not necessarily all. The GISP core is also well understood in terms of its temperature signal.

This is just another case of observer bias. The data-sets do not match what climate scientists “know” about the modern temperature record and so therefore they throw out any results that do not match this.

Observer bias is a real problem in climate science and yes, this is what is happening here and elsewhere.

Results that “agree” with this record are hyped and otherwise promoted as far as their weight goes and so instead of getting an honest appraisal of proxies, we get only proxies that agree with the modern record with no checking on whether these “over-weight” proxies are indeed correct. It is just as likely that the proxies that do match the modern temperature record are tainted in some way.

The problem paleoclimate faces is that they cannot claim they have reconstructed temperature if they cannot exclude or downweight data which doesn’t correlate. Basically, the entire field of temperature reconstruction would stop publishing until they found a functional set of temperature proxies which could be used properly.

I haven’t thoroughly looked but I suspect some moisture proxies have similar problems.

Old, but good. A Cook’s Tour

There’s an excellent discussion of the problems with dendro reconstructions on Jim Bouldin’s website, “Ecologically Orientated”. There’s about a dozen posts, including a discussion of the rejection by PNAS of a paper he has written on the subject.

For part 1, see: http://ecologicallyoriented.wordpress.com/2012/11/10/severe-analytical-problems-in-dendroclimatology-part-1/ .

The posts are certainly a worthwhile addition to the field. His first post identifies three principal problems:

“The three issues are:

(1) ring width, being the result of a biological growth process, almost certainly responds in a unimodal way to temperature (i.e. gradually rising, then rather abruptly falling), and therefore predicting temperature from ring width cannot, by mathematical definition, give a unique solution,

(2) the methods used to account for, and remove (“detrend”) that part of the long term trend in ring widths due to changes in tree age/size are ad-hoc curve fitting procedures that cannot reliably discriminate such trends from actual climatic trends, and

(3) the methods and metrics used in many studies to calibrate and validate the relationship between temperature and ring response during the instrumental record period, are also frequently faulty.”

Well worth a look, if people here haven’t seen the posts already.

Cheers,

“… and is out of phase with paleotemperature series.”

What does that statement mean? Phase is a frequency-specific delay so what they are saying is that the shape of the data matches the expected shape but there is a lead or lag. But looking at it, I don’t see how that is the case. It seems to be in-phase with other paleo temperature reconstructions up until about 1750 and then the phase “inverts” after that.

That’s quite a different thing from the whole series being out-of-phase which would imply a cause/effect relationship.

I think this is very sloppy language for a scientific article. They really should know what phase is and not just throw the term “out of phase” around as a euphemism for “negative correlation”, which seems to be what they are trying to say.
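The distinction drawn above can be made concrete with a toy example. This is only a sketch on synthetic sine waves, not on the Mt Logan data: the period, record length and break year are hypothetical values chosen for illustration. A series with a true half-period lag is anti-correlated over the whole record, while a series that tracks the reference and then inverts shows opposite correlations on either side of the break.

```python
import math

def pearson(x, y):
    """Plain Pearson correlation, written out to avoid extra dependencies."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

PERIOD = 100    # hypothetical cycle length, in years
N_YEARS = 600   # hypothetical record length
BREAK = 350     # hypothetical index at which the second series inverts

reference = [math.sin(2 * math.pi * t / PERIOD) for t in range(N_YEARS)]

# Case 1: a genuine phase lag of half a period, applied to the whole record.
lagged = [math.sin(2 * math.pi * (t - PERIOD / 2) / PERIOD) for t in range(N_YEARS)]

# Case 2: follows the reference up to BREAK, then flips sign.
flipped = [r if t < BREAK else -r for t, r in enumerate(reference)]

r_lagged = pearson(reference, lagged)                    # whole record, lagged series
r_before = pearson(reference[:BREAK], flipped[:BREAK])   # before the break
r_after = pearson(reference[BREAK:], flipped[BREAK:])    # after the break
r_whole = pearson(reference, flipped)                    # whole record, flipped series

print(f"half-period lag, whole record: {r_lagged:+.2f}")
print(f"sign flip, before break:       {r_before:+.2f}")
print(f"sign flip, after break:        {r_after:+.2f}")
print(f"sign flip, whole record:       {r_whole:+.2f}")
```

A single whole-record correlation cannot tell the two cases apart reliably, which is why comparing windows on either side of a suspected break is the more informative check.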

“… and is out of phase with paleotemperature series.”

I.e., does not give the right answer.

Steve,

This is a bit off topic, but could you please share with us the state of Climategate 3? Certainly there has been enough time to start releasing at least some of the e-mails. Certainly enough time has passed where even a small group of workers could give an interim report?

Your opinion on the relative silence surrounding this massive release of e-mails and data would be appreciated.

Did you take the courtesy of writing to Tingley & Huybers to ask them about Mt Logan?

Steve: Nor did they take the courtesy of sending me a copy of their article. I’d be more interested in their justification of the use of the contaminated portion of the Tiljander sediments though.

I have criticised Steve in the past for being a bit unreasonable on certain issues but I don’t see why he should need to write to the authors about Mt Logan.

The authors have stated a reason for excluding Mt Logan so there is no need to seek a further explanation from them in this case. Steve is observing that the rationale for exclusion appears to be arbitrary and is itself a likely source of bias. Seems a very reasonable issue to raise at this blog to me.

Say he had written to them.

For how long should he keep his powder dry and forbear from comment when he’s waiting for an answer, or even acknowledgement of his request? Would it be enough that his question was referred to in passing and “addressed” at RC via an intermediary? There’s ample precedent that indicates that attempting the sort of exchange you seem to favour can be a waste of time.

If either author wanted to respond here, I dare say they’d get a free run at it, followed by a correction / clarification in the body of the post. There’s ample precedent for this happening in the past as well.

It’s self-evident that these posts aren’t just cobbled together and shovelled online without considerable effort and attention to detail.

Interesting that this blog has to approve comments before they appear…..

Re: David Appell (Apr 15 23:55),

and you’re making that misguided comment only because your previous one-liner got caught (I released it now) in the spam filter. Interesting, indeed, …

The reason may be that his listed URL is a dead wordpress site. That may be enough to trigger the spam filter.

Interesting that any comments here are getting caught…. Back to the question at hand: were Tingley & Huybers asked about Mt Logan, or is it OK to needle scientists without the courtesy of an inquiry?

Steve: don’t know why your comments are getting caught. My apology. Your comments are very welcome here.

Jean S: A possible reason is what charles already said: you are providing an URL (in the website field) to a dead wordpress site. Correcting that in your wordpress profile may help.

Many of us wouldn’t read it as needling scientists but as necessary questioning in the service of better science. Even the term “real climate scientists” is asking an implicit question about where good practice currently starts and ends and where it should start and end. Seeing past the style to the substance is the key to illumination, here and elsewhere.

A few days ago the “Junior Birdmen” were criticized for not excluding the modern portion of Tiljander’s varves from Central Finland because Tiljander demonstrated that this portion of the record is contaminated. This post criticizes Tingley for excluding the dO18 proxy from Mt. Logan, which at least two groups have concluded is not a temperature proxy. (Links below.) Although dO18 is usually a proxy for the temperature at which precipitation forms, we know that it can also reflect the temperature of the ocean that was the source of the precipitation and fractionation along the way. Is there any consistent position skeptics can adopt besides “include everything” (including Tiljander, bristlecones and Yamal)?

Instinctively, I would prefer to make best use of what we think we know about the reliability of potential “proxies”, but that allows those with bias to exhaustively study proxy records which point to a warm MWP until they find something wrong and stall investigations into proxies that suggest the opposite.

http://www.erudit.org/revue/GPQ/2004/v58/n2/013147ar.html

http://digitalcommons.library.umaine.edu/ers_facpub/270/?utm_source=digitalcommons.library.umaine.edu%2Fers_facpub%2F270&utm_medium=PDF&utm_campaign=PDFCoverPages

Steve: there’s a big difference. The modern portion of the Korttajarvi is known to be contaminated by local agriculture etc. This is not the case with Mt Logan.

On Yamal, I pointed out that Briffa had failed to use other equally plausible sites in the area which had a divergence problem and eliminated the huge uptick originating from the few series chosen.

On tree rings, my recommendation has been that practitioners pick an ex ante criterion for temperature limitation where precipitation is not an issue (e.g. treeline white spruce or whatever) and take all of the sites within the criterion. Bristlecones are in an extremely dry area and do not meet this criterion. The NAS recommended that they not be used in temperature reconstructions, but this recommendation was ignored in Mann et al 2008 and similar publications.

In addition, I pointed out that supposedly “independent” reconstructions actually were heavily influenced by a couple of series, used over and over, and were not “independent” as proclaimed.

It’s not the exclusion or inclusion itself which is a problem, it’s the justification of the way this is done.

If your grounds for excluding one set of data, which by ‘lucky chance’ goes against the idea you’re selling, can be used against data you included, that does raise real questions.

The trouble is climate ‘science’ is a serial abuser of such ‘tricks’: adjustments and ‘mistakes’ always favour ‘the cause’ and are often hidden, raw data deleted so they cannot be checked, or simply defy common sense and the scientific approach. Many in this area put more effort into advocacy than they do into science.

Frank

First principles: for something to be used as a proxy measurement for temperature, it must be responding to the changes in local(ish) temperature it is experiencing in a reasonably predictable manner. Clearly, there will be some confounding factors, but site selection (for example, picking treeline trees because their growth should be temperature limited more than moisture limited) should in theory minimise these effects.

The problem with the Tiljander sediment sequence as a temperature proxy is very simple: the later part of the sequence is not varying as a result of temperature but of changed run-off caused by land use changes. It is not a temperature proxy for at least the last 100 years and probably near 300 years (as stated by the original authors). Unfortunately Mann 2009 and other reconstructions took a spurious correlation of recent sedimentation rate increases with temperature increase during the calibration period of the reconstructions as sufficient to allow the series’s inclusion in a multi-proxy study. An unfortunate artefact of this was that the spurious recent correlation actually reversed the relationship between layer thicknesses and temperature for the period where the sequence was a potentially valid proxy, consequently flattening any variation in the earlier part of the overall reconstruction (as the temperature response was 180 degrees out of phase with the other sequences). The bottom line is that Tiljander is not a reliable temperature proxy in the last 300 years and so must be excluded from this part of any reconstruction (as a consequence, it cannot be used in multi-proxy studies like Mann’s, where proxies are calibrated against the measured temperature record, which only covers the last 150ish years)
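The "upside down" mechanism described above can be sketched with synthetic numbers. Everything here is an assumption made for illustration: the sign and size of the true proxy response, the noise level, the size of the non-climatic drift and the period lengths are invented and are not taken from the Tiljander data or from any published reconstruction. The point is only that a calibration fitted on a contaminated interval can carry the wrong sign back into the uncontaminated part of the record.

```python
import math
import random

def ols(x, y):
    """Least-squares intercept and slope for y = a + b * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    b = sum((u - mx) * (v - my) for u, v in zip(x, y)) / sum((u - mx) ** 2 for u in x)
    return my - b * mx, b

def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((u - mx) * (v - my) for u, v in zip(x, y))
    sxx = sum((u - mx) ** 2 for u in x)
    syy = sum((v - my) ** 2 for v in y)
    return sxy / math.sqrt(sxx * syy)

rng = random.Random(42)
N_EARLY, N_CALIB = 200, 100   # hypothetical lengths of the two periods

# Hypothetical "true" temperature: slow oscillation early, steady rise later.
temp_early = [0.5 * math.sin(2 * math.pi * t / 80) for t in range(N_EARLY)]
temp_calib = [0.01 * k for k in range(N_CALIB)]

# Assumed true response: the proxy falls when temperature rises.
proxy_early = [-T + rng.gauss(0.0, 0.1) for T in temp_early]

# Calibration period: a non-climatic upward drift swamps the true response.
proxy_calib = [-T + 0.05 * k + rng.gauss(0.0, 0.1) for k, T in enumerate(temp_calib)]

# Calibrate temperature on the proxy over the contaminated interval only.
intercept, slope = ols(proxy_calib, temp_calib)

# Apply that calibration to the earlier, uncontaminated interval.
recon_early = [intercept + slope * p for p in proxy_early]
r_early = pearson(recon_early, temp_early)

print(f"calibration slope: {slope:+.3f}")
print(f"correlation of early reconstruction with true temperature: {r_early:+.2f}")
```

The fitted slope comes out positive because of the drift, so the early reconstruction moves opposite to the temperature that actually drove the proxy.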

The issue with the Junior Birdmen case is more nuanced, but is more the ‘classic’ issue of post hoc selection that Steve has long argued against. Either you accept from theory that all supposed proxies of a certain type (whether treeline larches or ice core d18O etc) are reliable temperature proxies or none are. If you exclude one series of a certain type because of confounding factors, it makes it very difficult to justify the inclusion of other proxies of a similar type. It would not be entirely impossible to make the justification, but a strong case would have to be made that the confounding factors can and do only apply to the excluded site* – for example, with an ice core ‘proxy’, the effect of nearby volcanic activity could potentially be one such reason, if this clearly had not had an effect on other similar proxy series further away. In this case however, the argument is that for the excluded Mt Logan site, the d18O profile is a result of precipitation rather than temperature, which is a confounding factor that could clearly be applicable to all such sequences.

* Of course, one of the original issues addressed by Steve and Ross was MBH 98 / 99’s inclusion of ring widths for stripped bark bristlecone pines as a temperature proxy. The ring widths were clearly anomalously wide as a result of the events that led to the strip bark formation and so should have been specifically excluded, while other similarly sited BCPs could legitimately be retained as temperature proxies. The follow on to that is that only the stripped bark trees showed a strong modern up-tick in ring width, so was one of the very small group of proxies that gave the original hockey stick its strong blade.

Ian: See my reply to Steve above. I’d like to know what evidence, if any, would be strong enough to exclude Mt. Logan, as he does Tiljander and bristlecones. (Yamal, as he points out, was simply a matter of excluding nearby tree rings that were needed to have a robust analysis.)

I presume glaciers in Central Finland still release more sediment in warm years than cold, so the Tiljander sediments could still be responding to high frequency variation in local temperature as before. Agriculture has made centennial variability unusable.

AFAIK, Central Finland has no glaciers.

Steve: Thanks for the reply, which said in part: “The modern portion of the Korttajarvi is known to be contaminated by local agriculture etc. This is not the case with Mt Logan.” From the little I’ve read, some might say that Mt. Logan is “contaminated” with moisture from the tropical Pacific during some periods.* Do we have to throw away all dO18 proxies because some vary with local temperature and a few(?) do not? Otherwise, it seems that you must accept some ex ante selection criteria, as you do for tree rings. What types of information are and are not suitable for making ex ante decisions about non-tree-ring proxies?

* Admittedly, the evidence cited in the above papers on Mt. Logan is far from ideal. [Wild?] speculation about teleconnections between Mt. Logan and distant sites around the Pacific doesn’t demonstrate that dO18 at that locale doesn’t contain a useful temperature signal. Both papers simply mentioned that Mt. Logan was “upside down” with respect to nearby tree-ring proxies during the LIA. Lack of correlation or anti-correlation with historical temperature data (preferably detrended) near that site might do so, although other posts suggest you might not consider this method a reliable enough criterion for excluding this site from a reconstruction.

Frank

I’d reverse your argument – if the authors accept that Mt Logan should be excluded from the reconstruction because it is not responding systematically to temperature, on what basis can they include any other d18O ice core series?

As I put above, I think to allow selective exclusion would need an unequivocal demonstration that the process affecting the one excluded series was not having an impact on other series of the same type of proxy. A reasonable correlation between the proxy and measured (local / regional) temperature in my opinion can only have the same evidentiary power as the hindcast of a model – necessary but insufficient to verify the results.

Ian: I enjoyed thinking about your reverse argument and trying to learn a little of what the literature teaches. The most useful paper I read was:

JouzelJGR1997.pdf

It is clear that as one moves poleward, dO18 in precipitation changes from a precipitation proxy to a temperature proxy. There are lots of data points suggesting that dO18 is likely to be a reliable temperature proxy in Greenland and Antarctica. I don’t think it is sensible to discard all of those sites because Mt. Logan appears to not follow the same trend. If researchers use their expert judgment to exclude proxies that do meet their preset criteria for inclusion in a reconstruction, they have an obligation to do the reconstruction with and without the dubious proxy, so readers can unambiguously see to what extent their conclusions depend on the subjective decisions.

Although there are lots of relevant issues with selection and omissions of proxies, the most important point is not what is omitted, but what is included, and then it is not the inclusion of proxies with fake hockey stick blades that causes problems, but the inclusion of proxies that don’t say anything at all. The model Tingley and Huybers use is very simple – the basic temperature series is AR1, and the proxies are assumed to be linearly related to this (there is spatial correlation in the year to year variation, but this is unimportant for my point). The proxies are assumed to have the same regression coefficients within type (tree ring, ice core and varve). Now imagine that none of the proxies are related to temperature at all. The slopes will all go to zero and they will have no effect on the estimates of the temperature. These estimates will therefore only depend on the time series properties that are estimated from the observed temperatures. In other words the temperature estimates prior to the start of the observations in about 1850 will just be simulations from an AR1 model with a coefficient of about 0.47 and an innovation sd that I can’t calculate from the paper (it depends on the site specific value of 0.8, the spatial correlation and the area of the study) but it must be about 0.2 I think. The problem now is that (a) the observed temperatures have far too much long-range temporal correlation to be AR1, and (b) starting from the value in 1850 and simulating backwards to 1400 it is almost impossible to get a value as high as observed in 2010. Now in reality the proxy regression slopes won’t actually be zero (because they aren’t all useless), but they will be biased towards zero (because lots of them are). In other words, the result that current temperature is the highest in 600 years is a direct consequence of the AR1 assumption and the inclusion of lots of useless proxies. This has always struck me as an insoluble problem.
Using a time series model that allows for more long term (“natural”) variation would be better, but is computationally very difficult, and omitting useless proxies will bias the reconstruction to hockey-ness if the standard for usefulness is correlation with the observed temperatures.
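The "almost impossible" claim can be checked roughly with a short simulation. This is a sketch of the limiting case described above (all proxy slopes zero), not of the Tingley and Huybers model itself. The coefficient 0.47 and innovation sd 0.2 are the figures guessed in the comment; the threshold of 1.0 for a "2010-like" value is a hypothetical number chosen for illustration, since the comment does not give one. A stationary Gaussian AR(1) process is time-reversible, so a forward simulation stands in for simulating backwards from 1850.

```python
import math
import random

PHI = 0.47        # AR(1) coefficient quoted in the comment
SIGMA = 0.2       # innovation sd guessed in the comment
THRESHOLD = 1.0   # hypothetical "2010-like" anomaly, relative to the series mean
N_YEARS = 450     # 1850 back to 1400

# Standard deviation of the stationary AR(1) distribution.
stationary_sd = SIGMA / math.sqrt(1.0 - PHI ** 2)

def path_exceeds(rng, start=0.0):
    """Simulate one AR(1) path and report whether it ever reaches THRESHOLD."""
    x = start
    for _ in range(N_YEARS):
        x = PHI * x + rng.gauss(0.0, SIGMA)
        if x >= THRESHOLD:
            return True
    return False

rng = random.Random(1)
n_paths = 2000
hits = sum(path_exceeds(rng) for _ in range(n_paths))
fraction = hits / n_paths

print(f"stationary sd: {stationary_sd:.3f}")
print(f"threshold in stationary sds: {THRESHOLD / stationary_sd:.1f}")
print(f"fraction of paths reaching the threshold: {fraction:.4f}")
```

With these numbers the threshold sits more than four stationary standard deviations from the mean, so very few simulated paths reach it. A different threshold or innovation sd would change the fraction, which is why both are flagged as assumptions.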

this is the sort of issue that we discussed in connection with our 2005 papers.

A graphic that I found quite compelling was one in which I did reconstructions with the following pairs:

1. Bristlecones; PC1 of dot com stock prices in a bull market

2. The other MBH proxies; white noise/low order red noise.

Reconstructions in MBH style using bristlecones+noise or stock prices+noises or proxies all yielded high RE values, sometimes better than the actual reconstruction. Mann’s RE statistic, far from being a “rigorous” statistic as they huff and puff about, does not discriminate against classic spurious regression.

Yes, inclusion of selected proxies gives a spurious correlation problem, but the problem here is in a sense the opposite – rather than inducing a fake hockey stick blade, the inclusion of useless proxies induces a fake handle, and the AR1 assumption gives a false sense of confidence in it.

At the very least shouldn’t any exclusionary process require X-level of correlation with instrument records? On that basis alone (intentional date manipulation notwithstanding) is it not reasonable to conclude that the Windy sample is likely to be more accurate than the Logan sample? Of course, close correlation with the instrument record is not absolute proof of a valid calibration, as evidenced by the fact that neither sample captures the LIA particularly well, although (once again) Windy seems to be the more accurate of the two.

Anyway, just my two cents from a layman’s perspective.

Steve: The issue is whether you use a class of proxies or select within a class ex post. A valid proxy class needs to correlate with temperature. So if Arctic d18O or treeline larch ring width are a “proxy”, then you want the class as a whole to correlate with temperature. But you have to define the class in advance. If the proxy is very noisy, then some individual series will have enhanced trends and some might go the “wrong” way. If you select after the fact, you introduce a positive bias.

On this point, the “lay” approach – an approach almost universally adopted by “real climate scientists” – is incorrect. A point that Paul Dennis endorsed in a recent comment.
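The bias from selecting after the fact is easy to reproduce on pure noise. The sketch below is an illustration only: the number of series, record length, calibration length and correlation cutoff are all hypothetical, and the "proxies" are white noise containing no temperature signal at all. Screening them against a rising calibration target still yields a composite that tracks the target, while the composite of the whole class does not.

```python
import random
import statistics

def pearson(x, y):
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

def composite(series):
    """Year-by-year average across a list of equal-length series."""
    return [statistics.fmean(column) for column in zip(*series)]

def simulate(n_proxies=1000, n_years=200, n_calib=50, threshold=0.2, seed=0):
    rng = random.Random(seed)
    target = list(range(n_calib))  # rising stand-in for the instrumental record

    # Every "proxy" is white noise: there is no signal to find.
    proxies = [[rng.gauss(0.0, 1.0) for _ in range(n_years)]
               for _ in range(n_proxies)]

    # Ex post screening: keep only series that correlate with the target.
    selected = [p for p in proxies if pearson(p[-n_calib:], target) > threshold]

    full = composite(proxies)
    picked = composite(selected)
    return {
        "n_selected": len(selected),
        "r_full": pearson(full[-n_calib:], target),
        "r_picked": pearson(picked[-n_calib:], target),
    }

result = simulate()
print(result)
```

Because the screened series agree only inside the calibration window, their average is close to flat before it. That is the same shape discussed elsewhere in this thread: a blade produced by the screening and a handle produced by averaging noise.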

Thanks Steve,

I understand your point, but can see (and I’m not defending any of the shenanigans you’ve uncovered) the exclusion of an entire class of proxies based on one or two “bad” samples would be akin to throwing out the baby with the bathwater. If for example, 9 out of 10 samples from the same relative geographic region agree fairly well and one doesn’t, it seems more than likely the oddball sample is a fluke. Of course, as you note, the exclusionary process is often subjected to confirmation bias. Nonetheless, it seems at least possible to scientifically exclude specific samples provided an adequate explanation is provided.

I guess the “precipitation explanation” is bogus based on your knowledge and experience. Was the Logan sample the only exclusion? It would be interesting to see a graph with the Logan data included. Such a graph would bolster your case for cherry picking based on desired outcomes.

Rob, you say if nine out of ten samples from the same geographic region agree and one doesn’t then it is more than likely that the oddball sample is a fluke. However it is not possible to know this. If one has made the ex ante hypothesis that a particular proxy tracks temperature then you include them all. One’s descriptive statistics will accommodate the oddball sample as a slightly greater s.d. and s.e.
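A small worked example of that last point, using made-up values: nine samples that agree and one oddball. The numbers are hypothetical and chosen only to show how the summary statistics absorb the outlier when the whole class is kept.

```python
import math
import statistics

# Hypothetical values: nine samples that agree, plus one oddball.
agreeing = [1.0, 1.1, 0.9, 1.2, 1.0, 0.8, 1.1, 0.9, 1.0]
oddball = -0.5
full_class = agreeing + [oddball]

def summarise(values):
    """Mean, sample standard deviation and standard error of the mean."""
    sd = statistics.stdev(values)
    return {
        "mean": statistics.fmean(values),
        "sd": sd,
        "se": sd / math.sqrt(len(values)),
    }

with_oddball = summarise(full_class)
without_oddball = summarise(agreeing)

for label, stats in (("all ten", with_oddball), ("nine only", without_oddball)):
    print(f"{label}: mean={stats['mean']:.2f} sd={stats['sd']:.2f} se={stats['se']:.2f}")
```

Keeping the oddball shifts the mean and widens the s.d. and s.e., so the reported uncertainty reflects the spread actually observed in the class. How much wider depends on how far out the oddball sits; with an outlier this extreme the increase is more than slight.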

I agree with Paul. Unless there is a known reason that the data is bad, you are stuck with it. It is easy to fall into this trap when you collect noisy data. You start questioning things which don’t match and look hard at your instrumentation, yet when it does match expectations, the tendency is to accept it.

If 9 out of 10 work for one’s assumption during the calibration period, how do we know they are not all equally problematic, and that there isn’t more sampling trouble than we know in the good ones? Once a class of proxies is used, all of the proxies must be used unless a unique physical problem related to data collection is identified. Any other choices mathematically bias the result.

Re: Rob ricket (Apr 16 13:05),

The “why” doesn’t matter. Cherry picking — data snooping — is excluded from good science, period.

If you have reason to surmise that Elephants conforming to parameters X, Y and Z are good proxies, then you must be willing to take a blind random sample of those proxies, without regard to the data involved.

Once you peek at the data, it is too late to change the proxy definition.

This also reflects back on the fact that the exact same data series are seen over and over again in these studies. That too is shaky science.

Thanks Paul, I’m a mathematical lightweight, (family genetics) but fairly conversant in verbal reasoning and admit some of the math on this site flies over my head. From a strictly puritanical perspective I see your point. However, is it not true that exclusion of a sample (a big if in this case) that is known to be inaccurate will result in a more accurate reconstruction? Meaning, why include a sample that deviates sharply from both the other samples and the instrument record? The last Esper et al paper provided a detailed explanation of the exclusionary process they used in their reconstruction.

If memory serves, the Esper et al paper was well received by the skeptical community and panned by the alarmists. Perhaps Esper et al applied a more reasoned approach to the exclusionary process, but (all things being equal) we can’t be in the business of backing reconstructions that show us what we want to see and critiquing those that do not.

Apologies to Steve if he critiqued the Esper et al paper as well, or if he thinks Esper employed a more valid exclusionary process.

Rob Ricket: The problem is that the claim that a certain proxy (ice core, lake sediment, etc) is a valid thermometer is a HYPOTHESIS and there are in all cases reasons to be concerned (e.g. trees respond to rainfall, local stand density etc…ice cores respond to changes in source region, changes in ice elevation etc and on and on) so it is not proven. To then select sites because they correlate with thermometers and drop those that do not is to assume your hypothesis is true, but doing so has a huge effect on your reconstruction. If this were a scientific backwater and the recons were for exploration only, fine, but these hockey sticks are being used to prove unprecedentedness (alarm). A higher standard of proof is needed. And if the answer is that we can’t do it properly and the confidence intervals are a mile wide, then don’t do it.

Right. And it would be perfectly reasonable, after data snooping, to note that ice cores with Property X correlate with temperature far better than others do. If you collect NEW cores with Property X, publishing all findings, you would be able to limit yourself to (the new set of) such cores in a reconstruction without being in the wrong.

Am I wrong in thinking that perhaps there are not enough well documented proxies from similar geographical regions to be able to evaluate the worth of any particular proxy or group of proxies in detecting a temperature signal?

Jan,

It should probably be possible to find some. Although this may seem uninteresting at first glance, we can easily find good regional proxies limited to the twentieth century.

This graph (http://img38.imageshack.us/img38/1905/atsas.png) shows, for example, the melting anomaly of 3 Alpine glaciers (Huss et al. 2009). The interest of this proxy is that its obvious physical connection with temperature is confirmed by its high frequency correlation with instrumental data. Moreover, in the particular case, the low frequency correlation with the raw data of the reference station is excellent.

We find the same behavior in the same area but this time for winter temperatures with snow data (Marty 2008) : http://img830.imageshack.us/img830/9003/neige2.png

The interest of these limited but convergent samples is that they point to ways of validating proxies that are reasonably related to regional temperatures. Here we see especially that MXD seems to be a good type of proxy, while instrumental data are dubious at low frequency.

Sorry, wrong image for Marty 2008 (it was a joke based on a similar case). The correct one: http://img69.imageshack.us/img69/3867/jndjfm.png

I add the comparison of Huss et al. 2009 with data from the reference station:

Thanks for the response phi. I was thinking more along the lines of the law of large numbers.

Rob, Jan Esper has always taken a minimalist approach to reconstructions, the best example being their 2002 paper in Science based on 14 well chosen proxies if I remember right. Fewer sites, but much more stringent criteria. Opposite end of the spectrum from the Mann et al approaches.

You are right on the data exclusion issue. If you have reason to know that a particular series is not responsive to the env param of interest, then you DON’T USE IT. People have a strong tendency to far over-simplify and dogmatize this issue here.

I repeat, if you have reason to believe that a series does not have a strong signal to noise ratio, then YOU DON’T INCLUDE IT in the sample. It is NOT a type of cherry picking. It IS a recognition of the fact that your proxy has a **non-constant ability to estimate the signal of interest**. It is non-constant because the noise element is non-constant.

Steve: Esper 2002 is the only multiproxy study to use the “Polar Urals Update” – which has very different properties from Yamal. However it uses two stripbark sites. The only two studies of this vintage that had large populations were MBH and Briffa 1998. Specialists should have paid far more attention to what caused the differences.

At the NAS workshop in 2006, Hughes made an interesting distinction between what he called the Schweingruber and Fritts approaches to reconstruction. He characterized the Schweingruber approach as picking sites ex ante and using relatively simple methods, and the Fritts approach as dumping everything into a network and relying on software to sort it out. It is clear which tradition Mann fits into. It was too bad the NAS panel didn’t discuss this point.

My own instinct on this is to prefer what Hughes called the “schweingruber” approach, as I hope is clear from my comments.

Schweingruber knows tree rings like no other. Literally, I doubt that anybody knows as much about tree rings as environmental indicators as he does–the man is a walking encyclopedia, as is clear from his book. And of course Jan Esper originates from that same WSL lab, so he doubtless absorbed much of that knowledge.

Fritts made an important contribution with his introduction of PC analysis to regional collections in his 1971? MWR article, but such higher level analyses depend directly on the lower level fundamentals of how well the proxy responds to the environment in the first place, i.e., on the kind of information that Schweingruber is an expert in. Don’t lay higher level sophistication on top of a poor foundation; make sure the foundation’s solid first or it’s just lipstick on a pig.

So, I’m with you. Fritts–>Mann and Schweingruber–>Esper

“You are right on the data exclusion issue. If you have reason to know that a particular series is not responsive to the env param of interest, then you DON’T USE IT. People have a strong tendency to far over-simplify and dogmatize this issue here.

I repeat, if you have reason to believe that a series does not have a strong signal to noise ratio, then YOU DON’T INCLUDE IT in the sample. It is NOT a type of cherry picking. It IS a recognition of the fact that your proxy has a **non-constant ability to estimate the signal of interest**. It is non-constant because the noise element is non-constant.”

I think perhaps you are oversimplifying and dogmatizing an issue here, Jim. If your decision to retain or exclude a proxy series comes after looking at its correlation with the instrumental period, you have created a problem in statistically evaluating the results of your reconstruction. It sounds much like hand waving when you say to exclude a series that you have reason to believe is not responding to temperature (in the case of a temperature reconstruction). How is that reason determined and quantified? If you have no knowledge of a proxy series being suitable for inclusion other than its relationship to the instrumental record, you cannot validate, back in time, a proxy series that shows a “proper” response to the instrumental temperatures. All you know is that the general proxy type you are working with sometimes relates to temperature and sometimes does not. That being the case, that proxy type should not be a candidate for a reconstruction.

Re: Jim Bouldin (Apr 16 21:34),

Jim Bouldin, I am unsure what you intend to reference in the editorial remark I italicized supra.

As you are aware, the scope of the paleo literature is broad. With limited expertise and time, I’m most intimately familiar with the employment of the Lake Korttajarvi (Tiljander) data series in Mann08, Mann09, and other papers. Since (1) Tiljander has come up earlier in this thread as an example of post-hoc selection (cherry picking) and (2) you have engaged on the Tiljander issue in prior Climate Audit threads, I will comment.

The assertion that literate, numerate skeptical commenters at Climate Audit have shown a tendency to far over-simplify and dogmatize this issue is incorrect.

It is false.

The employment of the Tiljander data series by Mann08 and Mann09 raised numerous issues about the state of paleoclimatology. The failure of those authors and the editors of those journals (PNAS and Science) to acknowledge and correct the glaring errors in that employment raises further issues about the extent to which paleoclimatology, as currently practiced, conforms to the established norms of the physical sciences.

There are limits to what one can expect from a motley assembly of commenters at a lightly-moderated blog. But there is a touch of the ridiculous in blaming such “skeptics” for recognizing shortcomings in the practices of professional, published, mainstream pro-hockey-stick dogmatists.

Jim,

you’re sort-of entering in the middle of a longstanding conversation here. One point that is universally agreed upon at “skeptic” blogs – a term that doesn’t accurately characterize this blog – is that you can consistently get Sticks from red noise networks by screening data for temperature correlation. This point has been reported (more or less independently) by me, Lucia, David Stockwell, Lubos Motl and Jeff Id. The method is therefore biased. Thus, results obtained by application of a biased method to noisy empirical datasets are also biased.

Recognition of this phenomenon contributes to the insistence on not screening data ex post for temperature correlation.
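For readers who want to see the screening effect for themselves, here is a minimal sketch in Python. It is not the code used by any of the people named above. Everything in it is an assumption chosen for illustration: the AR(1) coefficient `phi=0.9`, the 50-year "calibration" window, the linear ramp standing in for an instrumental record, the `r_min=0.3` cutoff, and all the function names. The series contain no signal at all, yet the screened average still acquires a rising blade.

```python
import random


def ar1_series(n, phi, rng):
    """One AR(1) red-noise series of length n; it contains no temperature signal."""
    # Start from the stationary distribution so there is no start-up transient.
    x = [rng.gauss(0.0, 1.0) / (1.0 - phi * phi) ** 0.5]
    for _ in range(n - 1):
        x.append(phi * x[-1] + rng.gauss(0.0, 1.0))
    return x


def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


def mean_composite(network):
    """Simple year-by-year average of all series in the network."""
    return [sum(year) / len(year) for year in zip(*network)]


def blade(composite, n_cal, n_end=10):
    """Mean of the last n_end years minus the mean of the pre-calibration years."""
    pre = composite[:-n_cal]
    end = composite[-n_end:]
    return sum(end) / len(end) - sum(pre) / len(pre)


def run_screening_demo(n_series=500, n_years=200, n_cal=50,
                       phi=0.9, r_min=0.3, seed=0):
    """Screen a pure-noise network against a rising target and average the survivors."""
    rng = random.Random(seed)
    network = [ar1_series(n_years, phi, rng) for _ in range(n_series)]
    # Hypothetical "instrumental" record: a linear ramp over the calibration window.
    target = [i / (n_cal - 1) for i in range(n_cal)]
    # Ex post screening: keep only series that correlate with the target.
    passed = [s for s in network if pearson(s[-n_cal:], target) > r_min]
    return {
        "n_passed": len(passed),
        "target": target,
        "screened": mean_composite(passed),
        "unscreened": mean_composite(network),
    }


if __name__ == "__main__":
    result = run_screening_demo()
    print("series passing the screen:", result["n_passed"])
    print("blade, screened composite:  ", round(blade(result["screened"], 50), 2))
    print("blade, unscreened composite:", round(blade(result["unscreened"], 50), 2))
```

The design is deliberately crude: a plain average and a fixed cutoff. The point is only that the blade comes from the selection step, since nothing else in the script knows about the target.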

Acceptance of this principle does not commit one to “having” to use contaminated data e.g. the modern portion of the Tiljander data.

The fact that Mt Logan d18O doesn’t conform to other Arctic d18O series does not seem to me to be a valid reason to exclude it from an Arctic composite. Instead, the difference should be analysed. One reason for the different behavior may simply be that it is the only long series in the hemisphere between 90E and 90W. I do not see that this position is in any way inconsistent with requiring contaminated data to be excluded.

The issue with Graybill bristlecone chronologies is subtler. There I’ve mainly observed that the multiproxy results in canonical studies are unduly sensitive to this one proxy and that specialist opinion was that the 20th century growth spurt in Graybill chronologies was not due to temperature. If they are believed to be a uniquely sensitive recorder of world temperature, then the botanical basis of this uniqueness should be carefully studied and understood so that we can properly worship these chronologies.

I’ve never tried to propose an overall policy on when data should be used or not used. Nor do I expect that one exists. However, that doesn’t mean that one can’t decide individual cases. If this analogy helps (and it probably doesn’t), it’s like a “common law” approach to tort rather than a Napoleonic code.

Not this whole red noise argument again.

The whole issue of red noise giving potentially spurious correlations is way overblown, I’ve said that consistently from the first that I heard of it. I’ve never believed that to be an important issue in dendroclimatology. For starters, just because a series is autocorrelated doesn’t mean it’s a red *noise* series, and I have no idea why people automatically assume it does, but they do. That observed autocorrelation might very well be due to a cause and effect relationship with the environmental driver, and the only way to test for it is by looking at the correlation therewith.

I’d like to know how you’re going to determine what the relationship with climatic drivers is without looking at the correlation with instrumental data.

Jim, I’m not sure that you understand the red noise argument as it’s been presented here, as opposed to how Mann and his acolytes have characterized it.

For what it’s worth, Mann employed red noise simulations in proposing significance levels for his verification statistic (RE), as did Wahl and Ammann. Indeed, buried within the SI of Wahl and Ammann 2007 (not released until long after) are confirmations of key results of ours.

I never argued that everything was “just” red noise. The bristlecone chronologies are not “just” red noise. What I pointed out in connection with Mannian PCs (but a similar argument applies to correlation screening) was that the method was severely biased – it was so biased that it could produce hockey sticks out of red noise. We then looked at what was overweighted when the biased method was applied to empirical data: thus the bristlecones and the CENSORED directory. Then the issue was whether the Graybill chronologies had magical abilities to discern world temperatures and, if so, why. We also pointed out that high RE values could be generated by application of the biased Mann methodology when applied to networks of red noise: a highly relevant comment when assessing “statistical skill”.

Nor do I believe that dendro-chronologies are “just” red noise. I’m not as nihilistic as some readers. I think that there is valuable information, that the datasets are extremely interesting and that there are important challenges to dendro – as you do.

It seems to me that the dominant faction in the community has not squarely faced these challenges – again a point on which we are probably in agreement. Unfortunately, I think that the policy of the community in joining ranks against fairly simple points from Ross and me has probably prevented them from progressing on these points.

In some ways, I think that the contrasting reactions to Mann et al 1998 and Briffa et al 1998 symptomize a path not taken. These two studies were the only two large-population reconstructions (and both were dendro, despite Mann’s veneer of “multiproxy”). Why the Briffa composite went down (the divergence problem) while Mann’s went up should have been a topic of great interest to specialists. Unfortunately, purported explanations of the phenomenon have been almost entirely disinformation, aimed at marginalizing the divergence problem and saving Mann’s results. Briffa’s results were characterized as being only from a “small” population, while Mann’s were said to be from a more broadly based sample. In fact, the opposite was the case. Mann’s Stick came from the bristlecones and was very narrowly based, while Briffa’s results (based on the large Schweingruber network) were the more representative. Mann went from success to success, while Briffa had trouble even getting funding.

Jim Bouldin, we cannot draw meaningful conclusions from the fact that methods that are biased to produce certain results produce those results. If a method extracts a particular result from noise, it means nothing when the method produces that particular result.

Who do you think has said we shouldn’t look at how series correlate with instrumental data? I’ve never seen anyone say anything of the sort.

What Steve McIntyre and dozens of others say is we shouldn’t screen by that correlation. Some authors do that screening directly (e.g. Gergis), whereby series with bad correlation are discarded. Others do it via aspects of their methodology whereby series with good correlation are given extra weight (e.g. Mann).
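The weighting route can be sketched the same way as outright screening. The Python below is only a toy illustration of the generic idea of giving extra weight to series with good correlation. It is not Mann’s method or anyone else’s published algorithm. The weights (correlation with a hypothetical rising target, floored at zero), the AR(1) parameters and the function names are all assumptions made for the example. The input is again pure red noise.

```python
import random


def ar1_series(n, phi, rng):
    """One AR(1) red-noise series of length n; it contains no temperature signal."""
    x = [rng.gauss(0.0, 1.0) / (1.0 - phi * phi) ** 0.5]
    for _ in range(n - 1):
        x.append(phi * x[-1] + rng.gauss(0.0, 1.0))
    return x


def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


def weighted_composite(n_series=300, n_years=200, n_cal=50, phi=0.9, seed=1):
    """Average a pure-noise network, weighting each series by its calibration correlation."""
    rng = random.Random(seed)
    network = [ar1_series(n_years, phi, rng) for _ in range(n_series)]
    # Hypothetical "instrumental" record: a linear ramp over the calibration window.
    target = [i / (n_cal - 1) for i in range(n_cal)]
    # Soft screening: no series is discarded outright, but a series counts
    # only in proportion to its positive correlation with the target.
    weights = [max(pearson(s[-n_cal:], target), 0.0) for s in network]
    total = sum(weights)
    composite = [
        sum(w * s[t] for w, s in zip(weights, network)) / total
        for t in range(n_years)
    ]
    return composite, target


if __name__ == "__main__":
    composite, target = weighted_composite()
    pre_mean = sum(composite[:150]) / 150
    end_mean = sum(composite[-10:]) / 10
    print("pre-calibration mean:", round(pre_mean, 2))
    print("mean of last 10 years:", round(end_mean, 2))
```

Nothing is thrown away here, which is why this route is easier to overlook than explicit screening, but the weights are still set by the same ex post correlation.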