Anti-lukewarmers/anti-skeptics have a longstanding challenge to lukewarmers and skeptics to demonstrate that low-sensitivity models can account for 20th century temperature history as well as high-sensitivity models. (Though it seems to me that, examined closely, the supposed hindcast excellence of high-sensitivity models is salesmanship, rather than performance.)

Unfortunately, it’s an enormous undertaking to build a low-sensitivity model from scratch and the challenge has essentially remained unanswered.

Recently a CA reader, who has chosen not to identify himself at CA, drew my attention to an older generation low-sensitivity (1.65 deg C/doubling) model. I thought that it would be interesting to run this model using observed GHG levels to compare its success in replicating 20th century temperature history. The author of this low-sensitivity model (denoted GCM-Q in the graphic below) is known to other members of the “climate community”, but, for personal reasons, has not participated in recent controversy over climate sensitivity. For the same personal reasons, I do not, at present, have permission to identify him, though I do not anticipate him objecting to my presenting today’s results on an anonymous basis.

In addition to the interest of a low-sensitivity model, there’s also an intrinsic interest in running an older model to see how it does, given observed GHGs. Indeed, it is a common complaint on skeptic blogs that we never get to see the performance of older models on actual GHGs, since the reported models are being constantly rewritten and re-tuned. That complaint cannot be made against today’s post.

The lower sensitivity of GCM-Q arises primarily because it has negligible net feedback from the water cycle (clouds plus water vapour). It also has no allowance for aerosols. [Update: July 22. Aerosols impacted the calculation shown here as the RCP4.5 update column is CO2 equivalent, which included aerosols though they were not listed as a separate column.]

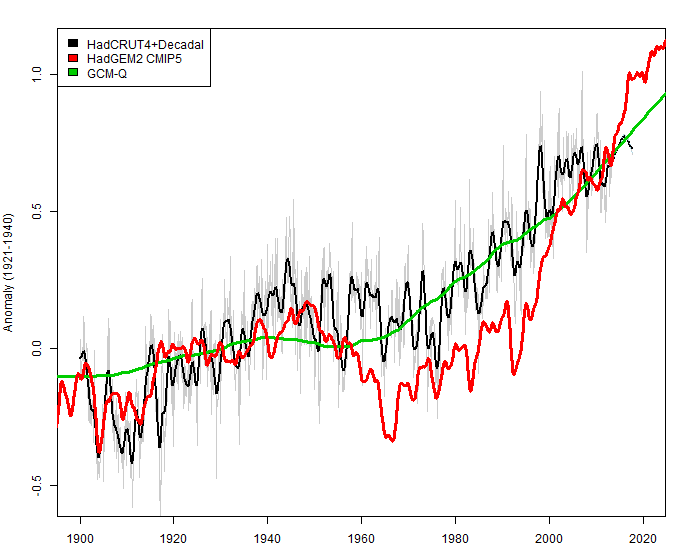

In the graphic below, I’ve compared the 20th century performance of high-sensitivity HadGEM2 RCP45, the UK Met Office contribution to CMIP5 (red), and low-sensitivity “GCM-Q” (green) against observations (HadCRUT4-black). In my opinion, the common practice of centering observations and models on very recent periods (1971-2000 or even 1986-2005 as in Smith et al 2007; 2013) is very pernicious for the proper adjudication of recent performance. Accordingly, I’ve centered on 1921-1940 in the graphic below.

On this centering, HadGEM2 has a lengthy “cold” excursion in the 1960s and too rapid recent warming, strongly suggesting that aerosol impact is overestimated and that this overestimate has disguised the effect of too high sensitivity.

Figure 1. Black – HadCRUT4 plus (dotted) decadal HadGEM3 to 2017. Red – HadGEM2 CMIP5 RCP45 average. Green – GCM-Q average. All centered on 1921-1940. 25-point Gaussian smooth.
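To make the centering point concrete, here is a minimal sketch in Python. The two series are made-up straight lines, not HadCRUT4 or any model output, and `center_on` and `gap_in` are hypothetical helper names. It only illustrates the arithmetic: a model that warms faster than observations shows a much smaller end-of-period gap when both are centered on a late baseline than when both are centered on an early one.

```python
def center_on(years, values, start, end):
    """Subtract the mean over the baseline [start, end] (inclusive) from every value."""
    base = [v for y, v in zip(years, values) if start <= y <= end]
    if not base:
        raise ValueError("no data in baseline period")
    offset = sum(base) / len(base)
    return [v - offset for v in values]

# Toy series (invented for illustration): "obs" warms 0.005 deg C/yr,
# "model" warms 0.010 deg C/yr.
years = list(range(1900, 2001))
obs = [0.005 * (y - 1900) for y in years]
model = [0.010 * (y - 1900) for y in years]

def gap_in(year, start, end):
    """Model minus obs in `year`, after centering both on [start, end]."""
    i = years.index(year)
    m = center_on(years, model, start, end)[i]
    o = center_on(years, obs, start, end)[i]
    return m - o

early = gap_in(2000, 1921, 1940)  # early baseline: divergence is fully visible
late = gap_in(2000, 1971, 2000)   # late baseline: most of the divergence is absorbed
```

With these toy numbers the year-2000 gap is roughly five times larger on the 1921-1940 baseline than on the 1971-2000 baseline, even though the underlying series are identical.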

Although the close relationship between GCM-Q and observations in the above graphic suggests that there has been tuning to recent results, I have verified that this is not the case and re-assure readers on this point. (I hope to be able to provide a thorough demonstration in a follow-up post.)

I hope to provide further details on the model in the future. In the meantime, I think that even this preview of GCM-Q shows that it is possible to provide a low-sensitivity account of 20th century temperature history. Indeed, it seems to me that one could argue that GCM-Q actually outperformed HadGEM2 in this respect.

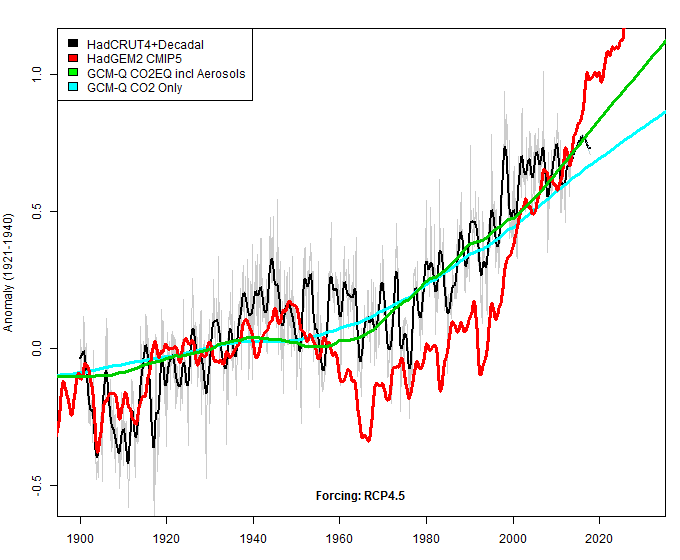

Update July 22: Forcing for GCM-Q was from RCP4.5 (see here zip). The above diagram used CO2EQ (column 2) defined as:

CO2 equivalence concentrations using CO2 radiative forcing relationship Q = 3.71/ln(2)*ln(C/278), aggregating all anthropogenic forcings, including greenhouse gases listed below (i.e. columns 3,4,5 and 8-35), and aerosols, trop. ozone etc. (not listed below).

Column 4 is CO2 only. The CO2EQ and CO2 column 4 forcings are compared in the diagram below in the same style.
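For readers who want to play with the quoted relationship, here is a minimal Python sketch. The function names are hypothetical, and the warming function assumes that equilibrium warming scales linearly with forcing, with no ocean lag. It is not a description of how GCM-Q itself works.

```python
import math

F2X = 3.71   # W/m^2 per CO2 doubling, from the quoted relationship
C0 = 278.0   # ppm reference concentration in the quoted relationship

def co2eq_forcing(c_ppm):
    """Forcing Q = 3.71/ln(2) * ln(C/278), in W/m^2."""
    return F2X / math.log(2.0) * math.log(c_ppm / C0)

def equilibrium_warming(c_ppm, sensitivity=1.65):
    """Equilibrium warming in deg C for a sensitivity given in deg C per doubling.

    Assumption: warming is proportional to forcing (no transient lag).
    """
    return sensitivity * co2eq_forcing(c_ppm) / F2X

q_doubling = co2eq_forcing(2 * C0)        # forcing at 556 ppm CO2-equivalent
t_doubling = equilibrium_warming(2 * C0)  # warming at 556 ppm CO2-equivalent
```

By construction, a doubling from 278 ppm returns 3.71 W/m^2 and 1.65 deg C at the default sensitivity.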

203 Comments

Why use HadCRUT4 when it is plainly obvious that they cooled the past and warmed the recent period once again in order to diminish the discrepancy between the data and the models?

http://www.woodfortrees.org/plot/hadcrut3gl/from:1997/trend/plot/hadcrut4gl/from:1997/trend/plot/none

an even more illustrative graph

http://www.woodfortrees.org/plot/hadcrut3gl/from:2001/to/plot/hadcrut4gl/from:2001/to/plot/none

Steve: the difference is not material to today’s post.

Superb. Thank you Steve and whoever else made this possible. I look forward to more on the lack of tuning against recent temperatures. At that point surely all bets are off. No aerosols? Is this the control to show how much was vacuous in more complex models? And that low sensitivity cannot be ruled out by those models? Can’t wait.

Steve, your comment on centering raises an interesting question – what does that graph look like with different centering options? In theory, I would have thought that since we’re talking anomalies from a baseline, the choice of baseline shouldn’t have that much impact. Your graph implies otherwise.

I also noticed that you used a twenty year period for the baseline. Is there a reason why a thirty year period wasn’t used? Would that have made much of a difference to the graph?

Steve: No.

Isn’t there a rather strong argument that Steve should choose a centering policy that he thinks, a priori, makes sense then sticks to it? I certainly have always felt uneasy about a more recent interval being used. But surely the most important thing, if you’re going to compare models fairly, is to take a reasonable view and stick to it.

what are the verification stats?

I have always wondered why a plot of model vs reality isn’t used. A perfect model should give a slope of 1. If there is a change in the correlation between hindcast and forecast one can decide how much of the hindcast is a ‘fit’ and not a true model.
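A rough sketch of that idea in Python, using invented numbers rather than any real model or observational series. `ols_slope_intercept` is a hypothetical helper that regresses observations on model output by ordinary least squares. A slope near 1 would mean the model's changes match the observed changes in size; a slope of 0.5 would mean the model changes twice as much as the observations.

```python
def ols_slope_intercept(x, y):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mx = sum(x) / n
    my = sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    if sxx == 0:
        raise ValueError("x has no variance")
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx

# Toy anomalies (invented): the "model" warms twice as fast as the "observations".
model = [0.0, 0.2, 0.4, 0.6]
obs = [0.0, 0.1, 0.2, 0.3]

slope, intercept = ols_slope_intercept(model, obs)
```

Running the same fit separately on the hindcast years and on the later out-of-sample years would give the two slopes the comment proposes comparing.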

In comments to your last post, Nic L (Lewis?) pointed out that HadGEM2 has an exceptionally high climate sensitivity and also has high negative aerosol forcing level.

Given this, the results above are hardly surprising, though visual representation is interesting.

These graphs are feeling increasingly like Rorschach tests.

I remember when spaghettis grew on trees. Now they mostly occupy climate blogs.

Wot a scare when reported the spaghetti crop failed!!1

If you like graphical patterns, or even Rorschach patterns, try

https://www.google.com.au/search?q=global+temperature+UAH+GISS+Hadcrut+RSS&client=firefox-a&hs=pjX&rls=org.mozilla:en-US:official&source=lnms&tbm=isch&sa=X&ei=TRXoUf6WMKjwiQfg-YGQCQ&ved=0CAkQ_AUoAQ&biw=1336&bih=1344

(h/t to Jo Nova).

After some years of watching these temperatures, different features will become apparent to different readers. For me, in general, the period 1940-70 started off with much more of a negative slope than it now has. It’s a type of bellwether for adjusters, some of whom might like to see cooling disappear completely from the record.

What does the model do after 2020?

Steve – I asked this on Goddard’s site too. As someone who analyzes the data as much and as thoroughly as you do, I thought I’d ask you also.

Steve – I follow your site daily and was hoping you might help out on a question I have. As you have shown over and over again, past temperature records have been adjusted often, usually cooling the past and warming the recent present.

On Climate Audit there is an article about hindcasting. I started wondering if there was more to the adjustments than trying to make it look like unprecedented warming is occurring.

Specifically, I was wondering if anyone was tracking the many hindcasts to see if they are getting more accurate as a result of the many adjustments to the temperature record versus better modeling assumptions.

I was wondering if the adjustments to the temp records are also meant to better align the GCM hindcasts, in addition to trying to make the warming seem serious? In other words, are they trying to increase the validity of their models by showing how well the hindcasts match the adjusted historical temp record, thus validating the GCMs?

Just a thought I had when looking at some hindcast charts at CA.

Thanks John

Hmmm…. are there instances of a dance of “mutual tuning” where both models and temp. data have been “adjusted” over time (whether or not consciously) by different groups and research programs, resulting in temp. records and models that are more “tuned” to each other over multiple steps??

It could be a really important issue, although hard to prove. One could look at the dates of when each model update and temperature record “adjustment” has been released, but the complexities of the comparisons might be challenging.

Interesting.

From the looks of the result it would appear to be a low dimensional model. That’s not bad, but it does illustrate something. If it is a low dimensional model and it fits the data better, then one has to ask what the operational benefit is of higher dimensional models. It would be interesting to see what would happen if one were to add Leif Svalgaard’s new TSI forcing to the mix. Basically it would give you some higher frequency information in the green curve, as would adding volcanic forcing.

If it is a low dimensional model and it fits the data better, then one has to ask what the operational benefit is of higher dimensional models

This effect (simpler models giving better results) is well known among information system performance modelers. Constructing over-elaborate models that give no improvement in accuracy is referred to as “over-modeling.”

This effect (simpler models giving better results) is well known among information system performance modelers.

Same thing with neural nets. It is a form of over-fitting. As you try and improve the hindcast you quickly reach a point where this harms the forecast. In effect you are making the model more sensitive to initial conditions, leading to chaotic results which quickly diverge from reality.

I’d call this ‘Ockham’s Model’ but have an ugly feeling my ignorance is leading me into an inapt analogy.

================

and new car owners BTW.

Many parameters being monitored is potentially a good thing, but (when taken too far, e.g. the garage making money) it also gives the motorist too much data/errors (RED WARNING – STOP etc…) when you only want to drive the thing from A to B !!!

snip if O/T

IMHO your question is a mistake. A century of surface temperatures is not “the data” that you should be trying to fit. The HadGEM2 model is (I think) designed to predict a lot of climate variables over a fairly short timespan. -That’s a guess, I don’t think they’ve said so explicitly-.

It isn’t hard to fit a century of temperature data well, especially if you aren’t too picky. We’re happy, right, if it goes down _here_ and up _here_ and then flattens out and then rises toward the end? Not difficult. It is a much more difficult problem to track many climate variables accurately for a decade.

I don’t really believe that climate science is at the point where it can predict anything for a century, and models that try to do so are overfitting by design.

“…It isn’t hard to fit a century of temperature data well…”

Oh, really? No one has done it yet (barring this one, possibly). They’ve been at it for 30 years now.

Huh – I don’t know what you mean. Every working climate model does more-or-less what I said. Ensembles of model averages do even better. As long as you don’t insist on following all the little wiggles, they go up and down in the right places. As one of the modelers wrote recently, http://onlinelibrary.wiley.com/doi/10.1029/2012MS000154/pdf, “Climate models ability to simulate the 20th century temperature increase with fidelity has become something of a show-stopper as a model unable to reproduce the 20th century would probably not see publication, and as such it has effectively lost its purpose as a model quality measure.”

It’s not that hard. What’s hard (I imagine impossible at the current level of art) is doing it without overfitting.

In this plot, it looks as though the green curve tracks the man, even though it misses a few excursions of the dog on the leash. (From a recent post on Climate, etc.)

Taking a wild stab in the dark, my guess is that the “Q” in “GCM-Q” stands for “Quelle”, or “source”, and that the model is one of the earliest ones developed under Smagorinsky at NOAA-GFDL.

(Smagorinsky doesn’t participate in current sensitivity discussions, at least partly because he died in 2005.)

But I’m probably wrong.

Perhaps ‘Q’ of Star Trek fame?

The techie gadget-inventing “Q” of James Bond movie fame??

We just need The Traveler to do his thing.

Or all the way back to Norman Phillips in 1956?

How about Hubert Lamb from the early 80’s?

Steve!

What does it look like, and what is the correlation, with CO2 included at a suitable scaling?

Steve,

Just to clarify.

Is this GCM-Q output precalculated by a computer of your anonymous contact and handed to you, or is this model compact enough that you ran it on your home PC?

Well, retirement does happen personally.

==========

But old code never dies.

…it just reaches equilibrium.

Results of an anonymous model that assumes that aerosols have zero impact, no information on the model design (coupled ocean?, 3-dimensional atmosphere?, resolution?) and we are supposed to take this seriously? Seriously?

Yup, it was deficient alright.

=============

Who’s “we” ?

If Steve says he believes he hasn’t had the wool pulled over his eyes, that’s certainly good enough for me to consider the main proposition (and implications) of the post. YMMV.

Twice the post states that follow-up information may be on its way.

If that information doesn’t appear, adjust your personal level of (dis)belief accordingly. That’s what I’ll do.

(“A model of the solar system that excludes both the equant and the deferent? Tosh.”)

“A model of the solar system that excludes both the equant and the deferent?”

brilliant!!

Richard,

You forgot “not unprecedented, accelerating, or worse than we thought.” 🙂

Richard,

The question is this. If you have a model that doesn’t consider aerosols and it has a skill of X, then you add our best understanding of aerosols and your skill decreases, what does that tell you?

Here is how we built high fidelity physics models. We started with the physics which we knew best. We tested the model. We measured the skill. Then folks started to tackle those less well known aspects.

NOTHING got added to the model unless it improved the skill. Of course that meant that sometimes you had missing physics. But replacing missing physics with physics that get a worse answer ISNT HELPING.

Of course there were other guys who tried to build models from first principles, guessing where they had to, making up parameterizations here and there. Tuning and twisting knobs to make it all work. And in the end, millions of dollars later, they had something with half the skill of what was known decades before they started.

Steve Mo –

You know more about this than I do, but leaving out the aerosols isn’t necessarily wrong, if there is something that is offsetting the aerosols. The serious dearth of knowledge about the effect(s) of water vapor and the guessed-at forcing by CO2 are both in the opposite direction (at least as I understand from what’s gone down in the past).

Leaving out the aerosols seems in some ways to simply do some balancing of the probable overvaluation of CO2. Perhaps all this is doing is showing the magnitude of that overvaluation. I.e., the magnitude is roughly equal to the aerosol effect. By cutting down the sensitivity maybe that effect now has a (nearly) real world value.

It might be interesting to play with that sensitivity value in THIS model – to watch the output vary in both directions. Each variation would be in essence playing with hypothetical aerosol effects.

Of course there were other guys who tried to build models from first principles, guessing where they had to, making up parameterizations here and there.

The association of ‘first principles’ with ‘guessing’ and ‘making up’ and ‘parameterizations’ is not correct. None of the latter three are associated with first principles.

“If you have a model that doesn’t consider aerosols and it has a skill of X, then you add our best understanding of aerosols and your skill decreases, what does that tell you?”

This isn’t what has been done here. We don’t have a comparison of one known model that includes aerosols with a second unknown model that ignores aerosols. There are any number of reasons for the difference in the shape of the global temperature curve, not simply the inclusion/exclusion of aerosols.

All models have a trade-off between complexity and bias. Model performance may be improved by omitting poorly constrained physics describing components of minor importance, but if the model is omitting an effect thought to be over 50% of the CO2 forcing, there is a problem http://www.ipcc.ch/publications_and_data/ar4/wg1/en/tssts-2-5.html

Certainly aerosols are not the best constrained forcing, but setting them to zero is inconsistent with observational constraints. From Otto et al (2013) “the discussion around the appropriate observational constraints on aerosol forcing is key to determine the consistency of the CMIP models with current temperature and heat uptake observations.”

The very low inter-annual variability in the unknown model suggests that it is not capable of modelling ENSO and other interannual variability at all (or that the output has been massively smoothed). This is not good.

‘Certainly aerosols are not the best constrained forcing, but setting them to zero is inconsistent with observational constraints. From Otto et al (2013) “the discussion around the appropriate observational constraints on aerosol forcing is key to determine the consistency of the CMIP models with current temperature and heat uptake observations.”’

Let’s see. You have a model that fits the temperature data better. That model was not tuned to temperatures. It sets aerosols to zero. You have other models that do not set aerosols to zero and are inconsistent with the very observational metric they are tuned to. That suggests:

A) perhaps the observational constraints are wrong. we see no reason to reject models for missing the hot spot and in fact use that mismatch to suggest that observations are wrong.

B) perhaps the treatment of aerosols is wrong and so wrong in fact that you get a better answer ignoring the effect altogether. This is a COMMON problem in physics modelling.

Finally, Richard, I do not see you pounding the drum to stop the use of models in CMIP 5 that have ZERO volcanic forcings or those models that include volcanic forcing by adding it to TSI (FGOALS, I believe).

“Certainly aerosols are not the best constrained forcing, but setting them to zero is inconsistent with observational constraints.”

I am not so sure that the observational constraints don’t allow one to set the aerosol forcing to zero. Some aerosols like black carbon are more absorbing than reflecting and help the warming. But many are brownish, as is the case for the whole of India, where the temperatures go up faster than in the nearby area of the SH. The black/brown aerosols do deposit on snow and ice, where they help to melt glaciers, as is the case in the Himalayas, Greenland and North Pole ice.

Thus I see no reason why the overall influence of human-made aerosols can’t be zero or even warming…

“All models have a trade-off between complexity and bias. Model performance may be improved by omitting poorly constrained physics describing components of minor importance, but if the model is omitting an effect thought to be over 50% of the CO2 forcing, there is a problem.”

Possibly there is a problem but is the problem with what is “thought to be over 50% of the CO2 forcing” or with the model which omits it? It must be nice to have an opinion as to these things which can’t be questioned, but, at least at this moment, the model seems to be out-performing the opinion. Seriously.

@bmcburney

Seriously, read the update. The model does include aerosols in the forcings.

“Here is how we built high fidelity physics models. We started with the physics which we knew best.”

Starting with the physics you know best isn’t the same thing as starting with the physics that’s most important to the problem at hand. The physics that’s most important to the problem at hand is, and always has been, the water cycle, and it’s the part of the models where the proper physics is least well known and least well implemented. Until such time as this is fixed, all else is window dressing.

Skill cannot be well-determined by hindcasting; adding physics which increases skill at hindcasting while omitting physics which decreases skill is just another form of fitting masquerading as something it’s not. You cannot tell whether the added skill is spurious or not until you test the model via forecast; a test which all present models have conspicuously failed.

That’s true too. But we need a lot of time – centuries perhaps – to sort all this out. And the deadline for AR5 has already passed. There’s a mismatch of another kind there.

Fortunately, climate is a slow process, so we have time (assuming we can keep the politicians at bay).

Also, I doubt it will take centuries. Decades, perhaps, but no more. The high resolution GCM’s have actually gotten amazingly good at forecasting weather on the short term, and weather predictions are easily testable. If we can increase their skill to such a point where the parameters which impact climate (such as CO2 sensitivity) also have an impact on day-to-day skill at weather prediction we should have much better tests of their values.

I would call that – weather predictions shedding light on the guts of climate evolution in a decade or two – getting very lucky. But I hope you’re right.

“The physics that’s most important to the problem at hand is, and always has been, the water cycle, and it’s the part of the models where the proper physics is least well known and least well implemented. Until such time as this is fixed, all else is window dressing.”

thats funny. go figure out why.

“model was not tuned to temperatures”

You have no evidence of that. Assuming from the lack of any inter-annual variability that this is an old model, the best that can be said is that it has not been tuned to the last decade or so of temperature. This model probably has flux corrections and all sorts of fun.

“I do not see you pounding the drum to stop the use of models in CMIP 5 that have ZERO volcanic forcings”

From the CMIP5 experimental design

“Note that with the exception of experiment 1.3, aerosols from observed volcanic eruptions should be included in all of the simulations.” http://cmip-pcmdi.llnl.gov/cmip5/docs/Taylor_CMIP5_design.pdf

So which models have zero volcanic forcing for the historic runs?

“models that include volcanic forcing by adding it to TSI”

What would be so horrendously wrong with that? Obviously it is not ideal, but would seem to be a reasonable first-order approximation of the effect of stratospheric sulphate particles.

Mosh wrote: “Here is how we built high fidelity physics models. We started with the physics which we knew best. We tested the model. We measured the skill. Then folks started to tackle those less well known aspects. NOTHING got added to the model unless it improved the skill. Of course that meant that sometimes you had missing physics. But replacing missing physics with physics that get a worse answer ISNT HELPING.”

This passage reeks of confirmation bias. Are you actually saying that you would never add a module to a model that didn’t increase the skill no matter how well-understood the physics or how well-tested it was in the laboratory? Perhaps the impression of confirmation bias will dissipate with further explanation.

The problem with GCMs is that they contain far too many uncertain parameters to optimize while avoiding the problem of tuning the “wrong parameter” to get the “right result”. Even if one had ideal values for all of the parameters, one could still get the “wrong result” because large cells can’t properly represent phenomena occurring on smaller scales. Further complicating the problem is that the uncertainty in measured 20th century warming (with homogeneity adjustments hypothetically “correcting” undocumented problems in station records) is wide enough to make it unclear whether the green or red model is a better fit for the observed temperature. Refining a model is always easier when the “correct answer” is precisely known.

I would suggest that no matter how well you understand it on paper, if it makes the model worse, then there is some key aspect you are missing. Therefore, no, I would not add it to the model because you obviously don’t understand it as well as you thought you did. (The system as a whole, that is). Maybe you can only add it once you understand something else related to it (a feedback for example). So adding that one well understood thing without adding its counter-part makes the model worse.

Aerosols have the biggest error bars of anything in the models and have a huge range from model to model.

Hansen? Giss Model 2?

steve, seems to be a problem with browsing on iphones (text all off to the left and unreadable)

Same here (Android / Chrome) for a while. If you have the option, in Chrome you can select menu -> Request desktop site.

Alternatively, at the very bottom of the page on the mobile site there is a link “view full site” – follow that link to get the full version.

Alternatively, at the very top of the page we need a command to shift references to Real Climate and Skeptical Science to the left, right off the screen.

Tks for the fix, I had the problem on a new tablet.

I’ve analyzed the code and the identifying personal idiosyncrasies are there. Now, I didn’t say whose code, did I?

================

A basic question about this “low sensitivity” model – what sensitivity does it assume?

Steve mentions the rather definite figure of 1.65 deg C/doubling – with no differentiation made between transient response and that elusive equilibrium version. Was this precocious little fellow coded before such a distinction was fashionable?

The only way for a GCM to not be tuned to the observations would be for the modeler to not have seen the global temperature record beforehand.

Painfully true.

Bah, me being blind. Sorry.

Well said Roy, and anyone with experience of numerical modelling will understand this. There are a multiplicity of tuning loops as well – not only the programmer, but also which changes get put forward for the trunk build, then which changes get publications to follow (something closer to the obs is more likely to get published), then which get incorporated into other models – the decisions of which will be tied to how well the changes improve the match. The propagation paths for tuning are numerous even though there is no “explicit” tuning taking place.

All of these loops are fast in comparison to the rate at which new climate data arrive, so the scope for tuning is massive and there is zero protection (that I have seen) against this.

I can assure you this modeler had NO ACCESS WHATSOEVER to the observation series it is compared to here. None. zero.

“I can assure you this modeler had NO ACCESS WHATSOEVER to the observation series it is compared to here. None. zero.”

This implies that the modeler is not of human origins or that it is an anthropogenic model that was constructed many years ago.

check your calendar

Oh, it’s probably da Vinci, or some Greek siege engineer.

===============

Yeah but (s)/he may have had access to a data set that was remarkably similar ™ climate science

The out-of-sample portion would be interesting (unfortunately I haven’t seen the date the model was “frozen”) but even this is subject to the stockpicker’s fallacy.

So it turns out our stock picker was one hell of a Guy. A lower sensitivity means falsification will take longer; but falsify it will.

Yup. Note that Canadian-born as well.

Spence’s capitalisation got me there in the end. The man’s been ahead of me in so many ways so that’s as it should be.

My initial thought was this model http://www.globalwarmingart.com/images/1/18/Arrhenius.pdf , but it appears to be high sensitivity.

Was this person ever a member of the Royal Society?

So what? He died before Hadcrut4 was developed? But then Hadcrut4 is pretty much the same as Hadcrut3 and NOAA and NCDC.

The only person in the climate debate I know who died is Stephen Schneider.

and this from his eulogy at Realclimate.

“Steve was also a pioneer in the development and application of the numerical models we now use to study climate change. He and his collaborators employed both simple and complex computer models in early studies of the role of clouds in climate change, and in research on the climatic effects of massive volcanic eruptions.”

I have seen an early video(mebbe ’70s) of Stephen Schneider in which he confessed that climate science didn’t know which way temperature was going. He has fooled a lot of people, but first he fooled himself.

=================

They may have seen it before it was adjusted though, if it is an older model.

I see that the green line hasn’t picked up the “pause” of the last 15 years (or so).

Plus, allowing for the fact that the past has been cooled and the present warmed by the “keepers”, I’d say that sensitivity of 1.65 is about ZERO. Rightly so.

That could be because it couldn’t anticipate blips like 1998. It also didn’t catch the downturn in 1976(ish). Or hindcast the 1940s peak. But now being to the low side of the post-2000 real world, it DOES suggest – if more or less valid – that the hiatus will continue for just about as long as it’s now gone on (now that it is close again).

The “25-point Gaussian smooth” should explain those “misses.”

(Looking at the red line, it really does look like someone tweaked and tweaked and tweaked and every tiny adjustment in either direction made the output go way out of whack up or down, and that they had to settle on something that didn’t satisfy anyone – but at least wasn’t going off into infinity or negative infinity.

I read once that that was a huge problem in the early days of the GCMs, and that they got in some Japanese hotshot who cured the vertical curve problem by putting in some crap that had nothing to do with the science – it just tamed the output. I always thought that was the most bull dung thing I’d ever heard of.)

Steve,

Is this the model you are referring to? http://www.q-gcm.org/

It looks like the model is small enough to run on a workstation under Fortran 77.

Steve: No. I chose the pseudonym and did not intend to overlap.

I have a question.

Where do the two models end up in the year 2100? Can you show the graph out to that date?

Test: Can this low order GCM or CMIP5 GCMs forecast/hindcast from half the data to the other half? See similar comparisons in Scafetta’s 2013 review. DOI 10.1260/0958-305X.24.3-4.455.

I have another question relating to models, but more general, I hope it’s OK.

Does anybody know whether models have to “pass a test” in order to be included in the IPCC ensemble? With The Pause, it seems like there are several (or many) of the ensemble that look way too hot. But I don’t ever recall reading about any metrics that would get a model kicked from the ensemble.

My own cynical thoughts are that

1) It’s bad PR to announce scrapping some models because they are too hot

2) It would get rid of the warmest century end projections and undermine the more alarming arguments.

3) Real-politik makes it difficult to kick out the UK, Australian and whoever contributions.

Are there scientifically based reasons to keep the warmest models in the ensemble?

I suspect that Steve is pulling our leg, and he will reveal that the model has little to do with actual climate modeling, yet produces historical results better than actual climate models.

Steve: it is a climate model.

Hahahaha!

Steve McIntyre?

Hahahaha!

No offense Steve M, but you pulling someone’s leg on this subject is so out of character. To have someone suggest it is a real hoot.

One doesn’t need a big computer or thousands of lines of code to hindcast the past century or “project” the future. An Excel spreadsheet is sufficient. The simple EBM (energy balance) models hindcast the 20th century temperature as well as or better than the multi-million-dollar supercomputer models… See the comparison by Robert Kaufmann at:

Click to access rpi0411.pdf

I once followed a one-day course at Oxford University about climate modelling, where they used such an EBM model for training. It was of the form:

dT = f1*dForcing1 + f2*dForcing2 + f3*dForcing3 + f4*dForcing4

Where the different forcings were GHGs, human and volcanic aerosols and solar and using a certain volume of ocean as heat buffer.

Initially, f1 = f2 = f3 = f4, as is assumed in most climate models (Hansen’s “efficacy” is within +/- 10% for all types of forcing, except methane). Which is questionable, as a 1 W/m2 change in solar does not necessarily have the same effect as a 1 W/m2 change in CO2 absorbance…

But such an EBM model allows one to “tune” the different forcing factors.

By firmly reducing the effect of human aerosol releases, one can also halve the effect of 2xCO2 from 3°C to 1.5°C, while the 20th century hindcast is even slightly better. See:

http://www.ferdinand-engelbeen.be/klimaat/oxford.html

I need to get the 2000-2010 data to perform the test again, as that probably favors the lower sensitivity for CO2, as the temperature since 2000 is quite flat with increasing CO2…
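For anyone who wants to play with the same idea, here is a minimal Python sketch of a one-box energy balance model with a separate scaling factor per forcing. It is not the Oxford course model or the spreadsheet linked above; the feedback parameter `lam`, the `heat_capacity` value and the toy forcing ramps are all illustrative assumptions.

```python
import numpy as np

def ebm_hindcast(forcings, factors, lam=1.25, heat_capacity=8.0, dt=1.0):
    """One-box energy balance model with a tunable factor per forcing.

    forcings      : dict of name -> annual forcing series in W/m2
    factors       : dict of name -> dimensionless scaling factor (f1, f2, ...)
    lam           : feedback parameter in W/m2/K (assumed value)
    heat_capacity : ocean heat buffer in W yr/m2/K (assumed value)
    """
    names = list(forcings)
    n = len(forcings[names[0]])
    total = np.zeros(n)
    for name in names:
        total += factors[name] * np.asarray(forcings[name], dtype=float)

    temp = np.zeros(n)
    for i in range(1, n):
        # Net imbalance warms (or cools) the heat buffer over one time step.
        imbalance = total[i - 1] - lam * temp[i - 1]
        temp[i] = temp[i - 1] + dt * imbalance / heat_capacity
    return temp

# Toy forcings: linear ramps, not real data.
years = np.arange(1900, 2001)
ramp = (years - 1900) / 100.0
forcings = {"ghg": 2.5 * ramp, "aerosol": -1.0 * ramp}

full = ebm_hindcast(forcings, {"ghg": 1.0, "aerosol": 1.0})
reduced = ebm_hindcast(forcings, {"ghg": 1.0, "aerosol": 0.5})
print(f"warming by 2000, full aerosol effect:   {full[-1]:.2f} K")
print(f"warming by 2000, halved aerosol effect: {reduced[-1]:.2f} K")
```

With the aerosol factor reduced, the same hindcast can be matched with a smaller GHG factor, which is the trade-off described above.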

Here is a 0-D model.

Radiation transfer calculations result in a logarithmic dependence of the radiative forcing DS for an increase in CO2 as follows.

DS = 5.3* Ln(C/C0) watts/m2

Simply taking the Planck response needed to balance this extra forcing predicts a surface temperature rise of

DT = 1.6*ln(C/C0) deg.C

Now using the measured CO2 data from Mauna Loa we can fit the Hadcrut3 data to this form. The best fit to the data is

DT = 2.5*ln (CO2(t)/290)

see graph here

That works out as a climate sensitivity of ~1.7 deg.C
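The arithmetic behind that last step, as a small Python sketch. The 5.3, 1.6 and 2.5 coefficients are the ones quoted above; the function names are mine. Because the response is logarithmic, the warming per doubling is just the coefficient times ln(2).

```python
import math

def co2_forcing(c, c0):
    """Radiative forcing in W/m2 for CO2 rising from c0 to c (DS = 5.3*ln(C/C0))."""
    return 5.3 * math.log(c / c0)

def warming_per_doubling(coefficient):
    """Temperature change for C/C0 = 2, given DT = coefficient * ln(C/C0)."""
    return coefficient * math.log(2.0)

planck_only = warming_per_doubling(1.6)   # no-feedback (Planck) response
fitted = warming_per_doubling(2.5)        # coefficient from the fit quoted above

print(f"forcing for a doubling:      {co2_forcing(580.0, 290.0):.2f} W/m2")
print(f"Planck-only per doubling:    {planck_only:.2f} deg C")
print(f"fitted response per doubling: {fitted:.2f} deg C")
```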

Interesting. What would you get if the effects of all GHG were included, not just CO2? I assume the sensitivity would be considerably less.

The IPCC narrative is that CO2 is the driver and changes to H2O are a feedback. Other anthropogenic greenhouse gases/aerosols cause much smaller effects. However there could well be natural changes in H2O and/or clouds which dominate over CO2 at least in the short term. This would explain why there has been no warming for over 15 years. This pause in warming is driving down measured climate sensitivities and the models are rushing to adjust as it continues.

To be correct, the figure I gave of 1.7C is really for TCR (transient climate response), so it is still possible that ECS (equilibrium climate sensitivity) is 2.5C. The longer the pause in temperature continues, the lower climate sensitivity will fall. If temperatures actually were to fall over the next decade then the whole edifice collapses.

Sorry, I was not clear. I did not mean to include H2O. I meant just methane, CFCs, and other long lived GHG. I thought the sum total of all those was significant compared to CO2.

Steve, this is an interesting find. However, even the low sensitivity model fails to get even a suggestion of the lack of warming since 1997.

It fails to reproduce the late 90’s surge and the following plateau in the same way that it failed to reproduce the warming around 1940 and the subsequent cooling.

That means that ALL GCMs, even low sensitivity ones, are lacking some fundamental mechanism of climate.

This model is very useful in demonstrating that. It has been clear that the more recent models are exaggerating volcanic impact as well as CO2 and the error was not evident until there was a sustained period without significant volcanism.

What this model demonstrates is that, even if we wind down the gain factor on both CO2 and aerosols, there is still a fundamental driver of climate that is not being included in the model.

And how do we know what’s fundamental, without living and testing these things for a very, very long time? Maybe the current standstill is a one-off caused by some very rare conjunction of circumstances that won’t happen again in a million years. My view is that we don’t know any of that. But this comparison of models ancient and modern (to which all the hints now seem to point) may be enough to knock out loads of false complexity and red herrings, as Mosh has just said.

“Steve, this is an interesting find. However, even the low sensitivity model fails to get even a suggestion of the lack of warming since 1997.”

For a model to predict hiatuses it would pretty much need to have some cyclicity built into it, with some mechanism FOR that cyclicity in the maths, so it could get into the code.

You make a good point, though.

If anything, I am troubled by the near-constant slope in the line since 1970 and going off into the never never land of the future. If there was a flatter curve early on and then this upslope that doesn’t seem to want to quit, I don’t actually see this as being a lucky guess for this model.

The slope later on appears to parallel the slope of the 1990s, with nothing variable happening to that slope for 50 years. I don’t buy that.

It looked good yesterday. It looks less good today.

Oooh, I know! Add aerosols to this model. 🙂

Comparing the smoothed GCM-Q model with the noisier model HadGem2 and the observed series HadCRU4 is not a particularly good way to compare models. While Q performs better than HadGem2, I do not judge that GCM-Q is a “good” representation of the observed temperatures. There is a wide array of model performances, as can be seen from difference series between an observed series and CMIP5 model runs, with none of the model runs getting it “right”.

What is particularly informative is looking at multiple runs of the same model, where it is easier to appreciate that some models (runs) are better by pure chance.

To get a better notion of the “luck of the draw” with climate models, I have listed here the trends of 10 multiple runs of the CMIP5 RCP4.5 model CSIRO-MK3-6-0 for the period 1970-2013 May: 0.163, 0.201, 0.218, 0.222, 0.187, 0.153, 0.166, 0.233, 0.131, and 0.144. All trends are in degrees C per decade.

Take your pick and above all do not look too closely on how each model run produced those trends.
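To put a number on that “luck of the draw”, here is a quick Python summary of the ten trends listed above. Only the trend values come from the comment; the summary statistics chosen are my own.

```python
import statistics

# Trends (deg C per decade) of the 10 CSIRO-MK3-6-0 RCP4.5 runs, 1970 to May 2013.
trends = [0.163, 0.201, 0.218, 0.222, 0.187, 0.153, 0.166, 0.233, 0.131, 0.144]

mean_trend = statistics.mean(trends)
sd_trend = statistics.stdev(trends)          # sample standard deviation
spread = max(trends) - min(trends)

print(f"mean   : {mean_trend:.3f} deg C/decade")
print(f"std dev: {sd_trend:.3f} deg C/decade")
print(f"range  : {min(trends):.3f} to {max(trends):.3f} (spread {spread:.3f})")
```

The spread between the warmest and coolest run is more than half the ensemble mean, from a single model with a single set of forcings.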

Stacking the six major eruptions during the thermometer record and averaging SSN we see that solar activity is generally on the wane when the eruptions happened. This raises the possibility of false attribution:

Also if we similarly stack SST for a period of about 6 years either side of eruptions we see there is already a general downward temp trend before the eruption happens.

Finding the cumulative integral and taking the four years prior to eruption as being the local average, we find that tropical regions are self-correcting and even recover the number of degree.days (growth days) despite major volcanic events.

Temperate regions recover SST but retain a deficit in growth days.

See text and links below the graphs for explanation of how they are derived.
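For readers who want to try the stacking themselves, this is roughly what a superposed epoch average looks like in Python. It is a sketch on a synthetic annual series with made-up event years, not the code or data behind the graphs referred to above.

```python
import numpy as np

def stack_around_events(years, values, event_years, window=6):
    """Average a series over a window of +/- `window` years around each event.

    Events too close to either end of the record are skipped.
    Returns (lags, composite), where lag 0 is the event year.
    """
    years = np.asarray(years)
    values = np.asarray(values, dtype=float)
    index = {int(y): i for i, y in enumerate(years)}

    segments = []
    for event in event_years:
        i = index.get(int(event))
        if i is None or i - window < 0 or i + window >= len(values):
            continue
        segments.append(values[i - window:i + window + 1])
    if not segments:
        raise ValueError("no event has a complete window inside the record")

    lags = np.arange(-window, window + 1)
    return lags, np.mean(segments, axis=0)

# Synthetic example: noise only, with arbitrary placeholder event years.
rng = np.random.default_rng(0)
years = np.arange(1880, 2013)
sst = rng.normal(0.0, 0.1, years.size)
lags, composite = stack_around_events(years, sst, [1910, 1930, 1950, 1970])

# Express the composite relative to the four years before the event.
baseline = composite[(lags >= -4) & (lags < 0)].mean()
anomaly = composite - baseline
```

Taking `np.cumsum(anomaly)` then gives the cumulative (degree-year) version of the same composite.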

It would be helpful to know the “high” sensitivity of the HadGEM2 model expressed as a number. I found references online to “HadGEM2-ES” having a sensitivity of 4.86 deg C / CO2 doubling, but I don’t know if that’s the same model or a previous one.

Anyone want to hazard what the relative physical “skill” is, in the absence of relative physical uncertainty limits?

My guess is that the model is the one the Farmers’ Almanac uses.

Has the green line been smoothed? It doesn’t look all that accurate, especially in the early part of the graph.

For people who still haven’t worked it out, the key hint is the carefully chosen start year for the plot.

Doh!

Re: Jonathan Jones (Jul 22 14:18),

The start year of the graphic isn’t a clue.

People are on the right sort of track, but no one’s figured it out yet. There are a couple of other clues that you’re overlooking.

Ha. I have to say – through gritted teeth – that you fully deserve this fun at our expense. But now I want the answer!

Ed Hawkins based purely on Callendar’s ‘model’? http://www.met.reading.ac.uk/~ed/home/hawkins_jones_2013_Callendar.pdf

A Quasi-Geostrophic Coupled Model (Q-GCM)

Andrew Mc C. Hogg

Southampton Oceanography Centre, Southampton, United Kingdom

William K. Dewar

The Florida State University, Tallahassee, Florida

Peter D. Killworth and Jeffrey R. Blundell

Southampton Oceanography Centre, Southampton, United Kingdom

If I can trust Mosher’s comment “I can assure you this modeler had NO ACCESS WHATSOEVER to the observation series it is compared to here. None. zero.” then either the model predates 1896, or it’s *completely* first principles, or something very odd is going on.

The other really odd thing is that the green curve gets a post 1940 dip, without (unless I have totally misunderstood) using aerosols. I’m trying to remember what other forcings could cause that, without success so far.

Steve: Mosher wrongfooted you unfortunately. Sorry about that. I should have corrected him. The author did have access to some of the observations.

For forcing, look at RCP4.5 CO2EQ (see http://tntcat.iiasa.ac.at:8787/RcpDb/download/CMIP5RECOMMENDATIONS/RCP45_MIDYR_CONC.zip ). It has a local maximum in 1939 and does not reach that level again until 1965.

Hmmm: RCP 4.5 CO2EQ is defined as “CO2 equivalence concentrations using CO2 radiative forcing relationship Q = 3.71/ln(2)*ln(C/278), aggregating all anthropogenic forcings, including greenhouse gases listed below (i.e. columns 3,4,5 and 8-35), and aerosols, trop. ozone etc. (not listed below)”. The update to GCM-Q, which used RCP4.5 CO2EQ, did include aerosol forcing after all. I’ll amend accordingly.
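For reference, that forcing relationship and its inverse in Python. Only the 3.71 and 278 constants come from the RCP definition quoted above; the function names are hypothetical.

```python
import math

F_2X = 3.71              # W/m2 per CO2 doubling, from the quoted definition
C_PREINDUSTRIAL = 278.0  # ppm, from the quoted definition

def co2eq_to_forcing(c_ppm):
    """Q = 3.71/ln(2) * ln(C/278), in W/m2."""
    return F_2X / math.log(2.0) * math.log(c_ppm / C_PREINDUSTRIAL)

def forcing_to_co2eq(q_wm2):
    """Inverse: the CO2-equivalent concentration (ppm) that gives forcing Q."""
    return C_PREINDUSTRIAL * math.exp(q_wm2 * math.log(2.0) / F_2X)

# A net negative contribution (e.g. aerosols) lowers CO2EQ below the
# concentration implied by the greenhouse gases alone.
ghg_only = co2eq_to_forcing(400.0)
with_offset = forcing_to_co2eq(ghg_only - 0.5)   # illustrative -0.5 W/m2 offset
print(f"400 ppm -> {ghg_only:.2f} W/m2; with -0.5 W/m2 offset -> {with_offset:.0f} ppm CO2EQ")
```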

OK, then given your latest remarks the source is obvious. Interestingly I’ve always thought he had an excellent point about glaciers.

Steve: yup.

Note I said he has no access to the observation series shown here.

Of course, he may have had access to some of the same essential data. Where is racehorse when I need him

Steve: you’re 100% right. I withdraw my apology on your behalf. 🙂

Ooh, I finally get it, a day late; must be my calendar. See, told you I steal everything even if I don’t know where it came from.

==============

Yes, yes, fine and dandy, but what did GCM-Q hindcast for the tropical troposphere? 🙂

“In my opinion, the common practice of centering observations and models on very recent periods (1971-2000 or even 1986-2005 as in Smith et al 2007; 2013) is very pernicious for the proper adjudication of recent performance. Accordingly, I’ve centered on 1921-1940 in the graphic below.”

I’m supposing centering is the act of taking observed values over a period, and tuning parameters for the model to create a good match of the model to the center period? Is there a manual component to this?

So am I correct that what you did is to come up with parameters that provide good fits to 1921 – 1940 data, but what is odd is that I thought these models required massive supercomputers to run. I’m curious how you could do this.

I quote from the post:

“In addition to the interest of a low-sensitivity model, there’s also an intrinsic interest in running an older model to see how it does, given observed GHGs.”

Modern PCs are much faster than old supercomputers. I get the impression this is a really old model – possibly from the earlier part of last century. I suspect one could run this model quite happily on a graphing calculator.
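On the centering question: as I read the quoted passage, centering is not parameter tuning. It just means expressing each series as an anomaly from its own mean over a reference period, so the choice of period shifts the curves up or down without changing their shape. A minimal sketch with made-up numbers (the function name and toy series are assumptions, not anything from the post):

```python
import numpy as np

def center_on(years, series, start=1921, end=1940):
    """Subtract the series' own mean over the reference period [start, end]."""
    years = np.asarray(years)
    series = np.asarray(series, dtype=float)
    mask = (years >= start) & (years <= end)
    if not mask.any():
        raise ValueError("no data inside the reference period")
    return series - series[mask].mean()

# Toy series: an "observed" record and a "model" that warms faster.
years = np.arange(1900, 2014)
toy_obs = 0.007 * (years - 1900)
toy_model = 0.010 * (years - 1900) + 0.3

obs_c = center_on(years, toy_obs)
model_c = center_on(years, toy_model)

# Both series now average zero over 1921-1940, so any later divergence
# shows up at the recent end of the plot rather than being hidden there.
print(f"model minus obs in 2013: {model_c[-1] - obs_c[-1]:.2f} deg C")
```

Centering both on 1971-2000 instead would pin the two curves together in the recent period and push the mismatch back into the early part of the record.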

I’ve made a correction to the post as follows:

Steve, what are the results of the old model if rerun without aerosols as you intended to do? Do you plan to update?

“low-sensitivity (1.65 deg C/doubling)”: is this TCR or ECS?

Over this time period it’s TCR. That’s probably the most salient criticism.

Look, over short periods of time the trajectory of the temperature is rather predictable by simple models.

Is it based on this work?

Click to access 11-16.pdf

Steve: no

It was used in TAR?

DOE-PCM

If Q caught the warming pulses of the early 40s and late 90s it would almost be spot on.

Added following update in post:

Forcing for GCM-Q was from RCP4.5 (see here zip). The above diagram used CO2EQ (column 2) defined as:

Column 4 is CO2 only. The CO2EQ and CO2 column 4 forcings are compared in the diagram below in the same style.

(coloring clarified)


Steve, thank you for the “incl aerosol” and “CO2 only” comparison.

Unfortunately I am having a bit of difficulty matching the two green lines from the graph with the two green boxes from the legend. My color vision may not be the best, my computer probably uses a different “gamma” than yours, and to top it off, human color perception is dependent on the area of the color (a line can have a different apparent color than a box!) and on the neighboring colors (e.g. a black box around a color can further change the perception). Color vision is messy, I’m afraid.

Do I understand it correctly that the initial graph in the blog-post was already incl. aerosols? And am I correct that in the graph from your comment the “darker” green line is “CO2 only” (which runs a tad hotter in sixties, but cooler in the 21st century), while the “lighter” green line is “incl. aerosols”?

Tony,

I have the same problem with color perception when they are similar so I used an imaging program to compare the legend with the lines, just to be sure.

Looking at the right-hand side of the graphic, the lower (darker) green line includes aerosols. The upper (lighter) green line is CO2 only.

Steve: I’ve amended the coloring on this graph to green/cyan and ensured that the green is consistent in both versions. Sorry about that.

No way Bob. You can see from the original graph in this post that the ‘with aerosol’ line goes right through the current observations. So the colors/descriptions are reversed. And as Jeff Norman below noticed, the observations go into the future… This graph is messed up.

Thanks for the update and fixing the colors.

I realize you’re busy but was wondering about the fact that Callendar’s temperature work was based on 60S – 60N. Was his model also based on that? I was wondering if his model would have a better fit with those observations rather than global temps. His temps matched CRUTEM4 very well as per the Hawkins paper. Just curious…

Steve: will discuss this in more detail in a forthcoming post.

From this paper:

It should be noted that in this paper Callendar greatly underestimated the increase in CO2 concentration, hence the above conclusion was predicated on a lesser growth in temperature than observed. His figures are apparently based on a constant anthropogenic emission of 4.5 Gt CO2 per year, while recent rates have been over 30 Gt/yr. Mitigating this somewhat is an underestimate of natural absorption of the anthropogenic component.

Steve: I will post in more detail on this. There’s a difference between forecasts of CO2 emissions and levels and the modeling of the effect given observed CO2 levels – a distinction that Hansen (reasonably) emphasizes.

thanks for the response Harold W although not exactly on point… Apparently my questions/comments are too dumb for the host. I’m done here after following for over 5 years… worst post and follow up ever.

Steve: I am not always online nor always available for questions. I’ve been at the lake for the last couple of days.

Re: sue (Jul 25 00:33),

Steve’s pretty busy lately. You may note he’s not had much time to follow up, let alone read every comment in detail.

Can’t ever expect to get an author-response to every comment.

GCM-Q nailed it. Why? Low CO2 sensitivity, stripped down parameters (fudges), especially the non-understood aerosol ones. Now run it at 0 sensitivity. Just to set a baseline, you understand; I wouldn’t dare suggest the Emperor isn’t even wearing any underwear.

Oh, wait …

I think negative feedbacks similar to Willis’s Thermostat Hypothesis, which remarkably caps sea temps at 31C, are an important missing factor in climate models. I mean this in a broader context of which the Thermostat Hypothesis is an example. The fact that the chain of life has not been broken in over a billion years, with overall temperature variations restrained to merely 7-9C, speaks loudly of mechanisms that counter swings one way or the other. CO2 doesn’t appear to be the control knob over these variations.

During the Archean geological era, a thinner crust and a hotter interior (evidenced by the only period of komatiite extrusions, with temperatures of 1700C instead of the 1100C of modern basalts) suggest a hotter earth 3B years ago that cooled down to the “equilibrium” level that it has oscillated around for ~2B years. CO2 likely has a lower sensitivity than 1.0 if you add in an effect that counters the resultant temp rise. One must ask, in looking at the effects of CO2 and other mechanisms: could temp just rise for 10s of millennia before it reverses? Surely the main sink that acts against such a situation is the phase changes in water.

Thanks for the rapid overview of 4B years Gary. What’s a good introductory text on the Archean and ‘komatiite extrusions with temperatures of 1700C instead of modern 1100C’?

Re: Gary Pearse (Jul 22 23:06),

I was taught this in high school science in the mid 70’s. Not to belittle the point, but why doesn’t anyone seem to know this anymore?

Because the remarkable properties of water undermine the cause? How much more enforced ignorance would have been needed to keep the show on the road I hope we now never discover.

JJ

“OK, then given your latest remarks the source is obvious. Interestingly I’ve always thought he had an excellent point about glaciers.”

Not that obvious to most here I think Jonathan.

http://www.iiasa.ac.at/

and

http://tntcat.iiasa.ac.at:8787/RcpDb/dsd?Action=htmlpage&page=welcome

Look down the list of citations and names towards the bottom. Recognise anyone who has been working for years with a rap artist who can do magic tricks?

See Moshpit I can give clues that are just as cryptic as yours.

KevinUK

hey I misspelled the name for Kenneth. not very cryptic

OK Moshpit,

On the basis of your misspelt clue and JJ’s comment about glaciers, it looks like Steve will announce tomorrow that it is this gentleman’s model

http://en.wikipedia.org/wiki/Guy_Stewart_Callendar

I wonder what his dad would have thought of the ‘Sky Dragons’?

KevinUK

So, am I to understand that some long-dead fellow’s model, before we even had computers, has matched actual temperature observations more accurately than the fancy GCM’s constructed by today’s expert climate scientists?

Say it isn’t so Steve, say it isn’t so.

Perhaps should rename the model GSCM-Q…

Or is that too obvious?

The choice of the centering period should give a further subtle hint for those who are still struggling with the provenance.

Steve: I’ll fill people in tomorrow. I’m busy today.

Steve,

Is the x-axis offset? The HADCRUT4 “data” appears to extend well past 2013. Is this a function of the decadal averaging? What happened at the end points? Or is this someone else’s trend that you are just using unaltered for illustrative purposes?

I’m still trying to figure out what happened in Borgen last week.

This ties some clues together.

http://en.wikipedia.org/wiki/Guy_Stewart_Callendar

And here.

http://en.wikipedia.org/wiki/Gilbert_Plass

@Richard Telford

“Seriously, read the update. The model does include aerosols in the forcings.”

Yes, so I guess we have nothing to argue about as to the forcings in this particular model. For the future, however, should we immediately reject an apparently skillful model which apparently omits consideration of one of our “favorite” forcings? Is that how we do science now?

Seriously.

No, it means that one should be very sceptical of unexpected results.

I expressed doubt that a model could account for 20th Century climate change without aerosol forcing. You and others were prepared to unquestioningly accept whatever was written.

I was correct – the model had included aerosol forcing.

Who is the real climate sceptic?

Steve: you’re exaggerating tho. The aerosol impact is much more muted than HadGEM2, which was the illustrated model.

Richard, IMO, a much under-examined factor in the 1940s-1960s pause presently attributed primarily to aerosols are bucket adjustments in the SST record. I’ve commented on SST bucket adjustments from time to time over the years. Folland’s Pearl Harbor adjustment looked loony to me and I criticized it in early CA posts. Thompson et al 2008 wrote up some problems with inhomogeneities in the SST record around World War II, some of which I had drawn attention to at CA. At the time, I observed that one of the effects of correcting these inhomogeneities would be to mitigate the downturn in the 1950s and 1960s. The post-Thompson 2008 edition of HadCRU was revised to reflect this.

I scratched the surface of the SST data a few years ago and there are many inhomogeneities in it. In addition to bucket inhomogeneities, there are major inhomogeneities between SSTs as observed by different nations in the same gridcell. If the proportion of national observations were consistent over time, this would wash out, but there are major changes in the provenance of records, sometimes between years. Sorting it out is a formidable task.

The defects observed by Thompson et al 2008 were very gross inhomogeneities and yet they had remained unobserved by specialists for many years. I would not be surprised if there remain other important inhomogeneities in the SST record. My guess is that some of the deus-ex-machina aerosol stuff might well be explaining things that actually arise from SST inhomogeneity from buckets, nation of observation etc.

I won’t pretend to know anything about inhomogeneities in the SST data, but I do know that the 1940-1970s decline is present in the land-only CRUTEM4, so the decline in temperatures cannot be an artefact in the SST data.

My confidence that there is an aerosol effect comes from the well documented effect of volcanic activity – mainly through sulphate aerosols – on global climate. Volcanoes have a very efficient mechanism for injecting sulphates into the stratosphere, but the amount of sulphate they produce is relatively small compared to human activity (Rodhe 1999; Tellus).

I lean towards Richard Telford’s view that the mid-20th century slowdown is real, and can’t be explained away just in terms of measurement error. I don’t think it’s anywhere close to a slam dunk that the explanation for the slowdown is increased aerosols though.

I think it’s accepted that much of the uncertainty in climate modeling comes from the treatment of aerosol effects in the models. If you assume modest aerosol effects, you need models with low values of ECS; models with high values of ECS only work if you assume large aerosol effects.

People have noticed what appears to be a ~ 60-year cycle in the data. This ~ 60-year cycle has shown up in long-duration proxies as well, lending some credence to the idea that it may be real. In fact, if you fit the global land+ocean temperature series to a ~ 60-year cycle, then subtract the fitted cycle from the original series, the residual series shows no sign of any mid-20th century slowdown.

The existence of a significant (in terms of impact) ~ 60-year cycle is a problem for high ECS models (models with an ECS above 3°C/doubling of CO2), because they are completely dependent on large aerosol effects to remain viable candidates (I believe if you invoke 60-year oscillations, you are left with 2-2.5 °C/doubling CO2 ECS models and no higher), and a problem for any model that isn’t capturing this component of natural variability.
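The fit-and-subtract step can be sketched in a few lines of Python. This is a toy version on synthetic data: the fixed 60-year period, the joint fit of a linear trend alongside the cycle, and the made-up series are all my assumptions, so it says nothing by itself about the real temperature record.

```python
import numpy as np

def remove_cycle(years, temps, period=60.0):
    """Least-squares fit of offset + linear trend + sinusoid of fixed period.

    Returns (residual, amplitude): the series with the fitted cycle removed,
    and the amplitude of that cycle.
    """
    years = np.asarray(years, dtype=float)
    temps = np.asarray(temps, dtype=float)
    t = years - years.mean()
    omega = 2.0 * np.pi / period

    # Columns: constant, trend, and the sine/cosine pair that sets the phase.
    design = np.column_stack(
        [np.ones_like(t), t, np.sin(omega * t), np.cos(omega * t)]
    )
    coef, *_ = np.linalg.lstsq(design, temps, rcond=None)

    cycle = design[:, 2:] @ coef[2:]
    amplitude = float(np.hypot(coef[2], coef[3]))
    return temps - cycle, amplitude

# Synthetic series: steady trend + 60-year oscillation + noise.
rng = np.random.default_rng(0)
years = np.arange(1880, 2013)
temps = (
    0.006 * (years - 1880)
    + 0.12 * np.sin(2.0 * np.pi * (years - 1910) / 60.0)
    + rng.normal(0.0, 0.03, years.size)
)

residual, amplitude = remove_cycle(years, temps)
print(f"fitted cycle amplitude: {amplitude:.2f} deg C")
```

Plotting `residual` against `years` is then the check described above: whether anything resembling a mid-century slowdown survives once the cycle is removed.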

Carrick,

I agree that the mid-20th century slowdown was real; it was real in the Northern Hemisphere and the Southern Hemisphere. The problem is that the vast majority of the industrial aerosols were generated in the Northern Hemisphere, with limited transport into the Southern Hemisphere.

Richard,

There is a huge difference in residence time between SO2 injected in the stratosphere from volcanoes (2-3 years for the Pinatubo) and from human emissions: average 4 days, both as “dry” and “wet” deposit. That is mainly a matter of water vapour/clouds/rain. If we may assume that the aerosols in both cases have the same effect then, despite the higher emissions by humans, the overall global effect is around 0.05°C cooling, including all kinds of feedbacks, far less than what in general is implemented in the GCM’s.

Further, because of huge reductions in emissions in the Western world, there should be an impact downwind of the main polluting areas, with increased warming as emissions decrease. But that is not the case… And as Jeff already said, 90% of the effect would be in the NH, as the ITCZ washes out nearly all aerosols before they reach the SH. But all oceans in the NH heat up faster than in the SH (if corrected for area differences).

The role of human aerosols at least is overblown, even the sign may not be correct, as brown aerosols over India help the warming over land and help in melting glaciers of the Himalaya…

@FrediEgb

You are ignoring the aerosol cloud-albedo effect. AR4 reports this indirect effect as being more negative than the direct albedo effect, albeit both effects are very uncertain.

Steve,

You seem to have forgotten that with a correctly chosen forcing term, any time dependent model (physically accurate or not) can be made to produce any solution one wants. The fact that the author of the code had access to observational data that he wanted to reproduce gives me no confidence in the result, especially if there are tuning parameters in the forcing. Try the model on a weather forecast and compare with observations to see if it is anywhere near physically accurate. And supply the code so we can see what games have been played.

Jerry

I tend to agree with Gerry Browning on this. The problem with all models is that dynamics will be damped by excessive dissipation. While I find it very questionable, the doctrine that Schmidt and others promulgate is that at large time scales, the short term dynamics don’t really matter and you get sucked into the attractor, so the statistics are reasonable. This unfortunately is not a testable doctrine. However, models may get some things right, such as energy balance on a regional scale despite bad dynamics, and as such they probably tell us “something.” My question is whether simple models with bulk feedbacks can do a lot better. I personally would like to see a lot more money spent on trying to understand feedbacks and a lot less on running GCM models.

@Richard Telford,

“You are ignoring the aerosol cloud-albedo effect. AR4 reports this indirect effect as being more negative than the direct albedo effect, albeit both effects are very uncertain.”

Even that is questionable.

If you compare cloud cover over the southern tip of India and compare that to a similar area below the equator, there are no differences in cloud cover change over the years, while according to the models, the fine aerosols should give more albedo and longer lasting clouds with increasing releases of SO2 over the NH:

Click to access io_cloud.pdf

Despite the higher aerosol load in the NH, the NH part of the Indian Ocean – and the coastal stations – are warming faster than the SH part and the station at Diego Garcia, an island below the equator in the Indian Ocean…

Carrick,

Isn’t this simple model suggesting that you don’t need cycles, and that things can adequately be explained by external forcing alone? The irony is that the GCM’s that try to include internal variations (arguably badly) actually make things worse! If you go with the idea that this simple model has some merit, then it would seem that it strengthens the idea that internal variation is NOT contributing greatly to decadal+ variations. The shape of the 20th century temperature graph is well explained by the response to RCP4.5 external forcings alone.

I don’t know about gauging relative scepticism in this single instance. I don’t get to look over the shoulder and see too many people’s lab notebooks while they are working out a problem. Thanks for contributing, I look forward to more of the same.

@Richard Telford

Were you merely “very sceptical” of the results? Did I and others “unquestioningly accept” them?

Funny, that’s not how I remember it. I recall being interested and wanting to know more. I certainly don’t recall that I announced any grand conclusions about “what this proves”. Although it is always possible that something of that kind appeared in the thread which was then deleted by our host (as you may know, he tends to suppress that kind of thing when it appears), I don’t recall seeing any such comments at all.

On the other hand, it seemed to me that your initial comment was not merely skeptical but stated a point blank refusal to consider the possibility seriously. Perhaps you expressed yourself poorly in a moment of stress.

I think it is HH Lamb from CRU.

See Figs 2 and 3 in http://phys.huji.ac.il/~shaviv/preprints/ZiskinShaviv_2column.pdf

Residuals in a low sensitivity model (~1.1 deg for 2 x CO2) are very small for both land and mixed layer temps.

The volcanic effect is much exaggerated due to temporal coincidence with other variations. Will post graph later.

Also ACE ties in rather well with AMO, adding credence to some of the post war adjustment. More later, no time now.

Steve

I have a low sensitivity model that matches the 20th century, the last millennium and the last 20 years very well.

Can you send me an email address I can send it to.

Comparison of accumulated cyclone energy and N. Atlantic SST.

Tropical SST in years surrounding the 6 major eruptions during the period of the thermometer record.

It is difficult to suggest any evidence of post-eruption cooling in the tropics. Follow links below the graph to a series of commented plots showing tropical / extra-tropical regions, north and south both as direct temperature anomaly plots and as cumulative integrals.

My conclusion is the effects of volcanism have been grossly over played, in order to over play the CO2 forcing.

Looking at the above model outputs they would be featureless accelerating CO2 warming without the volcanic glitches.

Despite the claimed complexity they are in reality one trick ponies.

This is probably not surprising in view of the one-track mind group thinking that has dominated climatic research for the last 30 years.

Sorry, that last post was (obviously) mine too. Just WordPress trying to think for me.

I agree with Brian H. Run the model with a 0 climate sensitivity.

In fact, I would suggest running it with a negative quantity, starting with -1.

Reason; CO2 increase does not precede temperature increase in any record, yet that is the fundamental assumption programmed into all the models.

Steve McIntyre: “Richard, IMO, a much under-examined factor in the 1940s-1960s pause presently attributed primarily to aerosols are bucket adjustments in the SST record. I’ve commented on SST bucket adjustments from time to time over the years. Folland’s Pearl Harbor adjustment looked loony to me and I criticized it in early CA posts.”

Steve, your comments were what inspired me to take a closer look at buckets & co. that resulted in my article on Climate Etc.

I concluded many of the so-called “bias corrections” were little more than speculative adjustments. Much of the variability in the pre-WWII period was removed by adjustments that were very close to being 67% of the ICOADS record itself for that period. I plotted just that in the article. ie they are removing 2/3 of the natural variability in the data. Removing early variability is essential to the CO2 interpretation of climate.

There was also a rather combative but polite exchange with John Kennedy of Met Office in comments to that article in which he gave some useful insight into some of the processes and raised several interesting points (and a mistake on my part which I then corrected).

A quick search for my name or his should pick out the relevant discussions from the 600-odd comments.

One thing we focused on was adjustment “validation”. One claimed validation was based on circular logic; another used some Japanese SST data. Ironically, that data was also taken using buckets. Apparently Japanese buckets don’t cause bias, only European or American ones! Japanese buckets are good for “validation”.

Another worry is the changes made to the frequency content. Most of this concerns the long-term variability, as discussed in the previous article. However, there are also some specific changes to the decadal-scale frequencies that I plotted here:

Globally it is pretty good, but the circa 9-year peak gets transformed into something else nearer 8 years. As far as I am aware, no one at Hadley (or anywhere else) has even checked what changes their processing makes to the spectral content of the data.

I took a very detailed look at frequency content, region by region here:

The nine-year cycle is strong in most regions. It is also one that Scafetta has documented and which Curry and the Best team have just published on from the land temp record.

It seems odd that Hadley processing seems to preserve the fidelity of most frequencies but hits 9 years and creates an 8-year peak that does not appear in the source data.

They don’t document their processing in sufficient detail to reproduce it so it seems unlikely anyone outside the centre will be able to find out why that happens.

In engineering this would be regarded as a serious processing error. In climate science it is not even checked for.
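The kind of check being asked for here can be sketched in a few lines. This is only an illustration, assuming a simple tapered, zero-padded periodogram; the function name `dominant_period` and the two series are hypothetical stand-ins, not the ICOADS or Hadley data and not the method used for the plots linked above.

```python
import numpy as np

def dominant_period(series, dt=1.0, min_period=2.0, max_period=30.0):
    """Return the period of the largest periodogram peak inside a band."""
    x = np.asarray(series, dtype=float)
    x = x - x.mean()                     # remove the mean so it does not dominate
    x = x * np.hanning(x.size)           # taper to reduce spectral leakage
    n_fft = 16 * x.size                  # zero-pad for a finer frequency grid
    power = np.abs(np.fft.rfft(x, n=n_fft)) ** 2
    freqs = np.fft.rfftfreq(n_fft, d=dt)
    band = (freqs >= 1.0 / max_period) & (freqs <= 1.0 / min_period)
    return 1.0 / freqs[band][np.argmax(power[band])]

# Synthetic stand-ins, NOT real SST data: a "raw" series with a 9-year cycle
# and a "processed" series in which the cycle has drifted to 8 years.
years = np.arange(160)
raw = np.sin(2.0 * np.pi * years / 9.0)
processed = np.sin(2.0 * np.pi * years / 8.0)

raw_peak = dominant_period(raw)
processed_peak = dominant_period(processed)
print(f"raw peak: {raw_peak:.2f} y, processed peak: {processed_peak:.2f} y")
```

Run on the source and the adjusted series for the same region, a comparison like this would flag any peak that moves or appears during processing.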

@Greg Goodman

I liked your plot. It helps confirm what I suspected. Warmer waters lead to more hurricanes. In fact I’m ready to elevate Emanuel’s hypothesis to a theory.

After Sandy, I created my own quasi-PDI index for tropical storms north of Cape Hatteras. The relationship with the AMO seemed obvious to me.

https://sites.google.com/site/climateadj/noreast-pdi

AJ, interesting graph, and nicely documented.

I’m not sure how much sense it makes to talk of the power of a storm without accounting for its size.

However, it does seem to confirm two points of disaccord between ACE and SST. Vecchi and Knutson documented some undercounting around WWII, but it is a long way from being enough to account for the discrepancy in my graph.

Now there’s no reason to suggest under-counting of land-falling storms during WWII so I think your graph confirms a notable discrepancy in the two between 1935 and 1950. Equally it confirms the peak around 1890 that is another divergence. And there is the arbitrary 0.1K adjustment that I made in 1925.

There remains a striking degree of correlation between the two series, and I think cross-checking against independent data sets like this may help shed some light on the relevance of some of the speculative “corrections” applied by Hadley.

So what’s the big deal?

~ ~ ~ ~ ~ ~ ~

IPCC – Climate Change 2001:Working Group I: The Scientific Basis

http://www.grida.no/publications/other/ipcc_tar/?src=/climate/ipcc_tar/wg1/031.htm

“Climate sensitivity is likely to be in the range of 1.5 to 4.5°C.

{…}

“This rate of CO2 increase is assumed to represent the radiative forcing from all greenhouse gases. The TCR combines elements of model sensitivity and factors that affect response (e.g., ocean heat uptake). The range of the TCR for current AOGCMs is 1.1 to 3.1°C. …”

~ ~ ~

PS

http://whatsupwiththatwatts.blogspot.com/2013/07/steve-mcintyre-climateaudit-smoken.html

Why you ol’ question beggar you.

========

Steve M,

There are two major factors (as well as many more less dominant issues) which lead to high equilibrium climate sensitivity (ECS) in the CMIP models.

The first is the question raised by Carrick above, which I will rephrase: are the 61-year cycles which are observable in the temperature datasets “accidentally” caused by coincidental aerosol offsets – which is the explanation implicitly accepted by all of the AOGCM laboratories when they use historic aerosol variation as a tuning series – or do they constitute a natural oscillatory variation due to mechanisms as yet unaccounted for? The abstraction and removal of these oscillations from the temperature series leaves a late 20th century temperature gradient very close to (only) 0.1 deg C per decade to be explained by the exogenous forcings which are actually taken into account in the models. Explicit accounting for these cycles would immediately suggest a lower climate sensitivity than implied by the AOGCMs.
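A minimal sketch of what "abstraction and removal" of such a cycle does to a trend estimate, assuming an ordinary least-squares fit with a sine/cosine pair at a fixed 61-year period. The series is synthetic and every number in it (0.1 deg C/decade trend, 0.1 deg C amplitude, phase, noise level) is an assumption chosen for illustration, not a result from any temperature dataset.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic anomaly series, NOT real data: an underlying trend of
# 0.1 deg C/decade plus a 61-year oscillation and some noise.
years = np.arange(1880, 2001)
PERIOD = 61.0
omega = 2.0 * np.pi / PERIOD
series = (0.01 * (years - 1880)
          + 0.1 * np.sin(omega * (years - 1985))
          + rng.normal(0.0, 0.05, years.size))

# Naive estimate: straight-line fit to 1970-2000 only, which falls on
# the rising half of the assumed oscillation.
late = years >= 1970
naive_trend = np.polyfit(years[late], series[late], 1)[0] * 10.0

# Joint estimate: intercept + trend + sine/cosine pair at the assumed period,
# fitted to the whole record.
t = years - years.mean()
design = np.column_stack([np.ones(t.size), t,
                          np.sin(omega * t), np.cos(omega * t)])
coeffs, *_ = np.linalg.lstsq(design, series, rcond=None)
joint_trend = coeffs[1] * 10.0

print(f"naive 1970-2000 trend: {naive_trend:.3f} deg C/decade")
print(f"trend with cycle fitted: {joint_trend:.3f} deg C/decade")
```

In this toy case the late-period straight-line fit attributes the upswing of the oscillation to the trend, while the joint fit recovers something close to the underlying value. Whether the real record contains such a cycle is exactly the open question posed above.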

The second issue is a little more complicated. I published an article on Lucia’s which showed that in the large majority of the CMIP models, the relationship between net outgoing flux and surface temperature for a fixed forcing value is a curve, rather than a straight line. The article is here:- http://rankexploits.com/musings/2012/the-arbitrariness-of-the-ipcc-feedback-calculations/

The effective climate sensitivity of nearly all the models – as measured by the inverse gradient of the flux-temperature relationship – starts off low and increases with temperature gain. One of the corollaries is that the match of GCMs over the instrumental dataset already implies a “low” climate sensitivity over this period if it is calculated from a linear feedback assumption; this value typically seems to be about half of the final ECS reported by the model labs. This was explicitly tested on three of the CMIP models. The main reason that the GCMs arrive at high estimates of ECS is this display of a curved response function. Is it a real-world effect or an artifact of the models? I still don’t know.

So should we be surprised that we can match the instrumental series with a low climate sensitivity model? Not at all. On the contrary, the observed series can only be matched with a model which has a low effective climate sensitivity. However, where a particular model ends up in terms of its long-term sensitivity is a strong function of the degree of curvature which the model displays in its flux-temperature relationship.
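To make the distinction between effective and long-term sensitivity concrete, here is a toy calculation. It assumes a quadratic flux-temperature curve and uses the secant definition of effective sensitivity (forcing divided by the feedback inferred from the flux change so far), which is one common reading of "inverse gradient". The constants `LAMBDA_0` and `KAPPA` are hypothetical and not taken from any CMIP model.

```python
import numpy as np

F_2X = 3.7      # W/m^2, commonly quoted forcing for doubled CO2 (assumed here)
LAMBDA_0 = 2.0  # W/m^2/K, hypothetical initial feedback gradient
KAPPA = 0.2     # W/m^2/K^2, hypothetical curvature term

def net_flux(temp):
    """Net flux imbalance N(T) after a step forcing F_2X, curved in T."""
    return F_2X - LAMBDA_0 * temp + KAPPA * temp ** 2

def effective_sensitivity(temp):
    """Warming per doubling implied by a linear fit through the origin and (T, N)."""
    feedback = (F_2X - net_flux(temp)) / temp   # secant gradient, W/m^2/K
    return F_2X / feedback

# Equilibrium warming: the smaller root of N(T) = 0.
t_eq = (LAMBDA_0 - np.sqrt(LAMBDA_0 ** 2 - 4.0 * KAPPA * F_2X)) / (2.0 * KAPPA)

early = effective_sensitivity(0.5)   # evaluated after only 0.5 K of warming
final = effective_sensitivity(t_eq)  # evaluated at equilibrium

print(f"effective sensitivity at 0.5 K: {early:.2f} K")
print(f"equilibrium warming:            {t_eq:.2f} K")
```

With these made-up numbers, a linear-feedback estimate taken early in the warming comes out well below the equilibrium value that the same curve eventually reaches. The size of the gap depends entirely on the assumed curvature.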

Steve: Paul, that’s a very interesting point.

Paul, maybe I’m not following this properly, but I would have thought the non-linearity would be in the sense to _reduce_ the sensitivity not increase it.

The Planck response is T^4, which can be approximated as linear for very small changes (w.r.t. 300K); the same goes for other negative feedbacks such as the triggering of tropical storms. Many of these can only be approximated as linear for very small changes. Indeed, tropical storms that govern tropical climate are not even modelled, just guesstimated by cloud “parameters”.
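The size of the linearisation error is easy to check. A quick sketch, assuming a plain Stefan-Boltzmann black body at the 300 K reference used above (the function names are illustrative only):

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

def emitted_flux(temp):
    """Black-body emission sigma * T^4 in W/m^2."""
    return SIGMA * temp ** 4

def linearisation_error(t0, delta_t):
    """Fraction of the exact flux change missed by the tangent 4*sigma*T0^3*dT."""
    exact = emitted_flux(t0 + delta_t) - emitted_flux(t0)
    linear = 4.0 * SIGMA * t0 ** 3 * delta_t
    return (exact - linear) / exact

err_1k = linearisation_error(300.0, 1.0)
err_10k = linearisation_error(300.0, 10.0)
print(f"1 K change:  linear form misses {100 * err_1k:.2f}% of the flux change")
print(f"10 K change: linear form misses {100 * err_10k:.2f}% of the flux change")
```

The error is positive in both cases: the exact T^4 response gives more outgoing flux than the tangent line, so this particular non-linearity acts in the sense of a stronger negative feedback at higher temperature.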

Is the curvature you show a result of dominant positive feedbacks in the models or that some forcings are modelled as linear when they are exhibiting some saturation or logarithmic behaviour?

Any system that has remained in existence for this long must be dominated by negative feedbacks. Are the models managing to produce a ‘local’ region dominated by positive feedbacks?

Doesn’t that convex curvature denote a system that is inherently unstable?

snip – way O/T

Steve, I’m not sure exactly what I wrote because it’s now snipped, but you’d written above that Paul’s comment about non-linearity was “a very interesting point.”

My graphs were illustrative of the non-linearity in the tropics, which have very strong non-linear negative feedbacks. If you want to know why a low sensitivity model works, look at the tropics and the effects of muddying everything together in unified global statistics.

My plots illustrate the near total rejection in the tropics of the negative forcing attributed to volcanic aerosols; this then also raises questions about the tropical response to AGW.

What you are showing with the comparison of GCM output and the low sensitivity model is that both volcanism and AGW are over-valued.

This is clearest in the tropics which is what I was trying to illustrate directly. Not really O/T. My extra-tropical plots show the probably false attribution of concurrent downward trends in SST starting before eruption, to volcanism.

If you thought that was O/T, I either presented it badly or you did not look at the link before binning it.

Greg,

The answer is “not necessarily”. If the curvature arises (in a given AOGCM) because of positive feedbacks which are highly nonlinear in temperature, T, then you are correct that the particular AOGCM is likely to demonstrate threshold instability, i.e. it will go unstable for a large enough value of forcing. However, if the curvature arises solely as a consequence of collapsing dimensionality, then the system can be completely stable and can retain an end-point linear relationship between forcing and equilibrium temperature. In other words, if the zero-dimensional balance is replaced by a system of similar linear equations applied to different latitudes or regions, then when the aggregate flux change is plotted against the average temperature over the system, the result can be a curve with no adverse implications for stability. At different levels of forcing, the system will generate a series of self-similar curves, and retain linearity in a plot of equilibrium temperatures against input step forcings.

It is easier to demonstrate than to explain. See

http://rankexploits.com/musings/2013/observation-vs-model-bringing-heavy-armour-into-the-war/
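For readers who want the flavour of the argument without following the link, here is a minimal sketch. It assumes two uncoupled, equal-area regions, each obeying its own linear energy balance with a different feedback and heat capacity. All parameter values are hypothetical, and this is not the calculation in the linked article.

```python
import numpy as np

LAMBDAS = np.array([3.0, 1.0])     # W/m^2/K, feedback per region (hypothetical)
HEAT_CAPS = np.array([5.0, 50.0])  # W yr/m^2/K, heat capacity per region (hypothetical)

def aggregate_response(forcing, times):
    """Area-mean temperature and net flux after a step forcing.

    Each region follows C dT/dt = F - lambda * T, solved analytically.
    """
    t = times[:, None]
    temps = (forcing / LAMBDAS) * (1.0 - np.exp(-LAMBDAS * t / HEAT_CAPS))
    net_flux = forcing - LAMBDAS * temps
    return temps.mean(axis=1), net_flux.mean(axis=1)

times = np.linspace(0.0, 500.0, 5001)   # years
mean_t, mean_n = aggregate_response(3.7, times)
mean_t_2x, _ = aggregate_response(7.4, times)

# Local gradient dN/dT of the aggregate flux-temperature curve.
slopes = np.gradient(mean_n, mean_t)
early_slope, late_slope = slopes[1], slopes[-2]

print(f"early gradient: {early_slope:.2f} W/m^2/K")
print(f"late gradient:  {late_slope:.2f} W/m^2/K")
print(f"final warming ratio for doubled forcing: {mean_t_2x[-1] / mean_t[-1]:.3f}")
```

Every region here is linear and stable, yet the aggregate curve is steep early (dominated by the fast, strongly damped region) and shallow late (dominated by the slow, weakly damped one). The final warming still scales linearly with the forcing, which is the point being made above.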

And either way you look at it

The Arctic,

our down to earth physical “climate sensitivity” monitor,

is melting away like a pile of sugar in a rain storm.

In other words, these models and haggling over fractions and fine tuning are fine for books – but in the world we live in, we know that our injections of massive amounts of greenhouse gases, above and beyond the background flux, have had massive impacts on our cryosphere, air and jet stream circulation pattern disruption and enhanced the state of the climate to produce an increasing tempo of extraordinary.

We know we are driving our climate to conditions no human, let alone a complex society has ever confronted… yet, folks like Mr. McIntyre keep fiddling away and doing all they can to distract from the real issue we should be facing.

so sad…

For those unfamiliar, the consistently Off-topic “Citizen’s Challenge” has one of the most obscure and shallow “Alarmist” blogs. His most recent post there recycles every erroneous RealClimate attack on McIntyre and McKitrick, even citing pre-2006 articles at RC as though they are accurate and comprehensive.

Thanks Skip, I had not realised what this character’s game was.

Sounds more like a Challenged Citizen than a Citizen’s Challenge.

“Steve McIntyre: done. Blog policy asks readers not to try to prove or disprove AGW as O/T arguments in unrelated threads. I generally allow this sort of rant to be posted, but appreciate it when readers take the same attitude as you.”

~ ~ ~

Right, keep looking at inconsequential details in obsessive micro-focus – while pretending to do science with feigned superiority.

When actual science is about culling the good information and constructively learning from that information and moving forward.

You, on the other hand, are dedicated to avoiding the real issues and what we know for certain and learning from that – but then inaction is your objective.

~ ~ ~ ~ ~ ~ ~

AJ writes: “http://climategrog.wordpress.com/?attachment_id=421

If you think that, like Steve McIntyre, I’m just trying to distract you, you have the data source on the graph; go and plot it yourself.

This is not about haggling over fine tuning, it is about models that have got it totally wrong.”

~ ~ ~

What a wonderful example of “science in a vacuum”

“Extent” like you don’t know the difference between extent and “volume”

What about this graph:

And that’s not even touching what’s happening on Greenland!

~ ~ ~

Explain to me again why you folks feel so superior???

Steve: You say: “When actual science is about culling the good information and constructively learning from that information and moving forward.” I agree with this. In order to “cull the good information”, one also has to identify “bad information”. In the area of proxy reconstructions, I hope to have done so.

Oops, was referring to this graph:

“Greg Goodman

Posted Jul 27, 2013 at 1:57 AM

We have 30 years of DAILY ice data. Now since you seem to think it’s melting away like sugar, this should be evident in the rate of change, shouldn’t it?

If you think that, like Steve McIntyre, I’m just trying to distract you, you have the data source on the graph; go and plot it yourself.

This is not about haggling over fine tuning, it is about models that have got it totally wrong.”

~ ~ ~ ~ ~ ~ ~

Extent? volume? What about this reality? :