Figure 1.4 of the Second Order Draft clearly showed the discrepancy between models and observations, though IPCC’s covering text reported otherwise. I discussed this in a post leading up to the IPCC Report, citing Ross McKitrick’s article in National Post and Reiner Grundmann’s post at Klimazwiebel. Needless to say, this diagram did not survive. Instead, IPCC replaced the damning (but accurate) diagram with a new diagram in which the inconsistency has been disappeared.

Here is Figure 1.4 of the Second Order Draft, showing post-AR4 observations outside the envelope of projections from the earlier IPCC assessment reports (see previous discussion here).

Figure 1. Second Order Draft Figure 1.4. Yellow arrows show digitization of cited Figure 10.26 of AR4.

Now here is the replacement graphic in the Approved Draft: this time, observed values are no longer outside the projection envelopes from the earlier reports. IPCC described it as follows:

Even though the projections from the models were never intended to be predictions over such a short time scale, the observations through 2012 generally fall within the projections made in all past assessments.

Figure 2. Approved Version Figure 1.4

So how’d the observations move from outside the envelope to inside the envelope? It will take a little time to reconstruct the movements of the pea.

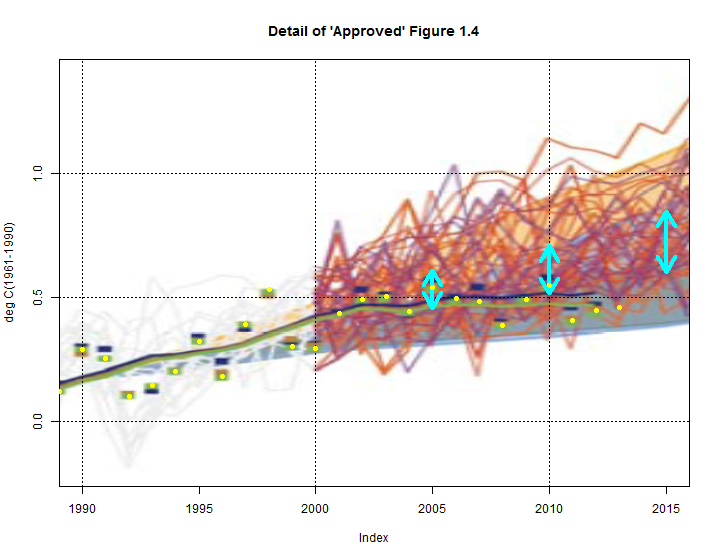

In the next figure, I’ve shown a blow-up of the new Figure 1.4 to a comparable timescale (1990-2015) as the Second Draft version. The scale of the Second Draft showed the discrepancy between models and observations much more clearly. I do not believe that IPCC’s decision to use a more obscure scale was accidental.

Figure 3. Detail of Figure 1.4 with annotation. Yellow dots: HadCRUT4 annual (including YTD 2013).

First and most obviously, the envelope of AR4 projections is completely different in the new graphic. The Second Draft had described the source of the envelopes as follows:

The coloured shading shows the projected range of global annual mean near surface temperature change from 1990 to 2015 for models used in FAR (Scenario D and business-as-usual), SAR (IS92c/1.5 and IS92e/4.5), TAR (full range of TAR Figure 9.13(b) based on the GFDL_R15_a and DOE PCM parameter settings), and AR4 (A1B and A1T). …

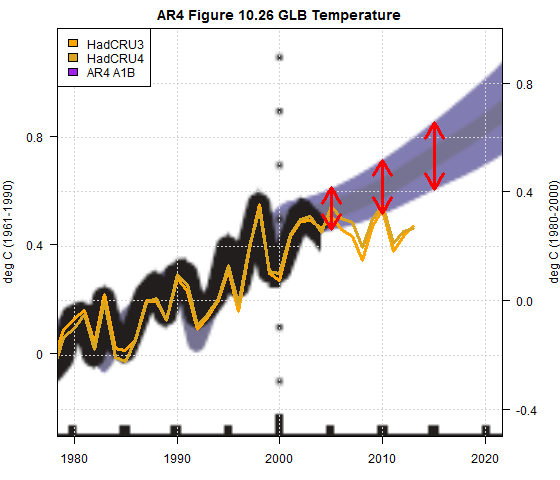

The [AR4] data used was obtained from Figure 10.26 in Chapter 10 of AR4 (provided by Malte Meinshausen). Annual means are used. The upper bound is given by the A1T scenario, the lower bound by the A1B scenario.

The envelope in the Second Draft figure can indeed be derived from AR4 Figure 10.26. In the next figure, I’ve shown the original panel of Figure 10.26 with observations overplotted, clearly showing the discrepancy. I’ve also shown the 2005, 2010 and 2015 envelope with red arrows (which I’ve transposed to other diagrams for reference). That observations fall outside the projection envelope of the AR4 figure is obvious.

The new IPCC graphic no longer cites an AR4 figure. Instead of the envelope presented in AR4, they now show a spaghetti graph of CMIP3 runs, of which they state:

For the AR4 results are presented as single model runs of the CMIP3 ensemble for the historical period from 1950 to 2000 (light grey lines) and for three scenarios (A2, A1B and B1) from 2001 to 2035. The bars at the right hand side of the graph show the full range given for 2035 for each assessment report. For the three SRES scenarios the bars show the CMIP3 ensemble mean and the likely range given by -40% to +60% of the mean as assessed in Meehl et al. (2007). The publication years of the assessment reports are shown. See Appendix 1.A for details on the data and calculations used to create this figure…

The temperature projections of the AR4 are presented for three SRES scenarios: B1, A1B and A2.

Annual mean anomalies relative to 1961–1990 of the individual CMIP3 ensemble simulations (as used in AR4 SPM Figure SPM5) are shown. One outlier has been eliminated based on the advice of the model developers because of the model drift that leads to an unrealistic temperature evolution. As assessed by Meehl et al. (2007), the likely-range for the temperature change is given by the ensemble mean temperature change +60% and –40% of the ensemble mean temperature change. Note that in the AR4 the uncertainty range was explicitly estimated for the end of the 21st century results. Here, it is shown for 2035. The time dependence of this range has been assessed in Knutti et al. (2008). The relative uncertainty is approximately constant over time in all estimates from different sources, except for the very early decades when natural variability is being considered (see Figure 3 in Knutti et al., 2008).
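To make the quoted "–40% to +60%" rule concrete, here is a minimal sketch of the arithmetic. The function name `likely_range` and the input value are my own illustrative assumptions; this is not IPCC code and the number is not an IPCC figure.

```python
def likely_range(ensemble_mean_change, lower_frac=0.40, upper_frac=0.60):
    """Return (low, high) for a 'likely range' defined as the ensemble-mean
    change minus 40% and plus 60% of that same change."""
    low = ensemble_mean_change * (1.0 - lower_frac)
    high = ensemble_mean_change * (1.0 + upper_frac)
    return low, high


# Hypothetical ensemble-mean warming in degC, chosen only for illustration.
low, high = likely_range(1.0)
print(low, high)
```

Because the range is defined as a fraction of the mean change, it scales with the mean and is asymmetric about it by construction.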

For the envelopes from the first three assessments, although they cite the same sources as the predecessor Second Draft Figure 1.4, the earlier projections have been shifted downwards relative to observations, so that the observations are now within the earlier projection envelopes. You can see this relatively clearly with the Second Assessment Report envelope: compare the two versions. At present, I have no idea how they purport to justify this.

None of this portion of the IPCC assessment is drawn from peer-reviewed material. Nor is it consistent with the documents sent to external reviewers.

249 Comments

No need for peer-reviewing!

Just make the models colder!

But there is trouble brewing:

Their acts are trending bolder

They hoped you’d find this slower

Your research here is nifty

Their morals have slid lower

And like their lines, are shifty

===|==============/ Keith DeHavelle

Thanks Steve for exposing another logical fallacy:

Moving the Goalposts (Shifting sands; raising the bar; running for cover)

Humbug!: The Skeptic’s Field Guide to Spotting Fallacies in Thinking, Theo Clark, 2005 p101

“The goalie finally scores . . .and moves the goalposts. Goalie “Yes! I scored! . . .Granted, its an own goal, but as far as I’m concerned, a goal is a goal.”

On page 1-34 in the AR5-WG1 Final Draft, the report states how they modified the plot in Figure 1.4:

“For FAR, SAR and TAR, the projections have been harmonized to match the average of the three smoothed observational datasets at 1990.”

They simply moved the projections to match observational data at 1990 and get a better fit. It is basically an arbitrary change with, as you say, no justification. Amazing.

Steve: It looks like something else to me. In the Second Draft, they stated “Values are aligned to match the average observed value at 1990” – which sounds like a similar operation. This doesn’t explain why the placement is different in the final version.

How Inconvenient.

Very crafty! By continually doing this they can hope that their faulty graphs will always appear to show an upward acceleration of temperature that is shortly about to occur. This way they can never be proved wrong and their cash flow will remain intact, as the catastrophe will always be but a few years into the future.

You’re seldom wrong when you back date your forecasts.

I think it is because they have renormalized all the prediction curves down so that they now sit on their wiggly smoothed data trend. The normalization point of 1990 is now sat nicely on one of the down wiggles of their fit instead of sitting on the actual measured anomaly for 1990. As a result all the predictions have conveniently shifted downwards by at least 0.15C. This then allows all TAR predictions to nicely envelope the data. Phew !

I guess this renormalization process could continue ad infinitum into the future !
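The effect described in the comment above can be sketched in a few lines. Everything here is an assumption for illustration: the numbers are synthetic, the centered mean is a crude stand-in for whatever smoothing IPCC actually used, and the helper names `centered_mean` and `align` are hypothetical.

```python
def centered_mean(series, year, half_width):
    """Mean of the values within half_width years of `year` (a crude smoother)."""
    window = [v for y, v in series.items() if abs(y - year) <= half_width]
    return sum(window) / len(window)


def align(projection, target_value, ref_year):
    """Shift a projection so that it equals target_value at ref_year."""
    offset = target_value - projection[ref_year]
    return {y: v + offset for y, v in projection.items()}


# Synthetic observations in which 1990 sits above its neighbours.
obs = {1987: 0.20, 1988: 0.22, 1989: 0.18, 1990: 0.35,
       1991: 0.24, 1992: 0.15, 1993: 0.20}
# Synthetic projection, expressed as change from 1990.
proj = {1990: 0.0, 2000: 0.2, 2010: 0.4}

raw_aligned = align(proj, obs[1990], 1990)                       # pinned to the 1990 value
smooth_aligned = align(proj, centered_mean(obs, 1990, 3), 1990)  # pinned to the smoothed value

# The whole projection moves by the gap between the raw and smoothed 1990 values.
shift = raw_aligned[2010] - smooth_aligned[2010]
print(round(shift, 3))
```

The shape of the projection is untouched in both cases; only its vertical position relative to the observations changes, which is exactly what decides whether later observations land inside or outside the envelope.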

No one renormalized anything. Figure 1 isn’t from IPCC. The Daily Mail tabloid newspaper created this and claimed that it’s IPCC’s prediction, but that was a fake.

Figure 1 is from the IPCC’s Technical Summary of the first order draft for AR5, page TS-79:

TechnicalSummary_WG1AR5-TS_FOD_All_Final.pdf

The full draft is here:

http://www.stopgreensuicide.com/

Astounding. The ‘explanation’ is clear, but only in its intention to confound and blur.

This should be publicised. Cannot be dismissed as ‘whoops, just one mistake in a very big report’ ( like Himalaya 2035).

I was looking for some sign of humor in the IPCC text, like ‘fooled you with the first diagram’.

But they are playing for the tragic end…

That kind of action can only be explained by Groupthink, no sane mind would do this and not want to sink into the ground ashamed by the unexplainable embarrassment.

Some named person, or persons, created that graph.

It’s HIDE THE DECLINE all over again.

Crafty switch in presentation on their part. They must have taken a lot of heat for the original version, which had been all over the blogosphere as showing the data outside the range of the models.

By switching from “Values are aligned to match the average observed value at 1990” to using “Annual mean anomalies relative to 1961–1990 of the individual CMIP3 ensemble simulations” they’ve simply widened the spread of the models to encompass the actual data.

Or another way to look at it, if we assume the focal point of the base year period is its midpoint (about 1975/76), they’ve shifted the focal point back 14/15 years.

“They must have taken a lot of heat for the original version, which had been all over the blogosphere as showing the data outside the range of the models.”

We’re very lucky those earlier drafts were leaked–the impact of their casting a light on the IPCC’s shiftiness could be profound, maybe eventually rivaling climategate, especially if the IPCC releases (or someone leaks) a document showing reviewers’ comments and author’s gatekeeping responses, and if someone who attended the SPM session leaks a few tidbits.

The comment by rogerknights in his post above is part of my take away from these revelations:

(1) The earlier suggestions at these blogs about revealing to the public at all stages of the IPCC AR5 process are validated. Those revelations allow the interested, and I would hope informed, observer to see how the process “works”.

(2) In this case we can readily see another of many examples that the IPCC process is biased towards spinning the evidence toward immediate government action on AGW.

(3) The time has probably come where blogs and other interested groups take the information reviewed and presented by the IPCC and provide their own conclusions and completely ignore those reported by the IPCC.

this is a brilliant suggestion

Which I labelled as shape-shifting, irony intended

The IPCC should hold a masterclass in “How to draw a complex-looking graph so as to reveal absolutely nothing”. It looks like somebody gave a 3-year-old graph paper and a box of crayons. Astounding.

As an engineer, when I present a graph, the intention is to give as much information as possible to the reader without ambiguity and without excessive clutter. This is the antithesis.

That’s the first thing I too thought looking at that graph. ‘someone is trying to not communicate something here’

I laughed. For all the world, it looks like the spiteful scribblings out of a child who couldn’t get his original effort to go as willed! It kinda says “Bah, who needs science anyway?” Iconic.

I admit I wasn’t laughing as I waded into the adjusted spaghetti rather late. But the ‘Iconic’ got me. Thanks.

I’d take away his Crayolas and ban his foolscap drawing from my refrigerator door.

It gives a new meaning to ‘red noise’ in the climate context.

I have no idea what “harmonized” means in this context. Is this a real thing, and if so, could someone define it for the slower students?

Regardless of what this actually means, it appears obvious that if you change your projections so that they wrap around the means of the observations, then the observations will be somewhere in the middle of the projections. But all this means is that the original projections were plotted wrong somehow, if for no other reason than that they needed harmonization to match observations… that hadn’t yet occurred?

How do I go about becoming a climate scientist? It appears to be an ideal job, requiring perhaps only some serious study of Lewis Carroll. And maybe a hookah.

Btw, Keith, huge admirer of your poetry. 🙂

Hmm. I should’ve read further. My above comment doesn’t explain the difference from the current version of Figure 1.4 in AR5 and Figure 10.26 from AR4.

This is a masterclass in the art of beclouding,

pesadia,

Did you mean beclowning?

They are just buying themselves more time to further their politicised objectives. But the end is inevitable. If the media continues to ignore this failure of the models and malfeasance by the IPCC, non climate scientists need to speak up, else they will also suffer when the backlash comes.

Imagine if whoever drafted SOD figure 1.4 had chosen to align all the climate model output to 1989. Would you still be calling the figure “damning (but accurate)” or berating the drafter for cherry-picking a cold year for the alignment?

It should be rather obvious why aligning the model output to a single year is not sensible, so why the outrage when the IPCC ditches a poorly thought through figure?

Others will comment on the technical changes, but surely one issue with the new graph is that it was not shown to the external reviewers?

How many rounds of peer-review would you have the IPCC do?

If you will not accept any changes to the report that are not peer-reviewed, then after every edit the manuscript has to be sent back to the reviewers, who will doubtless suggest further changes, requiring more edits and yet another round of review. An infinite loop. This is plainly crazy, and is not how peer-review works in journals.

The data behind the SOD figure 1.4 and the final figure 1.4 are the same. The new figure is simply a different way of visualising the data.

Aren’t you confusing peer-review and IPCC review processes? IPCC AR is supposed to rely solely on peer-reviewed literature (what, as claimed, is not the case with this graph) and all that is written in the AR is supposed to be subject to external review process by reviewers (that as well, as claimed, has not been the case with this graph).

The claim by Steve:

“None of this portion of the IPCC assessment is drawn from peer-reviewed material. Nor is it consistent with the documents sent to external reviewers.”

Richard, this is EXACTLY how peer review works in journals. Every time you change a manuscript it has to go back to the reviewers. Once it’s been accepted you cannot change anything (except fixing minor typos). If you do propose a major change to a figure that alters a finding on a topic of central importance to your paper, it absolutely has to go out to the reviewers. Your excuse here is nonsense.

If the IPCC wants to use a system in which, time after time, key material is only inserted (or removed) after the close of peer review, then it should stop bragging about its peer review process. If it wants to boast of a rigorous peer review process then it should adopt one. After all, it was the IPCC’s self-proclaimed rigor of its peer review process that US courts and the EPA relied on when accepting IPCC findings for policymaking purpose.

Maybe your manuscripts need to be checked by the reviewers after every change, but in general a manuscript is only sent for re-review if major revisions were required.

I suppose that you would like the IPCC to do an infinite number of rounds of peer review. Nicely tying up active scientists while you write your joke NIPCC report.

richard telford:

So you’re arguing that it’s okay to completely modify figures and not let the other authors see them before publication. That’s an amazing argument.

Of course the other authors would see the new figures before publication. What made you think otherwise?

Ross McKitrick:

Not quite true. Changes in a manuscript in response to a peer reviewer do not require re-review.

This is a different thing. It’s a substantively new figure. I would doubt an editor would allow it to appear in a journal without re-review. Given the collaborative nature of the reviews of AR5, not allowing the other authors to review is a breach of trust and ethics, in my opinion.

I should have said “do not *necessarily* require”. Whether a new peer review is needed depends on how substantive the changes are, and of course the editors make the final call on that.

richard telford:

Are you factually stating that all other authors of this chapter had the opportunity to review this figure?

And by “all” I mean “every other author” not just the inner circle.

Richard, I have explained in detail how I think the IPCC should structure its review process in my GWPF report here: http://www.rossmckitrick.com/uploads/4/8/0/8/4808045/mckitrick-ipcc_reforms.pdf

It wouldn’t involve tying up the IPCC authors in an infinite number of revisions. But it would block the IPCC Authors from continuing to claim that the final report has been peer reviewed even when conclusions depend on material inserted (or deleted) after the close of the review process.

“How many rounds of peer-review would you have the IPCC do?”

well

As many as O’Donnell’s paper on the Antarctic, or maybe as many as our paper which merely confirmed what the reviewers themselves had published.

In short.

The final draft should get a review.

“The data behind the SOD figure 1.4 and the final figure 1.4 are the same. The new figure is simply a different way of visualising the data.”

That’s testable, Richard. Do you have the data.. the data they actually used, or will folks have to digitize shit?

Somebody made the charts. They have the data.

Steve: For the SAR envelope, I am convinced that IPCC applied the Tamino bodge. As others have observed, while Tamino’s centering method may well make more sense than choosing 1990 as a reference date, AR2 authors said that they used 1990 as a reference point. There’s a bigger problem lurking here, as Tamino misdescribed the purpose of the AR2 diagram and IPCC seems to have too quickly adopted Tamino’s misreading – without external peer review. On the AR4 envelope, Richard Telford’s assertion is not accurate: the Second Draft envelope was taken from AR4 Figure 10.26, while the Government Draft envelope is an interpretation of what they think AR4 ought to have done.

CMIP5 models are available to download and process. Tedious, though not too difficult, but you need a lot of disk space. Hopefully the data shown in each figure will be released as I suggested after AR4.

Richard

“CMIP5 models are available to download and process. Tedious, though not too difficult, but you need a lot of disk space. Hopefully the data shown in each figure will be released as I suggested after AR4.”

Well you have a problem there, since I have tried to download the data.

1. Do you know what models and what runs they used for each chart?

2. Are you aware of the changes that have been made over time?

3. Did they sample the GCMs in a masked fashion, i.e. only where HadCRU had gridcells?

4. How did they transform the Gaussian grids?

5. Did they plot the data correctly?

It would be easy to supply the actual time series used to create the chart.

That would facilitate checking.

Now of course you could have said.. “well, get a computer and re-run the models”

Bottomline, if you want folks to believe the charts, supply the data.

I’m under no rational obligation to believe pixels on a page.

You are under no obligation to believe anything (except pixies). While supplying the data plotted in the graph would be useful, I don’t think it would materially affect your inclination to believe the figure.

If you want to audit the calculation of area-weighted mean temperature from the model output, go ahead. But you must be desperate to think you would find a fatal flaw in AR5 there.
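For readers wondering what is involved in an area-weighted mean, and why the masking question raised earlier in this exchange matters, here is a minimal sketch. It assumes a regular latitude-longitude grid with cos(latitude) weights; a Gaussian grid would need its own weights, and none of this is taken from the IPCC's actual processing. The function name, grid and mask are hypothetical.

```python
import math


def area_weighted_mean(grid, lats, mask=None):
    """Mean of grid[i][j] weighted by cos(latitude of row i).

    mask[i][j], if given, is True where a cell should be included
    (for example, where the observational dataset has coverage).
    """
    total = 0.0
    weight_sum = 0.0
    for i, lat in enumerate(lats):
        w = math.cos(math.radians(lat))
        for j, value in enumerate(grid[i]):
            if mask is not None and not mask[i][j]:
                continue
            total += w * value
            weight_sum += w
    if weight_sum == 0.0:
        raise ValueError("no unmasked cells to average")
    return total / weight_sum


# Tiny synthetic field: three latitude rows, two longitude columns.
lats = [-60.0, 0.0, 60.0]
field = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
# Hypothetical coverage mask that drops the northern row entirely.
coverage = [[True, True], [True, True], [False, False]]

print(area_weighted_mean(field, lats))
print(area_weighted_mean(field, lats, coverage))
```

The two calls give different answers from the same field, which is why it matters whether model output was sampled only where the observations have coverage.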

Richard Telford, I’m having trouble believing what I’m hearing you say. So far, you have said, 1) there’s nothing wrong with them publishing this without re-submitting it for peer review. 2) there’s no reason why they have to supply the data (or the code) that they used to construct this, and 3) it’s unreasonable to be complaining about this or doubting their word.

Am I understanding you correctly? They should be able to post whatever graph they want, without any information on why it is right or how to check it, and all right-thinking people are supposed to just accept it?

1) The change to the figure was likely made in response to reviewer comments and approved by the review editor. Unless you have an enormous number of review rounds some changes after the reviewers last saw the manuscript are inevitable. The change is minor and obvious. Has anyone any material objections to the new figure, which is using the same data, or is it all faux outrage?

2) The data are available to download and anyone familiar with model output would easily be able to process them to replicate this figure.

3) Complain all you like. It’s a tempest in a tumble that is diverting me from the critical review of the report I’m supposed to be writing.

Alright, I guess I believe it, since you’ve repeated it clearly. But I find it repulsive. Your words are taken directly out of the playbook of the Climategate Team: Don’t tell people how to get the result. Make sure that it’s obscure, by not providing data nor methods. Scoff at them for incompetence when they struggle to reconstruct something that should have been provided as a matter of course, and tell people that it proves that We are the professionals and Those Amateurs obviously don’t have a clue (see Dana’s lovely phrasing). If it turns out that the work is really mistaken or badly done, then say, What is all this faux outrage about that figure, anyhow – we’ve moved on and produced lots of newer better work which anyway gives essentially the same result.

Sorry, no. It’s not faux outrage. This is the kind of stuff that has convinced a decent and growing chunk of the world’s population that climate scientists aren’t scientists. If you care about climate science, instead of trying to cover for them, you should be tarring and feathering them.

Oh, sorry, I forgot: Never admit anything, no matter how obvious. You’re just giving ammunition to the enemy.

RE: Posted Oct 1, 2013 at 8:28 AM

“The new figure is simply a different way of visualising the data.”

And in your view it’s not only a different way but a better way?

Since the draft figure is obviously flawed, of course the new figure, which avoids that flaw, is better.

If the draft figure is obviously flawed – as you tell us – I don’t understand figure TS.14 in the technical summary (p. TS-107).

Can you explain why the observations in the upper graph(s) are going to leave the predicted area of the models?

This graph supports fig. 1.4 of the second order draft.

And I don’t understand why the model graphs should be shifted “downwards” – maybe fitting to a reference year like 1992.

In 1992 the “global” temperature was reduced due to the outbreak of Pinatubo and is, therefore, no suitable reference year.

Furthermore, I don’t understand the discussion about a suitable reference year.

If the models used by the IPCC are a good representation of the observed “global” temperature they should perform a good hindcasting.

Fig 1.4 of the final draft starts at ~1950. So – why don’t the model graphs start at 1950, too?

Starting the model graphs at 2000 means to me that their hindcasting skills may be miserable.

Richard claims that “The change is minor and obvious. Has anyone any material objections to the new figure, which is using the same data, or is it all faux outrage?” Whether it is material or not, is unknown.

As wernerkohl points out the shift makes backcasting an issue. The ability of models to backcast adequately to mimic the past was stated in Ch 9 AR4 as one condition necessary to attribute modern warming to anthropogenic influence. The reason given in Ch 9 was that the temperature reconstructions and models are not truly independent.

Another material objection was the use of unmeasured aerosol to help explain the cooler temperatures around 1940. This material objection not only has worsened due to the shift, it has been determined since AR4 that the aerosol parameter used was too negative, thus indicating that this period should run even hotter. The lack of agreement between backcasting and the temperature record is not immaterial to attribution.

Richard, the proper assumption at this time would be that the changes are material and that to support the changes, documentation is needed. Otherwise the claim of the same confidence could not be supported, much less an increase in confidence.

Also note that it is proper that when you redo something, you don’t just redo it to make it look like you wish, you redo it to a standard. If they are doing it to make the models look better for forecasting and it worsens the backcasting, it IS material and if true, it is a failure. The explanations, graphs and reasoning should be removed or changed to be correct. Otherwise the claim of it being the best science at present is falsified.

Yes Richard, but this was a major revision.

The nub of SMc’s comment is the statement

=========================

None of this portion of the IPCC assessment is drawn from peer-reviewed material. Nor is it consistent with the documents sent to external reviewers.

=========================

Richard Telford’s comment does not address this issue. If, as SMC notes, the new AR5 figure has no basis in peer-reviewed material and if it was not vetted by the reviewers then why was it published? Does SMc misapprehend the figure?

On a recent thread, I asked for a reference to help determine the relative offsets. Richard Telford claims there is an obvious reason why the whole endeavor is a bad idea; implying that each model should be aligned separately against … what?

The obvious thing to do is to make the offsets relative to the 1961-1990 climate normal period, or some other period if more appropriate. Picking a single year will end in tears.
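A minimal sketch of what offsetting relative to a climate normal period looks like, as opposed to pinning everything to one year. The series below are synthetic straight lines, not model output or observations, and the helper name `rebaseline` is my own.

```python
def rebaseline(series, start, end):
    """Express a {year: value} series as anomalies from its own mean over start..end."""
    base = [v for y, v in series.items() if start <= y <= end]
    if not base:
        raise ValueError("series has no values inside the baseline period")
    base_mean = sum(base) / len(base)
    return {y: v - base_mean for y, v in series.items()}


# Synthetic absolute temperatures: the "model" runs warmer and warms faster.
model_run = {y: 14.0 + 0.020 * (y - 1961) for y in range(1961, 2013)}
observed = {y: 13.6 + 0.015 * (y - 1961) for y in range(1961, 2013)}

model_anom = rebaseline(model_run, 1961, 1990)
obs_anom = rebaseline(observed, 1961, 1990)

# After rebaselining, the constant bias is gone; only the trend difference remains.
gap_2012 = model_anom[2012] - obs_anom[2012]
print(round(gap_2012, 4))
```

Averaging over thirty years means no single warm or cold year sets the offset, but the result still depends on which period is chosen, so the choice needs to be stated and justified.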

Aren’t GCMs basically systems with initial value boundary conditions set? If so, then the question is “what did the model use as a baseline?” If it used the data in 1990, then that’s what it should be referenced against. If it used the average from 1960-1990, then that’s what it should be referenced against.

If it used the 1990 data as its starting point, then you can’t go back and plot it against the 1960-1990 average just to shift the plot. You’d have to go back and re-run the model at the new condition.

Richard Telford at Oct 1, 2013 at 4:33 AM:

“It should be rather obvious why aligning the model output to a single year is not sensible, so why the outrage when the IPCC ditches a poorly thought through figure?”

To complete a thought:

It should be rather obvious why aligning the model output to a single year is not sensible, so why the outrage when the IPCC ditches a poorly thought through figure [and replaces it with the Flying Spaghetti Monster]?

And replaces it with an even more poorly thought-through Figure, one which doesn’t honour earlier data

So, no problem then

What are you on about?

That’s my question

The earlier data I refer to are the model outputs from the 1960 period (your preferred baseline)

If one shape-shifts the baseline to honour earlier model output, these earlier outputs fail the error bars post 1998. If the shape-shift is moved to 1990 to honour later model outputs, the earlier outputs fail the error bars

So, no problem then

Shape-shifting? I don’t know any shape-shifters. And your figure does not illustrate your argument.

Richard Telford: It is clear from AR4 Figure 10.26 (Steve’s Figure 4), that the A1B models predicted a slightly accelerating warming of 0.2 degC/decade over 2000-2020 period – a rate of warming which is very similar to that observed during the 1980-2000 period. You (and apparently the authors of AR5) are arguing that the y-intercept used in this figure from AR4 was inappropriate:

1) If the authors of AR5 believed that the authors of AR4 made a mistake, they should have clearly explained the earlier mistake. They should have added observations to AR4 Figure 10.26 and side-by-side shown what they now believe is a more-appropriate graph. By not openly and honestly confronting the contradiction between AR4 projections and subsequent observations, the authors of AR5 have created confusion for policymakers and the public. Skeptics can legitimately claim that the AR4 projections were wrong and others can claim that they were correct.

2) You may be correct in asserting that the 1990 start year was unusually warm, but it is also possible that it simply LOOKS warm because it occurred between two periods of volcanic cooling. Uncertainties associated with transient volcanic aerosols and decreasing anthropogenic aerosols confound the 1961-1990 baseline period for models.

3) The real issue is the SLOPE (rate of warming), not the y-intercept! You know (or should know) that few, if any, of the model runs in the “spaghetti” graph contain a 15-year period with negligible warming. As you also (should) know, Fyfe (2013) found that it is “extremely likely” (p<0.05) that the warming of the past 20 years has been overestimated by models. So why are you and the IPCC attempting to obscure this simple fact with spaghetti graphs with adjusted y-intercepts? It's indefensible!

4) Suppose you were the editor of a journal that had received a paper discussing observations vs the authors' earlier model projections. In their first submission (AR5 FOD), the authors include a graph that mis-located their projections from their previous paper (AR4 Figure 10.26), presumably by using the wrong reference period for calculating anomalies. As discussed in Steve's previous post, this mistake was detected by a reviewer. The authors revised their graph and also added an additional region of uncertainty that wasn't present in their earlier publication. During a second round of peer review, a reviewer notes that the revised graph shows that observations are now inconsistent with projections, contradicting the paper's conclusion. The authors revise their paper again, changing their projections for a third time (since they were originally published in AR4). They replace easily-understood confidence intervals with the "spaghetti" output from individual model runs AND add runs from additional emissions scenarios. Wouldn't you insist that the paper contain a full explanation of these changes and send that explanation out for another round of peer-review? Or would you simply reject such subterfuge?
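Point 3 in the list above, that the slope and not the y-intercept is what matters, can be checked with an ordinary least-squares trend. This is a sketch with synthetic numbers; the function name `trend_per_decade` and the 0.15 offset are illustrative assumptions, not values taken from any IPCC figure.

```python
def trend_per_decade(years, values):
    """Ordinary least-squares slope of values against years, in units per decade."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    return 10.0 * sxy / sxx


years = list(range(2000, 2021))
projection = [0.02 * (y - 2000) for y in years]   # synthetic 0.2 degC/decade line
shifted = [v - 0.15 for v in projection]          # same line moved down by a constant

print(round(trend_per_decade(years, projection), 6))
print(round(trend_per_decade(years, shifted), 6))
```

A constant vertical shift leaves the fitted trend unchanged, so re-centering the projections cannot by itself reconcile a difference in warming rate between models and observations.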

+1

don’t expect an answer from Dr Telford anytime soon…until he consults the politburo

Frank,

Yours is the best summary I’ve seen of Dr. Telford’s defective reasoning. Point 1) is particularly telling. The essence of a scientific advance is being able to make significant theoretical predictions that are confirmed by experiment. If predictions fail the test of observation, that is a centrally important, if not fatal, matter.

You don’t get to simply go back and fudge your error envelope retrospectively to get agreement. Certainly if you do that sort of adjustment you had damn well better have some good reasons for it. You are clearly obliged to answer the question: If the convenient, newly adjusted error bars are the correct ones, why didn’t you use those back when you first made the prediction?

Under the circumstances is it just nitpicking, or unduly suspicious, to point out that the set of observations which runs colder in the last decade or so has been shown in a relatively pale shade of green?

First graph shows current observed temps at the 0.4 anomaly. Second graph at the 0.5 anomaly. 0.1 degrees of warming has been created out of nothing.

According to environmental scientist Dana Nuccitelli, the “IPCC models have been accurate.”

Apparently climate contrarians are guilty of one of the following if they believe otherwise:

1) Publicizing the flawed draft IPCC model-data comparison figure

2) Ignoring the range of model simulations

3) Cherry Picking

See http://tinyurl.com/o4qn6do

Why push the outside of the envelope when you can push the entire envelope?

SkS says not to worry, IPCC model global warming projections have done much better than you think

http://skepticalscience.com/ipcc-model-gw-projections-done-better-than-you-think.html#.UkpVR8JIOhg.twitter

Steve, brilliant post by the way

I see they included the B2 emissions scenario, just to give a few more projections on the low side. In real life we’re right up there, see http://www.carbonbrief.org/blog/2011/06/iea-and-ipcc-temperature-projections

Stefan Rahmstorf from the PIK (http://www.pik-potsdam.de/) insists in his German blog that Figure 1.4 in the Second Order Draft contained an error:

http://www.scilogs.de/wblogs/blog/klimalounge/klimadaten/2013-09-27/der-neue-ipcc-klimabericht#comment-48839

He writes that the observed temperatures lie within the projected range of climate models. And he refers to Grant Foster who – according to Rahmstorf – had shown this error.

Could you comment on this, please?

Thanks!

The SKS link Judy refers to above explains what Stefan is talking about. Apparently now the deal is picking which year you are aligning the values to. If it’s 1990, you get inside-the-projections values, if it’s a different year, you get outside-the-projections values. Each side accuses the other of cherry picking if you choose a year that shows a particular conclusion.

I personally think this notion of realignment is relatively new as it relates to the IPCC imagery. Everybody had all been going on the same maps for a while. Is Grant’s website cited for the changes when it comes to the new IPCC graphs? I wonder if he’ll be asking for such credit or if he’s just happy to help.

“The flaw is this: all the series (both projections and observations) are aligned at 1990. But observations include random year-to-year fluctuations, whereas the projections do not because the average of multiple models averages those out … the projections should be aligned to the value due to the existing trend in observations at 1990.”

From SkS site on Foster’s comment

Harmonise – don’t let observations charm your eyes

Let all those spaghetti graphs calm your eyes

.. and just harmonise, harmonise, harmonise

(only be sure to call it peer reviewed.)

(with apologies to Tom Lehrer)

Does AR5 have a millennium graph a la hockey stick? I can’t find it.

Seems that AR4 Figure SPM.5 would be even more telling, particularly in light of actual CO2 emissions:

First, our Skeptical Science friends showed that CO2 is following an almost worst-case scenario:

Then here’s AR4’s prediction of the warming that results:

Figure SPM.5. Solid lines are multi-model global averages of surface warming (relative to 1980–1999) for the scenarios A2, A1B and B1, shown as continuations of the 20th century simulations. Shading denotes the ±1 standard deviation range of individual model annual averages. The orange line is for the experiment where concentrations were held constant at year 2000 values. The grey bars at right indicate the best estimate (solid line within each bar) and the likely range assessed for the six SRES marker scenarios. The assessment of the best estimate and likely ranges in the grey bars includes the AOGCMs in the left part of the figure, as well as results from a hierarchy of independent models and observational constraints. {Figures 10.4 and 10.29}

Sure looks to me like the actual warming is following the orange “constant 2000 concentration” level. Hmmm.

I’m glad I’m not the only one who noticed this bait and switch game: actual CO2 has followed the “worst case” scenario, but actual warming has followed the “no additional CO2 at all” prediction. The models were falsified years ago if that is taken into account.

No matter how much you push the envelope, it is still stationary [sic, intentionally].

I think DGH’s link to Skeptical Science gives the explanation of what they did;

See http://tinyurl.com/o4qn6do

Also reprinted in The Guardian.

The key words are here:

“The first version of the graph had some flaws, including a significant one immediately noted by statistician and climate blogger Tamino:

“The flaw is this: all the series (both projections and observations) are aligned at 1990. But observations include random year-to-year fluctuations, whereas the projections do not because the average of multiple models averages those out … the projections should be aligned to the value due to the existing trend in observations at 1990.

Aligning the projections with a single extra-hot year makes the projections seem too hot, so observations are too cool by comparison.”

Thus, instead of starting the projections at the 1990 temperature value (around 0.3), they started them at an ‘average’ temperature for that period (eg. a five-year mean centred on 1990, approx 0.2). This brings all the projections down by about 0.1 degC. Removing the error bars also helps. This does not seem enough on its own, but is at least a big part of the adjustment.
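The size of that shift is easy to check with a toy calculation. The sketch below is only an illustration: the anomaly values are made up so that 1990 is a warm spike (they are not HadCRUT or any other real data set), and `alignment_offset` is a hypothetical helper name. It compares the single-year 1990 value with a five-year mean centred on 1990.

```python
import numpy as np

def alignment_offset(years, obs, anchor_year=1990, half_window=2):
    """Single-year value at anchor_year minus the centred window mean."""
    years = np.asarray(years)
    obs = np.asarray(obs, dtype=float)
    single = obs[years == anchor_year][0]
    in_window = np.abs(years - anchor_year) <= half_window
    return single - obs[in_window].mean()

# Made-up anomalies (deg C) with a warm 1990; not real observations.
years = np.arange(1986, 1995)
obs = np.array([0.15, 0.18, 0.20, 0.15, 0.30, 0.25, 0.10, 0.12, 0.18])

offset = alignment_offset(years, obs)
print(round(offset, 2))  # how far the projections drop if re-anchored to the window mean
```

With these invented numbers the single-year value is 0.30 and the window mean is 0.20, so re-anchoring lowers every projection by the same constant.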

What I found telling about Dana’s post is the fact that his excuse for the original error was that it was contained in an early draft. Ooops. On the other hand he argues that this plot – which has just now become public and has not been peer reviewed – is correct and forms the basis of the statement, “IPCC models have been accurate.”

Indeed we’re supposed to believe that the IPCC should be excused for an error and simultaneously believe that the organization is infallible.

Regarding your Figure 4, it’s even worse than that. Seeing that HadCRUt3 contains an artificial step change of about 0.064C (based on the fact that HadSST2 shifted up suddenly and permanently by ~0.09C relative to all other global SST datasets across the transition from 1997 to 1998, when Hadley Centre switched data sources for their product) that has never been addressed, much less amended (rather covered up when presenting their new and ‘improved’ HadCRUt4), the actual HadCRUt3 graph should compare rather like this with HadCRUt4 shown here:

http://woodfortrees.org/plot/hadcrut3gl/from:1978/to:1998/compress:12/plot/hadcrut3gl/from:1998/offset:-0.064/compress:12/plot/hadcrut4gl/from:1978/offset:-0.01/compress:12

Playing with the starting value only determines whether the models and observations will appear to agree best in the early, middle or late portion of the graph. It doesn’t affect the discrepancy of trends, which is the main issue here. The trend discrepancy was quite visible in the 2nd draft Figure 1.4. All they have succeeded in doing with the revised figure is obscuring it.
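This is a basic property of least-squares trends: adding a constant to a series changes its intercept but not its slope. A minimal sketch, using hypothetical straight-line series (the 0.2 and 0.1 C/decade slopes and the 0.1 C shift are assumptions chosen for illustration):

```python
import numpy as np

def trend_per_decade(t, y):
    """OLS slope in units per decade."""
    return 10.0 * np.polyfit(t - t.mean(), y, 1)[0]

years = np.arange(1990, 2013).astype(float)
# Hypothetical series, not real data.
model = 0.020 * (years - 1990)          # 0.2 C/decade
obs = 0.010 * (years - 1990) + 0.05     # 0.1 C/decade

gap_before = trend_per_decade(years, model) - trend_per_decade(years, obs)
# Shift the model series down by 0.1 C, as a re-baselining would.
gap_after = trend_per_decade(years, model - 0.1) - trend_per_decade(years, obs)

print(round(gap_before, 3), round(gap_after, 3))
```

The two gaps are identical, so only where the curves cross moves, not how fast they separate.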

I prefer comparisons of trends like this.

I actually happen to think the AR4 is no better than the figure it was replaced by, and possibly worse because of the way the data series were aligned. My objection to both figures is that you are plotting a projection from a scenario (rather than a forecast based on actual forcing data) on the same graph, as if you were comparing forecast outcome with actual outcome.

What would be an interesting comparison for me would be to show what would happen if you ran e.g. the AR4 models further forward to 2013 (or as recent as is practicable) using the actual forcings, starting with the exact run states of the models at the stopping point of the last simulations.

A good reminder of the best visual.

One can only wonder what else will come to light as the underlying papers are published. Habitual mendacity is so reliable.

Pointman

BTW. Slight typo Steve – Klimazweiberl s/b Klimazwiebel.

The original graphic appeared in Judith Curry’s article in ‘The Australian Newspaper on the 21st of September’ so is on the record. No down the memory hole with it.

Climate science takes the Fifth…. Assessment….

Good lord– in no other field of professional endeavor would such obviously manipulated discrepancies be accepted for a moment. Absence of peer review in sense of replicating findings is a serious omission, virtually admitting that doctored inputs have been illegitimately processed.

As years go by, such asininities are not sustainable. Any so-called researcher (“scientist” is the wrong word) or global “policy maker” [sic] claiming IPCC support is due for rude awakening.

I combined the two plots into an animated gif to be able to see the effect of the changes:

I don’t recall the previous assessments using the “correct” methodology of selecting the common starting point using trends. I am pleased to see that the proper adjustments have now been made to solve this previously unrecognized problem.

Thanks, very helpful.

AC

Why would they include AR4: A1B, A2 and B2 for the points in time where we have data against the actual predictions for the actual scenario (we’ve been roughly following A2)?

Wouldn’t it be clearer to just include AR4[A2] for the period of time that we already have measurements for, then plot their best predictions moving forward with various scenarios as clear bands as was in the draft copy?

The observations hidden within the spaghetti strands look to be only the high end of the ranges displayed before. Especially for the years following 2000 temperature data point placement is higher to apparently vindicate the model runs.

And still, even with the shaded areas (allegedly the 90% confidence predictions) replaced by a spaghetti-graf (with shifted shadings) ..

.. where the spaghetti-lines are unsmoothed to appear presenting a wider range than plotted actual and even filtered smoothed temperatures ..

.. still, the only thing those are showing is that occasionally one spaghetti-line will dip below observations for one point.

And with many spaghetti-lines you can at least create the impression that there are a couple of points falling below observed temperatures. But those points sit on mutually different lines, and each line’s trend is distinctly higher than observations.

Not even with this new trick to conceal the decline can they give any such impression other than optically and superficially!

I just saw that Lucia’s had a very convincing argument (to me at least) on Tamino’s (and IPCC’s) approach.

http://rankexploits.com/musings/2013/leaked-chapter-9-ar5-musings/#comment-119655

“lucia (Comment #119655)

September 24th, 2013 at 8:55 pm

I should add: Also, consistency with Tamino’s notion of picking the 1990 ‘point’ based on the trend line means that he should align the trendline through the models to the trendline for the observations. Instead, he aligns the value of the model mean at 1990 to the trendline for the observations. That’s apples and oranges.

(Moreover, if he aligns trendlines at 1990 for both models and observations, and fits the trend from Jan1980-Dec1990, he will actually match the projections from the AR4. Because that’s the way OLS works!)”
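One general OLS fact that may lie behind this remark: a least-squares line always passes through the point (mean time, mean value) of the data it is fitted to. So the fitted trendline evaluated at the centre of the fitting window equals the window mean, which is the same as baselining to that window. A minimal sketch with synthetic data (the 1980-1999 window, slope and noise level are assumptions for illustration only, not taken from the comment):

```python
import numpy as np

rng = np.random.default_rng(0)
years = np.arange(1980, 2000).astype(float)   # assumed 20-year window
# Synthetic anomalies: linear trend plus noise; not real observations.
series = 0.015 * (years - 1980) + rng.normal(0.0, 0.1, years.size)

# Centre the time axis so the intercept is the fitted value at the mean year.
t = years - years.mean()
slope, fitted_at_mean_year = np.polyfit(t, series, 1)

print(fitted_at_mean_year, series.mean())
```

The two printed numbers agree to rounding error, whatever noise is drawn.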

She has a new post where she makes it still clearer

http://rankexploits.com/musings/2013/taminos-take-on-figure-1-5/

Her figure

is nothing short of hilarious.

I wonder if the von Storch paper is referenced in AR5?

“…we find that the continued warming stagnation over fifteen years, from 1998 -2012, is no longer consistent with model projections even at the 2% confidence level.” http://www.academia.edu/4210419/Can_climate_models_explain_the_recent_stagnation_in_global_warming

Oops, actually I think it was a little too late.

Please note: von Storch’s paper was written and published BEFORE the AR5 SOD was modified.

In the AR4 10.26 GLB Temperature graph, isn’t it funny how the AR4 model follows the temp time series up to a certain point, but then suddenly switches to a smooth upward slope? I’m no math guy, but it seems like they’re going from modeled output, and then tacking on a smoothed trend at the end. Isn’t this like comparing apples to antelopes?

Maybe I’m completely wrong. But going from noisy to smooth seems bogus.

The CMIP3 runs used historical forcings for the 20th century — so e.g. they show a response to Pinatubo and El Chichón — but for the 21st they used projected forcings. See here. The multi-model mean is generally smoother than observed temperatures because the “weather noise” gets averaged out, but for volcanic eruptions, the models will have a synchronized “bump” and the mean will be affected.

Another pea??

The AR5 (SOD) Chapter 1 states this about climate model performance: “In summary, the globally-averaged surface temperatures are well within the uncertainty range of all previous IPCC projections, and generally are in the middle of the scenario ranges.”

The AR5 Final replaced the above with: “In summary, the trend in globally-averaged surface temperatures falls within the range of the previous IPCC projections.”

The Pea has an eye to the left and two seas to the right…

More AR5 trend shifting shenanigans here:

Here’s what Dana is saying:

” … McIntyre doesn’t say anything of substance. His post is basically “I don’t understand why they shifted the data up.” Ever heard of proper baselining? Tamino figured this out 10 months ago. I guess that goes to show who’s the better statistician. If you don’t trust the figures or baselining, then look at the trends as I did. McIntyre doesn’t even take that first simple step to analyze the data. Totally worthless post.”

I’m just a layman. If there’s no substance in what Dana is saying maybe Steve would drop by and put him right – see http://www.theguardian.com/environment/climate-consensus-97-per-cent/2013/oct/01/ipcc-global-warming-projections-accurate?commentpage=1

TC

TC, see Sven’s comment above.

For all I know, Tamino might be absolutely right about how the IPCC should choose baselines for its forecasts going forward. But to me it’s self-evident that, when your forecast takes the form of a delta applied to some baseline, the baseline has to be specified at the time the forecast is made, and adhered to when testing the forecast against observations– even if you think ex post that a different baseline would have been better.

Paul,

You are exactly right of course. We see folks like Tamino and Richard Telford above arguing that the data should be presented properly, and after that is done, the projections fall in line with the observations. So far, so good. But from there these same folks want to take it further and claim that the IPCC projections have been validated. In doing so, they betray the fact that they have no idea how hypotheses are properly adjudicated via the scientific method. I shall attempt an analogy. The soccer player launches the penalty kick and it misses the goal to the right by one foot. Tamino sprints along the end line with his measuring tape and discovers that the goal was actually placed three feet closer to the left corner of the field than the right. Now that the discrepancy has been rectified, we are being told that the proper thing to do is credit the kicker with the goal.

TC

“I’m just a layman. If there’s no substance in what Dana is saying maybe Steve would drop by and put him right” – at The Guardian

No chance. Any uncomfortable comments are routinely censored.

Seems pretty obvious that the IPCC can’t, as an organization, ever openly acknowledge significant discrepancies between projected warming and reality, since that would simultaneously undermine the credibility of all their projections, and reduce the IPCC’s claimed urgency for rapid reductions in fossil fuel use. Since many government funded researchers from the USA were involved in the IPCC AR5 report, it would seem reasonable for a congressional committee to call some of them to testify and explain the kinds of shenanigans Steve lays out in this post. I find the audacity in this kind of deceptive presentation both offensive and almost unbelievable. Could any of them really think this is ‘OK’?

Ok so if adjusting the data upwards doesn’t work well enough we’ll adjust the projection envelopes downwards.

That will fix our salaries for the next fifteen years.

Has anybody looked at how this changes the backcasting? IIRC, AR4 showed that the backcasting was reasonably fit.

That’s a good question. It looks to me like the actual temperatures are now running too hot from 1950-1970, by roughly the amount that they shifted the projections downwards by.

Carrick, have you read Ch 8 of AR5? I have started it. If I am reading it correctly, there is a claim that AR4 models would be running even hotter (if they matched the real system) due to the re-evaluation of aerosols and aerosol interactions. It is hard reading, but it appears to me that the sum of differences are being used to explain the divergence by what is needed to explain and not most likely. Have you had a chance to look at this?

With Ch 8, the bodge, and temperature data, changing one aspect opens the question of why only those that bring the projections and temperature closer, and not an even handed scientific approach.

If you add the shift of .1C to the backcasting, and redo the aerosol bodge for periods like the 1940’s while backcasting, I don’t think the AR4 models will look very good.

In other words, I believe the IPCC in trying to explain the pause have made their whole AR4 unbelievable. This is based on how the parts reinforced each other. Now we have an explanation that severely compromises, IMO, that reinforcement. Hopefully when the final comes out, it will be explained.

You don’t have to look at backcasting to find an inconsistency.

In the initial plot, the SAR scenario design is fitted to an initial lower temperature to accentuate the three years of subsequent temperature increases before the SAR was released. According to the recently invented paradigm, the starting point should be moved up to a “trend” value which would push the corresponding envelope about 0.2 degrees higher than it is in the later plot.

Otherwise it is more “apples and oranges”!

RomanM, I think that should be read more “apple to cherry pie”.

Lucia’s point of using this: “The report clearly states that the projections are relative to the mean of Jan1980-Dec1999 temperatures. That’s what it says. That’s what one should use.”

But IIRC, one of the reasons it did this was that otherwise the backcasting was not very good. Baselines seem to change when it is to “explain” away problems.

Steve: AR4’s reference in figure 10.26 was, as Lucia says, 1980-1999 and obviously that’s what should be used. AR2 describes the reference as 1990. Presumably they were aware that 1990 was warmer than 1992 and had the opportunity to take that into consideration.

Relax guys. It’s a dodgy trick, and they can only pull it once. Every time they move the goal posts, the Internet preserves the post holes.

Preserving the post holes only matters if you gather up the adjustments and present them in understandable ways, as well as ensure that all the adjustments are not applied selectively. That’s a lot of work and so far as I can tell it hasn’t been done.

If you are looking for a laugh, check out Figure TS.14. It shows the ‘indicative likely range’ (whatever that means) of global average temperature through 2035. The 2035 projection now covers everything from essentially flat temperatures for 20 years to warming at >0.25C per decade. The funny part is that the bottom of the ‘indicative likely range’ now falls completely outside the ENTIRE 299 run ensemble of GCM projections. So I guess even the IPCC authors understand that the GCMs are projecting too much warming, they just can’t officially admit that the model projections are wrong. The best part of this graphic is that it guarantees the IPCC projections of warming can NEVER be proven wrong again, no matter how the next 20 years goes…. global warming catastrophe and urgent draconian reductions in fossil fuel use are now justified by… well, even the possibility of no warming. Politically prudent, but making the projections (and the report itself) useless in formulation of meaningful public policy. You just gotta love the “I’m right and you can never prove I am wrong” approach, which seems, sadly, a recurring theme in climate science.

TS.14 is talked about on page TS-48 (p51 in the pdf from here) and provided on TS-107 (p110). Like you I’m not sure what the pink ‘indicative likely range’ means but isn’t this graph meant to show the spread of GMST for all RCPs? Could that explain the low lower bound in 2035? Isn’t the problem from 2000 to the present that we’ve been emitting almost at the worst case level, as Mr Pete pointed out earlier?

But I’m very willing to be corrected. Brighter bulbs than me find the output of the IPCC less clear than it might be.

As Ross pointed out, the focus should be on the trends, not whether or not the observations are falling within the model variability envelope. The latter is a diversion.

Lucia is doing a lot of good work on the CMIP5 trends, and we have assembled the noise about the CMIP3 model mean trend in this work (that was, unfortunately, never published). The key to assessing model performance is whether or not the observed trend falls within the modeled trend pdf.
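As a rough sketch of what such a check could look like (not the method used in the unpublished work, which is not described here), one can fit an OLS trend to each model run and ask where the observed trend sits in that empirical distribution. Everything below is synthetic: the 40 runs, the trend values and the noise level are assumptions for illustration.

```python
import numpy as np

def ols_trend(t, y):
    """OLS slope of y against t (units of y per year)."""
    return np.polyfit(t - t.mean(), y, 1)[0]

rng = np.random.default_rng(1)
t = np.arange(1998, 2013).astype(float)

# Synthetic ensemble: 40 runs with underlying trends near 0.02 C/yr plus noise.
n_runs = 40
underlying = rng.normal(0.02, 0.005, n_runs)
runs = underlying[:, None] * (t - t[0]) + rng.normal(0.0, 0.1, (n_runs, t.size))
# Synthetic "observations" with a smaller underlying trend.
obs = 0.004 * (t - t[0]) + rng.normal(0.0, 0.1, t.size)

model_trends = np.array([ols_trend(t, run) for run in runs])
obs_trend = ols_trend(t, obs)

# Fraction of model trends at or below the observed trend (empirical percentile).
frac_below = np.mean(model_trends <= obs_trend)
print(f"observed trend {obs_trend:.4f} C/yr, fraction of runs at or below: {frac_below:.2f}")
```

A very small fraction would indicate that the observed trend sits in the lower tail of the modeled trend distribution.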

-Chip

The green line diverges from the other datasets before 1980 and after 2000.

erratum: before 1970

The current IPCC chart could/should be referred to as a Spaghetti Monster.

Ah, it seems to me that they’ve just used (a slightly modified version of) Tamino’s Trick (see the part of the rant starting “What about the plot from the draft of the AR5 report?”): all model values are pinned to 1990 (zero?) while observations are relative to the 61-90 baseline. In the draft version all values (including observations) were pinned to a value in 1990. Even if you accept Tamino’s justification (I do not) for this, it’s still wrong: models should be pinned to zero in 1975 (midpoint of the observation baseline) not something in 1990.

I guess the term of the day is “harmonization”, see the figure caption on page TS-96:

Steve: yes. for the SAR version, they definitely seem to have used Tamino’s Trick – or perhaps, in this context, the Tamino “bodge”. The amount that IPCC bodged the envelope is almost exactly in accordance with the Tamino bodge. I’ve looked back at the underlying SAR report and there’s another layer of the onion to peel.

Jean S,

Tamino strikes me as a somewhat disreputable source of information… always tilted towards catastrophe. I would not pay much attention to his pronouncements, IIWY.

Why is it so difficult to establish what the offsets should be? All the data is (groups of) anomalies against some (common OR NOT to the group) baseline. Whether that baseline is a year (1990) or a range of years (1960 – 1990) should all be well-defined by the data sets. Why are these questions even under debate?

does it really matter? if they move the trend it affects the hindcasting, so the models are falsified by past observations; if they don’t, the models are falsified by recent observations.

so they are wrong no matter what year they pick for the start point?

forget about the peer review process, it supports nothing about the actual content, only that other academics agree the methodology is reasonable.

@bit chilly

if they move the trend it affects the hindcasting, so the models are falsified by past observations

All true. But the chart “Hides the Hindcast” by reducing to minimum the color saturation of the calibration portion of each model.

It is nearly the same graphical trick as hiding the Briffa decline but applied in reverse. Instead of erasing the one inconvenient line, they reduce saturation to 95%, remove color, and widen the lines — 39 times.

The eye is drawn to the difficult to resolve 1990-2050 part of the chart. But the crime scene is in the 1970-1990 portion of the chart with impossible to resolve lines of the models. The decline in fit to data is hiding in plain sight. (climateaudit 12/1/2011)

Dissection of the goodness of fit of the models to the 1970-1990 interval before and after the shift applied to the Approved version should be enlightening.

Amazing analysis Mr. McIntyre. Well done!!

Another graph dealing with model projections is on page 120, Figure 11.25 of Chapter 11. The graph shows a different presentation of global mean temperature than that contained in the Chapter 1 document which is the subject of this post. However, it looks like the right hand did not know what the left hand was doing, in that the Chapter 11 diagram shows the models far off the mark in projecting temperatures versus measured data.

Looks like they have modified the unexplained gray bands into some sort of spaghetti graph that can swallow the observations.

Using a spaghetti graph seems a crude trick to widen the prediction bounds by including not only the “natural variation” (or weather-like) year-to-year randomness from the GCM runs, but also the runs between completely unrelated models. The lowest-sensitivity models are not yet inconsistent with observations, so they’re claiming success. It would be impolite to point out that the observations barely are keeping contact with the runs from lowest-sensitivity models which also have the most negative weather excursions. As mentioned by John Davis above, the IPCC has also included B2 runs, which may well have provided the low-hanging linguine.

The IPCC has widened the prediction window to include the observations. If the “Texas sharpshooter fallacy” is to draw an unrealistically small circle about a bullet hole in a barn (and claim that’s where one was aiming), here the IPCC is saying “Well, I only said I’d hit the barn. Doesn’t matter where, does it?” [It would be cynical of me to suggest that if observations had matched the AR4 A1B multi-model mean fairly well, that the IPCC would claim that validates the use of the multi-model mean.]

In a like vein, it’s disingenuous to include the entire range of FAR predictions, from “business as usual” to scenario D — “stringent controls in industrialized countries combined with moderated growth of emissions in developing countries”. Scenario D has clearly not occurred. The IPCC should compare observations with the low/best/high estimates of “business as usual”, given in FAR SPM Figure 8. [All time series re-baselined to some common period such as 1961-1990.]

All these shenanigans just so they could say that “temps are consistent with the models” and hope that the public buys it (they know that we “flat Earthers” know it isn’t true). What utter tosh.

Do the few CMIP3 and CMIP5 model runs that do not run hotter than observations have values of atmospheric CO2 concentration that even closely approximate what actually occurred? In my opinion, only model runs that had CO2 at realistic (observed) levels should even be considered.

According to the new graph, there could be a cooling trend right through to 2035 and it would still match the models’ projections.

“We’re very lucky those earlier drafts were leaked”

Does any other science rely so crucially on leaks to make any progress?

Steve, as I’ve pointed out on a number of occasions, there are much worse considerations in the graphs above. The spaghetti they present deliberately provides the illusion, as you and indeed they directly point out, that current temperatures lie within an “ensemble” of single model runs drawn from the collection of models presented.

Let us count the sins:

a) Let me choose the single model runs to put into the figure, and by running each model a few dozen times and picking the one run I include, I can make the figure look like anything at all. God invented Monte Carlo to help stupid, confirmation biased sinners avoid the deliberate or accidental abuse of statistics described by its own chapter in “How to Lie with Statistics”. To Hell with it.

b) We cannot be certain that they did, in fact, choose the model runs to include. Maybe they did just pick them “randomly”. In that case, their conclusion is a clear case of “data dredging”, only worse. This is a mortal sin even without cherrypicking.

When one does ordinary data dredging, one takes 20 jars of jelly beans, feeds them to lots of people, counts the number with acne, and discovers that green jelly beans can be positively correlated with (and hence “cause”) acne, because they beat the usual (but meaningless) cut-off of 0.05 where all of the others fail. Of course with 20 jars it is PROBABLE that one will make the cut-off, and with enough colors, one can beat even more stringent limits. There are, what, over thirty colors of GCM “jelly beans” in this ensemble?

If only this were the worst of it, it would be easy enough to fix. One has to use a more stringent distribution and statistical test when one has an ensemble of independent jars of jelly beans, but there are still levels of correlation between green jelly beans and acne that would be difficult to explain with the null hypothesis of no correlation.

But now take one of the actual jelly beans OUT of the jar above, that contains thirty different colors of jelly beans in a SINGLE jar. Yes, there are places where the green jelly beans are correlated with acne: some people that got acne did indeed eat green jelly beans. Most, however, did not. Some people that got acne ate more red jelly beans and not so many green. Most, however, did not. In fact, every single one of the jelly bean colors individually FAILS a simple hypothesis test of good correlation with acne (really even barely marginal correlation with acne), but nearly all colors of jelly bean had a few days (not the same days) where they were well correlated among many more days they were not.

The graph above is in the unique position of stating that while EVERY color of jelly bean INDEPENDENTLY fails a hypothesis test against the data, we can be certain that jelly beans cause acne because every color of jelly bean has at least a few people who ate that color and got acne.

This isn’t a small, ignorable error. This leads to a simple pair of possibilities. Either the assemblers of the graph and drawers of conclusions from the graph are completely incompetent at statistical hypothesis testing and data dredging and managed to put a poster child case of data dredging front and center in the report for policy makers, in which case they should be summarily fired for incompetence and replaced with competent statisticians, or else (worse) they are COMPETENT statisticians and deliberately assembled a misleading graph that openly encourages the ignorant to dredge the data by interpreting the fact that nearly every model dips for TINY INTERVALS OF TIME down to where they reach the measured GAST (but they all do it at different times, spending much less than 5% of their time down there) as evidence that collectively, the model spread includes reality. Oh, My, God. To Hell with you, sinner!

c) The next two sins are closely related. In AR4 and the early draft of AR5, the mean and standard deviation of the collection of models was presented (graphically, at least) as a physically meaningful quantity. I say standard deviation because without the usual normal/erf assumptions, how can they generate confidence levels AT ALL? The basis of nearly all such measures in hypothesis testing is the central limit theorem, especially lacking even a hint of knowledge of the underlying distribution.

However, this is in and of itself a horrible, mortal sin against the holy writ of statistics. The central limit theorem explicitly refers to drawing independent, identically distributed samples out of a fixed underlying distribution. There is no POSSIBLE sense in which the GCMs included in the graphs above are iid samples from a statistical distribution of physically correct GCMs. There IS NO SUCH THING (yet) as the latter — the GCMs don’t even MUTUALLY agree within a sensible hypothesis test — started with identical initial conditions in trivial toy problems they converge to entirely distinct answers, and if one does Monte Carlo with the starting conditions the (correctly formed) ensemble averages per GCM will often fail to overlap for different GCMs, certainly if you run enough samples.

The variations between GCMs are not random variations. They share a common structure, coordinatization, and in many cases similar physics similarly implemented. The mean of many runs of INDEPENDENT GCMS is not a statistically meaningful quantity in any sense defensible by the laws of statistics. The standard deviation of that mean is not a meaningful predictor of the actual climate. One can average HUNDREDS of failed models and get nothing but a very precise failed model, or “average” a single successful model and have a successful model. So to present such a figure in the first place is utterly misleading. To Hell with it.
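The point about averaging can be illustrated with a minimal Python sketch. Everything in it is made up for illustration: `TRUE_VALUE`, `SHARED_BIAS`, and the noise level are hypothetical numbers, not values taken from any GCM. It only shows that averaging more runs that share a bias narrows the scatter of the mean around the biased value, not around the truth.

```python
import random
import statistics

random.seed(0)

TRUE_VALUE = 0.0    # hypothetical "real" quantity being predicted
SHARED_BIAS = 0.5   # hypothetical bias common to every model (assumption)
NOISE_SD = 0.2      # hypothetical run-to-run scatter (assumption)

def ensemble_mean(n_models):
    """Average n_models runs that all carry the same shared bias."""
    runs = [TRUE_VALUE + SHARED_BIAS + random.gauss(0.0, NOISE_SD)
            for _ in range(n_models)]
    return statistics.mean(runs)

small = ensemble_mean(5)
large = ensemble_mean(500)

# The large ensemble mean sits very close to the biased value,
# and is still about SHARED_BIAS away from TRUE_VALUE.
print(f"mean of 5 runs:   {small:.3f}")
print(f"mean of 500 runs: {large:.3f}")
print(f"error of large ensemble vs truth: {large - TRUE_VALUE:.3f}")
```

More runs make the mean more precise, but precision about a wrong value is not accuracy.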

c) The GCMs are not drawn from an iid of “correct GCMs”. Therefore their mean and standard deviation are already meaningless quantities, no matter how they are presented. There is no basis in statistics for the quantitative evaluation of a confidence interval, lacking iid samples and any possibility of applying e.g. the central limit theorem. Evil Sin, to Hell with it.

I was afraid AR5 would persist in the statistical sins told in the summary for policy makers in AR4, and it appears that they have indeed done so, and even added to them.

To CORRECT their errors, though, is simple. Just draw each jelly bean (colored strand of spaghetti) against the data ALONE. For EACH model ask — is this a successful model? Not when it spends well over 95% of the time too warm. Repeat for the next one. Ooo, reject it too! Then the next one. Outta here!

In the end, you might end up with ONE OR TWO models from the entire collection that only spend >>80%<< of their time too warm, that aren't rejected by a one at a time hypothesis test per independent GCM. Those models are merely probably wrong, not almost certainly wrong.
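A one-model-at-a-time check of this kind could be sketched in Python roughly as follows. This is only an illustration under stated assumptions: the series are toy numbers, the function names are hypothetical, and the sign test treats each year as independent, which real annual temperature series are not (autocorrelation would make the true p-values larger).

```python
from math import comb

def fraction_too_warm(model, observed):
    """Fraction of years in which the model is warmer than the observations."""
    warm = sum(1 for m, o in zip(model, observed) if m > o)
    return warm / len(observed)

def sign_test_p_value(model, observed):
    """One-sided sign test: probability of at least this many 'too warm' years
    if the model were equally likely to be above or below the observations.
    Assumes independent years (a simplification)."""
    n = len(observed)
    k = sum(1 for m, o in zip(model, observed) if m > o)
    return sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n

# Toy data (hypothetical): 20 years of anomalies.
observed = [0.0] * 20
model_a = [0.3] * 19 + [-0.1]   # too warm in 19 of 20 years
model_b = [0.1, -0.1] * 10      # above and below equally often

for name, model in [("model_a", model_a), ("model_b", model_b)]:
    frac = fraction_too_warm(model, observed)
    p = sign_test_p_value(model, observed)
    verdict = "reject" if p < 0.05 else "keep"
    print(f"{name}: too warm {frac:.0%} of the time, p = {p:.2g} -> {verdict}")
```

Each model is judged against the data alone, so one model's brief dip toward the observations cannot rescue another.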

Or, apply a Bonferroni analysis in order to obtain the p-value for the complete set. Oooo, does THAT fail the hypothesis test of "what is the probability of getting the actual data, given the null hypothesis that all of these models are in fact drawn from a hat of correct models". Since NONE of them are even CLOSE to the actual trajectory and one would expect at least one to BE close by mere chance given over 30 shots at it, we can reject the whole set (slightly fallaciously).
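For readers unfamiliar with it, the textbook Bonferroni correction can be sketched in a few lines of Python. This is the standard form of the correction, not necessarily the exact calculation intended above, and the p-values are invented for illustration.

```python
def bonferroni_adjust(p_values):
    """Multiply each p-value by the number of tests, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]

def reject_global_null(p_values, alpha=0.05):
    """Reject the joint null if any Bonferroni-adjusted p-value is below alpha."""
    return min(bonferroni_adjust(p_values)) < alpha

# Hypothetical per-model p-values for 30 models.
one_strong_result = [0.001] + [0.2] * 29
all_marginal = [0.01] * 30

print(reject_global_null(one_strong_result))  # adjusted minimum is about 0.03
print(reject_global_null(all_marginal))       # adjusted minimum is about 0.3
```

The correction exists to guard against data dredging: with 30 tries, a single p-value of 0.01 is not surprising, so the bar for each individual test is raised to alpha/30.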

Finally, we could look at, I dunno, second moments — the FLUCTUATIONS of the models. Do they bear any resemblance to the actual fluctuation in the data? No they do not, not even as single model runs. Indeed, the single model runs could be rejected on this basis alone — why would the year to year variation of the climate be changing when it has historically been remarkably stable in the entire HADCRUT record, with the exception of a single decade that is almost certainly a spurious error back in the 19th century?

To Hell with it.

rgb

rgbatduke–

Well, for one, I appreciate your taking the time to write up this comment–for me, at least (a non-statistician), it does an excellent job of explaining the sins. Well, for two, posts/comments like this are a major reason that I read CA–I get to be educated about it all. Thanks to you here, I believe I come away with a much clearer understanding. “But I am in/So far in blood, that sin will pluck on sin.” –IPCC, aka “Richard III”

Thanks, Prof. Brown. Once again, you help to educate and inform us in language that non-scientists can understand.

Given that the choice of baselines is so critical in these exercises, my flabber is gasted at the way they did this. When I was involved in (quite different) research, one of the first things we did was to play around with different baselines as a reality check. Choosing your baseline is one of the most important decisions you make, and requires a lot of thought and testing.

In this excellent post, Dr. Brown (rgbatduke) has provided yet again a superb framework on which physicists and engineers who have at least a tentative sense of distrust in the proffering of AGW alarmists can organize their thoughts. In this instance, we may feel that the mainstream climate scientists are moving the “road signs” of doubtful models, and trying to justify an envelope of model outcomes based on the contention that an 18-wheeler once went through a guard rail here and into a cornfield – to say that the muddy ruts are really part of their model’s road. Dr. Brown has called out the statistical sins involved. And he has told us exactly what to look for:

“To CORRECT their errors, though, is simple. Just draw each jelly bean (colored strand of spaghetti) against the data ALONE. For EACH model ask — is this a successful model? Not when it spends well over 95% of the time too warm. Repeat for the next one. Ooo, reject it too! Then the next one. Outta here!”

This insight he has provided is of immense value. Thanks again Dr. Brown. Please give his post careful study.

Rgb, excellent summary. However, shouldn’t the first sentence use “occasions” instead of “equations”?

Funny you should ask:-) Yes, but the error is subtle enough to be a halfway decent pun.

As for elevating it to a full post (later comments) — if I were going to do an actual post on it, I’d only feel comfortable doing so if I had the actual data that went into 1.4, so I could extract the strands of spaghetti, one at a time. As it is, I can only see what a very few strands of colored noodles do, as they are literally interwoven to make it impossible to track specific models. For example, at the very top of the figure there is one line that actually spends all of its time at or ABOVE the upper limit of even the shaded line from the earlier leaked AR5 draft. It is currently a spectacular 0.7 to 0.8 C above the actual GAST anomaly. Why is this model still being taken seriously? As not only an outlier, but an egregiously incorrect outlier, it has no purpose but to create alarm as the upper boundary of model predicted warming, one that somebody unversed in hypothesis testing might be inclined to take seriously.

But then it is very difficult to untangle the lower threads. A blue line has an inexplicable peak in the mid-2000’s 0.6 C warmer than the observed temperatures, with all of the warming rocketing up in only a couple of years from something that appears much cooler. Not even the 1997-1998 ENSO or Pinatubo produce a variation like this anywhere in the visible climate record. This sort of sudden, extreme fluctuation appears common in many of the models — excursions two or three times the size of year to year fluctuations in the actual climate, even during times when the climate did, in fact, rapidly warm over a 1-2 year period.

This is one of the things that is quite striking even within the spaghetti. Look carefully and you can make out whole sawtooth bands of climate results where most of the GCMs in the ensemble are rocketing up around 0.4 to 0.5 C in 2-3 years, then dropping equally suddenly, then rocketing up again. This has to be compared to the actual annual variation in the real world climate, where a year to year variation of 0.1 or less is typical, 0.2 in a year is extreme, and where there are almost no instances of 3-4 year sequential increases.
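The comparison of year to year variation described here could be made quantitative along the following lines. This is a rough Python sketch with invented toy series; the function names are hypothetical and nothing here is digitized from Figure 1.4.

```python
import statistics

def year_to_year_changes(series):
    """Differences between consecutive annual values."""
    return [b - a for a, b in zip(series, series[1:])]

def typical_and_extreme_step(series):
    """Median and maximum absolute year-to-year change."""
    steps = [abs(d) for d in year_to_year_changes(series)]
    return statistics.median(steps), max(steps)

# Toy annual anomalies in C (hypothetical, for illustration only).
observed_like = [0.10, 0.15, 0.05, 0.12, 0.20, 0.18]
model_like = [0.10, 0.35, 0.55, 0.15, 0.45, 0.60]

for name, series in [("observed-like", observed_like), ("model-like", model_like)]:
    typical, extreme = typical_and_extreme_step(series)
    print(f"{name}: typical step {typical:.2f} C, largest step {extreme:.2f} C")
```

Run on each model strand separately, a summary like this would show directly whether a model's fluctuations resemble those in the measured record.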

I have to say that I think that the reason that they present EITHER spaghetti OR simple shaded regions against the measurements isn’t just to trick the public and ignorant “policy makers” into thinking that the GCMs embrace the real world data, it is to hide lots of problems, problems even with the humble GAST anomaly, problems so extreme that presenting the GCM results one at a time against the real world data would cause even the most ardent climate zealot to think twice. Even in the greyshaded, unheralded past (before 1990) the climates have an excursion and autocorrelation that are completely wrong, an easy factor of two too large, and this is in the fit region.

Autocorrelation matters! In fact, it is the ONLY way we can look at external macroscopic quantities like the GAST anomaly and try to assess whether or not the internal dynamics of the model is working. It is the decay rate of fluctuations produced by either internal feedbacks or “sudden” change in external forcings. In the crudest of terms, many of the models above exhibit:

* Too much positive feedback (they shoot up too fast).

* Too much negative feedback (they fall down too fast).

* Too much sensitivity to perturbations (presuming that they aren’t whacking the system with ENSO-scale perturbations every other year, small perturbations within the model are growing even faster and with greater impact than the 1997-1998 ENSO, which involved a huge bolus of heat rising up in the Pacific).

* Too much gain (they go up more on the upswings than they go down on the downswings, which means that the effects of overlarge positive and negative oscillations bias the trend in the positive direction).

That’s all I can make out in the mass of strands, but I’m sure that more problems would emerge if one examined individual models without the distraction of the others.
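For anyone wanting to check the autocorrelation of an individual strand, the sample autocorrelation at a given lag can be computed with a short Python function. This is a minimal sketch using the usual textbook estimator; the two series are artificial and serve only to show how the number behaves.

```python
def autocorrelation(series, lag=1):
    """Sample autocorrelation of a series at the given lag."""
    n = len(series)
    mean = sum(series) / n
    dev = [x - mean for x in series]
    denom = sum(d * d for d in dev)
    num = sum(dev[i] * dev[i + lag] for i in range(n - lag))
    return num / denom

# Artificial examples: a sawtooth that flips every year, and a smooth ramp.
sawtooth = [1.0, -1.0] * 5
ramp = [float(i) for i in range(10)]

print(f"sawtooth lag-1 autocorrelation: {autocorrelation(sawtooth):.2f}")
print(f"ramp lag-1 autocorrelation:     {autocorrelation(ramp):.2f}")
```

A series whose fluctuations persist has autocorrelation near +1, while one that overshoots and reverses every step goes strongly negative. Comparing this number for each model against the measured record is one way to test whether the model's fluctuations decay at a realistic rate.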

Precisely the same points, by the way, could be made of and were apparent in the spaghetti produced by individual GCMs for lower troposphere temperatures as presented by Roy Spencer before Congress a few months ago. There the problem was dismissed by warming enthusiasts as being irrelevant, because it only looked at a single aspect of the climate and they could claim “but the GASTA predictions, they’re OK”. But the GASTA predictions above are the big deal, the big kahuna, global warming incarnate. And they’re not OK, they are just as bad as the LTT and everybody knows it.

That’s the sad part. As Steve pointed out, they acknowledged the problem in the leaked release, we spent another year or two STILL without warming, with the disparity WIDENING, and their only response is to pull the acknowledgement, sound the alarm, and obfuscate the manifold failures of the GCMs by presenting them in an illegible graphic that preserves a pure statistical illusion of marginal adequacy.

Most of the individual GCMs, however, are clearly NOT adequate. They are well over 0.5 C too warm. They have the wrong range of fluctuation. They have absurd time constants for growth from perturbations. They have absurd time constants for decay from perturbations. They aren’t even approximately independent — one can see bands of similar fluctuations, slightly offset in time, for supposedly distinct models (all of them too warm, all of them too extreme and too fast).

Any trained eye can see these problems. The real world data has a completely different CHARACTER, and if anything, the problems WORSEN in the future. I cannot imagine that the entire climate community is not perfectly well aware of the “travesty” referred to in Climategate, that the models are failing and nobody knows why.

Why is honesty so difficult in this field? As Steve Mosher pointed out, none of this should ever have been used to push energy policy or CAGW fears on an unsuspecting world. It is (as he seems to finally be admitting) NOT “settled science”. It’s not surprising that models that try to microscopically solve the world’s most difficult computational physics problem get the wrong answer across the board — rather, it’s perfectly reasonable, to be expected. If it weren’t for the world-saving heroic angst, the politics, and the bags full of money, building, tuning, fixing, comparing the models would be what science is all about, as Steve also notes.

So why not ADMIT this to a world that has been fooled into thinking that the model results were actually authoritative, bombarded by phrases like “very likely” that have no possible defensible basis in statistical analysis?

All they are doing in AR5 figure 1.4 is delaying the day of reckoning, and that not by much. If its information content is unravelled, strand by strand, and presented to the world for objective consideration, all it will succeed in doing is proving beyond any doubt that they are, indeed, trying to cover up their very real uncertainty and perpetuate for a little while longer the illusion that GCMs are meaningful predictors and a sound basis for diverting hundreds of billions of dollars and costing millions of lives per year, mostly in developing countries where increased costs of energy are directly paid for in lives, paid right now, not in a hypothetical 50 years. I think they are delaying on the basis of a prayer. They are praying for another super-ENSO, a CME, a huge spike in temperature like the ones their models all produce all the time, one sufficient to warm the world 0.5C in a year or two and get us back on the track they predict.