A guest post by Nic Lewis

On 1 April 2014 the Bishop Hill blog carried a guest post ‘Dating error’ by Doug Keenan, in which he set out his allegations of research misconduct by Oxford University professor Christopher Bronk Ramsey. Professor Bronk Ramsey is an expert on calibration of radiocarbon dating and author of OxCal, apparently one of the two most widely used radiocarbon calibration programs (the other being Calib, by Stuiver and Reimer). Steve McIntyre and others opined that an allegation of misconduct was inappropriate in this sort of case, and likely to be counter-productive. I entirely agree. Nevertheless, the post prompted an interesting discussion with statistical expert Professor Radford Neal of Toronto University and with Nullius in Verba (an anonymous but statistically-minded commentator). They took issue with Doug’s claims that the statistical methods and resulting probability densities (PDFs) and probability ranges given by OxCal and Calib are wrong. Doug’s arguments, using a partly Bayesian approach he calls a discrete calibration method, are set out in his 2012 peer reviewed paper.

I also commented, saying if one assumes a uniform prior for the true calendar date, then Doug Keenan’s results do not follow from standard Bayesian theory. Although the OxCal and Calib calibration graphs (and the Calib manual) are confusing on the point, Bronk Ramsey’s papers make clear he does use such a uniform prior. I wrote that in my view Bronk Ramsey had followed a defensible approach (since his results flow from applying Bayes’ theorem using that prior), so there was no research misconduct involved, but that his method did not represent best scientific inference.

The final outcome was that Doug accepted what Radford and Nullius said about how the sample measurement should be interpreted as probability, with the implication that his criticism of the calibration method is invalid. However, as I had told Doug originally, I think his criticism of the OxCal and Calib calibration methods is actually valid: I just think that imperfect understanding rather than misconduct on the part of Bronk Ramsey (and of other radiocarbon calibration experts) is involved. Progress in probability and statistics has for a long time been impeded by quasi-philosophical disagreements between theoreticians as to what probability represents and the correct foundations for statistics. Use of what are, in my view, unsatisfactory methods remains common.

Fortunately, regardless of foundational disagreements I think most people (and certainly most scientists) are in practice prepared to judge the appropriateness of statistical estimation methods by how well they perform upon repeated use. In other words, when estimating the value of a fixed but unknown parameter, does the true value lie outside the specified uncertainty range in the indicated proportion of cases?

This so-called frequentist coverage or probability-matching property can be tested by drawing samples at random from the relevant uncertainty distributions. For any assumed distribution of parameter values, a method of producing 5–95% uncertainty ranges can be tested by drawing a large number of samples of possible parameter values from that distribution, and for each drawing a measurement at random according to the measurement uncertainty distribution and estimating a range for the parameter. If the true value of the parameter lies below the bottom end of the range in 5% of cases and above its top in 5% of cases, then that method can be said to exhibit perfect frequentist coverage or exact probability matching (at least at the 5% and 95% probability levels), and would be viewed as a more reliable method than a non-probability-matching one for which those percentages were (say) 3% and 10%. It is also preferable to a method for which those percentages were both 3%, which would imply the uncertainty ranges were unnecessarily wide. Note that in some cases probability-matching accuracy is unaffected by the parameter value distribution assumed.

I’ll now attempt to explain the statistical issues and to provide evidence for my views. I’ll then set up a simplified, analytically tractable, version of the problem and use it to test the probability matching performance of different methods. I’ll leave discussion of the merits of Doug’s methods to the end.

2. Statistical issues involved in radiocarbon calibration

The key point is that OxCal and Calib use a subjective Bayesian method with a wide uniform prior on the parameter being estimated, here calendar age, whilst the observational data provides information about a variable, radiocarbon or 14C age, that has a nonlinear relationship to the parameter of interest. The vast bulk of the uncertainty relates to 14C age – principally measurement and similar errors, but also calibration uncertainty. The situation is thus very similar to that for estimation of climate sensitivity. It seems to me that the OxCal and Calib methods are conceptually wrong, just as use of a uniform prior for estimating climate sensitivity is normally inappropriate.

In the case of climate sensitivity, I have been arguing for a long time that Bayesian methods are only appropriate if one takes an objective approach, using a noninformative prior, rather than a subjective approach (using, typically, a uniform or expert prior). Unfortunately, many statisticians (and all but a few climate scientists) seem not to understand, or at least not to accept, the arguments in favour of an objective Bayesian approach. Most climate sensitivity studies still use subjective Bayesian methods.

Objective Bayesian methods require a noninformative prior. That is, a prior that influences parameter estimation as little as possible: it lets the data ‘speak for themselves’[i]. Bayesian methods generally cannot achieve exact probability matching even with the most noninformative prior, but objective Bayesian methods can often achieve approximate probability matching. In simple cases a uniform prior is quite often noninformative, so that a subjective Bayesian approach that involved using a uniform prior would involve the same calculations and give the same results as an objective Bayesian approach. An example is where the parameter being estimated is linearly related to data, the uncertainties in which represent measurement errors with a fixed distribution. However, where nonlinear relationships are involved a noninformative prior for the parameter is rarely uniform. In complex cases deriving a suitable noninformative prior can be difficult, and in many cases it is impossible to find a prior that has no influence at all on parameter estimation.

Fortunately, in one-dimensional cases where uncertainty involves measurement and similar errors it is often possible to find a completely noninformative prior, with the result that exact probability matching can be achieved. In such cases, the so-called ‘Jeffreys’ prior’ is generally the correct choice, and can be calculated by applying standard formulae. In essence, Jeffreys’ prior can be thought of as a conversion factor between distances in parameter space and distances in data space. Where a data–parameter relationship is linear and the data error distribution is independent of the parameter value, that conversion factor will be fixed, leading to Jeffreys’ prior being uniform. But where a data–parameter relationship is nonlinear and/or the data precision is variable, Jeffreys’ prior achieves noninformativeness by being appropriately non-uniform.

Turning to the specifics of radiocarbon dating, my understanding is as follows. The 14C age uncertainty varies with 14C age, and is lognormal rather than normal (Gaussian). However, the variation in uncertainty is sufficiently slow for the error distribution applying to any particular sample to be taken as Gaussian with a standard deviation that is constant over the width of the distribution, provided the measurement is not close to the background radiation level. It follows that, were one simply estimating the ‘true’ radiocarbon age of the sample, a uniform-in-14C-age prior would be noninformative. Use of such a prior would result in an objective Bayesian estimated posterior PDF for the true 14C age that was Gaussian in form.

However, the key point about radiocarbon dating is that the ‘calibration curve’ relationship of ‘true’ radiocarbon age t14C to the true calendar date ti of the event corresponding to the 14C determination is highly nonlinear. (I will consider only a single event, so i = 1.) It follows that to be noninformative a prior for ti must be non-uniform. Assuming that the desire is to produce uncertainty ranges beyond which – upon repeated use – the true calendar date will fall in a specified proportion of cases, the fact that in reality there may be an equal chance of tilying in any calendar year is irrelevant.

The Bayesian statistical basis underlying the OxCal method is set out in a 2009 paper by Bronk Ramsey[ii]. I will only consider the simple case of a single event, with all information coming from a single 14C determination. Bronk Ramsey’s paper states:

The likelihood defines the probability of obtaining a measurement given a particular date for an event. If we only have a single event, we normally take the prior for the date of the event to be uniform (but unnormalized):

p(ti) ~ U(–∞,∞ ) ~ constant

Defensible though it is in terms of subjective Bayesian theory, a uniform prior in titranslates into a highly non-uniform prior for the ‘true’ radiocarbon age (t14C) as inferred from the 14C determination. Applying Bayes’ theorem in the usual way, the posterior density for t14C will then be non-Gaussian.

The position is actually more complicated, in that the calibration curve itself also has uncertainty, which is also assumed to be Gaussian in form. One can think of there being a nonlinear but exact functional calibration curve relationship s14C = c(ti) between calendar year ti and a ‘standard’ 14C age s14C, but with – for each calendar year – the actual (true, not measured) 14C age t14C having a slightly indeterminate relationship with ti. So the statistical relationship (N signifying a normal or Gaussian distribution having the stated mean and standard deviation ) is:

t14C ~ N(c(ti), σc(ti)) (1)

where σc is the calibration uncertainty standard deviation, which in general will be a function of ti. In turn, the radiocarbon determination age d14C is assumed to have the form

d14C ~ N(t14C, σd) (2)

with the variation of the standard deviation σd with t14C usually being ignored for individual samples.

Fig. 1: Example OxCal calibration (from Fig.1 of Keenan, 2012, Calibration of a radiocarbon age)

Figure 1, from Fig. 1 in Doug’s paper, shows an example of an OxCal calibration, with the resulting 95.4% (±2 sigma for a Gaussian distribution) probability range marked by the thin bar above the x-axis. The red curve on the y-axis is centred on the 14C age derived by measurement (the radiocarbon or 14C determination) and shows the likelihood for that determination as a function of true 14C age. The likelihood for a 14C determination is the relative probability, for any given true 14C age, of having obtained that determination given the uncertainty in 14C determinations. The blue calibration curve shows the relationship between true 14C age (on the y-axis) and true calendar age on the x-axis. Its vertical width represents calibration uncertainty. The estimated PDF for calendar age is shown in grey. Ignoring the small effect of the calibration uncertainty, the PDF simply expresses the 14C determination’s likelihood as a function of calendar age. It represents both the likelihood function for the determination and – since a uniform prior for calendar age is used – the posterior PDF for the true calendar age (Bayes’ theorem giving the posterior as the normalised product of the prior and the likelihood function).

By contrast to OxCal’s subjective Bayesian, uniform prior based method, an objective Bayesian approach would involve computing a noninformative prior for ti. The standard choice would normally be Jeffreys’ prior. Doing so is somewhat problematic here in view of the calibration curve not being monotonic – it contains reversals – and also having varying uncertainty.

If the calibration curve were monotonic and had an unvarying error magnitude, the calibration curve error could be absorbed into a slightly increased 14C determination error, as both these uncertainty distributions are assumed Gaussian. Since the calibration curve error appears small in relation to 14C determination error, and typically only modestly varying over the 14C determination error range, I will make the simplifying assumption that it can be absorbed into an increased 14C determination error. The statistical relationship then becomes, given independence of calibration curve and radiocarbon determination uncertainty:

d14C ~ N( c(ti), sqrt(σc²+σd²) ) (3)

On that basis, and ignoring also the calibration curve being limited in range, it follows that Jeffreys’ prior for ti would equal the absolute derivative (slope) of calibrated 14C age with respect to calendar date. Moreover, in the absence of non-monotonicity it is known that in a case like this the Jeffreys’ prior is completely noninformative. Jeffreys’ prior would in fact provide exact probability matching – perfect agreement between the objective Bayesian posterior cumulative distribution functions (CDFs – the integrals of PDFs) and the results of repeated testing. The reason for the form here of Jeffreys’ prior is fairly clear – where the calibration curve is steep and hence its derivative with respect to calendar age is large, the error probability (red shaded area) between two nearby values of t14C corresponds to a much smaller ti range than when the derivative is small.

An alternative way of seeing that a noninformative prior for calendar age should be proportional to the derivative of the calibration curve is as follows. One can perform the Bayesian inference step to derive a posterior PDF for the true 14C age, t14C, using a uniform prior for 14C age – which as stated previously is, given the assumed Gaussian error distribution, noninformative. That results in a posterior PDF for 14C age that is identical, up to proportionality, to its likelihood function. Then one can carry out a change of variable from t14C to ti. The standard (Jacobian determinant) formula for converting a PDF between two variables, where one is a function of the other, involves multiplying the PDF, expressed in terms of the new variable, by the absolute derivative of the inverse transformation – the derivative of t14C with respect to ti. Taking this route, the objective posterior PDF for calendar age is the normalised product of the 14C age likelihood function (since the 14C objective Bayesian posterior is proportional to its likelihood function), expressed in terms of calendar age, multiplied by the derivative of t14C with respect to ti. That is identical, as it should be, to the result of direct objective Bayesian inference of calendar age using the Jeffreys’ prior.

3. Examining various methods using a simple stylised calibration curve

In order to make the problem analytically tractable and the performance of different methods – in terms of probability matching – easily testable, I have created a stylised calibration curve. It consists of the sum of three scaled shifted sigmoid functions. The curve exhibits both plateaus and steep regions whilst being smooth and monotonic and having a simple derivative.

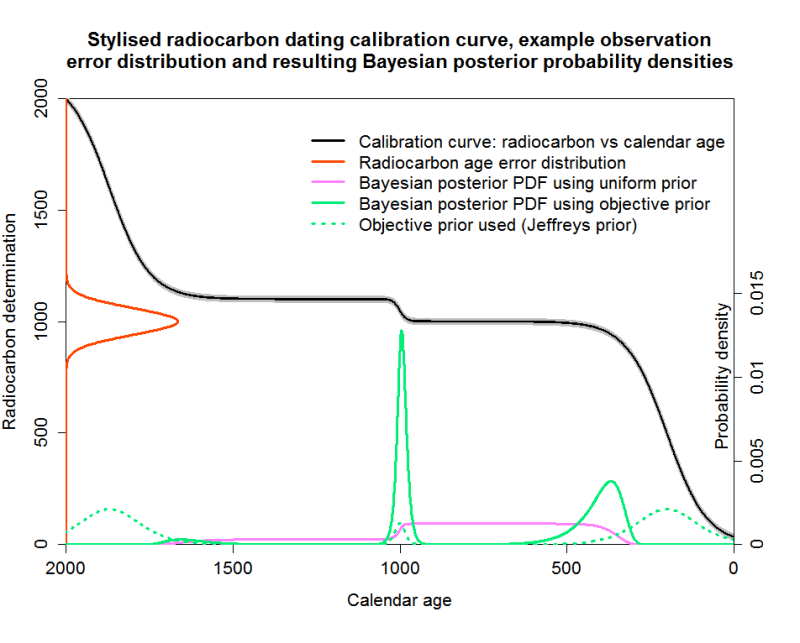

Figure 2 shows similar information to Figure 1 but with my stylised calibration curve instead of a real one. The grey wings of the curve represent a fixed calibration curve error, which, as discussed, I absorb into the 14C determination error. The pink curve, showing the Bayesian posterior PDF using a uniform prior in calendar age, corresponds to the grey curve in Figure 1. It is highest over the right hand plateau, which corresponds to the centre of the red radiocarbon age error distribution, but has a non-negligible value over the left hand plateau as well. The figure also shows the objective Jeffreys’ prior (dotted green line), which reflects the derivative of the calibration curve. The objective Bayesian posterior using that prior is shown as the solid green line. As can be seen, it is very different from the uniform-calendar-year-prior based posterior that would be produced by the OxCal or Calib programs for this 14C determination (if they used this calibration curve).

Fig. 2: Bayesian inference using uniform and objective priors with a stylised calibration curve

Fig. 2: Bayesian inference using uniform and objective priors with a stylised calibration curve

The Jeffreys’ prior (dotted green line) has bumps wherever the calibration curve has a high slope, and is very low in plateau regions. Subjective Bayesians will probably throw up their hands in horror at it, since it would be unphysical to think that the probability of a sample having any particular calendar age depended on the shape of the calibration curve. But that is to mistake the nature of a noninformative prior, here Jeffreys’ prior. A noninformative prior has no direct probabilistic interpretation. As a standard textbook (Bernardo and Smith, 1994) puts it in relation to reference analysis, arguably the most successful approach to objective Bayesian inference: “The positive functions π(θ) [the noninformative reference priors] are merely pragmatically convenient tools for the derivation of reference posterior distributions via Bayes’ theorem”.

Rather than representing a probabilistic description of existing evidence as to a probability distribution for the parameter being estimated, a noninformative prior primarily reflects (at least in straightforward cases) how informative, at differing values of the parameter, the data is expected to be about the parameter. That in turn reflects how precise the data are in the relevant region and how fast expected data values change with the parameter value. This comes back to the relationship between distances in parameter space and distances in data space that I mentioned earlier.

It may be thought that the objective posterior PDF has an artificial shape, with peaks and low regions determined, via the prior, by the vagaries of the calibration curve and not by genuine information as to the true calendar age of the sample. But one shouldn’t pay too much attention to PDF shapes; they can be misleading. What is most important in my view is the calendar age ranges the PDF provides, which for one-sided ranges follow directly from percentage points of the posterior CDF.

By a one-sided x% range I mean the range from the lowest possible value of the parameter (here, zero) to the value, y, at which the range is stated to contain x% of the posterior probability. An x1–x2% range or interval for the parameter is then y1 − y2, where y1 and y2 are the (tops of the) one-sided x1% and x2% ranges. Technically, this is a credible interval, as it relates to Bayesian posterior probability.

By contrast, a (frequentist) x% one-sided confidence interval with a limit of y can, if accurate, be thought of as one calculated to result in values of y such that, upon indefinitely repeated random sampling from the uncertainty distributions involved, the true parameter value will lie below y in x% of cases. By definition, an accurate confidence interval exhibits perfect frequentist coverage and so represents, for an x% interval, exact probability matching. If one-sided Bayesian credible intervals derived using a particular prior pass that test then they and the prior used are said to be probability matching. In general, Bayesian posteriors cannot be perfectly probability matching. But the simplified case presented here falls within an exception to that rule, and use of Jeffreys’ prior should in principle lead to exact probability matching.

The two posterior PDFs in Figure 2 imply very different calendar age uncertainty ranges. As OxCal reports a 95.4% range, I’ll start with the 95.4% ranges lying between the 2.3% and 97.7% points of each posterior CDF. Using a uniform prior, that range is 365–1567 years. Using Jeffreys’ prior, the objective Bayesian 2.3–97.7% range is 320–1636 years – somewhat wider. But for a 5–95% range, the difference is large: 395–1472 years using a uniform prior versus 333–1043 years using Jeffreys’ prior.

Note that OxCal would report a 95.4% highest posterior density (HPD) range rather than a range lying between the 2.3% and 97.7% points the posterior CDF. A 95.4% HPD range is one spanning the region with the highest posterior densities that includes 0.954 probability in total; it is necessarily the narrowest such range. HPD ranges are located differently from those with equal probability in both tails of a probability distribution; they are narrower but not necessarily better.

What about confidence intervals, a non-Bayesian statistician would rightly ask? The obvious way of obtaining confidence intervals is to use likelihood-based inference, specifically the signed root log-likelihood ratio (SRLR). In general, the SRLR only provides approximate confidence intervals. But where, as here, the parameter involved is a monotonic transform of a variable with a Gaussian distribution, SRLR confidence intervals are exact. So what are the 2.3–97.7% and 5–95% SRLR-derived confidence intervals? They are respectively 320–1636 years and 333–1043 years – identical to the objective Bayesian ranges using Jeffreys’ prior, but quite different from those using a uniform prior. I would argue that the coincidence of the Jeffreys’ prior derived objective Bayesian credible intervals and the SRLR confidence intervals reflects the fact that here both methods provide exact probability matching.

4. Numerical testing of different methods using the stylised calibration curve

Whilst an example is illuminating, in order properly to compare the performance of the different methods one needs to carry out repeated testing of probability matching based on a large number of samples: frequentist coverage testing. Although some Bayesians reject such testing, most people (including most statisticians) want a statistical inference method to produce, over the long run, results that accord with relative frequencies of outcomes from repeated tests involving random draws from the relevant probability distributions. By drawing samples from the same uniform calendar age distribution on which Bronk Ramsey’s method is predicated, we can test how well each method meets that aim. This is a standard way of testing statistical inference methods. Clearly, one wants a method also to produce accurate results for samples that – unbeknownst to the experimenter – are drawn from individual regions of the age range, and not just for samples that have an equal probability of having come from any year throughout the entire range.

I have accordingly carried out frequentist coverage testing, using 10,000 samples drawn at random uniformly from both the full extent of my calibration curve and from various sub-regions of it. For each sampled true calendar age, a 14C determination age is sampled randomly from a Gaussian error distribution. I’ve assumed an error standard deviation of 30 14C years, to include calibration curve uncertainty as well as that in the 14C determination. Whilst in principle I should have used somewhat different illustrative standard deviations for different regions, doing so would not affect the qualitative findings.

In these frequentist coverage tests, for each integral percentage point of probability the proportion of cases where the true calendar age of the sample falls below the upper limit given by the method involved for a one-sided interval extending to that percentage point is computed. The resulting proportions are then plotted against the percentage points they relate to. Perfect probability matching will result in a straight line going from (0%, 0) to (100%,1). I test both subjective and objective Bayesian methods, using for calendar age respectively a uniform prior and Jeffreys’ prior. I also test the signed root log-likelihood ratio method.

For the Bayesian method using a uniform prior, I also test the coverage of the HPD regions that OxCal reports. As HPD regions are two-sided, I compute the proportion of cases in which the true calendar age falls within the calculated HPD region for each integral percentage HPD region. Since usually only ranges that contain a majority of the estimated posterior probability are of interest, only the right hand half of the HPD curves (HPD ranges exceeding 50%) is of practical significance. Note that the title and y-axis label in the frequentist coverage test figures refer to one-sided regions and should in relation to HPD regions be interpreted in accordance with the foregoing explanation.

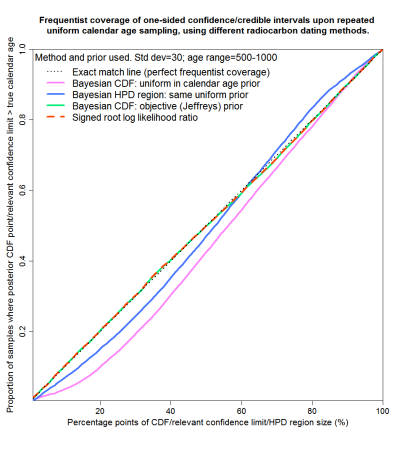

I’ll start with the entire range, except that I don’t sample from the 100 years at each end of the calibration curve. That is because otherwise a significant proportion of samples result in non-negligible likelihood falling outside the limits of the calibration curve. Figure 3 accordingly shows probability matching with true calendar ages drawn uniformly from years 100–1900. The results are shown for four methods. The first two are subjective Bayesian using a uniform prior as per Bronk Ramsey – from percentage points of the posterior CDF and from highest posterior density regions. The third is objective Bayesian employing Jeffreys’ prior, from percentage points of the posterior CDF. The fourth uses the non-Bayesian signed root log-likelihood ratio (SRLR) method. In this case, all four methods give good probability matching – their curves lie very close to the dotted black straight line that represents perfect matching.

Fig. 3: Probability matching from frequentist coverage testing with calendar ages of 100–1900 years

Fig. 3: Probability matching from frequentist coverage testing with calendar ages of 100–1900 years

Now let’s look at sub-periods of the full 100–1900 year period. I’ve picked periods representing both ranges over which the calibration curve is mainly flattish and those where it is mainly steep. I start with years 100–500, over most of which the calibration curve is steep. The results are shown in Figure 4. Over this period, SRLR gives essentially perfect matching, while the Bayesian methods give mixed results. Jeffreys’ prior gives very good matching – not quite perfect, probably because for some samples there is non-negligible likelihood at year zero. However, posterior CDF points using a uniform prior don’t provide very good matching, particularly for small values of the CDF (corresponding to the lower bound of two-sided uncertainty ranges). Posterior HPD regions provide rather better, but still noticeably imperfect, matching.

Fig. 4: Probability matching from frequentist coverage testing with calendar ages of 100–500 years

Fig. 4: Probability matching from frequentist coverage testing with calendar ages of 100–500 years

Figure 5 shows results for the 500–1000 range, which is flat except near 1000 years. The conclusions are much as for 100–500 years save that Jeffreys’ prior now gives perfect matching and that mismatching from posterior CDF points resulting from a uniform prior give smaller errors (and in the opposite direction) than for 100–500 years.

Fig. 5: Probability matching from frequentist coverage testing with calendar ages of 500–1000 years

Fig. 5: Probability matching from frequentist coverage testing with calendar ages of 500–1000 years

Now we’ll take the 1000–1100 years range, which asymmetrically covers a steep region in between two plateaus of the calibration curve. As Figure 6 shows, this really separates the sheep from the goats. The SRLR and objective Bayesian methods continue to provide virtually perfect probability matching. But the mismatching from the posterior CDF points resulting from a uniform prior Bayesian method is truly dreadful, as is that from HPD regions derived using that method. The true calendar age would only lie inside a reported 90% HPD region for some 75% of samples. And over 50% of samples would fall below the bottom of a 10–90% credible region given by the posterior CDF points using a uniform prior. Not a very credible region at all.

Fig. 6: Probability matching from frequentist coverage testing with calendar ages of 1000–1100 years

Fig. 6: Probability matching from frequentist coverage testing with calendar ages of 1000–1100 years

Figure 7 shows that for the next range, 1100–1500 years, where the calibration curve is largely flat, the SRLR and objective Bayesian methods again provide virtually perfect probability matching. However, the uniform prior Bayesian method again fails to provide reasonable probability matching, although not as spectacularly badly as over 1000–1100 years. In this case, symmetrical credible regions derived from posterior CDF percentage points, and HPD regions of over 50% in size, will generally contain a significantly higher proportion of the samples than the stated probability level of the region – the regions will be unnecessarily wide.

Fig. 7: Probability matching from frequentist coverage testing with calendar ages of 1100–1500 years

Fig. 7: Probability matching from frequentist coverage testing with calendar ages of 1100–1500 years

Finally, Figure 8 shows probability matching for the mainly steep 1500–1900 years range. Results are similar to those for years 100–500, although the uniform prior Bayesian method gives rather worse matching than it does for years 100–500. Using a uniform prior, the true calendar age lies outside the HPD region noticeably more often than it should, and lies beyond the top of credible regions derived from the posterior CDF twice as often as it should.

Fig. 8: Probability matching from frequentist coverage testing with calendar ages of 1500–1900 years

5. Discussion and Conclusions

The results of the testing are pretty clear. In whatever range the true calendar age of the sample lies, both the objective Bayesian method using a noninformative Jeffreys’ prior and the non-Bayesian SRLR method provide excellent probability matching – almost perfect frequentist coverage. Both variants of the subjective Bayesian method using a uniform prior are unreliable. The HPD regions that OxCal provides give less poor coverage than two-sided credible intervals derived from percentage points of the uniform prior posterior CDF, but at the expense of not giving any information as to how the missing probability is divided between the regions above and below the HPD region. For both variants of the uniform prior subjective Bayesian method, probability matching is nothing like exact except in the unrealistic case where the sample is drawn equally from the entire calibration range – in which case over-coverage errors in some regions on average cancel out with under-coverage errors in other regions, probably reflecting the near symmetrical form of the stylised overall calibration curve.

I have repeated the above tests using 14C error standard deviations of 10 years and 60 years instead of 30 years. Results are qualitatively the same.

Although I think my stylised calibration curve captures the essence of the principal statistical problem affecting radiocarbon calibration, unlike real 14C calibration curves it is monotonic. It also doesn’t exhibit variation of calibration error with age, but such variation shouldn’t have a significant impact unless, over the range where the likelihood function for the sample is significant, it is substantial in relation to 14C determination error. Non-monotonicity is more of an issue, and could lead to noticeable differences between inference from an objective Bayesian method using Jeffreys’ prior and from the SRLR method. If so, I think the SRLR results are probably to be preferred, where it gives a unique contiguous confidence interval. Jeffreys’ prior, which in effect converts length elements in 14C space to length elements in calendar age space, may convert single length elements in 14C space to multiple length elements in calendar age space when the same 14C age corresponds to multiple calendar ages, thus over-representing in the posterior distribution the affected parts of the 14C error distribution probability. Initially I was concerned that the non-monotonicity problem was exacerbated by the existence of calibration curve error, which results in uncertainty in the derivative of 14C age with respect to calendar age and hence in Jeffreys’ prior. However, I now don’t think that is the case.

Does the foregoing mean the SRLR method is better than an objective Bayesian method? In this case, perhaps, although the standard form of SRLR isn’t suited to badly non-monotonic parameter–data relationships and non-contiguous uncertainty ranges. More generally, the SRLR method provides less accurate probability matching when error distributions are neither normal nor a transforms of a normal.

Many people may be surprised that the actual probability distribution of the calendar date of samples for which radiocarbon determinations are carried out is of no relevance to the choice of a prior that leads to accurate uncertainty ranges and hence is, IMO, appropriate for scientific inference. Certainly most climate scientists don’t seem to understand the corresponding point in relation to climate sensitivity. The key point here is that the objective Bayesian and the SRLR methods both provide exact probability matching whatever the true calendar date of the sample is (provided it is not near the end of the calibration curve). Since they provide exact probability matching for each individual calendar date, they are bound to provide exact probability matching whatever probability distribution for calendar date is assumed by the drawing of samples.

How do the SRLR and objective Bayesian methods provide exact probability matching for each individual calendar date? It is easier to see that for the SRLR method. Suppose samples having the same fixed calendar date are repeatedly drawn from the radiocarbon and calibration uncertainty distributions. The radiocarbon determination will be more than two standard deviations (of the combined radiocarbon and calibration uncertainty level) below the exact calibration curve value for the true calendar date in 2.3% of samples. The SRLR method sets its 97.7% bound at two standard deviations above the radiocarbon determination, using the exact calibration curve to convert this to a calendar date. That bound must necessarily lie at or above the calibration curve value for the true calendar date in 97.7% of samples. Ignoring non-monotonicity, it follows that the true calendar date will not exceed the upper bound in 97.7% of cases. The bound is, given the statistical model, an exact confidence limit by construction. Essentially Jeffreys’ prior achieves the same result in the objective Bayesian case, but through operating on probability density rather than on its integral, cumulative probability.

Bayesian methods also have the advantage that they can naturally incorporate existing information about parameter values. That might arise where, for instance, a non-radiocarbon based dating method had already been used to estimate a posterior PDF for the calendar age of a sample. But even assuming there is genuine and objective probabilistic prior information as to the true calendar year, what the textbooks tell one to do may not be correct. Suppose the form of the data–parameter relationship differs between the existing and new information, and it is wished to use Bayes’ theorem to update, using the likelihood from the new radiocarbon measurement, a posterior PDF that correctly reflects the existing information. Then simply using that existing posterior PDF as the prior and applying Bayes’ theorem in the standard way will not give an objective posterior probability density for the true calendar year that correctly combines the information in the new measurement with that in the original posterior PDF. It is necessary to use instead a modified form of Bayesian updating (details of which are set out in my paper at http://arxiv.org/abs/1308.2791). It follows that it the existing information is simply that the sample must have originated between two known calendar dates, with no previous information as to how likely it was to have come from any part of the period those dates define, then just using a uniform prior set to zero outside that period would bias estimation and be unscientific.

And how does Doug Keenan’s ‘discrete’ calibration method fit in to all this? So far as I can see, the uncertainty ranges it provides will be considerably closer to those derived using objective Bayesian or SRLR methods than to those given by the OxCal and Calib methods, even though like them it uses Bayes’ theorem with a uniform prior. That is because, like the SRLR and (given monotonicity) Jeffreys’ prior based objective Bayesian methods, Doug’s method correctly converts, so far as radiocarbon determination error goes, between probability in 14C space and probability in calendar year space. I think Doug’s treatment of calibration curve error avoids, through renormalisation, the multiple counting of 14C error probability that may affect a Jeffreys’ prior based objective Bayesian method when the calibration curve is non-monotonic. However, I’m not convinced that his treatment of calibration curve uncertainty is noninformative even in the absence of it varying with calendar age. Whether that makes much difference in practice, given that 14C determinant error appears normally to be the larger of the two uncertainties by some way, is unclear to me.

Does the uniform prior subjective Bayesian method nevertheless have advantages? Probably. It may cope with monotonicity better than the basic objective Bayesian method I have set out, particularly where that leads to non-contiguous uncertainty ranges. It may also make it simpler to take advantage of chronological information where there is more than one sample. And maybe in many applications it is felt more important to have realistic looking posterior PDFs than uncertainty ranges that accurately reflect how likely the true calendar date is to lie within them.

I can’t help wondering whether it might help if people concentrated on putting interpretations on CDFs rather than PDFs. Might it be better to display the likelihood function from a radiocarbon determination (which would be identical to the subjective Bayesian posterior PDF based on a uniform prior) instead of a posterior PDF, and just to use an objective Bayesian PDF (or the SRLR) to derive the uncertainty ranges? That way one would both get a realistic picture of what calendar age ranges were supported by the data, and a range that the true age did lie above or below in the stated percentage of instances.

Professor Bronk Ramsey considers that knowledge of the radiocarbon calibration curve does give us quantitative information on the prior for 14C ‘age’. He argues that the belief that in reality calendar dates of samples are spread uniformly means that a non-uniform prior in 14C age is both to be expected and is what you would want. That would be fine if the prior assumption made about calendar dates actually conveyed useful information.

Where genuine prior information exists, one can suppose that it is equivalent to a notional observation with a certain probability density, from which a posterior density of the parameter given that observationhas been calculated using Bayes’ theorem with a noninformative ‘pre-prior’, with the thus computed posterior density being employed as the prior density (Hartigan, 1965).

However, a uniform prior over the whole real line conveys no information. Under Hartigan’s formulation, it’s notional observation has a flat likelihood function and a flat pre-prior. Suppose the transformation from calendar date to 14C age using the calibration curve is effected before the application of Bayes’ theorem to the notional observation for a uniform prior. Then its likelihood function remains flat – what becomes non-uniform is the pre-prior. The corresponding actual prior (likelihood function for notional observation multiplied by the pre-prior) in 14C age space is therefore nonlinear, as claimed. But when the modified form of Bayesian updating set out in my arXiv paper is applied, that prior has no influence on the shape of the resulting posterior PDF for true 14C age and nor, therefore, for the posterior for calendar date. In order to affect an objective Bayesian posterior, one has to put some actual prior information in. For instance, that could be in the form of a Gaussian distribution for calendar date. In practice, it may be more realistic to do so for the relationship between the calendar dates of two samples, perhaps based on their physical separation, than for single samples.

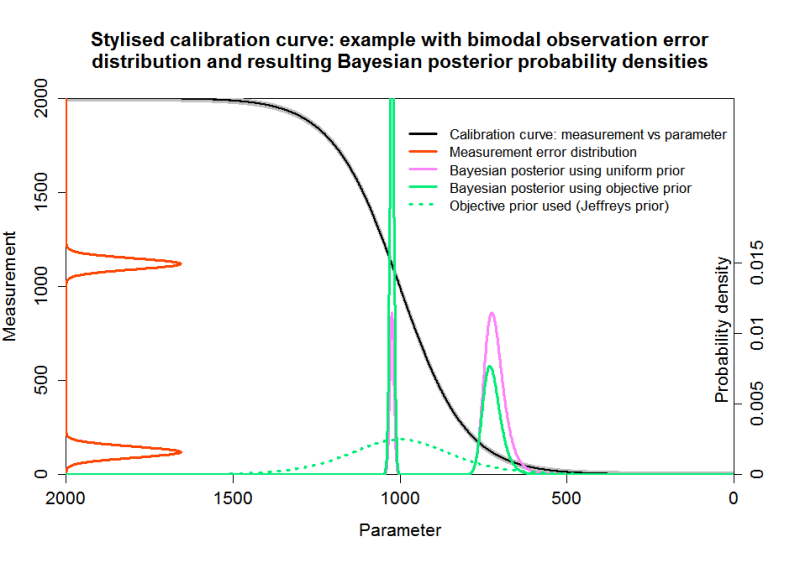

Let me give a hypothetical non-radiocarbon example that throws light on the uniform prior issue. Suppose that a satellite has fallen to Earth and the aim is to recover the one part that will have survived atmospheric re-entry. It is known that it will lie within a 100 km wide strip around the Earth’s circumference, but there is no reason to think it more likely to lie in any part of that strip than another, apart from evidence from one sighting from space. Unfortunately, that sighting is not very precise, and the measurement it provides (with Gaussian error) is non-linearly related to distance on the ground. Worse, although the sighting makes clear which side of the Earth the satellite part has hit, the measurement is aliased and sightings in two different areas of the visible side cannot be distinguished. The situation is illustrated probabilistically in Figure 9.

Fig. 9: Satellite part location problem

Fig. 9: Satellite part location problem

In Figure 9, the measurement error distribution is symmetrically bimodal, reflecting the aliasing. Suppose one uses a uniform prior for the parameter, here ground distance across the side of the Earth visible when the sighting was made, on the basis that the item is as likely to have landed in any part of the 100 km wide strip as in any other. Then the posterior PDF will indicate an 0.825 probability that the item lies at a location below 900 (in the arbitrary units used). If one instead uses Jeffreys’ prior, the objective Bayesian posterior will indicate a 0.500 probability that it does so. If you had to bet on whether the item was eventually found (assume that it is found) at a location below 900, what would you consider fair odds, and why?

Returning now to radiocarbon calibration, there seems to me no doubt that, whatever the most accurate method available is, Doug is right about a subjective Bayesian method using a uniform prior being problematical. By problematical, I mean that calibration ranges from OxCal, Calib and similar calibration software will be inaccurate, to an extent varying from case to case. Does that mean Bronk Ramsey is guilty of research misconduct? As I said initially, certainly not in my view. Subjective Bayesian methods are widely used and are regarded by many intelligent people, including statistically trained ones, as being theoretically justified. I think views on that will eventually change, and the shortcomings and limits of validity of subjective Bayesian methods will become recognised. We shall see. There are deep philosophical differences involved as to how to interpret probability. Subjective Bayesian posterior probability represents a personal degree of belief. Objective Bayesian posterior probability could be seen as, ideally, reflecting what the evidence obtained implies. It could be a long time before agreement is reached – there aren’t many areas of mathematics where the foundations and philosophical interpretation of the subject matter are still being argued over after a quarter of a millennium!

A PDF of this article and the R code used for the frequentist coverage testing are available at http://niclewis.files.wordpress.com/2014/04/radiocarbon-calibration-bayes.pdf and http://niclewis.files.wordpress.com/2014/04/radiocarbon-dating-code.doc

[i] A statistical model is still involved, but no information as to the value of the parameter being estimated is introduced as such. Only in certain cases is it possible to find a prior that has no influence whatsoever upon parameter estimation. In other cases what can be sought is a prior that has minimal effect, relative to the data, on the final inference (Bernardo and Smith, 1994, section 5.4).

[ii] I am advised by Professor Bronk Ramsey that the method was originally derived by the Groningen radiocarbon group, with other notable related subsequent statistical publications by Caitlin Buck and her group and Geoff Nicholls.

127 Comments

A butterfly flaps its statistical wing,

Shudders run through that whole history thing.

==========

Should that be ‘a degree of personal belief’>?

1. No. The calibration curve takes account of changes in 14C production rates due to varying solar and geomagnetic shielding.

2. Within each hemisphere – there is a separate calibration curve for the southern hemisphere that has an offset of ~40 years (due to increased exchange of CO2 with the ocean in the southern hemisphere. Within each hemisphere 14C is assumed to be well mixed. There probably are regional deviations, but they are likely be minor.

50,000 years.

For the Holocene (and slightly beyond) tree rings of known age (via dendrochronology) are radiocarbon dated. These data are used to calculate the radiocarbon calibration curve, which can be used to infer solar activity. But for radiocarbon dating it doesn’t matter what caused the concentration of 14C to vary.

Other materials eg corals and macrofossild in varved lakes are used for older dates because trees are not available.

Actually the calibration curve beyond the treering range (ca 12 000 BP) is very decidedly shaky. And the “raw” C14 dates themselves are best regarded as minimum ages beyond c. 35 000 rcy BP.

The problem with using corals, speleothems etc is that in contrast to trees these do not derive their carbon direct from the atmosphere, so it is necessary to correct for admixture of “old” carbon, an admixture that may well have varied widely over time.

There are a number of older pleistocene treering chronologies, but unfortunately they are all “floating” and so can’t be tied down to an absolute chronology.

NIc, thanks for this. In addition to the intrinsic interest of the problem, it’s an illuminating illustration of the technique.

The graphs make it much easier to understand for somebody like me who is not familiar with the lingo but has a basic understanding of probabilities. I don’t fully understand the mathematics behind it but overall the argument presented here is pretty convincing.

Nic

this was really helpful

“Rather than representing a probabilistic description of existing evidence as to a probability distribution for the parameter being estimated, a noninformative prior primarily reflects (at least in straightforward cases) how informative, at differing values of the parameter, the data is expected to be about the parameter. That in turn reflects how precise the data are in the relevant region and how fast expected data values change with the parameter value. This comes back to the relationship between distances in parameter space and distances in data space that I mentioned earlier.”

Very interesting and well explained.

You had me until you took shots at Bayesians.

You wrote: I think views on that will eventually change, and the shortcomings and limits of validity of subjective Bayesian methods will become recognised.

Well, sure. But, if you believed that a measured quantity should never be less than one, you would treat observations of 0.87 with suspicion. In practical terms, people are subjective Bayesians all the time. Richard Feynman said it well:

It is probably better to realize that the probability concept is in a sense subjective, that it is always based on uncertain knowledge, and that its quantitative evaluation is subject to change as we obtain more information.

He said that in 1962 when Bayesian reasoning was widely deprecated.

Now, some things that are done in the name of Bayes may be a little questionable.

Chuck

chuck

I have no problem with what Feynmann said. My objection is to when subjective Bayesians misuse the available information in estimating probability, so that the result does not fairly reflect the available information. There’s often no perfect answer, I realise.

Well,I’m against misuse of anything. But,it seems to me (a non-statistician) that frequentist theory is misused even more than Bayesian. Look at all the significance tests and tests of the null hypothesis done by climate scientists. You would think it is 1960!

I was first exposed to Bayesian reasoning in the context of decision theory. It seems to me incontrovertible that if you strongly believe that A is true but a few, unreliable measurements indicate that B is slightly more likely to be true, you should bet that A is true. Frequentist reasoning leads to the opposite conclusion.

But, as I said, I’m against misuse and all tools can be misused.

Again, you posting was excellent—it was clear and instructive. Don’t let my philosophical objection confuse my fundamental point.

Chuck

Chuck,

“…It seems to me incontrovertible that if you strongly believe that A is true but a few, unreliable measurements indicate that B is slightly more likely to be true, you should bet that A is true….”

The problem with this is the “belief” element. If the potential for A and B are both affected by the measurements of B, regardless of how unreliable measures of B might be, you simply don’t have enough information to make an informed decision. Both views should be stated and analyzed, and the need for better information highlighted. One point that underscores this is several recent metastudies that have pointed a pattern of earlier studies investigating a given hypothesis, particularly in medicine, that tend to be more confirmatory than follow up studies. That is, early studies tend look very positive, while support in later investigations tends to weaken. So, treating earlier studies as more indicative of reality – they are the prior information available – you would, if I followed your argument, find yourself betting against increasing odds of being wrong.

This article is an example of the reason I like reading when a mathematically sound person writes to convey information! I simply find it enjoyable and enlightning. Reminded me of the motivation/summary lecture prior to getting down to the nitty gritty of the mathematics proof.

But one question for Nic:

How far off is the normalized(f(x)) where f(x) = g(C(x)) where C is the calibration curve, g(y) is the pdf for the Radio Determined Age and then the normalized(f) is the pdf for the calendar age, as compared to the Bayesian Posterior using Uniform Prior?

timothy

If I understand your question correctly, they will be identical, since the [posterior] pdf for the 14C determined age is identical to its likelihood function (if one uses a uniform in 14C prior, which would be usual with a Gaussian error distribution).

To convert the posterior pdf g(y) for the 14C determined age into a pdf for calendar age one would need to multiply by the derivative of the inverse transform, so one would have:

f(x) = g(C(x)) dC(x)/dx

but I think the non-monotonicity of C(x) is a problem here.

Thanks for the post. I will have to read more slowly and inwardly digest – perhaps later over the weekend.

I should perhaps clarify that I wasn’t saying the OxCal method was necessarily right, only that it was the posterior you got from a uniform prior on calender year, which was what was being commonly assumed. I would tend to take such a report only as a ‘neutral’ way to express an experimental result before combining it with a more sensible prior on calender year based on other evidence. The idea is that it makes it easier for the result to be re-used by people with different priors, and makes it clear what part the radiocarbon evidence is contributing to the result versus any other evidence. I agree with Nic that assuming a uniform distribution as one’s actual prior is a risky operation.

I’m pretty sure that in any reasonable archaeological context you’re always going to have some prior information. Something found in a sealed Egyptian tomb is not equally likely to have been put there 40 years ago as 4000 years ago.

My view was that the meaning and proper interpretation of the OxCal result was poorly explained, and that they should have been able to answer Doug’s questions had they properly understood the method themselves. Doug’s confusion was understandable.

—

Nic’s post reminds me of the story I once heard about the man who lost his keys at night, and was walking from street light to street light, pausing at each to search in the patch of illumination. When asked why he was only looking under the street lights, surely he didn’t think the keys were any more likely to have fallen out there, he replied “Maybe not, but I think I’m more likely to find them there.”

“I’m pretty sure that in any reasonable archaeological context you’re always going to have some prior information. Something found in a sealed Egyptian tomb is not equally likely to have been put there 40 years ago as 4000 years ago.”

Some information yes, but you might be surprised by how often objects found in apparently undisturbed and sealed context turn out to be intrusive (mostly younger) when dated by C14 (or other method).

One of the loud archaeological debates concerns contamination of younger material by older carbon when the contamination is not due to a reservoir effect, e.g the debate over the early dates at Meadowcroft Rockshelter in Pennsylvania. In fact, to significantly increase the apparent age of a sample would necessitate significantly increasing the bulk of the sample with the contaminant. It is vastly simpler to contaminate a sample with younger material without detectably modifying the sample. The biggest hazard to a good date estimate is still the laboratory handling.

Steve M, Mosher, Dave

Thanks! BTW, it’s worth looking at my R code – there are a couple of useful stand-alone utilities included, in particular one that computes specified percentage points for multiple PDFs or CDFs (and from [profile] likelihoods) and can also add them to a graph as box plots.

Forgive me… (really, forgive me)… But when I saw this post and saw that you were authoring it, I was expecting some eventual prose directly engaging the latest response(criticism) to your recent paper:

http://www.realclimate.org/index.php/archives/2014/04/shindell-on-constraining-the-transient-climate-response/

I pretty-much tore through your words searching for the payoff and didn’t find it. Obviously it’s no matter, considering you’re free to write about whatever whenever 😉 …I just think there’s a couple people out there interested to hear if the case is closed there, or if there is still a matter of non-impassed debate regarding each other’s work.

I shall respond to Shindell, but I’ve had lots to do and Bayesian stats stuff also interests me. FWIW, I think the effect Shindell is making a noise about is likely very small in the real world.

As an initial move, I posted a comment on Troy Master’s blog (he also had a post attacking Shindell’s paper), in which I pointed out that Shindell’s RC piece, far from answering all the substantive points in my CA post, had simply ignored most of them. See http://troyca.wordpress.com/2014/03/15/does-the-shindell-2014-apparent-tcr-estimate-bias-apply-to-the-real-world/#comment-1427 .

Ah, I see it now.

Such is life in the blogosphere– it’s really hard to keep up with so many places where the things you’re looking for might be found.

A problem for all of us. Nic posted this in a comment on Bishop Hill ten days ago, which is how I knew to look in troyca’s direction:

Keep watching all these spaces 🙂

I’d like to take a minute to compliment you on the quality of your prose. It’s hard to write clearly about this and you did an excellent job.

Thanks, Tom!

Nic,

Thanks for the clearly written exposition of how a standard “subjective” Bayesian analysis can sometimes differ dramatically from an analysis based on an “objective” Bayesian prior (specifically, Jeffreys’ prior). As I’ll explain below, it illuminates very well the way in which your philosophical standpoint is not one I agree with.

However, I am in agreement regarding the technical details and calculations you give, with one exception – after showing that the subjective Bayesian credible interval based on the posterior CDF has perfect probability matching when the parameter value is randomly chosen from the subjective prior that is used, you remark that this is “probably reflecting the near symmetrical form of the stylised overall calibration curve”. Actually, it has nothing to do with that, and in fact you didn’t even need to run the program to find this out. One can easily prove theoretically that the probability that the credible interval based on the posterior CDF will contain the true parameter value will always be exactly as specified, if you average over true parameter values drawn from the prior used to construct the posterior. Subjective Bayesian procedures are always perfectly consistent on their own terms.

Given that, it’s easy to see why a subjective Bayesian method will usually NOT produce perfect probability matching when averaging over a distribution for true parameter values that is different from the prior it uses. In particular, if you draw true parameter values from a more restricted interval (or as an extreme, fix the true parameter to a single value), the subjective Bayesian method will think that some values are possible that in fact never occur, while thinking other values are less probable than they actually are (since with the range restricted, those values that remain possible occur more often). This is no surprise to subjective Bayesians, though the carbon-14 dating example illustrates it in particularly nice fashion.

That the “objective” Bayesian method using Jeffreys’ prior will produce perfect probability matching is most easily seen as being due to the general fact that an analysis using the Jeffreys’ prior is not affected by applying some monotonic transformation to the parameter (and then interpreting the results as transformed, of course). Standard frequentist tests of a null hypothesis based on a Gaussian observation are also unaffected by such a monotonic transformation. So in both cases, one can construct a confidence/credible interval for the carbon-14 age by well-known methods (that exhibit perfect probability matching), and then simply transform the endpoints of this interval to calendar years using the calibration curve (which I’ll assume is known exactly, since uncertainty in it doesn’t seem to really affect the argument). The result will also have perfect probability matching.

But it doesn’t follow that these intervals with perfect probability matching actually make any sense. As you seem to realize, the posterior probability density function obtained using Jeffreys’ prior is both bizarre and unbelievable. In particular, calendar ages where the calibration curve is almost flat have almost zero posterior probability density, even when they are entirely compatible with the observed data. The posterior probability that the true calendar age is in some interval over which the calibration curve is almost flat is also close to zero, so it’s not just a case of some funny problem with interpreting what a probability density is. There’s just no way this makes any sense – for instance, in your example of Fig. 2, it makes no sense to conclude that the calendar age has almost zero probability of being in the interval 750 to 850, when these calendar ages are amongst those that are MOST compatible with the data, and we are assuming that there is no specific prior knowledge that would eliminate years in this range. Similarly, it makes no sense to conclude that the interval from 1100 to 1400 has almost zero probability, even though these years produce a fit to the data that is only modestly worse than the years with highest likelihood.

Since the posterior distribution obtained using Jeffreys’ prior is unbelievable, it provides no reason to believe in the credible interval computed from this posterior distribution (ie, regardless of whether the credible region is actually good, the fact that it was obtained from this unbelievable posterior distribution is not a valid reason to think it is good).

The frequentist confidence interval (which contains all points not rejected by a null hypothesis test) will of course have the proper coverage probability. But this is of no interest, since nobody cares about this coverage property – some people they may THINK that they care, but they really only care about the result of surreptitiously swapping the order of conditioning, converting the confidence interval into a pseudo-Bayesian credible interval, since that’s what they need to actually DO anything with the interval (other than putting it in a paper to satisfy some ritualistic requirement). Interpreting confidence intervals in this swapped fashion is very common, and may cause little harm when available prior information is vague anyway, but for carbon-14 age, there is actually very strong prior information (if the calibration curve is highly non-linear).

So I don’t think there is any valid justification for using the confidence/credible intervals you produce. Now, one problem with discussing this is that no actual use for the intervals has been specified. It’s quite possible that such intervals don’t answer the question that needs answering anyway, and that instead what is needed is, say, the posterior probability that the calendar age is between 1100 and 1400 (with these endpoints having been pre-specified as the ones of interest). If this range happens to lie entirely within or entirely outside the credible interval you found, you get a rough answer of sorts, but in general you should just directly find the answer to your actual question. One advantage of Bayesian inference using a posterior distribution is the ease of answering all sorts of questions like this. But it doesn’t work if your posterior distribution is nonsensical, as is the case here if you use Jeffreys’ prior.

Your satellite example is of interest because for this example you have explicitly specified the question of interest – where should we start searching in order to recover the satellite with the least effort? (Eg, perhaps it’s enough to decide whether we should first look below position 900, or above, as you mention.) You have also clearly indicated that the prior knowledge of the satellite’s location is uniform over the strip. And though you don’t specify the measurement process in detail, you’re also clearly assuming that its properties are known, and the actual measurement gives the likelihood function you plot.

With these assumptions, there is no doubt that the correct method is found by computing posterior probabilities based on the “subjective” uniform prior. It is NOT correct to use Jeffreys’ prior. This is just a mathematical fact. Subjective Bayesian inference is completely consistent within its own world, and given all your assumptions, that is the world we are dealing with. You will find this if you run simulations of satellites falling at positions uniformly distributed on the strip, sightings occurring with some measurement process such as you describe, inferences being done using the uniform prior and the Jeffreys’ prior, and people placing bets on the basis of one inference or the other. The bets based on Jeffreys’ prior will not win as much money on average as those based on the “subjective” uniform prior.

Now, in real problems, the issues are seldom so clear. For carbon-14 dating, it may be that posterior probabilities of any sort are not what is needed. The sample is probably being dated as part of some wider research project, and what is needed in the wider context may simply be the likelihood as a function of calendar year, with no need to combine this with any prior – instead, it will contribute one factor to the likelihood function of some grander model. Of course, if you know the posterior density based on a uniform prior, that immediately tells you the likelihood function too, so the distinction may be a bit academic.

For other problems (but not the carbon-14 dating problem as you’ve described it), issues arise with “nuisance parameters”, that affect the distribution of the data, but aren’t of direct interest. If there are lots of these, it becomes infeasible to just report the likelihood function, and the prior for the nuisance parameters can affect the posterior for the parameter of interest. I think statisticians working on “objective” or “reference” priors are probably mostly motivated by issues of this sort. For the problems in your post, however, there are no nuisance parameters, so this motive for using an “objective” prior is absent.

More generally, going beyond carbon-14 dating, you should note that using uniform priors is certainly not general practice for subjective Bayesians. In fact, most subjective Bayesians would never use a uniform prior for an unbounded quantity, since this does not correspond to any actual, proper probability distribution. (An exception would be when it’s clear that the prior has little influence anyway, and it’s just easier to use a uniform prior.)

The cardinal rule for Bayesian inference is DON’T IGNORE INFORMATION. This is obvious for data – if you’ve collected 1000 data points, it’s not valid to pick out 300 of them and report a posterior distribution that ignores the other 700. But it’s also true for prior information. For instance, a posterior distribution based on a uniform prior for an unbounded positive quantity is not valid when (as is always the case) you have reason to think that, past a certain point, large values of the parameter are highly implausible. Criticism of priors used in a subjective Bayesian analysis is entirely appropriate, but blindly using Jeffreys’ prior is not.

Radford Neal

Radford,

Thanks for commenting in detail. I think the fundamental difference between us is that you assume that genuine prior information exists as to the true calendar age of a sample, whereas I do not. I agree that, if genuine prior information does exist, then Jeffreys’ prior may not be the best choice. But your statement: “Given that, it’s easy to see why a subjective Bayesian method will usually NOT produce perfect probability matching when averaging over a distribution for true parameter values that is different from the prior it uses” reveals a major problem. In general, one doesn’t know what the distribution for true parameter values is.

Bronk Ramsey’s 2009 paper refers to the argument that “we should not include anything but the dating information and so the prior [for calendar age] becomes a constant”. It states that they normally do so iff there is a single event, setting p(tᵢ) ~ U( ∞,∞). To me, those statements show an intention for the uniform prior to represent ignorance, not a justified belief that the sample was in fact generated by a process that resulted in it having equal probability of it having originated in any calendar year and/or falling within a particular date range. And from a physical point of view, there could well be other probability distributions that are more plausible, such as exponential.

A method that only provides reliable uncertainty ranges if the sample was generated by a process that produces a distribution of sample ages matching as to both shape and extent the prior distribution used seems pretty unsatisfactory to me. By contrast, both an objective Bayesian method using Jeffreys’ prior and the SRLR method will provide exact probability matching whatever distribution of sample ages the process that actually generated the sample produces.

We agree that the posterior PDF produced by use of Jeffreys’ prior may look artificial. But I don’t agree with you that means one shouldn’t believe in credible intervals computed from such a posterior distribution. IMO, the probability matching properties of such credible intervals make them perfectly believable. Much more believable than credible intervals resulting from use of a subjective prior, when one doesn’t have any good reason to think that prior accurately reflects genuine prior information.

As I wrote, I think it may be more helpful here to report the likelihood function and to think of the posterior PDF as a way of generating a CDF and hence credible intervals rather than being useful in itself. I agree that realistic posterior PDFs can be very useful, but if the available information does not enable generation of a believable posterior PDF then why should it be right to invent one?

As you say, we don’t have the problem of nuisance parameters here, but I don’t see that as a reason against using noninformative prior where there is no genuine prior information.

You didn’t interpret my satellite example in the way I envisaged, no doubt because I didn’t express it very well and also because of its physical characteristics. My words “but there is no reason to think it more likely to lie in any part of that strip than another” were intended to indicate total ignorance, not that prior knowledge of the satellite’s location was equivalent to a uniform distribution over the strip. There is a difference. You are assuming it is known that the process which generated the impact location results in it being equally likely to lie in any part of the strip, so that there is genuine prior information. Whilst that is physically plausible, it was not specified. If you do make that assumption, then I agree with your analysis.

I agree with your point about not ignoring information. I have another cardinal rule: DON’T INVENT INFORMATION.

Nic: … you assume that genuine prior information exists as to the true calendar age of a sample, whereas I do not.

Well, subjective Bayesians think that there is always prior information. I do have my doubts about this in extreme cases like assigning prior probabilities to different cosmological theories, where it’s hard to see why people should have evolved to have intuitive knowledge of what’s plausible, but we’re not dealing with that sort of problem here. People have all sorts of information about what are more or less plausible dates for old pieces of parchment, tree stumps, or whatever. It’s unlikely that this will lead to a uniform prior, however, which is why I suspect that people are using that prior just as a way of communicating the likelihood function (while possibly being a bit confused about what they’re really doing).

Nic: We agree that the posterior PDF produced by use of Jeffreys’ prior may look artificial.

The posterior PDF produced by use of Jeffreys’ prior doesn’t just look “artificial”. It looks completely wrong. I think this is the most crucial point. Your example isn’t one that should convince readers to use Jeffreys’ prior because it gives exact probabililty matching for credible intervals. It’s an example that should convince readers that Jeffreys’ prior is flawed, and probability matching is not something one should insist on. Could there possibly be a clearer violation of the rule “DON’T INVENT INFORMATION”? The prior gives virtually zero probability to large intervals of calendar age based solely on the shape of the calibration curve, with this curve being the result of physical processes that almost certainly have nothing to do with the age of the sample.

Statistical inference procedures are ultimately justified as mathematical and computational formalizations of common sense reasoning. We use them because unaided common sense tends to make errors, or have difficulty in processing large amounts of information, just as we use formal methods for doing arithmetic because guessing numbers by eye or counting on our fingers is error prone, and is anyway infeasible for large numbers. So the ultimate way of judging the validity of statistical methods is to apply them in relatively simple contexts (such as this) and check whether the results stand up to well-considered common sense scrutiny. In this example, Jeffreys’ prior fails this test spectacularly.

I think you would maybe agree that Jeffreys’ prior is not to be taken seriously, given that you say the following:

Nic: … think of the posterior PDF as a way of generating a CDF and hence credible intervals rather than being useful in itself. I agree that realistic posterior PDFs can be very useful, but if the available information does not enable generation of a believable posterior PDF then why should it be right to invent one?

But with this comment, you seem to have adopted a strange position that may be unique to you. Frequentists usually don’t have much use for a posterior PDF for any purpose. And I think “objective” Bayesians aim to produce a posterior PDF that is sensible. I’m puzzled why you would bother to produce a posterior that you don’t believe is even close to being a proper expression of posterior belief, and then use it as a justification for the credible intervals that can be derived from it. If these credible intervals have any justification, it can’t be that. And in fact, for this example, you can (and do) justify these intervals as being confidence intervals according to standard frequentist arguments (albeit ones that I think are flawed in this context). So what is the point of the whole objective Bayesian argument?

Nic: My words “but there is no reason to think it more likely to lie in any part of that strip than another” were intended to indicate total ignorance, not that prior knowledge of the satellite’s location was equivalent to a uniform distribution over the strip. There is a difference. You are assuming it is known that the process which generated the impact location results in it being equally likely to lie in any part of the strip…

I think it is impossible to maintain this distinction between a physical random process and “ignorance”, which you don’t seem willing to represent using probability (even though that’s central to all Bayesian methods, subjective or not). Archetypal random processes such as coin flips are probably not actually random, in the sense of quantum uncertainty or thermal noise, but appear random only because of our ignorance of initial conditions, which could be eliminated by suitable measuring instruments (that are not impossible according to the laws of physics).

Among both frequentists and objective Bayesians, I think there is a degree of wishful thinking from wanting to find a procedure that avoids all “subjectivity”. But it’s just not possible. Refusal to admit that it’s not possible inevitably leads to methods that produce strange results.

Radford Neal:

The criterion should not be whether the results look wrong, but whether the method (including the implicit prior) looks wrong. The Jeffreys prior on C14 age should be rejected because it implies a bizarre prior on the calibrated date of interest, given the objective information in the calibration curve. This is why it gives bizarre posteriors sometimes, but we should not reject the results just because the posterior is subjectively “wrong”.

Hu,

Certainly, when judging whether a method is valid, one should look at the details of the method, its justification, intermediate quantities like the prior, as well as the final result. But what can one say if someone just accepts all the intermediate things that look wrong to you? The only ultimate ground for comparison is the final result, and the only ultimate judge is whether the results accord with common sense.

Now, by “common sense”, I mean a sophisticated common sense, that has contemplated any insights that theoretical analysis or computational investigation has provided, and has carefully considered whether an apparently bizarre result might actually be correct. In sufficiently complex problems, we might never be able to acquire such common sense, and just have to accept the result even if it looks “wrong”, if the method used produces results in simpler situations that do accord with common sense.

But this isn’t all that complex a problem. In Nic’s Fig. 2, a look at the posterior PDF produced with the objective prior should immediately produce the reaction, “Why do calendar years from 750 to 850 have virtually zero probabillity! That doesn’t look right…”. And it’s not right.

““Why do calendar years from 750 to 850 have virtually zero probabillity! That doesn’t look right…”.”

I think it’s a matter of understanding what the approach is trying to say. The objective Bayesian approach is trying to discount our prior knowledge and tell us only what the evidence tells us, and the evidence that we’ve obtained offers us no support for the hypothesis that the calendar date is 750-850, because the experimental method used doesn’t provide that information. It is telling us that radiocarbon dating is blind in this region, due to the plateau in the calibration curve. For us to conclude from this evidence that the date is in this region, we would have to suppose a very narrow, precisely defined measurement error, so narrow that it has a very low probability, and which is further diluted by being spread out over the entire plateau. Our C14 measurement is not precise enough to resolve this interval.

When we look at a result and say “That doesn’t look right…”, that’s our prior knowledge speaking. Your assessment that there is a good chance of the true calendar date being in the 750-850 range is not based on the radiocarbon evidence, it’s based on your prior expectations about how the world is.

I agree that taken as a best estimate of how the world is, it’s not right. But that’s not what the method is designed for.

Nullius in Verba: The objective Bayesian approach is trying to discount our prior knowledge and tell us only what the evidence tells us, and the evidence that we’ve obtained offers us no support for the hypothesis that the calendar date is 750-850, because the experimental method used doesn’t provide that information. It is telling us that radiocarbon dating is blind in this region, due to the plateau in the calibration curve.