A guest article by Nic Lewis

I reported in a previous post, here, a number of serious problems that I had identified in Marvel et al. (2015): Implications for climate sensitivity from the response to individual forcings. This Nature Climate Change paper concluded, based purely on simulations by the GISS-E2-R climate model, that estimates of the transient climate response (TCR) and equilibrium climate sensitivity (ECS) based on observations over the historical period (~1850 to recent times) were biased low.

I followed up my first article with an update that concentrated on land use change (LU) forcing. Inter alia, I presented regression results that strongly suggested the Historical simulation forcing (iRF) time series used in Marvel et al. omitted LU forcing. Gavin Schmidt of GISS responded on RealClimate, writing:

“Lewis in subsequent comments has claimed without evidence that land use was not properly included in our historical runs…. These are simply post hoc justifications for not wanting to accept the results.”

In fact, not only had I presented strong evidence that the Historical iRF values omitted LU forcing, but I had concluded:

“I really don’t know what the explanation is for the apparently missing Land use forcing. Hopefully GISS, who alone have all the necessary information, may be able to provide enlightenment.”

When I responded to the RealClimate article, here, I inter alia presented further evidence that LU forcing hadn’t been included in the computed value of the total forcing applied in the Historical simulation: there was virtually no trace of LU forcing in the spatial pattern for Historical forcing. I wasn’t suggesting that LU forcing had been omitted from the forcings applied during the Historical simulations, but rather that it had not been included when measuring them.

Yesterday, a climate scientist friend drew my attention to a correction notice published by Nature Climate Change, reading as follows:

“Corrected online 10 March 2016

In the version of this Letter originally published online, there was an error in the definition of F2×CO2 in equation (2). The historical instantaneous radiative forcing time series was also updated to reflect land use change, which was inadvertently excluded from the forcing originally calculated from ref. 22. This has resulted in minor changes to data in Figs 1 and 2, as well as in the corresponding main text and Supplementary Information. In addition, the end of the paragraph beginning ‘Scaling ΔF for each of the single-forcing runs…’ should have read ‘…the CO2-only runs’ (not ‘GHG-only runs’). The conclusions of the Letter are not affected by these changes. All errors have been corrected in all versions of the Letter. The authors thank Nic Lewis for his careful reading of the original manuscript that resulted in the identification of these errors.”

So, as well as the previously flagged acceptance that the F2×CO2 value of 4.1 W/m2 was wrong in the original paper, the authors now accept that LU forcing had indeed been omitted from the Historical forcing values. It had been omitted from the forcing originally calculated in ref. 22 (Miller et al. 2014); Figures 2 and 4 of that paper likewise omit LU forcing.

It is decent of the authors to acknowledge me. However, I am mystified by their claim that “The conclusions of the Letter are not affected by these changes.” Their revised primary (iRF) estimate of historical transient efficacy is, per Table S1, 1.0 (0.995 at the centre of the symmetrical uncertainty range). This means the global mean temperature (GMST) response to the aggregate forcing applying during the historical period (actually, 1906–2005) was identical, in the model, to the response to the same forcing from CO2 only. That implies its TCR can be accurately estimated by comparing the GMST response and forcing over that period. But in their conclusions they contradict this, stating that:

“GISS ModelE2 is more sensitive to CO2 alone than it is to the sum of the forcings that were important over the past century.”

and they go on to claim that:

“Climate sensitivities estimated from recent observations will therefore be biased low in comparison with CO2-only simulations owing to an accident of history: when the efficacies of the forcings in the recent historical record are properly taken into account, estimates of TCR and ECS must be revised upwards.”

I would not have left these claims unaltered if it had been my paper – not that I would ever have made the second claim in any event, since it assumes the real world behaves in the same manner as GISS-E2-R.

I also find the way that the error in the F2×CO2 value has been dealt with in the corrected paper to be unsatisfactory. The previous, incorrect, value of 4.1 W/m2 has simply been deleted, without the correct values (4.5 W/m2 for iRF and 4.35 W/m2 for ERF) being given, either there or elsewhere in the paper or in the Supplementary Information. All the efficacy, TCR and ECS estimates given in the paper scale with the relevant F2×CO2 value, so it is important. In my view it would be appropriate to provide the F2×CO2 values somewhere in the paper or the SI.
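To give a feel for why the omitted values matter, here is a minimal arithmetic sketch. It assumes only what is said above, namely that an estimate scales in direct proportion to the F2×CO2 value used; the function name is hypothetical and the numbers are those quoted in this post, not anything taken from the authors' code.

```python
# Illustrative arithmetic only: assumes an estimate is directly
# proportional to the F2xCO2 value used, as stated in the text.
F2X_ORIGINAL = 4.1   # W/m2, value deleted from the corrected paper
F2X_IRF = 4.5        # W/m2, value given in this post for iRF
F2X_ERF = 4.35       # W/m2, value given in this post for ERF


def rescale_factor(f2x_new, f2x_old=F2X_ORIGINAL):
    """Factor by which an estimate proportional to F2xCO2 changes."""
    return f2x_new / f2x_old


irf_factor = rescale_factor(F2X_IRF)
erf_factor = rescale_factor(F2X_ERF)
print(f"iRF estimates change by a factor of {irf_factor:.3f}")
print(f"ERF estimates change by a factor of {erf_factor:.3f}")
```

On that assumption, moving from 4.1 W/m2 to the correct values changes the affected estimates by roughly 10% (iRF) and 6% (ERF), which is why a reader needs to know which value was used.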

Finally, I note that none of the other serious problems with the paper that I identified have been corrected. These include:

- the use of aerosol and ozone forcing values calculated in an unrealistic climate state, even though these forcings are sensitive to climate state;

- use of ocean heat uptake – which amounts to only ~86% of total heat uptake – as a measure of total heat uptake despite the observational studies Marvel et al. critique using estimates that included non-ocean heat uptake;

- a downward bias in the equilibrium efficacy estimates, caused by not comparing the GMST response to the forcing concerned with that to CO2 forcing derived in the same way;

- various results whose values disagree significantly with the data used and/or the stated bases of calculation. For instance, all the uncertainty ranges appear to be out by a factor of two or more. (I can’t make sense of Gavin Schmidt’s justification for the way they have been calculated.)

Update 13 March 2016

Steve M asked about the changes in the corrected paper/SI from the original. Apart from those mentioned in the 10 March 2016 correction notice, I have identified the following other changes:

1) The caption to Figure 1 of the paper has been changed, by inserting “(defined with respect to 1850-1859)” after “a Non-overlapping ensemble average decadal mean changes in temperature and instantaneous radiative forcing for GISS-E2-R single-forcing ensembles (filled circles)” in the second line. As worded this definition applies to temperature as well as forcing, since the filled circles relate to both variables. But I think it is intended to apply only to the forcing change, not to the temperature change. This was triggered, I imagine, by the following exchange at RealClimate on 11 January 2016:

“Nic Lewis says:

Chris Colose, you asked “why do the volcanic-only forcings (red dots) hover around a positive value in the first graph?”. The explanation I give in my technical analysis of Marvel et al. at Climate Audit is that in Figure 1 the iRF for volcanoes appears to have been shifted by ~+0.29 W/m2 from its data values, available at http://data.giss.nasa.gov/modelforce/Fi_Miller_et_al14.txt. Why not check it out and see whether my analysis is confused, as has been suggested here?

[Response: You are confused because you are using a single year baseline, when the data are being processed in decadal means. Thus the 19th C baseline is 1850-1859, not 1850. We could have been clearer in the paper that this was the case, but the jumping to conclusions you are doing does not seem justified. – gavin]”

There was no mention anywhere in the original paper or SI of the 1850-1859 mean being used as a baseline for any variable, and it appears to have been used as the baseline solely for iRF forcing.

2) The words “for the iRF case” have also been added two lines further down, at the end of the caption for Figure 1.a.

3) The second sentence of the paragraph starting “Assuming that all forcings have the same transient efficacy as greenhouse gases” now reads: “Scaling each forcing by our estimates of transient efficacy determined from iRF we obtain a best estimate for TCR of 1.7 C (Fig. 2a) (1.6 C if efficacies are determined from ERF).” The words in brackets are new, and previously the figure before “(Fig. 2a)” was 1.7 C.

4) The ECS figure in the third sentence of the paragraph starting “We apply the same reasoning to estimates of ECS.” has been changed from 2.9 C to 2.6 C.

5) Various values in graphs have been changed.

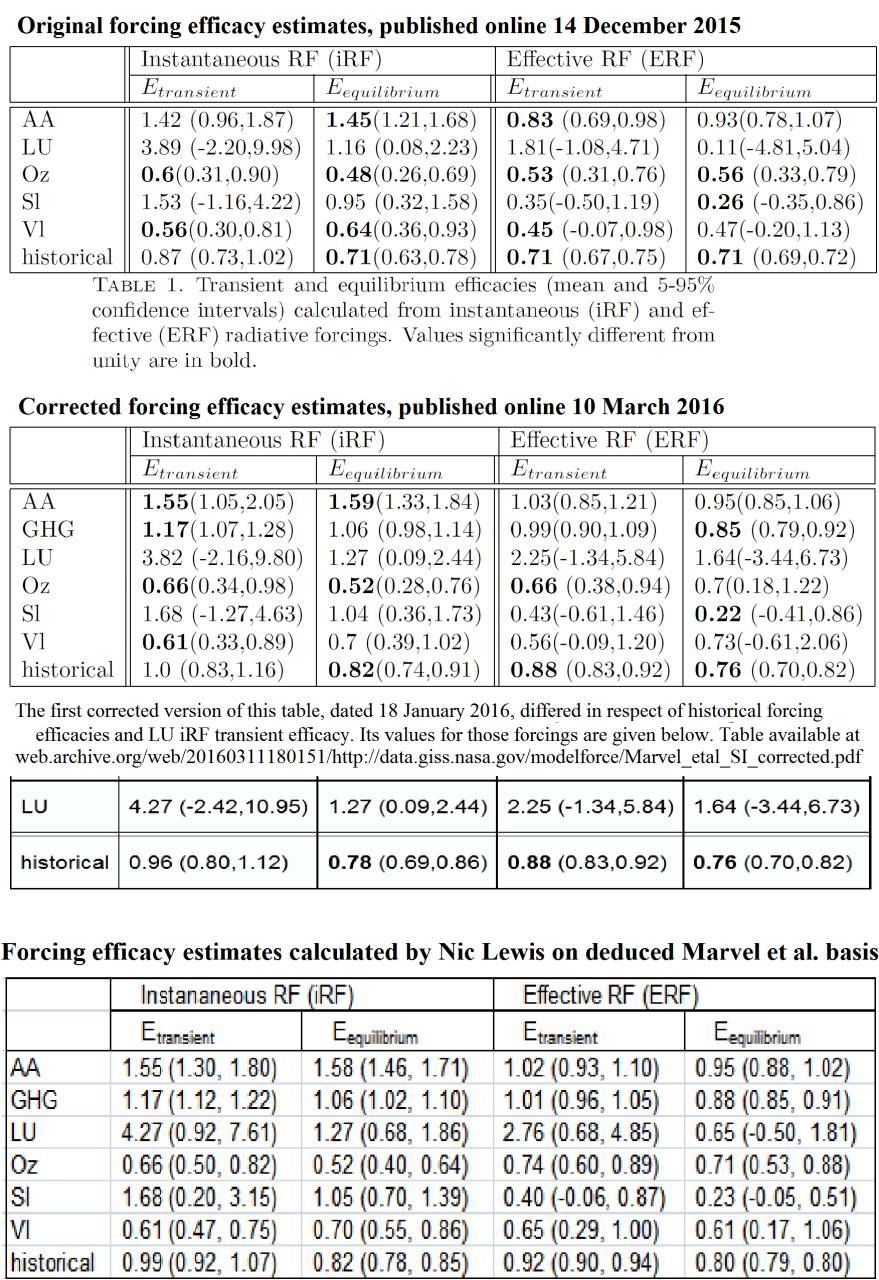

6) All the efficacy values in Table S1 in the Supplementary Information have been changed. I show below the update history for this table, along with my provisional calculations of what all the values should be.

My calculations are based on the method I have deduced Marvel et al. used to produce the iRF efficacy estimates, not on the basis stated by Marvel et al., which is significantly different in two respects. Marvel et al. state:

“In the iRF case, where annual forcing time series are available, TCR and ECS are calculated by regressing ensemble-average decadal mean forcing or forcing minus ocean heat content change rate against ensemble-average temperature change.”

and say that transient and equilibrium efficacies are defined as the ratio of the calculated TCR or ECS to published GISS-E2-R TCR and ECS values. But I can only match the Table S1 mean iRF efficacy values by regressing the other way around, that is regressing temperature change against decadal mean forcing or forcing minus ocean heat content change rate. Moreover, to match the ECS efficacy values (for which it makes a difference), I have to regress on a run-by-run basis and then average the regression slopes, rather than regressing on ensemble-average values as stated was done.
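The two differences described above can be illustrated with a small sketch on purely synthetic data. Nothing here uses GISS-E2-R output: the ensemble shape, the response coefficients, the noise levels and the helper `slope` are all hypothetical, chosen only to show (1) that regressing temperature on forcing is not the reciprocal of regressing forcing on temperature unless the fit is perfect, and (2) that averaging run-by-run slopes matches an ensemble-mean regression when the regressor is shared by all runs, but generally not when the regressor itself varies between runs (as forcing minus heat uptake rate does).

```python
import numpy as np

rng = np.random.default_rng(0)
n_runs, n_decades = 5, 10                    # hypothetical ensemble shape
forcing = np.linspace(0.0, 2.0, n_decades)   # synthetic decadal-mean forcing, W/m2
# Synthetic per-run temperature change: common response plus run-specific noise
temp = 0.5 * forcing + rng.normal(0.0, 0.1, (n_runs, n_decades))
# Synthetic per-run heat uptake rate, so (forcing - uptake) differs by run
uptake = 0.3 * forcing + rng.normal(0.0, 0.05, (n_runs, n_decades))


def slope(x, y):
    """OLS slope of y regressed on x, with an intercept."""
    return np.polyfit(x, y, 1)[0]


# 1) Direction of regression, using ensemble means
t_mean = temp.mean(axis=0)
t_on_f = slope(forcing, t_mean)              # K per W/m2
f_on_t = slope(t_mean, forcing)              # W/m2 per K
r2 = np.corrcoef(forcing, t_mean)[0, 1] ** 2
# Identity: t_on_f * f_on_t equals r^2, so the slopes are reciprocal only if r^2 = 1

# 2) Ensemble-mean regression versus the mean of run-by-run slopes
shared_x_runs = np.mean([slope(forcing, t) for t in temp])
x = forcing - uptake                         # regressor now differs between runs
own_x_runs = np.mean([slope(xi, ti) for xi, ti in zip(x, temp)])
own_x_ens = slope(x.mean(axis=0), t_mean)

print(f"T on F: {t_on_f:.4f}   1/(F on T): {1 / f_on_t:.4f}   r^2: {r2:.4f}")
print(f"shared regressor:  ensemble {t_on_f:.4f}, run mean {shared_x_runs:.4f}")
print(f"per-run regressor: ensemble {own_x_ens:.4f}, run mean {own_x_runs:.4f}")
```

In the shared-regressor case the two approaches agree because the OLS slope is linear in the dependent variable, which is consistent with the choice mattering only for the ECS efficacy values.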

For all iRF forcings other than LU, my mean estimates agree with those in the 10 March 2016 version of Table S1, within rounding errors. I am currently unsure why the iRF LU efficacies differ between the version of Table S1 dated 18 January 2016 (available at the GISS website until recently), which matched my estimate of the mean, and the 10 March 2016 version. Nor have I yet worked out why most of the ERF mean estimates and uncertainty ranges differ between my calculations and the 10 March 2016 version of Table S1.

As already noted, my iRF efficacy uncertainty ranges are much narrower than Marvel et al.’s. Their ratio appears to be [double] the square root of [one-fifth] the number of simulation runs from which the range was derived. That is [, by a factor of 1.118 (1.225 for historical forcing), not] consistent with Gavin Schmidt’s comment at RealClimate:

“The uncertainties in the Table S1 are the 90% spread in the ensemble, not the standard error of the mean.”

Since the values given are stated to be “mean and 95% confidence intervals”, I cannot see any justification for the efficacy uncertainty ranges actually being 95% confidence intervals for a single run, centered on the mean efficacy calculated over all runs.

Note: wording in square brackets in the pre-penultimate paragraph inserted 14 March 2016 AM; I wrote the Update late at night and overlooked that Gavin Schmidt’s explanation would only account for the discrepancy if the divisor for the standard error of the mean of n runs were sqrt(n-1), rather than the correct value of sqrt(n).
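The sqrt(n) versus sqrt(n-1) point in the note above can be checked with a few lines of arithmetic. This is only a sketch: the ensemble sizes of 5 and 6 runs are assumptions made for illustration, and the function name is hypothetical.

```python
import math


def sem_divisor(n_runs, use_n_minus_1=False):
    """Factor by which a single-run spread is divided to get the spread of the n-run mean.

    The correct divisor is sqrt(n); sqrt(n - 1) is shown only for comparison.
    """
    return math.sqrt(n_runs - 1) if use_n_minus_1 else math.sqrt(n_runs)


for n in (5, 6):  # assumed ensemble sizes, for illustration only
    correct = sem_divisor(n)
    incorrect = sem_divisor(n, use_n_minus_1=True)
    print(f"n={n}: sqrt(n)={correct:.3f}  sqrt(n-1)={incorrect:.3f}  "
          f"quotient={correct / incorrect:.3f}")
```

For a five-run ensemble the correct divisor is about 2.236, whereas sqrt(n-1) gives exactly 2, a quotient of about 1.118.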