Posted for bender.

bender writes:

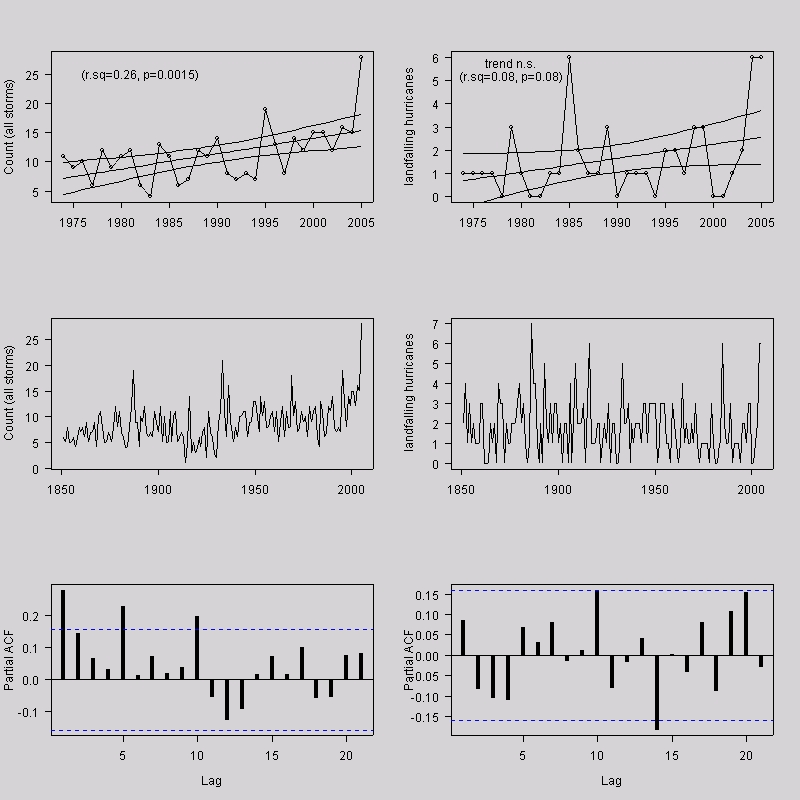

Attached are analyses of Willis’s landfalling hurricane data in post # 35. Interesting facts:

1. The 1974-2005 trend is "n.s." (not significant). (Note that 95% confidence intervals are now shown.)

2. The PACs (partial autocorrelations) at lags 10 and 20 are marginally significant. (Recall that for the total number of hurricanes, lags 5 and 10 were significant.) It is as though the NAO, affecting storms at sea, is operating on a 5-year time scale, while the continental high (a blocking effect from the decadal/bidecadal solar cycle?) is operating on a 10/20-year cycle. I’m not sure whether this has been noticed in the literature, because I don’t read that literature. But I know that continental drought in the US is weakly forced by the 11/22-year solar cycle, so why not the process of hurricane landfall? (i.e., take your pick: hurricanes in 2005 are the ultimate solution for droughts in 2001-2003!) Note how this fits nicely with your "persistence" theory!
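For readers who want to reproduce PACs like these: bender doesn't say what software he used. The sketch below is a minimal version that computes them with the Durbin-Levinson recursion. It assumes the usual large-sample white-noise band of ±1.96/√n for "significance". The function names are hypothetical, and the input would be the annual landfall counts, which are not reproduced here.

```python
import math

def acf(x, max_lag):
    """Sample autocorrelations r_0..r_max_lag (biased estimator: divides by n)."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    c0 = sum(d * d for d in dev) / n
    return [sum(dev[t] * dev[t + k] for t in range(n - k)) / n / c0
            for k in range(max_lag + 1)]

def pacf(x, max_lag):
    """Partial autocorrelations at lags 1..max_lag via the Durbin-Levinson recursion."""
    r = acf(x, max_lag)
    phi = []                      # AR(k-1) coefficients from the previous step
    out = []
    for k in range(1, max_lag + 1):
        num = r[k] - sum(phi[j] * r[k - 1 - j] for j in range(k - 1))
        den = 1.0 - sum(phi[j] * r[j + 1] for j in range(k - 1))
        phi_kk = num / den
        # Update AR(k) coefficients from the AR(k-1) ones, then append phi_kk
        phi = [phi[j] - phi_kk * phi[k - 2 - j] for j in range(k - 1)] + [phi_kk]
        out.append(phi_kk)
    return out

def flagged_lags(x, max_lag):
    """Lags whose |PACF| exceeds the approximate 95% white-noise band 1.96/sqrt(n)."""
    bound = 1.96 / math.sqrt(len(x))
    return [k for k, p in enumerate(pacf(x, max_lag), start=1) if abs(p) > bound]
```

With 100 years of data the band is about ±0.20. A "marginally significant" PAC is one sitting just outside that band, and with 20 lags tested, about one such exceedance is expected by chance alone.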


The landfall counts are nearly perfectly consistent with a Poisson process with a constant arrival rate. This seems odd; I would have expected the arrival rate to be proportional to the number of North Atlantic hurricanes, but that does not seem to be the case. Is there some explanation for this? Judy Curry (#110 in the previous post) may have touched on this issue:

but I found this explanation more confusing than enlightening. Perhaps the BAMS article (I have not re-read it) explains everything.

In any case, I am still surprised that the landfall data exhibit no trend or interesting time series structure (aside from the tiny blip at lag 14). The data seem almost “too good to be true” (#73 in previous post).
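"Consistent with a Poisson process with a constant arrival rate" can be checked with a standard index-of-dispersion test. This is a minimal sketch, assuming the input is a list of annual landfall counts (not reproduced here). The function name is hypothetical, and the p-value uses the Wilson-Hilferty normal approximation rather than an exact chi-square tail.

```python
import math

def dispersion_test(counts):
    """Index-of-dispersion test of a constant-rate Poisson model for yearly counts.

    Under H0 the statistic (n - 1) * s^2 / mean is approximately chi-square with
    n - 1 degrees of freedom. The p-value uses the Wilson-Hilferty normal
    approximation to that chi-square, not an exact tail probability.
    """
    n = len(counts)
    mean = sum(counts) / n                 # must be > 0
    s2 = sum((c - mean) ** 2 for c in counts) / (n - 1)
    df = n - 1
    stat = df * s2 / mean
    z = ((stat / df) ** (1 / 3) - (1 - 2 / (9 * df))) / math.sqrt(2 / (9 * df))
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    return s2 / mean, stat, p_two_sided
```

A variance-to-mean ratio near 1 is what a constant-rate Poisson process predicts. A ratio well above 1 (overdispersion) would point to a rate that varies from year to year, for example with the basin-wide hurricane count.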

bender, if you ever have spare time to look at something, and you have access, perhaps you could review the linked paper.

It is a recent paper by Mann and Emanuel. They apparently had to eliminate some data due to aerosol cooling from 1950-80, and do some kind of statistical contortions in order to reach their conclusions.

link

Re #2

By the way, discrediting the AMO (Atlantic Multidecadal Oscillation) is to Atlantic hurricane climatology as discrediting the MWP and LIA is to global temperature climatology. It is a big deal. As I read it, Mann and Emanuel say they’ve done it, via statistics and data sorting.

I have a long post at Volokh.com that finds some errors in the latest BAMS paper on hurricanes and global warming.

Although I found the paper mostly persuasive that there had been a very large increase in category 4 hurricanes since 1970 (with drops or no change in the other categories of hurricanes), I see three problems with the paper.

First, the paper dismisses concerns that the choice of 1970 as a starting point may give a misleading account because of the evidence that there was global cooling from 1940 to 1970. They treat this legitimate concern as a logical fallacy, but they never explain coherently what’s fallacious about potentially choosing start or cut-off dates that are unrepresentative of larger trends or that give misleading measurements of the strength of any overall trend.

Second, if the authors actually did what they report having done with their data, then the BAMS paper should never have been published. In two charts showing the main hurricane trends, they report the data in five-year periods, except for 1994, which is included in two periods, 1990-94 and the six-year period 1994-99. This may be just a typographical error, but they make this error three times in the paper (in most of the key charts). And exactly the same error appears in another paper they published in Science in 2005 using related data, so it may well not be a typo. If these are not merely typographical errors, and they did what the article reports (i.e., double counted 1994 hurricanes), then the paper should never have been published.

Third, although the article ended with a substantial discussion of responsible argumentation over the issue of hurricanes and global warming in the mainstream press, as an apparent model they pointed to their own public commentary:

Just to remind people: Katrina was a category-5 hurricane at its peak, but it was a category-3 hurricane when it hit the Gulf Coast, and only a category-1 hurricane at New Orleans (95 mph), just below the threshold for a category-2 hurricane. The damage at New Orleans probably occurred not because it was such an unusual hurricane, but because the levees were in appalling condition.

But the data presented in the BAMS paper show what looks to be a very small and statistically insignificant rise in category-5 hurricanes from 1970 through 2005 (these data include some Pacific as well as some Atlantic hurricanes). The big increase shown in the BAMS paper is almost a tripling of category-4 hurricanes; other classes of hurricanes seem to show significant drops or no significant changes.

A 2005 Science article co-authored by the same group as the BAMS paper (Webster, Holland, Curry, & Chang, “Changes in Tropical Cyclone Number, Duration, and Intensity in a Warming Environment”) does look at Northern Atlantic hurricanes 1970-2004 separately from Pacific ones. However, it lumps category-4 and category-5 storms together, showing an increase for the combination and reporting nothing on category-5 hurricanes alone. I went to the data source cited in the 2005 Science paper, and this is what I found for 1960-2004 hurricanes (the Science study covered 1970-2004, excluding the first two rows below and the 4 category-5 hurricanes that occurred after the period of their data, in 2005):

Category 5 Hurricanes in the North Atlantic:

| Period | Category-5 hurricanes |
|---|---|
| 1960-64 | 4 |
| 1965-69 | 2 |
| 1970-74 | 1 |
| 1975-79 | 2 |
| 1980-84 | 1 |
| 1985-89 | 2 |
| 1990-94 | 1 |
| 1995-99 | 1 |
| 2000-04 | 2 |

As you can see, in the data they claimed to have used in their Science article (as I counted the events), there is absolutely no trend in category-5 hurricanes in the period of their study: 1970-2004. Indeed, the 1990s showed insignificantly fewer category-5 hurricanes than either the 1970s or 1980s. Thus, all of the increase in North Atlantic category 4-5 storms reported in the 2005 Science article must be due to an increase in category-4, not category-5, storms.
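The "quick regressions" mentioned in this post aren't shown. As a hedged sketch, an ordinary least-squares fit to the seven 1970-2004 pentad counts from the table gives a slope near zero. Seven small counts are a thin basis for any test, and a Poisson regression would arguably suit count data better. This only illustrates the size of the signal relative to its noise.

```python
import math

# Category-5 counts per pentad, 1970-74 through 2000-04, from the table above
counts = [1, 2, 1, 2, 1, 1, 2]
pentad = list(range(len(counts)))     # 0 = 1970-74, ..., 6 = 2000-04

def ols_slope_t(x, y):
    """OLS slope of y on x and its t statistic (slope / standard error)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sxx
    intercept = my - slope * mx
    ssr = sum((b - intercept - slope * a) ** 2 for a, b in zip(x, y))
    se = math.sqrt(ssr / (n - 2) / sxx)
    return slope, slope / se

slope, t = ols_slope_t(pentad, counts)
# The two-sided 5% critical value of t with n - 2 = 5 degrees of freedom is about 2.57
print(f"slope = {slope:.3f} storms per pentad, t = {t:.2f}")  # slope = 0.036 storms per pentad, t = 0.33
```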

Neither paper reports any data that would show a statistically significant increase in category-5 storms that would form the scientific basis for their public claim, made along with their release of the 2005 Science article: “The southeastern U.S needs to begin planning to match the increased risk of category-5 hurricanes.”

What increased risk?

If they have the data to support that claim, they should make it public. Anyone reading that claim would think that their Science paper showed such a significant increase. But it didn’t. Even after I added the 2005 data on category-5 hurricanes, which they did not use because the season wasn’t over yet, the quick regressions I ran didn’t show any statistically significant increase in category-5 storms.

Did they just fabricate this claim of “increased risk” of category-5 storms?

If they don’t have such data (and it appears that they don’t), then it’s irresponsible for a scientist to imply a scientific basis for such a fear-inducing claim released along with a scientific paper. And it’s particularly odd that the authors of the 2006 BAMS paper actually discuss and criticize the mainstream press for poor environmental reporting that gives too much weight to critics of the environmental orthodoxy. The authors tell us that their scrupulousness put them at a disadvantage in public debate because they restricted themselves to making claims that were supported by peer-reviewed articles and data. Yet their own peer-reviewed data would seem to me to show that they had no scientific basis for saying that “The southeastern U.S needs to begin planning to match the increased risk of category-5 hurricanes.”

Bottom line:

1. The new BAMS article shows persuasive evidence of a huge jump in category-4 hurricanes 1970-2004, but declines or flat trends in the numbers of stronger and weaker hurricanes.

2. The BAMS article does not deal adequately with whether its choice of a relatively cool period (1970) as a starting time influenced the results.

3. In both their 2005 Science article and their 2006 BAMS article, the authors appear to double count data from 1994, but it may just be the result of repeated typographical errors in both journals.

4. In the BAMS article, the authors criticize others for irresponsible public statements on global warming and praise their own caution, yet the press release they quote asserts an “increased risk” of category-5 hurricanes threatening the southeastern U.S., but neither their own two articles, nor the data they claim to have used, show any such statistically significant trend.

5. If the quality of peer review and editing in this field is only as careful as it seems to be on the BAMS paper, then I think it prudent for educated lay people to continue to be skeptical about the research and public assertions of climate experts, especially those who tell you to just trust them or who insist that they are just relying on what their data show. Wouldn’t expert reviewers of the BAMS paper already know that there had been no increase in category-5 hurricanes in the North Atlantic, and thus that the public statements that the authors proudly trumpet were irresponsible? Certainly, this brief foray into the literature leads me to be less confident of the conclusions of climate researchers, no matter how fervently they are asserted.

If you google paleohurricanes or paleotempestology, you can find some interesting thoughts on hurricanes in the past. At the CCSP workshop last November, the most interesting presentation, IMHO, was by Liu. He said that the last millennium had anomalously low hurricane activity compared with the last 5 millennia (using sand deposits in coastal marshes and lagoons as a proxy, as I recall).

Here is my take on the debate:

* both the skeptics and warmers (Emanuel) say there is no long-term trend in tropical cyclone numbers. Worldwide, the annual number of cyclones continues to be ninety, give or take ten.

* the warmers say that the intensity of storms is rising as sea surface temperatures rise. They use data from the last fifty or so years to support their position.

* the skeptics, especially those closest to the data collection (Gray, U.S. Tropical Prediction Center scientists), say the older data is flawed

* the warmers dismiss Gray and the TPC skeptics as “non-theorists” (DRS note: the issue seems to be data collection, not theory, so protests about people being non-theorists seem odd)

* the key metric is the “power dissipation index”, which I believe uses storm velocity cubed and duration. (DRS note: if you take a data flaw and cube it, you get a much bigger flaw. Data purity is important.)

* the warmers say that, as sea surface temperature rises, storm power rises, and the historical data show increasing power, which matches rising sea temperature.

* the skeptics say that the early data, especially outside the Atlantic, tended to mis-estimate the strength and duration of earlier storms. GI, GO.

* the skeptics’ Atlantic data shows a multi-decadal oscillation (see Willis’ power dissipation chart on the other bender thread, #36 I think), with peaks roughly around 1890, 1930 and 1960. They believe we are currently in a busy part of the cycle, which began about 1995.

* the warmers seem to not believe in the oscillation (see Mann and Emanuel 2006) and instead, once data is filtered and statistically treated, the oscillation disappears and is replaced by (my guess, having not read the paper) a hockey stick.

* this question of the existence of the oscillation seems to have political implications. If the (apparent) Mann/Emanuel hockey stick is true, then those in the Atlantic basin (US, Canada, Europe) had better do something about global warming or be blasted by greater storms.

* however, if there have been equally stormy periods in the past, and the Atlantic basin has survived before, then the political urgency drops.
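The description of the index as "velocity cubed and duration" can be sketched in a few lines. This minimal sketch assumes 6-hourly maximum sustained winds for each storm and sums v³ times the time step over every fix of every storm. It leaves out any wind threshold, unit conversion, or scaling a published index would apply, and the input format and winds are made up. The last lines illustrate the DRS note: a uniform 10% overestimate of wind speed inflates the index by about a third.

```python
def power_dissipation(storms, dt_hours=6.0):
    """Sum over storms of (6-hourly max wind)**3 * dt: a bare-bones PDI-style index.

    `storms` is a list of per-storm wind-speed lists (hypothetical format);
    the result is in (wind units)**3 * hours, with no thresholds or scaling.
    """
    return sum(v ** 3 * dt_hours for track in storms for v in track)

storms = [[65, 80, 100, 90], [70, 75]]                    # made-up winds, knots
biased = [[1.1 * v for v in track] for track in storms]   # every reading 10% too high

ratio = power_dissipation(biased) / power_dissipation(storms)
print(f"PDI inflated by {ratio - 1:.1%}")                 # PDI inflated by 33.1%
```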

Steve B., Peter H., Willis, bender, others, have I misstated anything? I’m just trying to summarize things in my mind.

Thanks

-Warmers are afraid of statistics that quantify the degree of uncertainty, and actively seek to suppress that information in scientific documents fed to policy people, in order to grease the wheels of an activist agenda

-Skeptics want policy to be made in light of the facts about uncertainty – uncertainty about temperature trends, hurricane trends, CO2 sensitivity coefficients, the entire functioning of earth’s climate system, etc.

Speaking of which, I haven’t received any response from Webster about the French thing. Maybe Ritson could help.

re #6.

Warmers desperately want to link extreme weather events to AGW, but I read somewhere that if the planet warms, the temperature differential between the Earth’s poles and equatorial regions will drop, resulting in weather that is more tranquil overall. Sorry I can’t provide any references, but does anyone know about this theory and any support that it might have historically?

I took a look at Emanuel’s (warmer) publication list, looking only at his work on tropics-related topics. I counted 45 publications, more or less, in his 25 year career.

I took a look at Landsea’s (skeptic, at least on tropical cyclone intensity and AGW) publication list, which is all tropics-related. I counted 53 publications, more or less, in his 15 year career.

The pedigrees, for whatever they are worth, look about even.

First-time poster, long-time reader of this site. Emanuel published a brief overview of the physics behind the link between hurricane wind speed and sea surface temperatures in the August, 2006 issue of Physics Today, “Hurricanes: Tempests in a greenhouse.”

http://www.physicstoday.org/vol-59/iss-8/p74.html

He presents an elegant argument, using a Carnot cycle analogy, to derive the maximum wind speed v as

v^2 = (Ts – To)/To * E,

where Ts is the ocean temperature in Kelvin, To is the temperature of the outflow (=200 K or so, according to Emanuel), and E is a parameter that accounts for the thermodynamic disequilibrium between the ocean and the atmosphere. If E remains constant as Ts is increased by 1 degree C, then the wind speed will increase by about 0.5%. My conclusion is that, while there is solid physics to support the connection between higher wind speeds in hurricanes and increased SST, the all-important magnitude of the effect is probably not measurable with existing hurricane diagnostics. Has Emanuel addressed this?
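The 0.5% figure follows from differentiating the logarithm of the expression while holding E and T_o fixed, which is the same assumption as in the paragraph above. T_s = 300 K is an assumed round value for tropical SST, not a figure from the article:

$$
v^2 = E\,\frac{T_s - T_o}{T_o}
\;\Rightarrow\;
2\,\frac{\Delta v}{v} \approx \frac{\Delta T_s}{T_s - T_o}
\;\Rightarrow\;
\frac{\Delta v}{v} \approx \frac{1\ \mathrm{K}}{2\,(300\ \mathrm{K} - 200\ \mathrm{K})} = 0.5\%.
$$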

Re #11: I’m sure Bloom will have an answer shortly. I don’t.

#5 SteveM, I’m not at all surprised by your report that

“Anomalous” has become a touchstone for me. The word shows up often when (honest) earth scientists describe — usually with some embarrassment — carefully collected data on natural processes. It typically refers to some “statistically significant” but inexplicable artifact in the error structure of the data. While such artifacts are often interpretable as a trend or a period of extended excursion from the mean, many scientists have come to believe they represent something else, a truly deep and fascinating aspect of Mother Nature. Though a complete physical explanation for this phenomenon is lacking, it has been observed so frequently that it is hard to deny.

“Long-memory” (LTP, etc.) stochastic processes can provide a realistic model for the “noise” associated with these phenomena, and LTP models might be able to provide a reasonable framework for computing statistical significance. However, they do not directly answer the deeper, really interesting, questions: What is really going on? Why do natural processes exhibit behaviors so close to chaos?

What’s worrisome is when “scientists” try to remove these “blemishes” from their data. Sometimes they eliminate the offending data points altogether, usually mumbling something about “poor data quality.” Or they apply a statistical method that smears out the anomaly. Or, other times, they ascribe the “anomaly” to some arbitrary causal mechanism. While this last approach may cover up the artifact when viewed in the time domain, the artifact usually remains visible when you look at the frequency domain. In any case, to treat these natural systems this way reflects not only poor modeling, it also suppresses the most interesting part of the story.
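The frequency-domain point can be illustrated with a raw periodogram. This is a minimal sketch on a synthetic series, not on any hurricane data. A 10-year cycle in a 100-year record shows up as a single peak at frequency index 10, i.e. a period of 10 years. The naive O(n²) DFT is used only to keep the example dependency-free.

```python
import cmath
import math

def periodogram(x):
    """Raw periodogram |DFT|^2 / n at Fourier frequencies k/n, k = 1..n//2."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    return {k: abs(sum(d * cmath.exp(-2j * math.pi * k * t / n)
                       for t, d in enumerate(dev))) ** 2 / n
            for k in range(1, n // 2 + 1)}

# Synthetic 100-year series with a 10-year cycle (illustrative only)
x = [math.sin(2 * math.pi * t / 10) for t in range(100)]
power = periodogram(x)
peak_k = max(power, key=power.get)
print(peak_k, len(x) / peak_k)   # 10 10.0: frequency index and period in years
```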

Re #10

I also took a look at Bill Gray’s publication list. I counted 53 journal articles, more or less, over a career of around 35 years.

So:

Landsea (skeptic) 3.5 tropical publications per year

Emanuel (warmer) 1.8 tropical publications per year

Gray (skeptic) 1.5 tropical publications per year

Personally, I don’t think size matters; what counts is the content.

re: 12

Mr Bloom (or Mr Dano) discussing atmospheric thermodynamics? Now that would be a hoot!

Re #11

Emanuel states in his FAQ that, for a 1C rise in sea surface temperature, hurricane wind speed should increase about 5%. He also states that other modeling shows a somewhat smaller increase.

The worldwide tropical sea surface temperature has risen about 0.3C over the last 30 to 50 years.

So, the predicted increase in wind speed is maybe 2 or 3 mph.

The skeptics say that measurements, especially in years past, were, and are, not accurate enough to detect such small changes.

Emanuel uses power dissipation for his index, which lets him cube the windspeeds. That tends to make any slight wind speed differences in the historical record greater.

Suffice it to say that, to find a 3 mph speed increase, the historical data (intensity and storm duration) have to be very, very good and, preferably, cover a long period. Otherwise, GIGO.
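The back-of-envelope estimate in this comment can be written out. In this minimal sketch, the 5%/°C and 0.3 °C figures are the ones quoted above. The 130 mph baseline is an assumed typical major-hurricane wind speed, not a number from the thread. The last step shows how cubing in a power-dissipation index turns a 1.5% speed change into roughly a 4.6% change.

```python
sensitivity = 0.05   # fractional wind-speed increase per 1 C of SST (FAQ figure quoted above)
sst_rise = 0.3       # C, tropical SST rise quoted above
v0 = 130.0           # mph, an assumed typical major-hurricane wind speed

frac = sensitivity * sst_rise       # 0.015, i.e. a 1.5% speed increase
dv = v0 * frac                      # about 2 mph at 130 mph
pdi_factor = (1 + frac) ** 3        # cubing in a PDI-style index: about 1.046
print(f"wind +{frac:.1%} (about {dv:.1f} mph), PDI x{pdi_factor:.3f}")
```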

“for a 1C rise in sea surface temperature, hurricane wind speed should increase about 5%.”

Shouldn’t it be a differential, i.e. the difference between the sea surface and the atmosphere?

Re #11, would that the real world were so simple as Emanuel’s idealized Carnot cycle … Emanuel knows it is not. He says in that paper:

A second difficulty with the idealized equation is contained in his statement:

This is a huge oversimplification. Heat is transferred from the ocean to the atmosphere by a variety of mechanisms: evaporation, radiation, conduction, and spray are among them. To claim that this very complex system is linear with respect to v, the wind speed, is a gross generalization.

Next, Emanuel has conveniently ignored one of the largest heat transfer mechanisms in the hurricane. This is hydrometeors (rain, hail, graupel, sleet, etc.). The amount of energy transferred from place to place in the hurricane by hydrometeors, while not as large as the wind energy, is far too large to ignore. Every bit of energy that falls as rain decreases the wind speed.

Finally, there is a larger problem, this one also not acknowledged by Emanuel. The problem is that a hurricane is a natural turbulent flow system. With the recognition in recent decades of the Constructal Law (see for example Thermodynamic optimization of global circulation and climate, Adrian Bejan and Heitor Reis, or do a Google search on “Constructal Law”) has come a growing awareness that traditional methods such as the Carnot Cycle above give highly inaccurate answers for systems which are far from equilibrium (such as hurricanes).

Overall? It’s not an “elegant argument” as you say, it’s a Tinkertoy example and analysis which ignores many real-world aspects.

w.

Re #16, you quote Emanuel as saying:

I have confirmed that this is what he says in his FAQ.

However, this is about ten times larger than the increase given by his Carnot Cycle equation above, which is only about half a percent increase for a 1°C temperature rise, viz

((301-200)/301) / ((300-200)/300) – 1 = 0.664% wind speed increase

Clearly, either his FAQ or his Carnot Cycle equation is a long ways off the mark … or both … say what?

w.

re: 17

It’s multiple factors, including temperature (as you pointed out) and pressure and humidity gradients; plus recall that the amount of water vapour air can hold increases with temperature. It’s this humidity dynamic that is emphasized in AGW-proponent arguments, as the saturation vapour pressure (or really the partial pressure) of water increases when the atmosphere is warmed by even 1 degree.

In an ignorant press, of course, all of that 1 degree increase is AGW, a view not dispelled by the authors. Such simplifications also contribute to “CO2 is The Don” arguments, while “H2O is merely a thug reactor.” There’s a lot more going on, of course, but this narrower emphasis plays nicely into the bogeyman publicity of CO2.
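The "even 1 degree" point concerns how fast saturation vapour pressure rises with temperature. Near tropical surface temperatures that rate is roughly 6-7% per °C (the Clausius-Clapeyron scaling). This sketch uses one common Magnus-type approximation. The coefficients are one published set among several, and the 27 °C starting point is an assumed typical tropical SST.

```python
import math

def saturation_vapour_pressure(t_c):
    """Saturation vapour pressure over water in hPa, Magnus-type approximation.

    Coefficients (6.112, 17.62, 243.12) are one commonly used set; others exist.
    """
    return 6.112 * math.exp(17.62 * t_c / (243.12 + t_c))

# Fractional increase for a 1 C warming starting from 27 C
ratio = saturation_vapour_pressure(28.0) / saturation_vapour_pressure(27.0)
print(f"{ratio - 1:.1%}")   # about 6%
```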

I haven’t seen Emanuel’s calculations, but I suspect that the answer is a qualified “yes, it’s a differential”. I think his numbers assume that sea surface temperature rises while the environment (mid and upper troposphere) remains at a constant temperature.

Interestingly, the GCMs forecast that the upper temperatures rise faster than the surface temperature, at least in the tropics, so that assumption seems shaky. In general, the GCM atmosphere looks more stable, not less, than today’s.

But there is an enthalpy differential in Emanuel’s model, which may grow with increasing absolute (but constant relative) temperatures.

An unusual headline for climate: A panel (IPCC) lowers global warming:

http://www.stuff.co.nz/stuff/0,2106,3784878a7693,00.html

http://news.google.com/news?ned=us&scoring=d&ncl=http://www.stuff.co.nz/stuff/0,2106,3784878a7693,00.html&hl=en

The Hadley Cycle was discovered long before the Carnot Cycle. If I’m not mistaken (and I don’t vouch for it), Carnot used the Hadley Cycle as an analogy for his cycle. It’s funny that Emanuel doesn’t mention the Hadley Cycle, which seems highly relevant to the dissipation of tropical energy.

George Hadley (1685-1768) published his theory, based on wind records from British ships, in 1735. Amazingly, the structure of the circulation systems within the troposphere was considered to comprise three cells in each hemisphere: the Hadley Cell, a Polar Cell and, in between, the Ferrel Cell. This was the basic textbook depiction until about 35 years ago, when the Ferrel Cell disappeared. We now consider a two-cell system (Hadley and Polar) comprising basically a polar air mass and a tropical air mass. Most people still don’t know that the tropopause, which marks the upper limit of the troposphere in which these cells operate, is twice as high over the equator as it is at the poles. Its height also varies seasonally: between 7 and 10 km at the poles (greater annual temperature range) and between 17 and 18 km at the equator. In addition, the tropopause is not continuous but has a gap coincident with the middle latitude region where the two cells meet.

Here is a nice map showing the actual complicated boundary between polar and tropical air at the tropopause level. I don’t see a gap.

http://www.atmos.washington.edu/~hakim/tropo/

Script

Steve M., your link in #26 doesn’t work …

Thanks,

w.

It does now after I fixed Steve’s speling

RE: #3 – if warmers can discredit the AMO, then they can blame the current hurricane cycle on AGW, for maximum Algore factor.

Re: #18- Thanks for the details, Willis. Great insights, as usual. It appears to me that a lot of the required details you raise provide additional energy dissipation paths, and will act to reduce the wind speed increases caused by increased SST, which makes any claims about increased SST’s causing increased hurricane intensities even more suspect.

Also, the denominator of your calculation in #19 should be 200 K, not 301 K. The conclusion remains the same, however.

#25

You don’t see the gap in map view but in cross-section.

Yak (#30) thanks for your comment. Yes, the denominator in both should have been 200, and the increase should have been 1%. This is still a long ways from the 5% he claims in his FAQ … go figure. Either Emanuel is wrong … or Emanuel is wrong … but which one?

w.

re 31

Show me a cross section. Anyway, what would a “gap in the tropopause” mean?

Here is the WMO definition:

see also

http://www-das.uwyo.edu/~geerts/cwx/notes/chap01/tropo.html

http://en.wikipedia.org/wiki/Tropopause

#re 31/33

Tim & Hans,

I have to agree with Hans’ retort “a gap in the tropopause”

That said, and following on from Hans’ links in #33 above, the gap is strictly theoretical.

Are we in reality or surreality?

Re #32- Willis,

In your calculation in #19, there is a missing square root sign. In #11, my equation syntax may not have been very clear. Emanuel’s result is (v)^2 =E * (Ts – To)/To. Or,

v = sqrt (E * (Ts – To)/To)

I still get a 0.5% increase in wind speed for a 1 C temperature uptick. Maybe Emanuel’s FAQ has a slipped decimal point? I can’t see how to reconcile the factor of ten difference in results reported concurrently by the same author.
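The competing percentages in this exchange come from three versions of the formula. As a quick check, assuming T_s = 300 K and T_o = 200 K (the round values used above), E cancels in each ratio. Only the placement of the denominator and the square root matter.

```python
import math

TO = 200.0                    # outflow temperature, K (Emanuel's rough figure)
TS, TS_WARM = 300.0, 301.0    # assumed SST before and after a 1 C warming, K

variants = {
    "(Ts - To)/Ts, no square root": lambda ts: (ts - TO) / ts,
    "(Ts - To)/To, no square root": lambda ts: (ts - TO) / TO,
    "sqrt((Ts - To)/To)":           lambda ts: math.sqrt((ts - TO) / TO),
}

for name, f in variants.items():
    # E multiplies every variant equally, so it cancels in the ratio
    print(f"{name}: {f(TS_WARM) / f(TS) - 1:.2%}")   # 0.66%, 1.00%, 0.50%
```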

Re# 34

In response to my comment on the climatesceptics group (Yahoo) that “I had understood the tropopause was not actually continuous at the point of the subtropical jet,” William Kininmonth replied: “That is true. It is also true for the polar front jet. There are strong vertical motions associated with parts of the jet streams and these cause strong horizontal potential temperature gradients.”

Re #32, Yak, thanks for the clarification. Yes, the two results are different by an order of magnitude, with the FAQ figures saying 5% increase in wind speed for a 1°C temperature rise, and the Carnot figures showing a 0.5% increase.

However, that’s not the only oddity in the Emanuel results. I took a look at his graph of his power dissipation index (PDI) versus surface temperature. Here it is:

Now, the oddity is this. He’s rescaled the two to the same scale by multiplying the PDI by 2.1 × 10^-12. Looking at the period from the low around 1970 to the 2005 high, the sea temperature changed from 0.4 to 1.4, a change of one degree. And during this time, the PDI increased (according to Emanuel) from 1.9 × 10^11 to 7.4 × 10^11.

He is saying that for a 1°C temperature rise, the PDI increases by a factor of 3.9 … almost four times the power dissipated for a one degree temperature rise? I don’t think so …

The database shows no increase in the average duration of the storms from 1970 to 2005, so this means that the PDI increase must come from a purported increase in the wind strength. Since the PDI has increased by a factor of 3.9, this means that he is claiming that the wind speed increased by the cube root of 3.9 … which gives us a 60% increase in wind speed from a 1°C temperature rise.

Curiously, I had done an analysis comparing my own PDI figures with the sea temperature figures, and I found much less change in PDI during the 1970-2005 period. I never mentioned it, because it was so much smaller than Emanuel’s figures that I just assumed I’d done something wrong. Now, however, I see that his figures are very large …

What am I missing here?

w.

PDI takes into account wind speed, duration and size.

RE #37

Several things that I wonder about are:

1. The SST zone chosen (20W to 60W, 6N to 18N) is a subset of the tropical Atlantic, perhaps half of the Atlantic tropics, and is not actually the warmest part. The warmest region is west of 60W (generally, 60W to 95W and north to about 30N). It seems like the effect should be most dramatic in the warmer region, so why choose the cooler region for the graph?

2. In general, the storms spend much of their existence outside of Emanuel’s chosen region, and they are larger and more intense there. The chosen region is actually something of a breeding ground for storms, and they often spend their “adult life”, where intensity is most critical, elsewhere.

3. I wonder why he used just September SST rather than, say, August through October SST. September is the month of maximum storms, of course, but the storms in the PDI index probably include those in August and October, so why not use that SST data, too?

It may be that expanded SST data (expanded in region and time) would correlate just as well – I don’t know. His data selection simply raises my curiosity.

Perhaps Emanuel explains in the article his reasons for using limited SST data.

Further to my #39, linked is a map of the 2005 season. Look at the area of 20W to 60W and 6N to 18N.

Compare that box with the actual tracks of the storms, and where they spent most of their existence, especially the intense part.

link for 2005 season

OK, I’ll let this one go after one more comment:

I went to the GISS website where one can create sea temperature trend and anomaly maps using the Hadley data. I looked at Emanuel’s chosen box (East Atlantic) and compared that with the West Atlantic, both beginning-to-end and at different intervals. And, I looked at different months. (Caveat: this was an “eyeball” look at 250km resolution maps.)

What I saw was that, in general, the West Atlantic (the area where most of the PDI “points” were earned by storms) did not warm as much as the East Atlantic. Also, the regional warming was not completely correlated over time, meaning that in some periods one area warmed more than the other, and vice-versa.

Also, using the adjacent months, especially August, tends to add even more variability to the situation.

In short, I think that if Emanuel had used a broader (and more appropriate, in my opinion) sea surface temperature region and season, Emanuel’s figure 1 would not track so impressively.

If I can find a tool that lets me calculate an SST-time plot for, say, August-October over the whole tropical Atlantic (or better, the more critical West Atlantic), I will post it.

Willis writes:

Because you have (1) cherry-picked the time points (1970, 2005) and (2) inferred that the sample PDI at each time point is the ensemble mean PDI, you have artificially inflated your assumed trend. The actual trend in PDI is probably half what you are assuming, if you used a statistically robust fitting procedure. (What I’m pointing to is the same as the difference between the observed data points and the fitted trend lines in the opening figure.) This may not be the only thing you are missing, but it is one of them.

Fit a trend line to the PDI values as per the posted script and you will see what I mean. Working off the fitted trend line prevents you from cherry-picking start and end dates that happen to be located at noise-related local troughs or crests. Your factor may drop from 3.9 to 2.
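The point about endpoints versus fitted trends can be illustrated on synthetic data; the posted script and the PDI series are not reproduced here. This sketch assumes a true trend of 0.1 per step, with a noise trough at the first point and a crest at the last. It compares three estimates of the change: the endpoint difference, an OLS fit, and a Theil-Sen fit (median of pairwise slopes). Theil-Sen is one robust estimator, chosen here for illustration; bender doesn't say which procedure he has in mind.

```python
import statistics

def ols_slope(y):
    """OLS slope of y against its index 0..n-1."""
    n = len(y)
    mx, my = (n - 1) / 2, sum(y) / n
    return (sum((t - mx) * (v - my) for t, v in enumerate(y))
            / sum((t - mx) ** 2 for t in range(n)))

def theil_sen_slope(y):
    """Median of all pairwise slopes: a robust alternative to OLS."""
    return statistics.median((y[j] - y[i]) / (j - i)
                             for i in range(len(y)) for j in range(i + 1, len(y)))

# Synthetic series: true change of 1.0 over 10 steps, trough at start, crest at end
y = [0.1 * t for t in range(11)]
y[0] -= 0.5
y[-1] += 0.5

print("endpoint change: ", y[-1] - y[0])                       # 2.0, twice the true change
print(f"OLS change:       {ols_slope(y) * 10:.2f}")            # pulled up by the endpoints
print(f"Theil-Sen change: {theil_sen_slope(y) * 10:.2f}")      # close to the true 1.0
```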

Re #1:

1. Why are you surprised, TAC? [I’m not. The AR1 that a lot of people are talking about is often not the result of an endogenous first-order autoregressive process, but instead of some exogenous trend that affects only part of the time series. That exogenous forcing factor may strongly affect storm genesis, but only weakly affect storm decay.]

2. Are the weakly positive PACs at lag 10 and 20 *not* interesting? [Bloom wants someone to talk about “astrology”. Maybe he’s thinking solar cycles.]

Re # 42, Bender, thanks for your post. I had written:

You replied:

Since the correlation of the SST and the PDI claimed by Emanuel is so close, why does it matter whether I use

a) the trend of the SST vs the trend of the PDI, or

b) the 1970-2005 change in SST vs the 1970-2005 change in the PDI?

The differences between the two should be very small. In addition, since the correlation is so close, picking different start and end points should make very little difference.

You suggest that I check this by looking at your script. However, I’m using Emanuel’s figures, not those of the script, so the script figures are immaterial.

… please hang on while I get Emanuel’s figures …

OK, thanks for waiting. Using Emanuel’s actual 1973-2003 figures, rather than my estimates from just looking at Emanuel’s graph, I get the PDI increasing by a factor of 3.93/°C change in SST.

Using the 1973-2003 trends, I get the PDI increasing by a factor of 5.25/°C … a bit larger than a straight estimate …

Using the full dataset trends, 1949-2003, I get the PDI increasing by a factor of 4.75/°C, a result between the previous two figures.

But bender, for any one of these three estimates, Emanuel is showing a greater than three-fold increase in the PDI per 1°C increase in SST … does that make sense to you?

However, I asked “What am I missing”, and I realized later that I had miscalculated the increase in wind speed because I had not included the change in the total number of days of hurricanes. These increased during the 1973-2003 period by a factor of 1.8. This makes the calculated wind speed increase 30%, not 60% as I had reported before.
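The correction can be written as one line of arithmetic. A minimal sketch, assuming (as the reasoning above implies) that PDI scales as the number of storm days times the cube of wind speed, with everything else held fixed; the function name is hypothetical.

```python
def wind_speed_increase(pdi_factor, storm_days_factor=1.0):
    """Fractional wind-speed increase implied by a PDI ratio.

    Assumes PDI ~ (storm days) * v**3, so v scales as (PDI / days) ** (1/3).
    """
    per_day_factor = pdi_factor / storm_days_factor
    return per_day_factor ** (1.0 / 3.0) - 1.0


print(f"{wind_speed_increase(3.93):.0%}")       # → 58%, storm days ignored
print(f"{wind_speed_increase(3.93, 1.8):.0%}")  # → 30%, with 1.8x more storm days
```

The first figure corresponds to the roughly 60% originally reported; dividing out the 1.8-fold increase in storm days brings it down to about 30%.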

This leaves us with three different figures from Emanuel for the wind speed increase due to a 1°C SST increase: 0.5%, 5%, and 30% …

w.

Haven’t been following the argument closely enough to comment authoritatively, Willis. Just thought I might have something you might have overlooked. If my point is irrelevant, or a mere glancing blow, to your overall argument – which it may well be – I’m ok with that. Carry on.

Heck, bender, I don’t know if your post is irrelevant, because I’m not even sure if my post is relevant. That’s why I asked, what am I missing here?

In any case, bender, thanks for your contributions to the thread, sorry if the tone of my post was out of line.

w.

For a hint or two about Emanuel’s qualifications and status in the field, see here. That aside, it’s a nice not-overlong summary of the state of TC science as of 2003.

#43 bender, in #1 I wrote

to which you responded in #43

Well, the reason I’m surprised is that I’ve looked at a lot of earth science datasets, and they are rarely this simple (unless there is “something funny” with the data). My experience is that Mother Nature abhors linear systems (and specifically white noise); on the other hand, human engineering loves linear systems (and white noise). (I once heard that the goal of engineering is to linearize natural systems — with enough concrete and steel, you can make Mother Nature behave. At least we used to think so…). As a consequence, when I see really boring data, I look for a human influence. The unstated intent of my question in #1 was: Do these data accurately represent the whole truth about what occurred, or have they been “filtered”?

Considering the question from the perspective of process physics, it would seem that many of the factors that are hypothesized to affect hurricanes (SST; climate variables; CO2 concentration(?)) exhibit either trends or complex time series structures. Assuming this is true, I would expect these complex signals to show up in the landfalling hurricane data.

By analogy, I would also be surprised by reports that a company’s earnings had, over a period of decades, fluctuated randomly around a constant value, unaffected by market conditions.

Re #47:

I suspect R.A. Fisher would disagree (but of course he cherry picked his systems). TAC, how do you know the hurricane landfall numbers are not the product of a chaotic (nonlinear) process? Chaos and randomness can be pretty hard to distinguish. (Indeed, that is the idea behind digital random number generators.) Note: the PACFs shown in the intro graphic are for a linear model. Maybe a nonlinear time-series analysis would turn up something more “interesting”.
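The point that chaos and randomness are hard to tell apart can be illustrated with a standard textbook example, the logistic map x → 4x(1 − x). It is fully deterministic, yet its linear lag-1 autocorrelation is close to zero, so a linear ACF/PACF analysis would see something like white noise. The seed and series length below are arbitrary choices; this is an illustration only, not an analysis of the hurricane data.

```python
def logistic_series(x0, n, r=4.0):
    """Iterate the deterministic logistic map x -> r * x * (1 - x) n times."""
    xs = []
    x = x0
    for _ in range(n):
        x = r * x * (1.0 - x)
        xs.append(x)
    return xs


def corr(a, b):
    """Pearson correlation of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    sab = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    saa = sum((u - ma) ** 2 for u in a)
    sbb = sum((v - mb) ** 2 for v in b)
    return sab / (saa * sbb) ** 0.5


xs = logistic_series(0.123456789, 5000)
prev, nxt = xs[:-1], xs[1:]

# Linear lag-1 autocorrelation: close to zero, as for white noise.
print(round(corr(prev, nxt), 3))
# Correlation with the true nonlinear predictor: essentially 1.
print(round(corr([4 * p * (1 - p) for p in prev], nxt), 3))
```

A nonlinear method (here, simply knowing the right function of the previous value) recovers structure that the linear statistics miss entirely.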

#48 bender: I’ll concede the point that I don’t know. However, having had some experience with data fabrication in the earth sciences, my initial reaction to “too good to be true” is suspicion.

Also, since you mentioned RA Fisher, you are likely aware that he used to argue for two-sided tests of a specific form: One ought to reject H0 if the data are too consistent with H0. I always liked that.

Re #49

Really? No, I was not aware of this. Do you have a reference I could look up?

#50 bender: Google “ra fisher and mendel’s data” and you’ll get a ton of references. I don’t know which one is the most reliable or accurate.

bender, I just spent a fascinating but ultimately frustrating hour searching the web for that RA Fisher quote generalizing his “too good to be true” comment about Mendel. FWIW, my “source” was a spoken (and, it seems, misremembered) remark by Lionel Weiss in a grad stats class 25 years ago.

However, the search did yield some interesting material. In particular, I would recommend taking a look at Patrick Bateson’s editorial on “Desirable Scientific Conduct” (Science, 4 Feb 2005; here). It discusses “selective use of the thumb” — a topic likely of interest to readers of ClimateAudit.

Sounds interesting. Link didn’t take.

#52 Here’s the Bateson link with tinyurl; see if it works this time.

Bateson’s editorial mentions Nancy Olivieri of Toronto. Her legal battles have been legendary. Her lawyer (another Mac) is a close friend of mine.

Re: Fisher & Mendel: That makes sense. If your phenotype frequencies always converge exactly on the Mendelian expectation (remember those Punnett squares from high school?), then the data would be “too good” to be probable. I don’t see the landfalling hurricane data as falling in that category, however. They are noisy. Mendel’s data was not noisy enough.
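Fisher’s two-sided idea can be applied to count data such as annual landfalls. Under a constant-rate Poisson model, the index of dispersion D = Σ(xᵢ − x̄)²/x̄ is approximately χ² with n − 1 degrees of freedom: a tiny upper-tail probability flags overdispersion, and a tiny lower-tail probability flags data that are “too good”, i.e. less variable than Poisson noise allows. A minimal stdlib-only sketch follows; the function names and toy counts are hypothetical, and the χ² approximation is rough when the mean count is very small.

```python
import math


def chi2_cdf(x, dof):
    """Lower-tail chi-square CDF via the series for the regularized lower incomplete gamma.

    Adequate for the moderate arguments that arise here; not a general-purpose routine.
    """
    if x <= 0:
        return 0.0
    a, z = dof / 2.0, x / 2.0
    term = total = 1.0 / a
    k = 1
    while term > 1e-15 * total:
        term *= z / (a + k)
        total += term
        k += 1
    return math.exp(a * math.log(z) - z - math.lgamma(a)) * total


def dispersion_test(counts):
    """Return (D, lower-tail p, upper-tail p) for the Poisson index-of-dispersion test."""
    n = len(counts)
    mean = sum(counts) / n
    if mean == 0:
        raise ValueError("dispersion test undefined for all-zero counts")
    d = sum((c - mean) ** 2 for c in counts) / mean
    lower = chi2_cdf(d, n - 1)
    return d, lower, 1.0 - lower


# Made-up counts for illustration only.
d, p_too_good, p_too_variable = dispersion_test([2, 1, 3, 2, 0, 2, 1, 4, 2, 1])
print(round(d, 2), round(p_too_good, 3), round(p_too_variable, 3))
```

Mendel-style data would show up as a lower-tail probability close to zero.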

The Bateson editorial refers to “affiliation bias” as something to be deeply suspicious of. (Indeed, it is the principle that Mark A. York uses to try to discredit M&M.) Myself, I decided long ago that affiliation bias is only worth considering in the early stages of debate. It’s been 8 years since MBH98, and I think any “affiliation bias” M&M might have had at the outset no longer matters. The source of the original skepticism does not matter when the skeptic’s argument has been proven correct. The fact is M&M’s arguments are correct, whereas the flaws in MBH98 (which are being replayed again and again in the other multiproxy studies) are fatal.

Re: #37, Figure 1 from Emanuel

This chart shows a relationship between SST and cumulative storm power. Emanuel acknowledges in the body of his article that the SST is from the Atlantic’s “seedling” area and not from the western Atlantic, where about 70% of the PDI is generated (my estimate).

In other words, it does not show the SST beneath most of the storms, but rather the SST in the region where many originated.

Warm SST in the seedling area tends to make storm seedlings more robust and mature. The storms begin earlier and become more robust before they encounter some of the adverse conditions (upper lows, land masses) of the western Atlantic. Also, the seedling area contains the important 26.5C isotherm, and so any warming of it will increase the +26.5C region, aiding storm formation.

Summarized, it’s not surprising that warmer seedling conditions generate a higher basin PDI, due to longer-lasting and more robust storms. Also, the longer a storm lasts, the greater its chances of reaching its potential (higher intensity).

If one’s goal is to see if SST is creating stronger storms, then the thing to look at is the SST beneath each storm and compare that to that storm’s intensity. That is a lot of data-crunching, but data exists.

I took a look at the storm tracks for 1992-2003, the “blade of the hockey stick”. My crude estimate, using storm days and intensities of intense storms, is that perhaps 30% of the 1992-2003 PDI was earned by storms east of 60W (an area somewhat remote until the era of satellites). For the period of 1935-1945, an era with SST close to current levels, there were no reports of intense storms east of 60W. I find that odd. It makes me wonder about the quality of historical data from that region. I will take a shot at figuring a PDI for the better-monitored western Atlantic (west of 60W and south of 30N) for 1935-1945 and 1992-2003.
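The proposed comparison amounts to splitting a PDI-style sum by region. A minimal sketch, assuming 6-hourly best-track fixes given as (lat, lon, wind) with longitude in degrees east (so 60W is −60), and using the sum of wind cubed as a stand-in for PDI; the fixes below are invented for illustration and the function names are hypothetical.

```python
def pdi_sum(fixes, in_region=lambda lat, lon: True):
    """Sum of wind**3 over 6-hourly fixes (lat, lon, wind_kt) that fall inside a region."""
    return sum(w ** 3 for lat, lon, w in fixes if in_region(lat, lon))


def east_of_60w(lat, lon):
    return lon > -60.0


def western_box(lat, lon):
    """West of 60W and south of 30N, the better-monitored region proposed above."""
    return lon <= -60.0 and lat < 30.0


# Invented fixes: (latitude N, longitude E, max wind in knots).
fixes = [(15.0, -45.0, 90), (18.0, -55.0, 110), (22.0, -65.0, 120), (31.0, -70.0, 80)]
total = pdi_sum(fixes)
print(f"east of 60W: {pdi_sum(fixes, east_of_60w) / total:.0%}")
print(f"western box: {pdi_sum(fixes, western_box) / total:.0%}")
```

Running the same split separately for 1935-1945 and 1992-2003 would give the two shares to compare.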

Worldwide, the number of TS over the last 20 years has been constant. The North Atlantic has shown the greatest variance. This variance appears to mirror the Multidecadal Oscillation as well as the NAO.

Another point to make is the fact that many TS never hit land and are tracked not by air recon but by satellites. Storm intensities taken from satellite recon are very subjective (esp. if the storm doesn’t have a well-defined comma cloud or eye). Geostationary satellites have low resolution: 3 nm visual, 6 nm IR. Polar-orbiting satellites have resolutions of 0.3 nm in the visual. The process developed by Dr. Dvorak depends on how much cloud detail is present during the storm’s entire life cycle. If the analyst misses this detail, his intensity calculations suffer.

One way to limit the errors of satellite analysis is to deploy more buoys to both the NW Pacific and South Pacific as well as the Indian Ocean.

JP, is this conclusion based strictly on data available in the literature, or do you have additional insight above and beyond what’s been published?

Bender,

Here are some links to the JTWC. Instead of giving you anecdotal info, I’ll give you the links to their archives, FAQ, annual reports, etc. It would be very interesting to see what a comparable analysis for the West Pac would look like. From what I can see, the West Pac has not had an elevated number of TS comparable to that of the North Atlantic. There have been the occasional Super Typhoons, but this can be expected in an ocean as large as the Pacific. The JTWC Area of Responsibility doesn’t extend to the South Pac, but they do track cyclones in the North Indian Ocean.

http://tinyurl.com/kushs

http://tinyurl.com/hew6m

http://tinyurl.com/bqhdh

Another interesting thread. I will try to address a few points:

There is debate within the hurricane community as to which “metric” of intensity to use. There are a variety of measures that tell you different things. Momentum-based measures are proportional to wind speed, Accumulated Cyclone Energy (ACE) is proportional to wind speed squared, and PDI is proportional to wind speed cubed. The most frequently used one is ACE. There are also cumulative extensive measures (for an entire season) that depend on the number of storms (as well as some power of wind speed and duration of the storm), plus measures that are intensive and don’t depend on the number of storms (seasonal average wind speed would be an example of an intensive variable). Our variable NCAT45 is of the momentum category, and is intensive if divided by the total number of storms. In my opinion, you need to look at both the extensive variables like ACE and the intensive variables to sort out the contributions from intensity, duration, and total number of storms. Kerry Emanuel is the main person using PDI, and people have started using PDI largely because Kerry has been using it. Kerry likes it since it correlates well with the SST and emphasizes the stronger storms. My preference is to look at duration, number and the intensity distribution separately, in addition to ACE, to try to untangle what is going on.
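The distinction between these metrics is easy to see in code. A minimal sketch, assuming one list of 6-hourly maximum sustained winds (knots) per storm. ACE follows the common convention of summing squared winds at tropical-storm strength (35 kt) or above and scaling by 10⁻⁴; the “momentum” and “PDI” sums here are simplified, unscaled analogues over the same fixes, not Emanuel’s exact PDI definition. Dividing a seasonal total by the number of storms turns an extensive measure into an intensive one. Function names and storms are hypothetical.

```python
def storm_metrics(winds_kt, ts_threshold=35.0):
    """Momentum-like, ACE and PDI-like sums for one storm's 6-hourly max winds (knots)."""
    active = [v for v in winds_kt if v >= ts_threshold]
    return {
        "momentum": float(sum(active)),               # ~ v
        "ace": 1e-4 * sum(v ** 2 for v in active),    # ~ v**2
        "pdi": float(sum(v ** 3 for v in active)),    # ~ v**3, unscaled
    }


def season_metrics(storms):
    """Extensive seasonal totals and intensive per-storm averages."""
    per_storm = [storm_metrics(w) for w in storms]
    totals = {k: sum(m[k] for m in per_storm) for k in ("momentum", "ace", "pdi")}
    n = len(storms)
    averages = {k: v / n for k, v in totals.items()} if n else {}
    return totals, averages


# Two invented storms: one short and strong, one long and weak.
season = [[40, 80, 120, 100, 60, 30], [35, 40, 45, 50, 45, 40, 35, 35]]
totals, averages = season_metrics(season)
print(totals)
print(averages)
```

Because the cube weights the strong storm far more heavily, the PDI-like total is dominated by it, while the momentum sum gives the long weak storm comparable weight.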

Re SST. The convention in the tropical cyclone community has been to look at SST in the “main development region” of each ocean basin, which is where the storms typically spin up initially to tropical storm status. But I agree that this is misleading. It seems that you need to look at the tropical SST averaged over the ocean basin, and also the gradients in SST which influence the atmospheric dynamics. Patrick Michaels in a recent GRL paper attempted to track the SST underneath a tropical storm, but that turns out to be a not very useful thing to do since there are so many variables involved in the evolution of an individual storm, not to mention the fact that the storm itself modifies the SST.

Re U.S. landfalling hurricanes, I addressed this a little bit in my congressional testimony

GT%20-%20Curry%20Testimony.pdf

The “cycles” you see in your analysis have been seen by others. I am particularly interested in the ~20 yr cycle and am working on trying to understand that one now. The number of U.S. landfalling cyclones (again, using a running mean to filter high-frequency variability from El Nino etc.) shows a strong 70 yr cycle and a noticeable 20 yr cycle (note I am told that the total number of named storms is the most reliable in this data set).

The 70 yr and 20 yr cycles are shared with the total number of NATL named storms, but the trend since 1970 (after you filter out 70 yr cycle) is barely there in the landfalling statistics. This is just what I have eyeballed, we have not done any serious statistical analysis of this time series yet, and I am certainly interested in what this group has to say on the subject. One hypothesis is that the AMO may have its greatest influence more in the track of the storms rather than in the number/intensity of storms that are mainly sensitive to SST (if you filter out the year to year variability).
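As a complement to eyeballing running means, a raw periodogram is one simple way to look for the ~70 yr and ~20 yr peaks. This is a hedged, stdlib-only sketch on a synthetic series built from exactly those two periods; it is not the analysis described above. With real data, note that the Fourier periods available are n/1, n/2, n/3 and so on, so in a record of roughly 150 years a 70-year cycle spans only about two repetitions and is poorly resolved.

```python
import math


def periodogram(x):
    """Raw periodogram at Fourier frequencies k/n (k = 1..n//2); returns (period, power)."""
    n = len(x)
    mean = sum(x) / n
    d = [v - mean for v in x]
    out = []
    for k in range(1, n // 2 + 1):
        re = sum(d[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
        im = sum(d[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
        out.append((n / k, (re * re + im * im) / n))
    return out


# Synthetic 140-year series: a 70-yr cycle plus a weaker 20-yr cycle.
years = range(140)
series = [math.sin(2 * math.pi * t / 70) + 0.5 * math.sin(2 * math.pi * t / 20) for t in years]
peaks = sorted(periodogram(series), key=lambda pp: pp[1], reverse=True)[:2]
print([round(period) for period, _ in peaks])   # → [70, 20]
```

On real landfall counts the peaks would be far noisier, and a significance test against a red-noise background would be needed before calling any peak a cycle.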

Welcome back Judith. Your informative posts are appreciated, as always.

One thing to keep in mind when looking at US landfalling storms is that the majority, perhaps 70% to 80%, originate in the western basin (west of 70W). The factors (low shear, upper high pressure, presence of old fronts, etc) that favor development in this area (West Caribbean, Gulf of Mexico, Bahamas) may be different from the factors (Saharan dry air, SST, trade wind speed) of Emanuel’s seedling box (east of 60W). So, the US landfalling database may appear different from the overall Atlantic, because, to a large extent, it is driven by different factors and actually is different.

Speaking of Emanuel’s SST box, I think his paper’s correlations depend to some extent on the exact definition of the box. I found it odd that his box extends down to 6N, which is below the beaten path of late-summer disturbances. When I redefined his box to a more appropriate (to me) 10N, the correlation fades a bit. If I include the western basin, the SST hockey stick flattens and the correlation breaks further.

As a side note, I find his figure 3 box to be a head-scratcher. Figure 3 PDI is based on northern hemisphere storms yet his SST goes to 30S, so that half of his SST box comes from the uninvolved southern hemisphere. And, I have no idea if he’s mixing seasonal SST data (Pacific) with September data (Atlantic). I am not suggesting anything, but simply wondering, as my kids say, “What’s up with that?”

David

One final comment on Emanuel’s Figure 1:

The definition of the SST box affects the shape of the “handle” of the Figure 1 hockey stick. If the box is defined as 6N to 18N then the 2000s are the warmest period. If the box is defined as 10N to 18N, or 10N to 20N, then the period 1932-1943 looks to be as warm as 1992-2003. And, 1938 looks about as warm as 2003. (Caveat: this is based on me eyeballing data: I have not done all the averaging to get the exact grid numbers.)

Again, to me, 10N to 18N seems more appropriate than Emanuel’s definition (6N), based on the tracks of typical late-summer disturbances. If my box definition is used, then the hockey stick becomes valley-like, and the 2000s are no longer as prominent.
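Redefining the box is a small change in code. A minimal sketch, assuming SST values on a regular latitude/longitude grid stored as a dict keyed by cell-centre (lat, lon), longitude in degrees east, with each cell weighted by cos(latitude) so that the mean is proportional to area. Emanuel’s exact longitude limits are not given here, so they are left as parameters; the grid in the usage lines is invented and the function name is hypothetical.

```python
import math


def box_mean(grid, lat_min, lat_max, lon_min=-180.0, lon_max=180.0):
    """Area-weighted (cos latitude) mean of grid values inside a lat/lon box."""
    num = den = 0.0
    for (lat, lon), value in grid.items():
        if lat_min <= lat <= lat_max and lon_min <= lon <= lon_max:
            w = math.cos(math.radians(lat))
            num += w * value
            den += w
    if den == 0.0:
        raise ValueError("no grid cells inside the box")
    return num / den


# Invented 2-degree grid in which SST falls off toward 18N.
grid = {(lat, lon): 28.5 - 0.1 * (lat - 6)
        for lat in range(6, 20, 2) for lon in range(-60, -18, 2)}
print(round(box_mean(grid, 6, 18), 2), round(box_mean(grid, 10, 18), 2))
```

Applying the same function year by year to each box definition would produce the two competing “handle” shapes for comparison.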

I will crunch some numbers this weekend and see if my eyeballs are correct.

Re #63

Careful, Dr. Curry, if you try to understand low-frequency variability in these kinds of data

Steve Bloom might accuse you of being an astrologist. And that would be a major hit to anyone’s credibility.

Dang, forgot the smileys again.

A curiosity I noticed, other than the selection of a small sea surface area to compare against, is the fact that the North Atlantic data are compared to the September average SST, whereas the North Pacific data are compared to the July-November average SST. Since Emanuel provides no a priori explanation of this choice, I tend to suspect selective data mining … but YMMV.

w.

Thanx for the explanation Willis. I was wondering why you got different results. May I suggest a nonlinear effect where warmer temperatures increase late-season hurricanes but decrease mid-season hurricanes? As the temperature gets warmer, what counts as late season changes, but not the number of hurricanes. At least this seems true in the Gulf. In the Pacific things might be different because of different ocean currents.

Re #67: Analysis of climate cycles is one thing, bender, while assuming a priori that they are evidence of insolation changes is something else. That sort of thinking seems to be a slippery slope around here. OTOH I’m sure we’d all be very interested to see your predictions for 2010 and 2015.

Also, didn’t this whole discussion (now encompassing three threads and over 500 comments!) begin with a question you had for Judy? Since you seem shy about asking it again, I’ll reproduce it for you:

‘In Curry et al.’s Fig 1. the trends are so obvious that no statistics are required to convince a skeptic of their significance. But the observations are for 5-year windows. Taking a moving average will tend to exaggerate a trend by reducing interannual noise. If they had presented annual observations, how much would that have fuzzed up the trends? How much would it have compromised the statistics? Their argument 1 on p. 1028 is fine … as long as the uncertainty surrounding the statistics is taken out of the picture. Why did the authors choose to eliminate the uncertainty by using a 5-year window? Because it simplified a story intended for a lay audience? Is this a simplification, or an oversimplification?

‘”Science is what we have learned about how to keep from fooling ourselves.” – Richard Feynman’

I’ve never been clear as to why, but you and Steve M. went on to make a huge deal out of this. Hopefully Judy will answer now.
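The question about 5-year windows can be illustrated with a toy calculation. This minimal sketch fits the same hypothetical series (a 0.1/yr trend plus alternating ±1 interannual noise, chosen only because the arithmetic is clean) at annual resolution and as non-overlapping 5-year means. The fitted slope is identical, but R² rises from about 0.5 to about 0.96, because averaging removes most of the interannual scatter a reader would otherwise see. Function names are illustrative.

```python
def ols_fit(x, y):
    """Return (slope, r_squared) of an ordinary least-squares line of y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / sxx, sxy * sxy / (sxx * syy)


def block_means(values, width):
    """Means of consecutive non-overlapping blocks; a trailing partial block is dropped."""
    return [sum(values[i:i + width]) / width
            for i in range(0, len(values) - width + 1, width)]


years = list(range(35))
annual = [0.1 * t + (-1) ** t for t in years]

slope_a, r2_a = ols_fit(years, annual)
slope_5, r2_5 = ols_fit(block_means(years, 5), block_means(annual, 5))
print(f"annual:   slope={slope_a:.3f}  R^2={r2_a:.2f}")
print(f"5-yr avg: slope={slope_5:.3f}  R^2={r2_5:.2f}")
```

Note also that the 5-year fit rests on only 7 points, so the visual impression from the smoothed values overstates how much the annual data actually constrain the trend.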

Re the 5 year windows, this was Peter Webster’s choice on how to present the data. When he first started looking at the data, he was so struck by the large increase in CAT45 hurricanes that he did not think any fancy statistical analysis was needed. This paper was submitted around July 1, 2005. We didn’t think this would get published, since we had recently found out that we were apparently “scooped” by Kerry Emanuel who submitted his paper a few months earlier than us. Prior to the 2005 hurricane season, there was no particular interest in the hurricane and global warming issue, there had been a low level dialogue about this for the past decade or so. Subsequently, given the concerns that were raised about the quality of tropical cyclone intensity data particularly outside the NATL, it didn’t make sense to beat this data to death statistically. It will be interesting to see what the various tropical cyclone reanalysis projects deliver, this should at least give us some useful error statistics to work with.

In trying to understand long term trends or multidecadal variability, it seems sensible to eliminate those elements of high frequency (albeit high amplitude) fuzziness like El Nino that are well understood and known (?) not to contribute to the long term trend (I would appreciate any robust statistical analysis of what we think we “know”).

This exchange is interesting to me, since it illustrates the gap in perspectives between climate researchers (who bring rich Bayesian priors to their analysis) and statisticians/econometricians (I’m prepared to try to bridge this gap, but I suspect the gap with the lawyers, e.g. Volokh, is beyond me). Both perspectives are valuable, and bridging the gap between the two would be a really good thing. Climate researchers are not all expert statisticians (I certainly am not, although we have two good statisticians in our group, Carlos Hoyos and Viatcheslav Tatarskii) and I agree that there is plenty to criticize in much of the statistics in climate research. At the same time, statisticians without an understanding of the underlying physics, phenomenology and data constraints provide a limited contribution. So I am hoping that this exchange will help bridge this particular gap.

The debate here seems to be limited to the statistical analyses of the data, rather than the data and the conclusions from that data, per se.

If it is obvious there is no need for statistics.

If it isn’t, statistics assume an importance that too often results in competent scientists calling white black in contradiction to the evidence.

#72. Judy, regarding statisticians. I’ve chatted with von Storch about this and he has got frustrated over the years with most statisticians who try to deal with climate data, because their approach isn’t very helpful, by which I think that he had in mind that they are used to independent distributions. Econometrics is actually a field of statistics which is completely used to dealing with annual autocorrelated data; I think that econometricians are far more likely to be helpful to an average climate statistical problem than a statistician drawn at random from a university statistics faculty. Some people in business statistics are even more used to weird distributions, plus they are very used to the perils of self-deception when dealing with autocorrelated series. Econometrics is really quite a large field with many journals. Maybe what’s needed is a Journal of Climate Statistical Theory or some such title, where a proper understanding of the issues could be built up.

I would say that that’s exactly when fancy statistical analysis is needed. Do you have a long-tailed distribution? Are the statistics autocorrelated? Hurricanes are obviously a form of extreme values – so you’re already deep into the tail of some kind of storm distribution.
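One standard first-order answer to the autocorrelation question is the AR(1) effective sample size, n_eff ≈ n(1 − r₁)/(1 + r₁), where r₁ is the lag-1 autocorrelation; standard errors computed as if the n points were independent are then too small by roughly a factor of √(n/n_eff). The sketch below assumes AR(1) behaviour, which may not hold for real hurricane series, and the function names are hypothetical.

```python
import math


def lag1_autocorr(x):
    """Sample lag-1 autocorrelation (biased estimator, divides by n)."""
    n = len(x)
    m = sum(x) / n
    d = [v - m for v in x]
    return sum(d[t] * d[t + 1] for t in range(n - 1)) / sum(v * v for v in d)


def effective_n(n, r1):
    """AR(1) approximation to the number of effectively independent observations."""
    return n * (1.0 - r1) / (1.0 + r1)


def se_inflation(n, r1):
    """Factor by which an i.i.d. standard error understates the AR(1) one."""
    return math.sqrt(n / effective_n(n, r1))


# With r1 = 0.5, 100 annual values carry roughly the information of 33 independent ones.
print(round(effective_n(100, 0.5), 1), round(se_inflation(100, 0.5), 2))   # → 33.3 1.73
```

Long-tailed distributions are a separate problem that this adjustment does not address.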

Some of Mandelbrot’s early work on wild distributions was stimulated by hydrological variables. Ex ante, I would expect hurricanes to have a very “wild” distribution statistically. Having said that, it also seems quite plausible to me that warmer SSTs would result in more hurricanes.

Not interested in hurricane dynamics. Only interested in the way scientific information gets packaged for consumption by policy people. Will not say again. (“Statistics weren’t needed”, me arse.)

#74:

Such processes are also bread-and-butter for all (engineering) fields covered by an umbrella term “statistical signal processing”. For a rather extensive introduction see, e.g., the free book by Gray and Davisson: An Introduction to Statistical Signal Processing.

I should say, too, that my general unhappiness about the way uncertainty surrounding “unprecedented warming trends” is presented in graphical and statistical analyses comes by way of direct experience with junior and senior policy makers – people who routinely ignore uncertainty as an additional dimension of complexity too difficult to deal with. It makes their job MUCH easier if that information is suppressed … and so they actively seek to have it suppressed. (Witness Peter Webster’s choice, outlined in #72.) I have seen this happen with my own eyes, so don’t tell me I’m a paranoid conspiracy theorist. It happens, routinely. In fact I would say it is the prevailing culture. It is the reason why journals like Ecology devote entire issues to the problem of incorporating uncertainty into the science->policy process.

Bloom in #71 hints that maybe this minor point of mine on hurricane frequency does not merit the bandwidth it’s consumed. Maybe. OTOH, this was the entire problem with the hockey-stick. An uncertainty-free hockey stick is a convincing hockey stick. THAT is my point. (Now made so many times at CA I am starting to repeat myself.)

1/3 of “FUD” is “U”. And, unfortunately, U (=uncertainty) is part of the equation that can not be ignored if we are to get a robust estimate of the “A” in AGW. Who shall disagree with that?

Re #77. Thanks very much for the link, Jean S. Looks like mandatory reading for all tree-ringers.

RE 75:

I think it’s plausible too, IF the temp difference between the SSTs and the mid & upper-atmosphere over those areas increases. The real power of tropical storms depends on the difference (both in temp & moisture) between these vertical levels, very generally. IOW, if the mid & upper tropospheric temps also increased the same as the SSTs, I don’t think there’d be any significant increase.

Basic greenhouse theory says mid & upper tropospheric temps will increase first, overall.

Re #80

Does basic solar theory suggest oceans may respond slowest, but strongest? Maybe the observed trend in SST-driven storm frequency is due to the latter (lagged effect of warming in the 1950s & 1980s) as opposed to the former? (These are wildly uninformed speculative questions, BTW.)

The sea surface situation is complex, IMO. First, most of the solar shortwave radiation is absorbed somewhat to well below the actual surface and is then well mixed to a depth which varies quite a bit with season and latitude. Movement of heat below this level is very slow and depends largely on ocean currents.

Longwave IR heat, however, is absorbed in the top few millimeters, and some fraction of its energy is expended on increased evaporation before it mixes. This depends strongly on wind speed and so forth. Once it is entrained into a mixing cell, however, it would basically act like shortwave solar. But in any case, it doesn’t take too long to change the temperature of this surface layer, especially in the tropics where there’s both more radiation and a shallower mixing layer. That’s why we get these El Nino and La Nina situations. And these changes are far in excess of the expected changes from any increase in GHGs. Temperatures over large areas of the ocean surface can change by degrees in periods of months or a year or two. That’s why I’ve been suspicious about these claimed measurements of hundredths of degrees in the global SST and their assignment to AGW. It’s true that if there is much AGW it would eventually show up in SSTs, but there’s just not been enough time to accurately measure such changes. Those who claim to have seen it are either fooling themselves or lying.
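How quickly a surface layer can change temperature follows from a simple heat-capacity estimate. A minimal sketch, assuming a well-mixed layer of depth h that absorbs a constant net flux F with no exchange with the deeper ocean, so dT/dt = F/(ρ c_p h). The seawater density (1025 kg/m³) and specific heat (3990 J/kg/K) are typical round values, and the 1 W/m², 50 m and 25 m inputs are illustrative; the function name is hypothetical.

```python
SECONDS_PER_YEAR = 365.25 * 86400.0


def warming_rate_k_per_year(flux_w_m2, depth_m, rho=1025.0, cp=3990.0):
    """Warming rate (K/yr) of a well-mixed layer absorbing a constant net flux."""
    heat_capacity = rho * cp * depth_m  # J m^-2 K^-1
    return flux_w_m2 * SECONDS_PER_YEAR / heat_capacity


print(round(warming_rate_k_per_year(1.0, 50.0), 3))   # → 0.154
print(round(warming_rate_k_per_year(1.0, 25.0), 3))   # → 0.309
```

Halving the mixed-layer depth doubles the rate, which is the sense in which a shallow tropical mixing layer responds faster.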

Interestingly, Emanuel’s 2005 article notes that air temperatures in the tropics have not risen as much as sea surface temperatures, which increases the contrast and, per Emanuel, may account in part for the stronger storms.

Re 83, say what? Since the driver for the storm is the temperature difference ΔT between the SST and the air temp … wouldn’t that make for weaker storms?

w.

But isn’t it that SSTs are warmer than air temps, so if the sea surface is warming more and the air isn’t, doesn’t the statement hold true?*

*Please note that this works against AGW theory in that air temperatures are not warming; if AGW were warming the air, we would see a reduction in hurricane intensity.

Re #85

That was my read on it, ET Sid. (I think Willis was just caught speed-reading. Nothing more.)

I agree.

I fear trying to correct Willis, because he’s usually correct. But it was bugging me.

Interestingly enough I did a little searching to confirm before I respond, and while I could find plenty of info on sea temperatures in relation to hurricanes, not so with air temperatures.

Re my 84, y’all are correct and I was wrong … not for the first time … nor for the last, I fear. Too much speed reading, as bender suspected.

My thanks to all for the gentle correction,

w.

You know what though? If ever Willis is so blind that he simply can not see his error, I have confidence he’s the kind of man who will provide his code for his peers to analyze. He might not be happy about it, but he would do it. That’s why he gets let off easy. Not because he’s playing for the “right team”, but because he plays by the rules. (Enough moralizing on my part.)

I think its time for a group hug.

a few further comments

For a moment, forget the “policy relevance” aspect of the hurricane issue. Purely from a scientific point of view, it would be reasonable for hurricane/climate scientists to conduct a study where they assemble a data set, present the results, and provide a physical interpretation of the data. Then, other researchers might build upon the original study and conduct a more rigorous statistical analysis of the data. And the research would proceed from there. Our initial paper was written purely to be a provocative scientific paper to present the results of the global satellite TC database. At the time the paper was submitted, we were not even thinking about policy implications; we were hoping that this would be viewed as a provocative paper that would be subsequently cited in the scientific literature.

Once you throw policy and the media into the mix, then this process does not work unimpeded (viz the theme of the mixing politics and science paper). At the time we submitted our original science paper around May 1 2005, the hurricane/global warming issue was not a particularly big deal in terms of policy and the media. It became a big deal in early August with the publication of Emanuel’s paper in the midst of an already very active hurricane season. Then when our paper was published right between Katrina and Rita, the #$%^& hit the fan. Now that the topic of hurricanes/greenhouse warming has clearly become a hot-button, policy-relevant issue that people are paying attention to, I know that at least I personally feel more compelled to consider the policy implications of any further research I conduct on this topic in terms of the actual problems I decide to work on, the methodology used (in terms of data quality and statistical analyses), and the framing of the potential “sound bites” from the paper so that the results are easily and appropriately interpreted by the public. Why don’t climate researchers do this on every paper? We are not trained to do this, most of us are unaware of all the little policy wars going on surrounding this issue (and don’t want to be distracted by this stuff), and most of the papers we write (>99%) never make it into the public arena. Also, once scientists start considering the policy implications in our papers in the manner that I described, we are then characterized as having an agenda, as “warmers”, “alarmists”, etc. So what are researchers supposed to do in terms of conducting research on highly relevant topics? It is not obvious, and this entire craziness has resulted in the public perception of the integrity of climate science taking a beating.

In the most extreme case of all this (the hockey stick wars), media attention and the preparation of assessment documents for policy makers resulted in the dueling realclimate vs climateaudit blogs and substantial polarization of the groups, making it very difficult and contentious to come to something sensible.

Re the hurricane issue, I (and Webster, Emanuel, Holland) have been fighting “wars” over the data quality with people from the National Hurricane Center in the media and this has been very frustrating since much of the other side’s argument rests on “appeal to authority” (they know the data is bad, even though they have been using it for decades and have previously said the data was ok), without any rigorous (or even unrigorous) uncertainty analysis. There should be no wars on the statistical front; I look forward to considering any statistical analysis that you conduct on the data and trying to learn from the issues that you raise (I assume that my colleagues would feel the same way, but they are more blogophobic than I).

re #91: Dear Judith, first thank you very much for sharing your thoughts here. As a working scientist, I’ve been here for a while for a very simple reason: you get a lot of information from here. I know nothing about hurricanes (except that I lived through Hugo/89), but I’m still following these issues with interest as it seems that there are plenty of people who know something about it and, more importantly, are ready to put their thoughts on the line of fire. So my advice to your colleagues is not to be that blogophobic, and to come here to share their thoughts. Maybe they will learn something here too: there seems to be expertise from various fields here. They can be anonymous if they wish not to reveal their identity, so there is nothing to lose.

I think the same as with any other topic. Simply be honest and open. Honestly do your best, openly share your data/results/methods/codes etc with anyone interested, and be prepared to admit your shortcomings/mistakes etc. This is the way science progresses, and this is what all scientists should be fundamentally interested in: the truth, whatever it is.

I’m sure Steve can share your feelings! Just read a bit around here about what he’s gone through while trying to obtain any relevant data/codes.

Again, thank you for coming here. I sure hope you keep posting!

I’m getting off-topic, but I hope Steve allows this.

Judith:

This is why I think one should not really follow the media on these matters. If you are interested, check things yourself. This is why I came here in the first place: PCA is something I happen to know, Mann’s PCA calculations are so obviously and fundamentally flawed, and yet I still saw commentary on the web and in the media furiously defending him and ridiculing Steve & Ross (“the messengers”). If a student of mine turned in a term paper with such calculations, I am not sure I would even bother to explain to him/her why it is flawed instead of simply saying “this is plain wrong”. I’m sure Wegman was wondering why in the heck, after two years, this thing is even an issue!

After following for a while now what’s going on in “climate science”, I am not really worried about the media. My concern (and IMO this is something the climate field itself should really pay attention to) is the peer-review process in the field, which IMO seems to be somewhat corrupt. I wish you would take the time to review the discussion here (CA topic Bürger and Cubasch Discussion). Two things: 1) do the reviewers actually have anything to say about the content of the paper? 2) Are the comments (tone/language etc.) of AR2 really acceptable? If I were the editor, I would dismiss AR2 on the basis of the comments he/she has made so far.

Jean, thanks for these posts (hopefully we are not going too far off topic). The post on the Cubasch paper was very interesting. I don’t get my primary info from the media, but the media polarizes the scientific debate, raises the stakes, and makes it more difficult for both sides to come to anything sensible.

The review process (and also the assessment process) is of great concern to me. Major difficulties are encountered on subjects of “relevance”, those that have economic and/or policy relevance. The problems eventually sort themselves out in the published literature, but in the intermediate term a lot of craziness can result that can mislead policy makers.

At the Fall AGU meeting in Dec, there is a Union session on the Integrity of Science to address these issues (I am an invited speaker). I will try to raise this issue on the blog a few weeks before my talk to get input from people that I can ponder for my talk.

Judith, my business background is in mining promotions and speculations, and what attracted my interest in the HS and IPCC TAR was how “promotional” they were. In a securities offering, you have to give “full, true and plain disclosure”. This means that you have to prominently disclose adverse results, i.e. results adverse to your thesis. This is the part that academics – at least the ones that I’ve been in controversy with – don’t get. Their attitude – and one that is all too prevalent – is a “don’t ask, don’t tell” standard, i.e. if you don’t say anything untrue, then you’re OK. “Falsification” in science codes of conduct theoretically prohibits this, but it is disregarded.

For business promotions, press releases are subject to the same standards as prospectuses. There is an ongoing duty of full, true and plain disclosure.

“Due diligence” is a big topic. Since journal “due diligence” is negligible, authors cited in IPCC etc. should be obliged to provide a complete due diligence package in the form of data and methods.

There’s nothing very hard about these issues. Just start looking at how other occupations – where money is involved – handle things.

Re #94, Judith Curry

An excellent idea, and may I join Jean and others in welcoming you here.

One place you might like to start with is a presentation Ross McKitrick gave recently on the need for an independent public sector auditor to review science that is being used for public policy.

Ross, if you are around, what sort of reception did you get for this paper? Any chance of anything arising from it?

Actually, Steve, if Ross has the time and the inclination, that presentation and how it was received might make an interesting header post.

Re #91

Finally, herein lies the answer to my original question about the Curry et al. Fig 1 hurricane trend graphic. Clearly, there could not have been any intent to deceive policy people (by suppressing the uncertainty around the trend) because this was not a hot-button political issue at the time the paper was submitted. My suspicions were unfounded, and I have regained some confidence in the community Dr. Curry speaks of.

If we recognize that there are much better ways to analyze and present that kind of noisy time-series data, then I’d say we’ve come quite a way from the earlier, ridiculous assertions that “hurricane counts are not subject to sampling error”.
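One way to see why counts carry sampling error: even if landfalls arrived as a Poisson process with a perfectly constant rate, the yearly totals would scatter, and a least-squares trend fitted to them would scatter too. Below is a minimal Python sketch under loudly stated assumptions: the rate of 6 per year, the 32-year record length, the number of trials, and the helper names (`poisson`, `ols_slope`, `simulate_slopes`) are all hypothetical choices for illustration, not values from the actual hurricane record or from any analysis in this thread.

```python
import math
import random
import statistics


def poisson(rate, rng):
    """Draw one Poisson variate via Knuth's multiplication method (fine for small rates)."""
    limit = math.exp(-rate)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def ols_slope(y):
    """Ordinary least-squares slope of y regressed on years 0..n-1."""
    n = len(y)
    x_mean = (n - 1) / 2
    y_mean = sum(y) / n
    sxy = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(y))
    sxx = sum((i - x_mean) ** 2 for i in range(n))
    return sxy / sxx


def simulate_slopes(rate=6.0, years=32, trials=2000, seed=1):
    """Fit a trend to many synthetic records whose TRUE trend is exactly zero.

    All parameter defaults are hypothetical, chosen only for illustration.
    """
    rng = random.Random(seed)
    return [ols_slope([poisson(rate, rng) for _ in range(years)])
            for _ in range(trials)]


slopes = simulate_slopes()
ordered = sorted(slopes)
# Empirical 95% range of fitted slopes (counts per year) arising from sampling error alone.
lo = ordered[int(0.025 * len(ordered))]
hi = ordered[int(0.975 * len(ordered))]
print(f"mean slope {statistics.mean(slopes):+.4f}, 95% range [{lo:+.3f}, {hi:+.3f}]")
```

Every simulated series has a true trend of zero, so the spread of `slopes` (and the interval from `lo` to `hi`) reflects sampling error alone; under these assumptions, a trend estimated from a single real record of similar length would need an interval at least that wide before it could be called significant.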

The links in #98 should be reversed.

I’m not so sure if I agree with this. While Katrina brought the issue more to the forefront, my perception is that it was a big deal prior to May ’05, at least as a media issue.

As an example, see Chris Landsea’s Jan ’05 resignation from the IPCC, and his letter describing media coverage of the hurricane/global warming issue and the actions of his colleagues at the time.

Thanks for the opportunity to diverge a bit on this topic. I read the McCullough and McKitrick paper; very interesting. I agreed with everything until section 3.3, the recommendation of a government oversight committee (libertarian lawyers at Volokh, alert!). The issues raised at climatesciencewatch and by The Republican War on Science do not suggest that this would be a good idea. I think that the journals themselves have to take on a major part of this responsibility, with the prestige of a journal becoming associated with its due diligence (and not with the number of press releases generated by its articles).

The issues raised in this paper, while valid and important, are only part of the problem in my opinion (but I won’t divert this thread further at this point).

One little diversion. I’ve noticed some interesting parallels and contrasts between the hockey stick (HS) and hurricane global warming (HGW) controversies. In HS, the issue was the availability and transparency of the data set. In HGW, the dataset was publicly available for anyone to pull off the web from a number of independent sources. The HGW data controversy arose from the people who created the data sets saying it wasn’t good enough for AGW detection and attribution studies, without providing documentation for this assertion. In HS, the climate researchers were the “insiders”, while in HGW the climate researchers were the “outsiders” challenging the hurricane establishment.

re #100: the Landsea/Trenberth tempest was really a mini media tempest, generated because the media likes conflict. It was relevant at all mainly because of what it said about the IPCC process and the behaviour of the people involved in that process. There was no real scientific meat in anything that was going on between Landsea and Trenberth. My take on this was that the media discussion was not so much about global warming policy or hurricane adaptation policy, but mostly about the IPCC process.

#101. Judith, I agree with you that a government oversight committee is a bad idea and do not endorse it. I’ve argued with Ross about this concept. I think that there are many much more practical things to do – such as improve standards of data archiving, disclosure of source code, much more attention to disclosure of results adverse to the thesis. I also don’t think that inadequate due diligence at journals is a priority, although I think that some focus could be re-directed. I’d like to see less attention paid by reviewers to whether something is “right” and more to whether materials are provided so that the results can be tested.

The CPD review of Bürger and Cubasch provides an object lesson in how journal reviewing goes astray. Nobody asks whether they have provided materials so that their results can be checked or verified. Leaving Mann’s review aside, I was very irritated at the reviewer who argued that some other problem was more interesting than the one that they addressed. So what? A securities commission reviewing a prospectus wouldn’t say – well, maybe you shouldn’t be selling hamburgers, did you think about selling Mexican food? I’d rather see more effort spent on disclosure and less worrying about whether something is “right”, which seems to degenerate quickly into arguing POV.

Re #100

Semantics. Media attention was ramping up over time; there was no threshold where you could say the issue was apolitical at month t and political at month t+1. Dr. Curry’s perspective resonates with my own lay experience: I did not start thinking about how you would properly analyze that kind of data until I saw the sickening Katrina images on TV and the cartoon time-series in the newspaper.

Bless you for this suggestion. Reviewing is a very challenging, time-consuming, and very thankless task. In fact you’re more likely to get spanked than thanked for a diligent review. Some journals are much more careful than others about review for correctness of assertions. (Any false assertions, however small, are usually declared a fatal flaw and the paper is rejected.) There are really two (or more) tiers of journals; the problem is that it is not clear to a non-specialist, or outsider, which are which. Thus it is not clear which papers “to trust” and which “not to trust”. You don’t want to abandon review-for-correctness because that trustability factor is the most sacred deliverable of all. But you definitely want to boost replicability by forcing authors to maintain publicly available turnkey scripts – which takes away their precious monopoly on truth, and serves to boost inter-lab competition. This will serve society’s best interest.

Two other innovative ideas: (1) authorship should be anonymous to reviewers, but (2) reviewership should be made public knowledge at the time of publication.

I’m signing off for about 36 hours, travelling back to the States from ECMWF (I will post about Vitart and the ECMWF seasonal forecasts when I get back).

#72

That sounds like a gentle way of saying that climate research, as practiced, is essentially a subjective (Bayesian) activity, which may explain a lot about the tone of debate in this field. I happen to subscribe to the view that “subjective (Bayesian)” is absolutely not the same as “bad” — most of life’s best things are subjective (Bayesian) — yet it is hard to ignore that nagging problem of credibility when using methods resistant to impartial audit. FWIW, Volume 2B of “Kendall’s Advanced Theory of Statistics” — which generally endorses Bayesian statistics — does a nice job discussing conflicts that arise between (and because of) “logical” and “subjective” probabilities, particularly when dealing with propositions that are theoretically provable. It is also worth noting that some “classicists” are driven crazy by the very concept of subjective probability. It is probably true that at least one of the two sides in that debate “doesn’t get it”.

Though I have not heard the argument, perhaps even the hockey stick is defensible as a Bayesian construct. Could it serve as an informative prior, ostensibly obtained through rigorous mathematical manipulations but really just an expression of belief? Hmmm. When the NAS Report used the word “plausible” to describe MBH conclusions, was that a subtle hint intended to suggest viewing things from a Bayesian perspective?

Just a thought. 😉

#105 Bender,

The “traditional” review process (2-3 anonymous reviewers deciding on the fate of a paper) is now outdated. Technology allows us to post un-reviewed papers, and have a “dynamic” review process, played out in the open, as well as all supplementary material needed to reproduce the results. The end-user is the best judge of a paper’s quality and significance.

The peer-review system survives because it is at the core of the scientific hierarchy. “Gate keeping” is the only power that an academic scientist can ever have, and with it come prestige, promotion and grant money. That’s why there is resistance to change it.

On a practical side: it is unrealistic to ask reviewers to reproduce results that often have taken months, if not years, to obtain, not to mention complex experimental setups, etc. Not all papers are just statistical calculations like MBH. On the other hand, it would be possible for a journal to do some random audits of papers it publishes. Authors would have strict guidelines as to what material they should keep to supply the auditors. A journal’s reputation would be enhanced if it were to enforce such measures.

Papers used in the context of public policy decision should also be subject to stricter auditing.

Re #108

I agree the traditional model is growing outdated, but it hasn’t been abandoned, and won’t be for some time, for the precise reasons you outline: capital interests, once entrenched, are immovable (except by their own choosing). Thus the discussion is worth having.

RE: #103 – A new ISO standard certainly seems compelling. Many of the principles of something like ISO 9000 would apply. About 1 year ago someone stacked up ISO 9000 against SOX in “Quality Progress” (the main, quasi technical “club” journal of the ASQ) and it was quite good in terms of mapping. For that matter, this blog might serve as the initial framework for developing the new standard. Amongst us we probably have a pretty good representation into international standards bodies.