Yet another propaganda essay masquerading as a scientific paper has been published (SI here) in the journal Environmental Research Letters. The latest entry, Quantifying the Consensus on Anthropogenic Global Warming in the Scientific Literature, written by a team of activist bloggers led by John Cook of the antithetically named Skeptical Science blog, attempts to further the meme of a 97% consensus of scientific support for a faltering Global Warming movement.

There have been a number of posts (for example, here, here and here at Lucia’s Blackboard, or this one and that at WUWT) which discuss the weak data gathering and data interpretation methodology and the truly incredible spin-one’s-head-around algorithm for generating a value of “97”, which conveniently ignores a large proportion of the data. My focus in this post will be to examine some of the other “quantifying” material.

Given the almost complete absence of available data and statistics in this paper, this will not be an easy task. The authors have apparently bought into the Climate Science tradition of “why should I make the data available if you will only use it to prove me wrong?” I should point out that I have spent much of my academic career doing just that and have become reasonably adept at recognizing situations where work might be shoddy. This is due to my experience consulting on academic research projects and on Ph.D. and Master’s theses, as well as on work outside the university. When interviewing the researchers about their project, I would tell them that I would attempt to find everything that was wrong with their planned research. When it got to the point that this was no longer possible to do, I would be satisfied that the statistical aspects would be adequate for answering the questions that they wished to answer.

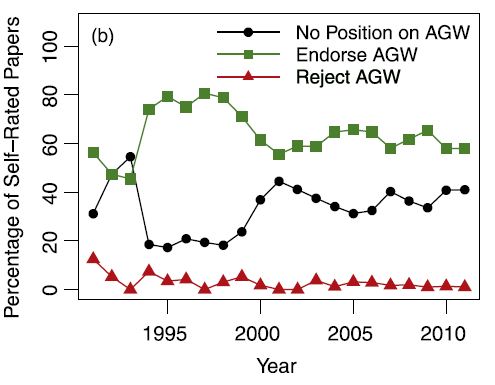

One of the items that caught my eye was Figure 2b: Percentage of self-rated endorsement, rejection and no position papers.

This figure was discussed in the text of the paper:

Figure 2(a) shows the level of self-rated endorsement in terms of number of abstracts (the corollary to figure 1(a)) and figure 2(b) shows the percentage of abstracts (the corollary to figure 1(b)). The percentage of self-rated rejection papers decreased (simple linear regression trend -0.25% ±0.18% yr-1, 95% CI, R2 = 0.28; p =0.01, figure 2(b)). The time series of self-rated no position and consensus endorsement papers both show no clear trend over time.

Figure 2(a) showed that the number of abstracts each year was increasing at a very high rate. This implied that the variance of the percentages calculated for figure 2(b) would change substantially from year to year. Since a “simple linear regression” assumes homoscedasticity (i.e. equal variability) of the data from year to year, this would mean that the early years with very few abstracts would have an inordinately strong influence on the parameter estimates, as well as possibly distorting the interpretation of the significance of the results.

As well, the model for this regression (which for convenience we will write for a probability rather than a percentage) looks like: Pk = α + βTk, where Pk is the probability that a paper in year Tk will belong to the category for which the regression is being done (e.g. “rejection papers”). An observation for that year will look like pk = nk / Nk, where nk is the count of papers in that category in year k and Nk is the total number of abstracts in that year. One should note that nk is assumed to have a binomial distribution with parameters Nk and Pk, so that pk has mean Pk and variance Pk (1 – Pk) / Nk. The full model becomes pk = α + βTk + εk, where εk is a random variable with mean 0 and variance equal to Pk (1 – Pk) / Nk.
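To give a feel for how much the variability can differ between a thin early year and a busy late year, here is a minimal sketch in R. The probability and the two yearly totals are made-up values chosen for illustration, not counts from the paper.

```r
# Standard deviation of an observed proportion p_k = n_k / N_k
# when n_k ~ Binomial(N_k, P_k): sqrt(P_k * (1 - P_k) / N_k).
prop_sd <- function(P, N) sqrt(P * (1 - P) / N)

# Hypothetical values (assumptions, not data from the paper):
# the same underlying probability, but very different yearly totals.
P <- 0.03
N <- c(early = 10, late = 250)

sds <- prop_sd(P, N)
print(round(100 * sds, 2))               # standard deviations in percentage points

# With equal P the ratio depends only on the totals: sqrt(250 / 10) = 5.
sd_ratio <- sds[["early"]] / sds[["late"]]
print(sd_ratio)
```

An unweighted regression treats both of these years as equally reliable, which is the problem described above.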

The data needed to create Figure 2(b) was, you guessed it, unavailable, so I digitized the data in the graph using a simple R programme. The large symbols used in the graph were not helpful to the process, but I managed to get what appeared to be a very reasonable replica of the various plots. The replicated plot appears below near the end of the post.

Using creative rounding, I also turned these percentages into yearly counts for the categories each year for later use. As a check, I calculated both the yearly count totals and the overall category totals. The yearly counts matched the counts given in Table S2 of the Supplement except for the years 2009 and 2011, where they were each out by 1 in opposite directions. The overall totals for the categories were

| Source | reject | endorse | nopos |
|---|---|---|---|
| Estimated from Figure 2b | 40 | 1321 | 781 |
| Given in the paper | 39 | 1342 | 761 |

The differences of about 20 seem to be much too large to be explained as digitization errors, since a check in Photoshop of my reconstruction against the original graph showed an extremely close matchup of points. This appears to be something that Mr. Cook could address by providing the “correct” values for the totals he gives in the paper. However, it is not the central issue here.
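For readers wondering what turning digitized percentages back into counts involves, the following is a rough sketch only. The percentages and the yearly total are invented, and the adjustment rule (move a one-paper discrepancy to the category whose product sits closest to a half) is an assumption for illustration, not necessarily the rounding used for this post.

```r
# Hypothetical digitized percentages for a single year (assumed values).
pct <- c(reject = 2.6, endorse = 61.4, nopos = 36.0)
N   <- 97                      # hypothetical known total for that year

raw    <- pct / 100 * N        # 2.522, 59.558, 34.920
counts <- round(raw)           # plain rounding gives 3, 60, 35 (sum 98)

# Plain rounding need not reproduce the known total. If it is off by one,
# adjust the category whose fractional part is nearest to one-half,
# since that is the rounding most likely to have gone the wrong way.
gap <- N - sum(counts)
if (gap != 0) {
  frac <- raw - floor(raw)
  j <- which.min(abs(frac - 0.5))
  counts[j] <- counts[j] + gap
}

print(counts)
print(sum(counts) == N)
```

The sketch only handles a discrepancy of a single paper; anything larger would point to a digitizing problem rather than a rounding one.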

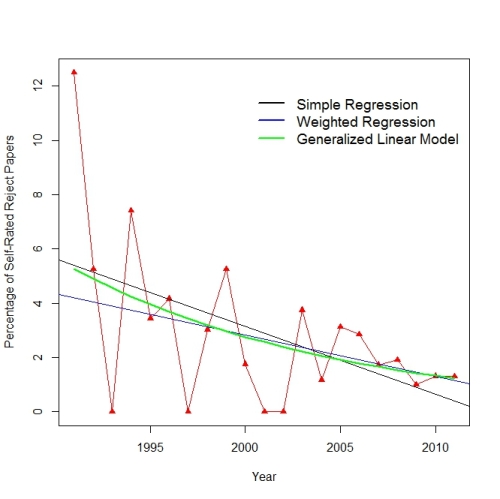

Using the digitized data, the “simple” regression was carried out in R. The comparable statistics to those above for the Reject group were (to all decimal places provided; round as you wish):

-0.2501658% ± 0.1950723% yr-1, 95% CI, R2 = 0.2749; p = 0.0147 Calculated in R

-0.25% ±0.18% yr-1, 95% CI, R2 = 0.28; p =0.01 From the paper

The Cook bound for the confidence interval appears to have been calculated as a simple 2 times the standard error rather than formally using the appropriate t-value, something one should not do in a professional paper.
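The difference between the two ways of forming the bound can be sketched as follows. This assumes that the regression has 21 yearly points (1991 to 2011), hence 19 residual degrees of freedom, and that the ±0.1950723 reported above is the t-based half-width; the standard error is backed out from that figure rather than taken from the paper.

```r
n_years <- 21                        # assumed: one data point per year, 1991..2011
df      <- n_years - 2               # intercept and slope are estimated
t_crit  <- qt(0.975, df)             # two-sided 95% critical value, about 2.093

half_width_t <- 0.1950723            # half-width from the R fit reported above
se           <- half_width_t / t_crit
half_width_2 <- 2 * se               # the "2 standard errors" shortcut

print(c(t_crit = t_crit, se = se, two_se = half_width_2))
```

Under these assumptions the shortcut gives a half-width of about 0.186, noticeably narrower than the t-based value and much nearer the 0.18 quoted in the paper, although a difference in the underlying data cannot be ruled out.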

As I mentioned earlier, the “simple” regression method assumes equal variability from year to year which is clearly not the case. In order to correct for this, we need to do a weighted regression where the optimal weights are equal to the inverse of the variance of the data value. Thus the weights look like:

Wk = 1/( Pk (1 – Pk ) / Nk ) = Nk / ( Pk (1 – Pk ))

There is a slight problem here in that the Pk’s are not known. One could substitute the sample proportions or better still, one can estimate the probabilities from the regression equation, Pk = α + βTk. This is done by alternately calculating the regression and the weights until the process converges (iteratively reweighted least squares). I wrote a short program for doing this and recalculated the above estimates:

-0.1524997% ± 0.1182535% yr-1, 95% CI, R2 = 0.2772; p = 0.0142

The magnitude of the slope is reduced by about 39% from the inappropriate unweighted regression.
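The alternating scheme described above can be sketched along the following lines. This is not the program used for the numbers in this post; the function name `irls_prop`, the clipping of fitted probabilities and the synthetic data are all assumptions made for illustration.

```r
# Iteratively reweighted least squares for p_k = a + b * T_k + e_k,
# with Var(e_k) = P_k (1 - P_k) / N_k and weights W_k = N_k / (P_k (1 - P_k)).
irls_prop <- function(year, n, N, max_iter = 50, tol = 1e-10) {
  p   <- n / N
  fit <- lm(p ~ year)                               # unweighted starting fit
  for (i in seq_len(max_iter)) {
    # Estimate P_k from the current line; clip so the weights stay finite.
    P_hat   <- pmin(pmax(fitted(fit), 1e-6), 1 - 1e-6)
    w       <- N / (P_hat * (1 - P_hat))
    fit_new <- lm(p ~ year, weights = w)
    done    <- max(abs(coef(fit_new) - coef(fit))) < tol
    fit     <- fit_new
    if (done) break
  }
  fit
}

# Synthetic data only: growing yearly totals and a slowly falling probability.
set.seed(1)
year <- 1991:2011
N    <- round(seq(5, 250, length.out = 21))
n    <- rbinom(21, N, prob = 0.06 - 0.002 * (year - 1991))

fit <- irls_prop(year, n, N)
print(coef(summary(fit)))
```

The clipping is a practical guard for years where the fitted line strays outside (0, 1), which is itself a hint that a straight line is not the ideal model for a probability.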

I repeated this exercise for each of the other groups for which no results whatsoever were calculated because ostensibly “the time series of self-rated no position and consensus endorsement papers both show no clear trend over time”. One would think that for interest’s sake, such results would at least appear in the SI.

Endorse Group:

-0.16485% ± 0.7570545% yr-1, 95% CI, R2 = 0.01081; p = 0.654 Simple Regression

-0.4350717% ± 0.5419943% yr-1, 95% CI, R2 = 0.1294; p = 0.1093 Weighted Regression

No Position Group:

0.4149549% ± 0.7862663% yr-1, 95% CI, R2 = 0.06034; p = 0.283 Simple Regression

0.603483% ± 0.570961% yr-1, 95% CI, R2 = 0.2048; p = 0.0394 Weighted Regression

Using a more appropriate regression can produce substantial changes in the results.

So is this the end of the story? Not quite. Unbeknownst to some amateur statisticians, using ordinary linear regression to analyze proportions of this type is not recommended. For example, if one were to use the Cook paper regression to “predict” the probability for the Reject group for any year from 2013 on, they would get a negative value (possibly because retractions would exceed new publications???). Methodology termed Generalized Linear Models has been developed for exactly this type of situation. In our case, we will use a slightly different model:

Pk = exp(α + βTk) / (1 + exp(α + βTk))

where exp(x) represents the exponential function e^x. This particular model is also known as logistic regression. Fitting is done using maximum likelihood techniques, and interpretation of the estimated coefficients differs somewhat from the regression case. Unlike linear regression, the result is always between 0 and 1, with no negative probabilities. Also, confidence intervals for probabilities are usually not symmetric about the estimated value.
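As a minimal sketch of how such a fit might be set up, the lines below use synthetic counts (not the digitized data) and compare extrapolations from a logistic fit and from a straight-line fit. The variable names and the simulated parameters are assumptions for illustration.

```r
# Synthetic yearly counts: n "successes" out of N papers per year.
set.seed(2)
year <- 1991:2011
N    <- round(seq(5, 250, length.out = 21))
P    <- plogis(-2.9 - 0.075 * (year - 1991))   # assumed true probabilities
n    <- rbinom(21, N, P)

# Logistic regression on the counts; the two-column response
# (successes, failures) makes glm() account for each year's total.
logit_fit <- glm(cbind(n, N - n) ~ year, family = binomial)

# Ordinary straight-line fit to the raw proportions, for comparison.
lin_fit <- lm(I(n / N) ~ year)

# Extrapolate both beyond the data.
future  <- data.frame(year = 2012:2030)
p_logit <- predict(logit_fit, newdata = future, type = "response")
p_lin   <- predict(lin_fit, newdata = future)

print(range(p_logit))   # always strictly between 0 and 1
print(range(p_lin))     # nothing stops these from falling below 0
```

Supplying the counts rather than the percentages is what lets the fit weight the sparse early years appropriately without any separate reweighting step.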

Using the glm() function in R, the calculations were done for all three groups:

Reject:

| | Estimate | Std. Error | z value | Pr(>\|z\|) |
|---|---|---|---|---|
| (Intercept) | 144.97037 | 54.51333 | 2.659 | 0.00783 |
| year | -0.07427 | 0.02720 | -2.731 | 0.00632 |

Mean annual rate of change from 1991 to 2011: -0.2007284

Endorse:

| | Estimate | Std. Error | z value | Pr(>\|z\|) |
|---|---|---|---|---|
| (Intercept) | 38.788789 | 18.676384 | 2.077 | 0.0378 |
| year | -0.019095 | 0.009308 | -2.051 | 0.0402 |

Mean annual rate of change from 1991 to 2011: -0.4384528

No Position:

| | Estimate | Std. Error | z value | Pr(>\|z\|) |
|---|---|---|---|---|
| (Intercept) | -55.417983 | 19.119586 | -2.898 | 0.00375 |
| year | 0.027343 | 0.009528 | 2.870 | 0.00411 |

Mean annual rate of change from 1991 to 2011: 0.60276

You will note that all three of the groups now seem to have statistically significant rates of change, with the Endorse group showing a decrease of almost 9 percentage points over the 20-year period.
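The “mean annual rate of change” figures can be approximately recovered from the printed coefficients. The sketch below assumes the rate is simply the change in fitted percentage between 1991 and 2011 divided by 20 years; the helper name `mean_annual_rate` is made up for illustration, and because the coefficients are rounded the results will only agree to a couple of decimal places.

```r
# Average change in fitted percentage per year between two endpoints,
# given the intercept and year coefficient of a logistic fit.
mean_annual_rate <- function(intercept, slope, from = 1991, to = 2011) {
  p_from <- plogis(intercept + slope * from)   # fitted probability in first year
  p_to   <- plogis(intercept + slope * to)     # fitted probability in last year
  100 * (p_to - p_from) / (to - from)          # percentage points per year
}

reject  <- mean_annual_rate(144.97037, -0.07427)
endorse <- mean_annual_rate(38.788789, -0.019095)
nopos   <- mean_annual_rate(-55.417983, 0.027343)

print(round(c(reject = reject, endorse = endorse, nopos = nopos), 3))
```

Multiplying the Endorse rate by 20 gives the drop of a little under 9 percentage points mentioned above.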

The plot below shows the distribution of the Reject group with associated regression lines and the fitted GLM.

All of the groups with associated GLM fits:

All of the R calculations and the data used are available by running the following lines in R:

dfile = "http://statpad.files.wordpress.com/2013/05/cookpost.doc"

download.file(dfile, "cookpost.R", method = "auto", quiet = FALSE, mode = "w", cacheOK = TRUE)

Open the script cookpost.R .

31 Comments

It looks like there’s something missing in “I would attempt to everything that was wrong”.

Missing word?

When interviewing the researchers about their project, I would tell them that I would attempt to everything that was wrong with their planned research

_____

Interesting that the Endorse category experienced a period of rapid decrease at about the same time the No Position experienced a rapid increase in percentage. It may be about the same time the whole world realized that global average temperatures were not increasing.

Great job, Roman.

The ‘endorse’ category and the ‘no position’ categories are in fact almost perfect mirror images of each other.

That shouldn’t be surprising because the three percentages always total 100. The reject group is relatively small so changes in one of the other two categories will induce a comparable change in the third one.

“Since a “simple linear regression” assumes homoscedasticity (i.e. equal variability) of the data from year to year, this would mean that the early years with very few abstracts would have an inordinately strong influence in the calculation of the parameter estimates as well as possibly distorting the interpretation of the significance of the results.”

Possibly distorting? It would appear that around 1994 “No Position” holders migrated to “Endorse” in a mass exodus almost overnight. Hard to think of any one event or paper that would cause that…?

Roman, “When interviewing the researchers about their project, I would tell them that I would attempt to everything that was wrong with their planned research. When it got to the point that this was no longer possible to do, I would be satisfied that the statistical aspects would be adequate for answering the questions that they wished to answer.”

Gotta say, Roman, that anyone who needs that kind of statistical vetting on their project isn’t doing science. Epidemiology, at best.

Thanks to everyone for finding my typo. I have edited the post above by adding the bolded word:

Another thought: Roman, why not make an example of Cook’s paper? I know there’s quite some controversy in the psychological literature about the abuse of statistics and data peeking to adjust results post hoc. For example: “Statistics as rhetoric in psychology” and “How to Confuse with Statistics”

That last paper is in volume 20 of Stat. Sci. which is dedicated to the misuse of statistics.

Why not choose out Cook’s paper as exemplifying everything that’s wrong in abusing statistics. Analyze every detail, write it up, and publish it.

Doing so will expose Cook’s chicanery, embarrass Environmental Research Letters as inept, and humiliate the University of Western Australia. All good outcomes.

You’ve done the work already. Your paper could be a tonic for the field.

Please, pretty please, with statistical strawberries strewn through?

=============

John Cook does appear to have produced another negative exemplar—an article that illustrates, in detail and along multiple dimensions, how not to conduct, analyze, or eport empirical research in the social sciences.

Unfortunately, he’s competing with his own sometime collaborator, Stephan Lewandowsky, for worst in category.

how not to to *report* empirical research… I meant to type.

I do love the smell of statistics done well in the morning 🙂

Another example from Volume 20 of Statistical Science that seems to capture the Cook/Lewandowsky modus operandi:

How to Lie with Bad Data

Richard D. De Veaux and David J. Hand

Source: Statist. Sci. Volume 20, Number 3 (2005), 231-238.

Abstract

“As Huff’s landmark book made clear, lying with statistics can be accomplished in many ways. Distorting graphics, manipulating data or using biased samples are just a few of the tried and true methods. Failing to use the correct statistical procedure or failing to check the conditions for when the selected method is appropriate can distort results as well, whether the motives of the analyst are honorable or not. Even when the statistical procedure and motives are correct, bad data can produce results that have no validity at all. This article provides some examples of how bad data can arise, what kinds of bad data exist, how to detect and measure bad data, and how to improve the quality of data that have already been collected.”

Ah well, I posted this at WUWT, but of course should have posted this here. So I’ll post for the first time here now

Cook:

“Oreskes 2007 predicted that as a consensus strengthened, the number of papers restating the consensus position should diminish. An elegant confirmation of this prediction that came out of our data is that as the consensus strengthened (e.g., the percentage of endorsements among position papers), we also observed an increase in the percentage of “no position” abstracts.”

Source:

http://www.culturalcognition.net/john-cook-on-communicating-con/

So now if they adopt your criticism and adjust the paper, does that make Cook a denier under Dana’s new definition?

MikeN,

was your comment in response to mine? (Cook now taking the position from above the line, thus acting like / encouraging deniers –> Dana’s twitter position?)

If so, no. That quote comes from a discussion on the 22nd of May, starting here:

http://www.culturalcognition.net/blog/2013/5/22/on-the-science-communication-value-of-communicating-scientif.html

But I did now check the Cook paper as well (first paragraph of Discussion):

“Of note is the large proportion of abstracts that state no position on AGW. This result is expected in consensus situations where scientists ‘…generally focus their discussions on questions that are still disputed or unanswered rather than on matters about which everyone agrees’ (Oreskes 2007, p 72). This explanation is also consistent with a description of consensus as a ‘spiral trajectory’ in which ‘initially intense contestation generates rapid settlement and induces a spiral of new questions’ (Shwed and Bearman 2010); the fundamental science of AGW is no longer controversial among the publishing science community and the remaining debate in the field has moved to other topics. This is supported by the fact that more than half of the self-rated endorsement papers did not express a position on AGW in their abstracts.”

Use of 7 decimal points is kinda crazy.

No, I mentioned in the post that I intentionally was not rounding the values. I wanted everyone to see exactly what I was looking at when I was doing the calculations.

In fact, when I was doing the digitization of the percentages, it would have been foolish to round the digitized values because the next step was to reconstruct the counts by multiplying these by the known total for each year. The product is then rounded to an integer. Errors in digitizing which produce products whose fractional portion is close to one-half can push the rounded count to the wrong value.

I wanted everyone to see exactly what I was looking at when I was doing the calculations.

Not to be pedantic, but recall that what is displayed is often only an approximation of the underlying data. With caveats about relevance, language, data type, etc.

The main reason your comment caught my eye was that I just happened to be working a little issue w/ the problem of Round(x) not being a linear operator, ie. Round(A) + Round(B) != Round (A + B), Round (mA) != m Round(A).

RomanM: I am not sure of what point you are making with regard to my above statement as to why I did not bother to round the output from the calculations. It was what I was looking at whether it was “only an approximation of the underlying data” or not. On the contrary, in the paper, a p-value which I replicated as 0.0147 had been rounded to 0.01 presumably to give it an air of “significance at the .01 level”, something which I thought reflected the mindset of the authors of the paper.

Assuming that the papers appeared in peer reviewed journals, is it surprising that self-endorsed AGW continue to head the table? I am only surprised that “no position” is making progress and that any “reject AGW” managed to get published at all. Surely the only statistic worth investigating is the number of papers in each category that have been rejected.

“… statistic worth investigating is the number of papers in each category that have been rejected.”

How about

‘… statistic worth investigating is the percentage of initial rejections in each category.’

These were my thoughts exactly and have been throughout the debates on this paper.

It’s like choosing a % of red-haired people and using them to stand for the entire population. More seriously, in ‘climate science’ only those disposed to endorse AGW have been in a position to research and to publish in the peer reviewed literature for so long that it’s inevitable more papers will fall into the pro-AGW category; given this, the result is meaningless.

the thing I object to is such categorization of papers in the first place. A paper that only looks at say something about volcanos or the MWP does not on its own “refute” the alarmist position, but may be in contradiction to it when placed with other data/studies. I also object to Cook and friends treating the whole thing monolithically, as if it were a simple matter of faith or not. But the climate models and climate itself are immensely complex. On the one hand, I personally am impressed that the models do as well as they do, but on the other hand do not find them remotely useable for turning society upside down since they disagree with each other and with data so much. So the basic classification they do is meaningless to my mind.

As a psychological paper, the classification was supposed to be a kind of a Rorschach test for the authors of the papers. The funny thing is, it’s turned out to be a Rorschach test on Cook et al.

John R T

‘… statistic worth investigating is the percentage of initial rejections in each category.’

That would be interesting!

RomanM, you should check out a recent post on Skeptical Science. It uses “statistics” to estimate the total number of papers related to global warming and concludes 881 (of 137,000) reject AGW. It’s cringe-worthy.

This just in!

“97% Study Falsely Classifies Scientists’ Papers, according to the scientists that published them”

http://www.populartechnology.net/2013/05/97-study-falsely-classifies-scientists.html

It seems that the author did a McIntyre-quality(TM) audit of a sample of sceptical papers and found that they were seriously micharacterized as either endorsing . He then contacted the authors of those papers and got some excellent analysis.

Sorry my comment above got a bit garbled. Here’s a better explanation of the article, from the author himself:

“To get to the truth, I emailed a sample of scientists whose papers were used in the study and asked them if the categorization by Cook et al. (2013) is an accurate representation of their paper. Their responses are eye opening and evidence that the Cook et al. (2013) team falsely classified scientists’ papers as “endorsing AGW”, apparently believing to know more about the papers than their authors.”

There is an interesting article here that shows confirmation bias; in fact, it shows more than that. It purports to reveal the purpose of the paper before the results were compiled.

http://www.populartechnology.net/2013/06/cooks-97-consensus-study-game-plan.html

Lots of papers are written with a purpose in mind. I don’t think this shows anything remarkable. It’s not right, but it’s also not surprising.
