As many readers have already surmised, the “GCM-Q” model that visually out-performed the Met Office CMIP5 contribution (HadGEM2) originated with Guy Callendar, and, in particular, Callendar 1938 (QJRMS). My attention was drawn to Callendar 1938 by occasional CA reader Phil Jones (see here and cover blog post by co-author Ed Hawkins here.) See postscript for some comments on these articles.

Callendar 1938 proposed (his Figure 2) a logarithmic relationship between CO2 levels and global temperature (expressed as an anomaly to then present mean temperature.) In my teaser post, I used Callendar’s formula (with no modification whatever) together with RCP4.5 total forcing and compared the result to the UK Met Office’s CMIP5 contribution (HadGEM2) also using RCP4.5 forcing.

In today’s post, I’ll describe Callendar’s formula in more detail. I’ll also present skill scores for global temperature (calculated in a conventional way) for all 12 CMIP5 RCP4.5 models for 1940-2013 relative to simple application of the Callendar formula. Remarkably, none of the 12 GCM’s outperform Callendar and 10 of 12 do much worse.

I’m not arguing that this proves that Callendar’s parameterization is therefore engraved in stone. Callendar would undoubtedly have been the first to say so. It is undoubtedly rather fortuitous that the parameters of Callendar’s Figure 2 outperform so many GCMs. The RCP4.5 forcing used in my previous post included an aerosol history, the provenance of which I have not parsed. I’ve done a similar reconstruction using RCP4.5 GHG only with a re-estimate of the Callendar parameters, which I will show below.

Guy Callendar

Guy Callendar (see profile here) seems entirely free of the bile and rancor of the Climategate correspondents that characterizes too much modern climate science.

Callendar’s life got off to a good start in 1898: he was born in Canada (though he was raised and lived in England). (The next major AGW figure, Gilbert Plass, was born and brought up in Canada, though he later moved to the U.S.) He was the son of a prominent physicist, Hugh Callendar, who was succeeded at Montreal’s McGill University by Ernest Rutherford. Hugh Callendar appears to have been very prominent in his day and, among other activities, had developed steam tables that were widely used in industry. (Much of the present profile is drawn from here.)

Guy Callendar lost one eye in a childhood accident, but nonetheless was a keen tennis player, reaching the finals of the club singles championship in 1928 at Ealing Lawn Tennis Club and winning the club doubles championship at Horsham Lawn Tennis Club at the age of 49 in 1947. Not easy for someone with only one eye.

Callendar earned a certificate in Mechanics and Mathematics in 1922 at City & Guilds College and then went to work for his father examining the physics of steam until his father’s death in 1930. In 1938, Callendar was employed as a “steam technologist” by the British Electrical and Allied Industries Research Association and his seminal 1938 paper was therefore communicated to the Royal Meteorological Society by Dr G.R. Dobson, F.R.S:

Although Callendar’s qualifications would undoubtedly lead a modern Real Climate or Skeptical Science reader to dismiss him as suffering from Dunning-Kruger syndrome, Callendar (1938) is the first article that provides a clear scientific basis for modern AGW theory, albeit of a low-sensitivity and unhysterical type. Callendar’s detailed and first-hand technical expertise on steam and water vapour enabled him to articulate the infrared properties of increased carbon dioxide in the atmosphere, an understanding that appears to have eluded the contemporary establishment, whose views seem related to modern skydragons. Indeed, the structure of Callendar (1938) includes a discussion of issues that frequently trouble newcomers to the debate (spectral overlap, CO2 dissolution in the ocean) and, in my opinion, IPCC reports are diminished by not including modern reviews of such topics.

Callendar’s “Formula”

In Figure 2 of Callendar (1938) – see below, Callendar showed his estimate of the change in temperature (as an anomaly) arising from varying CO2 levels in “temperate” zones. Although Callendar did not characterize the curve in this figure as logarithmic, it obviously can be closely approximated by a log curve, as shown by the red overplot which shows a log curve fitted to the Callendar graphic. Its 2xCO2 sensitivity is 1.67 deg.

Figure 1. Callendar 1938 showing temperate zone relationship. Log curve (red) fitted by digitizing 13 points on the graphic and fitting a log curve by regression: y = -2.635113 + 2.410493 * x. This yields sensitivity of 2.41 * log(2) = 1.67 deg.
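The arithmetic in the caption can be sketched as a small function. This is an illustration only: the two coefficients are the ones quoted above, and the natural logarithm follows from the 2.41 * log(2) = 1.67 calculation. The function names are hypothetical, and the assumption that x is the log of CO2 expressed in units of 100 ppm (so that 300 ppm gives x = log(3)) is a guess made for this sketch, not something stated in the post.

```python
import math

# Coefficients quoted in the Figure 1 caption (fit to 13 digitized points).
INTERCEPT = -2.635113
SLOPE = 2.410493


def sensitivity_per_doubling(slope=SLOPE):
    """Temperature change for a doubling of CO2 under y = a + b*ln(x)."""
    return slope * math.log(2.0)


def anomaly_change(co2_start, co2_end, slope=SLOPE):
    """Change in anomaly between two CO2 levels (independent of CO2 units)."""
    return slope * math.log(co2_end / co2_start)


def callendar_anomaly(co2_ppm, ppm_per_unit=100.0):
    """Anomaly from the fitted curve.

    ASSUMPTION: x = ln(co2_ppm / ppm_per_unit). The unit scaling is a guess
    made for this sketch and is not given in the post.
    """
    return INTERCEPT + SLOPE * math.log(co2_ppm / ppm_per_unit)


if __name__ == "__main__":
    print(round(sensitivity_per_doubling(), 2))    # 1.67
    print(round(anomaly_change(300.0, 600.0), 2))  # 1.67
```

Because the curve is logarithmic, the difference between any two CO2 levels depends only on their ratio, which is why `anomaly_change` does not need to know the units of the x-axis.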

Callendar did not show corresponding graphics for tropical or polar regions, but commented that the results for other zones were similar. Nor did Callendar show the derivation of his results in Callendar (1938). It is my understanding that he derived these results from his knowledge of the infrared properties of carbon dioxide and water vapour (and not by curve fitting to observations, though he had also carried out his own estimates of changes in global temperature.)

Callendar implicitly discounted the arguments for substantial positive feedbacks on initial forcing that characterize subsequent GCMs, observing the negative feedback from clouds as follows:

On the earth the supply of water vapour is unlimited over the greater part of the surface, and the actual mean temperature results from a balance reached between the solar “constant” and the properties of water and air. Thus a change of water vapour, sky radiation and temperature is corrected by a change of cloudiness and atmospheric circulation, the former increasing the reflection loss and thus reducing the effective sun heat.

“GCM-Q”

Although Callendar (1938) included projections of future carbon dioxide emissions and levels, Callendar had no inkling of the astonishing economic development of the second half of the 20th century. As Gavin Schmidt has (reasonably in this case) observed in connection with Hansen’s Scenario A, the ability to forecast future emissions is unrelated to the evaluation of the efficacy of a model’s ability to estimate temperature given GHG levels.

I thought that it would be an interesting exercise to see how Callendar’s 1938 “formula” performed out-of-sample when applied to observed forcing and to compare it to the UK contribution to CMIP5 (HadGEM2), which I had been discussing. For my comparison, I used IPCC RCP4.5 forcing as a mainstream estimate, inputting their “CO2 equivalent” of all forcings (RCP4.5 column 2). It turns out that RCP4.5 column 2 “CO2 equivalent” includes the other GHGs (CH4, N2O, CFCs etc) as well as aerosols, all converted somehow to ppm CO2. At present, I don’t know how these estimates have been constructed and make no comment on their validity: for this exercise, I am merely taking them at face value for a relatively apples-to-apples comparison.

Callendar’s relationship was based on anomaly to “present mean temperature”. For my calculations, I adopted the 1921-1940 anomaly as an interpretation (differences from this are slight) and therefore centered HadCRUt4 observations and HadGEM2 on 1921-40 for comparison.
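The centering step described above can be sketched as follows. This is a minimal illustration, assuming each series is held as a mapping from year to temperature; the function name `recenter` and the toy numbers are hypothetical and are not HadCRUT4 or HadGEM2 values.

```python
def recenter(series, start=1921, end=1940):
    """Return the series as anomalies relative to its mean over [start, end].

    `series` maps year -> temperature (or anomaly on any other baseline).
    """
    base_years = [y for y in series if start <= y <= end]
    if not base_years:
        raise ValueError("series has no data in the baseline period")
    base_mean = sum(series[y] for y in base_years) / len(base_years)
    return {y: v - base_mean for y, v in series.items()}


if __name__ == "__main__":
    # Made-up numbers purely to show the mechanics.
    toy = {1920: -0.30, 1921: -0.20, 1940: 0.00, 1941: 0.10}
    print(recenter(toy))
```

Applying the same function to observations and to each model puts all series on a common 1921-40 zero before any comparison is made.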

Callendar’s Figure 2 is for “temperate” zones but he reported that the relationship was “remarkably uniform for the different climate zones of the earth”. For the purpose of the exercise, I therefore used the relationship of Callendar’s Figure 2 to estimate GLB temperature, recognizing that the parameters of this figure would only be an approximation to Callendar’s GLB calculation. I have not examined whether the Callendar formula might work better or worse for 60S-60N, as, in carrying out the exercise, I was not taking the position that the parameters in the Callendar formula were “right” – only seeing what would result.

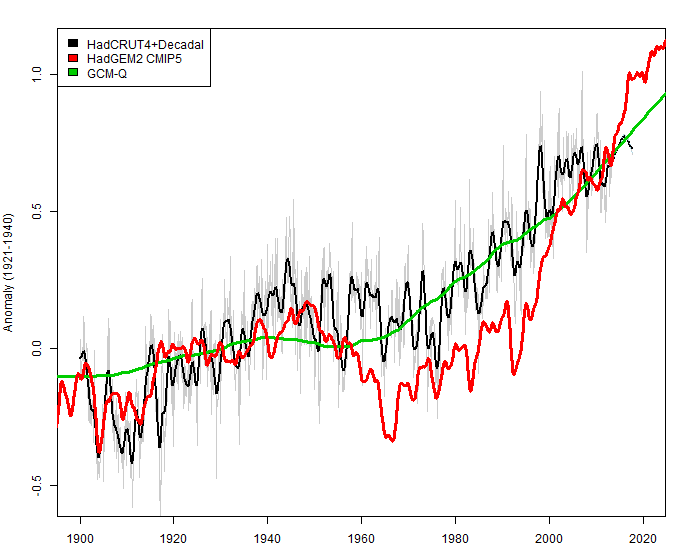

Here’s what resulted (as I showed in the previous post). A reconstruction from the Callendar 1938 formula applied to RCP4.5 CO2 equivalent seemingly out-performed the HadGEM2 GCM. While some readers presumed that “GCM-Q” must have incorporated some knowledge or information of second-half 20th century temperature history in the development of the “model”, this is not the case. “GCM-Q” directly used the formula implicit in Callendar 1938 Figure 2. (I realize that my interest in the results arises in large part from their coherence with subsequent observations, but it wasn’t as though I foraged around or did multiple experiments before arriving at the results that I showed here, the first runs of which I sent to Ross McKitrick and Steve Mosher.)

Figure 2. Temperature estimate using Callendar relationship versus HadGEM2.

Skill Scores

Next in today’s post, I will quantify the visual impression that “GCM-Q” outperformed HadGEM2 by using a skill score statistic that is commonplace in the evaluation of forecasts, estimating the “skill” of a model from the sum of squares of the residuals from the proposed model as opposed to a base case, as expressed below where obs is a vector of observations and “model” and “base” are vectors of estimates.

skill = 1 - sum( (model-obs)^2 ) / sum( (base-obs)^2 )

This calculation is closely related to the RE statistic in proxy reconstructions, where the base case is the mean temperature in the calibration period. However, the concept of a skill score is more general and long preceded the use of RE statistics in proxy reconstructions. In today’s calculation, I used 1940-2013 for comparison (using 2013 YTD as an estimate of 2013.)
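The skill score statistic can be sketched in a few lines. This is an illustration under stated assumptions: the three inputs are equal-length sequences of annual values on a common baseline, and the function name `skill_score` is hypothetical. In the comparisons in this post, "base" would be the Callendar estimate and "model" a GCM.

```python
def skill_score(model, base, obs):
    """skill = 1 - SSE(model) / SSE(base), with obs as the target."""
    if not (len(model) == len(base) == len(obs)):
        raise ValueError("model, base and obs must have the same length")
    sse_model = sum((m - o) ** 2 for m, o in zip(model, obs))
    sse_base = sum((b - o) ** 2 for b, o in zip(base, obs))
    if sse_base == 0:
        raise ValueError("base case matches obs exactly; skill is undefined")
    return 1.0 - sse_model / sse_base


if __name__ == "__main__":
    # Toy values only, chosen so the arithmetic is easy to follow.
    obs = [0.0, 0.1, 0.2]
    base = [0.0, 0.1, 0.3]
    model = [0.1, 0.3, 0.5]
    print(skill_score(model, base, obs))
```

A score of 1 means the model matches observations exactly, 0 means it does no better than the base case, and negative values mean it does worse than the base case.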

In addition to calculating the skill score of HadGEM2, I also calculated skill scores for the 12 CMIP5 RCP4.5 averages on file at KNMI. These skill scores (perfect is 1) are shown in the barplot below:

Figure 2. Skill Scores of CMIP5 RCP4.5 models relative to Callendar 1938.

Remarkably, none of the 12 CMIP5 models has any “skill” in reconstructing GLB temperature relative to the simple GCM-Q formula. Indeed, 10 of 12 do dramatically worse.

Aerosols

In the comments to my previous post, there was some discussion about the importance of aerosols and whether 20th century temperature history could be accounted for without invoking aerosols.

Directly using the Callendar 1938 “formula” on RCP4.5 GHG CO2 equivalent (RCP column 3) leads to a substantial overshoot of present temperatures. As an exercise, I re-calibrated a Callendar-style logarithmic relationship of temperature to RCP4.5 GHG and did the corresponding reconstruction of 20th century temperature history, once again calculating skill scores for each of the CMIP5 GCMs, this time against the Callendar-style estimate only using GHG (no aerosols), as shown in the graphic below:

Figure 3. Skill Scores of CMIP5 RCP4.5 models relative to re-calibrated Callendar-style estimate using GHGs only.

The temperature reconstruction using the reparameterization is shown in the graphic below. This reconstruction is not out-of-sample, as observations have been used to re-calibrate. Its climate sensitivity is lower than the Callendar 1938 model: it is 1.34 deg C.

Figure 4. As Figure 2 above, but including recalibrated temperature reconstruction using RCP4.5 GHG (column 3).
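A re-calibration of this kind can be sketched as an ordinary least squares fit of temperature on the log of CO2 equivalent. The post does not say exactly how the re-estimate was done (method, fitting period), so plain OLS is an assumption here, the function name is hypothetical, and the data in the example are synthetic, generated from a known slope purely to check the mechanics.

```python
import math


def fit_log_relationship(co2eq, temps):
    """Ordinary least squares fit of temps = a + b * ln(co2eq)."""
    x = [math.log(c) for c in co2eq]
    n = len(x)
    if n < 2 or n != len(temps):
        raise ValueError("need two or more paired values")
    x_mean = sum(x) / n
    t_mean = sum(temps) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (ti - t_mean) for xi, ti in zip(x, temps))
    b = sxy / sxx
    a = t_mean - b * x_mean
    return a, b


if __name__ == "__main__":
    # Synthetic, noise-free data built from a slope equivalent to 1.34 deg
    # per doubling; these are NOT the RCP4.5 or HadCRUT4 series.
    true_b = 1.34 / math.log(2.0)
    co2eq = [300.0, 330.0, 370.0, 420.0]
    temps = [true_b * math.log(c / 300.0) for c in co2eq]
    a, b = fit_log_relationship(co2eq, temps)
    print(round(b * math.log(2.0), 2))  # 1.34
```

As in the Figure 1 fit, the implied sensitivity per doubling is the fitted slope multiplied by log(2).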

Comments

What does this mean? I’m not entirely sure: these are relatively new topics for me.

For sure, it is completely bizarre that a simple reconstruction from Callendar out-performs the CMIP5 GCMs – and, for most of them, by a lot. For the purposes of this observation, it is irrelevant that Callendar reconstructed temperate zones (both given his comment that other zones were remarkably similar and the fact that the specific parameters of Callendar Figure 2 are not engraved in stone). Even if the Callendar parameters had been calculated using the observed temperature history, it is surely surprising that such a simple formula can out-perform the GCMs, especially given the enormous amount of time, resources and effort expended in these GCMs. And, yes, I recognize that GCMs provide much more information than GLB temperature, but GLB temperature is surely the most important single statistic yielded by these models and it is disquieting that the GCMs have no skill relative to a reconstruction using only the Callendar 1938 formula. As Mosher observed in a comment on the predecessor post, a more complicated model ought to be able to advance beyond the simple model and, if there is a deterioration in performance, there’s something wrong with the model.

From time to time, others have pointed out this ability of simple models (and a couple of readers have sent me interesting essays on this topic offline). In one sense, “GCM-Q” is merely one more example. However, the fact that the parameters were estimated in 1938 adds a certain shall-we-say piquancy to the results. Nor do I believe that one can ignore the relative coherence of Callendar’s low sensitivity results to observations in forming an opinion on the still highly uncertain issue of sensitivity. That GCM-Q performed so well out of sample would interest me if I were a climate modeler.

Third, all the GCMs that underperform the Callendar formula run too hot. It seems evident to me (and I do not claim that this observation is original) that the range of IPCC models do not fully sample the range of physically possible or even plausible GCMs at lower sensitivities. Perhaps it’s time that the climate community turned down some of the tuning knobs.

Finally, Callendar 1938 closed with the relatively optimistic comment that, in addition to the direct benefits of heat and power, there would be indirect benefits at the northern margin of cultivation, through carbon dioxide fertilization of plant growth, and even through a delay in the return of Northern Hemisphere glaciation:

it may be said that the combustion of fossil fuel, whether it be peat from the surface or oil from 10,000 feet below, is likely to prove beneficial to mankind in several ways, besides the provision of heat and power. For instance the above mentioned small increases of mean temperature would be important at the northern margin of cultivation, and the growth of favourably situated plants is directly proportional to the carbon dioxide pressure (Brown and Escombe, 1905): In any case the return of the deadly glaciers should be delayed indefinitely.

This last comment was noted up in Hawkins and Jones 2013, who sniffed in contradiction that “great progress” had subsequently been made in determining whether warming was “beneficial or not”, bowdlerizing Callendar by removing Callendar’s reference to direct benefits (heat and power) and carbon dioxide fertilization:

Since Callendar (1938), great progress has been made in understanding the past changes in Earth’s climate, and whether continued warming is beneficial or not. In 1938, Callendar himself concluded that, “the combustion of fossil fuel [. . .] is likely to prove beneficial to mankind in several ways” , notably allowing cultivation at higher northern latitudes, and because, “the return of the deadly glaciers should be delayed indefinitely”.

Postscript

As noted above, my attention was drawn to Callendar 1938 by occasional CA reader Phil Jones in Hawkins and Jones (2013) (here), which was discussed by coauthor Hawkins here.

Hawkins and Jones (2013) focused on one small aspect of Callendar’s work: his compilation of World Weather Records station temperature data into zonal and global temperature anomalies, in effect, delimiting Callendar, whose contribution was much more diverse, as a sort of John the Baptist of temperature accountancy, merely preparing the way for Phil Jones.

They noted that Callendar was “meticulous” in his work, an adjective that future historians will find hard to apply to present-day CRU. Hawkins and Jones observed that Callendar’s original working papers and station histories had been carefully preserved (at the University of East Anglia). The preservation of Callendar’s original work at East Anglia seems all the more remarkable given that Jones’ CRU notoriously reported that it had failed to preserve the original CRUTEM station data supposedly because of insufficient computer storage – an excuse that ought to have been soundly rejected by the climate community at the time, but which seems even more laughable given the preservation of Callendar’s records.

Postscript2: “Were Callendar’s Estimates Accurate?” is in the background to Richard Allen’s pod-snippet linked by Bishop Hill here. The screenshot in the background appears to be from the poster presentation by Hawkins and Jones – an odd choice of background.

225 Comments

Ha. Your fans are a broad church indeed.

Steve: In the previous post, I described Jones as “a CA reader, who has chosen not to identify himself at CA”. However, Jones made numerous references to CA in the Climategate dossier – thus at least an “occasional CA reader”, though arguably not a “fan”:)

I promise I won’t do this again until I at least finish the article but

has to be one of the great lines of Climate Audit history. As an obvious fellow-sufferer with Callendar I’m well pleased.

It is unfortunate that Callendar correlates better with false data. The only sound observational basis is the satellite record since 1979, and it is much too short to draw conclusions. Oddly enough, HadGEM2 CMIP5 follows what is known about surface temperatures from proxies a little better than HadCRUT4 does. The cooling of the 60s and 70s is indeed the most characteristic and best-established feature of the twentieth century.

You can find it at

http://www.biokurs.de/treibhaus/literatur/callendar/callendar1938.doc

The 1940 and 1958 papers are available by changing the date. This is from an archive put together by Ernst Beck and does not appear to be accessible through the main website.

Steve M,

Very entertaining, BUT…

Let me introduce three definitions to avoid future confusion.

The Equilibrium Climate Sensitivity (ECS) is defined (by the IPCC) as the steady-state SAT temperature achieved after a doubling of CO2.

The Transient Climate Response (TCR) is defined (by the IPCC) as the SAT temperature at the point of doubling CO2 when carrying out a 1% per year CO2 growth (numerical) experiment.

The Effective Climate Sensitivity is the apparent climate sensitivity per unit forcing expressed in degrees C per W/m2 obtained by taking the inverse slope of a plot of outgoing flux vs SAT temperature.

I have not been able to access an original version of the 1938 Callendar paper – only various other papers which reference it – so my comment here may be completely erroneous. That said, it seems that Callendar took a number of different assumed levels of CO2 and then calculated the steady state surface temperature which he thought would result from that level of CO2. This is not the same as the transient temperature which would result at the achievement of that particular level of CO2, since this is dependent on growth rate and system response time. Hence, his calculation of ECS in modern parlance was 1.67 deg C (for a doubling of CO2).

From that you can calculate a linearised effective climate sensitivity of 1.67/3.7 deg C per W/m2, or an alternative value if the effective forcing for a doubling is not equal to 3.7 W/m2. Using this effective climate sensitivity, you can then predict temperature from the CO2 level at a particular point in time (converted to forcing as per your log formula). To do this, however, you also have to postulate a system response time – a missing variable here.

What I think you have done is misapplied his formula as though it yields a correct transient temperature response when the CO2 level is (arbitrarily) growing. If his formula is indeed a steady-state solution, then his actual temperature prediction would be lower.
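The distinction drawn in this comment can be illustrated with a one-box sketch in which temperature relaxes toward the steady-state value with a response time tau. This is only an illustration: the 1.67/3.7 sensitivity comes from the comment, while the one-box form, the function name, the forcing ramp and both tau values are hypothetical choices and are not taken from Callendar or from any GCM.

```python
import math

SENS_PER_WM2 = 1.67 / 3.7  # deg C per W/m2, as in the comment above


def one_box_response(forcing, tau_years, dt=1.0):
    """Temperature path of a one-box model relaxing toward SENS_PER_WM2 * F.

    Forcing is held constant within each step, so the update is exact for
    that piecewise-constant forcing.
    """
    decay = math.exp(-dt / tau_years)
    temp = 0.0
    path = []
    for f in forcing:
        equilibrium = SENS_PER_WM2 * f
        temp = equilibrium + (temp - equilibrium) * decay
        path.append(temp)
    return path


if __name__ == "__main__":
    # Hypothetical linear ramp to 3.7 W/m2 over 70 years.
    ramp = [3.7 * (i + 1) / 70 for i in range(70)]
    fast = one_box_response(ramp, tau_years=1.0)
    slow = one_box_response(ramp, tau_years=20.0)
    print(round(fast[-1], 2), round(slow[-1], 2))
```

With a short response time the transient value at the end of the ramp sits close to the steady-state 1.67 deg C; with a long one it sits noticeably below it, which is the gap between applying the formula directly and applying it with a lag.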

Steve: see Callendar 1938 . Callendar did not turn his mind to transient vs steady state response. I did an experiment using the relationship in Figure 2. The outperformance of the GCMs using this simple formula is true regardless of how one interprets the transient vs steady state response. Nonetheless , I take your point that the characterization of the climate sensitivity of the formula does depend on the time path if there are material delays between forcing and temperature impact. It’s not a topic that I’ve parsed. As I recall, Lindzen argues for very short delays – a point that seems logically distinct from other issues in controversy, but I haven’t followed the arguments for and against.


The paper is available at http://onlinelibrary.wiley.com/doi/10.1002/qj.49706427503/pdf It seems to have been made open access.

It would be interesting to get Lindzen’s reaction on that specific point and the rest of the post. But looking good, SteveM/GuyC 🙂

Paul_K, I suspect that Callendar used logic to deduce that the difference in timescale between a transient response and final steady state in response to an increase in the steady state energy influx was less than one year.

This logical deduction is based on the fact that there is nowhere on the Earth’s surface where the difference in the summer maximum and winter minimum temperatures is less than any temperature change resulting from the addition of ‘greenhouse gases’.

It is actually rather easy to calculate the transient response, just take a thermometer and stopwatch to a place on Earth just before a total solar eclipse. You will note a drop in temperature occurs as soon as you are in the penumbra and a rapid drop when in the umbra.

DM, you’re talking here only about atmospheric response, while the argument for lags is mostly based on oceans.

I’ve taken a quick look at some energy-balance articles on the topic. One line of argument seems to be that a very slow exchange between surface and deep ocean means that the response of the surface layer to additional forcing will be rather rapid since deep diffusion is slow. Since this is the layer that is relevant for HadCRUT, this would argue for short response in the temperature measurements.

Not to venture off topic, but here are the temperature profiles at a mid-latitude site in the Northern Hemisphere (50 N, 145 W).

http://www.astr.ucl.ac.be/textbook/chapter1_node11.html

Background here

http://www.astr.ucl.ac.be/textbook/chapter1_node11.html

You can see that there is almost 10 degrees of temperature change in the top 25 meters. The lag in temperature change and light flux is about three months.

Ah, the age-old question of how to account for the “heat in the pipeline”. Steve’s implementation of Callendar’s formula is somewhat like a one-box model with a fast tau. To me, this model seems to fit observations better than the fast-slow response model of GCM’s. Not that the GCM’s agree on how fast and slow the response should be.

Based on some calculations I’ve done the following exponential decay model will produce a .995 R^2 statistic of the CMIP5 multi-model mean RCP4.5 scenario over the period 1900-2100:

ecs * (forcing anomaly / 3.7) * exp(-(t^(1/2)) / tau)

So essentially a one-box model using the square-root of time.

JMO, but lately I question that GHG effects have any significant time-lag. No GHG back-radiation can penetrate water beyond a few mm, unlike solar shortwave. So their “warming” effect would be almost instantaneous as SST and added atmospheric water vapor. OTOH, some of the changes in solar radiation (albedo changes) would be “stored” below the water surface & would result in a time-lag.

Quite remarkable really. If these results stand up to the white hot glare of scrutiny that will undoubtedly follow from the ‘climate community’ and do not turn out to be anything other than coincidental, this will be the most remarkable example of any scientific field which has regressed over such a remarkably long period.

Grant/funding corruption in action?

I agree with all that the fact that this old school model outperforms so many modern ones is remarkable. However I don’t find it surprising at all, and could have easily predicted that all the modern models would be running hotter without needing Steve to prove it, although I am glad he has.

As climate is the focus of this blog, it is understandable that readers feel that this scientific “regression” may be unique to climate science, but rest assured, it isn’t. There have been true believers gnawing away at other fields for decades too – economics, psychology and others too.

Tom Desabla:

A suggestive way of putting it, because for any software engineer worth his salt what Steve has shown beyond doubt is that climate science, not least its authoritative expressions in IPCC reports, has been atrocious in regression testing of its central general circulation and other models, taking that important term in its broadest and most important sense.

I can’t speak for psychology, as my therapist would surely confirm, but on economics I’m with srp‘s excellent post on Bishop Hill three days ago. Despite all its conflicts of interest the dismal science surely does a better job at maintaining some integrity than the puffed-up one for which Guy Callendar was such an excellent but neglected pioneer. Not of course that this is setting the bar higher than Lilliputian.

snip – overeditorializing

Tom: Thanks. My advice is never to interpret use of the Zamboni as your thoughts being “less than welcome,” just that the editor felt they weren’t right for a particular thread. There have been useful analogies made between climate and the credit crunch that I have seen and been involved in but they don’t happen all the time.

The concern at the moment is about regression testing. This should have been done all along (as Steve is in effect putting right very belatedly now) and reported all along, most of all in the IPCC reports. That there is nothing is quite simply a gaping hole in the whole story. That is worth everyone who reads this taking in.

Amazing how much has been ‘forgotten’ (pg 235):

It is well known that temperatures, especially the night minimum, are a little higher near the centre of a large town than they are in the surrounding country districts; if, therefore, a large number of buildings have accumulated in the vicinity of a station during the period under consideration, the departures at that station would be influenced thereby and a rising trend would be expected.

Steve: OT to this post. Nor is it fair to say that this issue has been “forgotten”: it remains much discussed to this day.

Steve M,

Thanks for the pointer to the original paper which I had carelessly missed on the first read-through. It is a remarkable paper in many aspects.

The paper does unquestionably derive this CO2-temperature relationship as a steady-state condition directly from Stefan-Boltzmann. However, while that does raise a big question mark over your application of the relationship to calculate transient temperature values, it actually suggests to me that he did an even better job than you may think. A slightly more rigorous application of the steady-state formula to account for transient behaviour should take his long wavelength temperature projection to exactly where it should sit in my view – i.e. cutting through the peaks and troughs of the 61 year oscillation. I will test this and produce a graph to show you when I get a few minutes.

Paul

Steve: Paul, I welcome your analysis. This is a new topic for me. I’ve noted your various posts at Lucia’s but haven’t parsed them. As a quick observation, it seems to me that, if one imputes noticeable delay between forcing and eq temperature, doesn’t it make the “pause” that much harder to rationalize?

Callendar seems to be the right guy at the right time; as a steam engineer, he would have been an expert on steam tables and the Rankine cycle. Since to a first order the earth’s climate is largely driven by the response of the oceans to sunlight, his “simple” model is a good first start. British engineers by his time had over 100 years of experience with steam, and the power spectrum of light would have been known by his time (mainly visible light and near IR). I always find much to learn from older textbooks or reports, and this is a good example. Great series of posts. I enjoyed the scavenger hunt.

So what does the 0-dimensional energy-balance model predict about heat-waves in Australia, the decay of Arctic sea ice and Amazonian precipitation? Nothing? How about the impact of ozone depletion on Antarctic climate or Atlantic hurricanes? Still nothing?

A GCM can make predictions about all of these aspects of climate. A zero-dimension model cannot.

Steve: quite so. I’m not sure who you are arguing against. The issue is that there is no reason why a GCM should perform worse than Callendar in hindcasting observed GLB temperature. I see no reason why the (perhaps contested) ability to project regionally should detract from skill in GLB temperature. Nor have you given any plausible reason. The poor GCM skill scores certainly suggest systemic problems in the parameterizations used to tune the models.

True – a GCM can make predictions about all of these aspects of climate. And all too often, GCMs disagree with each other on the magnitude (substantially) and/or sign of change of these aspects, and/or conflict with what becomes the observed reality.

GCM’s can’t make predictions about any of those aspects of climate, and to suggest otherwise is an abuse of “predict” as scientific language.

“So what does the 0-dimensional energy-balance model predict about heat-waves in Australia, the decay of Arctic sea ice and Amazonian precipitation? Nothing? How about the impact of ozone depletion on Antarctic climate or Atlantic hurricanes? Still nothing?”

What do GCMs predict about monkeys flying out of my butt? nothing.

When comparing models you dont get to specify a test that the model was not prepared to answer. One doesnt test the skill of a hurricane model by looking at the snowstorms in Russia it predicts. And one doesnt test a GCM by seeing if it gets the count of ass monkeys right.

Callendar sets the table: predict the global temperature. And his model sets the standard. Beat that.

Now of course you can do something different. You can predict ass monkeys and temperature and then claim you are better than his model because while you miss his mark on temperature you add the critical feature of ass monkeys.

Normal model development would say “add all the ass monkeys you like computer engineer, but dont go backwards on our signature metric”

So, yes, GCMs are more comprehensive than 0 dimensional models. And they do a better job at higher dimensions–by default. But thats not the question. All the impact studies and costs associated with them are driven by getting temperature right. Pragmatically speaking Im interested in the best prediction tool for that metric. Im not interested in which model gets the number of snowflakes correct, or ass monkeys correct, Im interested in which model gets the cost driver correct. Screw the physicists dream of verisimilitude. Give me actionable forecasts.

( of course I can argue the other side as well )

I am confident that you can!

😉

The GCMs disagree on local changes in precipitation, Arctic amplification, etc. The ability to model changes specifically in the Arctic or Australia isn’t there.

http://www.sciencemag.org/content/340/6136/1053.full

Can I suggest that was not influenced by “beliefs” in what the result should be.

Steve or anyone else for that matter:

Is Callendar’s 1961 article Temperature fluctuations and trends over the earth relevant to this discussion? If it is, can somebody point me to a copy that is not pay-walled?

He was right ! : This is exactly the same result as described in this comment

The figure of 1.7C is actually for TCR (transient climate response) – so it is still possible that ECS (equilibrium climate sensitivity) is as high as 2.5C. However, the longer the pause in temperature continues, the lower climate sensitivity will fall, and if temperatures actually were to fall over the next decade then the whole edifice collapses anyway.

Yes, Even the orthodox in Climate Science admit that GCM’s have limited skill at regional climate. So, one must ask what do they do that simple energy balance models do not.

“Although Callendar’s qualifications would undoubtedly lead a modern Real Climate or Skeptical Science reader to dismiss him as suffering from Dunning-Kruger syndrome…”

Undoubtedly? I think RC has a better claim on PJ’s readership than CA. And he said:

“Callendar’s achievements are remarkable, especially as he was an amateur climatologist, doing much of his research in his spare time, without access to a computer. He was meticulous in his approach, and a large collection of his notebooks are currently archived at the University of East Anglia.”

Doesn’t sound so dismissive.

They may change their opinion now.

Hardly. As Steve said, he picked up the idea for these posts from the Hawkins and Jones paper, and they said of that 1938 model:

“Fig. 1 compares the latest CRUTEM4 (Jones et al. 2012) estimates for annual near-global land temperatures with that of Callendar (1938). The agreements in trends and variability are striking”

It was their idea to write a paper celebrating the 75th anniversary.

Nick,

I read that differently from your apparent interpretation.

If a previously unknown Callendar had shown up at any time in the last twenty years attempting to publish papers similar to the ones he actually published in the earlier parts of the 20th century, Callendar’s qualifications would undoubtedly lead a Real Climate or Skeptical Science reader to dismiss him as suffering from Dunning-Kruger syndrome. Undoubtedly.

Steve: yup. Real Climate and Skeptical Science commenters regularly allege Dunning Kruger syndrome against people who, like Callendar, have experience in other fields. It’s ludicrous for Stokes to suggest otherwise. That RC after-the-fact made an exception for Callendar himself is irrelevant. It’s very clear that the sort of attitude exemplified in their threads would have been applied in the day to Callendar. Not that Stokes would acknowledge the obvious.

Yep. A mordant first century wit said of the establishment of his day: you build the tombs of the prophets, showing that you agree with your fathers who murdered them. They tried to do away with him as well.

Does this mean that in 2089 McIntyre will be celebrated at RC/SS?

Remember folks, the Dunning Kruger effect only applies if they don’t like what you have to say. If they do like what you have to say, you can have no qualifications, be a fool and still become Gavin Schmidt’s Guru.

“Steam technologist?” Obviously he had ties to big industry! Dismiss his paper immediately!

Obviously he had been bought out by big coal.

Do not send to know for whom the ice Tols, it clarifies for the economists.

=============

They had to be meticulous or boilers blew up maiming and killing en masse. Now it’s just narratives that explode catastrophically.

=================

If you visit the Black Country Museum or Ironbridge Gorge you can see quite a few working steam engines from the 1780’s to the 1930’s. They all have modern, post-1900’s, boilers, as the older ones had an unfortunate habit of exploding. Modern health and safety rules mean they cannot be authentic.

Henry Clemens, the younger brother of the author Mark Twain, died along with 250 passengers and crew when the Mississippi paddle-steamer Pennsylvania had a boiler explode in the 1850’s. Steam engineers were like the aerospace engineers of the day. The boilermakers were an esteemed class of workers, who were highly paid for the time; they had to bend steel and rivet it without work hardening or introducing invisible cracks. They would strike their work with copper rods to listen to the tone and detect flaws, like the wheel-tappers did on rail wheels.

Indeed. A lot of hot-shots seem to believe that the IQ of the human species has been constantly rising (culminating in themselves). The remarkable discoveries and inventions of people who lived thousands of years ago are either not known of, or regarded as aberrations.

Steam engineering was important enough to have its own research institute in Callendar’s day. I’m guessing that the computer model jockeys of today think that is a sign of how dumb people were then – once you get the James Watt tea-kettle thing, the rest is easy-peasy. No wonder they keep being blindsided by hubris.

Gutenburg.org has various E-book Flavors of Dionysius Lardner’s very readable 1840 book, “The Steam Engine Explained and Illustrated”. In addition to the very thorough technical discussions of the development of theories of steam application to power and the engines themselves, there is a fascinating chapter on the likely effects on the economy of inexpensive and reliable transport.

The book is worth reading for that chapter alone.

j ferguson – typo, s/b

gutenberg.org

A magnificent resource.

I don’t think it’s fair to say that many think IQs have been rising, but it’s certainly true that the body of available knowledge has risen. Therefore, one has to do less research to arrive at a certain plateau. You don’t have to re-invent the wheel (or the steam engine).

Look up Flynn effect

2.41*log(2) = 0.73; 2.41*ln(2) = 1.67.
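
The arithmetic in the comment above is easy to check. A minimal sketch in Python, assuming only that 2.41 is the coefficient under discussion and that the open question is whether the logarithm is base 10 or natural (the variable names are illustrative, not from the post):

```python
import math

COEFF = 2.41  # coefficient quoted in the comment above

# Change per doubling of CO2 if the formula uses a base-10 logarithm
per_doubling_log10 = COEFF * math.log10(2)

# Change per doubling of CO2 if the formula uses a natural logarithm
per_doubling_ln = COEFF * math.log(2)

print(f"base-10 log: {per_doubling_log10:.2f}")
print(f"natural log: {per_doubling_ln:.2f}")
```

The two readings differ by a factor of ln(10), roughly 2.3, so which logarithm is meant matters a good deal when the coefficient is quoted as a sensitivity.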

All GCMs just linearly extrapolate fractional forcing. It’s not so surprising, therefore, that Callendar’s equation does as good a job as modern models. The scandal that’s revealed by your post, Steve, that everyone’s using multimillion dollar sooper-dooper numero-snoopers to do calculations that can be done just as well by hand. Climate models contribute almost nothing to climate science.

“The scandal that’s revealed by your post, Steve, that everyone’s using multimillion dollar sooper-dooper numero-snoopers to do calculations that can be done just as well by hand”

Do you think this can be done by hand?

“Do you think this can be done by hand?”

This one statement and the youtube video it refers to tell us exactly what is wrong with climate science, and why climate scientists don’t understand why their message isn’t being received by the public.

Nick, prior to the postnormal science being practised in the halls of climate science, scientists did all they could to describe nature in as simple a way as possible so that the rest of the world could understand it, if not easily, then at least in concept. Hence the beautiful equations of Maxwell in the 19th century and the even more simple equation of Einstein, E = mc^2. The great man was probably as pleased with the simplicity of his equation as he was with the ideas behind it.

Nowadays the public are considered too stupid to understand climate science because it’s too complicated, yet its practitioners don’t understand it enough themselves to describe it in a way that is easily understandable to the general public. As Lord Rutherford once said, (presciently in my view):

“If you can’t explain your theory to a barmaid it probably isn’t very good physics.”

“The great man was probably as pleased with the simplicity of his equation as he was with the ideas behind it.”

Relativity was not thought obvious to everyone. There was a riposte to Pope’s optimistic

“Nature and Nature’s Laws lay hid in night

God said ‘Let Newton be’ and all was light”

which went:

“It did not last – the Devil howling ‘Ho!

Let Einstein be!’ restored the status quo”

But the fact is, the Earth is complex. GCM’s had been criticised, for example, for not modelling ENSO. This is the sort of complexity needed to manage that, as the animation shows.

Can Callendar do ENSO?

Steve: Not the right question. Can models which produce ENSO replicate GLB temperature as well as Callendar? If not, why not?

Obviously not Nick. But why wasn’t anyone checking how the most basic prediction was doing against both real world temperature anomaly and older models such as Callendar’s? Steve’s uncovered something that should be of great embarrassment here.

“But why wasn’t anyone checking how the most basic prediction was doing against both real world temperature anomaly and older models such as Callendar’s? “

They were. As part of the AR4 on model evaluation, Sec 8.8 surveys the results of simpler models.

Steve: the link noted the existence of simpler models, but did not “survey” their results. Is there another location in which the survey is reported or did you speak a little too quickly on this?

Of course. But nothing from Callendar in the References. Would you be prepared to admit that IPCC working group 1 missed a trick there?

Hmm, I retract the ‘of course’. The lack of any actual survey, let alone comparison of Callendar’s model out of sample with hindcasts of more recent GCMs, as Steve has done, means we owe Nick our gratitude for highlighting the inadequacies of AR4 WG1 in this area. At least, if Sec 8.8 is the whole of the story.

“Is there another location in which the survey is reported “

Sec 8.8 surveys the lesser complexity models. Table 8.3 gives, for example, details of 8 EMICS (intermediate complexity) with parameter ranges, properties etc. But yes, the results are referred to elsewhere; distributed in the results chapter (10), with the acronyms SCM (simple) and EMIC (intermediate). Sec 10.5.1, for example, deals explicitly with the hierarchy of models, focusing on sensitivity and uncertainty.

And yes, Callendar is not referenced. The AR4 tends to focus on what has happened since the TAR. I expect Callendar would be mentioned in the FAR, but I don’t have a copy.

Steve: section 10.5.1 doesn’t look to me like what you advertised: isn’t it the stuff that Nic Lewis found to be garbage??

Nick Stokes:

There are at least three important possible meanings of ‘what has happened’ that I can see. The most basic is that there are more real-world observations, including global emissions of CO2 and aerosols and readings at temperature stations and SST buoys, leading to new values for stats like globally averaged temperature anomaly, and the like. Second, GCMs from last time (or even from FAR) may have been run again with the now known values for various anthropogenic factors. And third, GCM code may have been changed and the new code, assumed ‘improved’, run against the new values and into the future.

In reporting ‘what has happened since the TAR’ has there been enough happenin’ in the second category? Or has that been strangely overlooked?

The absence of mention of Callendar, and Steve’s current findings, subject to further scrutiny, seem prima facie evidence that something has indeed been missing.

It’s a good point, here. When did climate science lose sight of Callendar’s science and when did the economists lose sight of his economic projections.

Was it a moment of ‘absence’, or ‘deliberance’?

===================

Do I think “this” can be done by hand? I know that animated simulations of complex systems can be done by hand, the Disney studios did it for decades. Next question.

Not the right question. Do you need to do it thus at all?

snip = pointless bickering

The context was global air temperature projections, Nick. GCM air temperature projections can be done by hand. Your SST challenge is irrelevant.

But speaking of SSTs, Carl Wunsch has noted that ocean models don’t converge. He wrote that when he asks at meetings about the physical meaning of a non-converged result, he gets shrugged off with the comment that model results ‘look reasonable.’

You raised SSTs as evidence of model wonderfulness, so how about it? What is the physical meaning of your pretty graphics representing the output of a non-converged ocean model?

Or can climate models do this:

https://sites.google.com/site/climateadj/ocean_variance

Nick Stokes: “Do you think this can be done by hand?”

Nick – My question is both O/T and clueless, but is there anything reasonably accessible discussing validation of this model, or significant parts of it?

“Do you think this can be done by hand?”

Maybe – an historical example here:

http://www.vangoghgallery.com/catalog/Painting/747/Wheat-Field-with-Cypresses.html

There are others with different starting conditions.

Nick Stokes provided a link to an elaborate animation of sea surface temperatures which I assume came from an elaborate model run on a supercomputer. Does anyone else find it disturbing that climate science can create such elaborate animations but cannot provide an accurate measure of climate sensitivity to CO2?

I am a total layman at this but I would like to ask the question of just what is the most critical outstanding issue in climate science? Is it CO2 sensitivity?

I suppose my question for Nick Stokes is that there are many different climate models with varying parameters. I have heard that despite the difference in parameters they all hindcast pretty well. If this is so, what does this say about the utility of climate models?

richard telford

Posted Jul 26, 2013 at 3:58 PM

Shakespeare, 1 Henry IV

GLENDOWER.

HOTSPUR.

Climate Wars, Act 13 Scene 5

TELFORD.

MCINTYRE.

In general, the regional predictions of the GCMs are worse, in some cases much worse, than the global predictions.

Steve, this is a most hilarious find, that a 1938 model outperforms all of the above. Not only that, but you’ve done an un-lagged version. If you lag it, from the looks of it the fit would improve … all of the GCMs I’ve tested have lags of half a decade or less.

Next, after years of arguing for this very thing, it’s a personal satisfaction that you note this line:

Steve: Willis, I was thinking of you when I inserted this quotation. 🙂

I note that you call this a “negative feedback” but Callendar doesn’t describe it that way. He describes it in terms of a governor, which counterbalances (or in his terms “corrects”) the temperature whether it goes up or down.

Finally, I have also argued that the warming will be generally beneficial, so I’m glad to see him say:

This has been part of my argument about the so-called “social cost of carbon”, which is that it ignores the social benefit of carbon.

A marvelous paper, well done, and well played.

w.

Willis, control systems 101: governors (aka proportional controllers) work by negative feedback. They’re exactly the same thing.

Harold, control systems 101. Governors use both positive and negative feedback (although there are one-sided governors), and are specialized mechanisms which exist apart from the feedbacks which they control.

w.

Wrong. Positive feedback destabilizes. It’s never used in governors.

You’re driving down the road at 60. You put cruise on. It finds a throttle position that makes the car go 60. You hit a hill. The speed goes down. The cruise control reacts to slowing by more throttle. Speed goes down. Throttle goes up. Negative feedback.

You go up and over the top of the hill and down the other side. Speed goes up. Throttle goes down. Negative feedback.

You hit flat again. Speed goes down. Throttle goes up. Negative feedback.

It’s all negative feedback. Positive feedback would make the car speed up more if it’s above the set speed, and slow down more if it’s below the set speed. A positive feedback cruise control would either floor the accelerator and stay there, or stop the car completely.

What’s really weird is that I was agreeing with you. Stability is, in itself, an indication of negative feedback. This is really basic CS theory; negative feedback always stabilizes, and positive feedback always destabilizes. There’s a narrow range of positive feedbacks that merely amplify, but outside of that small range, positive feedback will always go unstable.

So the governor analogy is perfectly apt. Callendar was right on the money.
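
The cruise-control argument above can be sketched as a toy simulation. This is only an illustration under stated assumptions: a one-line discrete update rule, a hypothetical `simulate` function, and made-up numbers (set speed 60, starting speed 50, gain of plus or minus 0.5). It is not a model of any real governor or of the climate.

```python
def simulate(gain, setpoint=60.0, start=50.0, steps=20):
    """Iterate speed <- speed + gain * (setpoint - speed).

    With 0 < gain < 1 each correction shrinks the error (negative feedback).
    With gain < 0 each correction enlarges the error (positive feedback).
    """
    speed = start
    history = [speed]
    for _ in range(steps):
        error = setpoint - speed      # how far below the set speed we are
        speed = speed + gain * error  # throttle response, folded into the speed
        history.append(speed)
    return history


negative = simulate(gain=0.5)   # correction opposes the error
positive = simulate(gain=-0.5)  # correction reinforces the error

print("negative feedback, final speed:", round(negative[-1], 3))
print("positive feedback, final speed:", round(positive[-1], 1))
```

With the opposing correction the speed settles on the set point; with the reinforcing correction it runs away from it, which is the behaviour the comment describes.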

Steve, thanks for bringing into the light the exceptional work and achievements of Guy Callendar. It is interesting that he had to go through a Fellow of the RS to get his work validated – some things never change – but notable that he did. Also, the leisurely pace of moving his paper through the process – submitted May ’37 and spat out February ’38 – indicates a very rigorous review process, a laid-back approach to same, or perhaps both. Best of all, he was a working stiff in the pay of Big Steam! Wonderful stuff.

A slight quibble with your post – you seem to agree with Mosher that making models more complex should make them better, otherwise they are wrong. Having some experience with models (although not scientific ones) IMO that is backwards. In my experience of models of dynamic systems (principally economics), making them more complex very frequently makes them worse.

I think that the substance of your post illustrates this point quite well.

Steve: I don’t think that we disagree on model complexity: I agree that making models more complex can make them worse. Indeed, one of my reservations about ultra-complicated GCMs is that their requirement to model everything gives far more opportunity to go astray and screw up what they started with.

Thanks, Steve. I guess that the term “models” is too broad. In engineering, adding factors can be a definite plus – these models typically describe things that are static or have well tested limits.

Dynamic models, such as those used in climate or economics, are cats of another colour.

@ Willis Eschenbach,

It is hard to dislike someone who uses Shakespeare so appropriately.

Steve,

Models that fixate on a dominant variable are doomed to failure but that does not discourage “Climate Scientists” around the world in the least.

The fixation on CO2 can be traced to 1896 when Arrhenius stated:

“The selective absorption of the atmosphere is……………..not exerted by the chief mass of the air, but in a high degree by aqueous vapor and carbonic acid, which are present in the air in small quantities.”

Arrhenius went on to calculate the warming effect of CO2 at ~5.4 K/doubling of CO2.

It is easy to explain the temperature variations during the last seven glaciations (~800,000 years) in terms of CO2. Just set the doubling coefficient to 16 K/doubling:

With a starting point of 1900, Callendar’s 2.41 K/doubling looks pretty good but the IPCC likes to focus on 1850 as the date the Industrial Revolution began to destroy the planet.

Even though my math tutor was J.C.P.Miller I can’t compete with your command of statistics so you may be able to refute my assertion (based on “Least Squares”) that the best fit starting at 1850 requires a climate sensitivity of 1.6 K/doubling.

Ain’t it wonderful to have a constant that varies with time over such a huge range!
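
For anyone who wants to try the kind of least-squares fit mentioned above, here is a minimal sketch. The commenter's actual method and data are not shown in the thread, so everything here is an assumption: the functional form (temperature linear in log2 of the CO2 ratio), the hypothetical helper `fit_sensitivity`, and the synthetic, noise-free series used only to confirm that the fit recovers a known coefficient.

```python
import math


def fit_sensitivity(co2_ppm, temps, co2_ref):
    """Ordinary least squares for temps ~ intercept + s * log2(co2 / co2_ref).

    Returns (intercept, s), with s in K per doubling of CO2.
    """
    x = [math.log2(c / co2_ref) for c in co2_ppm]
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(temps) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, temps))
    s = sxy / sxx
    return mean_y - s * mean_x, s


# Synthetic series built from a known coefficient. These are NOT observations;
# they exist only to check that the fit returns the value that was put in.
TRUE_S = 1.6
co2 = [285.0, 295.0, 310.0, 340.0, 395.0]
temps = [TRUE_S * math.log2(c / 285.0) for c in co2]

intercept, s = fit_sensitivity(co2, temps, co2_ref=285.0)
print("fitted K per doubling:", round(s, 3))
```

Run against real CO2 and temperature series, the fitted coefficient would depend on the start year chosen, which is the point the comment is making.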

I met SM’s son at AKCO, a Canadian bar, and suggested that he read the HSI cuz M and M and the Bishop had saved civilization. Still believe it.

This work would suggest that the man wasn’t sure he had saved it so decided to demolish something even more central to the IPCC’s narrative – and far more costly. If all the GCMs could have been replaced by pencil and paper for this:

as Mosh put it earlier, then there is nothing less than a gaping hole in the picture painted for policy makers these 25 or so years. And this one is going to be very hard to hide.

Needless to say it’s the If that’s the key word there. But Racehorse has gone ominously silent.

>> Guy Callendar (see profile here) seems entirely free of the bile and rancor of the Climategate correspondents that characterizes too much modern climate science. <<

Of course, that's because people like you and your crowd have harassed the latter to no end.

So what exactly is your point? That if only you'd have had the chance to harass Callendar, he too would have been just as upset about it?

No end? Au contraire, the end is near.

=====

Would it be considered pylon to say he’s marked it well?

Why not comment on the larger point, instead of pointing to a tempest in a teacup?

There’s harassment and there’s embarrassment. This is the latter. It needs facing up to. But it could also point to a far more transparent modelling process where GCMs lose some complexity in return for much more openness, where everyone interested can reproduce results on an affordable machine. Don’t think there’d be a problem with Callendar’s ‘code’ on that front. This is a clarion call for a different way.

David Appell wrote:

I believe that the bile and rancor of the Climategate correspondents arose from the correspondents themselves and not from external “harassment”. The mean-spiritedness of the Climategate correspondents is evident early on. On occasions when there were opportunities to provide even-tempered responses, they too often took the low road.

This was very much my personal experience when I first entered this field. David, as you are aware, I began in this field by publishing a couple of articles in academic journals criticizing the methodology and data of Mann et al 1998. This was not “harassment”. I was particularly disappointed by the Climategate correspondence in late 2003 responding to our entry with what can fairly be characterized as “bile and rancour”. There was no effort on the part of the correspondents – Stephen Schneider included – to understand whether there might be something wrong with the articles that we criticized. Their objective was to ridicule and disparage us. At the time, I was completely unknown to them, but nonetheless they felt free to impute motives to me.

You yourself participated at the time in the dissemination of Mann’s lies about the context of our article: Mann’s false claim that we had requested an “Excel spreadsheet”; Mann’s claim that the dataset to which I had been directed and which bore a timestamp of 2002 had been prepared especially for me; Mann’s claim that we had not checked with him when we noticed problems with the data; Mann’s claim that the dataset which he moved into a public folder in November 2003 had been online all the time (notice the CG2 email in which Mann accused me in September 2003 of trying to “break into” his data).

Your blog article distributed these lies to the public, though Mann had himself distributed them widely through email distribution. When I asked you in the politest possible manner, appealing to your journalistic ethics, to correct Mann’s untruthful allegations, you refused.

“The trouble with most of us is that we’d rather be ruined by praise than saved by criticism.”

Steve, you might be interested in an exchange I had with David Appell about a year ago. He requested a single example of Michael Mann lying. I responded:

I then pointed out we have the correspondence between you and Michael Mann. David Appell’s response:

There is no excuse too great for David Appell. He seriously suggested you deceptively posted only a portion of the correspondences you had with Mann et al in order to paint Michael Mann as a liar. It’d have been easy for Mann to prove this about you, but nobody else has ever suggested it. Still, Appell thinks it’s a valid enough idea to dismiss the overwhelming evidence that Mann lied.

That’s what made me decide David Appell is like a less intelligent, less honest version of Nick Stokes. I figured there was no point in pursuing the matter further as I’m sure he could find ways to deny any evidence one might present.

Steve: As you observe, Appell’s attempt to rationalize Mann’s lying is very Nick Stokesian. But even this bizarre excuse doesn’t rationalize the other elements of Mann’s lying at the time: (1) the data that I was referred to was timestamped in 2002 and was not prepared in response to my request in April 2003; (2) we had contacted Mann to confirm that this was the data that he had used and I provided an email to show it. Also in the Climategate emails, there’s an email from Mann to Climategate emails described as containing my original request and which was the email that I had placed online. I don’t regard Appell as harshly as some readers but I’m very disappointed in his irrationality on this topic.

Of course, that’s because people like you and your crowd have harassed the latter to no end.

The irony, it burns.

Of course, that’s because people like you and your crowd have harassed the latter to no end.

So what exactly is your point? That if only you’d have had the chance to harass Callendar, he too would have been just as upset about it?

David Appell argues that science is “rough and tumble”

Yet asking for data is harassment.

-snip-

@David Appell,

As a master of obfuscation you seem unable to make precise statements. What I got out of your comment was a complaint that Steve has somehow been unfair to the “Climategate Correspondents”.

In the opinion of virtually everyone Steve is overly kind and generous. What he sees as errors in statistical analysis look like dishonesty or fraud to many of us.

The problem with your web site (Quark Soup) is that honest discussion is not possible when censorship rules. You will get better treatment here than you deserve.

Steve, why do the observations on your graph go past the present? I know I said I was done here but this is bothering the heck out of me. In the past you were a detail guy, now ‘it doesn’t matter’ (trade marked by you via climate scientists) isn’t going to work… Bender, Bender, Bender… or RC… Please help me. I’m dazed and confused…

Steve: I’ve included the most recent UK Met Office decadal forecasts as a dotted line and noted “+ Decadal” in the legend. I’ll make the caption clearer.

oh! Thanks for the reply.

“This last comment was noted up in Hawkins and Jones 2013, who sniffed in contradiction that “great progress” had subsequently been made in determining whether warming was “beneficial or not”, quoting Callendar, but surgically removing Callendar’s reference to direct benefits (heat and power) and carbon dioxide fertilization”

“surgically removing”? Bowdlerization is an alternative term.

In this case, it is an example of altering a scientific publication to change its message.

Snipping a quote to change its meaning is technically known as “Dowdification” after Maureen Dowd of NYT.

http://www.urbandictionary.com/define.php?term=dowdification

Regards,

Bill Drissel

Grand Prairie, TX

Steve, nice work! The (lack of) skill of the current GCM’s has always puzzled me, and I have been suspicious about the use of human aerosols as a convenient “tuning knob” to fit the past, and even then not very well, especially not in current times, where the reduction of aerosols in the Western world is near fully compensated by the increase in SE Asia. With virtually no change in global aerosols, there is no way that aerosols can explain the current standstill in temperature with ever increasing CO2 levels.

Thus what else can be the cause of the standstill 1945-1975 and 2000-current? Large ocean oscillations may be one of the culprits, as these are not reflected in any GCM. But if they are responsible for the standstill of the past and today with record CO2 emissions, then they may be, at least in part, responsible for the increase in temperature in between…

As a side note, Callendar was the man who threw out a lot of local CO2 measurements made by chemical methods to show a curve for what he thought the real increase of CO2 over time had been until then. He used several predefined criteria to do that, not the post editing we see from many in modern climate research.

The remarkable point is that his estimates for the increase of CO2 over that period were confirmed several decades later by ice cores…

Steve: there was a recent article on tuning which Judy Curry covered. I haven’t parsed the topic but it sounded like there are knobs connected to cloud parameterization – clouds needless to say being a sort of black hole in terms of comprehensive understanding.

Steve re “clouds . . .being a sort of black hole in terms of comprehensive understanding,”

In his “TRUTHS” presentation (2010, slide 7) Nigel Fox of the UK National Physical Lab summarizes the IPCC’s uncertainty of 0.24 for clouds compared to 0.26 total (2 sigma). I.e. Clouds form ~ 92% of all uncertainty in the feedback factor. Furthermore:

I think I’ve never heard so loud

The quiet message in a cloud.

=================

I’ve worked with an MIT climate model, I think it was EPPA 2. Presumably a simplified version as century runs would run in about 10 minutes. For this, you were explicitly providing values for ocean sensitivity, aerosols, and clouds. And yes, changing these numbers would give you huge variations in the sensitivity. The professor even acknowledged that certain values which were possible would give you an amount of warming equal to the previous century+ and no big deal.

“And yes, changing these numbers would give you huge variations in the sensitivity.”

Which is congruent with the precision MIT “Monty Carthon” climate study tool shown here:

(This is not science; this is a “Big Six” carnival game.)

Even more primitive than Callendar is the more recent work of Akasofu (2010, 2013), who has looked at the simplest non-trivial fit to the data: he predicted the present temperature stasis and predicts that it will last another 15 years. It may be, but is not likely to be, a fluke. Akasofu made his prediction based on the attribution of the first harmonic to oceanic patterns. It is a concern that most practitioners of GCMs have not taken it at all seriously. If they had engaged in 2000, they might have thought more carefully about what is happening, and the systematic divergence of the GCMs from the real-world data that has occurred since then might not have emerged into the serious problem it is today.

Syun-Ichi Akasofu, ‘On the Present Halting of Global Warming’, Climate 2013, 1, 4-11; doi:10.3390/cli1010004

Syun-Ichi Akasofu, ‘On the recovery from the little ice age’, Natural Science 2010, 2, 1211-24

This chimes in beautifully with a recent post by Willis at WUWT.

He found that a simple formula that can easily be run on a laptop performed as well or possibly better than the climate models running on million-dollar supercomputers.

snip – overeditorializing

Nice to see good “old fashioned” science in action. Excellent stuff Steve. Top quality.

Thanks Geronimo- love this:

“As Lord Rutherford once said, (presciently in my view):

“If you can’t explain your theory to a barmaid it probably isn’t very good physics.”

(And no surprises on reading David Appell’s response…)

I don’t know why folks here are characterizing Callendar as some sort of ‘unknown.’

As has been pointed out above, his work is well-described in Weart’s “Discovery of Global Warming,” and JR Fleming has written a biography at book length.

http://www.rmets.org/shop/publications/callendar-effect

One offshoot is an online article:

https://secure.ametsoc.org/amsbookstore/viewProductInfo.cfm?productID=13

His bio is also the primary source for my article on Callendar, here:

http://doc-snow.hubpages.com/hub/Global-Warming-Science-And-The-Wars

Callendar is scarcely unknown to readers of RC, or of the climate mainstream. For example, his papers form an important and treasured archive at the University of East Anglia:

https://www.uea.ac.uk/is/collections/G.S.+Callendar+Notebooks

I’ve characterized Callendar as the man who brought CO2 theory into the 20th century. He corresponded with several of the important mid-century figures in that study–notably Gilbert Plass, but also, if I’m not mistaken, Dave Keeling. And Revelle was certainly aware of his work–probably so, too, was Bert Bolin, first chair of the IPCC.

All of which explains why it was that scientists celebrated the 75th anniversary of his 9138 paper, back in April. (Googling “guy callendar anniversary” produced 697,000 hits!)

[Steve: I certainly did not characterize Callendar as “some sort of ‘unknown’”. Quite the opposite. In my post, I cited and linked your article http://doc-snow.hubpages.com/hub/Global-Warming-Science-And-The-Wars, noting that I had drawn on it for my profile of Callendar. I also referred to the Callendar archive at the University of East Anglia, contrasting Callendar’s “meticulous” recordkeeping with Phil Jones’ casual failure to preserve original station data. I cited Hawkins and Jones’ retrospective article on Callendar, tho I criticized it for overly focusing on Callendar’s temperature accountancy work. I also mentioned Plass, also Canadian-born. I don’t understand your complaint.]

Sorry, “1938 paper”!

Thanks for linking the article. I’m not complaining about your original text; I’m expressing surprise at the characterizations of a number of commenters–and, for clarity, ‘some sort of unknown’ is my phrase, relating only to my perception of a number of comments, and is not meant to refer to any particular comment.

Steve: if you are criticizing someone, I think that you have an obligation to accurately quote and reference precisely what you are criticizing. Gavin Schmidt at Real Climate has a reprehensible habit of not doing this. It’s a bad habit that you should try to avoid.

Doc Snow

With nominally 425 citations to Guy Callendar (1938), it is surprising that the IPCC ignores his climate sensitivity estimate.

Steve: Archer and Rahmstorf, Climate Crisis, reported that Callendar’s sensitivity estimate was 2 deg C and that he had supported water vapor feedbacks.

“I also mentioned Plass, also Canadian-born.”

So was Nesmith… the inventor of basketball. This gives credence to the old joke:

Q: How many Canadians does it take to screw in a light bulb?

A: Ten. One to screw in the lightbulb and nine to say “Look… He’s Canadian!!!”

Naismith.

=====

I staannd coorectid 🙂

Nesmith was the Monkey who flew out of Moshers Akasofu.

You wouldn’t have noticed if I used an accent aigu, as in “Nésmith”, eh?

Heh, I wouldn’t have been able to find my way home.

===============

🙂

🙂 Good one. Makes me remember (very fondly) my years living in Ontario.

I’ve asked Spencer Weart a number of times when he is going to write ‘The Discovery of Global Cooling’.

====================

Doc Snow:

I found your article clear and helpful. The links were also helpful. Alas, Fleming’s book appears to be hard to find.

Thanks, much appreciated. I don’t have a copy of the Fleming myself; my local library was able to get me one via ILL (Inter-Library Loan.) Maybe yours can, too?

Brunt’s “Discussion” to Callendar’s paper appears as pertinent today in terms of evaluating GCM’s “skill” in hindcasting/forecasting (or lack thereof as Steve quantifies):

A “steam technologist” who did furnace calculations would be familiar with methods of calculating radiation through an atmosphere containing CO2 and H2O, because flue gas contains those two species.

Steve: his practical experience clearly enabled him to see things that academic climate scientists of his day were unfamiliar with.

“Steve: his practical experience clearly enabled him to see things that academic climate scientists of his day were unfamiliar with.”

Much like our host 🙂

You seriously underestimate how hard and low resolution IR measurements were in those days.

Excellent work, Steve McIntyre. But ….

1. I never had much faith in the models used by the IPCC that projected global temperatures to 2100 because they could not be validated before 2100. Consistency requires that I restrain my faith in Callendar’s model until then. However I have much more faith in it than the IPCC’s model because it does seem to fit the data better than the IPCC models.

2. What global temperature would Callendar’s model project under various scenarios? Am I correct in assuming Callendar’s model is mostly CO2 forcing without much feedback? If so, Callendar is assuming that, say, sunspots are not important.

Whatever the answers to the above questions are, I think the current modelers might want to reevaluate their models.

klee12

Steve: I’m not asking readers to take a position on whether Callendar was “right”. As I observed, his parameters are hardly “engraved in stone”. The question was why, after so much resources and effort, GCMs had no skill in GLB temperature relative to Callendar.

Can’t wait for Bel Tol to chime in.

=============

Evidently the establishment has already defined Callendar’s work as “consistent with” IPCC http://en.wikipedia.org/wiki/Guy_Stewart_Callendar though “on the low end.” Never mind the difference between a climate sensitivity of 2 deg per Archer and 1.67 deg, the latter value lying outside of the range of likelihood defined by IPCC.
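To put the 2 deg versus 1.67 deg difference in concrete terms, here is a minimal sketch of a purely logarithmic CO2 response. It assumes both figures are sensitivities per doubling of CO2; the function name and the 280 and 400 ppm concentrations are illustrative choices, not values taken from Callendar 1938 or from the post.

```python
import math

def log_law_warming(c_ppm, c_ref_ppm, sensitivity_per_doubling):
    """Warming (deg C) implied by a purely logarithmic CO2 response."""
    return sensitivity_per_doubling * math.log2(c_ppm / c_ref_ppm)

# Hypothetical concentrations, chosen only for illustration.
C_REF, C_NOW = 280.0, 400.0
for s in (1.67, 2.0):
    warming = log_law_warming(C_NOW, C_REF, s)
    print(f"S = {s:.2f} deg C per doubling: {warming:.2f} deg C")
```

By construction, a full doubling returns the sensitivity itself, so the two estimates differ by about a third of a degree per doubling.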

Steve:

This is what I was trying to point to in the last thread and it got snipped as O/T.

The cloud “amount” is the biggest fiddle factor in the whole story, especially in the tropics where most of the energy input to the system comes in.

Roy Spencer calculated that it would take a 2% change in cloud cover to equal the CO2 forcing and I don’t think anyone claims the current guesstimates of cloud cover are accurate to within 2%.

That means modellers can just pick cloud “parametrisations” that give the results they like.

Also tropical cloud cover is not just some wandering “internal variability” it is a strong negative feedback climate mechanism. The problem is that individual tropical storms are well below the geographical resolution of any model so don’t get modelled AT ALL. Just parametrisation.

We have a natural experiment to look at climate response to changes in radiative input in the form of major eruptions. And when we look at the tropics we see a very different response to extra-tropical regions:

The cumulative integral of degree.days (or growth days to farmers) seems to be fully maintained in the tropics. This implies a strong, non-linear negative feedback mechanism. I think it is very likely that tropical storms provide the physical mechanism.

Willis has posted a number of times on this at WUWT calling it a “governor”. A governor would maintain a roughly constant value of the control variable. I think my graphs show this is tighter control than a temperature governor, since it appears to maintain the cumulative integral. This would be closer to an industrial PID controller.

To do this requires a self-correcting mechanism not just a passive negative feedback. The nature of tropical storms where the negative feedback is amplified and self maintaining seems to provide that.
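The distinction between a governor and a controller with an integral term can be illustrated with a toy simulation. This is only a sketch under stated assumptions: a single well-mixed temperature anomaly, a two-year cooling pulse standing in for an eruption, and arbitrary gains (`kp`, `ki`, `k_relax` are all hypothetical). It is not a reconstruction of the graphs discussed here.

```python
def cumulative_anomaly(kp, ki, k_relax=0.5, steps_per_year=100, years=60):
    """Integrate dT/dt = -k_relax*T + u + d with feedback u = -kp*T - ki*I.

    I is the running integral of the anomaly T (the 'degree-days').
    Returns the final value of I.
    """
    dt = 1.0 / steps_per_year
    pulse = range(5 * steps_per_year, 7 * steps_per_year)  # two-year cooling pulse
    temp, integral = 0.0, 0.0
    for step in range(years * steps_per_year):
        forcing = -1.0 if step in pulse else 0.0
        feedback = -kp * temp - ki * integral
        temp += dt * (-k_relax * temp + feedback + forcing)
        integral += dt * temp
    return integral

p_only = cumulative_anomaly(kp=1.0, ki=0.0)         # passive negative feedback
with_integral = cumulative_anomaly(kp=1.0, ki=0.5)  # adds an integral term
print(f"proportional only:  final degree-day deficit {p_only:.3f}")
print(f"with integral term: final degree-day deficit {with_integral:.3f}")
```

In this toy, proportional feedback alone brings the temperature back to its reference but leaves a permanent degree-day deficit, whereas the integral term overshoots slightly after the pulse and recovers the lost degree-days.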

The series of inter-linked graphs I’ve provided demonstrate that there is a fundamental control mechanism in the tropics that leaves them with a near zero sensitivity to changes in radiative forcing.

That may go some way to explaining why a low sensitivity model works better on global averages but it’s not just a case of playing with the global tuning knob.

OK. Arguing for specific knobs is non-Callendar but I guess that I opened the theme.

FWIW it seems to me that clouds also function as a sort of regulator in mid-latitude summers. Today is another cool cloudy day in Ontario in what has been a rather cool cloudy summer. In our mid-latitude summers, heat waves seem to occur when there are blocking patterns that enable the sun to pour in, as in the 1936 and 2012 heat waves. Although we are often told that cloud feedbacks are positive, in our mid-latitude summers, when the total solar insolation is very large even in tropical terms, cloudy days are cool.

Given that the planet is warmest in NH summer (even though it is then almost at the farthest in its orbit), mid-latitude thermostats are probably worth mulling over as well.

“… mid-latitude thermostats are probably worth mulling over as well.”

Indeed, and my graphs demonstrate that too. The same one I linked above covers ex-tropical SH and shows that between 3 and 5 years after the “average” eruption the integral is flat. ie SST is at the SAME temp as the four year, pre-eruption reference period.

How much of this is the stabilising effect of tropics and how much is local ‘thermostatic’ effects would need investigation.

The down step is a loss of degree.days not a permanent temperature drop.

“Given that the planet is warmest in NH summer …”

This is why I shy away from global averages, especially land + sea averages. Land temps change about twice as fast as SST.

That means that looking at SST should be sufficient to follow any warming patterns and avoids introducing the land/sea ratio bias of NH.

I think it is quite important, if we are to get any further with understanding, that we move beyond unified global average metrics.

That kind of approach is OK as a first approximation but to understand why models are not working and have even a chance of determining system behaviour beyond a trivial CO2 curve, we need to stop muddying the water by mixing all the paints in one pot.

Steve,

Yes, a cool and cloudy summer but not cooler than the average at Pearson (since 1938 anyway).

BTW and COTSSAR(*), Environment Canada changed how they register temperatures at Pearson (GTAA) in July.

(*) Completely Off Topic So Snip As Required

Given the fact that GCM-Q is a few orders of magnitude simpler and less resource intensive than the GCM’s you’re pitting it against, how feasible would it be to exhaustively test parameterisation and goal-seek a better fit? Not a guarantee of the plausibility / reality of the winning parameters, but maybe interesting.
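If the simple model is taken to have the form T = a + b ln(CO2), which is an assumption here, then no exhaustive search is needed: the best-fit parameters follow from ordinary least squares in closed form. The sketch below uses synthetic data generated from known parameters, so it only demonstrates the mechanics; `fit_log_model`, `TRUE_A` and `TRUE_B` are illustrative names and values, not Callendar’s.

```python
import math

def fit_log_model(co2_ppm, temps):
    """Ordinary least squares for temps ~ a + b * ln(co2_ppm)."""
    x = [math.log(c) for c in co2_ppm]
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(temps) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, temps))
    b = sxy / sxx
    a = y_mean - b * x_mean
    return a, b

# Synthetic data only: generated from known parameters, not from observations.
TRUE_A, TRUE_B = -13.0, 2.3
co2 = [300.0 + 2.0 * i for i in range(50)]
obs = [TRUE_A + TRUE_B * math.log(c) for c in co2]

a_hat, b_hat = fit_log_model(co2, obs)
per_doubling = b_hat * math.log(2)  # warming implied by a doubling of CO2
print(f"a = {a_hat:.3f}, b = {b_hat:.3f}, per doubling = {per_doubling:.3f} deg C")
```

As the comment says, a good fit found this way says nothing by itself about whether the winning parameters are physically plausible.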

Hi Steve,

I think Callendar did some amazing work. Our focus in the 75th anniversary paper was on his temperature records, but his work in collecting the various CO2 observations and also inferring that the ocean would not take up all the excess human emissions of CO2 was also excellent, and way ahead of Revelle who proved this much later. His model of the atmosphere was advanced for the time, but he did consider the radiative balance at the surface, whereas we now consider that this is flawed and the balance at the top of the atmosphere (TOA) is more appropriate. Interestingly, Arrhenius used TOA balance.

Regards,

Ed.

PS. The two links which look like they should go to my blog article on this are wrong – one points to the paper (first para), and the other is missing (in postscript).

PPS. The article I mention is this one:

http://www.climate-lab-book.ac.uk/2013/75-years-after-callendar/

And, the accepted paper on the 75th anniversary is now online in its final form, and is open access:

http://onlinelibrary.wiley.com/doi/10.1002/qj.2178/abstract

Ed.

@Ed Hawkins, I agree that Callendar did amazing work in his time. But I have some objections against your story in the first link for the mixing in of the role of aerosols in the cooling period 1945-1975, which isn’t part of the Callendar story, but part of the tuning story of current GCM’s to explain that period with increasing CO2 levels.

While SO2 emissions may have had some small role in that period, they can’t have a role in the current standstill, as the increase of emissions in SE Asia is compensated by the decrease in emissions in the Western world, thus there is hardly any increase in cooling aerosols while CO2 levels are going up at record speed and temperatures are stalled. That makes it quite doubtful that the same aerosols would have had much impact in the previous period of temperature standstill/cooling.

@FerdiEgb – the effect of aerosols is thought to be more complicated than you imply. For example, a simple shift of emissions from one location to another could still have a global temperature impact because of (a) emissions into a regionally cleaner atmosphere have a larger impact, and (b) any cloud & circulation response will depend on the mean state in the region where the emissions occur, i.e. the effect is likely non-linear. As the emission of aerosols in the 1940s onwards tended to be into a cleaner atmosphere they may have had a larger effect.

There is still much debate about possible causes of the recent slowdown in temperatures – but the natural solar & volcanic forcings are very likely to have had an effect:

http://www.climate-lab-book.ac.uk/2013/recent-slowdown/

As an aside, Steve’s forcing estimate used above for the GCM-Q doesn’t, I believe, include the natural forcings?

Regards,

Ed.

@Ed Hawkins, I have more the impression that aerosols were a convenient way to explain the non-change in temperature 1945-1975 with increasing CO2 levels. When stringent measures were taken in industrial and residential areas (see the London smog), that should have given a huge difference in temperature downwind of the most polluting sources (as the average residence time of tropospheric SO2 is only 4 days), but that was not measurable at all.

But this is an aside of the main article which is about the performance of the complex GCM’s compared to the simplest model possible (or any simple model, see: http://www.economics.rpi.edu/workingpapers/rpi0411.pdf ), maybe worth another discussion, about the causes of the current standstill…

I’ve found the asides on this thread particularly educational though – those allowed to remain by the rumbling zamboni. Another sign the main post may just be onto something?

@FerdiEgb – the direct effect of aerosols is fairly well understood, and produces a cooling effect – it is not just a convenient way to explain the flat period. The indirect effects of aerosols have more uncertainty. And, when the clean air acts were implemented, and the cooling aerosols were removed, the temperature started to increase. Remember that the global temperature changes are not instantaneous with changes in forcing – this lag is missing from Steve’s model as he acknowledges above.

@Richard Drake – I think Steve should add the volcanic forcings to GCM-Q, and use a skill measure which doesn’t penalise the GCMs for having internal variability. Simple models are useful, but have their limitations.
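For readers unfamiliar with skill measures, here is a minimal sketch of one common form: one minus the ratio of the model’s sum of squared errors to that of a reference. Whether this matches the exact calculation used in the post is an assumption; the function name and all numbers below are made up purely to exercise the formula.

```python
def skill_score(model, reference, observed):
    """1 - SSE(model)/SSE(reference); positive means the model beats the reference."""
    sse_model = sum((m - o) ** 2 for m, o in zip(model, observed))
    sse_ref = sum((r - o) ** 2 for r, o in zip(reference, observed))
    return 1.0 - sse_model / sse_ref

# Made-up numbers, not temperature data.
observed = [0.0, 0.1, 0.2, 0.3]
reference = [0.0, 0.1, 0.1, 0.2]   # stands in for the simple benchmark
candidate = [0.1, 0.3, 0.4, 0.6]   # stands in for a more complex model
score = skill_score(candidate, reference, observed)
print(f"skill relative to reference: {score:.2f}")
```

A measure of this kind does penalise year-to-year wiggles that are out of phase with observations, which is the concern raised about internal variability.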