A guest post by Nic Lewis

Introduction

Global surface temperature (GMST) changes and trends derived from the standard GISTEMP[1] record over its full 1880-2016 length exceed those per the HadCRUT4.5 and NOAA4.0.1 records, by 4% and 7% respectively. Part of these differences will be due to use of different land and (in the case of HadCRUT4.5) ocean sea-surface temperature (SST) data, and part to methodological differences.

GISTEMP and NOAA4.0.1 both use data from the ERSSTv4 infilled SST dataset, while HadCRUT4.5 uses data from the non-infilled HadSST3 dataset. Over the full 1880-2016 GISTEMP record, the global-mean trends in the two SST datasets were almost the same: 0.56 °C/century for ERSSTv4 and 0.57 °C /century for HadSST3. And although HadCRUT4v5 depends (via its use of the CRUTEM4 record) on a different set of land station records from GISTEMP and NOAA4.0.1 (both of which use GHCNv3.3 data), there is a great commonality in the underlying set of stations used.

Accordingly, it seems likely that differences in methodology may largely account for the slightly faster 1880-2016 warming in GISTEMP. Although the excess warming in GISTEMP is not large, I was curious to find out in more detail about the methods it uses and their effects. The primary paper describing the original (land station only based) GISTEMP methodology is Hansen et al. 1987.[2] Ocean temperature data was added in 1996.[3] Hansen et al. 2010[4] provides an update and sets out changes in the methods.

Steve has written a number of good posts about GISTEMP in the past, locatable using the Search box. Some are not relevant to the current version of GISTEMP, but Steve’s post showing how to read GISTEMP binary SBBX files in R (using a function written by contributor Nicholas) is still applicable, as is a later post covering related other R functions that he had written. All the function scripts are available here.

How GISTEMP is constructed

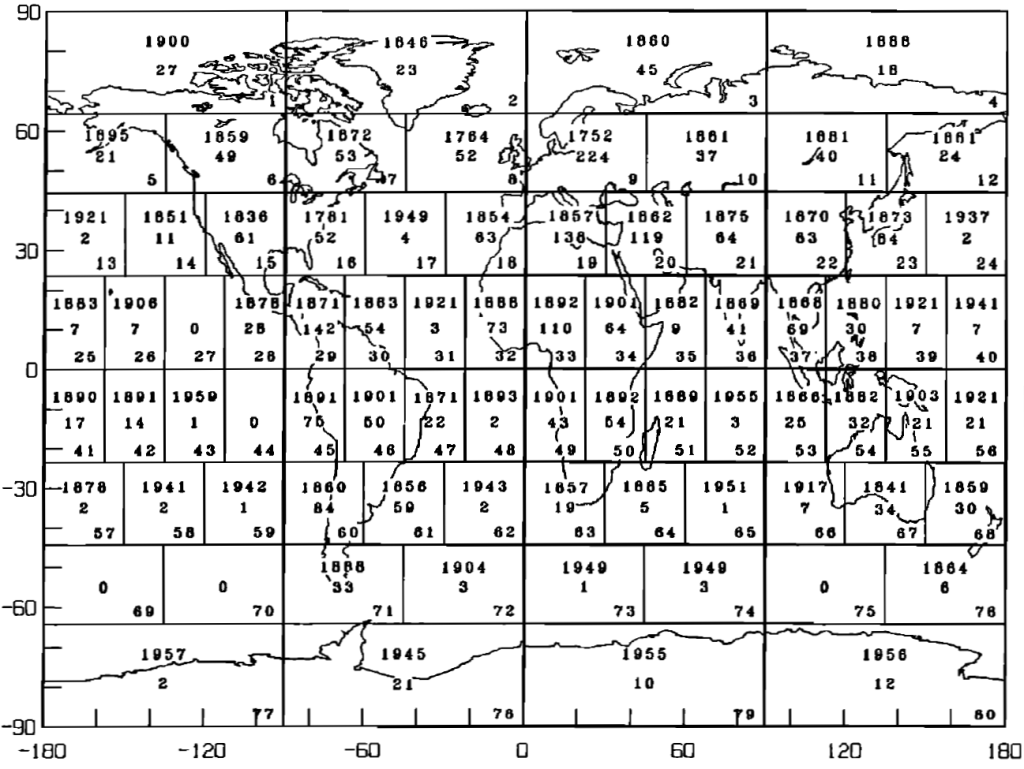

Rather than using a regularly spaces grid, GISTEMP divides the Earth’s surface into 8 latitude zones, separated at 0°, 23.58°, 44.43° and 64.16° (from now on rounded to the nearest degree). Moving from pole to pole, the zones have area weights of 10%, 20%, 30%, 40%, 40%, 30%, 20% and 10%, and are divided longitudinally into respectively 4, 8, 12 16, 16, 12, 8 and 4 equal sized boxes. This partitioning results in 80 equal area boxes. Each box is then divided into 100 subboxes, with equal longitudinal extent, but graduated latitudinal extent so that they all have equal areas. Figure 1, reproduced from Hansen et al. 1987, shows the box layout. Box numbers are shown in their lower right-hand corners; the dates and other numbers have been superseded.

Figure 1. 80 equal area box regions used by GISTEMP. From Hansen et al. 1987, Fig.2.

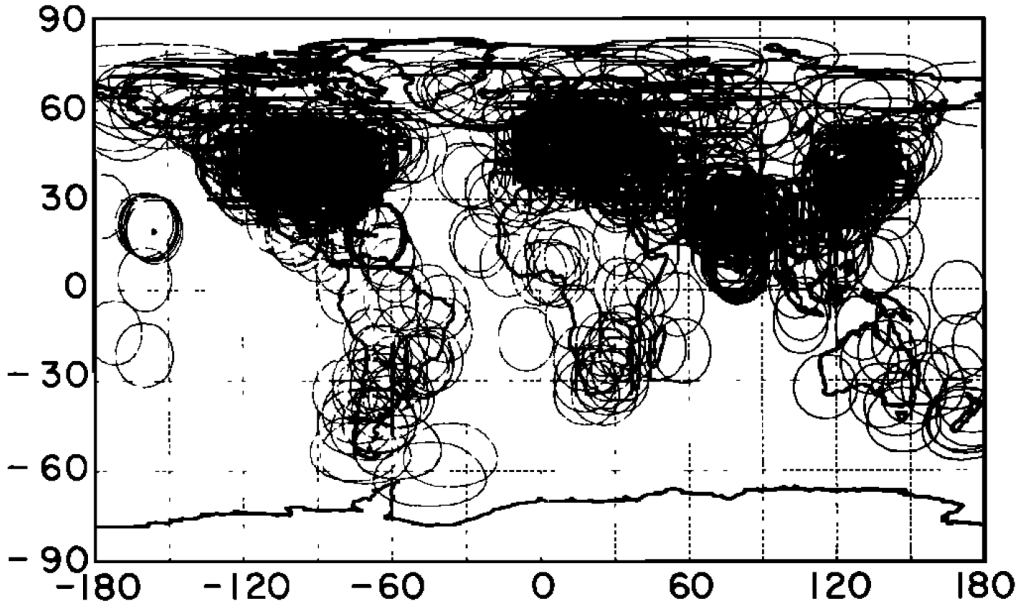

Where the distance from a land meteorological station to a subbox centre is less than a gridding radius (limit of influence), normally set at 1200 km, its temperature anomalies[5] are allowed to influence those of that subbox.[6] Figure 3, reproduced from Hansen et al. 1987, illustrates the areas thereby influenced in 1930 by the set of stations originally used. Almost all land outside Antarctica was by then within 1200 km of a meteorological station. Coverage was slightly poorer in 1900.

The 1200 km limit of influence was set to equal that at which the average correlation of annual temperature changes between pairs of stations falls to 0.5 at mid and high latitudes, or 0.33 at low latitudes. It is implicitly assumed that correlation of annual changes is indicative of similarity of trends, which may not be entirely accurate. Hansen et al. 1987 found no directional dependence of annual correlations, but while temperature trends have no general longitudinal dependence they do vary systematically by latitude.

Figure 2. Distribution of land stations and their 1200 km radii of influence in 1930. From Hansen et al. 1987, Fig.1.

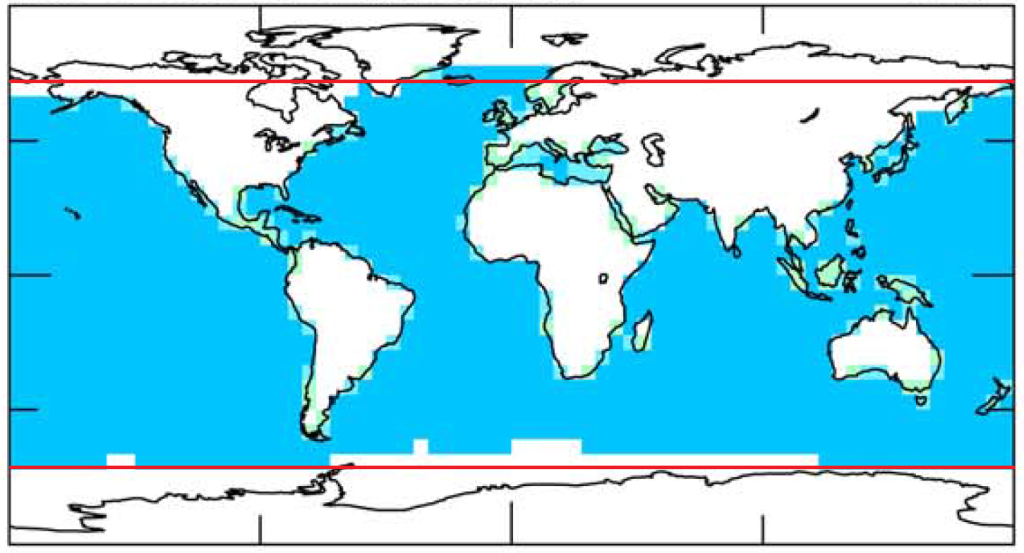

Subboxes in ice free ocean areas use SST data – and are therefore not subject to influence by land stations within the 1200 km limit – whenever it is available, provided that at least 240 months SST data exist and that at no time was there a land station within 100 km of the subbox centre.[7] Although ERSSTv4 SST data is complete in ocean areas, Hansen et al. 2010 stated that SST data is only used in regions that are ice free all year. The effective ocean area is on this basis reduced by 10%, to 64% of the global surface area, from its actual fraction of 71%. Although the Hansen et al. 2010 statement seems to be inaccurate,[8] in most calendar months SST data appears to be used only over a fairly small fraction of the ocean north of 60°N and south of 60°S.

Figure 3, reproduced from Hansen et al. 2010, shows the ice-free ocean area. The added lines showing the extent of the GISTEMP polar latitude zones. Their position indicates that temperature anomalies in those zones are dominated by land station data. The use of land station data to infill temperatures over sea ice hundreds of kilometres away appears to provide a preferable measure of surface air temperature to the use of equally distant SST data (or to setting the temperature in sea ice cells to seawater freezing point), provided the intervening sea ice cover is almost complete. Where, however, a significant proportion of the ocean surface in or near the grid cell concerned is open water, as in areas of broken sea ice, it is not clear that using land temperatures is appropriate.

Figure 3. Ice-free ocean area in which GISTEMP uses SST data. From Hansen et al. 2010 Fig. A3, with

lines (red) added showing boundaries of the northern and southern polar boxes latitude zones.

Records for each box are built up by combining records from each constituent subbox with data, equal-weighted, after first converting them to anomalies.[9] Records for each latitude zone are then built up from each constituent box, weighted according to the number of its subboxes with data.

A peculiarity of the GISTEMP method for combining land and ocean data is that their relative weight in each latitude zone, and hence the global, temperature anomaly time series changes over the record, as the availability of land station data varies. It also depends on the limit set for a land station’s influence. With a 1200 km limit variation in the relative land and ocean weights should be small after 1900 save in the southern polar latitude zone, but with a smaller limit the variation would be larger and the land weight might increase significantly over time. Prior to 1900 the land weighting may have been materially too low in the two tropical latitude zones, at least, even with a 1200 km limit.[10]

The GISTEMP global record was originally created by combining latitude zone temperature anomalies weighted in the same way. But in 2010 an important change was made. In subsequent versions of GISTEMP, latitude zone anomalies have been weighted by each zone’s area, even if it only has defined temperature changes over part of its area.

The relevance of the 1200 km limit of influence

Hansen et al. 1987 stated that using alternatives to the 1200 km limit on a station’s influence had no significant effect on global temperature changes. Hansen et al. 2010 stated more specifically that the global mean temperature anomaly was insensitive to the limit chosen for the range from 250 to 2000 km, and that the GISS Web page provides results for 250 km as well as 1200 km. In support of this insensitivity, it gave the 1900–2009 linear trend based change in global mean temperature as 0.70°C with a 1200 km limit and 0.67°C with a 250 km limit.

I was surprised that the GMST trend was not more affected by the limit on a station’s influence, and decided to examine the sensitivity for the current GISTEMP version. Unfortunately, GISS appears no longer to provide global mean LOTI data using a 250 km limit on their Web pages. However, very commendably, GISS makes available computer code to generate GISTEMP.[11] The code has recently been rewritten in the modern language Python and, although I am unfamiliar with that language, the code and procedure for running it are well documented and I found it simple to run and to modify parameters it uses.[12]

I checked my results, with the gridding radius set at the standard 1200 km, against those from output on the GISTEMP web pages.[13] The global trends were within 0.01°C/century of each other over 1880-2016, 1900-2009 and 1979-2016. The linear trend based change in GMST over 1900-2009 I obtained was 0.89°C, a remarkable 27% higher than that given in Hansen et al. 2010.

Global mean comparisons

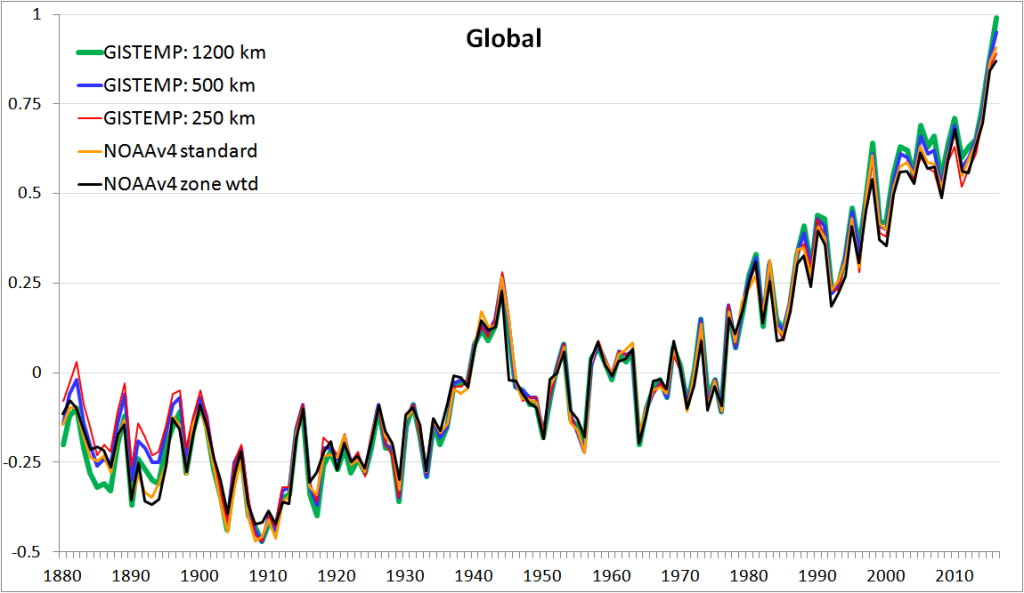

Figure 4 shows a plot of global temperature computed for GISTEMP using 1200 km (green) and 250 km (red) limits on land stations’ influence, and also on an intermediate 500 km limit (blue). It is relevant to show the NOAAv4 global time series (orange) for comparison, as like GISTEMP it is based on ERSSTv4 ocean and GHCNv3.3 land data.[14] Unlike the post-2010 version of GISTEMP, NOAAv4 area weights the temperature anomalies of all cells with data.[15] To provide a fairer comparison with GISTEMP, I have computed a NOAAv4 global time series (black) giving, as for post-2010 GISTEMP, a full area weight to each of the eight GISTEMP zonal latitude bands irrespective of how many of the cells in it have data.[16]

Figure 4. Global temperature anomalies (°C) for GISTEMP at different limits of influence, and for

NOAAv4.0.1 area weighted by grid cells with data (standard) or by 5° latitude bands with data

Although all the global time series follow each other closely over most of the record, there are clear differences in the first half century or so and over the last few decades. In the late 1800s, and to a modest extent during most of the 1915-1935 period, the GISTEMP 1200 km line tends to lie below the other lines, although the NOAA lines fall some way below it for several years in the 1890s. Contrariwise, in the late 1800s the GISTEMP 250 km line generally lies above the other lines, with the GISTEMP 500 km line next. Over the last few decades, the GISTEMP 1200 km line is generally the highest, followed by the GISSTEMP 500 km line. These tendencies are reflected in the linear trends for the different global time series over various periods, given in Table 1.

| Dataset / trend period | 1880-2016 | 1880-1950 | 1950-2016 | 1979-2016 |

| GISTEMP 1200 km | 0.72 | 0.37 | 1.42 | 1.72 |

| GISTEMP 500 km | 0.66 | 0.28 | 1.38 | 1.64 |

| GISTEMP 250 km | 0.62 | 0.22 | 1.30 | 1.52 |

| NOAAv4 standard | 0.68 | 0.37 | 1.35 | 1.62 |

| NOAAv4 zone weighted | 0.65 | 0.34 | 1.29 | 1.60 |

Table 1. Linear trends in GMST (°C/century) by dataset and period

The GISTEMP GMST trend I obtain over the full 1880-2016 record is 0.72°C/century when using a 1200 km gridding radius. When this limit of influence is reduced to 250 km, the trend becomes 0.62°C/century. So, using a 1200 km limit rather than 250 km now leads to a 16% higher full record trend, rather than the 4½% higher trend as reported in Hansen et al. 2010. Slightly over half the difference appears to relate to the early decades of the record. The average GMST anomaly over 1880-1900 was approaching 0.1°C warmer when a 250 km rather than 1200 km limit of influence was used. However, a substantial part of it arises over recent decades, when global land station coverage is much more complete. Over 1979-2016 the GMST trend is 1.72°C/century with a 1200 km limit of influence, but only 1.52°C/century with a 250 km limit. So, the claim in Hansen et al. 2010, the latest paper documenting GISTEMP, that the global mean temperature anomaly – and by implication the trend in GMST – is insensitive to the limit on a station’s influence over the range 250 km to greater than 1200 km is simply not true in relation to the current version of GISTEMP.

Examining temperature anomaly time series for the latitude zones where varying the limits of influence has the greatest effect provides some insight into the sources of the trend differences. It turns out that the largest contributions to differences in the 1880-2016 GMST trend come from the polar zones.

Southern polar zone comparisons

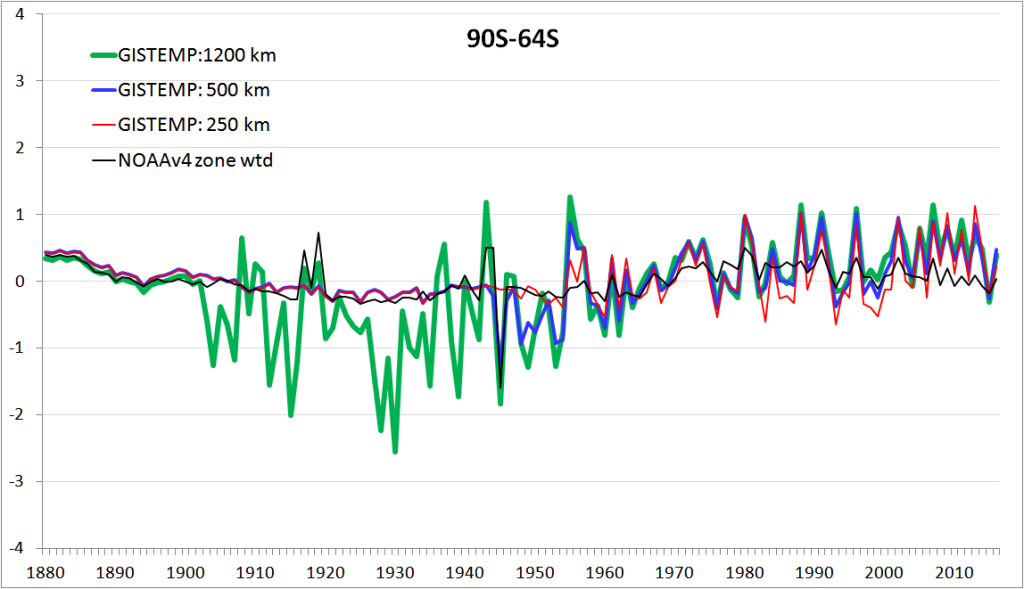

Figure 5 shows time series for the 90S-64S GISTEMP latitude zone at different limits of influence.

.

Figure 5. 90S-64S latitude zone temperature anomalies (°C) at different limits of influence (km)

The 90S-64S latitude zone time series at 1200 km influence limit is remarkable. The wild swings between 1903 and 1943, not exhibited to any extent when a limit is set at 250 km or 500 km, turn out to be caused by a single meteorological station, Base Orcadas, located in the South Orkney islands, some way outside this latitude band. The South Orkney Islands have a climate of transition to polar cold weather, not participating in the polar regime; weather conditions can vary markedly from year to year.[17] Despite it being at 60.7°S, the centres of 18 subboxes in the 90S-64S zone are within 1200 km of Base Orcadas. Although a station’s weight in a subbox declines linearly with distance of the subbox centre from the station, reaching zero at its limit of influence, in the absence of other data for the subbox the station’s influence does not diminish until the limit is reached.[18] As there were no other data for the 18 subboxes involved, their temperature anomalies were set equal to those of Base Orcadas. And because there were very few observations in this latitude zone until after WW2 – a smattering of ship SST readings in the ice-free ocean area during summer months – the Base Orcadas data dominated the southern polar zone temperature anomaly for the 1200 km limit time series over 1903-1943; its weight only fell below 33% in 1955.[19]

After working this out, I found that the influence of Base Orcadas on GISTEMP’s 90S-64S zone had been pointed out back in 2009.[20] However, at that time the effect on GISTEMP’s global time series was completely negligible, as until 2010 the weight given to each latitude zone in determining the GMST anomaly was proportional to the area in it for which there was data, which for the 90S-64S zone was only a small fraction of its total area prior to 1955. However, in 2010 GISTEMP switched to weighting each latitude zone by its full area irrespective of how many of its subboxes had data.

Are changes in temperature at Base Orcadas representative of those for the 90S-64S zone, which is dominated by continental Antarctica and the ocean adjacent to it? I very much doubt it. For a start, the correlations of annual temperature changes at Base Orcadas with those at stations in the interior of Antarctica (with records starting circa 1957) are low.

With Base Orcadas dominating them, temperature anomalies for the 90S-64S zone during the first decade or so after 1903 and during the 1930s were generally strongly negative. By contrast, those from data restricted by a 500 km or 250 km limit of influence were only weakly negative, which mirrors much more closely the behaviour of anomalies in the adjacent 64S-44S zone, where data was much less sparse. In my view, the standard GISTEMP methodology is unsuitable for application in the southern polar zone prior to the mid 1950s. While the influence of Base Orcadas on GMST trends is only minor, it is not completely negligible even over the entire record. If pre-1955 Base Orcadas data is removed, the GISTEMP 1880-2016 GMST trend, with a 1200 km limit of influence, falls by 0.01°C/century – over a quarter of the excess of the GISTEMP trend over that for the standard version of NOAAv4.

One other unusual feature of GISTEMP in this latitude zone is that it uses a reconstructed record for Byrd station in Antarctica, the only location in the interior of West Antarctica with a useful pre-1981 record. The reconstruction stitches together, without any offset, the records of two stations having somewhat different locations, construction and instrumentation, and whose records are separated by some years. This procedure, which produces a fast warming record for Byrd, is not in accordance with normal practice for temperature datasets. The reconstructed Byrd record is not used by in HadCRUT4v5 nor to my knowledge in any other dataset apart from the Cowtan & Way infilled version of HadCRUT4. However, its contribution to the GISTEMP global temperature trend is very small.[21]

Linear trends for the different 90S-64S time series over various periods are given in Table 2.

| Dataset / trend period | 1880-2016 | 1880-1950 | 1950-2016 | 1979-2016 |

| GISTEMP 1200 km | 0.60 | -1.54 | 1.25 | 0.71 |

| Ditto ex Orcadas pre 1955 | 0.27 | -1.30 | 1.32 | 0.70 |

| GISTEMP 500 km | 0.23 | -1.10 | 1.06 | 0.56 |

| GISTEMP 250 km | 0.10 | -0.75 | 0.72 | 0.94 |

| NOAAv4 zone weighted | 0.08 | -0.66 | 0.35 | -0.86 |

Table 2. Linear trends in 90S-64S anomalies (°C/century) by datasets and period

The GISTEMP 1200 km limit southern polar temperature trend over the satellite period, 1979-2016, during which Base Orcadas had limited influence, exceeded that using a 500 km limit.[22] A comparison with ERAinterim, arguably the best reanalysis dataset, suggests that the standard GISTEMP 1200 km limit version produced an excessive trend in southern polar latitudes since 1979.[23]

Northern polar zone comparisons

I now turn to GISTEMP’s northern polar zone. Figure 6 shows time series for the 64N-90N GISTEMP latitude zone. Here, there are noticeable differences between the various datasets in both early and late decades in the record. These differences are larger than for the southern polar zone, if one ignores the impact of Base Orcadas, despite data being less sparse in the northern than the southern polar zone, especially early in the record.

Figure 6. 64N-90N latitude zone temperature anomalies (°C) at different limits of influence (km)

The lower temperature anomalies up to 1900, relative to those over the stable period from 1903 to 1916, when using the 1200 km limit of influence are questionable. The NOAAv4 dataset weighted in the same way as GISTEMP shows a considerably smaller increase, as (to a lesser extent) do the 250 km and 500 km limit GISTEMP versions. The Cowtan & Way infilled version of HadCRUT4.5 warms only a third as much as the standard 1200 km GISTEMP version in this latitude zone between these two periods.

Linear trends for the different 64N-90N time series over various periods are given in Table 3.

| Dataset / trend period | 1880-2016 | 1880-1950 | 1950-2016 | 1979-2016 |

| GISTEMP 1200 km | 1.77 | 2.85 | 3.16 | 5.74 |

| GISTEMP 500 km | 1.60 | 2.61 | 3.02 | 5.44 |

| GISTEMP 250 km | 1.42 | 2.32 | 2.75 | 4.96 |

| NOAAv4 zone weighted | 1.11 | 1.68 | 2.39 | 4.34 |

Table 3. Linear trends in 64N-90N anomalies (°C/century) by datasets and period

The large divergence between all GISTEMP variants and NOAAv4 in the 64N-90N latitude zone almost certainly relates to the treatment of sea ice. In a non-infilled record like HadCRUT4, cells with sea ice have no data; their temperature anomaly is effectively treated as always equalling the mean for the region over which anomalies are averaged. In HadCRUT4 this is entire hemispheres, although it would be possible instead to average over latitude zones, as in GISTEMP.[24] In NOAAv4, the spatially complete ERSSTv4 ocean data is used in cells with sea ice. The temperature for such cells is set to -1.8°C, near the freezing point of seawater. In GISTEMP, as the ERSST SST data is flagged as missing in subboxes with sea ice, their temperature anomalies are set equal to those of any land stations with data within the 1200 km (or 500 or 250 km) limit of influence, on a distance-weighted basis where more than one such land station exists. The Cowtan & Way infilled version of HadCRUT4 effectively does much the same through its use of kriging.[25]

Insofar as temperatures over sea ice do reflect those of land temperatures within the radius of influence used, the NOAAv4 method can be expected to understate surface air temperature changes for cells with sea ice; this is also so (to a lesser extent) for the HadCRUT4 method.

It is unclear why the 1200 km GISTEMP version has warmed faster than the 250 km and 500 km versions in 64N-90N in recent decades. Possibly it reflects a higher weighting being given to the highest latitude land stations, and an even lower weighting being given to the very small ice free ocean area included in the zone. Comparisons with the ERA reanalysis dataset suggest that the GISTEMP 1200 km limit version produced realistic trends in arctic temperatures from 1979 until the late 2000s, with a slight underestimation of warming since then.23

Conclusions

The answer to the question originally posed, “How dependent are GISTEMP trends on the gridding radius used?”, is that they are much more dependent than claimed, with use of a 250 km, rather than the standard 1200 km, limit producing materially lower GMST trends over all periods investigated. That does not mean use of a 250 km limit produces a more accurate record. In my view, a 1200 km limit is in general preferable to a 250 km limit, although use of some intermediate value between 500 km and 1200 km might be best. In any event, a 1200 km limit is clearly unsuitable for use in the southern polar zone prior to the middle of the 20th century, since doing so results in unrepresentative temperatures at Base Orcadas – some way outside that zone – dominating temperature changes for the entire 90S-64S zone.

In principle, GISTEMP as currently constructed has several features that arguably make it more suitable for comparisons with global climate model simulations of surface air temperature than HadCRUT4 or NOAAv4, the most prominent other global temperature datasets.[26] GISTEMP:

- gives a full area weight to all latitude zones (unlike HadCRUT4)

- uses nearby land temperatures rather than SST to estimate temperatures where there is sea ice

- uses ocean temperature data that were until recently tied, on decadal and longer timescales, to marine air temperature rather than SST records.[27]

However, against this GISTEMP has a few problematic features that seriously detract from its suitability as a global temperature dataset. GISTEMP:

- fails to ensure ice-free ocean temperature anomalies are weighted by the area they represent

- uses a simplistic infilling method that sets cell anomalies equal to the weighted average of those for land stations up to 1200 km away, with no kriging-like reversion towards the mean with distance.

By contrast, the Cowtan & Way infilled version of HadCRUTv4.5 does not suffer from either of these shortcomings, while matching GISTEMP as regards all but one of its positive attributes identified for making comparisons with climate model surface air temperature data. And while HadCRUTv4.5, and hence Cowtan & Way, use HadSST3 for ocean temperatures rather than the marine air temperature data linked ERSSTv4 dataset, over 1880-2016 the two datasets have essentially identical global trends.[28] Although simulations by the vast majority of global climate models show that ocean surface (2 m) air temperature warms marginally faster than SST, it is not clear that their behaviour in this respect correctly reflects reality. Such models do not properly represent the surface boundary layer, within which steep temperature gradients exist; their uppermost ocean layer is typically 10 m deep. The fact that HadSST3 has warmed as fast as ERSSTv4 (and HadNMAT2) suggests that ocean surface air temperature may not actually warm any faster than SST.

If one is after a globally complete dataset for comparison with global climate model simulations, the Cowtan & Way infilled version of HadCRUT4 therefore looks a better choice than GISTEMP. Interestingly, it is only prior to 1890 and during the last decade that the Cowtan & Way GMST estimate systematically differs from the unfilled HadCRUT4v5 one; the two datasets’ 1890-2006 trends differ by under 1%. IMO, no infilling technique will be that successful prior to 1890 – there isn’t enough data to go on, and data accuracy is also an issue. It is much more plausible that HadCRUT4v5 understates warming since the early years of the 21st century, a period when the Arctic – where there are limited in-situ temperature measurements – warmed very fast. However, over the fifteen years to 2016 the HadCRUT4v5 and Cowtan & Way GMST trends, of 0.138°C /century and 0.160°C /century respectively, are equally close to the 0.149°C /century ERAinterim trend; the GISTEMP and NOAAv4.0.1 trends are both above 0.17°C /century.

Nic Lewis

[1] GISTEMP LOTI, combined land and ocean data: see https://data.giss.nasa.gov/gistemp/

[2] Hansen, J.E., and S. Lebedeff, 1987: Global trends of measured surface air temperature. J. Geophys. Res., 92, 13345-13372, doi:10.1029/JD092iD11p13345.

[3] Hansen, J., R. Ruedy, M. Sato, and R. Reynolds, 1996: Global surface air temperature in 1995: Return to pre-Pinatubo level. Geophys. Res. Lett., 23, 1665-1668, doi:10.1029/96GL01040

[4] Hansen, J., R. Ruedy, M. Sato, and K. Lo, 2010: Global surface temperature change. Rev. Geophys., 48, RG4004, doi:10.1029/2010RG000345

[5] Changes from the mean for the corresponding month over a reference period.

[6] Stations without, for at least one month, data for a total of 20 or more years are dropped.

[7] Hansen et al. 1996 states that “A coastal [sub]box uses a meteorological station if one is located within 100 km of the box centre.” But in fact, if there was at any time a station within 100 km GISTEMP throughout the record uses data from all land stations within 1200 km.

[8] It is unclear whether the GISTEMP code accurately implements this condition. The GISS-pre-processed ERSSTv4 data it uses appears to have had subbox SST data removed throughout the record but by individual month rather than for all year. I presume data was removed for all calendar months in which the subbox concerned contained sea ice in any year of the record. If SST data remain for at least two calendar months then the 240 months minimum data requirement will be met and SST data used for those calendar months. The presence of sea ice appears to be deduced from the subbox SST being cooler than –1.77°C. It is not evident that the ice-free condition was applied before 2010, but it was irrelevant when GISTEMP started using ocean SST data since then used dataset only covered 45°S–59°N.

[9] Curiously, monthly means over 1961-1990 are subtracted to compute subbox temperature anomalies, while an anomaly reference period of 1951-1980 is used when combining subboxes into boxes.

[10] Judging from the January 1886 land coverage for HadCRUT4 shown in Figure 5 of Morice CP, Kennedy JJ, Rayner NA, Jones PD, 2012. Quantifying uncertainties in global and regional temperature change using an ensemble of observational estimates: The HadCRUT4 dataset. J. Geophys. Res. 117: D08101..

[11] The data files it uses are GISS supplied, with sea-ice affected areas of ERSSTv4 data already masked out and the reconstructed Byrd record substituted for the original.

[12] The GISTEMP code is available via https://data.giss.nasa.gov/gistemp/sources_v3/. I used frozen data files from the provided input.tar.gz file, both for speed of processing and to ensure consistent results from different runs. The data files were dated 18 January 2017; slightly different results may be obtained if downloaded current data is used instead, as some pre 2017 values may have been revised. I ran the code on a 64 bit Windows 7 computer with the Anaconda36 implementation of Python, which includes required library modules, installed.

[13] File https://data.giss.nasa.gov/gistemp/tabledata_v3/ZonAnn.Ts+dSST.csv, downloaded 4May17. I also checked the trends produced by the Python code against those I calculated from a global time series produced by weighting each month the anomalies for individual boxes making up each latitude zone by the number of subboxes with data to give zonal anomalies and then combining these latitude zone anomalies, area-weighted. They were almost the same globally.

[14] NOAAv4 anomalies, which are relative to the 1971-2000 mean, have been restated relative to the 1951-1980 mean used by GISTEMP.

[15] In NOAA’s case, 5° latitude by 5° longitude grid cells, not equal area subboxes. However, cell anomalies are area weighted when combined to give zonal anomalies, so the difference should in principle be unimportant.

[16] To simplify the calculations, I reduced the monthly grid cell series to annual mean anomalies before rather than after combining them into zonal latitude bands and then a global time series. NOAA grid cells falling into two GISTEMP zonal latitude bands had their area weight split appropriately. As in GISTEMP, zonal anomalies were derived by combining on an area-weighted basis anomalies for all cells in the zone with data, but each zonal anomaly was given a full weight in computing the global anomaly irrespective of for what proportion of its area cell data existed. Note that a similar comparison is not given for other global temperature datasets since they do not use ERSSTv4 data.

[17] Argentina National Meteorological Service http://www.smn.gov.ar/serviciosclimaticos/?mod=elclima&id=68

[18] Since with only one data source the divisor in the calculation of the weighted subbox anomaly is the same as the weight given to that data source.

[19] Between 1945 and the mid-1950s, both 1200 km and 500 km limit 90S-64S zonal anomalies were also influenced by data from Esperanza Base station, located near the tip of the Antarctic peninsula, 1° outside the zone.

[20] https://chiefio.wordpress.com/2009/11/02/ghcn-antarctica-ice-on-the-rocks/#comment-1471

[21] The inclusion of the reconstructed 1957-2016 Byrd record nevertheless increases the 1957-2016 warming trend in GISTEMP’s 90S-64S region by approximately 0.25 °C/century, compared to when using the original Byrd and Byrd AWS records.

[22] Use of a 250 km limit gave the highest trend over the 1979-2016 period, due to it producing lower temperature anomalies in the 1980s and 1990s.

[23] Simmons, AJ et al., 2017. A reassessment of temperature variations and trends from global reanalyses and monthly surface climatological datasets. Q. J. R. Meteorol. Soc. 143: 101–119, DOI:10.1002/qj.2949. The comparison is for the a 30 degree latitude band around the pole.

[24] I did this in 2014 using 10° latitude zones; the effect on HadCRUT4 GMST trends was very small (zero over 1850-2013), indicating that sparse polar coverage in HadCRUT4 has not of itself led to any significant bias in GMST estimation.

[25] Although as the distance away from any station falls the anomaly for a cell with sea ice will gradually tend towards the global mean land anomaly.

[26] A potential understatement of warming arising from use of temperature anomalies when sea ice cover reduces has however been pointed out (Cowtan, K., et al., 2015: Robust comparison of climate models with observations using blended land air and ocean sea surface temperatures, Geophys. Res. Lett., 42, 6526–6534). This occurs even when sea ice anomaly temperatures are land-based, as the change to SST in temperature anomaly terms is generally less than the change in absolute temperature. However, bias arising from using temperature anomalies when sea ice cover is reducing is likely to be substantially smaller than suggested by the climate model simulations carried out by Cowtan et al., since the reduction in Antarctic sea ice extent simulated by climate models during the period over which they find a bias developing has not occurred in the real world.

[27] In ERSSTv4 ship sea surface temp (SST) measurements, on decadal and longer timescales, are adjusted to match movements in night-time marine air temperature data (per the HadNMAT2 dataset).

[28] As they do over the ice-free 60S-60N latitude zone, which is more relevant to their use by GISTEMP and Cowtan & Way.

357 Comments

The Berkeley Earth land/ocean dataset is also quite similar to the Cowtan and Way one, but has a bit better spatial resolution over land where it kriges individual stations rather than precomputed HadCRUT grid cells: http://berkeleyearth.org/data/

Thanks, Zeke; kriging based on individual stations makes sense, but I wouldn’t have thought it would make much difference on a global or zonal basis.

I believe BEST also homogenises land station data differently from HadCRUT4, and hence from Cowtan and Way.

It does, though the homogenization makes relatively little difference over land globally, at least since 1950 or so (though some regions like the U.S. and Africa are more strongly affected). HadCRUT4 also uses homogenized land data, but obtains it from national MET offices rather than via a consistant automated process.

Here are Berkeley and C&W anomalies over time: https://s3.postimg.org/m7o8ml0er/global_temps_1850-2016_berkeley_C_W.png

They have models to figure out how CO2 affects temperature which in turn is a modelled value and that model may be tuned to match the predictions that CO2 will affect temperature.

“Models All The Way Down” (TM) IPCC.

Thank you Nic. I remember John Daly puzzling over this a couple of decades ago.

Nic,

The paucity of data is evident in your plots, especially in the Southern hemisphere. If one wanted to compute

an approximation of the mean temperature (integral of T over the sphere above integral of the sphere), one would need a very fine equally spaced grid to do so accurately (I believe if T is smooth the actual accuracy could be computed using a standard local numerical error analysis). In any case the error using the current available observational data would probably be on the order of 100%, i.e., completely untrustworthy. Thus I find the arguments about global temperature trends nonsensical. And comparing those temperatures with global climate models that are based on the wrong reduced system of equations is pathetic. I am not saying there isn’t some global warming, just that the climate scientists science is not science.

Jerry

Plus, Jerry, they ignore instrumental resolution. It’s apparently a little known fact that climate thermometers are infinitely accurate.

The spatial estimate or prediction will always represent more precision than the observations. The prediction represents what you would have measured with a perfect sensor.

It’s not an average of the observations. It’s a prediction. Very different animal than yo u are used to.

And we actually test the prediction.

Citation please, not that Mosher ever, ever, exaggerates, but…

And of course, the esteemed Dr. Mann notes that predictions are not falsifiable. I must talk to my Astrologer about all of this.

Steve Mosher brings up the field of prediction, and changes from accuracy to precision. Since a thousand runs of a single GCM with similar initial and/or boundary conitions will yield a thousand different outcomes, how does the statement “The spatial estimate or prediction will always represent more precision than the observations” actually have any meaning?

I wonder what the rationale behind this statement is. Precision is a very different animal from accuracy, not to mention how it relates to measurement error and uncertainties. Estimates are different from predictions as well. I believe that the claim here by Steve Mosher is not supported by fact.

Pat Frank brings up the much claimed accuracy of observations, which is said to be high since there are thousands of measurements and by applying the well known formula you reduce measurement uncertainty to zilch. Apart from the inconvenient fact that the formula is not appropriate for the system being measured. The fomula states that if you have a number of independent measurements of a parameter, then the unceratinty of each measurment can be reduced in the average of all measurements by the inverse of the square root of the number of measurements. Insert “thousands of measurements” and the uncertainty reduces to almost nothing.

However, in the climate system this means you would have to have thousands of simultaneous measurments at each location, since the air mass being measured changes over time, both in composition and density. Composition is of course the main variable of these. Obviously, we do not have such a measurement system, and the claim of reduced uncertainty is thus bogus.

Instrumental resolution is not a precision problem. It’s a problem of the instrument itself unable to detect the difference between two external magnitudes.

The undetected difference is data lost forever. It remains an error in the record, forever.

Every single instrument will contribute its resolution error. It enters the record as an irreducible uncertainty in the measurement. No amount of averaging will ever remove it.

Instrumental resolution is the lowest possible limit of accuracy. It is entirely ignored in the published air temperature record; set aside.

Pat,

Do you have a contact for Roy Spencer?

Jerry

Hi Jerry — I’ve emailed it to your hxxxbb_at_cmcst account.

Actually Gerry, this is a good point. In areas where the stations are sparse, its equivalent to having a very large grid for the integration and errors in the integral could be large.

dpy

” errors in the integral could be large”

That’s just guessing. People actually work it out. It’s treated as coverage uncertainty in Sec 5.3 of Morice et al, 2012. It is a substantial part of the overall error quoted. I discussed in here, with tests here and here. It’s not nothing, but not huge.

dpy

” errors in the integral could be large”

That’s just guessing. People actually work it out. It’s treated as coverage uncertainty in Sec 5.3 of Morice et al, 2012. It is a substantial part of the overall error quoted. I discussed in here, with tests here. It’s not nothing, but not huge.

Nick, I’ve now approved both your comments that were stuck in moderation; sorry for the delay. However, they seem to be near duplicates?

If you have a problem with comments containing links getting stuck again, please post a comment asking for them to be released from moderation.

David,

“a short paragraph giving the essence of the argument that the mathematical error estimate is not applicable”

It comes back to my initial statement – you don’t have a derivative. I’m pretty sure that you and Jerry are thinking about integrating things determined by PDE. That tells you about derivatives – pressure gradient implies acceleration etc. But here we don’t have that. We just have a sampled field variable.

Numerical integration here basically makes an interpolation function based on the samples, and integrates that. So the question is, how good is the interpolation. The JB formulae would say, fit a polynomial based on the derivatives, and the error is attributable to neglected higher order terms. Here we can’t do that. The basis for saying interpolation is possible is correlation. That is why Nic’s post talks about 250km, 1200km etc. Correlation doesn’t have to work at all times (eg fronts) – it just has to work well enough on average for integration.

Jerry refers to his spherical harmonics example. I think that is instructive – I use SH integration extensively, and find it gives similar (good) results to triangle mesh. I posted here

moyhu.blogspot.com.au/2017/03/residuals-of-monthly-global-temperatures.html

a study of residuals, with a WebGL globe picture, especially of SH (following posts also are on integration accuracy). The number of SH is limited, because the scalar product is not an exact integral (unobtainable) but a product on the observation points, and so orthogonality fails. When the high-order SH start to have wavelengths on the order of gaps in the data, the failure is total (ill-conditioning) and you have to stop. When you do, the residuals are still large, and if you use a random model for them, you will deduce a large error.

But the residuals I show are clearly not random. They actually look very like a combination of the higher order SH’s that couldn’t be used. And the point is that, though the amplitude is large, those SH have zero (exact) integrals. The large residuals have been pushed by the fitting process into a space that makes almost no contribution to the integral.

Nic,

Thanks for releasing. The sameness is becauseI was exploring for the limit on links – I though three might work where four didn’t.

Thank you for the “here” links. They are priceless. I will try to keep things to a minimum.

In the first, you are heavily-focused on the standard deviation. The first laugher is this one:

“…So the spatial error is reduced by a factor of 8, to an acceptable value. The error of temperature alone, at 0.201, was quite unacceptable…”

The “error of temperature alone” was 1.69% of the mean value. The error for the mean anomaly was over 13%! It’s apples and oranges when you look at the magnitude alone. When you look at the relative error, it paints a different picture.

Then you go on and use area-weighting – which you apparently failed to implement correctly the first time (“Update: I had implemented the area-weighting incorrectly when I posted about an hour ago.”). You point out that, “Now I think it is right, and the sd’s are further reduced, although now the absolute improves by slightly more than the anomalies.” The absolute improved, yes…from 1.69% to 1.13%. But to say that it “improves by slightly more than the anomalies” is wrong. The standard deviation of the anomalies did drop from 0.025 to 0.016. But the mean value dropped from 0.191 to 0.101…so the error went from 13.1% to 15.8%! In whose world is that an improvement, other than yours? Yet you insist that, “For both absolute T and anomalies, the mean has gone up, but the SD has reduced. In fact T improves by a slightly greater factor, but is still rather too high. The anomaly sd is now very good.” The anomaly sd is only “very good” when you don’t look at it relative to the anomaly, and the absolute sd only looks bad when you don’t look at it relative to the absolute mean!

This was all for the 1981-2010 base, allegedly. You state that, “Does the anomaly base matter? A little, which is why WMO recommends the latest 3 decade period,” then “I’ll repeat the last table with the 1951-90 base.” Ok, great. Not sure why you’re going 40 years instead of 30. And then your table says…”Base 1951-80, area-weighted.” So which base did you really use? 1951-90? 1951-80? I can only assume the latter. But nobody in your echo chamber noticed this, and I am not convinced you calculated these property based on everything else I’ve seen. It seems amazing that the absolute T and it’s sd would be identical for those two 30 year periods – 12.102 vs 12.103 for T and 0.137 vs 0.136 for sd. Really? Back-to-back 30-year periods with a mean T difference of 0.001 and sd difference of 0.001? That doesn’t strike you as the least bit unusual? Or at least worthy of mention? Absolutely remarkable. But the anomaly was 0.620 for the 1st period and 0.101 for the 2nd? Not so sure.

Remarkably, your conclusion is that, “Spatial sampling, or coverage error for anomalies is significant for ConUS. Reducing this error is why a lot of stations are used. It would be an order of magnitude greater without the use of anomalies, because of the much greater inhomogeneity, which is why one should never average raw temperatures spatially.”

Again, the magnitude of the error is because the values being measured are more than a magnitude apart! The relative error of the absolute temps is an order of magnitude better than that of the anomalies for 1981-2010.

Repeat your exercise with T/100 as your parameter instead of just T, and you’ll get even smaller sd values in magnitude. Does that make it better? According to your logic, it does. Well then, how about T/100,000? T/1,000,000? Need I go on?

————————————————————

As for your second “here”…you took a look at one month and identified that the mean anomaly was close to 0.9 as you culled stations down to a count of 60. In some cases, the anomaly went up. In some cases it went down. You didn’t notice any bias. Wow, you really needed to run an exercise to tell you that? “An important thing is that the mean anomaly seems to remain fairly level at about 0.9°C. Culling obviously increases spread, but doesn’t seem to bias the result.” I started laughing and stopped reading after that “important” find. Was this an exercise for 7th grade algebra students?

“The “error of temperature alone” was 1.69% of the mean value. The error for the mean anomaly was over 13%! It’s apples and oranges when you look at the magnitude alone. When you look at the relative error, it paints a different picture.”

This is complete nonsense. It never makes sense to talk of the % of a temperature. There is always an arbitrary offset. The % of °C is quite different to % of K, yet it actually makes no difference which you use here. An anomaly subtracts off the mean, so of course the % is larger. This elementary fallacy permeates your comment.

“I can only assume the latter.”

Correct. The 90 was a typo (for 80). The meaning was obvious.

“Repeat your exercise with T/100 as your parameter”

Complete nonsense again. There was a factor of ten reduction in the magnitude of error. That is the same in any units.

“Wow, you really needed to run an exercise to tell you that?”

Yes. The second exercise, where SST was successively eliminated, did introduce a bias. We know that having fewer stations increases uncertainty. The fact that it doesn’t seem to introduce a bias is significant.

“…This is complete nonsense. It never makes sense to talk of the % of a temperature…”

Ummmm Nick, sure it does. People do it all of the time. It’s the relative error expressed as a percentage. It doesn’t matter if you’re talking measurements of temperature or widgets. Sure, you’ve got to be careful of misapplications. But you don’t need to express it as a percentage. You can just leave it as the ratio of the error to the mean if that makes you feel more comfortable.

“…The % of °C is quite different to % of K, yet it actually makes no difference which you use here…”

No it does not in this case (but certainly more appropriate to use with Kelvins). Relative error is not the end-all, be-all. But to just ignore it and immediately decry an error to be unacceptable on magnitude alone as you did, or proclaim that a method offered improvement when the relative error increased…pfffft.

“…Correct. The 90 was a typo (for 80). The meaning was obvious…”

The intended meaning was obvious. What period you based your calcs on had to be assumed. You’d already admitted mistakes on that page and weren’t fully convincing that even those had been fixed.

“…Yes. The second exercise, where SST was successively eliminated, did introduce a bias. We know that having fewer stations increases uncertainty. The fact that it doesn’t seem to introduce a bias is significant…”

That it didn’t seem to introduce a bias overall was to be expected. Again, I laughed and stopped reading after the first exercise. Oh, so eliminating sea surface temps introduced a bias in an additional exercise? Shocking. You mean land and SSTs aren’t in lockstep with each other? Who’da thunk it.

Nick,

Evidently you have never has a course in Real Analysis.

Relative norms are used all of the time to compute relative ((percentage)

errors between two functions in Banach or Hilbert spaces.

Jerry

Jerry,

“Relative norms are used all of the time”

You are talking more and more nonsense (and yes, I do have a PhD in maths with plenty of undergrad in Real Analysis). This is an elementary blunder. At issue is that I calculated a mean of station temperatures, 11.863°C, and sd. 0.201°C. I then calculated the mean anomaly, 0.191°C and showed that use of anomaly reduced the sd to 0.025°C. I think that is a big gain. But Michael J wants to argue, but no, the relative error in mean temp was 0.201/11.863 = 1.69%, and taking anomaly increases it.

This is, as I said, juvenile nonsense, and for reasons nothing to do with Banach etc. Relative error, or % is dimensionless. But here the temperature scale has an arbitrary offset. If I did the calculation in F (quite valid) the relative error is 0.362/53.35 = 0.68%. If I did it in kelvin, equally valid, the relative error is o.201/285 = 0.07%. It is a totally meaningless calculation.

I repeat, it never makes sense to take a ratio or logarithm of Celsius temperatures. Every Victorian schoolboy knows that.

Good try Nick,

When a function has a large mean you use the norm of the difference

(where the mean cancels out) divided by the norm of the function minus the mean. A simple example is the surface pressure where there are two digits that are constant with variations two orders of magniitude less. It is the accuracy of the variation of the pressure that one is interested in and including the mean in the denominator gives a misleading idea of the accuracy. If you read our 2002 manuscript then you see that this is the correct way to determine the accuracy of small perturbations on a large mean. Do you keep up on the literature?

Jerry

Jerry,

“If you read our 2002 manuscript then you see that this is the correct way to determine the accuracy of small perturbations on a large mean.”

You don’t seem to engage with the actual issues at all. These are not functions. They are measured temperatures, in °C. “large mean” is, well, meaningless. You can have any mean you like by rebaselining the temperature scale.

Nick,

You have forgotten that there are both continuous (integral) and discrete (summation) norms. Better go back and reread your real analysis text.

Jerry

Except they are not. You can test this. That would end rank speculation.

Nick,

I looked at your triangulation games . You have the same large number of knobs to turn as a climate model.

Give me a break. Where is the theory?

Jerry

Steven, It’s a mathematical point. The error in the integral is proportional to the maximum grid spacing times the first derivative of the function. At least that’s the rigorous bound. We have seen things like this in Antarctica where there have been papers that made these errors as I’m sure you know.

That’s wrong Young.

It is wrong. The key difference is that the integrand has only a finite number of known values (and no derivative). Any gridding will yield either very large grid cells, mixing inhomogeneous values, or smaller cells where some have no data points. It is the problem of dealing with those missing cells that creates most of the coverage uncertainty. GISS’ two-level system is a way of trying to get around this, but can only go so far.

There has been much work to estimate coverage uncertainty. Morice et al 2012 is much quoted.

whut,

I suggest you read a numerical analysis text on the approximation of an integral using a discrete number of points.

Or read my counter example in the text below. The mean is defined by the integral of the function over the surface of the sphere divided by the area of the surface, not a sum of a few points divided by the number of points. Numerical analysis tells us that the the numerical approximation of an integral for a smooth function requires a fine grid to be accurate. The more points the more accuracy. Note that temperature across a front is almost a discontinuity.

And that means you would need a huge number of grid points to compute the mean accurately.

Jerry

“And that means you would need a huge number of grid points to compute the mean accurately.”

But what is huge? No quantification here. I have investigated this extensively at my blog Moyhu. I can’t give links here, because that causes the comment to go into moderation – two of my comments have now been there for 3 days. But on April 5 2017 (see archive) there is just one of several studies (others linked) which show that the number of grid points is ample to establish an integrated monthly global mean reliably. And of course there are studies in the literature – I mentioned above Morice et al 2012, but it goes back to early Hansen.

Nick, Can you explain what the mathematical basis of your claim is? Forward error analysis might be nice. I think Gerry’s point is that in regions like the Southern hemisphere, where data is sparse, the normal mathematical analysis would yield a quite large error. The exact size depends on how smooth the function is.

An analogy you know well is aeronautical test data. Even a very large number of pressure measurements at discrete points give a very inaccurate estimate of global forces and moments, which are always measured by smart engineers separately.

David,

“The exact size depends on how smooth the function is.”

The exact size is important, but all we have here is hand waving. It isn’t like PDE solving, where you basically create the integration data. It isn’t even really like pressure testing, which I don’t think you would try to interpret without some mathematical model based on the physics of flow. You have just a number of isolated anomalies, and you have to have some basis for interpolating (and integrating the result) to get a global average. That basis is usually correlation.

I’ve referred to one method of estimating error (coverage uncertainty) which is subset selection. I spoke of a a post where I did this systematically for one month integration. moyhu.blogspot.com.au/2017/04/global-60-stations-and-coverage.html

Other methods are used. Morice et al used model results restricted to the measuring points. You can’t use methods that depend on an estimate of derivative – that is more uncertain than the integral. But you have to find some quantification.

Thanks for the response Nick, but could just give me a short paragraph giving the essence of the argument that the mathematical error estimate is not applicable? I usually avoid this area as it seems very complicated and not very interesting, but Jerry does seem to me to have a valid point that is pretty obvious and rigorous in its origins.

David,

I misplaced my response on the sub-thread above.

Nick,

Try your interpolation scheme or other gimmick on my counterexample and tell me what the mean is. Any interpolation method also relies on the smoothness of the function being interpolated and that smoothness is used by standard numerical analysis to determine the error. What is the numerical error in your interpolation method? The only person doing hand waving is you.

Jerry

Jerry,

“The only person doing hand waving is you.”

You have given no numbers relevant to the integration of temperatures on Earth. I have, and I have been putting this into practice on my blog, calculating the average from raw data every month for over six years now. I post this each month in advance of the others, and it agrees very well with what they get, by other means. And I have extensively analysed and tested the errors.

In terms of your “examples”, yes, one observation of a sinusoid in a period will give an unreliable mean. One hundred, randomly placed, on the other hand, will do very well. And I have dealt with your spherical harmonics example above, with link.

Nick Stokes, “And I have extensively analysed and tested the errors.”

It is to laugh.

Nic –

“If pre-1955 Base Orcadas data is removed, the GISTEMP 1880-2016 GMST trend, with a 1200 km limit of influence, falls by 0.01°C/century.” This does not seem consistent with the values of Table 2, which gives 90S-64S trend for 1880-2016 (1200 km) is 0.60 °C/century; sans Orcadas, 0.27 °C/century.

HaroldW – Table 2 is for the 90S-94S zone, which has an area and hence weight of is only 5% in the global mean. 5% of (0.60 – 0.27) is 0.0165 °C/century. That is still slightly higher than the GMST trend change of 0.01 °C/century (0.009 to 3 decimal places). The difference doesn’t seem due to the minor influence Orcadas on the 64S-44S. It might be because the GISTEMP code only outputs zonal and global temperature anomalies to 2 d.p., or to the data being converted from monthly to annula values before trends were calculated.

Thanks Nic. I misread the text, didn’t realize that it referred to global anomalies with and without Orcadas.

Welcome back Climate Audit!

Thanks for the work!

Question at Zeke, have you guys published your homogenization algorithm?

Back in 2013. Its available here: http://static.berkeleyearth.org/papers/Methods-GIGS-1-103.pdf

Published and tested in double blind studies. Nevertheless..folks continue to speculate. Speculation. Arm Chair science. Never touch the data just speculative thoughts.

Mosher,

I suggest you try this simple test. Generate a spherical harmonic function

using Spherepack with a reasonably realistic temperature in physical space (my guess is that any non constant function with several harmonics will be sufficient). Then use the locations of the observations to generate the mean temperature for the function and compute the error. What is the relative size of the error ?

Jerry

Mosher,

I assume you know what a counter example means. Assume you have a two pi periodic function that has a single observation at pi/2. The value there is 5. What is the mean of the function? Any answer you guess is wrong. And that is because the observations are not dense enough to correctly determine the mean. If there were sufficient obs to compute the necessary integral of the function accurately, then the mean could be computed. So much for the double blind (climate scientist) test.

Jerry

Gerald Browning,

Why generate climate data? There is plenty of synthetic data from models, reanalyses, or real data with global coverage that one can play with.

Like this example: Throw away 99.83 percent of the spatial information, and see if you can reconstruct the whole..

Do you still have doubts about sparse sampling? Do you believe that global warming in the long run can hide between those 18 dots?

Olof R,

You assume models are an accurate depiction of the atmosphere. They are not. The equations they are based on were derived for the troposphere (Charney) and are not applicable in the boundary layer. The boundary layer parameterization error destroys the accuracy of the model approximation of the tropospheric equations in a few days (see manuscript by Sylvie Gravel et al.). And that is assuming the hydrostatic equations are the correct ones which they are not (Browning and Kreiss 2002). The artificial diffusion in the models destroys the numerical accuracy

of the spectral method (Browning Hack and Swarztrauber) and I haven’t even begun to discuss the inaccuracy of the physical parameterizations (maybe you can explain why there are so many different versions).

Reanalysis data is a combination of these questionable models with obs data, i.e., the large errors in the models are included in that data.

As for the obs data itself it is essentially absent over the ocean (2/3 of the planet) and sparse over many land masses (especially the Southern hemisphere as can be seen in Nic’s plots).

Numerical analysts test various numerical methods on both simple and difficult analytic functions and then compute the errors. That is exactly how we found out how the excessive dissipation in the models is destroying the spectral accuracy (BHS).

I provided a simple analytyic counterexample in order to inform the reader that one must be wary of conclusions when the observations are sparse. I could easily make the example more complicated, but the point has been made in an easy to understand case.

Jerry

Olof R,

Please provide a citation or details of the method you used. I must admit I am tired of going thru these less than rigorous manuscripts only to find the gimmick that was used to obtain the result wanted by the author. Steve McIntyre

has done an excellent job on many of these tricks and that is why I so admire him.

Jerry

Jerry

“I provided a simple analytyic counterexample in order to inform the reader that one must be wary of conclusions when the observations are sparse.”

Your “analytical example” is trivial and pointless. Of course it is possible that an integral could be inadequately sampled. The question is whether in fact they are, and on that you have nothing useful to say. Olof’s example is not from models involving boundary layer parameterizations or any such. Nor is it from reanalysis. Nor is it based on observations which may be sparse over the oceans. He is using UAH V6 lower troposphere data measured by satellites. And his simple, relevant point is that by subsampling just 18 points, he gets essentially the same result as in integrating with the whole dataset. The same point I made with subset selection on the surface data with triangular mesh.

“What gimmick did you use?”

His process is perfectly straightforward, if you actually read it. I see no reason to doubt his method is valid.

“…The average is formed by area-weighting, which I prefer to think of as a spatial integration method…”

You “prefer?” Is it or is it not?

I “prefer” to think of infinity as my available funds.

Jerry & Pat

Re: errors. Have you included artificial anthropogenic warming/cooling (aka “fabrication”)? See:

Fabricating Temperatures on the DEW Line.

https://wattsupwiththat.com/2008/07/17/fabricating-temperatures-on-the-dew-line/

David,

Quite amusing and one more fact to support the case about questionable

data accuracy. Of course Nick and Olof will ignore this by not providing

error bars.

Jerry

Olof R,

Interesting that the many of the 18 points are in data sparse regions. Did you compute the means from the original obs data and if so how did you weight the individual obs? The method is just an interpolation of 18 points over large areas. What gimmick did you use?

Jerry

Nick,

Oh so now we have it. Let us talk about the accuracy of satellite data. It is well known that the inversion of the integral used to determine the temperature from the radiances is an ill posed process and very inaccurate over cloudy

areas. Now just how much of the earth is cloudy at any given time? And did he pick the 18 points to give him the answer he wanted? And how long has the satellite data been available?

Jerry

Jerry,

” Let us talk about the accuracy of satellite data.”

I’m sure you can go on forever about how we can’t know anything about anything. But in fact we’re talking about the accuracy of integration of satellite data. As to choice of 18 points, it looks like a regular grid with 120 spacing in longitude and 20 in latitude.

Satellite temperatures are no more accurate than about ±0.3 C. You can integrate all you like Nick, but they’ll never be better than that.

Jerry, global cloud cover is about 67%.

Pat,

I knew that the cloud cover is a large percentage of the earth. I was waiting for Nick to admit it. 🙂

Nick,

I looked at your web site. The triangulation of the surface of the earth can be manipulated to provide any result

you want because the answer will depend on the distribution and size of the triangles. And how many triangles (parameters) do you have? As many as Carter has liver pills?

I am waiting for a citation on a manuscript by you or Olof. Have Olof overlay his plots with those from IPCC

and plot the difference between so we can get a feel for the variation between the two.

Jerry

Pat,

Where did you obtain the accuracy of the sat data? As you know tomography is also an ill posed problem (inversion of an integral) but works for the large scale features because they have so many different angles ( and lots of radiation for the patient). My experience has been that the sat temp data only works reasonably well in clear skies and when there is a ground based measurement to anchor the result. There should be different errors for the clear and cloudy sky cases with and without a land based measurement?

Jerry

Jerry,

“The triangulation of the surface of the earth can be manipulated “

The triangulation is the unique convex hull of nodes, determined just by node location. There are necessarily approximately twice as many triangles as nodes. As to manuscripts, there are plenty of published indices, which you apparently dismiss. If you want to see overlaid results, I show them here:

moyhu.blogspot.com.au/p/latest-ice-and-temperature-data.html#Drag

The triangle result is called TempLS mesh, and you can compare it over any period with any of the regular indices. If you want to compare numbers, there are tables here, with different anomaly base periods:

moyhu.blogspot.com.au/p/latest-ice-and-temperature-data.html#L1

The different integration methods that I use are shown by pressing the TempLS button.

Troposphere temperature measurements use a microwave range which is little affected by clouds.

Hi Jerry, the accuracy I mentioned is a lower-limit estimate, based solely on the field calibration of the physical instrument itself. Other sources of error will add to that minimum.

Here’s a set of citations to temp sensor accuracy I posted once, elsewhere:

Radiosonde air temperature measurement uncertainty: ±0.3 C:

R. W. Lenhard, Accuracy of Radiosonde Temperature and Pressure-Height Determination BAMS, 1970 51(9), 842-846.

F. J. Schmidlin, J.J. Olivero, and M.S. Nestler, Can the standard radiosonde system meet special atmospheric research needs? Geophys. Res. Lett., 1982 9(9), 1109-1112.

J. Nash Measurement of upper-air pressure, temperature and humidity WMO Publication-IOM Report No. 121, 2015.

The height resolution of modern radiosondes using radar or GPS = 15 m = (+/-)0.1 C due to lapse rate alone.

That makes the lower limit uncertainty of modern radiosonde temperatures (inherent + height) rmse = ±0.32 C.

Satellite Microwave Sounding Units (MSU): ±0.3 C accuracy lower limit:

Christy, J.R., R.W. Spencer, and E.S. Lobl, Analysis of the Merging Procedure for the MSU Daily Temperature Time Series Journal of Climate, 1998 11(8), 2016-2041 (MSU ≈±0.3 C mean inter-satellite difference)

Mo, T., Post-launch Calibration of the NOAA-18 Advanced Microwave Sounding Unit-A IEEE Transactions on Geoscience and Remote Sensing, 2007 45(7), 1928-1937.

From Zou, C.-Z. and W. Wang, Inter-satellite calibration of AMSU-A observations for weather and climate applications. J. Geophys. Res.: Atmospheres, 2011 116(D23), D23113.

Quoting from Zou (2011) “Although inter-satellite biases have been mostly removed, however, the absolute value of the inter-calibrated AMSU-A brightness temperature has not been adjusted to an absolute truth [i.e., the accuracy]. This is because the calibration offset of the reference satellite was arbitrarily assumed to be zero [i.e., the accuracy of the satellite temperature measurements is unknown].”

The inter-satellite calibrations and bias offset corrections that are used to improve precision do not improve accuracy.

Infrared Satellite SST resolution: ±0.3 C

W. Wimmer, I.S. Robinson, and C.J. Donlon, Long-term validation of AATSR SST data products using shipborne radiometry in the Bay of Biscay and English Channel. Remote Sensing of Environment, 2012. 116, 17-31.

Land surface air temperature uncertainty,

Lower limit of measurement error: ±0.45 C (CRS LiG thermometer prior to 1980); ±0.35 C (MMTS sensor after 1980, but only in the technologically advanced countries).

Hubbard, K.G. and X. Lin, Realtime data filtering models for air temperature measurements Geophys. Res. Lett., 2002 29(10), 1425; doi: 10.1029/2001GL013191.

Huwald, H., et al., Albedo effect on radiative errors in air temperature measurements Water Resources Res., 2009 45, W08431.

P. Frank Uncertainty in the Global Average Surface Air Temperature Index: A Representative Lower Limit Energy & Environment, 2010 21(8), 969-989.

X. Lin, K.G. Hubbard, and C.B. Baker, Surface Air Temperature Records Biased by Snow-Covered Surface. Int. J. Climatol., 2005 25, 1223-1236; doi: 10.1002/joc.1184.

Sea Surface Temperature uncertainty: ±0.6-0.9 C for ship engine intakes:

C. F. Brooks, C.F., Observing Water-Surface Temperatures at Sea Monthly Weather Review, 1926 54(6), 241-253.

J. F. T. Saur A Study of the Quality of Sea Water Temperatures Reported in Logs of Ships’ Weather Observations J. Appl. Meteorol., 1963 2(3), 417-425.

SST uncertainty from buoys, including Argo: ±0.3-0.6 C:

W. J. Emery, et al., Estimating Sea Surface Temperature from Infrared Satellite and In Situ Temperature Data. Bull. Am. Meteorol. Soc., 2001 82(12), 2773-2785.

R. E. Hadfield, et al., On the accuracy of North Atlantic temperature and heat storage fields from Argo. J. Geophys. Res.: Oceans, 2007 112(C1), C01009.

T.V.S. Udaya Bhaskar, C. Jayaram, and E.P. Rama Rao, Comparison between Argo-derived sea surface temperature and microwave sea surface temperature in tropical Indian Ocean. Remote Sensing Letters, 2012 4(2), 141-150.

Those are all 1-sigma uncertainties and none of them represent random error. They do not average away.

Anyone who understands measurement uncertainty must conclude from the above published calibrations that the 95% lower limit uncertainty bounds for air temperatures are:

Surface air temperature: ±1 C

Radiosonde: ±0.6 C

Satellite: ±0.6 C

The climate consensus people never produce plots with physically real error bars.

The entire field runs on false precision.

Gerald Browning,

The 18 point subsampled UAH TLT are 18 gridcells (2.5×2.5 deg) in the center of 18 zones, 30 degrees heigh and 120 degrees wide. The points are area weighted by cosine of the latitude, which KNMI Climate explorer does automatically when I run the UAH field with such an 18 gridcell mask.

This was the first attempt. Next attempt was 36 points, filling the gaps in longitude between the first 18. This gave a clearly better result with reduced noise and a trend even more similar to the complete dataset.

Disclaimer, the chart was done in Nov 2016, so 2016 is only the year through Oct, but I dont think an update would change it very much.

I have done the same exercise with Hadcrut kriging through all 167 years. “18 points” surface temperature looks a little bit noiser than TLT, but there is no long-term bias compared to the complete global dataset.

Pat,

Do you trust the observational community (radiosondes, satellite, etc.) to be perfectly honest in their instrument accuracy estimates?

Jerry

Nick,

On your own website you show how changing the number, size, and location of the triangles changes the result. That is exactly what one would expect as it changes the weights in the calculation of the mean. No error analysis when you

have clearly shown that that you are tuning the result just as climate models do so you can provide the answer you want. Where is your reviewed publication in a numerical analysis journal if you are so confident in your scheme (or in any reputable journal)? Numerical analysts would immediately see thru your game just as I have.

Jerry

Hey Nick,

Here is a quote from your web site:

“The average is formed by area-weighting, which I prefer to think of as a spatial integration method. ”

So you are stating that it is an approximation of an integral with variable weights that you can control. Oops.

I dare you to submit this to a numerical analysis journal claiming that it is accurate for general integration

giving your choice of triangulation (weights) and an error analysis.

Also I wanted an overlay of Olof’s curves with those of IPCC during the same years, not yours.

Jerry

Jerry, my perception on reading the papers is that the people actually working to calibrate the instruments do an honest job.

That includes John Christy and Roy Spencer, though they never publish their T-series with the error bars they reported in the more technical literature.

The major problem comes with the people compiling the air temperature record (GISS, UEA, UKMet, BEST). There’s an entirely tendentious and universal assumption, openly stated, that all measurement error is random and averages away.

The SST people assume that the data from every “platform,” i.e., ship, has a single constant error distribution. It’s incredible. One gets the impression that none of these people have ever made a measurement.

Jerry

“On your own website you show how changing the number, size, and location of the triangles”

No, I show how culling the nodes changes the results. When you decide the nodes, the triangle mesh is determined as the convex hull. And I show how varying the culling modifies the results within a limited range.

“I dare you to submit this to a numerical analysis journal”

It would be rejected for unoriginality. It is bog standard finite element integration. Triangle elements with piecewise linear basis functions. It is also, as I say there, the 2D equivalent of trapezoidal integration.

Every numerical integral is a weighted sum of values at the quadrature points (OK, maybe derivatives too). And those weights represent the area (or volume etc) of something. The task of numerical integration is to find the right weights.

Nick,

You are trying to muddy the waters. Changing the nodes changes the triangles. Don’t use fancy language. Convex hull

just means that you connect the nodes with triangles so the enclosed domain is convex.

Anyone can start a blog and make claims about the results. It is only when the method is published in a reputable journal after review by 2 or more unbiased reviewers that the result is (possibly) acceptable.

The task is not to find the right weights. The weights for all well known and accurate numerical integration schemes (e.g. Gaussian quadrature) are well known. You are choosing triangles (nodes as you like) to obtain a result close to what you want, not for numerical accuracy. If for numerical accuracy of an arbitrary smooth function the triangles would not be clustered around observational sites.

I am still waiting for the citation for Olof’s manuscript.

Jerry

Jerry

“If for numerical accuracy of an arbitrary smooth function the triangles would not be clustered around observational sites.”

Again, we aren’t integrating an arbitrary smooth function. We are integrating a field function observed at a finite number of points which indeed were not chosen for optimal integration. They are the readings we have. The point of my culling is to de-cluster – to eliminate nodes in regions of concentration.

“Anyone can start a blog and make claims about the results”

Yes. You are making claims on a blog. I make substantiated claims.

“Convex hull just means that you connect the nodes with triangles…”

It means more than that. It means that the mesh is uniquely determined by node locations. But it also means that the mesh has the Delaunay property, which is important.

Nick,

Decluster is equivalent to tuning. You are not fooling anyone but yourself. I am trained in numerical analysis, partial differential equations, and have worked on climate models in my early career. I have published over 35 manuscripts in reputable journals that were reviewed by

reviewers that did not want the information to be revealed, but could not kill the mathematics. They spouted BS just like you and Olof, lots of talk and no mathematics or theory to back up the nonsense.

Jerry

Pat and Jerry See post above from: Fabricating Temperatures on the Dew Line

Interested in the topic, but the writing style makes it extremely hard to process.

This entire phrase is the subject of the first sentence: “Global surface temperature (GMST) changes and trends derived from the standard GISTEMP[1] record over its full 1880-2016 length”. An 18 word nominative phrase. You have to wander through that entire tortured set of words to figure out that this is just the subject and then a verb comes.

While the topic is technical, that does not mean the writing should be obscure.

May I suggest you compose a better sentence that conveys the same information? I am interested in what you would say instead.

“According to the GISTEMP record, the Earth has warmed faster in recent years than according to X record or Y record.”

That is a more reader-friendly topic sentence. You don’t have to overload the first sentence with every caveat and qualifier. After all, several sentences will follow.

Also, it is a bad idea to have such a long meandering phrase as a subject. The reader has to read far too long to see where the actual verb is in the sentence in relation to the subject (actually has to read forever to see that that whole phrase is acting as an 18 word noun). Has to parse 18 words and scratch skull to see that there is not an action taking place within that monstrosity.

Again, the topic is interesting. I would love to learn why the different temperature records differ. But the topic is poorly explained. Having to deal with that 31 word monstrosity of a first sentence to open an essay will just turn many people off. And it doesn’t have to be that way.

It has just been shown that an insufficient number of observations can lead to a mean that is completely wrong.

Thus the time series are not trustworthy to any extent and the arguments based on those series are nonsense.

Mathematical analysis cannot be refuted, though I am sure that climate “scientists” will try.

Jerry

David and Pat,

Does my counterexample prove the point we are trying to make. It is amazing how poorly educated some of the climate “scientists” seem to be.

Jerry

Davi and Pat,

The next piece of enlightenment is to have them understand that the climate and forecast models are based on the wrong set of equations. There is only one well posed reduced system of equations for a hyperbolic system with multiple time scales and for the atmospheric equations of motion that is not the hydrostatic equations. Minor problem. 🙂

Jerry