A couple of years ago, Anthony observed a gross discontinuity at Lampasas TX arising from a change in station location. Let’s see how the Berkeley algorithm deals with this gross discontinuity.

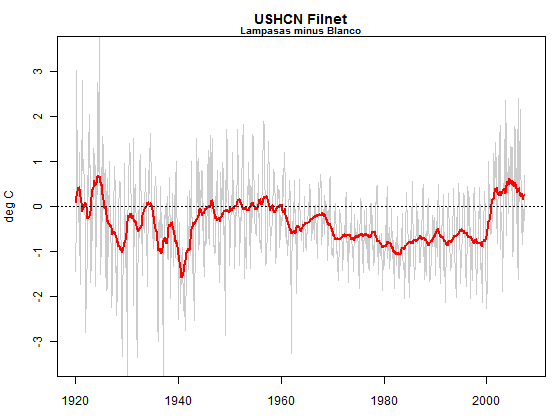

At the time, I discussed the difference between Lampasas and nearby Blanco under various USHCN (v1) versions, the one below using their “adjusted” (filnet) version. As Anthony had observed, the USHCN adjustment algorithm was unequal to the task of identifying a gross discontinuity.

Figure 1. Lampasas minus Blanco, USHCN v1 filnet

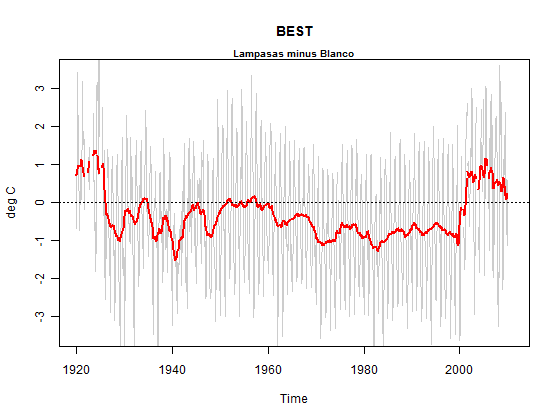

Here is the corresponding result from BEST. The BEST algorithm fails to pick up the Lampasas discontinuity. In fact, it’s hard to see precisely what the algorithm does other than introducing “off-centered” monthly normals. [Update – a reader points out below that the homogenization method appears to be applied to this data, i.e. this data might well be split in the homogenization step. This seems plausible to me.]

68 Comments

If this is the BEST…..

In engineering, it is common to have a set of standard pieces of data on which proposed methods will be evaluated. So in image processing and compression there are sets of standard images. Tone detection methods are tested against standard recordings – so-called talk-off tapes. Standards are set on the performance of methods against the standard data, and so repeatable evaluations are possible.

This is one area in which an IPCC-like body could be useful. It could set standards and select data against which proposed methods could be evaluated. For me, this would be much better than the current method of peer review.

Is there a list of sites that exhibit behavior that could be considered quality tests on these algorithms? A paper describing such a list would certainly be publishable.

Coincidentally, it looks like the BEST algorithm exaggerated the warmth in the post-2000 section of the dataset …

w.

This should be an example of where their “scalpel” method comes into play to break the dataset and – I guess – split the station into two, shorter records where the abrupt discontinuity is not considered in the analysis of trends.

From the “averaging process” paper:

“Rather than correcting data, we rely on a philosophically different approach. Our method has two components: 1) Break time series into independent fragments at times when there is evidence of abrupt discontinuities, and 2) Adjust the weights within the fitting equations to account for differences in reliability. The first step, cutting records at times of apparent discontinuities, is a natural extension of our fitting procedure that determines the relative offsets between stations, encapsulated by b_i, as an intrinsic part of our analysis. We call this cutting procedure the scalpel.”

…

“There are in general two kinds of evidence that can lead to an expectation of a discontinuity in the data. The first is “metadata”, such as documented station moves or instrumentation changes. For the current paper, the only “metadata” cut we use is based on gaps in the record; if a station failed to report temperature data for a year or more, then we consider that gap as evidence of a change in station conditions and break the time series into separate records at either side of the gap. In the future, we will extend the use of the scalpel to processes such as station moves and instrumentation changes; however, the analysis presented below is based on the GHCN dataset which does not provide the necessary metadata to make those cuts. The second kind of evidence requiring a breakpoint is an apparent shift in the statistical properties of the data itself (e.g. mean, variance) when compared to neighboring time series that are expected to be highly correlated. When such a shift is detected, we can divide the data at that time, making what we call an “empirical breakpoint”.”

…

“The analysis method described in this paper has been applied to the 7280 weather stations in the Global Historical Climatology Network (GHCN) monthly average temperature data set developed by Peterson and Vose 1997; Menne and Williams 2009. We used the nonhomogenized data set, with none of the NOAA corrections for inhomogeneities included; rather, we applied our scalpel method to break records at any documented discontinuity. We used the empirical scalpel method described earlier to detect undocumented changes; using this, the original 7,280 data records were broken into 47,282 record fragments. Of the 30,590 cuts, 5218 were based on gaps in record continuity longer than 1 year and the rest were found by our empirical method.”

We would expect this record (and the Detroit Lakes one, a case of a gap) to be split by this algorithm, which presumably is in the MatLab code….
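The gap rule in the quoted passage (cut wherever a station fails to report for a year or more) is simple enough to sketch. The Python below is an illustration only and is not the BEST Matlab code: the function name `split_at_gaps`, the `(year, month, value)` record layout and the 12-month threshold parameter are assumptions made for the example.

```python
def split_at_gaps(record, max_missing_months=12):
    """Split a monthly record into fragments wherever reporting stops
    for `max_missing_months` or more.

    `record` is a list of (year, month, value) tuples sorted by date,
    containing only the months that actually reported.
    """
    fragments = []
    current = []
    prev_index = None
    for year, month, value in record:
        index = year * 12 + (month - 1)  # running month count
        if prev_index is not None:
            missing = index - prev_index - 1  # months with no report
            if missing >= max_missing_months:
                fragments.append(current)  # close the fragment before the gap
                current = []
        current.append((year, month, value))
        prev_index = index
    if current:
        fragments.append(current)
    return fragments


# Hypothetical station: reports through 1950, is silent for 1951-1952,
# then resumes in 1953.
record = [(1950, m, 10.0) for m in range(1, 13)]
record += [(1953, m, 11.0) for m in range(1, 13)]
fragments = split_at_gaps(record)
print(len(fragments))
```

Each fragment would then be treated as if it were a separate station, which is why no reassembled "adjusted" series falls out of a procedure like this.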

Steve: Hmmmm… so you surmise that the station data provided here is prior to homogenization. I wonder why they didn’t also provide the homogenized data. Maybe it’s somewhere. I’ll note this in the post.

Steve, I think what they are saying is that corrected data is never created. Instead the fragments are regarded in the scalpel analysis as independent sites, with reweighting.

Steve: you’re probably correct in your interpretation. If so, given that off-the-shelf software in R gives the breakpoints and diagnostics, it’s too bad that they used homemade software which did not generate the diagnostics. Merely because they don’t reassemble the results doesn’t mean that the results don’t implicitly exist under breakpoint methodology.

True & it’s recorded enough to give figures about the 47,000 fragments they wound up with.

Steve & Nick

Re: “off-the-shelf software in R gives breakpoints”

David Stockwell describes testing for breaks in trends of global temperatures by applying

Would EFP / strucchange be useful to detect station move discontinuities, and the surrounding trends?

Steve: I used strucchange in a (rejected) 2008 submission on hurricanes (with Pielke Jr).

David, strucchange would work, although generally the candidate site is compared with a regional climatology composed of an average of neighboring sites, rather than with itself as strucchange does (eg Salinger, Torok).
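To make the neighbour-comparison idea concrete, here is a rough sketch in Python (not R, and not strucchange, the USHCN procedure or the BEST scalpel). It scans a candidate-minus-reference difference series, of the Lampasas-minus-Blanco kind, for the single split that maximises a two-sample t-like statistic. The function name, the minimum segment length and the synthetic data are all assumptions for illustration.

```python
import math
import random


def best_single_break(diff, min_segment=12):
    """Return (index, statistic) for the split of `diff` giving the largest
    absolute t-like statistic between the two segment means.
    `index` is the first position of the second segment."""
    n = len(diff)
    best_index, best_stat = None, 0.0
    for k in range(min_segment, n - min_segment + 1):
        left, right = diff[:k], diff[k:]
        mean_l = sum(left) / len(left)
        mean_r = sum(right) / len(right)
        # pooled variance of the two segments about their own means
        ss = sum((x - mean_l) ** 2 for x in left) + sum((x - mean_r) ** 2 for x in right)
        pooled_var = ss / (n - 2)
        if pooled_var == 0:
            continue  # degenerate noise-free case, skipped in this sketch
        stat = abs(mean_r - mean_l) / math.sqrt(pooled_var * (1 / len(left) + 1 / len(right)))
        if stat > best_stat:
            best_index, best_stat = k, stat
    return best_index, best_stat


# Synthetic difference series: noise plus a 1.0 degree step at month 120
random.seed(0)
diff = [random.gauss(0.0, 0.3) + (1.0 if t >= 120 else 0.0) for t in range(240)]
index, stat = best_single_break(diff)
print(index, round(stat, 1))
```

Because the maximum is taken over many candidate splits, the statistic cannot be judged against an ordinary t table; a real test needs critical values that account for the search.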

BTW, wouldn’t a strucchange method applied to a trending noisy series tend to reduce the trend, if adjustments to non-significant breaks are applied?

There is, however, another completely different strong source of bias that I am writing up now in the application to the Australian HQ dataset.

Are these algorithms tested to see if they do what they are meant to do and not something else? If so, how were they tested?

As far as I can tell, BEST never explicitly homogenise the data. Instead the “scalpel” is used to split inhomogeneous records into shorter homogeneous records.

So I suspect that they haven’t provided the homogenised intermediates you’re looking for because no such intermediates are created by their algorithm.

Steve: yes and no. they might not be calculated by the algorithm, but they might be implicit in the algorithm. Just a possibility. For example, Mann’s algorithms didn’t calculate weights but they were implicit and could be extracted.

There’s a changepoint algorithm in R that I used a few years ago in connection with hurricane data to test a changepoint when aerial surveillance came in. If the data has a huge number of discontinuities, I wonder whether new problems are introduced.

Steve, Last I talked to Muller was some time ago. At that time the datasets did not reflect the “split” data. I suspect that when the scalpel is applied that no intermediate step is output. I will confirm this next week.

I don’t get it. They haven’t provided the raw data, and now I find that they haven’t provided the homogenized data either. Does anyone know what this intermediate product we have in our hands actually is? Is it, as Steve suggests, just a GHCN data with weird monthly adjustments?

I don’t care about their bizarre adjustments. I want the raw data, the stuff before anything at all has been done to it. I thought that what they were releasing was the raw data, but it wasn’t, so I figured it was their results. It’s not that either.

So once again, it’s nothing but a bait and switch. No raw data. Nothing that we can back-calculate to get back to the raw data. And on the other hand, no results either.

Y’know, by now I should be beyond surprise about Muller, but no, the bugger always seems to have one more surprise up his sleeve.

Grrrr …

w.

Steve: Willis, I recommend that you take a valium. You’re jumping to conclusions. There is a huge file of “raw” data. Too big for me to read. So please don’t say that they didn’t provide raw data. Given that new methods are introduced, they should have provided intermediates and diagnostics, particularly given the unfortunate decision to use Matlab rather than R (where there would have been a better opportunity to generate intermediates). Nonetheless they are committed to providing a clear trail and I’m sure that they will do so.

Steve, if you are referring to the file “sources.txt” in the BEST zipfile, there are no temperatures recorded anywhere in that rather very large file.

I was only able to read the entire file because my new laptop has 8GB of memory 🙂 .

With less memory, one can see portions of the file as follows:

Open a connection to the zip file using the R function unz(), which can access a single file at a time from the zipped collection. Using readLines(), you can then read a smaller fixed number of lines, up to whatever will fit in the memory of your computer. The complete file took up about 3.5 GB of memory in R. When I tried to do a full read.table() on it, I ran out of memory!
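For anyone not working in R, the same read-a-slice-from-the-zip approach can be sketched in Python with the standard `zipfile` module. This is only an analogue of the unz()/readLines() recipe: the helper name `read_lines_from_zip` is made up, and the demonstration builds a small stand-in archive rather than touching the actual BEST zipfile.

```python
import io
import itertools
import os
import tempfile
import zipfile


def read_lines_from_zip(zip_path, member, start=0, count=1000, encoding="utf-8"):
    """Return `count` lines of `member` inside `zip_path`, skipping the first
    `start` lines, without holding the whole file in memory."""
    with zipfile.ZipFile(zip_path) as archive:
        with archive.open(member) as raw:
            text = io.TextIOWrapper(raw, encoding=encoding, errors="replace")
            # islice streams through the file and keeps only the requested window
            window = itertools.islice(text, start, start + count)
            return [line.rstrip("\n") for line in window]


# Demonstration on a small stand-in archive (file names are placeholders)
demo_dir = tempfile.mkdtemp()
demo_zip = os.path.join(demo_dir, "demo.zip")
with zipfile.ZipFile(demo_zip, "w", zipfile.ZIP_DEFLATED) as archive:
    archive.writestr("sources.txt", "".join(f"line {i}\n" for i in range(10000)))

chunk = read_lines_from_zip(demo_zip, "sources.txt", start=5000, count=3)
print(chunk)
```

Skipping to a late `start` still decompresses everything before it, so this saves memory rather than time.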

There is precious little information in that file as evidenced by the fact that it compresses to 2% of its original size when zipped. Virtually all of that is already in the “data.txt” file.

Willis, seriously take a chill pill

My apologies, but I thought that what you had provided was the 39,000 raw datasets, as you had described them as “each object being a time series of the station data beginning in the first year of data.”

Now it appears it is not the station data at all. It is some intermediate processed product. It also appears that they do not support the product that they have produced. cce comments below:

In other words … your conclusions about Lampasas and Detroit Lakes are meaningless, because of “known bugs”. They’ve already disowned the whole dataset due to problems like the ones in Lampasas and Detroit Lakes.

RomanM has indicated that the file you think contains the raw data may not contain temperatures at all. The BEST folks have pre-disowned the dataset, so all of your results vis-a-vis Lampasas and Detroit Lakes, they’ve already “moved on” from those. With huge fanfare, what they released turns out to be ready for the trash, unfit for us to use because of “known bugs” … and you advise me to take a Valium?

w.

BEST does not claim to output homogenized station histories, so I don’t know why people are looking for them. I don’t even think this is possible with the “least squares” method (or whatever it’s called). They detect discontinuities and then slice the series there, treating each segment as a separate station. They don’t try to put them back together again. They have not “moved on” or “disowned the whole dataset due to problems like the ones in Lampasas and Detroit Lakes.” That’s just making stuff up.

Thanks, cce. If saying “this release is not recommended for third party research due to known bugs” is not disowning the release, I don’t understand what would be. It seems to me they’ve released a dataset and then disowned it. What am I missing here?

w.

They have not disowned it. They are clearly telling you that it’s preliminary.

Which again brings up the question of why they had this big PR thingee when all they had was preliminary data and nobody (including peer reviewers?) could therefore check their work. Very convenient, eh?

Well, I’m on the record in 2010, before any of this started, suggesting that whoever redid the temperature series should publish the data and issue no press release.

From my perspective I’ve offered them help in getting the data out in a format that is easy for others to use, with complete documentation.

I think it’s unfortunate that they did PR. I think it’s unfortunate that people are throwing the baby out with the bathwater, especially when the release notes basically warn you away from drawing any conclusions.

I did about 4 hours work on the metadata, realized that the stuff was not ready for me to waste my time and went back to picking daisies.

what the hell do I know, just an english major, pass the poems.

Nobody’s throwing out anything. We’re just holding their feet to the fire and “gently suggesting” /sarc off that they live up to what it appeared that they’d promised. The authors are welcome to come here and explain their bizarre PR policy.

Nobody of any consequence on either side of the debate is EVER going to endorse BEST, BESTlike methods, or BEST data. No matter what they say or do.

Given their modus operandi, why should these people be given the benefit of any doubt? The history of science by press release is not great…remember Cold Fusion?

Steven, I don’t get it. If the dataset is inadequate for analysis … then what did Muller and the team use for their four papers?

w.

you are missing this

“The second generation release is expected to use the same format presented here and include a similar number and distribution of temperature time series. Hence the current release might be best used to prepare for future work.”

BEST is trying to practice release early, but unfortunately the community they are releasing to doesn’t have a clue how this works and BEST was not exactly clear on this. Muller talking to the press was also a mistake, especially on an alpha release. The files are released so that you can get a handle on the data formats and build your import. I got a similar release (albeit much smaller) some time ago. I had the sense not to expect the data to be usable at that point. That wasn’t the purpose of that release and it’s not the purpose of this release.

RTFM. which means read the release notes

Given the press releases and press blitz, I think that they should have had all their ducks in a row. Saying that there will be a better release in a couple of weeks seems weird to me – why not wait until the better release if it’s important? What’s so pressing that they had to go before they were ready?

IPCC deadlines

That may well be true.

But does it excuse?

I’m not in the business of excusing.

Then stop.

Steven Mosher,

I’m not sure why you think IPCC deadlines apply here. For AR5 WG1, a paper needs to be submitted by July 31, 2012, and published/accepted by March 15, 2013. So they’re not exactly up against the stops on those. Or are you suggesting that they’re trying to make the First Order Draft?

Judith Curry detailing her meeting with Muller after the publicity blitz:

“Second, the reason for the publicity blitz seems to be to get the attention of the IPCC. To be considered in the AR5, papers need to be submitted by Nov, which explains the timing. The publicity is so that the IPCC can’t ignore BEST. Muller shares my concerns about the IPCC process, and gatekeeping in the peer review process.”

That’s hilarious Mosher.

It is humorous to think of a movie script wherein a scientist who is about to discover a mechanism for faster-than-light travel publishes preliminary results in order to beat a deadline and as a result is discredited, thus depriving humanity of the ability to colonize the stars. As they say, truth is often stranger…

I keep asking … what deadline? I find no IPCC November deadline for submissions to WG1, but what do I know?

mosher, why didn’t BEST mention that the data appears to be mostly identical to Northern Hemisphere CRUTEM3?

http://www.woodfortrees.org/plot/crutem3vsh/from:2001/to:2011/plot/best/from:2001/to:2011/plot/crutem3vnh/from:2001/to:2011

I know it made the graphs more dramatic.

Only a few days after the announcement and BEST is already falling apart at the seams. That’s what happens when you race to the press before proper review (I won’t say peer review because that’s worse than useless).

Muller had a golden opportunity to do a proper reassessment and has fumbled the ball.

It’s a great pity, as I thought at the time, that you gave a warm welcome to the BEST analysis.

You can be quite sure that “warmists” will quote your original approval of BEST and not the subsequent posts.

Fight between Muller and Curry regarding BEST in the Daily Mail. Ross is referenced.

http://www.dailymail.co.uk/sciencetech/article-2055191/Scientists-said-climate-change-sceptics-proved-wrong-accused-hiding-truth-colleague.html

Judith Curry responds.

Apologies if this has been pointed out, but the download page for the processed and raw data files has the following message:

“Alert! We mistakenly posted the wrong text data file (TMAX instead of TAVG). We apologize for any inconvenience or confusion this may have caused. The correct files are now in place and may be downloaded below.”

Unfortunately there’s no indication when the mistake was spotted and the files were changed.

Steve: the TMAX data would be interesting as well. I just returned from Alabama and spent time with Christy. He’s a big proponent of TMAX as being less contaminated by local effects.

Is the data this analysis (and others) is based on the original or corrected data?

Yes, I noticed that also, when looking at some effects of correlation coefficients versus distance separation in temperature time series. Tmax and Tmin have rather different behaviour and Tmean can do a few odd things depending on how you calculate it. This is in reference to Fig. 2 of the Rohde et al. averaging process pre-release.

I got myself a copy of the Tmax dataset on 21.10.2011 at 9:32 am UTC and the new Tave set on 22.10.2011 at 11:14 am UTC. So you’ve got only a 26-hour interval to check out.

BTW the Tmax set was 194MB and Tave 252MB big.

Sent a quick email asking for that page to state when the corrected data was uploaded and asking for a rough idea how data is managed / versioned etc

==========

…

I note that the download page now has the message:

“Alert! We mistakenly posted the wrong text data file (TMAX instead of TAVG). We apologize for any inconvenience or confusion this may have caused. The correct files are now in place and may be downloaded below.”

Can you confirm when this error was corrected?

These things happen of course, but it would be useful if you could state explicitly on that page the date and time that the correct data was uploaded, and any quick way that a user can identify which version of the data they have – you can see how it is ripe for misinterpretation otherwise.

Could you also point me to anything that describes the procedures you have in place for managing versions of data sets or components of those sets (any versioning software, naming conventions, backup and recovery procedures etc.)

…

==========

Could @mosher point me in the right direction for any comments on the second point?

As Zeke points out over at Lucia’s, we are kinda in the middle of preparing a poster, so I don’t have much time to look at the BEST stuff. Zeke and I visited long ago. They gave me data files with a few hundred stations etc., basically enough to get the input routines done. I did that. Then there was the huge fight over the congressional testimony, communications tapered to nothing as they got busy on their stuff and I went off to pick daisies.

Thanks, I’ll wait to see if anything pops out of official lines. I’m encouraged by the headers in each of the data files that include a brief abstract, date and time and MD5# as a check / ID, so it looks as if they’re taking the traceability / versioning issues seriously.

I hate those headers, but the checksum is a good idea.
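On the checksum point: even without the headers, anyone can fingerprint the file they downloaded and compare notes, which would settle questions like "do I have the TMAX or the TAVG version?". A minimal Python sketch follows. It hashes the whole file; how the MD5 in the BEST headers is computed (for instance, whether the header itself is excluded) is not something I know, so treat the function and the stand-in files as assumptions.

```python
import hashlib
import os
import tempfile


def md5_of_file(path, chunk_size=1 << 20):
    """Return the hex MD5 digest of a file, reading it in chunks so that
    large data files do not need to fit in memory."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for block in iter(lambda: handle.read(chunk_size), b""):
            digest.update(block)
    return digest.hexdigest()


# Two stand-in files playing the role of two different downloads
demo_dir = tempfile.mkdtemp()
path_a = os.path.join(demo_dir, "download_a.txt")
path_b = os.path.join(demo_dir, "download_b.txt")
with open(path_a, "wb") as handle:
    handle.write(b"station 1, 2001, 1, 12.3\n")
with open(path_b, "wb") as handle:
    handle.write(b"station 1, 2001, 1, 18.9\n")

same_version = md5_of_file(path_a) == md5_of_file(path_b)
print(md5_of_file(path_a), md5_of_file(path_b), same_version)
```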

Is there anyone around qualified (as I am SO certainly NOT) to reprise the unit-root/random walk/stationarity analysis anonymous econometrician “VS” once did for (IIRC, CRUTEMP) now to the BEST data series?

It seems to me this applies to Doug Keenan’s question. Is the data susceptible to such a model or is it just as likely as not to be noise?

The file that records changes to metadata (such as documented and undocumented station moves) is station_changes.txt and is not present in current zip file. The README.txt explains the purposes of the files. This information might be included in the Matlab files.

In addition, there is a README.data file that says this:

***************

The current release is represents a first generation work product and some of the expected fields are currently missing (essentially reserved for future use) and there is some station metadata in the Matlab files that are not included in the Text files at the present time.

In addition, there are a number of known bugs and errors, including but not limited to mislocated stations, improperly coded data quality flags, duplicated and / or improperly merged records.

This release is not recommended for third party research use as the known bugs may lead to erroneous conclusions due to incomplete understanding of the data set’s current limitations.

However, a second generation merged dataset is nearly complete and is expected to eliminate a large number of known problems. This is expected to be released to the public within a few weeks through the Berkeley Earth website.

The second generation release is expected to use the same format presented here and include a similar number and distribution of temperature time series. Hence the current release might be best used to prepare for future work.

Some have critiqued the removal of data but the temp data is a box of old holey socks by which we hope the average sock is whole. If the algorithm were sensitive enough to remove the steps like the one above, a lot of good data might go too.

Off topic, I think there is a big error in the confidence interval calculation.

Lol! Love that analogy.

There has been NO warming since 2001 even with BEST data. Ergo: the UHI effect has reached its peak/flattened OR/AND there has not been (10 years) any warming and there is no current warming per se (There is now significant COOLING refer to AMSU). This and other discussions concerning past attempts to reconstruct temps are mind boggling…and in my view anyway a waste of valuable time/money.

Can someone make a “blinkie” out of these two graphs?

Is “… This release is not recommended for third party research use as the known bugs may lead to erroneous conclusions due to incomplete understanding of the data set’s current limitations.” designed to stop anyone checking their work?

No, it’s normally called an alpha release. Release early, release often … that’s not dating advice, that’s code release.

Code yes, DATA???????

I wonder how those temperatures in the 80’s, 90’s, and 00’s correlate with ENSO?

Gosh. Seems to me perhaps the BEST team can use some basic configuration management practice common to software projects. They can store all their files: data, program, documentation at some place like github.com. They can use versions to delineate their software releases. They and their colleagues and critics alike can work off the same repository.

That way, the confusion about what files go together can be minimized.

I know this is a basic question for this technical blog, (so please forgive me), but it is one that occurs to me almost all the time.

Does anyone know what it is about climate science and scientists that they seem incapable of what, I suspect to many scientists, most engineers, and many other disciplines is second nature and a fundamental requirement – namely proper and transparent data management?

I don’t think it is a basic question. There also seems to be a massive reluctance to use one of the most useful mathematical/computing developments of the seventies, ‘The Relational Database’. Even if it ended up being a database of one data table, I would find that far more accessible than constantly changing files with headers and whatever to manipulate; in fact, the files stop me from bothering in the first place. If you want a file in that format then it would be easy to (automatically) generate it from the database anyway. Changes could easily be handled by even the most basic of features, such as keeping the raw data constant in ‘Tables’ and then having multiple ‘Views’. And when you need to do more complicated processing you then have about 3 decades of computing development to probably provide exactly the feature you are looking for.

I’m obviously a database person, but it seems such an obvious thing for the ‘data’ to live in, as it is so flexible (and an industry standard for well over a decade) in what you can then do with the data.
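As a small illustration of the tables-and-views idea, here is a sketch using Python’s built-in `sqlite3` module with an in-memory database. The table name `raw_obs`, its columns, the view `annual_mean` and the numbers are all invented for the example; nothing here reflects how BEST actually stores its data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Raw observations live in one table and are never edited in place.
conn.execute("""
    CREATE TABLE raw_obs (
        station_id INTEGER NOT NULL,
        year       INTEGER NOT NULL,
        month      INTEGER NOT NULL,
        tavg       REAL,
        PRIMARY KEY (station_id, year, month)
    )
""")
rows = [(1, 2000, m, 10.0 + m) for m in range(1, 13)]
rows += [(1, 2001, m, 11.0 + m) for m in range(1, 13)]
conn.executemany("INSERT INTO raw_obs VALUES (?, ?, ?, ?)", rows)

# Derived products are views over the raw table, so they can be redefined
# or added to without touching the underlying data.
conn.execute("""
    CREATE VIEW annual_mean AS
    SELECT station_id, year, AVG(tavg) AS mean_tavg, COUNT(tavg) AS n_months
    FROM raw_obs
    GROUP BY station_id, year
""")

result = conn.execute(
    "SELECT year, mean_tavg, n_months FROM annual_mean ORDER BY year"
).fetchall()
print(result)
```

A flat text file in whatever layout is wanted can then be generated from a query like the last one.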

BEST is trying to practice release early, but unfortunately the community they are releasing to doesn’t have a clue how this works

Mosher

It’s a shame that you had to add this unnecessary dig to some otherwise useful comments. The reality is that if you choose the path that Muller et al. appear to have chosen then you should spend as much time as possible ensuring that misunderstandings are avoided / corrected.

I didn’t read it as an unnecessary dig but as a statement of fact.

I agree that Muller and team should have done better and I assume they know that by now. But ‘release early, release often’ is never as easy to practice as it sounds. I listened and chatted to a software friend at a conference on the weekend who I would consider at the leading edge of ReRo in London, both for startups and for government (which is an interesting story in its own right). He made the provocative suggestion that when programmers in a team commit their code to the main repository and all tests run successfully there should be a 25% chance that the changes go live, randomly and automatically. It was taken as a joke by many but I like the idea very much. Such are the wilder frontiers of the agile software movement using Ruby. But the broader point is that we’re all still learning about this stuff. So I read no snark in what Mosh said.

Why does the BEST plot have discontinuities in the 1920s that the USHCN data does not?

David Stockwell on Oct 31, 2011 at 2:53 PM you wrote in part:

QUOTE

There is, however, another completely different strong source of bias that I am writing up now in the application to the Australian HQ dataset.

UNQUOTE

I will be very interested to read your paper on AUST HQ as I have spent quite a lot of time looking at it.

Just a quick note on the Matlab issue: Matlab is used by many many people in engineering and biogeosciences. Also, it has a freeware clone called octave just like R is the S freeware clone. So if you get octave you should be able to run the code as is.

R is not just the S freeware clone. R has far outstripped its predecessor in available resources.

For statistical purposes, given the richness of R packages and the breadth of the user community, a Matlab release is sort of like releasing a video in VHS (yes, I’m overstating a bit, but I’m annoyed at their failure to provide a product in the form that would be useful to the community that they are supposedly trying to convince). All too typical academic unawareness.

In addition to working upon actual sites with known discontinuities – another approach to test the algorithm might be to use R noise (? – not sure what it is called) to generate some records and then edit the records to introduce breaks and discontinuities to see what the algorithm detects and produces…
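A test of that kind is easy to set up. The Python sketch below generates autocorrelated (AR(1)) noise, adds steps at known positions, and scores a list of detected breakpoints against the truth. Everything in it is an assumption for illustration: the function names, the AR(1) parameters and the 6-month matching tolerance. The `detected` list is made up and stands in for the output of whichever algorithm is being tested.

```python
import random


def synthetic_record(n_months=600, phi=0.3, sigma=0.5, breaks=None, seed=1):
    """AR(1) noise with step changes added at known positions.
    `breaks` maps month index -> step size applied from that month onward."""
    rng = random.Random(seed)
    breaks = breaks or {}
    series, noise, offset = [], 0.0, 0.0
    for t in range(n_months):
        noise = phi * noise + rng.gauss(0.0, sigma)
        offset += breaks.get(t, 0.0)
        series.append(noise + offset)
    return series


def score_detections(true_breaks, detected, tolerance=6):
    """Count hits (a detection within `tolerance` months of a true break),
    misses and false alarms. Each detection can match only one true break."""
    unmatched = sorted(detected)
    hits = 0
    for b in sorted(true_breaks):
        match = next((d for d in unmatched if abs(d - b) <= tolerance), None)
        if match is not None:
            hits += 1
            unmatched.remove(match)
    return {"hits": hits, "misses": len(true_breaks) - hits, "false_alarms": len(unmatched)}


truth = {200: 1.0, 420: -0.8}
series = synthetic_record(breaks=truth)
# `detected` would come from the algorithm under test; these values are made up
score = score_detections(truth, detected=[198, 300])
print(score)
```

Repeating this over many seeds and step sizes would show how small a break an algorithm can find and how often it cuts where nothing happened.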

Sorry to resurrect an old thread, but folks might find this interesting: http://berkeleyearth.lbl.gov/stations/27072
