Update (Jul 28, 2008): On Jan 18, 2008, two days after this article was posted, RSS issued a revised version of their data set. The graphics below are based on RSS versions as of Jan 16, 2008, the date of this article, and, contrary to some allegations on the internet, I did not “erroneously” use an obsolete data set. I used a then-current data set, which was later adjusted, slightly reducing the downtick in observations. On Jan 23, 2008, I updated the graphic comparing Hansen projections using the revised RSS version. Today I re-visited this data, posting a further update including the most recent months. While some commentators have criticized this post because the RSS adjustment reduced the downtick slightly, the downtick based on the most recent data as of July 28, 2008 is larger than the RSS adjustment as of Jan 2008.

In 1988, Hansen made a famous presentation to Congress, including predictions from the then-current Hansen et al (JGR 1988), online here. This presentation has provoked a small industry of commentary. Lucia has recently re-visited the topic in an interesting post; Willis discussed it in 2006 on CA here.

Such discussions have a long pedigree. In 1998, it came up in a debate between Hansen and Pat Michaels (here); Hansen purported to rebut Crichton here; NASA employee Gavin Schmidt, on his “private time”, supported his NASA supervisor, Jim Hansen, here; and NASA apologist Eli Rabett, believed to be NASA contractor Josh Halpern, weighed in here. Doubtless there are others.

It seems like every step of the calculation is disputed: which scenario was the “main” scenario, whether Hansen’s projections were a success or a failure, even how to set reference periods for the results. I thought it would be worthwhile collating some of the data, doing chores like actually constructing collated versions of Hansen’s A, B and C forcings so that others can check things – all the little things that are the typical gauntlet in climate science.

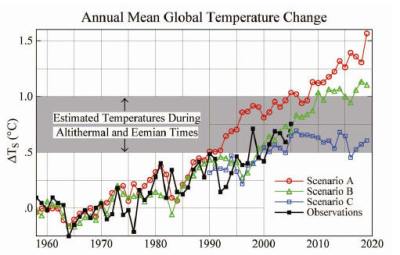

Here is my best interpretation of how Hansen’s 1988 projections compare to recent temperature histories.

I’ll compare this graphic with some other versions. On another occasion, I’ll discuss the forcings in Hansen et al 1988. First, I’m going to review the prior history of this and related images.

Hansen et al 1988

Hansen et al 1988 defined 3 scenarios (A, B, C), illustrated in the two graphics below taken from Figure 3 of the original article. Each scenario described forcing projections for CO2, CH4, N2O, CFC11, CFC12 and the other Montreal Protocol trace gases as a group. In subsequent controversy, there has been some dispute over which scenario was Hansen’s “primary” scenario. In the right panel, only Scenario A is taken through to 2050 and in both panels, Scenario A is plotted as a solid line, which could be taken as according at least graphic precedence to Scenario A. [following sentence revised at Jan 17, 2008 about 9 am] Despite the graphic precedence to Scenario A in the right panel graph, Hansen mentioned in the running text (9345):

Scenario A, since it is exponential must eventually be on the high side of reality in view of finite resource constraints, even though the growth of emissions (~1.5% per year) is less than the rate typical of the past century (~4% per year).

and then, inconsistently with the graphic on the right side showing only Scenario A out to 2050, said (p 9345) that Scenario B was “more plausible”, an aside that subsequently assumed considerable significance.

[Figure: left and right panels of Figure 3, Hansen et al 1988]

Hansen Debate 1998

In 1998, 10 years after the original article, in testimony to the U.S. Congress and later in the debate with Hansen, Pat Michaels compared observed temperatures to Scenario A, arguing that this contradicted Hansen’s projections, without showing Scenarios B or C.

[Update: Jan 17 6 pm] To clarify, I do not agree that it was appropriate for Michaels not to have illustrated Scenarios B or C, nor did I say that in this post. These scenarios should have been shown, as I’ve done in all my posts here. It was open to Michaels to take Scenario A as his base case provided that he justified this and analysed the differences to other scenarios as I’m doing. Contrary to Tim Lambert’s accusation, I do not “defend” the exclusion of Scenarios B and C from Michaels’ graphic. This exclusion is yet another example of poor practice in climate science by someone who was then Michael Mann’s colleague at the University of Virginia. Unlike Mann’s withholding of adverse verification results and censored results, Michaels’ failure to show Scenario B (and even the obviously unrealistic Scenario C) was widely criticized by climate scientists and others, with Krugman even calling it “fraud”. So sometimes climate scientists think that not showing relevant adverse results is a very bad thing. I wonder what the basis is for climate scientists taking exception to Michaels, while failing to criticize Mann, or, in the case of IPCC itself, withholding the deleted Briffa data. [end update]

In any event, in the debate, Hansen responded irately, arguing that Scenario B, not shown by Michaels, was his preferred scenario, that this scenario was more consistent with the forcing history and that the temperature history supported these projections.

Pat [Michaels] has raised many issues, a few of which are valid, many of which are misleading, or half truths, some of which are just plain wrong. I don’t really intend to try to respond to all of those. I hope you caught some of them yourself. For example, he started out showing the results of our scenario A, even though the scenario that I used in my testimony was scenario B…

I don’t have a copy of his testimony and can’t say at this point whether Scenario B was or was not used in the 1988 testimony.

[Update: The testimony is now available and Hansen’s statement that Scenario B was “used” in his 1988 testimony is very misleading: Hansen’s oral testimony called Scenario A the “Business as Usual” scenario and mentioned Scenario B only in maps purportedly showing extraordinary projected warming in the SE USA.

On the other hand, Hansen also testified in November 1987 (online here) and, in that testimony (but not in the 1988 testimony), he did say that Scenario B was the “most plausible”, though in a context that was over a longer run than the 10-20 year periods being discussed here. At present, I do not understand how the trivial differences between Scenario A and B forcings over the 1987-2007 period can account for the difference in reported Scenario A and B results; that’s something I’m looking at – end update]

In any event, Hansen argued in 1998 that real world forcings tracked Scenario B more closely and that warming was even more rapid than Scenario B:

There were three scenarios for greenhouse gases, A, B and C, with B and C being nearly the same until the year 2000, when greenhouse gases stopped increasing in scenario C. Real-world forcings have followed the B and C greenhouse scenario almost exactly. … and the facts show that the world has warmed up more rapidly than scenario B, which was the main one I used.

Here’s the figure from the debate materials:

Hansen and Schmidt

In one of the first realclimate posts (when they were taking a temporary respite from their active Hockey Stick defence of Dec 2004-Feb 2005), NASA employee Gavin Schmidt here tried to defend the 1988 projections of his boss (Hansen) against criticism from Michael Crichton. Hansen issued his own defence here, covering similar ground, citing the realclimate defence by his employee (Schmidt), done in Schmidt’s “spare time”. On the left is the version from realclimate updating the history to 2003 or so; on the right is the image from Hansen’s article, updating the instrumental history to 2005 or so.

[Figure: left, realclimate version; right, version from Hansen’s article]

NASA employee Schmidt said of his boss’ work (not mentioning in this post that both were NASA employees, although Schmidt’s online profile in the About section says that he is a NASA employee):

The scenario that ended up being closest to the real path of forcings growth was scenario B, with the difference that Mt. Pinatubo erupted in 1991, not 1995. The temperature change for the decade under this scenario was very close to the actual 0.11 C/decade observed (as can be seen in the figure). So given a good estimate of the forcings, the model did a reasonable job.

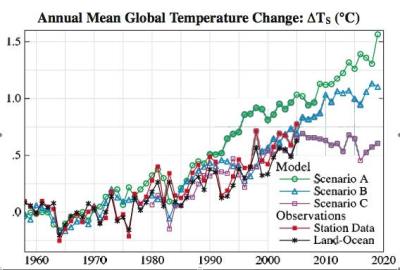

Hansen re-visited the predictions in Hansen et al (PNAS 2006) – this is his “warmest in a millllll-yun years” article – where he updated the temperature history to 2005, introducing a couple of interesting graphical changes. In this version, the color of Scenario A was changed from red (which is visually the strongest and most attention-grabbing color) to a softer green color. He also plotted two instrumental variants – the Land only and the Land+Ocean histories. Hansen argued that Scenario B was supported by the data and, continuing his feud with Crichton, asserted that his results were not “300% wrong”, footnoting State of Fear. NASA employee Schmidt loyally continued to support his boss in his “spare time” at realclimate: once more visiting the dispute in May 2007, he re-issued the graphic in substantially the same format as Hansen’s 2006 article, with further changes in coloring. It seems likely that Schmidt, as a NASA employee, had access to the digital version that Hansen used in his 2006 paper and, unlike us at CA, did not have to digitize the graphics in order to get Hansen’s results for the three scenarios. Schmidt stated:

My assessment is that the model results were as consistent with the real world over this period as could possibly be expected

Re-constructing the Graphic

While NASA employee Schmidt, in his “spare time”, has access to NASA digital data, we at CA do not. Willis Eschenbach digitized Hansen scenarios A,B and C to 2005 and I extended this to 2010. The three scenarios to 2010 are online here in ASCII format: http://data.climateaudit.org/data/hansen/hansen_1988.projections.dat

In the graphic shown above, I’ve compared the NASA scenarios to two temperature reconstructions: the NASA global series and the RSS satellite series (the CRU and UAH versions are not shown, to reduce the clutter in the graphic a little). The GISS temperature history has been used in the prior graphics and the RSS version has been preferred by IPCC and the US CCSP.

In order to plot the series, several centering decisions have had to be made. The GISS GLB (and other series) is centered on 1951-80, while the three scenarios were centered on the control run mean. The 1951-1980 means of the three scenarios, which included forcings for the period, were thus higher than the 1951-80 zero for the target temperature series by 0.1 deg C for Scenario A and 0.07 deg C for Scenarios B and C. The scenarios were only available in digital form for 1958-1980. For the GISS GLB series, there was negligible (less than 0.01 deg C) difference between the means for 1958-1980; 1958-1967 and 1951-1980.

In order to put the three Scenarios apples to apples with the GISS GLB temperature series (basis 1951-1980), I re-centered the three scenarios slightly so that they were also zero over 1958-1967. This lowered the projections very slightly relative to the instrumental temperatures. (Lucia recognized the problem as well and dealt with it a little differently.) I applied a similar strategy with respect to the satellite series, which did not commence until 1979. In this case, I re-centered it so that its 1979-1988 zero matched the 1979-1988 values of the GISS GLB series. This yielded the diagram shown above:
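The centering steps described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names (`period_mean`, `recenter`, `match_baseline`) are hypothetical, the numbers are made up rather than taken from the GISS, RSS or Hansen series, and the script actually used for the graphic may differ in detail.

```python
from statistics import mean


def period_mean(years, values, start, end):
    """Mean of the values whose year falls in [start, end], inclusive."""
    window = [v for y, v in zip(years, values) if start <= y <= end]
    if not window:
        raise ValueError("no data in the requested period")
    return mean(window)


def recenter(years, values, start, end):
    """Shift a series so that its mean over [start, end] is zero."""
    offset = period_mean(years, values, start, end)
    return [v - offset for v in values]


def match_baseline(years, values, ref_years, ref_values, start, end):
    """Shift a series so its mean over [start, end] equals the reference mean."""
    shift = (period_mean(ref_years, ref_values, start, end)
             - period_mean(years, values, start, end))
    return [v + shift for v in values]


# Made-up illustrative series (NOT the real data).
scen_years = list(range(1958, 1989))
scenario_b = [0.07 + 0.01 * (y - 1958) for y in scen_years]
surface_years = list(range(1958, 1989))
surface = [0.015 * (y - 1958) for y in surface_years]
sat_years = list(range(1979, 1989))
satellite = [0.02 * (y - 1979) for y in sat_years]

# Scenario re-centered to zero over 1958-1967.
scenario_b_centered = recenter(scen_years, scenario_b, 1958, 1967)

# Satellite series shifted so its 1979-1988 mean matches the surface series.
satellite_matched = match_baseline(
    sat_years, satellite, surface_years, surface, 1979, 1988
)
```

Both operations are pure offsets, so they change where a series sits on the vertical axis but not its year-to-year changes or its trend.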

Comments

I’ll talk some more about the forcings in a day or two.

I’ve shown 1987, the last year of data for Hansen et al 1988. The 2007 RSS satellite temperature was 0.04 deg C higher than the 1987 RSS temperature and there was substantial divergence between Scenario B in 2007 and the RSS satellite temperature (and even the GISS surface temperature series). Strong increases in the GISS Scenarios start to bite in the next few years. To keep pace, one must really start to see increases in the RSS troposphere temperatures of about 0.5 deg C sustained over the next few years.

The separation between observations and Scenario C is quite intriguing: Scenario C supposes that CO2 is stabilized at 368 ppm in 2000 – a level already surpassed. So Scenario C should presumably be below observed temperatures, but this is obviously not the case.

It should be noted that Hansen et al 1988 considers other GHGs (CH4, N2O, CFC11 and CFC12). Methane is a curious situation as methane concentrations may have stabilized – making the SRES methane projections in even the A1B case possibly very problematic.

References:

hansen_re-crichton.pdf

http://rabett.blogspot.com/2006/04/rtfr-i-rather-strange-push-back-has.html

1988_Hansen_etal.pdf

http://sciencepolicy.colorado.edu/prometheus/archives/climate_change/000771out_on_a_limb_with_a.html

http://rankexploits.com/musings/2008/temperature-anomaly-compared-to-hansen-a-b-c-giss-seems-to-overpredict-warming/

SPFtranscript.pdf

http://rankexploits.com/musings/2008/how-gavins-weather-vs-climate-graphs-compare-to-hansen-predictions/

http://sciencepolicy.colorado.edu/prometheus/archives/climate_change/001318real_climates_two_v.html

http://illconsidered.blogspot.com/2006/04/hansen-has-been-wrong-before.html

http://www.climateaudit.org/?p=796

201 Comments

Here’s a script to generate the above graphic. The script is online in ASCII form at http://data.climateaudit.org/scripts/hansen/hansen_1988.projections.txt if you have trouble converting Unicode signs here.

Hmm, curious. I suspect Hansen was feeling good about himself in 1998. His scenario B (and C to some extent) appeared to track and trend well with his predictions, but the following years, 1999 and 2000, likely gave him a few sleepless nights. Today’s comparison to observed is off the mark and heading in the wrong direction, which is where many will likely take the conversation.

However … despite my feelings toward the man, one must acknowledge his abilities to model (guess) climate well for approximately 15 years. I wish I could do the same for my retirement portfolio.

I had a related thought. It’s easy to “feel good about” one’s forecasts if one cherry picks time frames so that observed slopes are as steeply alarmist as one’s predicted slopes. As Steve M writes above, Hansen et al (1988) was published following a major El Nino. Meanwhile MBH98 was published following a monster El Nino. Based on the decadal pattern here (granted, n=2 cycles!) we can predict that the next major El Nino should result in another landmark publication where the hypothesis of an alarmist trend is resurrected. Shall we say … 2010? At that point GHG scenario C may start to look plausible again.

Thanks to Steve M and lucia and Willis for working up these graphics in the form of turnkey scripts.

Daniel Klein asked a few weeks back of Gavin Schmidt what it would take to falsify AGW. I think that dialogue is now relevant here. (If not, Steve M, feel free to delete.)

#529 of unthreaded #28

#549 of unthreaded #28

[I note that Daniel Klein has learned to genuflect after asking any critical question.]

And finally #553 from unthreaded #28:

It would be nice if we could keep this thread at a high level of civility and analytical correctness, and eventually get Gavin Schmidt to comment. I would really like some clarity as to how the ensemble of model runs is whittled down into a narrower subset without compromising the ability of the model to “span the full range” of “weather noise”. What are the REAL confidence intervals on those ensemble runs? You need to know that before you start comparing observed and predicted time-series.

The nested blockquotes that I used in #4 did not work at all and now that comment is a disaster.

Is it possible to fix this? Nested blockquotes used to work.

Steve: It doesn’t seem to anymore. I suggest italicizing the second nest of quotes. I tidied the above post for you.

In Gavin’s first plot he had just used the obs to 1998 which showed them falling between scenarios A and B. Then someone asked him (in the RC comments) to add the post 1998 data. He did so and, as you can see above, the obs then appeared to follow scenario C. A very short time after this there was a (coincidental?) publicized adjustment to the GISS data and you can see the effect on Hansen’s subsequent graph – the obs from 1992 onwards have been shifted up and they follow scenario B again. Shortly afterwards, Hadley then also made adjustments which brought their own graph back into line with GISS.

Yet he carried over the shaded area of the altithermal/eemian temps (listed as 6000 and 120,000 years ago, respectively). This flies in the face of the “warmest in a millllll-yun years” idea.

Using that shaded area seems to say that we are at least as warm (but not warmer than) as we were 6000 years ago.

And I’d still like to see which article he used as a reference to that idea.

#4 Bender

Many thanks for reprinting this very interesting and revealing interchange from RC, which I totally missed. I think Daniel Klein asked a really good question and in effect got Gavin to provide some confidence intervals around the projections. If I understand correctly, the models will have to be significantly rethought if the 1998 global temperature record is not exceeded by 2013, assuming no exogenous negative forcings. In adding an additional 5 years, I think Gavin is being a bit cute here and that the first statement indicating a decade is more reasonable – a math whiz here can probably come up with the difference in probabilities the additional 5 years makes. But more to the point, the decade estimate would mean that we would expect some significant reassessment of the models after THIS YEAR, 2008!! – prior to the next El Nino. (Your point in #3!) Is Gavin playing poker or doing science?

Hansen’s testimony should be available in the Congressional Record of the 100th Congress. Unfortunately the online version at Thomas starts with the 101st Congress, and the online version at the GPO starts with the 104th Congress. The index though is searchable and has two listings, D429 23JN and D469 7JY. There is a document for a hearing on global warming before a House subcommittee on Energy and Power that took place July 7 and September 22 1988. Its SuDoc Number is Y 4.En 2/3:100-229 and its item number is 1019-A or 1019-B (microfiche). My GPO search showed there were three other hearings that year that mentioned global warming in the hearing title, none that were on the 23rd of June.

If anyone has easy access, they could find these; some have been distributed to local libraries. Otherwise, they can be obtained through the GPO.

http://thomas.loc.gov/home/thomas.html

http://catalog.gpo.gov/F

Because we cannot tell what the real starting point for the projections/observations is …

… (is it 1958?, the 1958 to 1967 mean, 1984 (the last year of observation data Hansen says he used), 1987 (the last year of observation data Hansen would have had in 1988) etc. etc.) …

… this graph is susceptible to manipulation and whenever Gavin or Hansen or RealClimate plot it, they can make it look like the projections are very close to bang on.

As well, greenhouse gas emissions turned out to be between Scenario A and Scenario B (CO2 is closer to B but Methane is closer to C), so that also gives them some wiggle room.

I prefer using 1987 as the starting point. The 1987 RSS anomaly is +0.13C and the 2007 RSS anomaly is +0.16C. Twenty years later and lower atmosphere temperatures are only 0.03C higher.

I will send by email attachment the Hansen 062388 senate testimony transcript, written oral and attachments.

Steve: Received -thanks very much. These are now online here http://data.climateaudit.org/pdf/others/Hansen.0623-1988.oral.pdf and http://data.climateaudit.org/pdf/others/Hansen.0623-1988.written.pdf

He’s poking science.

I’m not sure why a volcanic eruption would automatically constitute a global cooling. I know a lot of ash and particulates (aerosols) are thrown up, but volcanoes also spew tremendous amounts of CO2, don’t they? If CO2 is so powerful why doesn’t the CO2 forcing override the aerosol effect? Maybe because CO2 forcing is logarithmic after all, and has very little effect at this point.

When, say, the Social Security Administration gives three projections of the system’s condition, ordinarily these are best case, worst case, and most likely case. So I think it’s reasonable to take Hansen at his word that case B was his most likely estimate, and not to read too much into his color choice.

Personally, I usually start with darkish blue since it looks nice in color and prints well in B&W. Then red, since it contrasts well with blue in color, and is a little lighter in B&W. Then green since it comes out a little paler than red in B&W. If I wanted to emphasize a line, I would try to make it the blue one, not the red one.

Steve: In mineral promotions, red is nearly always used to highlight the spot of interest.

Looks like maybe model variables were tweaked to create a best fit with observations. That yielded pretty good results for a while, but when the trend that was occurring at the time the original model scenarios were run began to change, his results diverged from reality. GCMs have limitations and the whole system is not understood well enough to model accurately, IMHO.

Re Glacierman in post 14

Perhaps what we see now is a climatological application of Goodhart’s Law?

Steve- Thanks for posting this. Here are some references that may be of some use for looking at the individual forcings in the scenarios:

Hansen, J., and M. Sato 2004. Greenhouse gas growth rates. Proc. Natl. Acad. Sci. 101, 16109-16114, doi:10.1073/pnas.0406982101.

http://www.pnas.org/cgi/content/full/101/46/16109

Most recent data on forcings:

http://www.esrl.noaa.gov/gmd/aggi/

Glacierman,

I think you are correct, bender said as much in #3. That said I doubt anyone has modelled the variability of the stock market as closely as Hansen has modelled the variability of Earth’s climate. Perhaps Ross could say a word or two about that.

I am not a fan of Hansen, he has many apologists, but strip away his bluster and political activism, you have a very smart individual and respected (in some circles) scientist. If he were not deviously clever, we would not be talking about him.

In many ways, Hansen (the so-called Shepherd of AGW) reminds me of Stephen Jay Gould (the controversial palaeontologist). Gould was to evolutionary psychology, as Hansen is to GW scepticism. He would infuriate his critics with his essays, books, and pet theories, but after he passed, his critics longed for his sharp mind.

RE #13,

Here I thought gold was their preferred color! ;=)

Another posting by NASA employee Gavin Schmidt defending Hansen, not mentioned in the above, was on December 2, 2004 here http://www.realclimate.org/index.php/archives/2004/12/michaels-misquotes-hansen-again/ , which appears to be Gavin’s very first post at realclimate (Dec 1, 2004 is the date of the realclimate introduction post.)

Gavin posted his defense of his boss in his “spare time”, which in this case occurred in the middle of a 9 to 5 Thursday. I wonder if Gavin booked Dec 2, 2004 off for “personal business”.

If you browse http://www.realclimate.org/index.php/archives/2004/12/ you’ll see how much effort they were then putting into preemptive strikes defending the HS ( MM2005 was then about to be published) and you’ll see why John A convinced me that I needed to try to defend myself. Thus, CA was born a couple of months later in Feb 2005 (carrying over a few website comments).

#13 In nature red is the universal color of alarm. Some smart googler could probably find a Nature paper on the topic.

Steve:

I believe these are the actual links

http://data.climateaudit.org/pdf/others/Hansen.0623-1988%20oral.pdf

http://data.climateaudit.org/pdf/others/Hansen.0623-1988%20written.pdf

I think there was a typo.

Comments on Hansen 062388 Testimony

Hansen bluntly says to the Senate that Scenario A is “Business as Usual”. In the first part of the talk, he talks about global climate. He then goes on to talk about summer heat spells. (This may have been a hasty concoction, as on that day in the summer of 1988 it was extremely and unusually hot in Washington DC.)

The written documents submitted with the oral talk follow one another closely as to content. A preprint of Hansen et al 1988 is included, along with the three viewgraphs that were presented.

As far as the “summer heat wave” section of the talk, Hansen discusses maps – not graphs – which have as the underlying basis, Scenario B. This is where all of the vague beliefs about “Hansen only talked about Scenario B” originate from.

Ten years later, when Michaels gave his Congressional presentation, he was addressing the primary prediction made by Hansen in 1988. That of course had nothing to do with the sensational “summer heat waves” talk of ten years prior. There is of course no discussion of maps.

Rather it was on the primary topic – global warming. Michaels was dead on correct to note that Hansen’s prediction of 0.4C temperature increase was wildly incorrect.

Hansen’s phrase to the Senate was exactly as Michaels clearly stated, “Scenario A was business as usual”.

The smearing of Dr. Pat Michaels was completely unjustified. Michaels presented the relevant prediction, Scenario A, “business as usual” in 1998.

#21 Interesting. He thought he could fool the politicians by cherry-picking his endpoint, 1987-88 – a choice, due to El Nino, that grossly exaggerates his trend. (Look what happened in 1989: La Nina.) He is smart enough to know what he was doing, in terms of creating a statistical distortion. He was sounding the alarm, promoting an activist agenda. And does anyone see any mention of a “precautionary principle”? All I see are distorted facts.

In 1988, Scenario A is the status quo scenario – Hansen’s “business as usual”. Therefore, the plain language interpretation is that assuming that nothing changes this is what Hansen’s models predict. The charts show that actual observations, whatever their flaws, immediately began to deviate from this scenario. This seems to be a case for rethinking the models and explaining how and why the physical assumptions underlying the model need to change.

Moreover, since observations now appear to track Scenario C – a drastic reduction in GHG – and there appears to have been no such reduction, then again the assumptions underlying the model need to be made explicit and re-assessed.

Is it all this simple?

Finally, a lot seems to depend on treating 1998 as part of a trend rather than an aberration. If that one year was dropped or replaced with a 5 year mean, what would it do to the trends? This is another version of when will 1998 be surpassed. If it is not surpassed in the near future then it looks more like an anomaly.

#15

Def. of Goodhart’s Law: “When a measure becomes a target, it ceases to be a good measure.”

I agree with Ian #17, he is a very smart guy. Smart enough to know how to make a trend seem as dire as possible by picking dates. If he is right, then placing activism and alarmism above scientific principles will make him a hero. But I believe that his actions are actually fueling the counter case to what he has been advocating. If he/they are so accurate and correct, their work should stand up to any scrutiny.

Thanks Mhaze.

I do not see any mention of a “preferred” outcome. Nor is there a “worst case”, “expected”, “best case” organization in either oral or written testimony. Instead, A is presented as business as usual, what would happen if the trace gasses continue growing at an exponential rate, B is some sort of reduced linear growth and C is drastic curtailment of emissions with everything cut off by 2000.

A is clearly our not doing anything. Going back 10 years later and saying B fits the data is different than highlighting what happens if we don’t do anything. If he wants another re-edit, he could claim he saved us from A. Isn’t he arguing now that the reason that we are seeing C is that the MDOs are covering up the growth?

Steve

Did you hear Gavin Schmidt’s statements on the acceleration of global warming, tipping points etc on PBS Radio yesterday morning? PBS had him on for a whole hour

http://wamu.org/programs/dr/08/01/16.php#18143

I’ve been browsing some of the controversy over Hansen’s projections. NASA employee Gavin Schmidt said:

As noted above, in his debate with Pat Michaels, Hansen stated:

I agree entirely with Mhaze characterization of Hansen’s 1988 testimony, now available online at CA. Hansen said in his testimony:

Scenario B is only mentioned in the following context in the oral presentation:

This regional prediction is worth re-visiting in itself – it seems to me that our USHCN discussions have shown that USHCN temperatures have increased more in the west than the southeast (where, as I recall, there has been cooling.)

Hansen said in 1998:

Yes, he used Scenario B to illustrate regional effects, but there is no indication in his testimony that he regarded Scenario B as the “most plausible”. But the transcript shows that he identified the “business-as-usual” case as Scenario A.

I wonder how NASA employee Gavin Schmidt, in his “spare time” of course, would characterize Hansen’s 1998 statement on a scale ranging from “simple confusion” to “pure dishonesty”.

Sorry for the digression, but is not the difference in Hansen’s scenarios merely the model boundary conditions (primarily, the change in ambient GHG concs over time)? Aside from the arguments over who is being dishonest about the testimony… didn’t scenario B turn out to be closer to what actually happened re said boundary conditions, and so should not that be the proper comparison result (over time) for the Hansen model at this time?

Thanks

So it seems to me we have a total error of Actual-Scenario A.

However, we can decompose that to:

Model error = Actual-Scenario B

Assumption error = Scenario B-Scenario A

And even this would be based on an assumption that the three scenarios were entirely exogenous factors.
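A minimal sketch of that decomposition, using made-up temperature anomalies purely to show that the two components add up to the total. The function name `decompose_error` and the three input values are hypothetical and are not read from any of the series discussed in the post.

```python
def decompose_error(actual, scenario_a, scenario_b):
    """Split (actual - A) into a model part and an assumption part.

    total      = actual - scenario_a
    model      = actual - scenario_b
    assumption = scenario_b - scenario_a
    so that total == model + assumption.
    """
    total = actual - scenario_a
    model = actual - scenario_b
    assumption = scenario_b - scenario_a
    return total, model, assumption


# Hypothetical anomalies in deg C, for illustration only.
total, model, assumption = decompose_error(
    actual=0.30, scenario_a=0.60, scenario_b=0.45
)
print(total, model, assumption)
```

The identity holds for any inputs; how meaningful the split is depends on the caveat already noted, namely that the scenario forcings are treated as entirely exogenous.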

This is such a telling observation

From the written testimony, page 48, description of figure 3:

This is quite revealing of the Hansen point of view at the time of his testimony.

I’m willing to bet that the growth in CO2 since 1988 has been much closer to the “business as usual” run, than it was to any of the other runs.

Hansen writes on p. 9345 of his 1988 paper:

“Scenario B is perhaps the most plausible of the three cases.”

1988_Hansen_etal.pdf

He explains what he means by this:

“Scenario A, since it is exponential, must eventually be on the high side of reality in view of finite resource constraints and environmental concerns . . .”

He also says on p. 9343:

“Scenario A assumes that growth rates of trace gas emissions typical of the 1970s and 1980s continues indefinitely . . .”

Thus, I think it is appropriate to conclude that over the period to 2007, Scenario A is equivalent to what the IPCC calls its “no policy” scenarios, which mean business as usual without explicit policies implemented to limit emissions. When Hansen said “most plausible” he was taking into account factors such as limitations on fossil fuels and eventual emissions policies, which may certainly be the case over the longer term but have not interrupted BAU as yet.

The accuracy of his scenarios to replicate what actually happened since 1988 is another question (stay tuned, more on that shortly).

As an aside, I note that in section 5.2.4 of the paper Hansen compares 7 years of observational data with the model runs. Gavin Schmidt went nuts when I compared 8 years of data with a model prediction (and I even concluded that little could be said on that time scale).

The more general conclusion is that forecast verification makes climate modelers very nervous. It shouldn’t. Weather forecasters bust forecasts all the time and learn from it without going nuts. Of course, weather forecasters have never been so bold as to claim that all of their forecasts are always right.

#25 bender: “Hypothesis: GHG/AGW may be happening, but the effect is far weaker than what these partisan pseudoscientists claim.”

This is exactly the point. AGW may be happening, but so far the effect has been entirely indiscernible. 20th century climate wanderings have been historically unremarkable and indistinguishable from normal happenstance.

35 MarkW says:

It depends on what he meant by “about 1.5% yr-1 emission growth”. If he was talking about the CO2 output by humans then Scenario A matches reality. If he was talking about the CO2 concentration in the atmosphere then he can argue that the actual CO2 growth was less than what was assumed by Scenario A.

#37 RPJ

Not another AGW double-standard? From a modeler no less! Shameful.

Just noting that trace gases (CFCs and Methane) did meet the case of Scenario C. CFC concentrations have fallen now and Methane levels have stabilized.

It is not really clear, however, what Hansen was assuming for CO2 in his Scenarios (CO2 and N2O have continued increasing at the trends of the mid-1980s.)

Concentrations of the GHGs from 1979 to 2006 are shown in the chart at this link.

Re 13, Hu, Steve

In the CS world, red (or red-orange) is almost invariably used for the warmest temps, at least on maps. So that may explain the original color choice.

Cheers — Pete Tillman

I just received my monthly issue of one of the trade mags I get. It has a “lessons learned” article in it about Challenger. I will read it with great interest. There is what I consider to be an out of control management system at NASA. I will report out on the article later.

bender says, “It would be nice if we could keep this thread at a high level of civility.” Umm, too late: McI’s original post crossed the civility line. I keep reading deniers claiming this site is scientific but it appears to be more catty than the girls’ locker room in a high school.

The Hansen et al 2006 paper makes some interesting statements:

It’s unusual not just because of its magnitude, but because the GCMs don’t predict it. The GCMs are underestimating internal climate variability, which despite its “regionality” nevertheless contributes massively to globally averaged statistics.

Proving that Hansen is fully aware how statistical distortions may arise from cherry-picking 1988 or 1998 as convenient dates to publish “landmark” papers.

“Say it three times every night before going to sleep*”: when the solar cycle peak coincides with El Nino it is time to sound the alarm.

* -raypierre

Here is a graph showing Hansen’s CO2 component of the relevant scenarios vs. observations 1984-2006.

The difference between A and B is irrelevant on this time scale, and both perform better than C (obviously). You can see Hansen’s version of this (without the obs) in the top of Figure 2 in his 1988 paper.

#44 Winnebago

Just so we’re clear, there is nothing civil about the term “denier”.

Scenario B is perhaps the most plausible of the three cases.

what exactly is the reason for further debate?

Meanwhile, in la-la land, gavin Says:

11 January 2008 at 2:14 PM

http://www.realclimate.org/index.php/archives/2008/01/uncertainty-noise-and-the-art-of-model-data-comparison/langswitch_lang/sw

This is special pleading, and it is fatal to Schmidt’s entire argument that the predicted and observed trends match. Maybe someone can pre-empt me and explain why? [Hint: I will simply invoke the only argument available to him when he was desperately trying to rebut Douglas et al.: the error on the GCMs is enormous.]

More later on the GS/RC double-standard on “weather vs climate” and “internal vs external variability”.

Pat Frank said (January 17th, 2008 at 11:40 am):

This is exactly the point. AGW may be happening, but so far the effect has been entirely indiscernible. 20th century climate wanderings have been historically unremarkable and indistinguishable from normal happenstance.

If you really want to see how “unremarkable” they’ve been, put an upper line (through the grey area) corresponding to the 39-40 high temp.

The temperature trends for the US regions can be generated here . I see no alarming temperature trend in the southeast or midwest for the time period mentioned by Hansen. A regional precipitation plot is harder to generate but I think it will show nothing abnormal also.

On global temperature trends, one thing to note is that the 1997-2001 period was one of a strong La Nina (cool) followed by a strong El Nino (warm) followed by a strong La Nina (cool). This pattern masked (my conjecture) a “background” rise in global temperatures over that period, instead making it look like there was a rise circa 2000-2001 rather than 1995-2001.

My belief is that much of the 1995-2001 “global” rise was related to warming of the far north Atlantic (see here for the warm-season SST pattern). This warming released both sensible heat and humidity into the Arctic region, resulting in this Arctic/Subarctic air temperature increase .

If this far north Atlantic SST rise is related to the AMO and has peaked, and if the PDO is switching back to a cool phase which reinforces La Nina activity, then the global temperature pattern may be sideways for quite a few years. That would be a problem for gavin and Hansen.

It seems to me that in order to assess the accuracy of the models, you first need to check their assumptions. For instance, I think someone mentioned a scenario which had several volcanic eruptions between 1988 and now. But did these actually happen? And which scenario’s CO2 is closest to actual? I know Roger posted something above, but it seems to me that the only apples to apples comparison you can do is to rerun the models with what actually happened (the actual volcanoes, El Ninos, La Ninas, and emissions). But for that you’d need the code for the models. I’m not clear, but is that public? Can you get it?

On which scenario was primary:

I think you should take Hansen at his word that he always thought scenario B was the main one at least in some sense. I can’t find any spot in the paper where he calls this out, but when he showed detailed geographical predictions, he used scenario B.

Why was scenario A run longest? I have no idea. You’d have to ask Hansen. However, I can suggest a reason that is consistent with A not being the main computation. First, scenario A was run longest because it was run first. (See page 9342 just before section 2.)

So, why was scenario A run first? Well, NASA scientists do have bosses and program managers. I will also speculate that the forcing for scenario A was selected partly to foster discussions with programmatic types and to convince program managers that study was required. It is actually fairly routine to do bounding calculations to show whether or not further review should be done, and “A” has the fingerprints of that sort of thing.

Had A shown nothing, B & C would never have been run. B&C were only run because A was not the main scenario.

On access to raw data:

I disagree with some of the choices Gavin made when comparing Hansen’s model to data, and I disagree with his conclusions.

Still, I think this is unfair:

When I asked Gavin for the scenario temperature anomaly data in a comment at RC posted during Christmas break, he provided that data (I think about a week later). He also proactively provided files for the forcing data, which I hadn’t requested.

Gavin says the ABC anomaly data were digitized from the graph, but evidently not by him. My plots at my blog use those data. Gavin isn’t an author on the 1988 paper and I suspect he didn’t have access to the raw data.

I’d go further and speculate that NASA’s programmatic requirements didn’t require Hansen to keep a file of the data points and the raw data no longer exist. (I could grouse about gov’t agencies here. But, this is not, strictly speaking, the fault of the individual scientists who may not remember to back up 1988 data and subsequently store it on easy to retrieve media over the course of two decades, which may involve moving to different offices, buildings etc. At Hanford, with waste tank work, we had specific requirements for keeping data imposed by program managers. The rules were a pain in the *&%, but they ensure that, eventually, key information can be obtained should things go wrong later.)

On resetting the baseline:

I don’t think there is an officially good way to reset the baselines given data now available and published. I wish modelers were in the habit of reporting the real honest to goodness thermometer temperature corresponding to the baseline. If they did, we’d at least have a preferred pin point.

I read a blogger somewhere suggest they just stick with Hansen’s first choice. That’s fair in some sense. But, on the other hand, he was able to eyeball agreement between 1958-1983 before publishing. Since the zero point for the baseline is rather arbitrary, it might be difficult for him to be entirely objective and avoid selecting one that showed the best agreement.

Right now, figuring out just how close the models and data are is a bit of a cherry picker’s dream. Shifting the baseline within reasonable bounds alters one’s conclusion. Shifting the measurements around also does.

The one thing that is true: The models predict up. Temperatures went up. So, the values are qualitatively correct. Unfortunately, that still leaves a very wide uncertainty when using these to extrapolate forward.

@John Lang–

On the dividing point for projections/predictions. It’s best not to pick the starting point for comparison based on the temperature measurements themselves, or the results you want to get. This is already sort of a cherry picker’s dream, but still, there are some rules making some cherries off limits!

I recommend looking at dates associated with when the work was initiated and first made public.

The manuscript was submitted on Jan 25, 1988. Given clearance processes at most labs, I think we can be confident the manuscript was, for all intents and purposes, complete no later than Thanksgiving 1987. Still, it’s unlikely the scientists finished computations in 1986 and left the data in a box for a year waiting to see if their prediction for 1987 was right. So, 1988 is the plausible date to begin testing forecast ability; 1987 is not.

The paper itself says computations for transients began in 1983, and results for scenario A were presented at a meeting in 1984. So, 1984 is the earliest possible date for testing forecast ability. At that point, the model was frozen, and other scenarios were run. Earlier results are, strictly speaking, “post dictions”.

I picked 1984 to test the model. But, I think if you are trying to be fair, you need to pick 1984 or 1988. Picking 1987 is really sifting those cherries.

The dialogue on this topic at RC is absurd, reduced to a strawman yes/no AGW by the consistent, concerted efforts of gatekeepers Hank Roberts and Ray Ladbury, among others.

The opening post by GS is convincing to me that it is unlikely that a positive, err, “trend” in temperature has abated since 1998. My question concerns the quantitative estimate of the slope of that trend. The gatekeepers’ job is to steer discussion toward yes/no debate on AGW, and away from a scientific discussion of the magnitude of slopes on those 8-year trend lines and the contribution attributable to CO2. The uncertainty on the slopes is high, and the proportion that can be attributed to CO2 even more uncertain. They do not want you to talk about that. They want to push the skeptic back to the less defensible, categorical position that GHG/AGW=0. Much easier to win that argument.
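
A rough sense of how uncertain an 8-year slope is, relative to a 30-year one, can be had from the textbook standard error of an ordinary least-squares slope. This is only a sketch under a strong assumption: annual values scattered around a straight line with independent (white) noise of standard deviation sigma. The function name and sigma = 0.1 are illustrative choices. Autocorrelated noise, the more realistic case for temperature series, would generally make the short-window uncertainty larger still.

```python
import math

def slope_standard_error(sigma, n_years):
    """Std. error of an OLS trend fitted to n_years equally spaced annual values
    with independent noise of standard deviation sigma."""
    # For x = 0, 1, ..., n-1 the sum of squared deviations of x from its mean
    # is n * (n^2 - 1) / 12.
    sxx = n_years * (n_years ** 2 - 1) / 12.0
    return sigma / math.sqrt(sxx)

SIGMA = 0.1  # illustrative interannual noise in deg C (an assumption)
se_8 = slope_standard_error(SIGMA, 8)
se_30 = slope_standard_error(SIGMA, 30)
print(f"8-year slope s.e.:  {se_8:.4f} C/yr")
print(f"30-year slope s.e.: {se_30:.4f} C/yr")
print(f"ratio: {se_8 / se_30:.1f}")
```

Under these assumptions the 8-year standard error comes out roughly seven times the 30-year one, whatever value of sigma is used.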

You nailed it exactly right David. This is exactly the theory that I have espoused, and you are the first person I have seen to echo it so succinctly. Thanks for putting in writing here what I never rose to the occasion to do.

I have a question. Where do the “wiggles” in the 1988 projections come from?

This was not immediately evident from skimming the original Hansen paper. If the model is deterministic, one would expect that the result would be smooth whether the solution oscillated or not. It can’t come from other variables except as boundary conditions, since the future behavior of those other variables would not be known. I did notice from Figure 2 in the paper that they threw in some hypothetical volcanoes for the years 1995, 2015 and 2025, presumably to create an aura of “reality” for their model. Did they also create hypothetical variations for the other factors? I presume that there were no randomly simulated components in the model because a result based on a single run of such a model would basically be meaningless with no indication of how the randomness affects the results. The wiggles make the projections look like a “real” temperature series, but how did they get there?

In Hansen’s discussion with Michaels, he states the model scenario contained a large volcano. It turns out there was a volcano in the projected time period and Hansen claims models B and C followed actual measurements.

If a volcano is modeled, why isn’t an El Nino also modeled which would tend to increase the projected temperature changes?

re 52. hadley and hansen are already handicapping 2008 as cool

That you can approximate a 30-year ‘trend’ using a sequence of shorter trend lines (e.g. 8 years) does not imply the 30-year pattern is a GHG-caused trend. LTP/ocean upwelling can generate low-frequency anomalies that look like increasing trends over very short (relative to ocean circulation) time scales. Same for decreasing (or abating) “trends” – a point that agrees with DS’s #52.

lucia says

Therein lies the problem.

Depending on when he’s speaking, which scenario is primary has changed.

There are no wiggles in the ensemble error bars – which I have only ever seen plotted once – when GS was trying desperately to refute Douglas & Christy.

@bender–

Yes. For many people seen as deniers/ skeptics, the question is not “yes/no” it’s “how big”. There is a huge difference between Scenario A and Scenario C. A definitely over estimates. Scenario C may over estimate, but not so badly that we can say it’s wrong with any high degree of certainty.

That said: I doubt the warming trend has ended. I just doubt it.

ENSO is an ‘emergent property’ of the ocean circulation. Therefore it *is* modeled, in the sense that all the thermodynamic and fluid dynamic processes that are thought to produce these kinds of effects are included in the GCMs. The only problem is that these features don’t emerge the way they are supposed to.

So do I. But human intuition is fallible. So we measure and we model and we try to be objective about how the models are performing.

lucia, would you have predicted the flattening of global CH4 in 1999?

Bender– I don’t predict forcings. 🙂

I just predict temperature given a forcing history. My current post-dictions are a little high, but slightly better than Scenario C.

I came up with the idea for my model on Sunday night (I think.. maybe Saturday.) The first cut gives me a correlation coefficient of 0.89 (as Excel calculates it. I have issues with that for this particular model. I’ll be calculating a real one later– I think I’ll look worse. But it’s still pretty good.)

Once I get this nailed down, I’ll be able to run all sorts of “thought experiments” quickly. Of course, the thought experiments will only be meaningful to those who think my model describes anything realistic. 🙂

lucia says:

Humans are used to looking at various economic graphs which tend to always go up in the long run (population, stock market, housing prices, etc). I think this gives most humans a bias toward expecting continuous upward trends with periods of dips. Natural processes are different and do not follow the same rules. A phase reversal on the PDO or another Dalton minimum could send temps falling again no matter what the science of CO2 says.

In reality Scenarios A, B or C as proposed in 1988 hardly matters 20 years later – it is whether actual observations support the underlying assumptions of today’s models and at what point do the model builders acknowledge and explain how they have adjusted the models to fit with new observational data. (How Hansen et al dealt with the more contemporaneous discussion of these Scenarios, is another matter.) That is why I think Daniel Klein’s question and Gavin’s response is so interesting. If Gavin et al are seeing 2008 as a cool year, we should expect some serious reconsiderations of the assumptions underlying the model – but then Gavin punted to 2013 and we have to wait. If we stick with 2008, it brings us neatly back to Steve’s question of where the 2.5C for doubling of CO2 comes from – since this is surely the crucial assumption underlying these models.

– Conventional wisdom, 2006.

Bender (comment #4), I brought up on RC one confounding effect that probably needs to be considered with respect to anthropogenic global warming.

If we take seriously the flattening out of the global mean temperature (I’m not suggesting that we should), this might be explained by a simultaneous improvement in CO2 emissions by industrialized nations coupled with increased aerosol production in third world nations. I know some people (e.g., Singer) are nervous about the link between CO2, aerosols and global temperature. Indeed they tend to regard the introduction of the aerosols as a “hack” to explain away the lack of warming in the 1945-75 period.

I write it off as a very real effect that is not well characterized by the models, probably because these models don’t model with enough accuracy the effect of the additional aerosol particles on cloud production to properly account for its full effect on temperature.

In any case, if I’m correct, then the apparent failure of Hansen’s prediction was not to foresee the industrialization of the 3rd world nations and its ramifications, and not some more basic problem with his climate model.

Bernie says:

What you are describing is a catch 22 situation. We can’t evaluate the new models because there is not enough data. But we can’t evaluate the old models because they are out of date. We can’t do much about the lack of data but we can evaluate the old models.

RE: #68 – Mother nature loves oscillations, and superpositions of many different oscillations at different frequencies. Meanwhile the Naked Ape has more of a linear perception bias hard wired into its brain. Some of the longer lived civilizations have learned to somewhat overcome it with a more cyclical philosophical perspective, but here in the West, this hard wired bias rules supreme.

Sorry I meant #66 … AKA “Raven says: January 17th, 2008 at 2:11 pm”

That is a dodge, but your smiley gets you off the hook. 🙂

To a climatologist CH4 may be a forcing, but to a biogeochemist it is a response. So let me rephrase. Would the amateur biogeochemist in you have predicted a flattening of CH4 in 1999? The amateur economist a housing market collapse in 2007? And so on. Things happen in dynamic systems.

Steve,

I took your method of zeroing the three projection scenarios:

and re-zeroed the GISS surface-ocean data to the same 1958-1967 period. Here is my scorecard for the 20 years (1988-2007) of out-of-sample projections:

Hansen A:

Average projected anomaly: 0.82 C

Average actual anomaly: 0.39 C (48% of projected)

20 of 20 years projected anomaly exceeded actual anomaly.

Hansen B:

Average projected anomaly: 0.58 C

Average actual anomaly: 0.39 C (68% of projected)

18 of 20 years projected anomaly exceeded actual anomaly (all but 1997 and 1998)

Last projected anomaly less than actual: 1998

Hansen C:

Average projected anomaly: 0.49 C

Average actual anomaly: 0.39 C (80% of projected)

17 of 20 years projected anomaly exceeded actual anomaly (all but 1988, 1996, and 1998)

I’d say scenario A is not only dead, it’s long dead.

As mentioned above, the forcing assumptions of scenario C have proven to be way off, so that one is pretty well dead, too.

Which leaves scenario B hanging on life support at best, with the last sign of brain-wave activity being the El Nino year of 1998.
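
For anyone who wants to redo this kind of scorecard with their own collation, the bookkeeping can be sketched as below. The function names and the dict-of-years layout are assumptions made for illustration, and the tiny series at the bottom is made up purely to exercise the code; it is not the GISS data or Hansen’s scenario output.

```python
def rebaseline(series, start, end):
    """Subtract the mean over start..end from every value in a {year: anomaly} dict."""
    reference = [v for y, v in series.items() if start <= y <= end]
    offset = sum(reference) / len(reference)
    return {y: v - offset for y, v in series.items()}

def scorecard(projected, actual, first_year, last_year):
    """Compare two re-zeroed {year: anomaly} series over first_year..last_year."""
    years = [y for y in range(first_year, last_year + 1)
             if y in projected and y in actual]
    mean_projected = sum(projected[y] for y in years) / len(years)
    mean_actual = sum(actual[y] for y in years) / len(years)
    years_over = sum(1 for y in years if projected[y] > actual[y])
    return {
        "years": len(years),
        "mean_projected": mean_projected,
        "mean_actual": mean_actual,
        "years_over": years_over,  # years in which projection exceeded observation
    }

# Made-up values, only to show the calls.
toy_projection = {1958: 0.0, 1959: 0.2, 1988: 0.9, 1989: 1.1}
toy_observed = {1958: 0.1, 1959: 0.1, 1988: 0.5, 1989: 1.3}
proj = rebaseline(toy_projection, 1958, 1959)
obs = rebaseline(toy_observed, 1958, 1959)
result = scorecard(proj, obs, 1988, 1989)
print(result)
```

Both series have to be re-zeroed over the same reference period before the comparison means anything, which is why `rebaseline` is applied to each side first.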

@Raven–

I rarely look at econometric data. I’m a mechanical engineer. I tend to think of the world in terms of mechanics and thermo-dynamics. I’m used to the idea things go down– gravity tends to pull things in that direction. 🙂

But yes, phase reversals on the PDO could do dramatic things, and I understand that lowering temperature could be one of them.

@bender-

There is no amateur bio-geochemist inside me. Or, if such a thing lurks within, it’s a very stupid ill-informed amateur biogeochemist. So….. if it’s a biological effect, I would have done no better than a coin flip and possibly worse.

Later on, if I get the simple model with CH4 as a forcing on heat ironed out, then maybe I’ll ask you to suggest an equation to predict CH4 as a function of temperature. That might be fun to do.

But first, I need to get these volcanos accounted for in detail, and see if I can get Temperatures right based on forcings in Watts/m^2.

I did expect this housing market collapse. That was kind of obvious; there were so many factors artificially boosting prices. My brother asked me if he should buy near the top of the bubble and I told him, “Don’t buy!” He told me he was going to go ahead anyway, but then life happened, he got busy and was spared a financial loss.

Lucia,

Although I am not thoroughly familiar with everything that you have written, you seem to have a high degree of integrity and graciousness. From what I have read from Hansen & his supporters and from what I sense of his political agenda, I do not trust him. The intensity of his beliefs and the lack of protocol safeguards provide too many opportunities to taint his work. (He might have genius thoughts and legitimate contributions, but trust is another matter.) That being said, I would love to get a modeler’s view on my following concern. From my work in econometrics, I know that with enough dummy variables, I can get a great fit with even a sloppy and poor model. From my readings on Global Climate Models, it appears that often modelers are using aerosols as almost dummy variables. Can you give any insight that aerosols are being included in a legitimate manner? If the parameters of a model are determined from data going back 40 or 60 or 80 years, what values are used for aerosols? How do we know what levels of aerosols existed in what zones of the atmosphere going back into history? And then how do we forecast aerosols in the future?

So bottom line:

GCMs that have been curve fit to past temperature data don’t have predictive value.(?)

Or in general:

Don’t bring extrapolation to a prediction fight.

An Inquirer says:

Short answer: they use the “inverse method” (i.e. they calculate the amount of aerosols required to make the model match the data). I posted a link to a paper that explains this in more detail before. Newer models try to use real data but I have not seen any studies on how those models perform.

Raven: #70

I didn’t mean to suggest that we should not evaluate the models on their own terms. But it seems to me that we certainly cannot assume today’s models have exactly the same specifications and assumptions as those from 1988 – of course they could, but that would raise another set of issues! That is why I think we need to understand the key assumptions embedded in today’s models, such as the 2.5K for doubling CO2. The underlying assertion of all these models is a strong AGW – which can only be demonstrated if previous record highs are exceeded on a fairly regular and frequent basis. In a sense this is a gross test of all the models that predict a strong AGW.

Raven:

If it’s not too much trouble, could you post that link again?

Thanks.

re 74. 20 year projections have no validity, unless of course they match observations.

Try this one: BNL-71341-2003-JA.pdf

#62 Thank you Bender.

Where I am stuck on this is Hansen’s seemingly prideful consideration of the volcanic activity in his models. These, it seems, are rare events that spike the system with CO2 (raised temp) or ash (lowered temp). If extra-ordinary volcanic events are significant to a climate model, why aren’t other extra-ordinary events such as the “effects” of rare but major El Ninos or La Ninas or changes in solar activity or any other non-standard state events?

And if these are considered, then there is the timeframe modeling of these events that can be concurrent or phased over years. And some of these models would vary the assumed contribution of CO2. The result is models A-ZZ, not just A, B or C. These models may be as (or more) accurate when compared later to temperature changes.

So how does one choose A, B or C when there are so many other possible models?

Ah, I found the lengthy discussion of this on the Willis E on Hansen thread.

Carrick,

Certainly an interesting idea. But there are literally HUGE error bars on aerosols right now, so what you’re talking about is nothing but speculation. Without an empirically derived value for the effect, it actually is a hack to fit.

That of course could be said of just about anything in climate science at this stage, given the “low” or indeed “very low” level of scientific understanding on some of these subjects. If our level of scientific understanding of aerosols is low, then we can’t say we are justified in assuming that they account for the lack of warming between World Warm 2 and the late 70’s. They might. Hardly a basis for sound policy. More research, and, you know, maybe some experiments are necessary.

But who’s suggesting anything like that? No one at realclimate, to my knowledge. I thought the “science is settled”. But maybe not?

Eh-hem…That’s World War 2, not Warm.

AGW on the brain, evidently.

come on!

we are discussing a paper from 1988 and global warming wasn’t that hot a topic back then.

the actual temperature is slightly below the most “plausible” scenario, especially if we accept Steve’s recentered graph. (though if you look at the graph, it might have been further away between 1970 and 80…)

now the skeptic movement seems to be too young to have made any testable predictions. (any predictions? sometimes i can’t shake the feeling that you guys prefer to stay imprecise in that aspect..)

but there is a LOT of room at the bottom of that graph, and temperatures could have been falling for 20 years now…

As I believe was explained to Willis back when he did his analysis, if you want to compare the model predictions to the real world, you cannot recenter to the real world mean.

Each year has a measured anomaly. Imagine you could rewind last year to January, would the same anomaly be measured? Hardly, because there are random fluctuations at work. The whole point of having a control run is to have the model figure out what the “average” anomaly would be for the conditions of that particular year. If you recenter to the real world mean, you introduce a large bias from interannual variability.

In short, if you recenter you are throwing out part of the model’s projection.

Steve: However, I’d be amazed if I’ve introduced a “large bias”. I presume that you just said that without checking the point. Another possibility that would protect any model abilities would be to center on the 1958-87 calibration period, perhaps through a regression – constant only. My guess is that it will look about the same. I’ll look at that tomorrow.
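
One reading of the constant-only regression idea is that the offset between model and observations is estimated as a single constant over the calibration period, which for least squares is just the mean of the differences. The sketch below assumes that reading; the function names and the short made-up series are illustrative only and are not taken from Hansen’s output or any temperature record.

```python
def constant_only_offset(model, observed, start, end):
    """Least-squares constant c minimising sum((observed - model - c)^2) over start..end.
    With no slope term, the solution is the mean of (observed - model)."""
    diffs = [observed[y] - model[y]
             for y in range(start, end + 1)
             if y in model and y in observed]
    return sum(diffs) / len(diffs)

def align(model, offset):
    """Shift every model value by the fitted constant."""
    return {y: v + offset for y, v in model.items()}

# Made-up values, only to show the calls.
toy_model = {1958: 0.10, 1959: 0.20, 1960: 0.30, 1988: 0.90}
toy_obs = {1958: 0.00, 1959: 0.15, 1960: 0.15, 1988: 0.60}
c = constant_only_offset(toy_model, toy_obs, 1958, 1960)
aligned = align(toy_model, c)
print(c, aligned[1988])
```

Because only a constant is shifted, the shape of the projection after the calibration period is untouched; only its level relative to the observations changes.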

In fact, Hansen’s scenario A is even less than reality. He assumes an annual increase of 1.5%/year (a small exponential) whereas the latest paper by Le Quéré (which predictably was a delight for the alarmist crowd and triggered sky-falling headlines in all languages) reported a more than 3%/year increase in CO2 emissions.

So what is 100% wrong is Hansen’s CO2 absorption model. With this “business as usual” projection of 1.5%/year emissions increase for his scenario A, his models predicted a CO2 atmospheric content of 384 ppmV for 2006 (R. Pielke Jr’s graph in #44).

But in reality, even with >3%/year emissions increase since 2000 (damn Yankees who refuse to stop buying SUVs & Chinese to stop building power plants), atmospheric CO2 is still under 380 ppmV in 2006. That means that the Earth has absorbed twice as much of the satanic gas as he expected.
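
For scale, the gap between 1.5%/year and 3%/year compounds quickly. The sketch below only does the compounding arithmetic on an emissions index over a fixed span; it says nothing about how emissions translate into atmospheric concentration, and the 18-year span (1988 to 2006) and the function name are choices made for illustration.

```python
def growth_factor(annual_rate, n_years):
    """Multiplicative growth after n_years of constant compound growth."""
    return (1.0 + annual_rate) ** n_years

# Compare the two growth rates mentioned above over the same span.
for rate in (0.015, 0.03):
    print(f"{rate:.1%}/yr over 18 years -> x{growth_factor(rate, 18):.2f}")
```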

That should be a motive to rejoice in a sane world. But hey, we’re dealing with climate science.

One of the many things that puzzle me, as a non-scientific citizen of average scientific knowledge trying to follow the debate concerning AGW, is understanding how, given the many “low” levels of understanding admitted by most experts, anyone can claim to “know” the answer to such a complex question.

When the IPCC makes a “non prediction” prediction of mean temperature response to manmade CO2, is it defined by both latitude and altitude? Should it not also be defined for T-max daytime and T-min night time, for both the surface and different altitudes and latitudes? Should the veracity of the GH theory not have to answer to these far more detailed predictions rather than to a simple estimation of increased surface temperature, using whichever of the various means of arriving at a global average best matches that one parameter?

Do the tropical latitudes receive more incoming radiation than the subtropics and poles?

Is the majority of the initial (before feedback) atmospheric heating resulting from increased CO2 reduced by the fact that the majority (W/sq-m at low latitude) of outgoing radiation is in the latitudes most saturated by water vapor?

Are not all overlapping absorbing molecules the same, and would it not therefore take an exponentially larger increase in CO2 to increase temperature in the tropics as opposed to the poles?

Would it not then be a mistake to assume a global average incoming watt per sq-m solar radiation and global average outgoing L-W radiation and global average greenhouse effect for CO2, and apply those global numbers to the tropics, when a higher percentage of both L-W and S-W radiation is in tropical latitudes where that increase in CO2 has less effect?

Is it not also therefore true that the polar areas of least water vapor, where doubling of CO2 would have the most effect on temperature, have the smallest share of both incoming S-W and outgoing L-W radiation, due to the incident angle of incoming sunlight, the high reflectivity of the snow and ice, and the greatly reduced outgoing L-W radiation that results?

Would not these very different percentages of both incoming and outgoing L-W and S-W radiation, and different responses to increased CO2, then produce very different results than using straight-line global averages (i.e., lower response in high radiation areas and high response in low radiation areas)? Would this not produce a lowered “before feedback” estimate of the global response to increased CO2?

Also, as I understand, any increase in heat would cause an increase in convection. Is this negative feedback quantified?

Is the further increase in cloud cover due to an increase in water vapor quantified?

Should not the IPCC “Prediction” be more like the following?

1. Incoming tropical W/sq-m. (A different calculation than for subtropical and polar latitudes)

2. Outgoing tropical L-W W/sq-m. (A different calculation than for subtropical and polar latitudes)

3. Doubling in tropics of CO2 = X W/sq-m increase in temperature. (A different calculation than for subtropical and polar latitudes)

4. Subtract X W/sq-m due to increase in convection. (A different calculation than for subtropical and polar latitudes)

5. Subtract or add X W/sq-m due to increase in cloud cover and energy spent in precipitation.

6. The other cogent factors the experts believe, quantified to different latitudes etc.

7. Then a global statistical average of these factors.

8. Error bars assigned to all these estimates, and to the final estimates.

Could not all this be done on a single spreadsheet linked to relevant papers and discussions, and also linked to a layman’s explanation? Sorry this is so long, but I think any response would help the average interested citizen get a handle on this issue. Any concise non technical feedback is appreciated.
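As a rough illustration of step 7 above, here is a minimal Python sketch of an area-weighted average over latitude bands. The only physics in it is geometry: on a sphere, the fraction of surface area between two latitudes is proportional to the difference of their sines. The band boundaries and the per-band "response" values are hypothetical placeholders chosen for the example, not estimates from any paper or model.

```python
import math

def band_area_fraction(lat_south_deg, lat_north_deg):
    """Fraction of a sphere's surface lying between two latitudes (degrees)."""
    south = math.sin(math.radians(lat_south_deg))
    north = math.sin(math.radians(lat_north_deg))
    return (north - south) / 2.0

def area_weighted_mean(bands):
    """bands: list of (lat_south_deg, lat_north_deg, value) tuples."""
    weights = [band_area_fraction(s, n) for s, n, _ in bands]
    weighted_sum = sum(w * v for w, (_, _, v) in zip(weights, bands))
    return weighted_sum / sum(weights)

# Hypothetical per-band responses (arbitrary units), larger toward the poles.
bands = [
    (-90, -60, 3.0),
    (-60, -30, 2.0),
    (-30, 30, 1.0),
    (30, 60, 2.0),
    (60, 90, 3.0),
]

simple_mean = sum(v for _, _, v in bands) / len(bands)
weighted_mean = area_weighted_mean(bands)

print(f"30S-30N covers {band_area_fraction(-30, 30):.1%} of the surface")
print(f"simple mean of band values: {simple_mean:.2f}")
print(f"area-weighted mean:         {weighted_mean:.2f}")
```

With these placeholder numbers the low-latitude band dominates the weighted average, because half of the Earth's surface lies between 30S and 30N. Whether a real calculation would weight by area alone, or also by radiation flux as the questions above suggest, is a separate matter that this sketch does not address.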

@An Inquirer

Thanks for the compliments. I’m not ordinarily gracious though. I just sometimes happen to have opinions that sound nicer than at other times.

I don’t know that much about GCMs particularly, but I know something about transport models, and physical modeling in general. Still, I can say what I think about aerosols. (Bear in mind, I may say some things that are wrong.)

First: It is totally legitimate to include aerosols and the effect of particulates in the atmosphere in a GCM. It’s not just thrown in as a total fudge, and the magnitudes used to estimate the forcings aren’t totally adjustable. So, it is not just a big curve fit.

The numerical values for the aerosol forcings come from experiments that are done externally to GCMs. For example, I did a Google search and found this: Proceedings of the Seventh Atmospheric Radiation Measurement (ARM) Science Team Meeting. ARM does lots of in-field experimental work.

The estimates of the magnitude for aerosols are pegged by these sorts of measurements. They aren’t just picked to make GCMs predict better values. If GCM modelers used values outside the range with experimental support, people would really give them hell (and justifiably so).

But there is a potential problem: I don’t know how precisely the experimentalists have pegged these values, either for current years (when direct measurements are possible) or for past years (when direct measurements likely weren’t done). It may be that the uncertainty bounds from the experiments are rather large, giving modelers quite a bit of leeway.

But I do want to emphasize, since these are blog comments and “I don’t know” often gets taken the wrong way: when I say “I don’t know”, what I mean is simply that I don’t know. I haven’t read enough on this issue. My area isn’t climate or atmospheric radiation.

So… the short version is: including aerosols is not just a fudge like predicting stock market prices based on Superbowl winners (or even less loony things, but just using way too many). However, I don’t know how good a handle they have on aerosols. I happened to email Gavin out of the blue (on a day after I’d been kind of nasty to him). He was nevertheless very nice, answered all my questions, and volunteered that those values could be off by a factor of 2.


Are these graphs mislabelled? They are supposed to be delta-T, the CHANGE in yearly temperature. Take any graph, and there is a 20 year span of ~0.5 degree yearly temperature increase. So, is the temperature now almost 10 degrees warmer than it was 20 years ago? Almost every data point on the graph is a positive increase, and so in taking the entire time span, the math should indicate we are now 20+ degrees warmer? What am I missing here?

I’ve added the following paragraph:

I wrote this post late last night and about 9 am this morning, I noticed that Hansen et al 1988 had included a sentence that Scenario B was the “most plausible”; I inserted this in the post above and amended an incorrect statement. I’ve also inserted an update referring to the Hansen testimony which MHaze has made available.

When we talk about the “warming trend” ending or not, there are really four scenarios. #4 seems the most interesting.

1. The rise in the anomaly trend is correlated to how we gather and process the readings, and whatever happened in ~1985 has set a new “floor”. In this case, “the warming” is probably over.

2. The rise in the anomaly trend is correlated to technological sophistication and industrialization fluxes. In this case, “the warming” may either increase or decrease according to a number of factors, mainly how “players in the industrial game” (new and old) ebb and flow in relation to industrialization and economies and technology.

3. The rise in the anomaly trend is meaningless, because the world economy and political situation will change drastically due to some catastrophic event, human caused or not, by either action or inaction, accidentally or on purpose. This makes “the warming” moot.

4. The rise in the anomaly trend is correlated to population, in which case the expected 50% increase in population in the next 40 years will cause this to rise. In this case, should the expectations hold true, “the warming” is not over.

Can somebody chart world population and compare it against the proxy and instrumental record?

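| Year | World population (millions) |
|---|---|
| -10000 | 4 |
| -8000 | 5 |
| -7000 | 5 |
| -6000 | 5 |
| -5000 | 5 |
| -4000 | 7 |
| -3000 | 14 |
| -2000 | 27 |
| -1000 | 50 |
| -750 | 60 |
| -500 | 100 |
| -400 | 160 |
| -200 | 150 |
| 0 | 170 |
| 200 | 190 |
| 400 | 190 |
| 500 | 190 |
| 600 | 200 |
| 700 | 210 |
| 800 | 220 |
| 900 | 226 |
| 1000 | 310 |
| 1100 | 301 |
| 1200 | 360 |
| 1250 | 400 |
| 1300 | 360 |
| 1340 | 443 |
| 1400 | 350 |
| 1500 | 425 |
| 1600 | 545 |
| 1650 | 470 |
| 1700 | 600 |
| 1750 | 790 |
| 1800 | 980 |
| 1815 | 1000 |
| 1850 | 1260 |
| 1900 | 1650 |
| 1910 | 1750 |
| 1920 | 1860 |
| 1927 | 2000 |
| 1930 | 2070 |
| 1940 | 2300 |
| 1950 | 2400 |
| 1960 | 3020 |
| 1970 | 3700 |
| 1974 | 4000 |
| 1980 | 4430 |
| 1987 | 5000 |
| 1990 | 5260 |
| 1999 | 6000 |
| 2000 | 6070 |
| 2005 | 6500 |
| 2007 | 6576 |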

As you can see, it took 11,815 years to reach a billion, and less than 200 more for that number to grow to over 650% of its size (an increase of roughly 560%). Just in the last 37 years, it’s gone up over 75%.
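For anyone who wants to check the arithmetic, here is a small Python sketch using four rows from the table above. The helper name `pct_increase` is just an illustrative choice. It separates "percent increase" from "percent of the original", which are easy to mix up.

```python
# Selected rows from the population table (year -> millions).
population = {-10000: 4, 1815: 1000, 1970: 3700, 2007: 6576}

def pct_increase(old, new):
    """Percentage increase from old to new."""
    return (new - old) / old * 100.0

# Years from the first table entry to the one-billion mark.
years_to_billion = 1815 - (-10000)

# Growth from the one-billion mark to 2007, expressed two ways.
increase_since_billion = pct_increase(population[1815], population[2007])
percent_of_billion = population[2007] / population[1815] * 100.0

# Growth over the last 37 years in the table (1970 to 2007).
increase_last_37 = pct_increase(population[1970], population[2007])

print(f"years to reach a billion: {years_to_billion}")
print(f"1815-2007 increase: {increase_since_billion:.1f}%")
print(f"2007 as a percentage of 1815: {percent_of_billion:.1f}%")
print(f"1970-2007 increase: {increase_last_37:.1f}%")
```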

George M: The charts show each year’s mean anomaly compared to the base period, not the change from the previous year. That’s why you have to know whether somebody is talking about growth rates for some increment or about the quantity itself, and not mix up absolute values with trends. If it’s at +.5 this year and was at +.3 last year, then the change is +.2. But next year the change might be -.2, putting it back at +.3.

It’s like giving an agency a million dollars this year and eight-hundred thousand next year and describing it as cutting their budget.

Or even mixing things up more. If I say the CO2 trend over the last decade is 2, it’s a different discussion than saying CO2 levels went up 20 over the last decade, which is a different discussion than saying the levels are now about 390 ppmv. (And equating that to some percentage higher than year X).
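A minimal Python sketch of the distinction, using made-up temperatures (the years and values are hypothetical, chosen only so the anomalies come out at +.3 and +.5 as in the example above): each anomaly is a difference from the base-period mean, so anomalies are not added up from year to year.

```python
def anomalies(temps, base_years):
    """Anomaly of each year relative to the mean over base_years."""
    base_mean = sum(temps[y] for y in base_years) / len(base_years)
    return {year: t - base_mean for year, t in temps.items()}

# Hypothetical annual means in deg C; the first three years are the base period.
temps = {1951: 14.0, 1952: 14.0, 1953: 14.0,
         2006: 14.3, 2007: 14.5, 2008: 14.3}

anom = anomalies(temps, base_years=[1951, 1952, 1953])

# What the charts plot: each year's offset from the base period.
for year in (2006, 2007, 2008):
    print(f"{year}: anomaly {anom[year]:+.1f}")

# Year-to-year change is the difference between consecutive anomalies.
change_2007 = anom[2007] - anom[2006]
change_2008 = anom[2008] - anom[2007]
print(f"change 2006->2007: {change_2007:+.1f}")
print(f"change 2007->2008: {change_2008:+.1f}")

# Warming relative to the base period is the latest anomaly itself,
# not the running sum of all the anomalies.
print(f"2008 relative to base period: {anom[2008]:+.1f}")
```

Summing the plotted points, which is what the "20+ degrees" reading does, treats each anomaly as an annual increment. The two changes computed here cancel, and the 2008 value is still only +.3 above the base period.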

Steve:

I am sincerely impressed. Did you do that all by yourself … or have help?

Steve M has no secretarial help. Are you volunteering?

Hansen wrote his 1988 paper for two audiences: not only scientists and others with technical backgrounds but also laypersons like reporters, politicians etc. With this in mind, scenario A was hyped, both in the paper and in his corresponding congressional testimony, so as to cause alarm, which also explains why scenario A was the only one drawn out for so many years. What better way to get people’s attention than showing a bright red line heading ever upwards? When the paper described scenario A as continuing the present emissions growth, with the only caveat being that it must “eventually” be on the high side of reality as resources dwindle, most people, including myself (and I have an engineering degree), would understand that scenario A was the emission path that the world was then following. Note also the later implication that the 1.5% emissions growth was actually conservative given that emissions growth had historically been higher. Taken in this context, the later statement that scenario B was “perhaps” the most plausible could only be reconciled with the earlier description of scenario A if one were to consider the scenarios over the very long term. Certainly when testing the short term validity of the model presented in the paper, most people would look to Scenario A for comparisons to actual data.

Whether or not Hansen subjectively considered scenario B to be the most realistic, he was quite properly taken to task for the wild inaccuracies of Scenario A. He obviously was promoting Scenario A to both Congress and the public at large, probably as part of the idea that the threats of global warming have to be exaggerated so as to provide the impetus for change. It also doesn’t speak well of him that his response to Crichton and Michaels took his own prior statements out of context, i.e. omitting mention of the words “perhaps”, “eventually” and “due to resource constraints”, instead pretending as though the paper unequivocally endorsed Scenario B as the most likely and Scenario A as the worst case possibility.

It is completely irrelevant which scenario Jim Hansen advertised as his favorite in 1988. Completely. I do not believe that it is possible to accurately predict energy use, emissions, policy developments, or technological innovations, among other things relevant to future forcings. So there is no point in faulting Hansen for not accurately predicting any of these things.