A guest post by Nicholas Lewis

In Part 1 I introduced the talk I gave at Ringberg 2015, explained why it focussed on estimation based on warming over the instrumental period, and covered problems relating to aerosol forcing and bias caused by the influence of the AMO. I now move on to problems arising when Bayesian probabilistic approaches are used, and then summarize the state of instrumental period warming, observationally-based climate sensitivity estimation as I see it. I explained in Part 1 why other approaches to estimating ECS appear to be less reliable.

The AR4 report gave probability density functions (PDFs) for all the ECS estimates it presented, and AR5 did so for most of them. PDFs for unknown parameters are a Bayesian probabilistic concept. Under Bayes’ theorem – a variant on the conditional probability lemma – one starts by choosing a prior PDF for the unknown parameter, then multiplies it by the relative probability of having obtained the actual observations at each value of the parameter (the likelihood function), thus obtaining, upon normalising the result to unit total probability, a posterior PDF representing the new estimate of the parameter.

The posterior PDF melds any existing information about the parameter from the prior with information provided by the observations. If multiple parameters are being estimated, a joint prior and a joint likelihood function are required, and marginal posterior PDFs for individual parameters are obtained by integrating out the other parameters from the joint posterior PDF.

Uncertainty ranges derived from percentage points of the integral of the posterior PDF, the posterior cumulative probability distribution (CDF), are known as credible intervals (CrI). The frequentist statistical approach instead gives confidence intervals (CIs), which are conceptually different from CrIs. In general, a Bayesian CrI cannot be exactly equivalent to a frequentist CI no matter what prior is selected. However, for some standard cases they can be the same, and it is typically possible to derive a prior (a probability matching prior) which results in CrIs being close to the corresponding CIs. That is critical if assertions based on a Bayesian CrI are to be true with the promised reliability.

Almost all the PDFs for ECS presented in AR4 and AR5 used a ‘subjective Bayesian’ approach, under which the prior is selected to represent the investigator’s views as to how likely it is the parameter has each possible value. A judgemental or elicited ‘expert prior’ that typically has a peaked distribution indicating a most likely value may be used. Or the prior may be a diffuse, typically uniform, distribution spread over a wide range, intended to convey ignorance and/or with a view to letting the data dominate the posterior PDF. Unfortunately, the fact that a prior is diffuse does not in fact mean that it conveys ignorance or lets the data dominate parameter inference.

AR4 stated that all its PDFs for ECS were presented on a uniform-in-ECS prior basis, although the AR4 authors were mistaken in two cases. In AR5, most ECS PDFs were derived using either uniform or expert priors for ECS (and for other key unknown parameters being estimated alongside ECS).

When the data is weak (is limited and uncertainty is high) the prior can have a major influence on the posterior PDF. Unlike in many areas of physics, that is the situation in climate science, certainly so far as ECS and TCR estimation is concerned. Moreover, the relationships between the principal observable variables (changes in atmospheric and ocean temperatures) and the parameters being estimated – which typically also include ocean effective vertical diffusivity (Kv) when ECS is the target parameter – are highly non-linear.

In these circumstances, use of uniform priors for ECS and Kv (or its square root) greatly biases posterior PDFs for ECS, raising their medians and fattening their upper tails. On the other hand, use of an expert prior typically results in the posterior PDF resembling the prior more than it reflects the data.

Some studies used, sometimes without realising it, the alternative ‘objective Bayesian’ approach, under which a mathematically-derived noninformative prior is used. Although in most cases it is impossible to formulate a prior that has no influence at all on the posterior PDF, the form of a noninformative prior is calculated so that it allows even weak data to dominate the posterior PDF for the parameter being estimated. Noninformative priors are typically judged by how good the probability-matching properties of the resulting posterior PDFs are.

Noninformative priors do not represent how likely the parameter is to take any particular value and they have no probabilistic interpretation. Noninformative priors are simply weight functions that convert data-based likelihoods into parameter posterior PDFs with desirable characteristics, typically as regards probability matching. This is heresy so far as the currently-dominant Subjective Bayesian school is concerned. In typical ECS and TCR estimation cases, noninformative priors are best regarded as conversion factors between data and parameter spaces.

For readers wanting insight as to why noninformative priors have no probability meaning, contrary to the standard interpretation of Bayes’ theorem, and regarding problems with Bayesian methods generally, I recommend Professor Don Fraser’s writings, perhaps starting with this paper.

The Lewis (2013) and Lewis (2014) studies employed avowedly objective Bayesian approaches, involving noninformative priors. The Andronova and Schlesinger (2001), Gregory et al (2002), Otto et al (2013), and Lewis & Curry (2014) studies all used sampling methods that equated to an objective Bayesian approach. Studies using profile likelihood methods, a frequentist approach that yields approximate CIs, also achieve objective estimation (Allen et al 2009, Lewis 2014).

Slide 10

I will illustrate the effect of using a uniform prior for TCR estimation, that being a simpler case than ECS estimation. Slide 10 shows estimated distributions from AR4 and AR5 for anthropogenic forcing, up to respectively 2005 and 2011. These are Bayesian posterior PDFs. They are derived by sampling from estimated uncertainty distributions for each forcing component, and I will assume for the present purposes that they can be considered to be objective.

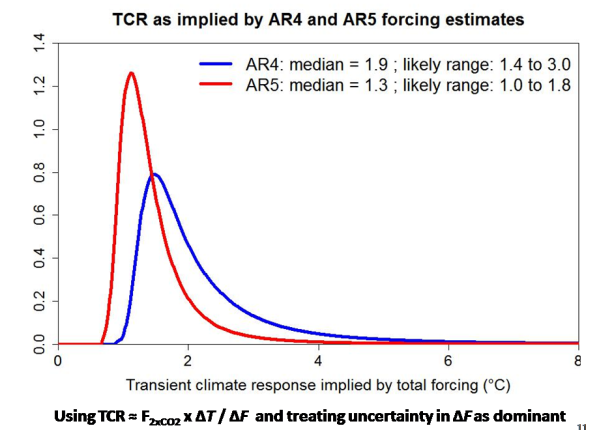

Slide 11 shows posterior PDFs for TCR derived from the AR4 and AR5 PDFs for anthropogenic forcing, ΔF, by making certain simplifying approximations. I have assumed that the generic-TCR formula given in AR5 holds; that uncertainty in the GMST rise attributable to anthropogenic forcing, ΔT , and in F2xCO2, the forcing from a doubling of CO2, is sufficiently small relative to uncertainty in ΔF to be ignored; and that in both cases ΔT = 0.8°C and F2xCO2 = 3.71 W/m2.

On this basis, posterior PDFs for TCR follow from a transformation of variables approach. One simply changes variable from ΔF to TCR (the other factors in the equation being assumed constant). The PDF for TCR at any value TCRa therefore equals the PDF for ΔF at ΔF = F2xCO2 ⨯ ΔT / TCRa , multiplied by the standard Jacobian factor: the absolute derivative of ΔF with respect to TCR at TCRa. That factor equals, up to proportionality, 1/TCR2.

Suppose one regards the posterior PDFs for ΔF as having been derived using uniform priors. This is accurate in so far as components of ΔF have symmetrical uncertainty distributions, but overall it is only an approximation since the most uncertain component, aerosol forcing, is assumed to have an asymmetrical distribution. However, the AR4 and AR5 PDFs for ΔF are not greatly asymmetrical.

On the basis that the posterior PDFs for ΔF correspond to the normalised product of a uniform prior for ΔF and a likelihood function, the PDFs for TCR derived in slide 11 correspond to the normalised product of the same likelihood function (now expressed in terms of TCR) and a prior having the form 1/TCR2. Unlike PDFs, likelihood functions do not depend on which variable that they are expressed in terms of. That is because, unlike a PDF, a likelihood function represents a density for the observed data, not for the variable that it is expressed in terms of.

The solid lines in slide 12 show, on the foregoing basis, what the effect is on the AR4- and AR5-forcing based posterior PDFs for TCR of substituting a uniform-in-TCR prior for the mathematically correct 1/TCR2 prior applying in slide 11 (the PDFs from which are shown dotted). The median (50% probability point), which is the appropriate best estimate to use for a skewed distribution, increases substantially, doubling in the AR4 case. The top of the 17–83% ‘likely’ range more than quadruples in both cases. The distortion for ECS estimates would be even larger.

I cut slide 12a out of my talk to shorten it. It shows the computed joint noninformative prior for ECS and sqrt(Kv) from Lewis (2013). Noninformative priors can be quite complex in form when multiple parameters are involved.

Ignore the irregularities and the rise in the front RH corner, which are caused by model noise. Note how steeply the prior falls with sqrt(Kv), which is lowest at the rear, particularly at high ECS levels (towards the left). The value of the prior reflects how informative the data is about the parameters at each point in parameter space. The plot is probability-averaged over all values for aerosol forcing, which was also being estimated. I believe the fact that aerosol forcing is being estimated accounts for the turndown in the prior at low ECS values; when ECS is very low temperaturs change little and the data conveys less information about aerosol forcing.

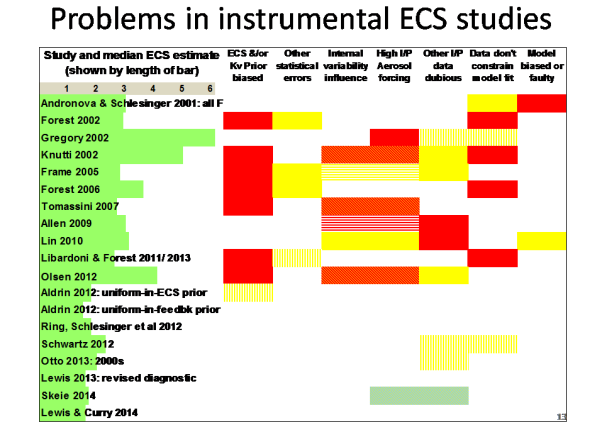

Slide 13 summarises serious problems in instrumental period warming based ECS studies, ordered by year of publication, breaking problems down between seven factors. Median ECS estimates are shown by the green bars at the left.

Blank rectangles imply no significant problem in the area concerned; solid yellow or red rectangles signify respectively a significant and a serious problem; a rectangle with vertical yellow bars, which may look like solid pale yellow, indicates a minor problem.

Red/yellow diagonal bars (may look like a solid orange shade of red) in rectangles across ‘Internal variability influence’ and ‘High input Aerosol forcing’ mean that, due to use of global-only data, internal variability (the AMO) has led to an overly negative estimate for aerosol forcing within the study concerned, and hence to an overestimate of ECS. Yellow or red horizontal bars across those factors for the Frame et al (2005) and Allen et al (2009) studies mean that internal variability appears to have caused respectively significant or serious misestimation of aerosol forcing in the detection and attribution study that was the source of the (GHG-attributable) warming estimate used by the ECS study involved, and hence to upwards bias in that estimate (reflected in a yellow or red rectangle for ‘Other input data dubious’).

The blue/yellow horizontal bar across ‘High input Aerosol forcing’ and ‘Other input data dubious’ for the Skeie et al (2014) study mean that problems in these two areas largely cancelled. Skeie’s method estimated aerosol forcing using hemispherically-resolved model-simulation and observational data. An extremely negative prior for aerosol forcing was used, overlapping so little with the observational data-based likelihood function that the posterior estimate was biased significantly negative. However, the simultaneous use of three ocean heat content observational datasets appears to have led to the negatively biased aerosol forcing being reflected in lower modelled than observed NH warming rather than a higher ECS estimate.

The ‘Data don’t constrain model fit’ red entries for the Forest studies are because, from my experience, warming over the model-simulation run using the claimed best-fit parameter values is substantially greater than per the observational dataset. The same entry for Knutti et al (2002) is because a very weak, pass/fail, statistical test was used in that study.

The ‘Model biased or faulty’ red rectangle for Andronova and Schlesinger (2001) reflects a simple coding error that appears to have significantly biased up its ECS estimation: see Table 3 in Ring et al (2012).

A more detailed analysis of problems with individual ECS studies is available here.

To summarise: all pre-2012 instrumental-period-warming studies had one or more serious problems, and their median ECS estimates varied widely. Most studies from 2012 on do not appear to have serious problems, and their estimates agree quite closely. (The Schwartz 2012 study’s estimate was a composite of five estimates based on different forcing series, the highest ECS estimate comes from a poor quality regression obtained from one of the series.)

Slide 14 gives similar information to slide 13, but for TCR rather than ECS studies. As for ECS, all pre-2012 studies had one or more serious problems that make their TCR estimates unreliable, whilst most later studies do not have serious problems apparent and their median TCR estimates are quite close to one another.

Rogelj et al (2012)’s high TCR estimate is not genuinely observationally-based; it is derived from an ECS distribution chosen to match the AR4 best estimate and ‘likely’ range for ECS; the same goes for the Meinshausen et al (2009) estimate. The reason for the high TCR estimate from Harris et al (2013) is shown in the next slide.

A more detailed analysis of problems with individual TCR studies is available here.

This slide came later in my talk, but rather than defer it to Part 3 I have moved it here as it relates to a PPE (perturbed physics/parameter ensemble) study, Harris et al (2013), mentioned in the previous slide. Although this slide considers ECS estimates, the conclusions reached imply that the Harris et al TCR estimate shown in the previous slide is seriously biased up relative to what observations imply.

The plot is of joint distributions for aerosol forcing and ECS; the solid contours enclose ‘likely’ regions, of highest posterior probability density, containing 66% of total probability. Median estimates are shown by crosses; the four Ring et al (2012) estimates based on different surface temperature datasets are shown separately. The black contour is very close to that for Lewis and Curry (2014).

The grey dashed (dotted) vertical lines show the AR5 median estimate and ‘likely’ range for aerosol forcing, expressed both from 1750 (preindustrial) and from 1860; aerosol forcing in GCMs is normally estimated as the change between 1850 or 1860 and 2000 or 2005. The thick grey curve shows how one might expect the median estimate for ECS using an energy budget approach, based on AR5 non-aerosol forcing best estimates and a realistic estimate for ocean heat uptake, to vary with the estimate used for aerosol forcing.

The median estimates from the studies not using GCMs cluster around the thick grey curve, and their likely regions are orientated along it: under an energy budget or similar model, high ECS estimates are associated with strongly negative aerosol forcing estimates. But the likely regions for the Harris study are orientated very differently, with less negative aerosol forcing being associated with higher, not lower, ECS. Its estimated prior distribution ‘likely’ region (dotted green contour) barely overlaps the posterior regions of the other studies: the study simply does not explore the region of low to moderately negative aerosol forcing, low to moderate ECS which the other studies indicate observations best support. It appears that the HadCM3/SM3 model has structural rigidities that make it unable to explore this region no matter how its key parameters are varied. So it is unsurprising that the Harris et al (2013) estimates for ECS, and hence also for TCR, are high: they cannot be regarded as genuinely observationally-based.

Further information on the problems with the Harris et al (2013) study is available here: see Box 1.

This slide shows what I regard as the least-flawed ECS estimates based on observed warming over the instrumental period, and compares them with ECS values exhibited by the RCP8.5 simulation ensemble of CMIP5 models. I should arguably have included the Schwartz (2012) and Masters (2014) estimates, but I have some concerns about the GCM-derived forcing estimates they use.

The violins span 5–95% ranges; their widths indicate how PDF values vary with ECS. Black lines show medians, red lines span 17–83% ‘likely’ ranges. Published estimates based directly on observed warming are shown in blue. Unpublished estimates of mine based on warming attributable to greenhouse gases inferred by two recent detection and attribution studies are shown in green. CMIP5 models are shown in salmon.

The observational ECS estimates have broadly similar medians and ‘likely’ ranges, all of which are far below the corresponding values for the CMIP5 models.

The ‘Aldrin ECS-2‘ violin is for its estimate that uses a uniform prior for 1/ECS, which equates to a ECS-2 prior for ECS. I believe that to be much closer to a noninformative prior than is the uniform-in-ECS prior used for the main Aldrin et al (2012) results. The Lewis (Forest) estimate is based on the Lewis (2013) preferred main ECS estimate with added non-aerosol forcing uncertainty, as shown in the study’s supplemental information.

This slide is like the previous one, but relates to TCR not ECS.

As for ECS, the observational TCR estimates have broadly similar medians and ‘likely’ ranges, all of which are well below the corresponding values for the CMIP5 models.

The Schwartz (2012) TCR estimate, which has been omitted for no good reason, has a median of 1.33°C and a 5–95% range of 0.83–2.0°C.

The Lewis (Forest) estimate uses the same formula as in Libardoni and Forest (2011), which also uses the MIT 2D GCM, to derive model TCR from combinations of model ECS and Kv values.

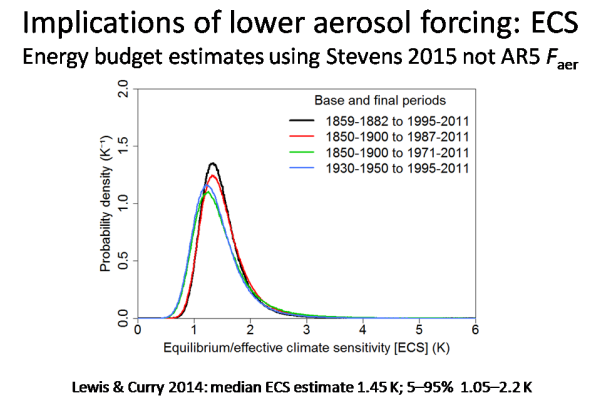

The main cause of long tails in ECS and TCR studies based on observed multidecadal warming is uncertainty as to the strength of aerosol forcing (Faer). I’ll end this part with a pair of slides that show how well constrained the Lewis and Curry (2014) energy-budget main ECS and TCR estimates would be if they were recalculated using the distribution for aerosol forcing implicit in Bjorn Stevens’ recent study instead of the wide AR5 aerosol forcing distribution. (For some reason these slides appear much later, out of order, in the PDF version of my slides on the Ringberg 2015 website.)

The median ECS estimate reduces modestly from 1.64°C to 1.45°C, but the 95% uncertainty bound falls dramatically, from 4.05°C to 2.2°C.

The picture is similar for TCR, although somewhat less dramatic. The median TCR estimate reduces modestly from 1.33°C to 1.21°C, but the 95% uncertainty bound falls much more, from 2.50°C to 1.65°C.

Additional references

Allen MR, Frame DJ, Huntingford C, Jones CD, Lowe JA, Meinshausen M, Meinshausen N (2009) Warming caused by cumulative carbon emissions towards the trillionth tonne. Nature, 458, 1163–6.

Frame DJ, Booth BBB, Kettleborough JA, Stainforth DA, Gregory JM, Collins M, Allen MR (2005) Constraining climate forecasts: The role of prior assumptions. Geophys. Res. Lett., 32, L09702, doi:10.1029/2004GL022241.

Harris, G.R., D.M.H. Sexton, B.B.B. Booth, M. Collins, and J.M. Murphy, 2013. Probabilistic projections of transient climate change. Clim. Dynam., doi:10.1007/s00382–012–1647-y.

Lewis N (2014) Objective Inference for Climate Parameters: Bayesian, Transformation-of-Variables, and Profile Likelihood Approaches. J. Climate, 27, 7270-7284.

Masters T (2014) Observational estimate of climate sensitivity from changes in the rate of ocean heat uptake and comparison to CMIP5 models. Clim Dynam 42:2173-2181 DOI 101007/s00382-013-1770-4

Sexton, D.M. H., J.M. Murphy, M. Collins, and M.J. Webb, 2012. Multivariate probabilistic rojections using imperfect climate models part I: outline of methodology. Clim. Dynam., 38: 2513–2542.

Stevens, B. Rethinking the lower bound on aerosol radiative forcing. In press, J.Clim (2015) doi: http://dx.doi.org/10.1175/JCLI-D-14-00656.1

157 Comments

Great article, though I’d suggest dropping the reference to Fraser’s anti-Bayesian rant. He seems to be arguing that credible intervals aren’t confidence intervals, apparently advocating Fisher’s fiducial statistics. Seriously? Fisher’s one failure?

Drop the Fraser reference paragraph: strengthen and focus your argument.

Lindley proved decades ago that credible intervals can’t in general be confidence intervals. The one dimensional location parameter or transformation of a location parameter case is an exception. Figure 7 in Fraser’s paper that I cited gives a simple illustration in a 2D case of CrIs not being able to match CIs.

I don’t see that Fraser is advocating Fisher’s fiducial statistics (which IIRC requires finding a pivot) as such, although he does deal with confidence distributions, introduced by Fisher. Confidence distributions are coming back into use in quite a big way now.

Don Fraser is certainly more forceful in his critiques of standard Bayesian theory than most statisticians, and not afraid to call a spade a spade. At his age one is allowed to rant a bit! As you may be aware, Don Fraser and his wife Nancy Reid have also done much work in the Bayesian field, developing sophisticated noninformative priors with good probability matching properties.

Yes, it’s well-known that confidence intervals are not credible intervals… except when confidence intervals are plotted on graphs as if they were credible intervals. (Where 100% of non-statisticians and some large proportion of statisticians interpret them as if they were credible intervals.) My question isn’t how he could think they’re different, but why he’s so furious about the distinction that credible intervals are not confidence intervals. (As opposed to the usual confusion that confidence intervals are credible intervals.)

Perhaps he’s arguing that credible intervals aren’t actually credible intervals because priors often aren’t actually prior probabilities. OK, I can understand that. But that doesn’t seem to be his point. If you insist on linking to his paper — which distracts from your main, well-taken points — you might want to link to the discussion replies to his paper:

[1] T. Zhang, “Discussion of ‘Is Bayes Posterior just Quick and Dirty Confidence?’ by D. A. S. Fraser,” Statist. Sci., vol. 26, no. 3, pp. 326–328, Aug. 2011.

[2] Kesar Singh and Minge Xie, “Discussion of ‘Is Bayes Posterior just Quick and Dirty Confidence?’ by D. A. S. Fraser,” Statist. Sci., vol. 26, no. 3, pp. 319-321, Aug. 2011.

[3] Christian P. Robert, “Discussion of ‘Is Bayes Posterior just Quick and Dirty Confidence?’ by D. A. S. Fraser,” Statist. Sci., vol. 26, no. 3, pp. 317-318, Aug. 2011.

It’s Fraser himself who brings up the comparison to Fisher’s fiducial mistake: “.. the function p(θ) can be viewed as a distribution of confidence, as introduced by Fisher (1930) but originally called fiducial…”, as if it were the basis for his argument rather than a failed branch in its lineage.

Again, we’re getting distracted. As far as I can tell, your argument does not depend on a foundational flaw in Bayesian statistics but rather in a naive use of it — in particular priors. Is this not the case?

I agree, we should not get distracted. As you say, my argument does not depend on any foundational flaw in Bayesian theory.

My concern is that there seems to be a misconception amongst climate scientists that priors always have a probability interpretation. So when a prior that varies with 1/ECS^2 is used, it will be perceived as ruling out high ECS values a priori, even if it is in fact a completely noninformative prior. I want readers to realise that this way of thinking is completely wrong.

Don Fraser gives the clearest justification I have seen for noninformative priors having no probabilistic interpretation. Whilst Jose Bernado states that noninformative reference priors have no probabilistic interpretation, he does not give a justification for this statement.

If you wish to give URLs where PDFs of the discussion replies to Don Fraser’s paper can be found (including the supportive reply by Larry Wasserman, and Fraser’s rejoinder), I will attempt to make them into links, although I think that would really be a distraction from the main thrust of my post.

It may have come close to a distraction but that and the previous paragraph shed a lot of light.

Thanks, Nic, for the Fraser reference. It looks like I’ll learn a lot from it. Thanks also to Wayne2 for the additional references. Ditto for them.

Whatever the merits of these arguments, you certainly can’t beat the title of Wasserman’s comment, “Frasian Inference”, his term for Frasier’s advocacy of Bayesian procedures with frequentist validity!

Fraser’s course was the first statistics course that I ever took. We worked off Fraser’s preprints as a text. In retrospect, it was a very strange way to approach statistics as most of us knew math but had no prior knowledge of “ordinary” statistics and no knowledge of Bayes-frequentist disputes.

An interview from 2004 with Donald A.S. Fraser here: http://projecteuclid.org/download/pdfview_1/euclid.ss/1105714168

Steve, were you and your comrades at all challenged by having no independent recourse in the text for material difficult to grasp in the lectures? I was often adrift in a physics course lectured by two guys who were composing a textbook on the subject If I didn’t “get” it in class, what was in the loose-leaf work-in-progress wasn’t going to help.

Reblogged this on Centinel2012.

I second the comments regarding deleting the reference to Fraser. I looked at that reference quickly and I was unable to discern if his criticism was:

(1) with bad priors you get bad posteriors;

(2) if you believe your prior, you can get different results than frequentists do; or

(3) something else.

However, all of us are Bayesians—-some of us admit it but others do not.

See https://xkcd.com/1132/ or

Feynman Lectures on Physics Vol. I, Section 6-3.

It is probably better to realize that the probability concept is in a sense subjective, that it is always based on uncertain knowledge, and that its quantitative evaluation is subject to change as we obtain more information.

Observer

Nic, I also applaud not only your work but the tables, especially the one supplying your opined criticism of the prior studies. That’s a great reference for those of us catching up. I find your trend of TCR/ECS estimations in IPCC reports informative on it’s own. I hope it continues. 🙂

I understand that the PDO/AMO trough in the 1970s could have falsely attributed cooling to aerosols peaking then. But does your ECS/TCR studies weigh for the possibility of PDO/AMO distortion of the temperature record in the 1990s? It did not occur to me until Part II that you only refer the AMO, not the PDO. Why?

Ron, Thanks. The PDO is a decadal cycle and as such is not a major concern for sensitivity estimation. The IPO appears correlated with the SOI/ENSO and it is unclear that it involves a physical mechanism with a cyclical basis, apart from the extent to which it is influenced by the AMO.

Areas influenced by the AMO overlap to a much greater extent with where the increase in aerosol forcing and its flattening off after the 1970s was concentrated than do areas influenced by the PDO/IPO.

Most of the ECS/TCR studies that I consider good use an analysis period covering something like 1860 to some point in the first decade or so of the current century. That pretty much spans two full AMO cycles, so (leving aside the aerosol issue) so the AMO should cause relatively little bias in estimation.

NicL,

How sensitive are these numbers to updated forcing change estimates (e.g. Schmidt et al 2014) and differences in observational datasets used (e.g. BEST/CW2014)?

Also – how do you compensate for the time-lag issue brought up by Ricke et al (2014) with respect to emission versus response?

http://iopscience.iop.org/1748-9326/9/12/124002/article

Final question – when you discuss the AMO – what definition are you using because they differ significantly?

Robert W

Not particularly sensitive. There are many adjustments that one might make to forcing change and observational temperture datasets, based on published research, going in both directions for each. However, I think one should be cautious about doing so. I also think there is a risk that people from modelling centres may tend to look more for adjustments that would bring observationally-based ECS and TCR estimates closer to their, higher, model-based values.

The time-lag between CO2 emission and response brought up by Ricke et al (2014) is obvious and well-known. But surely you can see that it is totally irrelevant when one is estimating ECS and TCR from concentrations not emissions, as in case of all the observationally-based ECS and TCR studies I discuss?

I generally use the NOAA AMO index (Enfield DB, Mestas-Nunez AM, Trimble PJ (2001) The Atlantic multidecadal oscillation and its relationship to rainfall and river flows in the continental US. Geophys Res Lett 28:2077–2080; see http://www.esrl.noaa.gov/psd/data/timeseries/AMO/). It matches closely the Internal Multidecadal Pattern found in Delsole et al (2011) using a quite different method.

Robert,

Given assumptions made by your good self in kriging parts of the Arctic, do you consider that you outcome is good enough to be used in this sensitivity work?

Yes, I am well aware of your work on verification, but I come from a background of geostatistics for ore estimation where one can drill another hole to get more, hopefully better data. There is a financial advantage from getting it right.

The overall outcome is often quite sensitive to the placement in 3D of the boundary between ore and uneconomic mineralisation.

This often puts emphasis on volumes that are at the interpolation/extrapolation crossover and sometimes it involves regard for differnt rock types setting the boundary.

As I read it, C&W does more extrapolation than we would have done; and you seem to pay less attention to boundaries such as sea ice/land ice. So my question is really about whether you consider that your assumptions are robust enough to be used in this sensitivity work, given that you are using guessed values rather than measured, at the extremes.

Geoff

Nic, I meant PMO but 150-yr study spans eliminate bias for that too, thanks.

Has there been any suggestions that TCR is temperature dependent? It seems it would be a nice thing to find out. Is there any thought to the ability to reveal through statistical study, for example, if vapor/cloud-based feedbacks follow a climate temperature curve?

I’m not aware of much evidence that TCR or ECS is materially temperature dependent, within a span from a degree or two colder than now up to four or five degrees warmer, although this is based mainly on model simulations.

Ron and Nic: When thinking about whether ECS should be approximately linear, I find it easier to consider its reciprocal, the climate feedback parameter. After GMST has warmed 1 degC, how much more radiation (OLR and reflected SWR) will be leaving the planet (in W/m2). If the answer is 3.2 W/m2 (net feedback is zero), then ECS (for 2XCO2) is 1.2 degC. If the answer is 1.6 W/m2, then ECS is 2.4 degC. If GMST warms another degC, I expect approximately the same change in OLR and SWR.

This approach to ECS circumvents the details of ocean heat uptake and centuries-long approach to equilibrium. As I see it, OLR and reflected SWR depend only on GMST, not whether ocean heat uptake is large or has ceased. Or, at least this appears to be correct for all fast feedbacks. Ice-albedo feedback from ice cap melting won’t have reached equilibrium.

Things get trickier when you consider how many different ways one can raise GMST by 1 degC: a 1 degC rise everywhere, with polar amplification, more warming in one hemisphere, etc. These possibilities won’t produce exactly the same change in radiation and some are arguing that their isn’t a unique value for ECS for this reason. But there is only one scenario that represents an equilibrium rise of 1 degC in GMST.

Frank, I agree that it is often better to consider the reciprocal of ECS, which is more linearly related to the least well-constrained observable variables.

But there is a big problem with directly estimating the response of TOA radiation to GMST change: it is very difficult to distinguish over the timescales for which data is available between random cloud fluctuations affecting outgoing radiation and thereby causing a change in GMST, and changes in GMST that cause a change in outgoing radiation. Only the second type of effect represents climate feedback. Lindzen, Choi and their colleagues have tried to overcome this problem by using lagged regression, but it is unclear that they have yet succeeded outside the tropics or for SW radiation. I think AR5 was right to express caution about this and other approaches to estimating ECS from short timescale changes.

Ice cap melting is excluded from the definition of ECS, BTW, although sea ice melting and changes in snow cover are included.

Is there anything new in here arising beyond the discussion/debate that occurred a little while back on the ClimateDialogue.org website?

Click to access Climatedialogue.org-extended-summary-climate-sensitivity.pdf

I remember it getting a little impasse-y, and/or not as cut-and-dried as the perspective here (once other experts were in the room discussing).

Yes, there is new material here. But as I didn’t refer to the ClimateDialogue discussions when formulating my Ringberg talk, I couldn’t say how much was covered there.

It would be informative to see the effect of Stevens’ forcing estimates on tcr/ecs on slide 17/18 as a pre-Stevens (ar5) and post-Stevens curve.

The basis period doesn’t seem to change much, so you could thin the number of pdfs down.

(I appreciate that the talk has already been given, but when I got to 17/18 I was scrolling back up to try to see pre-Stevens pdfs to compare.)

Small point: how did you manage to get through all this in the time allowed? There’s an awful lot of material here.

I agree that might have been better. But the comparison of median and 95% estimates provided below the tcr/ecs slides gives most of the important information.

I talked fast, omitted some material and ran slightly out of time!

Outstanding presentation by Judith Curry at US senate hearings absolutely outstanding http://science.house.gov/hearing/full-committee-hearing-president-s-un-climate-pledge-scientifically-justified-or-new-tax

Nic

You say that “But surely you can see that it is totally irrelevant when one is estimating ECS and TCR from concentrations not emissions, as in case of all the observationally-based ECS and TCR studies I discuss”

I understand that this may be the case for TCR but given the inertia in the oceans there is a pretty good phys/chem justification for some lag in the ECS when considering concentration changes. Im not up to speed with your maths and stats, but simple chemistry says there should be a lag component. Am I missing something that your maths already accounts for?

Terry

All studies that estimate ECS from observed warming allow for the lag in temperature response, which is reflected in ocean heat uptake. This is most simply seen from the energy budget equation in slide 5 (Part 1): ECS = F_2xCO2 x ΔT / (ΔF – ΔQ). Here, ΔQ is heat uptake (very largely by the ocean), which is high initially relative to the imposed forcing ΔF (resulting, e.g., from a change in GHG concentrations). This compensates, when applying the formula, for ΔT being low initially.

Nic, If I am understanding ‘subjective Bayesian’ approach it is to mean one must re-evaluate a hypothesis each time a new evidence (analyzed data set) is added to the knowledge base. But in the case of climate science there are so many acting variables hypotheses can be multiple, elastic and even contradictory. Also, with a ‘subjective Bayesian’ approach in which any selected prior can be theoretically recovered by theoretical manipulation of any one of a potpourri of variables, one runs into the Karl Popper dilemma of infallibility. Should there not be a test for validity of a prior by having an established predicted data result criteria set for failure of hypothesis? Would this not automatically require the priors to be weakened and thus give more power to the evidence? For example, if one claims ECS can be above 3.5 should not that require a pre-set establishment of predictions of temperature probability at a given time series, compensated by known frequented events, volcanic eruptions, ENSO shifts, etc…?

Couldn’t find the last Unthreaded post so FYI on Paleo archiving: https://www.authorea.com/users/17200/articles/19163/_show_article

Ron, both subjective and objective Bayesian approaches in principle permit, but do not require, that a new dataset be analysed in the light of all existing quantitative evidence. As you say, doing so would be impracticable in the case of climate science, at least. And it is normal to present the results of a scientific experiment/investigation on a stand-alone basis. Multiple experimental results (analyzed data sets) and then be combined in a meta-analysis.

In an objective Bayesian approach, the general idea is to select a prior that “lets the data speak”, influencing the result as little as possible, rather than conveying any particular view as to what value of the unknown parameter involved is most likely to have. However, one might reasonably truncate such a prior to rule out negative or exceedingly high (say > 10 C) values for ECS, on the basis that the unstable climate system that they imply is not consistent with the history of the planet.

Nic, thanks for your posts. I saw that James Annan commented on the recent Ringberg conference (http://julesandjames.blogspot.com/2015/04/blueskiesresearchorguk-climate.html)

“It’s hard to see how the climate can have changed as we see in the past if the sensitivity to radiative forcing (in its most general sense) was either negligibly low or extremely high.”

I saw you were at the conference as well. Do you agree with him: how low an ECS is “consistent with the history of the planet”?

I agree with James, although we may differ somewhat on what numbers we put forward.

In a paper led by James Annan’s partner Julia Hargreaves, of which he was 2nd author (Hargreaves et al 2012: Can the Last Glacial Maximum constrain climate sensitivity? GRL) an objective regression-based best estimate for ECS of 2.0 C, with a 5-95% range of 0.8-3.6 C, was obtained (this is the estimate that is adjusted for dust). I have no problem with that distribution – ECS estimates based on instrumental-period warming, typically circa 1.6 C, are in its high likelihood region.

I think the true uncertainty of the Hargreaves et al (2012) ECS estimate is probably greater than the 5-95% range implies, however. So, based on that study alone, the 0.8-3.6 C range is probably better regarded as no more than a ‘likely’ (17-83%) range, at best. On the other hand, it seems quite a stretch to make the observed instrumental-period warming consistent with an ECS of below 0.8 C or above 3.6 C, based on which additional evidence it is reasonable IMO to regard that range as 5% and 95% uncertainty bounds.

Nic, what changes, if any, in accepted protocol would you like to see established in the field? For example, I recently found the late Michael Crichton had the same view as myself that any field study undertaken in a politically volatile environment should be done as a tandem exercise of two teams carrying opposing hypotheses reporting their results publicly and simultaneously upon completion. This natural competition would raise the confidence bars not only on the data but on the analysis. The up-front investment cost would be double but the return would pay 10-fold dividends. As it stands now one tribe can safely ignore the other’s ideas but not their votes. A protocol that demands competitive cooperation raises the stock of all.

Ron, I can see merit in Michael Crichton’s and your suggestion, but this is not really my field of expertise.

I enjoy these posts on TCR and ECS, Nic, and particularly ones utilizing Bayesian inferences. I have noted before I am attempting to learn and use it – or at least attempting to find a justification for using it- in my own analyses. It would appear to me that your cases against using uniform or subjective priors in this particular analysis with sparse and uncertain data have been made and yet in the AR5 both of these approaches were used. I know you were an active participant in the AR5 proceedings and I was wondering if you have any comments on how this was rationalized. If one were to question the motivations of the IPCC (and I have and continue to do so to the extent that I judge the IPCC to be acting as a lawyer would in presenting its case in an adversarial court of law, i.e. using evidence that support its case only) uniform and subjective priors will extend the upper probability limits for TCR and ECS. Those upper probability limits are more critical to the argument for drastic and immediate government mitigation of AGW than are the median values – and a point I am certain not lost on those in control at the IPCC. I would guess that with your background and natural curiosity about matters of physics and mathematics you would need no more motivation for your involvement with the IPCC and particularly in the area of TCR and ECS. You have appeared to me to have gone right to root of the issue that has the greatest potential for influencing “informed” policy in tackling the upper probability limits and it is there I am wondering if you were motivated by reasons in addition to scientific curiosity.

Also in the same context there was a comment that I excerpted from the link below that was originally linked above in a Salamano post.

“According to Pueyo the point is that using a reference prior as if it were non-informative “can cause serious trouble unless the amount of data makes the result quite insensitive to the prior, which is rarely the case with climate sensitivity.” and the method of Nic Lewis “results into a vast underestimation of climate sensitivity.”

I believe Pueyo was described as an expert in Bayesian inference, but his last comment would require some extensive knowledge in this area of climate science. Any comments on this exchange.

In addition John Fasullo made some comments in this discussion where it appeared to me he was opposed to ruling out climate models based on lack of conformity to other model and observed results. He also talks about internal variability of climate models preventing a narrowing of the PDF on TCR and ECS. There are large differences in the internal variability for some climate models and that in my mind is sufficient reason to seriously think about developing a criteria for using or not using a model in an ensemble distribution. I think that maintaining a large range of model outcomes, in effect, is having your cake and eating it too, since the large range makes it more difficult to make statements about the observed results falling out of the range or statistically significant probability range of the models, while at the same time allowing a reasonable probability for the higher end of the models’ outputs.

Click to access Climatedialogue.org-extended-summary-climate-sensitivity.pdf

Kenneth –

If one were to consider only GCMs in Bjorn Stevens’ ECS range of 2.0 to 3.5 K (inclusive), it would disqualify 9 of the 23 models whose ECSs are listed in AR5 WG1 Table 9.5. The remaining 14 have an average ECS of 2.7 K (ranging from 2.1 to 3.5), as compared to 3.2 for the entire set of 23, ranging up to 4.7.

Kenneth, Thanks for your comment. Just to clarify, my role in AR5 was as one of the many reviewers, commenting principally on Chapter 10. Frankly, I don’t think any of the climate scientists involved in AR5 has an adequate understanding of Bayesian inference, at least so far as ECS estimation is concerned. This is a criticism of the way climate science and statistical methods have evolved rather than of any particular AR5 author.

I turned my attention to climate sensitivity about four years ago becuase I thought it to be the most important uncertain parameter in climate science, and I soon realised that the use of subjective Bayesian methods with uniform priors in AR4 was completely inapproriate. In my investigations of what approach should be used, I was motivated by pure mathematical/statistical curiosity as well as scientific curiosity and a concern that the risks of climate sensitivity being high were being seriously overstated.

I totally disagree with Pueyo’s claims about reference priors. Read Jose Bernardo’s papers on reference priors, or chapter 5 of Bernardo and Smiths book Bayesian Theory, and judge for yourself. Nor do I agree with Pueyo’s paper about objective priors for ECS.

I’m wary about relying on climate model simulations. But their long preindustrial control runs do provide useful estimates of natural internal variability of the climate system. The ECS and TCR studies that I have been involved with all allow for such variability, based on AOGCM control run data, as do other studies, but it generally does not seem to be the most serious source of uncertainty present.

I would want something more comprehensive including model to model and model to observed comparisons like a ks.test, ARMA model of red noise and standard deviation of white noise. Might be a good time for me to attempt to put a laypersons criteria together for selecting models. I would suppose someone will remind me that a model can get the deterministic part right and the stochastic part wrong. An alternative might be to group the models and then look at ECS and TCR values.

Kenneth, some time ago I tried this approach: http://notrickszone.com/2015/03/05/solar-cycle-weakening-and-german-analysis-shows-climate-models-do-overestimate-co2-forcing/#sthash.B1ipUKjV.Wqps5ViB.dpbs ( see the 2nd part of the post). I ruled out some models with two criteria: 1.: The failures in replicating the trens of observations to 2014; 2. the stability of the model-outputs to 2005 vs. 2014. The resulting TCR of the “good” models was 1.6, for comparison it’s 2.0 of the full ensemble.

Frank, while on a quick look I see that your criteria covers a large range of frequencies, it does use linear trends which I judge to be limiting. Year over year noise is not included nor is auto correlation. If you plot the CMIP5 models temperature series these differences become apparent.

If my criteria resulted TCR values or trends for models higher than expected for the observed that would be informing also.

Kenneth, I’m not sure if autocorrelation is an important thing if one uses 12 month averages just like me, see D/W test ( http://en.wikipedia.org/wiki/Durbin–Watson_statistic ). The “d” is well above one for the trends in this case, so it would surprise me if the autocorrelation had a too big influence on the trends.

Frank, my point on AC of trend residuals – linear or otherwise – was whether a different ARMA model was a better fit for individual models and observed series.

I am looking for ways of distinguishing between these series – and finding a difference with statistical significance.

For my money I’d start by looking at the absolute temperatures they each run at.

Please put me down in the objective Bayesian camp…..let the data do the talking.

Ah, but you see, if the data is mute then the scientist can do the talking.

Objectivity is often just slightly less shameless subjectivity.

Note the recent Planck paper minimizing aerosol uncertainty, pinning it at the minimum, -1.0W/m2…

Nic writes in the post

As I understand that sentence, that’s not correct in most cases, and specifically that’s not correct for the determination of ECS from observations. That cannot be correct, because there are no data-based likelihoods to convert. There’s only one history, and all likelihoods linked to that are model based. Nothing resembling even remotely a frequentist analysis can be even started, when we have only one history rather than an ensemble of identically repeated experiments that lead to a large number of results and allows for counting frequencies.

What the noninformative prior that Nic is using represents is not data based or determined to the least by the data. It’s totally determined by choices made in selecting what data to collect, how to represent the data, the climate model used as a tool in the analysis, and by the way Jeffreys’ rules are applied in fixing the prior. Nothing in the above depends on the data (the climate model used has, however, been influenced by some other observations). Many subjective choices done by scientists involved are included in the process. Nothing is fixed by the system being studied, everything is fixed by subjective choices of people involved.

What Nic is assuming is that the observed temperatures have flat prior distributions. He makes another similar assumption about the ocean flux. These are subjective assumptions about the prior. These assumptions are not dictated or supported by any empirical observations, they are simply Nic’s personal subjective choices. It’s possible that he didn’t know initially where these choices lead. In that respect they differ from typical informative priors, whose consequences are known, when they are chosen, but that does not make Nic’s choices any less subjective or any more objective or any more correct than the expert priors used by others.

What’s a good expert prior is an important question. James Annan has discussed that both in scientific articles and in blog posts a couple of years ago. His preferred choices lead to rather similar final outcome as Nic’s choice. My own favorites are not very different from that, but on detailed level neither Annan’s nor mine subjective preferences are identical with Nic’s choice. It’s unknown, how much the final results would differ from those of Nic. The conclusions are certainly closer to those of Nic than those based a uniform prior in ECS, but that’s about as much I can say about that.

Inferring the PDF or just confidence limits for ECS from a single history is possible only based on the Bayesian approach, and the Bayesian approach is always built on a subjective choice of the prior. Deciding to use Jeffreys’ prior in a specific setting is an equally subjective choice as any of the others, and affects the outcome as much as any other choice that can be considered reasonable at all.

Pekka,

Thank you for your comment. We have some differences in our views on these matters. Probability and statistics has been riven by foundational disputes from early on! It is interesting, however, that your preferred subjective priors are, in the cases involved, not hugely different from what I arrive at using an objective Bayesian approach.

I disagree that there are no “data-based likelihoods” for estimating ECS from observations, but I may be using the term more loosely than you. I accept that the selection of data to use and the uncertainty distributions to attach to the data involves subjectivity. However, I think you will find a considerable commonality or similarity of data used for estimating ECS from observed warming over the instrumental period, so the influence of different choices is typically not that great. And although the uncertainty estimates involve models (to estimate internal variability, for instance), choice of different models typically doesn’t make much difference to the shape of the ECS estimate nor change its median. The width of the ECS estimate distribution is typically dominated by assumptions regarding uncertainty in aerosol forcing.

You say “What Nic is assuming is that the observed temperatures have flat prior distributions.” Effectively, yes, but only because a uniform prior is totally noninformative when errors and other uncertainties in observed temperatures are symmetrical and independent of temperature over the range involved. These are not subjective assumptions by me – they reflect error estimates by the data set providers and the statistical characteristics of internal variability in the AOGCM control runs used to provide estimates of internal variability.

The choice of physical model, to link the variables to which the data used relates with values for ECS and any other unknown parameters being estimated, and the model error assumptions, are also subjective. However, judging from the similarity (when not biased by choice of prior or other faults) of ECS estimates based on similar data but different physical models, extending from single equation energy-budget models to AOGCMs, this is not a critical issue.

Noninformative priors are computed from the likelihood function, and reflect relationships between the data and the model parameters, the assumed data uncertainty distributions and the data values. It is in my view valid to regard them as weight functions, however much that may offend subjective Bayesian purists.

Yes, there is only one history, but that doesn’t mean one has to use a conventional Bayesian approach. One can make multiple draws from the assumed uncertainty distributions to obtain hypothetical realistations if one wishes. That is how Andronova and Schlesinger (2001) derived their PDF for ECS. Or one can use the frequentist profile likelihood method, or more sophisticated developments of it, which provides approximate confidence intervals directly from the likelihood function.

So, yes, of course there is subjectivity in arriving at likelihood function, but they are nevertheless data-based. And to my mind it certainly makes sense to select the prior distribution so as to achieve objective inference (something approximately probability matching) based on the likelihood function used. Why add unnecessary additional subjectivity and likely bias?

Nic,

In my view you mix two issues that are logically independent, while have both a connection to the empirical work.

– The methods of collecting and presenting empirical data.

– The data itself.

The data itself is what tells about the reality.

Your choice of the prior is not at all affected by the data itself, it’s derived from the methods of collecting and presenting data. It’s true that the methods are affected by physical realities, but these realities are realities of doing measurements, not realities of the Earth system. There aren’t any generally valid reasons to think that technicalities of doing measurements form a valid basis for choosing the prior.

It’s, however, true that some priors are extreme in a way that makes them contradict with many different arguments. That’s the case here. You have one approach, James Annan had another, and I have a somewhat different again. We all do, however, deviate from the uniform in ECS in a similar way, because uniform in ECS is rather extreme in it’s own way.

In spite of that agreement all our arguments are purely subjective, supported by some rationale, but subjective anyway. It’s not possible to conclude that those who disagree have made an error or that they are objectively wrong.

Pekka,:

Actually the data tell us absolutely nothing by themselves—they are just numbers in the absence of the context provided by a theoretical framework.

I think this is a pointless distinction. The Universe imposes limitations on how we can collect data for a particular system. Generally it is impossible to completely separate the measurement space from the underlying system we are trying to study.

Whether you do this with statistically based methods or not, regardless you always have to include the measurement method in the model of what you are measuring (and not just what you’d ideally like to have been measuring).

Pekka,

In general, noninformative priors are data-dependent, although many of them, such as Jeffreys’ prior, are not.

Actually the data tell us absolutely nothing by themselves—they are just numbers in the absence of the context provided by a theoretical framework

#####

+100

Now this is interesting. It has always been my assumption that one draws inferences from the data and thus devises theoretical constructs. But now, at this late stage, I am told that this is backwards, that data is meaningless unless it fits some previously devised theory. Or did I misunderstand?

We had a while ago another case, where the idea of non-informative priors was discussed, that related to radiocarbon dating. That was a different case in the way that in that case the “non-informative” prior was very strongly informative in a way that resulted in highly nonsensical results. In the present case, the prior of Nic leads to results that are not highly nonsensical, but the basic problem is still the same.

In both cases the measurements have a variable separating power in the space of model parameters (real age in the radiocarbon case, ECS, ocean diffusivity and aerosols in this case). Nic’s claim is essentially that those regions in the space of model parameters, where the empirical method has little separating power should be given a small weight proportional to the separating power.

In many cases this is justified, but this is not a law that’s true generally; it was not true in the radiocarbon case. It’s necessary to look carefully at the reasons for the low separating power. If it’s due to limitations of the empirical method, it has no value as a guideline in choosing the prior, but when it’s due to the nature of the set of model parameters to be determined, then the low separating power is at least a hint that should be taken into account in choosing the prior.

In the case of radiocarbon dating it was absolutely obvious that the lacking power of the method does not indicate that certain real ages are much less common than both younger and older ages. In this case one part of the effect is related to the fact that a small change in feedback strength has a very large influence for the ECS in the far tail of the ECS PDF. In a model, where feedback strength is considered in some sense a more primary physical parameter than ECS, the prior for the ECS distribution has an rapidly falling tail (inverse square). The relationship between observed temperatures and feedback strength is much more uniform than the relationship between ECS and either one of the others. For this reason my preferences that are based on the idea that feedback strength is more primary than ECS leads to qualitatively similar conclusions than the approach of Nic.

The basic conclusion is that the approach of Nic may give hints about a good choice of prior, but those hints may turn out to totally wrong. Only a more detailed study can tell, what is the more likely conclusion.

Ultimately we are left with a subjective choice, there simply are no objectively favored priors in thew absence of a verified and accepted physical model.

For the record, I disagree with Pekka about the Radiocarbon dating case. The underlying reason why some people, including Pekka, objected to the noninformative prior that I used (Jeffreys’ prior) was that they considered that there was genuine prior information that it did not reflect. Since the study I was critiquing stated that the method used did NOT employ any such prior information, it was appropriate that I did not do so either. Moreover, the prior I used gave results that agreed with the non-Bayesian likelihood ratio methods and perfectly matched frequentist confidence intervals, unlike the prior favoured by Pekka.

Nic,

What makes you think that the way you applied frequentist methods for that case is valid.

You may draw wrong conclusions from misapplying either one of the approaches in that case. That’s a case where common sense works well and proves very clearly thatg your conclusions were not correct even when you agreed that the full PDF cannot be determined but claimed that confidence intervals or credibility intervals can. In reality all these were wrong in a fully obvious way.

In that case common sense is more powerful than erroneously applied statistical methods.

The case was discussed so thoroughly at that time that anyone really interested should check the earlier thread for details.

The problems involved in the term ‘frequentist

statistics’ seem neatly illustrated by this discussion. It was a surprise to me that statistics still uses the term. Taken literally, it precludes most major areas of non-Bayesian applied statistics, viz. econometrics, epidemiology and much besides. There is a marked lack of repeated, exchangeable experiments, let alone attained limits! As too with climate statistics.

Frequentist probability as a universal concept was pretty thoroughly debunked 60+ years ago. Retaining the term seems a recipe for confusion. This is not to say it may not make sense in certain areas of physics where there actually are frequencies which exhibit some long-run convergence. It is also not meant to suggest we should all be subjective Bayesians. There are several ways around frequentist, even if they are non-constructive and semantic. But this is a bit angels and pinheads.

I took a course in Bayesian Statistics from Dennis Lindley many years ago. He tried to convince us to switch to the other side arguing with passion that frequentist probability, and frequentist statistics based on it, are complete rubbish: incoherent, logically flawed, and just the wrong thing to do. But if we still were not convinced, not to worry, because Bayesian and frequentist analyses produce almost the same answers in large samples anyway. I ended up really liking Dr. Lindley but remained a frequentist.

Great discussion going here. From it I would imply that the strict definition of the Bayesian prior defined by some here is that it has to be subjective. I suppose then that the strict Bayesian need only argue about the choice of that prior and how it may affect the posterior – unless we can also argue about the choice of data used. I am not inferring here that the frequentist approach does not have some of the same issues.

Carrick made the point that data is nothing without a theory to connect it to a model and here I see a connection to the choice of the prior for Bayesian inference. If the Bayesian prior is well supported by theory or at least reasonable conjecture then you can call it subjective or whatever and it should take less then comprehensive observed data to make a reasonable inference with the posterior result. With lots of observations the choice of a prior should become less important. The problem I see with many frequentist and Bayesian approaches in climate science is that those using the statistics can misapply or make claims for the results that are not warranted. For me the use of subjective expert priors is particularly troubling in applying Bayesian inference to climate science data.

Kenneth,

Although Carrick made his comment as a protest against something that I had written, I agree fully with Carrick, I had just formulated my thoughts imprecisely.

When frequentist approach is possible and without obvious problems, most people would choose such priors for the Bayesian approach that the results would be similar to the frequentist results. The main difference is that Bayesians think that some conclusions are possible also in cases where frequentist approach is not applicable at all. A genuine frequentist would conclude in those cases that there’s too little data for any conclusions. There are also cases, where the results differ significantly, but in these cases the reasons for that are quite obvious.

Here we are discussing a situation where the frequentist approach cannot be applied as all, because there is far too little data for that.

One way of looking at the prior is to consider it as a measure of the space formed by the parameters being considered. In this measure a range of possible parameter values has a volume that’s proportional to the prior probability that the true value is within this range. The fundamental problem is that mathematics cannot tell, what is the preferred measure in a space spanned by continuous parameters.

When we are looking at physical systems, physics can in some cases tell that. To take an example we may consider a single particle moving freely in space. In this case physics tells that the measure is proportional to the ordinary concept of volume multiplied by the volume calculated from components of linear momentum (or velocity). This rule for calculating the measure leads to the ordinary kinetic gas theory that has been found to be valid for nearly ideal gases. It’s, however, not totally obvious that the linear momentum is one of the natural variables rather than some power of it or something else.

When we move to more complex situations like ECS or the empirical observables used in determining ECS, it’s not to the least clear, what are the natural variables that can be used in a linear fashion in the analysis. It’s not obvious, because we do not have any comprehensive enough physical theory to tell that. Furthermore we have practically no empirical data that would help in deciding on that. The best that we have are some crude theoretical ideas related to our present understanding of the Earth system. One of these ideas is that the model based on the concept of feedbacks might be an applicable approximation and that the feedback strength might be a variable that could be assumed to have a rather uniform probability measure over the relevant range. That’s my preference, and I have told in an earlier comment, where that leads.

What is directly measurable is in many cases also a quantity that might be expected to have an uniform natural probability density, but there are innumerable exceptions to that (the case of radiocarbon dating is a good example).

When we are looking at physical systems and when we are doing empirical work on them, we do always have at least some limited understanding of relationships between variables. That means that we have some information as basis for choosing the priors. We do have information on the empirical methods and we do have information on the data handling. This information must be taken into account, when we are looking at a prior that can be considered uninformative on the physical parameters we are trying to determine.

If we know that very many real world situations lead to a similar outcome in the empirical data while a fraction of possible situations leads to significantly different empirical observations, it would be highly informative in a wrong way to take a prior that’s uniform in empirical values. That kind of erroneous assumption was behind the nonsensical results in the case of radiocarbon dating.

In this case we can tell that the choices of Nic led to a highly informative prior as shown in his slide 12a. That’s not uniformative, that’s highly informative for the determination of ECS. The same reason that I have discussed above is part of that, but we do not really understand what else is there. The fact that we understand only part of the mechanisms that lead to that highly informative outcome does not make the prior any less informative, it’s just informative in an unknown way. Deciding to use such a highly informative prior is a subjective choice that cannot be justified objectively.

I do certainly prefer informative priors whose basis is understood over informative priors whose basis is badly understood, and impossible to justify by known physical arguments. For this reason I dislike strongly Nic’s choice. It includes some strong potential distortions whose nature is unknown.

Pekka,

You write “In this case we can tell that the choices of Nic led to a highly informative prior as shown in his slide 12a. That’s not uniformative, that’s highly informative for the determination of ECS.”

You are mistaken. On the basis that the ‘optimal fingerprint’ based whitening preprocessing of the observational data is valid, that prior is noninformative for the estimation of the joint posterior PDF for ECS, sqrt(ocean effective vertical diffusivity) and aerosol forcing. And it is virtually noninformative for the estiamtion of a marginal posterior PDF for ECS.

I can say that because the whitening is designed to produce independent standard normal variables, for estiamtion of which uniform priors are known to be completely noninformative. Applying uniform priors to the joint likelihood function gives a joint posterior distribution for the true value of the whitened observables. The 3D PDF of which slide 12a shows a 2D marginal version is simply the Jacobian determinant for the dimensionally-reducing transformation of variables from whitened observables space to parameter space.

I arrive at an almost identical marginal distribution (in this case a confidence distribution) for ECS by using a standard frequentist profile likelihood method.

I do agree with you that concepts of measure are highly relevant here. Maybe you are unaware that this is a key concept underlying the Jeffreys’ prior.

Nic,

You pick your in a subjectice way your criteria to prove that you have a noninformative prior, but that’s a fully circular argument.

It’s a simple well known fact that there are no objectively noninformative priors for problems based on continuous variables. That has been accepted also by many well known experts who favor the use of rule-based priors like Jeffreys’ prior as a practical solution, when it’s more important to remove intentional bias that to get results that are most likely to be correct.

Pekka,

What you say is an overgeneralisation. Where the continuous variable parameter involved is a location parameter, a uniform prior is completely noninformative: that is the exception to the general rule, and it extends to transformations of a location parameter.

Agreement between the Bayesian posterior PDFs I obtain and confidence distrbutions obtained using a frequentist profile likelihood method doesn’t strike me as a subjective criteria.

Nic,

It’s not. Only in systems that are controlled by a well verified theory can we tell, what’s a “location parameter” in the sense that a uniform distribution is objectively correct, and even then only, when no additional information changes that. in practice that’s extremely seldom true.

Where is your agreement between a frequentist profile that you compare with results obtained from some particular prior?

None of the cases that have been discussed here provides any frequentist profile to compare with. The data that could show that is missing and it’s impossible to collect such data in these cases.

Thank you to Nic, Pekka and Ken for this discussion.

Nic:

Is this a sufficient reason not to use an expert-elicited prior for this particular case?

As I understand it, the prior distribution should, ideally, be the best representation of our knowledge at the time of the introduction of the new data. We now have good reason to believe that we have a reasonable idea of the likely range within which modern transient & effective climate sensitivity lie, so it’s not clear to me why a non-informative prior is required. (This might just be due to my limited grasp of the topic).

Per Mosher, I’d prefer to see the results for a set of competing priors, and then perhaps a discussion of which is best.

oneuniverse

It is normal to report estimates of unknown variables derived from a scientific experiment on the basis of knowledge gained from that experiment alone. Information gained from different experiments can then be pooled through metastudies or, qualitatively, in review studies (of which IPCC assessment reports are an example).

Thanks Nic, I had mistakenly thought that you were using the Jeffreys prior to immediately derive a posterior which represented our best estimate of ECS in general.

By the way, is there an established mathematical framework for gathering into a single Bayesian assessment the various observational studies of what is by necessity a single climate experiment ? It seems like a difficult problem.

Oneuniverse,

To the extent various empirical studies are independent they can be considered successively. First study modifies the original prior to first posterior distribution. Then this is used as prior for the next analysis, etc. The steps commute, i.e., the final outcome does not depend on the order the studies are considered.

In practice the studies are often not independent. In that case it’s difficult to combine them correctly as the common part tends to be counted many times, which distorts the outcome.

oneuniverse,

There have been various proposals for doing so, e.g. the Bayesian Melding Approach. I have proposed an objective Bayesian approach that derives a noninformative prior for inference from all the available observational evidence, provided it is independent. That method is commutative, and for ECS studies at least gives results that agree with frequentist profile likelihood methods. I don’t recommend the standard subjective Bayesian approach that Pekka mentions, unless the likelihood functions for the two studies have similar forms.

As Pekka says, correctly combining evidence from non-independent studies is difficult. So it is probably OK to combine the results of an insrumental period study with a paleocliamte study (maybe more than one, if from different past periods), but not results from two studies dependent on observed warming during the isntrumental period.

Nic

You are not deriving anything about your prior from observational evidence.

You are deriving everything that affects the prior from the way the measurements have been set up and analyzed, i.e. from the methodological choices, not from the results that have been obtained.

It’s actually good that the data does not affect the prior, because it were not a prior if it were affected by data. There were also a major risk of double-counting in that case.

“As Pekka says, correctly combining evidence from non-independent studies is difficult. So it is probably OK to combine the results of an insrumental period study with a paleocliamte study (maybe more than one, if from different past periods), but not results from two studies dependent on observed warming during the isntrumental period.”

How would independence of instrumental and paleoclimate studies be reconciled with the selection criteria for the proxies for paleoclimate temperature reconstructions that invariably is based (directly or indirectly) on how well the response correlates with the instrumental record? A method whereby the proxy selection criteria is developed using reasonable physical bases prior to selection and then using all the proxy data would provide a more independent basis – or could also show that the proxy response did not have a reasonable temperature signal.

Nic, Pekka, Ken, thank you for the explanation and references.

Nic, might it be possible to place the full text of Lewis 2013 online (or even to provide me with a copy) ?

I’m finding the lecture notes from Prof. Michael Jordan’s stats course useful. This is from lecture 7, as it seems relevant :

Oneuniverse,

What Michael Jordan writes does not help in resolving the question we have discussed. He refers to Jeffreys’ prior, but there’s an infinity of Jeffreys’ priors, because any nonlinear transformation of the variable results in another Jeffreys’ prior, and because we have been arguing on issues linked directly to different variables or sets of variables that are related in a non-linear way.

What makes this case so difficult is that there are many competing alternatives for the variables including ECS itself and the feedback coefficient as well as the complex set of parameters that Nic wants to use.

Pekka, no, I didn’t think it resolved your discussion with Nic, it just clarified the idea behind the ‘noninformative’ prior a bit for me. (I don’t find the term ‘objective bayesian’ a very helpful one in this debate by the way).

I’m not sure I can yet see how aiming to pick a prior that maximizes the divergence between prior & posterior actually ‘let’s the data speak for itself’. Intuitively, I can see an argument (flawed I think) that, since there is a certain distance to be travelled before a prior, through many updates, converges on a good “truthful” posterior distribution (making the particular choice of prior irrelevant), then picking an initial prior that maximises the distance travelled on the first update is a good first step, as it were. However, this isn’t the case if the maximisation occurs because the prior is way off-base, and the non-informative prior cannot provide any guarantees of that, no more than an expert prior can.

In fact, the “ideal” prior could be argued to be the one that is, oracularly, already as close as possible to the “truthful” (unknown final) posterior distribution. ie. that could be an argument that we should be aiming to minimize the divergence between prior and posterior. (I don’t think this argument works in general either).

Nic, I just saw the link to your website & papers, thank you – sorry I missed it. (Google Scholar has missed it too btw)

Oneuniverse,

Waking up in new morning I think that your excerpt may, indeed, help in understanding, why Nic disagrees with my thinking (or vice versa).

Let’s consider another problem. Someone has developed a new empirical method and the question is presented:

What are the cases where the new method is most useful?

It’s obvious that it’s not useful to measure inaccurately a quantity that’s already well known. As an example radiocarbon dating is of little value, if it’s already known that the age of a sample is between 1100 and 1170 years, because all ages in that interval result in essentially the same radiocarbon age, but it may be useful, if we know already that the age is between 1260 and 1330 years, because the radiocarbon age changes monotonically and rather uniformly by almost 200 years over this range of real age.