In today’s post, I’m going to re-examine (or more accurately, examine de novo) Ed Cook’s Mt Read (Tasmania) chronology, a chronology recently used in Gergis et al 2016, Esper et al 2016, as well as numerous multiproxy reconstructions over the past 20 years.

Gergis et al 2016 said that they used freshly-calculated “signal-free” RCS chronologies for tree ring sites except Mt Read (and Oroko). For these two sites, they chose older versions of the chronology, purporting to justify the use of old versions “for consistency with published results” – a criterion that they disregarded for other tree ring sites. The inconsistent practice immediately caught my attention. I therefore calculated an RCS chronology for Mt Read from measurement data archived with Esper et al 2016. Readers will probably not be astonished that the chronology disdained by Gergis et al had very elevated values in the early second millennium and late first millennium relative to the late 20th century.

I cannot help but observe that Gergis’ decision to use the older flatter chronology was almost certainly made only after peeking at results from the new Mt Read chronology, yet another example of data torture (Wagenmakers 2011, 2012) by Gergis et al. At this point, readers are probably de-sensitized to criticism of yet more data torture. In this case, it appears probable that the decision impacts the medieval period of their reconstruction where they only used two proxies, especially when combined with their arbitrary exclusion of Law Dome, which also had elevated early values.

Further curious puzzles emerged when I looked more closely at the older chronology favored by Gergis (and Esper). This chronology originated with Cook et al 2000 (Clim Dyn), which clearly stated that they had calculated an RCS chronology and even provided a succinct description of the technique (citing Briffa et al 1991, 1992) as authority. However, their reported chronology (both as illustrated in Cook et al 2000 and as archived at NOAA in 1998), though it has a very high correlation to my calculation, has negligible long-period variability. In this post, I present the case that the chronology presented by Cook as an RCS chronology was actually (and erroneously) calculated using a “traditional” standardization method that did not preserve low-frequency variance.

Although the Cook chronology has been used over and over, I seriously wonder whether any climate scientist has ever closely examined it in the past 20 years. Supporting this surmise are defects and errors in the Cook measurement dataset, which have remained unrepaired for over 20 years. Cleaning the measurement dataset to be usable was very laborious and one wonders why these defects have been allowed to persist for so long.

The “RCS” Chronology: Calculated vs Archived

To avoid burying the lede in further details, the diagram below shows the difference between my RCS chronology calculation from measurement data (left panel) and the “RCS” reconstruction used in Esper et al 2016 (as well as Gergis et al 2016 and many other studies), extended prior to 1000AD from the underlying chronology archived at NOAA in 1998. Versions of both are available back to 1500BC (and will be shown later), but only the past 1500 years is shown in the diagram below, which is intended to illustrate the differences.

Despite the difference in visual appearance, the two versions are very highly correlated (r=0.57 over nearly 3600 years). However, the RCS chronology shows a long-term decline, with 20th century values returning to values reached earlier in the millennium, and less than values of the first millennium. On the other hand, the Cook chronology (archived by Esper) has flattened values earlier in the millennium, such that late 20th century values appear somewhat anomalous.

Figure 1. Mt Read versions (converted to SD units): left – RCS chronology calculated from Esper et al 2016 archive, edited to remove defects as described in Postscript; right – Esper et al 2016 reconstruction for Mt Read, extended prior to 1000AD with NOAA arfilt reconstruction (see discussion below).

Cook’s RCS Description and Age Profile Curve

A tree ring chronology is, in its essence, an estimate of an annual growth index after allowing for juvenile growth (since ring widths decline with age.) “Traditional” standardization fit a growth curve to each tree individually. But if growth rates varied between century, such techniques transfer variability over time to variability between trees. This type of problem is well known in statistics as fixed and random effects – techniques long advocated at Climate Audit – but these techniques are unfamiliar to tree ring scientists, who describe such phenomena in tree rings in coarse and artisanal terms.

Cook was keenly aware of the lack of low frequency variability in “traditional” standardization ( a technique that he had used in his original publication of Tasmanian data in 1991 and 1992). The issue also concerned Briffa, who, in two influential publications (Briffa et al 1991, 1992), advocated the use of a single growth curve for each site (rather than individual growth curves for each tree) as a means for retaining centennial variability, but, like Cook, being unable to express the issue in formal statistics. The single-growth-curve technique was subsequently labeled as “RCS” standardization. The technique has problems if the dataset is inhomogeneous between sites (as many are). There are numerous CA posts structuring chrobnology development in terms of fixed and random effects.

Back to Mt Read: Cook et al 2000 clearly and unambiguously stated that they used a single age profile curve (“RCS”) to allow for juvenile growth in chronology development:

In an effort to preserve low-frequency climatic variance in excess of the individual segment lengths, we applied the regional curve standardization

(RCS) method of Briffa et al. (1992, 1996) to the data.

Cook described his RCS technique in straightforward terms and (of considerable assistance for subsequent analysis) included a figure showing the age profile curve.

The RCS method requires that the ring-width measurements be aligned by biological age to estimate a single mean growth curve that reflects the intrinsic trend in radial growth as a function of age… The mean series declines in a reasonably systematic and simple way as a function of age. The simplicity of this ring- width decline with increasing age indicates that the RCS method may work well here…Therefore, a simple theoretical growth curve has been fit to the mean series. This RCS curve is shown in Fig. 2A as the smooth curve superimposed on the mean series.

Cook’s Figure 2A can be closely replicated from a quality-controlled version of the Mt Read dataset, as shown below, where my replication matches Figure 2A down to fine details. This match accomplishes three things. It confirms that differences between my RCS chronology and the archived Cook version do not arise from different age profile curves. Second, it also confirms the validity of my quality control editing for the defects and errors in the archive. While the edits were motivated by other inconsistencies, the age profile curve calculated on the data prior to quality control for stupid defects does not match. Third, the data is better fitted by a Hugershoff curve, a common form in dendro analysis (y= A+ B*x^D *exp(-C*x) ), than by a negative exponential (another common form). I accordingly used the Hugershoff fit in my calculation of the RCS chronology.

Figure 2. Mt Read age profiles. Top – from Cook et al 2000; bottom – calculated from archived measurement data. Blue- fit from Hugershoff curve y= A+ B*x^D *exp(-C*x). A slight discrepancy in fit on the far tail won’t effect results since so few measurements at this age.

Reverse Engineering

If Cook’s chronology wasn’t an RCS chronology, then what was it? Its lack of centennial-scale variability strongly suggests that it employed some form of fitting to each individual core. Cook’s first articles on Tasmania (Cook et al 1991, 1992) had employed smoothing splines – a technique which made it impossible to recover centennial variability, as Cook understood very clearly.

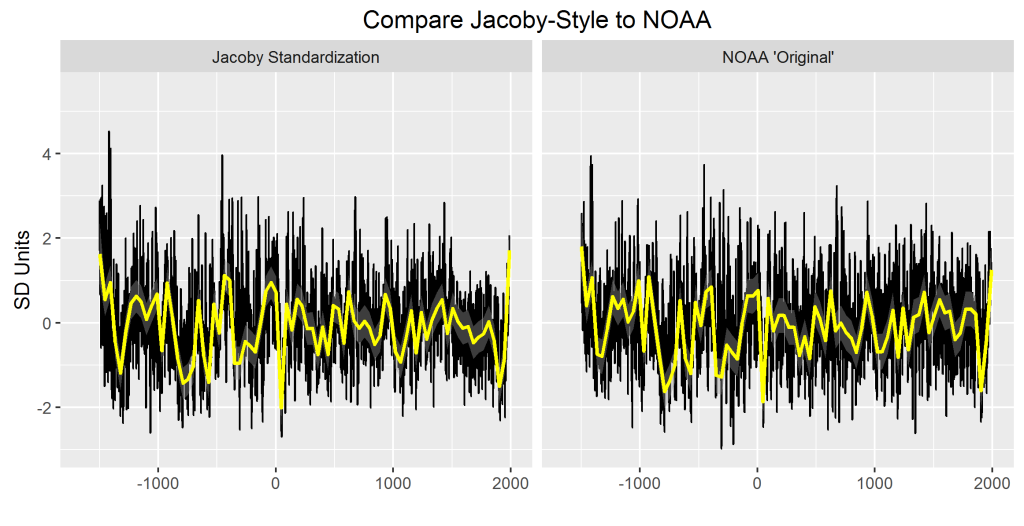

Another standardization technique employed at the time was (supposedly) “conservative” standardization with “stiff” negative exponential curves, a style of standardization used by Cook’s colleague, Gordon Jacoby – a technique which still standardized on individual cores (rather than one curve for the entire site). As a guess, I did a Jacoby-style standardization on the Mt Read data, comparing to the Cook et al 2000 Figure 3 chronology (archived as the NOAA “original” chronology) – see figure below.

Figure 3. Mt Read versions. Left- using “traditional” standardization (negative exponentials for each core); right – NOAA “original”. The NOAA “original” version was illustrated in Cook et al 2000 Figure 3

It doesn’t match exactly, but the general similarity of appearance, combined with the dissimilarity to a freshly calculated RS chronology, convinces me that something went awry in Cook’s calculations. While he clearly intended to calculate an RCS chronology, I am convinced that the Cook et al 2000 chronology was calculated using some variation of “traditional” standardization (in which curves were separately fit to individual trees/cores), a conclusion supported by the lack of centennial scale variability.

Compare RCS Chronology to Mean Ring Width Series

The RCS chronology calculated in this post is closely related to the series of mean ring widths illustrated in Cook et al Figure 1A.

Figure 4. Top – Cook et al 2000 Figure 1A showing mean ring width; bottom – mean ring width series calculated from quality-edited measurement data.

Cook observed that his method (claimed to be RCS) had “removed some long-term growth” variation, but claimed (incorrectly in my evaluation) that it had preserved “much of the century-scale information”:

Comparing the mean series in Figs. 1A and 3A, it is clear that the RCS method has removed some long-term growth variations in the standardized chronology, but much of the century-scale information has been preserved.

This observation can be dramatically re-stated in light of the preceding analysis. The RCS calculation (this post) preserves virtually all of the long-term growth variation of the mean ring width series.

The “Original” and “Arfilt” Versions

Both Cook et al 200 and the associated NOAA archive contain two closely related versions of his chronology: the “original” version (shown in Figure 3) and the “arfilt” version (shown in Figure 7). To foreclose potential issues arising from differences between these versions, I’ve shown both variations against an RCS chronology in the figure below, this time showing values back to 1500BC (as in the NOAA archive), more than doubling the coverage shown in the first figure above.

Figure 3. MT Read chronologies from -1500BC to 1991AD: left – RCS chronology (this post); middle: “original” chronology; right: “arfilt” chronology.

Relative to the RCS chronology, both the “original” and “arfilt” chronology appear very similar to one another and differ from the RCS chronology through their lack of low-frequency variability. In this view, one can discern a general correspondence in decadal features of the smoothed (yellow) version, while noticing that the Cook versions have flattened out high early values apparent in the RCS chronology.

Cook described the calculation of his “arfilt” chronology as follows:

Simple linear regression analysis was used to transform the Lake Johnston Huon pine tree-ring chronology into estimates of November-April seasonal temperatures. Prior to regression, both the tree-ring and climate series were prewhitened as order-p autoregressive (AR) processes… The tree-rings were modelled and prewhitened as an AR(3) process, with the AR order determined by the minimum AIC procedure (Akaike, 1974). The AR coefficients and explained variance of this model are given in Table 2. Note that the majority of the persistence is contained in the first coefficient (ARl = 0.397), which basically reflects an exponentially- damped response to environmental inputs such as climate. In total, this AR(3) model explained 21.8% of the tree-ring variance. In contrast, the November-April average temperatures were modelled as an AR(1) process that explained 10.5% of the variance (Table 2).

Reading this article today, it’s impossible to see any value added through the addition of the arfilt procedure to the “original” chronology, however this was actually calculated.

Even though the arfilt series is even further from an RCS chronology than the “original” chronology, this was the version used in both Gergis et al 2016 and Esper et al 2016. (This can be proven by digital comparison of the data.) Gergis et al 2016 contained 10 values for the arfilt series (1992-2001) not included in the NOAA archive and not supported by the the present measurement data archive, which ends in 1991. If the additional values arise from the inclusion of fresh tree ring data, it’s hard to understand why this data wouldn’t have some impact on the chronology up to 1991, but these values remain unchanged – even to the third decimal place.

Gergis et al 2016

As noted in the introduction, Gergis et al re-calculated tree ring chronologies from the underlying measurement data for all sites using an RCS variation (“signal-free detrending”) recently developed at the University of East Anglia:

All tree-ring chronologies were developed from raw measurements using the signal-free detrending method, which improves the resolution of medium-frequency variance…

The method has been presented as yet another recipe, with no attempt to place it in a broader statistical context.

When the method was applied to tree ring sites in the Gergis network, it frequently resulted in much enhanced 20th century relative to previously published results, as, for example, for Celery Top East (shown below), where the published version had little centennial variability, but, as re-calculated by Gergis et al, had a very pronounced increase in the 20th century.

Figure 4. Celery Top East chronologies. Left- the (unarchived) BLT (East) chronology as illustrated in Allen et al (2001); right – the Celery Top East chronology archived in connection with Gergis et al 2016. The series name includes identifiers BLT and RFR, both sites discussed in Allen et al 2001. The difference between versions appears to arise from methodology, not data.

However, for Mt Read (and Oroko), Gergis used prior chronology versions, ostensibly for consistency with published results” – though such inconsistency had not troubled them in cases where they obtained elevated 20th century values. They described their choice of older versions as follows:

The only exceptions to this signal-free tree-ring detrending method were the New Zealand silver pine tree-ring composite (Oroko Swamp and Ahaura), which contains logging disturbance after 1957 (D’Arrigo et al. 1998; Cook et al. 2002a, 2006), and the Mount Read Huon pine chronology from Tasmania, which is a complex assemblage of material derived from living trees and subfossil material. For consistency with published results, we use the final temperature reconstructions provided by the original authors that include disturbance-corrected data for the silver pine record and regional curve standardization for the complex age structure of the wood used to develop the Mount Read temperature reconstruction (Cook et al. 2006).

It is evident that their decision to use a prior version of Mt Read was only made after examining results of the fresh chronology, which must have been similar to the results calculated above i.e. with elevated values in the first millennium and early second millennium. Such an election, only made after getting (presumably) adverse results is yet another example of data torture (Wagenmakers, 2011, 2012), for which Gergis’ proffered rationale (“consistency with published results”) is both flimsy and inconsistent with handling other series.

The version used instead in Gergis et al 2016 was, as noted above, identical to Cook’s arfilt series between 1000 and 1991. Gergis did not use values in the archive from prior to AD1000, but her dataset included 10 additional values (1992-2001). Presumably these additional values were provided by Cook, but, if they were calculated from additional data, one would have expected that earlier values would at least be somewhat impact (and not identical to three decimal places).

Esper et al 2016: Reconstruction and Measurement Data

Esper et al 2016 stated that they used the “most recent” reconstruction from each site. However, as noted above, the Tasmania reconstruction (values from 1000-1991 AD) in their archive ends in 1991 and is a shortened form of Cook’s arfilt reconstruction (archived at NOAA in 1998). Values before 1000AD were not archived for Tasmania, though earlier values were archived for many other sites.

Esper et al asserted that Cook’s Tasmania reconstruction had been produced from an RCS chronology, but this assertion does not appear to have been verified by Esper and/or other lead authors.

The Mt Read measurement data in the Esper et al 2016 archive appears to be more or less identical to measurement data archived at NOAA in 2002 (ausl024). Both contain many booby traps for the unwary – all of which ought to have been corrected long ago. Because of these defects, the archive is not readable using the R package dplR. Cook’s booby traps have several levels. Level 1: Cook’s measurement data contains in two different units. Each core segment has a trailing (final) value of either -9999 or 999; cores with a trailing value of 999 are in units that are 10 times larger. I do not recall any other measurement data archive which isn’t consistent. I don’t understand why this inconsistency isn’t tidied in the archive. Level 2: in all or nearly all measurement data archives, each core segment has a separate ID through adding a single-character suffix to the core ID. The end of each segment is marked by a trailing value of -9999 or 999. However, Cook’s archive contains multiple segments with the same ID, blowing up data assimilation. In my read program, problematic cores could be picked up by seeing if the core, after assimilation, still included a trailing value. There were about 15 such cores – in each case, I manually added a suffix to one segment of the core, thereby differentiating the segments to accommodate a yield. This took a lot of time to diagnose and patch. Level three: this was the trickiest. As a cross check, I examined the distribution of average ring widths by core segment and found that average ring widths for six cores (KDH89, KDH16B, KDH85, KDH61A, KDH64, KDH83) were about 10 times the average ring widths. Using the “native” values of the Esper archive, the age profile curve didn’t match the Cook et al figure. I concluded that the values for these cores in the Cook archive needed to be divided by 10 to make sense. I did this semi-manually and saved a clean measurement dataset, which I then applied. After this cleaning, the age profile match improved considerably. Note that these cores were all in early periods (mostly first millenium). Thus the editing of the data reduced first millennium values.

Conclusion

An RCS chronology calculated according to the stated methodology of Cook et al 2000 yields an entirely different result than that reported by Cook. In my opinion, Cook, like Gergis et al 2012, did not use the procedure described at length in the article – in Cook’s case, he did not use the RCS procedure described in the article as a method to preserve low-frequency variability. In my opinion, Cook’s chronology was most likely produced using a variation of “traditional” standardization that did not preserve low frequency variability.

Cook’s chronology has been used over and over in multiproxy studies: Mann et al 1998, Jones et al 1998, Mann and Jones 2003, IPCC AR4, Mann et al 2008; most recently, Gergis et al 2016 and Esper et al 2016. Despite its repeated use, one can only conclude that no climate scientist ever looked closely at Cook’s actual chronology, a conclusion circumstantially supported by the persistence of gross errors in the Cook measurement data, even in the Esper et al 2016 version, issued more than 20 years after the original measurements.

The actual RCS chronology for Mt Read has elevated values in the late first millennium and early second millennium. Gergis et al evidently calculated such a chronology and, in another flagrant instance of ex post cherry picking, decided to use the ancient Cook chronology, which turns out to have been erroneously calculated (like Gergis et al 2012, one might add). Use of the Mt Read RCS chronology and Law Dome series would obviously lead to substantially different results in the medieval period where Gergis only used two proxies.

Postscript

A reader pointed out that Allen et al (2014) reported an update to Mt Read. I should have taken note of this, but take some consolation in the fact that Esper et al 2016 didn’t take note of this update, despite claiming to have used the most recent series from each site and having a common co-author (Cook).

Allen et al took cores from 18 trees. Their reported chronology was not an RCS chronology, but a chronology based on fitting individual series – a technique that Cook had purported to avoid in order to preserve centennial variability. Allen et al describe the use of negative exponential curves, conceding that this technique “potentially loses some centennial time scale variability” relative to Cook et al 2000:

The updated chronology was constructed from the new samples and the previously crossdated material (Buckley et al. 1997; Cook et al. 2000) and is based on individual detrended series with a mean segment length greater than 500 years (Cook et al. 2000). All samples, including those previously obtained, were standardised… using fitted negative exponential curves or linear trends of negative or zero slope, with the signal-free method applied to minimise trend distortion and end-effect biases in the final chronology (Melvin and Briffa, 2008). The use of negative exponential and linear detrending preserves low-frequency variations due to climate consistent with the ‘segment length curse’ (Cook et al., 1995b), but potentially loses some centennial time scale variability that had been preserved in the Mt Read chronology based on regional curve standardisation (Cook et al. 2000).

My surmise is that the technique used by Allen et al is probably similar to, if not identical, to the technique that Cook et al 2000 actually used. The figure below compares Allen et al 2014 Figure 2C to the RCS chronology of this post. The two series are highly correlated, but the Allen chronology has flattened out the long-term decline of the RCS chronology.

Figure ^. Left – Allen et al 2014 Figure 2C. Right – RCS chronology (this post) overlaid onto Allen Figure 2C.

Once again, it seems implausible that Allen et al 2014 did not first attempt an RCS chronology in order to directly update the Cook et al 2000 chronology, deciding to use an individually fitted chronology only after inspecting an RCS chronology. In addition, the start of their figure (400BC) neatly and perhaps opportunistically excludes high values in the immediately preceding interval.

References:

Allen et al 2014. Continuing upward trend in Mt Read Huon pine ring widths–Temperature or divergence? Quat Sci Rev. pdf

Cook et al 2000. Warm-season temperatures since 1600 BC reconstructed from Tasmanian tree rings and their relationship to large-scale sea surface temperature anomalies. Clim. Dyn. pdf

Gergis et al 2016. Australasian Temperature Reconstructions Spanning the Last Millennium. Journal of Climate.

Esper et al 2016. Ranking of tree-ring based temperature reconstructions of the past millennium. Quat Sci pdf.

220 Comments

“average ring widths for six cores (KDH89, KDH16B, KDH85, KDH61A, KDH64, KDH83) were about 10 times the average ring widths.”

???

Holy crap. Brilliant, meticulous work.

This is a real test of Cook’s character as a scientist.

Steve: I think that there is a greater issue regarding Gergis. There is strong evidence that their fresh version of the Tasmania chronology contained high medieval values, but they withheld these results “for consistency” with previous publications. Imagine the fate of a geologist who did this with drill results that failed to confirm prior results.

My point assumes a lot of back story.

I was giving Cook’s integrity the benefit of the doubt.

This episode simply adds to long list of sins for Gergis.

Found in the ‘sin soared’ file.

==========

“This is a real test of Cook’s character as a scientist.”

###

The test is whether Cook responds to this post. The geologist eventually has to answer, the dendros never need worry about that. But Steve McIntyre has done invaluable work here and that work will endure in the future while the work of the dendros joins Lysenkoism as a byword for the perversion of science.

snip – OT

Wonder if Michelson and Morley should have reported that the ‘aether’ existed for ‘consistency with previous results’.

Steve, is it possible that the ‘nine year addition’ comes from http://www.sciencedirect.com/science/article/pii/S0277379114003084

Allen, Cook et al 2014 QSR, from abstract,

“To date, no attempt has been made to assess the presence or otherwise of the “Divergence Problem” (DP) in existing multi-millennial Southern Hemisphere tree-ring chronologies. We have updated the iconic Mt Read Huon pine chronology from Tasmania, southeastern Australia, to now include the warmest decade on record, AD 2000–2010, and used the Kalman Filter (KF) to examine it for signs of divergence against four different temperature series available for the region. Ring-width growth for the past two decades is statistically unprecedented for the past 1048 years…..”

The full paper is available here. There’s some interesting stuff in the paper. Cook is the second author, and for some reason, they decided:

“All samples, including those previously obtained, were standardised using fitted negative exponential curves or linear trends of negative or zero slope, with the signal-free method applied to minimise trend distortion and end-effect biases in the final chronology. The use of negative exponential and linear detrending preserves low-frequency variations due to climate consistent with the ‘segment length curse’, but potentially loses some centennial time scale variability that had been preserved in the Mt Read chronology based on regional curve standardisation. Because our tests of divergence are based only on the outer 100 years of the chronology concurrent with meteorological

data, the way we have standardised the chronology here versus the way done by Cook et al. (2000) should not have any impact on the possible detection of divergence in the Mt Read chronology”

They also have a lot of discussion around the divergence problem, they are evaluating it as a “time dependence”. They have a test that plots divergence over time. And they have this in the conclusion:

“it is abundantly clear that the conclusion that inferred ring-width-based temperatures over Tasmania and southeastern Australia for the past decade and a half have been higher than for any other period in the past 1000 years is a conditional one. It is conditional on the assertion that the relationship between temperatures and ring width has remained sufficiently stable over time”

“It is conditional on the

assertionassumption that the relationship between temperatures and ring width has remained sufficiently stable over time”Fixed. 😉

Thanks for drawing this article to my attention. I’ve added the following section to the post:

Postscript

A reader pointed out that Allen et al (2014) reported an update to Mt Read. I should have taken note of this, but take some consolation in the fact that Esper et al 2016 didn’t take note of this update, despite claiming to have used the most recent series from each site and having a common co-author (Cook).

Allen et al took cores from 18 trees. Their reported chronology was not an RCS chronology, but a chronology based on fitting individual series – a technique that Cook had purported to avoid in order to preserve centennial variability. Allen et al describe the use of negative exponential curves, conceding that this technique “potentially loses some centennial time scale variability” relative to Cook et al 2000:

My surmise is that the technique used by Allen et al is probably similar to, if not identical, to the technique that Cook et al 2000 actually used. The figure below compares Allen et al 2014 Figure 2C to the RCS chronology of this post. The two series are highly correlated, but the Allen chronology has flattened out the long-term decline of the RCS chronology.

Figure ^. Left – Allen et al 2014 Figure 2C. Right – RCS chronology (this post) overlaid onto Allen Figure 2C.

Once again, it seems implausible that Allen et al 2014 did not first attempt an RCS chronology in order to directly update the Cook et al 2000 chronology, deciding to use an individually fitted chronology only after inspecting an RCS chronology. In addition, the start of their figure (400BC) neatly and perhaps opportunistically excludes high values in the immediately preceding interval.

Wow. A lot of work, Steve. Thanks!

As usual, you put the so-called “professional” climate scientists to shame. Good grief.

Cheers — Pete Tillman

Professional geologist, advanced-amateur paleoclimatologist

Excellent work again. This is what real replication look like. My thanks and appreciation.

Perhaps “consistency with published results” doesn’t refer to proxy consistency with the original authors’ publications. Rather, the G2016 reconstruction should resemble that of G2012. 😉

“When the [new] method was applied to tree ring sites in the Gergis network, it frequently resulted in much enhanced 20th century relative to previously published results, as, for example, for Celery Top East”

Any investigation into this new method on the horizon? Just asking.

Great work, Steve.

As ever we are reminded of climate scientists blatant and so far unchallenged by the scientific community, explanation of data torture;

D’Arrigo: “you have to pick cherries if you want to make cherry pie”

Esper: “the purpose of removing samples is to enhance a desired signal, the ability to pick and choose which samples to use is an advantage unique to dendroclimatology”

Says it all.

Thanks Steve for an important analysis of Cook’s Mt. Read TR data sets.

The implications are staggering. All the papers using the improperly reconstructed data (without RCS) must be re-evaluated or discarded.

Thanks Anon for the Allen-Cook(2014) paper on the “Divergence Problem” (DP)

in relation to temperature. It seems like so many of these papers go ahead with a reconstruction, (past projection,) knowing the underlying methodological assumptions are unsettled. But the DP is not just an uncorrected age-growth bias or mistaken spliced thermometer record, DP tears at the fundamental assumption of the proxy’s validity at modern temperature levels.

This is a huge issue because if modern decade warmth levels are “unprecedented” there is no ability to demonstrate this unless the DP can be unequivocally linked to an non-temperature growth limiting factor that in itself assumed unprecedented (anthropogenic). Obviously the dendros must be studying ozone hole and every type of pollution like mad.

Allen-Cook(2014) asserts a non-strict DP for Mt Read where its correlation decouples not only in the recent 15 years but also at past times. Also this: “Unlike the decoupling first observed by Jacoby and D’Arrigo (1995) in which ring widths underestimated temperature in recent decades, the Mt Read ring widths overestimate warm season temperature for the past two decades.” But they admit that could be due to bad data or processing. Good for them.

“All the papers using the improperly reconstructed data (without RCS) must be re-evaluated or discarded.”

Yeah, we can count on that. NOT.

It’s pay-walled to me. Did they even discuss the possibility of CO2 fertilization?

Charles, the paper autodownloads to a pdf for me in Windows. Allen-Cook(2014) refers to CO2 fertilization several times citing LaMarche(1984), Brienen(2012), and Gedalof(2010). None cite Loehle(2009)— Sorry Craig (I found it searching: loehle 2008 divergence).

Craig, do you think Mt Read’s unprecedented growth rate for past 15-20 years cited by Allen et al could be CO2 limiting factor elevation, in other words, CO2 fertilization?

Brienen(2012) felt that sampling bias for picking larger, healthier trees was more significant than CO2 bias. It suggested using random sample selection. (We could have told them that.)

Craig,

Regarding the warming in Scandinavia, has the elevation of the treeline increased?

Jeff Norman: There has been elevation of the treeline, and I think it is increasing.

From 1909 Norway: Scientists concludes that treeline is elevated to a level of 1930ies.

And not very good match between models and observation.

Rate of forest advance and tundra disappearance. From Annika Hofgard 2015:

• Yes, trees and shrubs are moving up and north, but ……..

• Where – local to regional perspective

• Why – causal background (non-climatic drivers can

dominate)

• Mismatch between predictions and observations

• Mismatch between results based on experiments vs.

natural (both rate and species-specific responses)

• Rate of advance – not km but meters/year

• Modelled tundra loss of 40-50% – a serious overestimate

• Multi-site analyses are needed to refine regional and

circumpolar forest advance scenarios

• If not – misleading interpretations regarding rates of

climate-driven encroachment will prevail

• Model-based rate scenarios may cause management

failures.

And – there were big forests in highlands of Norway 7000 years ago that have no trees now (only Mountain birch).

Once the scientist doing temperature reconstructions can except (incorrectly) the post fact selection of proxies, the remaining data torture that is frequently applied falls within the boundaries of post fact selection.

Thanks, SteveM for the good review of the basis for the various methods used to extract tree ring response from ring width that varies with tree age. Without a consensus on a given method the scientists doing reconstructions are evidently allowed to use whatever method serves their purposes.

Thanks, Steve, for a very impressive piece of detective work and analysis. Yet more evidence that something is very wrong in the dendrochronology field.

Correction: I meant dendroclimatology, of course.

Mr. McIntyre: “Presumably these additional values were provided by Cook, but, if they were calculated from additional data, one would have expected that earlier values would at least be somewhat impact(ed) (and not identical to three decimal places).” I am intrigued by this. Are you suggesting that a splice of instrumental data was used here? Or, more basically, do you have a possible explanation for this strange situation?

Steve: not suggesting instrumental data and doubt it. I really don’t know.

Steve,

After the corrections you describe, my next focus would be on the relation of tree data to temperatures, the calibration stage.

I have actually seen Mt Read at close range. Few others have. It sits about where the Tasmanian west coast sub-climate meets the different east coast sub-climate.

The climate difference cannot easily be described by the recorded temperatures from near to Mt Read, for there are few stations. Here is a rough summary of those within say 100 km of Mt Read. Although there have been mines nearby since the late 1800s, there are few public BOM records earlier than the 1960s. That is, we do not have many (any) useful temperature records for the period before CO2 is alleged to have changed global climate and possibly tree growth.

Here is a snapshot of those BOM records as at 2007, with start and finish dates and position in lats and longs. Most to all of these records have slabs of missing data and are not useful.

So, temperature calibration is not good over the 0-100 km range. Over the whole of Tasmania, an island about 300 x 250 km in size, the longer term records are at Hobart and Launceston, some 200 km and 135 km from the target at Mt Read. Launceston in particular has several local weather stations used at various time intervals. It is hard to extract a composite picture and maybe it is not worth the effort because only the brave would claim the climate at Launceston to be similar to that at Mt Read. So, to calibrate with temperatures, Cook and others have looked at proxies of sea surface temperatures west of Mt Read, at Antarctic temperatures (which have hardly changed) and even to Asian monsoon regions.

Conclusion – what is the value of a publication as a temperature proxy when there are such difficulties relating tree properties to known temperatures?

Geoff:

the value os that one gets a times series with a particular pattern of variance, another tool in the box to employ when curve fitting.

The fact that there is no way to calibrate it to temperature with local records is a strength, not an impediment.

This means you can take your squiggles, and calibrate it to whatever you wish.

The huon pines at Mt. Read are an interesting bunch, they are all male and have the same DNA, it is thought they are clones of a tree about 10,000 yrs old that has been reproducing vegetatively since then.

http://www.apstas.com/Mt__Read_Huon_pine.html

a very interesting link that I commend to readers

“Because the rings vary with the climate at the time, and particularly with temperature, they give a good indication of how the climate has varied over that time. Of interest in this record is the clear indication of climate warming since the 1960’s.”?

really?

Another thought…since all the pines are genetically identical, one wonders just what the variance in phenotypic expression across all of the clones looks like?

same tree, almost identical location….same climate (same microclimate)…..

just wondering.

“Because the rings vary with the climate at the time, and particularly with temperature”

Isn’t this just the same ol’ assertion?

Andrew

that was a throw away line in a piece that mainly dealt with the genetics of the population of pines. a quick read.

worth the time.

Steve, the article says Mt Read Huon Pines chronologies are over 4000 years old. Have you found any from 2000BC? Would they not give better indications of the proper corrections to preserve low frequency?

A google books view of “Australian Rainforest Woods, Morris Lake, 2015 (CSIRO Publishing) mentions on page 115 a 7000 year long chronology for Huon wood on Mt. Read (by Mike Peterson – senior forester).

“The huon pines at Mt. Read are an interesting bunch, they are all male and have the same DNA, it is thought they are clones of a tree about 10,000 yrs old that has been reproducing vegetatively since then.”

Reminds me of the ents losing the ent wives.

Tolkien’s intuition. I’d trust that ahead of some notables in the field.

Finally, a rival for the most important tree in the world (Yamal).

“For consistency with published results” as a reason is pretty mind-boggling in a supposedly scientific paper. How could any errors ever be corrected if this was the approach taken to them?

And, while Gergis has clearly identified herself as a non-scientist with this phrase and many others, where are Cook and Allen and Esper and all their co-authors? Do none of them actually care about getting something like the correct answer out of these datasets? Do they accept these findings or not?

“The use of negative exponential and linear detrending preserves low-frequency variations due to climate consistent with the ‘segment length curse’ (Cook et al., 1995b), but potentially loses some centennial time scale variability that had been preserved in the Mt Read chronology based on regional curve standardisation (Cook et al. 2000).”

I have been puzzling over this claim for a while. I have the gnawing suspicion, borne of reading too much climate science, that the evidence for this claim is that the use of RCS in a direct comparison to individual series standardization leaves bigger wiggles. But are the wiggles bigger because RCS “preserves variability,” or because the magnitude of growth standardization error is greater if you apply a regional curve?

From the link above in a Ron Graf post about tree selection and the biases that result from those practices we have:

Click to access brienen_gbc12.pdf

My blockquote should have included only the firat paragraph.

Yes, Ken, its clear that they are aware of the problems (and the failures) of tree ring climatology and this fact starkly underscores their lack of participation here. They read the posts and the comments here, I have no doubt.

I doubt that post-1950 tree-ring data can be compared with earlier tree ring data. I read these posts with interest but often limited comprehension, so perhaps I am off base here. But bear with me on this. Recent studies have shown a worldwide greening of vegetation which is attributed to rising levels of carbon dioxide. https://wattsupwiththat.com/2016/04/27/nasa-carbon-dioxide-fertilization-greening-earth-study-finds/

So I think it is safe to say that rising levels of carbon dioxide are changing the way plants grow. And wouldn’t this at least imply that rising levels of CO2 would have an effect on the growth of trees and their annual rings? And wouldn’t this effect, whatever it is, be progressively greater as CO2 increases? So it seems to me that post-1950 tree rings would be “contaminated” with a CO2 effect that is increasing through time. Thus I suggest that post-1950 (or whenever you want mark the beginning of increase in atmospheric CO2)tree rings cannot be compared to tree rings laid down in the earlier CO2-stable atmosphere until the CO2 effect can be characterized and quantified.

TedL:

Fertilization is a bit OT, but see this:

“The annual growth of trees, as represented by a variety of ring-width, densitometric, or chemical parameters, represents a combined record of different environmental forcings, one of which is climate. Along with climate, relatively large-scale positive growth influences such as hypothesized `fertilization’ due to increased levels of atmospheric carbon dioxide or various nitrogenous compounds, or possibly deleterious effects of `acid rain’ or increased ultra-violet radiation, might all be expected to exert some influence on recent tree growth rates. Inferring the details of past climate variability from tree-ring data remains a

largely empirical exercise, but one that goes hand-in-hand with the development of techniques that seek to identify and isolate the confounding influence of local and larger-scale non-climatic factors.”

This is from the same 1998 paper I quoted above:

Click to access 43XA8LK6PCMVMH9H_353_65.pdf

so workers in this field are well aware of these confounding factors. That is not the same as saying that they have developed good methods for dealing with them!

TedL:

Your getting caught up on the reasons why the divergence might be real, but you are ignoring why it might not even matter.

The first step in determining whether a thing is a proxy for something else should be to detail why and under what conditions a person thinks that a thing being measured is a good proxy for something else. You then need to sample and see if it correlates. If it doesn’t, figure out why and refine your sampling criteria.

You don’t sample a bunch of stuff, then use the ones that correlate well. Must less sample it, see that part of it correlates and another part doesn’t, then just throw out the part that doesn’t.

Are the trees a proxy for temperature or just a coincidence for a number of years? If it is a coincidence, then there is no reason to even look at a 1950 or whatever year divergence as it shouldn’t even be used as a proxy.

MattK

Au contraire!

The protocol you outline above (and denigrate, BTW) is exactly what one does.

/sar

Steve, does the TR data contain information on the cambian (life) age of the samples? In other words how do we know that the 3000-yr chronology is not a truncated version of a 4000-yr or 7000-yr chronology? And doesn’t one need to know that in order to apply the RCS correction?

Steve,

I note that you defend your repeated use of the term “data torture” on the grounds that it is a well defined term in something called “statistical commentary”, whatever that might be. Indeed you define it as a “Technical term”:

” The term “data torture” is a term that is used in statistical commentary – I cited Wagenmakers. It has a technical meaning that precisely fits Gergis et al 2016.”

Technical terms, like entropy, chemical equilibrium, or anthropogenic global warming, have quite strict definitions that are understood by the community of technical specialists who use the terms. Indeed it would have to have a precise definition in order to “precisely fit” a particular phenomenon. Would you care to provide, preferably by reference to an appropriate dictionary of technical terms, a clear definition of the term? The fact that you only seem to be able to cite one individual, by the name of Wagenmakers, as using this “technical term”, suggests it does not have any such “community acceptance”, whether among “statistical commentators” or anywhere else.

Google is your friend.

Ronald Coase, economist and previously the Clifton R. Musser Professor Emeritus of Economics at the University of Chicago Law School, is the source of the most popular use of the phrase, brought into the cultural lexicon in the early 20th century: “If you torture the data enough, eventually it will confess.”

Which, of course, is exactly what Gergis did.

Coase was also awarded the

The Sveriges Riksbank Prize in Economic Sciences in Memory of Alfred Nobel”,

the prize commonly referred to as “the Nobel Prize in Economics” in 1991.

What would he know about applied statistical analysis, after all?

David E,

So if a word or phrase is in “the cultural lexicon” are you saying that makes it a technical term? Are, for instance, “motherf****r” or “asshole” a technical term. They are both indubitably part of the cultural lexicon.

The problem is that Steve is using the “technical term” argmument as a justification for what, at face value, is an insult, especially when he uses it repeatedly.

its an accurate description of the statistical analysis to which Gergis subjected data.

In all of your bleating and moaning, I fail to discern any critique of Steve’s analysis of Gergis’ indefensible practices.

Here we go: another yet apologist for the inexcusable shows up to quibble over the definition of a term, which in this case is clear, precise and altogether appropriate.

Bill h,

“As I’ll show below, it is hard to contemplate a better example of data torture, as described by Wagenmakers, than Gergis et al 2016.” – Steve McIntyre

Can’t be clearer. Go read the links to Wagenmaker that Steve McIntyre provided. These will serve much better than any dictionary to define and illustrate the abuses of statistical techniques known as “data torture”.

You should not expect any authoritative definitions of “data torture” to be issued by the tree ring climate community.

Craig, and what evidence is there in my post that justifies your attack on me.

I thought scientists were supposed to draw conclusion s based on evidence.

Easy there, Bill. What evidence was there in Craig’s response that you were being “attacked”? This is a site for informed and spirited discussion. Better develop a little thicker skin if you’re going to be that easily rattled by a couple of pointed questions.

G.Holcombe,

Sorry, but saying that I “think it is ok to keep doing statistical tests with different methods until you get a result you like? And ok to include or exclude data depending on how it affects the answer?” does look like an attack on my integrity.

Also in what way is Craig’s remark “informed”, to use your characterisation of this website. What evidence informs Mr. Loehle’s conclusion about what I think is “OK”?

bill h, it serves very well as a term for discussions. The phrase encompasses and represents violations of approved statistical techniques, as described in Steve’s links to Wagenmaker (did you avail yourself of those links?). Used in such a manner, it is a valid term. Yes, its use by a Nobel laureate in the same context authenticates its use by Wagenmaker.

The tree ring climate community would benefit enormously by a study of Wagenmaker and Steve McIntyre’s examples of “data torture” in the Gergis et al 2016.

Erm, David E. I suggest you have look at some of those “examples”, before citing them as evidence.

Also, where have I claimed ignorance of the term “data torture”? Again, a bit of a non-seq

bill, the monologues intersecting must both be in good faith to constitute a conversation. Yours is lacking something essential.

===========

bill h:

I cited the number of hits for that phrase to make the plain that it is widely used in peer reviewed articles published by those who practice applied statistical analysis,

that its been the subject of quite a bit of research,

and to show that if you are indeed ignorant of the term, or the application of it to a myriad of untenable practices, that you aren’t really qualified to maintain that your opinion on this issue is an educated one, thats all.

I suggest you look at a few screens worth of relevant research, just to familiarize yourself, before you go and waste others’ time again.

“I queried “data torture”, google scholar returned around 220,000 results.

heres the link:

https://scholar.google.com/scholar?hl=en&q=data+torture&btnG=&as_sdt=1%2C39&as_sdtp=”

If you use “data torture”

96 results.

not 220K

I replicated your search. good faith.

I got 220K, as you did

BUT

as I noted, they were mostly about ACTUAL torture

using a quoted “data torture” yeilds 96

Like I said.. do the math on 96/220000

First page includes

[BOOK] Torture and its consequences: Current treatment approaches

M Basoglu – 1992 – books.google.com

… Victoria 3166, Australia © Cambridge University Press 1992 First published 1992 Printed in Great

Britain by Bell and Bain Ltd., Glasgow A catalogue record for this book is available from the British

Library Library of Congress cataloguing in publication data Torture and its …

Cited by 192 Related articles All 3 versions Cite Save More

[CITATION] Torture: does it make us safer? is it ever OK?: a human rights perspective

K Roth, M Worden, AD Bernstein – 2005 – Human Rights Watch

Cited by 5 Related articles Cite Save More

Create alert

Page 2

Is torture reliably assessed and a valid indicator of poor mental health?

M Hollifield, TD Warner… – The Journal of nervous …, 2011 – journals.lww.com

… Descriptive data about the 3 groups are provided below. The TEQ was transformed to

dichotomous data (torture vs. nontorture) by combining the nontorture war trauma and no

war trauma groups into 1 nontorture group where required for analyses. …

Cited by 4 Related articles All 8 versions Cite Save

By page 5 it looks like this

https://scholar.google.com/scholar?start=40&q=%22data+torture%22&hl=en&as_sdt=1,5

Here you go

https://scholar.google.com/scholar?hl=en&q=%22data+torture%22&btnG=&as_sdt=1%2C5

96 results

not 220,000

Somebody learned to search from gergis or maybe it was just your typo.

Bill,

“… Sorry, but saying that I “think it is ok to keep doing statistical tests with different methods until you get a result you like? And ok to include or exclude data depending on how it affects the answer?” does look like an attack on my integrity. …”

Actually, that series words is terminated with a question mark? That is, you were asked a question. No one attacked you, though you could perhaps understand that your prior comment read in a rather “post-normally” permissive mode, and that some might view such permissiveness as a less than rigorous approach to gaining understanding. Wagenmaker is far from the only individual to use the term. I heard it in use by Peter A. Griffin, who taught some of my stat classes in ’70s. In fact, “data torture” is rather obvious simile when you look at statistics used on selectively biased data to support prior assumptions. Dr. Griffin once described a study we were required to read and criticize has having been conducted by a “data Torquemada.”

So, because a nobel laureate uses a phrase it automatically becomes a “technical term”

Bill,

Also see Mills, 1993 published in the NE Journal of Medicine:

http://www.nejm.org/doi/pdf/10.1056/NEJM199310143291613

no…

its your abject and proud ignorance of the term, its provenance, its history and its meaning thats somewhat irritating Bill.

Why not go to google scholar and search for “data torture”

get back to us?

here: i did the heavy lifting for you:

I queried “data torture”, google scholar returned around 220,000 results.

heres the link:

https://scholar.google.com/scholar?hl=en&q=data+torture&btnG=&as_sdt=1%2C39&as_sdtp=

that you seem to be unaware of this term, and its applicability to subject at hand says a lot about you bill, and not much of it is good.

“I queried “data torture”, google scholar returned around 220,000 results.

heres the link:”

I did the same thing.

However, I actually looked through pages of cites.

there were only a few that referred to data torture, the vast majority were about TORTURE

as in hurting people.

so… dig a bit deeper

When I googled “‘data torture'” with bounding quotation marks, nearly all the retrievals were relevant to data torture.

Steven Mosher

I did: https://climateaudit.org/2016/08/16/re-examining-cooks-mt-read-tasmania-chronology/#comment-770649

Did you not see that one?

From the Devil’s Dictionary by Ambrose Bierce:

Pen- an instrument of torture wielded by an ass.

Thank you Steve.

Mosh. youre simply not arguing in good faith.

a multitude of the citations returned by the query “data torture” submitted that way, with the term in quotation marks are on point.

Youre really grasping at straw men now.

You know what the term means…

Anyone who has done any work in the field does as well.

there are thousands of citations for that term.

Why you would choose this battle field to stand and fight is incomprehensible.

More to the point:

Do you endorse the practices that Gergis employs?

“Thank you Steve.

Mosh. youre simply not arguing in good faith.”

I used your link.

https://scholar.google.com/scholar?hl=en&q=%22data+torture%22&btnG=&as_sdt=1%2C5

96 results

not 220k

What’s your point, Mosh? That 96 retrievals is not enough? That more is needed?

I don’t see why you saw fit to complain in the first place. Piddling criticism. One the links here is to a 2007 CA post. In your comments you blew hot against the “tree ring circus” (your words).

How you have changed.

The google search for “data torture” reveals a number of papers. One of thse papers on “data torturing” has 173 citations. So the assertion that there are only 96 results for the concept appears to be refuted – 173 citations to one paper on the concept

I reviewed literature on data torture long ago and have notes on many interesting articles. I found some by Google and some by tracing citations.

I fail to see why it is relevant whether there are 96 google references vs 220,000 for the more general terms “data” “torture”, both of which are common words. I didn’t say that there were 220,000 references to “data torture”, so I’m puzzled at the point.

As mentioned before, I find the concept of “data torture” to be useful term to note poor statistical practice, especially in the context of motivated researchers, without implying the additional baggage of misconduct and/or fraud. I distinguish such usage from use of the term as mere abuse. I completely fail to understand why anyone would object to such usage.

In my recent comments on Gergis, I’ve generally tagged the usage with a citation to Wagenmakers (2011,2012), even using the additional qualification “sensu Wagenmakers” on occasion. As previously pointed, I submit that Gergis’ screening in Gergis 2016 is a classic example of data torture as described by Wagenmakers. I don’t see how anyone can reasonably contest this point, nor has anyone done so thus far.

Steve:

This is my fault.

I ran the query without quotation marks, and cited the number of hits google scholar returned…

Of course some are not relevant, however, many are.

Some here chose to pick a couple of non relevant hits, and focus on them, while ignoring the large number of relevant cites, the provenance of the quote and its applicability to the situation at hand.

Cherry picking?

IDK.

Its sad and interesting just what some choose to focus on.

BTW, I reread you post detailing your own efforts to update some tree ring chronologies.

The hardships you endured while attempting to access remote sites.

The logistics involved in bringing heavy equipment to the site, as well as the excellent documentation of the trees previously sampled, a practice which no doubt made it so much easier for you to identify those trees.

I should be clear.

I appreciate the work Mosh has done for all of us.

However it is obvious that he hasn’t absorbed the most fundamental concepts that undergird the entire enterprise of applied statistical analysis.

The results of this failure can be seen across disciplines, for example, the financial crisis caused by bundling collateralized debt obligations (it was argued that the individual mortgages’ behavior was independent of each other, a laughable construct once made explicit).

Mosh’s expertise with R doesnt mitigate his lack of concern for practicing sound statistical analysis.

You just have to love it.

Google ignores delimiters. In a Google search, Google applies their own version of what their programmers prefer you search on.

Bing, however, still allows delimiters.

Using Bing and the following search:

+”data torture”

returns 3,820 results.

First in line is Climateaudit’s “Joelle Gergis, Data Torturer”; but is followed by many diverse sources.

Mosher, as is becoming tediously common, seems to be more interested in raising pointless objections, than in addressing anything of substance. Yes, data torture (without quotations) will bring up many hits involving actual torture. However, Mosh’s response (change the search to “data torture”) is silly as it will exclude many relevant results. “Data torturing” yields an additional 265 hits on google scholar (probably some overlaps). “Torturing data” is 180. “Torture the data” is 634. I think it hard for anyone (including Mosh and bill h) to sustain an argument that it is not a term of art in the statistical literature.

If bill h is not just trolling and is genuinely interested in an answer as to what is data torturing, there is a good description in one of the first google hits from the famed NEJM: “IF you torture your data long enough, they will tell you whatever you want to hear” has become a popular observation in our office. In plain English, this means that study data, if manipulated in enough different ways, can be made to prove what-ever the investigator wants to prove. Unfortunately, this is generally true. Because every investigator wants to present results in the most exciting way, we all look for the most dramatic, positive findings in our data. When this process goes beyond reasonable interpretation of the facts, it becomes data torturing.”

“I fail to see why it is relevant whether there are 96 google references vs 220,000 for the more general terms “data” “torture”, both of which are common words.”

“I cited the number of hits for that phrase to make the plain that it is widely used in peer reviewed articles published by those who practice applied statistical analysis,

that its been the subject of quite a bit of research,”

Seems like 96 or 220K is relevant 220K is rather Yamal like

More funny is that I use the very link provided to do the search and get condemn for having bad faith.

too funny

Really Mosh:

this is beneath you.

I provivded a link, one that returned hundreds of thousands sites.

Now, be a big boy and refine my query if you dont wish to wade through the larger body of results.

As if my point is any less valid if there are only tens of thousands of citations?

Geez mosh, its just sad.

You present a selected subset of three responses and attempt to argue that the term isnt commonly used in peer reviewed literature?

That it doesnt exist in the current lexicon?

That my contentions were in some way incorrect?

mpainter, your non-sequitur responses are becoming rather trying. I am reminded of Rebecca West’s rather cynical definition of “conversation” as “an intersection of monologues”. To re-quote Steve:

” The term “data torture” is a term that is used in statistical commentary”. “It is a technical term”.

So, it’s a technical term drawn from the “statistical commentary community”. Your comment about the “tree ring community” has no relevance

Sorry, the second sentence of my quote is incorrect. It should read “It has a technical meaning”, NOT “It is a technical term”.

The post in large part is addressed to the tree ring climate community. My comment was indeed revelant.

Where is ATTP, I wonder.

Also, bill h, please correct me if I’m wrong, but you presumably agree with the rest of my comment above, as you did not demur. I copy it below for your convenience.

Posted Aug 19, 2016 at 11:34 AM | Permalink

bill h, it serves very well as a term for discussions. The phrase encompasses and represents violations of approved statistical techniques, as described in Steve’s links to Wagenmaker (did you avail yourself of those links?). Used in such a manner, it is a valid term. Yes, its use by a Nobel laureate in the same context authenticates its use by Wagenmaker.

bill h: Your subtle ploy was too clever for us. The old “false AND immaterial remark” ploy, to get us arguing whether it’s more untrue than pointless or more pointless than untrue. And asking the host to do your homework, nice touch. Sorry that last part failed for you. Oh, first part failed too. Look at the bright side, you’re good at failing. Just thought I’d help you recognize an attack

LOL, here is another technical term for bill h, “cavil”. Would I be cavalier to call bill h a caviler.

How about ‘draws conclusions that are extremely non-robust to arbitrary methodological choices’.

bill h, you need to correct your mis-attribution in this comment. Steve posted the words “technical meaning”, and your comment above falsely attributes to him the expression “technical term”.

Thus your fuss about use and definition is baseless.

Somewhat loath to continue to kick this can down the road, but the man did ask for a reference in a dictionary of technical terms or some such.

I note that the Data Archives section of the Encyclopaedia of Biostatistics (which this what this is basically all about) says:

“Finally, the requirement to provide original data is one protection against the production of findings based on a particular statistical approach to the data – findings produced by what is commonly called “data torture” and the actual fraudulent invention of results.”

Davey Smith, G. 2005. Data Archives. Encyclopedia of Biostatistics. 2.

Can we move on?

TY HAS.

Actually David I should have added a h/t in your direction. All I did was add quotes around the phrase in your google scholar search and it found itself.

billh, I would just suggest that the term is well defined in the statistical literature. The real question is whether or not you disagree with SteveM’s characterization of Gergis et al. If so, please tell us why. If not, then you are just quibbling.

Tales of Horror from Ivory Towers, vintage 1980, mentions “data torture.”

Steve: an article that acknowledged with thanks comments from my former golf adversary, George Stigler.

you golfed with Stigler?

now Im impressed.

Steve: Stigler used to take summer vacation on Lake Rosseau in Muskoka, Ontario. He played at Muskoka Lakes Golf Club, as did my family. When I was 13 or 14, I was playing with some friends and were playing behind Stigler and his friends, who were taking forever. On the 11th hole, a par five, we got tired of waiting for them and hit our second shots before they finished putting out. I hit a really good shot and it run onto the green about 10 feet from the hole inside where they putting out. I was really excited because I had a chance for an eagle, which I’d never had. However, Stigler picked up my ball and threw it into the woods. I knew who he was because I’d caddied for my father in a club tournament against Stigler, who’d beaten him. By chance, I played against Stigler in the club tournament later in that summer. Trounced him. He was pretty annoyed because I was then pretty young.

Mike Spence, another Nobel laureate, was an older contemporary of mine at high school in Toronto.

I am less impressed with Stigler now than before I knew this

😉

Im glad you got the chance to beat him.

A slow, poor golfer shouldn’t ruin some kid’s excellent round.

It is a certain kind of guy who takes it upon himself to teach someone else’s 13 year old boy a lesson.

Am reminded of Stigler’s Law of Eponymy which holds that no scientific discovery is named after its original discover. (Stephen Stigler–George’s son) At first blush it occurred to me that the Mann Hockey Stick runs counter to this, then I remembered the “scientific” qualifier.

You say:

On the contrary, if I were to characterize the literature using the phrase, I would say that the term is used somewhat differently by different people. I have never said that “date torture” is a “well defined term”. That is a fabrication on your part.

Precisely because there is no uniformity of usage, I cited Wagenmakers (2011,2012), which used the term to describe a variety of disapproved statistical techniques, arguing that Gergis et al 2016 amply met the criteria for data torture sensu Wagenmakers – an observation that you have not directly contested.

Your assertion that I “only seem to be able to cite one individual, by the name of Wagenmakers, as using this “technical term”” is simply untrue. I could have cited numerous other uses of the term “data torture”, but was writing a blog post about Gergis et al 2016, not an exegesis of the term “data torture”. Other uses can readily be found through Google and by tracing various references.

While I do not have time to fully canvass the uses of the term “data torture”, I think that the term is particularly useful in characterizing poor statistical practices (of which there are manifold types), which one does not wish to characterize as fraud or misconduct (though characterization as data torture does not exclude). Wagenmakers 2011, 2012 gave a variety of examples.

Clarity.

Charity.

Two foxes shot. That was fun to read.

Gergis’s response on her paper gergis 2012 and gergis 2016 was that the only error was the incorrect reference to de trended data instead of trended data. Her claim was that correcting for the “typo’ / “missidentification” of the method was the only error and that amateur Bloggers were making a mountain out of a mole hill.

https://theconversation.com/how-a-single-word-sparked-a-four-year-saga-of-climate-fact-checking-and-blog-backlash-62174

If I am not mistaken, the substantive issue is the use of certain proxies which provide the “desired result and the exclusion of proxies which contradict the desired result.

Her argument (which includes complaints of blogger gender bias) is more of a case of trying to hide the mountain with a mole hill.

I just read that myself. Hard to see her use of the word “typo” as anything but disingenuous, same with her characterization of why it took so long to get through peer review. The whole page is a bit creepy, a blog called “The Conversation” with a painfully false narrative followed by only cheerleading comments because the other side of the “conversation” was removed by the moderators.

BillH:

“The problem is that Steve is using the “technical term” argmument (sic) as a justification for what, at face value, is an insult, especially when he uses it repeatedly.”

No, that’s not the “problem.” The problem is that you are ignoring the substance of this post and trying to create an argument about the use of a word description of an inappropriate “statistical” device; the appropriateness and prior use of which has been amply pointed out to you above.

If you have something to say about the substance of this post, I would invite you to say it.

Phil

well put phil.

The search for victimhood continues …

Heh, we’re injured but she’s supposedly insulted. The search for justice stumbles blindly on.

===========

Stockbrokers are notorious data miners, which is quite understandable since they are selling, to earn commissions.

The literate Andrew Smithers:

“Data mining is the key technique for nearly all stockbroker economics. There is no claim that cannot be supported by statistics, provided that these are carefully selected. For this purpose, data are usually restricted to a limited period, rather than using the full series available. Statistics, it has been observed, will always confess if tortured sufficiently.”

http://www.ft.com/cms/s/1/9a3965a0-7c8c-11da-936a-0000779e2340.html#axzz4HnzEOz2d

Gergis is also selling, and has her preferred answer well in mind.

Steve Mc:

Steve, in re-reading your post it occurred to me that much of the chronology are from ancient dead wood cores. Would the mix of ancient wood normally provide a fear of inhomogeneity? I understand that RCS does not do well with inhomogeneity. There would still be no excuse for Gergis citing “consistency with published results,” as an excuse, especially on a selective basis. But Allen uses the excuse of dealing with the “segment length curse.” Could RCS have handled this effectively? Did Allen’s excuse have merit? What constitutes inhomogeneity?

I also noticed now that the tree ring width chart goes back 4000 years; why not the chronology? Was there possibly a hiding of a very large 450BC-250BC warming period?

If one were manning the fort and defending the consensus building on the modern warming period being unprecedented for millennia, the divergence problem must be attributed to a recent anthropogenic effect that could only occur during the recent warming period. In that manner the past temperature history remains unaffected and the skeptical proposition that it makes that past history uncertain can be waved off.

The CO2 fertilization issue is a bad effect for the above consensus since it corresponds with the recent warming and thus CO2 cannot be acknowledge as an effect or probably even a potential one.

There is, of course the, possibility that divergence with a downward series ending trend and CO2 with an upward ending trend are merely part of the same stochastic wandering up and down of a series without a long term trend. Of course, if you are looking for post facto selections that up and down wandering is a gift that keeps on giving.

I believe M. Mann is the only author doing temperature reconstructions who has cut and pasted proxies in order to avoid showing the effects of both divergence and CO2 fertilization. The divergence changes were in Mann (2008) for the MXD tree ring series and as I recall the CO2 changes were for either a Mann paper in 2006 or 2007 for some North American trees that showed a very large change that started before the likely advent of the effects of increasing GHGs on temperatures.

Ken, Craig’s paper, Loehle(2008), covers the divergence problem well. One point in the paper is that there’s no reason to assume that growth response to temperature would be linear. We know the curve must flatten out and then decline with temperature. And then are we talking average temperature? How that energy is delivered is important. Does it include scorchers, heat waves or warmer nights? What range of variation?

The total number of parameters affecting ring growth unique to species and location are staggering. Precipitation frequency and timing, humidity, evaporation rates, nutrients, wind, disease, pests, competition, frost damage, fire, sunlight and temperature range variation, all have no relation to mean growing season temperature, never mind year-round. Each one of the parameters has a such a complex interaction it seems hopeless. But if one could prove that temperature was the continuous limiting factor over hundreds or thousands of years, and you could prove a known curve to CO2 as well as temp, one might have a shot if protocols were established and followed to prevent sampling bias or processing bias (data torture).

If this can be done for two decent independent proxies near each other the odds of getting significant information improve immensely. I try to be optimistic.

Ron, the multitude of variables that can potentially affect tree ring response makes using all the proxy data (as opposed to post fact selection) an important issue when reconstructing temperatures. If all the variable effects except that from temperature were random then over time and space one would consider that with sufficient samples the other effects would cancel out. That would mean that one has to use all proxy data series whether they end with an upward, downward or no trend. If these other non temperature variable effects are not random then we have a situation where tree rings are not valid temperature indicators. The only way around this problem would be to have independent evidence for these non random and non temperature effects going back in time.

Attempting to sort all this out should be an exciting field crying for interested scientists to investigate. That interest will probably not come from the dendroclimatology community since the (incorrect) use of post facto proxy selection gets around having to be concerned with these issues other than on a superficial level. There is also the political issue that such investigation calls into question the conclusions drawn from past temperature reconstructions. It well may be that tree rings will never be a proper proxy for temperature reconstructions (which might well be the conclusion of a basic investigation) and as a result other better understood proxies would be pursued.

kenfritsch,

“The CO2 fertilization issue is a bad effect for the above consensus since it corresponds with the recent warming and thus CO2 cannot be acknowledge as an effect or probably even a potential one.”

It is also bad for the consensus if they continue with the “CO2 is a pollutant” meme.

Why don’t you create your own chronology and publish it?

So I take it that you have no substantive issue with Steve’s analysis?

You haven’t presented one.

Evan, your comment reminded me of this gem https://climateaudit.org/2007/10/12/a-little-secret/

Suppose that a peer reviewer raised these challenges prior to publication of the article. Is it your view it would be sufficient for the author to challenge the peer reviewer to publish his own chronology? Obviously not. So why do you take exception to my raising issues that the peer reviewers missed?

For some reason I think they’d love for you to get tied up with some heavy work, Steve.

Great idea, while we are at it:

– a pharmaceutical company can tell the government to publish their own trial results

– a bank can tell an auditor to run their own bank

– an engineer can tell the people testing a safety critical system to build their own

– a company can tell their shareholders to start their own company.

– an employee submitting expenses can tell their company financial department to get lost

While we are at it, since when does the person auditing have any need to be nice, friendly and non snarky. They sure are not in my world. Frankly the light snark here is in my opinion very mild, well deserved and nothing compared to what goes through the minds of many of us reading here.

Steve: Most brokerage firms employ industry analysts who analyse individual companies within an industry. As you say, a CEO, confronted with a negative report from an analyst, can’t just say: start your own company and see if you do better. The analyst’s job is to provide an independent assessment to the public/clients. If climate scientists didn’t make press releases to the public or expect the public to do things on the basis of their assertions, then there would be no interest in critical analysis of their assertions. However, the situation seems to be otherwise.

You too?

Steve,

I am not taking exception to your critical reviews of the work of others. I am suggesting that maybe it’s time to go beyond that.

In fact, Steve McIntyre has completed a study involving cores from bristle cone pines and d18O analysis. The results were posted here a few years ago.