The strong El Nino has obviously caused great excitement in the warmist community. It should also cause any honest skeptic/lukewarmer to re-examine whether observations remain inconsistent with models. In today’s post, I’ll show two comparisons: 1) CMIP5 models (TAS) vs HadCRUT4; 2) CMIP5 models (TLT) vs RSS (UAH is only negligibly different). For this post, I’ve used the same scripts as I used in earlier comparisons.

Surface Temperatures (TAS)

First, here is an updated comparison of the 5-95% envelope of CMIP5 runs (TAS – surface) to most recent HadCRUT4 (black ), which, as of right now, goes only to October last year. Satellite data is available through December. Weekly Nino 3-4 data shows a slight downtick in the last half of December (see David Whitehouse here; original weekly data here). .

HadCRUT4 to October had not quite reached the transient of the CMIP5 model means, but it looks like it will. In the graphic below, I used RSS satellite data to estimate HadCRUT4 (+ signs) for December – simply adding the RSS deltas to the closing HadCRUT4 value. This slightly pierces the model mean transient. If the present El Nino is like prior El Nino’s, then we can expect a fairly sharp decline in GLB temperatures in 2016. We will see whether these levels will once again fall outside the 5-95 percentile range of CMIP5 models. My guess is that they will. To my eye, the El Nino peaks are gradually losing ground to the CMIP5 model mean: 1998 went above the envelope; the prior El Nino easily exceeded the model mean.

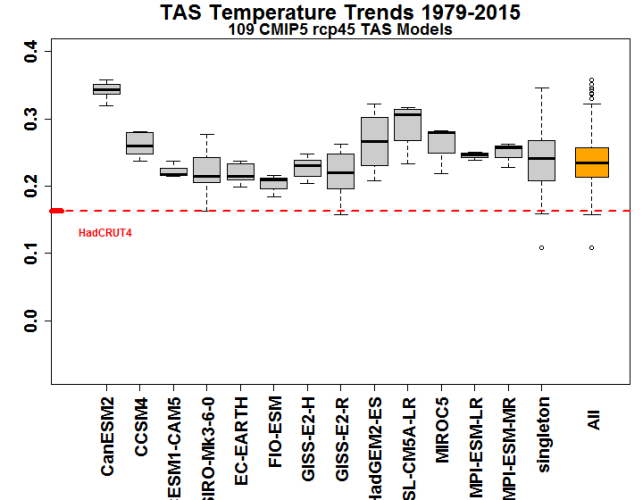

While a comparison of observations to a 5%-95% percentile envelope of CMIP5 models is important for orientation, the next graphic, in my opinion, is considerably more illuminating as it disaggregates the various models and focuses on the trend since 1979. In it, for models with more than one run, I’ve done boxplots of the trends, grouping singletons as one model class. On the right (orange), I’ve done a boxplot for all CMIP5 runs. All of the individual models have trends well above observations, with the CanESM2 model from Andrew Weaver’s group being a wildly overheating performer. There are now over 440 months of data and these discrepancies will not vanish with a few months of El Nino.

Lower Troposphere (TLT)

Next, here are corresponding graphics for the lower troposphere, using the RSS series preferred by warmists (data is available through December). The reference period for these comparisons is 1979-1990 (versus 1961-1990). While the El Nino peaks occur at the same time in both series, there is a dramatic difference in the trend of peaks, which decline not only relative to the model mean transient, but in absolute terms. Even December El Nino values only return the series to barely within the 5-95% envelope. About a decade ago, there was considerable controversy over the discrepancy between satellite and surface trends. Skeptics obviously have taken issue with the surface record, but it should be kept in mind that the possibility of systemic bias in the satellite data also needs to be kept in mind.

Be that as it may, there is great consistency to the corresponding comparison for surface trends in the boxplot comparison of trends for individual models against observations shown below. For all models, the model trend is more or less double the observed trend, somewhat more pronounced than for surface trends, but structurally very similar.

A Comment on Anomaly versus Temperature

The El Nino had a very dramatic impact on Toronto temperatures, which set records in December. The anomaly temperature would be a gaudy red on anomaly maps. How did we manage to survive? Experientially, it was a slightly cooler version of (say) San Francisco weather. It felt very pleasant for December, “good weather” rather than bad. The forecasters now say:

Following the extended bout of warm weather, it appears Toronto will return to more seasonable temperatures in the coming days.

“More seasonable weather” sounds good, but it means that we’re now going to see some brutal cold. Ryan Maue warns of the return of the polar vortex.

I mention our local weather because the negative impact of warmer winter weather is far from obvious to me. It did have a negative impact on high CO2 footprint local residents who, like Eric Steig, travel outside the city to ski, as the season is delayed, but a positive impact on reduced heating bills.

244 Comments

I get pretty similar results even when using climate model data adjusted to better match the observed HadCRUT4 coverage (via Cowtan et al., 2015). See here:

-Chip

Since hadcrut4 is the coolest record one wonders why a good analyst would not show all the records..

And brace yourself for a new product from hadcrut……

Steven,

I am making no claims about being a good analyst, rather just using the data provided by Cowtan et al. from their 2015 GRL paper. In it, they only adjusted the climate models to match the HadCRUT4 (the last I looked), so my non-analytical options were limited.

-Chip

“I get pretty similar results even when using climate model data adjusted to better match the observed HadCRUT4 coverage (via Cowtan et al., 2015). See here:”

Sorry Chip. I thought this sentence meant you did some work

Not much! Just added in a guess at 2015.

I’m no climate scientist, but perhaps you guys can help me understand something.

In all the model projections I’ve seen, the further into the future you go the wider the error bars get. This seems highly counterintuitive IF CO2 is the control knob for global temperature.

It seems to me that over time, as CO2 levels pass the various tipping points, they should begin to take a larger role, and the role of other natural variability must necessarily diminish. Why don’t all the climate models narrow to a greater precision over time, as the “pure physics” of CO2 that has been well understood for more than a century asserts a larger and larger role in determining the temperature of the globe? The greatest variability should be today and going backward where all the other natural variability has predominated, but now that we’re in the CO2-era, we should have much greater predictive ability.

Steve Mosher wrote: “Since hadcrut4 is the coolest record one wonders why a good analyst would not show all the records. And brace yourself for a new product from hadcrut……”

Steve and Steve: GMST is mostly SST. The SST record has been compiled from a changing mix of sources and the overall warming rate is dependent on the correction for bias between multiple data sources. I have no idea of whether ERSST4 is better than ERSST3. I am sure that global temperature trends are uncertain simply because there are many ways to approach correcting bias between multiple data sources. And those doing the correcting have personal biases. I don’t need to re-read your book on Climategate to be skeptical of this process.

What is the best temperature record that has been complied from a single measurement technology (and therefore isn’t subject to the ERSST3/ERSST4 dilemma)? Satellite measurements of the lower troposphere as analyzed by two competing groups? If so, I’d like to see model projections for the lower troposphere compared with UAH and RSS.

We have SST’s measured by satellites for the last few decades. Those records have problems with aerosols and surface wind, but at least they are homogeneous. Everyone seems to ignore them.

Finally, we have the land temperature record to compare with projections of warming over land from climate models. I have far more faith in comparing land observations to land projection, than global observations to global projections.

Steve: you say: “If so, I’d like to see model projections for the lower troposphere compared with UAH and RSS.”. Why don’t you read the above post, approximately half of which is devoted to that comparison.

The most recent ERSST adjustments are relevant to the measurement of the present El Nino relative to 1998. On the satellite scale, it seems to be not as strong, but under the new ERSST, it is slightly stronger.

Steve: I began by replying most to Steve Mosher – who was hinting that the Hadley global surface temperature record was likely to be revised upward for the same reasons are others – reprocessing the SST record.

My frustration with the uncertainty in composite temperature records from changing sources is no excuse for my not retaining the most important information in your post. My apologies. I only wish there were other homogeneous sources besides TLT that could be used. ARGO probably isn’t long enough. Satellite SSTs? Land?

Chip,

What about this paper which seems to be suggesting that if you do the comparison only with the models in which the internal variability (represented by the ENSO Oscillations) is in phase with the observations, the comparison is quite good.

ATTP,

Also consider Gavin’s 2014 paper which casts and ENSO signal on the model ensemble mean…so, the multimodel mean ought to tick up in 2015, which would keep observed 2015 in the lower half of the model distribution.

-Chip

Chip,

It depends what you mean by multi-model mean. In the 2014 paper, they do include an ENSO corrected form (which is the dashed blue line in Figure 1), but it’s not the same as the multi-model mean in the figure you’ve included. When they do include the ENSO correction, the comparison is improved, so I’m not sure what you’re really suggesting. We don’t expect the observations and multi-model mean to match at all times – that’s why there’s a range.

Steve: is this the sort of match between models and observations that you endorse?

I think ATTP’s contention is the trend chart Steve shows is not a strict apples-to-apples comparisons for reasons having to do with 1) model TAS is not the same as the observations contained in HadCRUT4 (addressed in Cowtan et al., GRL, 2015) and 2) the real-world forcings are not the same as the model-world forcings (e.g., Schmidt et al, NatGeoSci, 2014). The third issue, regarding ENSO effects isn’t really on-point (the multimodel range ought to include this).

In a post over at Climate Etc a couple of weeks ago, we addressed the first issue (see Figure 3 from http://judithcurry.com/2015/12/17/climate-models-versus-climate-reality/). I am not sure the second issue is on as firm footing (i.e., what the real forcings are).

The models still appear to run hot.

-Chip

No, what’s endorsement got to do with this?

A horse is a horse of coarse endorsement

And models they shun the observations.

=========================

you are aware that an ensemble mean, or a multimodel mean is indefensible and meaningless at best?

The models aren’t independent; the very notion of an ensemble mean lies outside of accepted statistical analysis, and is at best uninformative, at worst, misleading.

I must say I’m unclear what a 15 year trend in Nino3.4 being the same in a model and in observations is telling us. It is a very volatile and I’m not at all sure what selecting models on this criteria tells us, and in particular whether it tells us that these models are in phase with the observed Nino.

I note that the models selected presumably change for each separate 15 year selection so moving from one year to the next we potentially are comparing the obs to quite a different set of models.

Just to add an overnight rumination on this paper.

The intuitive thing to do in order to select on models that are more in phase with nino3.4 would be to create an index of the correlation coefficients between model nino3.4 and obs nino3.4 over the trend periods being evaluated. This would provide a much better test of the hypothesis being advanced.

The thing that I find depressing about papers like this (and the review process) is that if the authors went to that much work and thought about the subject that much, why not try the obviously better test and report on it? They had calculated the trend, no doubt their software also provided the corr. coef.

Without doing a replication to see what the result would be, one has to suspect a case of the dog that didn’t bark in the night.

Your cited paper, Risby (2014) (co-authors include Lewandowsky and Oreskes), had cherry picked four “best” and four “worst” models which they did not identify out of 18 which they did identify picked out of the CMIP5 52-56 coupled pairs, making critical analysis largely pointless as concluded by Bob Tisdale who reviewed the paper for WUWT. The paper did confirm that the ENSO cycles are randomly out of cycle in the models. This, in effect, washes ENSO’s influence out of the CMIP5 mean, unlike the volcanic forcing that is precisely administered once it is actually observed (appearing only post facto in trend plots). ATTP or anyone else who can find a paper that extracts the ENSOs out of each model and then re-distributes them to properly observed history would be doing a service. In that case one could critically compare models to land station indexes, sea surface indexes, vs. satellites’ TLT for clues in skill. In particular, it would be interesting to compare responses to the next major volcanic eruption to see if adjustments in ERSST4 and others have changed the response of past known non-GHG forcing spikes compared with future.

Steve: this timing issue is irrelevant to the comparison in the boxplot of trends over 37 years.

Also irrelevant to the comparison, but cool, is this animation of 24 hours in the life of the planet as seen by Himawari-8 in August.

Good to see the Canadian model pushing for the extra warmth it knows, in its heart of hearts, the country will benefit from.

Members of the Optimists of Canada.

I “threw out my back” in December. The lack of snow and ice in Toronto has been a gift from the heavens.

‘To my eye, the El Nino peaks are gradually losing ground to the CMIP5 model mean: 1998 went above the envelope; the prior El Nino easily exceeded the model mean’

Yes. All the ‘excitement’ that temperatures have for two months reached the model mean is ridiculous – exactly the kind of cherry-picking that supposedly should be avoided.

John Christy showed temps with a 5-year running mean in the last hearing.

It would be interesting to see that for the surface (eyeballing I’d say they are right at the bottom of the confidence interval).

The other problem with model hindcasts is that with so many low-certainty forcings you can get a very good fit just by playing around with the numbers. The most famous is aerosols but apart from that you have at least two positive forcings (tropospheric ozone, black carbon on snow) and two negative ones (deforestation, stratospheric ozone). The contribution of F gases also isn’t very clear.

http://escholarship.org/uc/item/902057fn

Red is ECS = 5C, blue is 2C. So very different results can be ‘consistent with’ observations.

When are models “wrong”?

Under the scientific method, when do we recognize “the models are wrong” – and the “emperor has no clothes”? Nobel Laureate Richard Feynman

vis Global Warming and the Feynman test Tom Trinko Sept. 11, 2014

The IPCC declares:

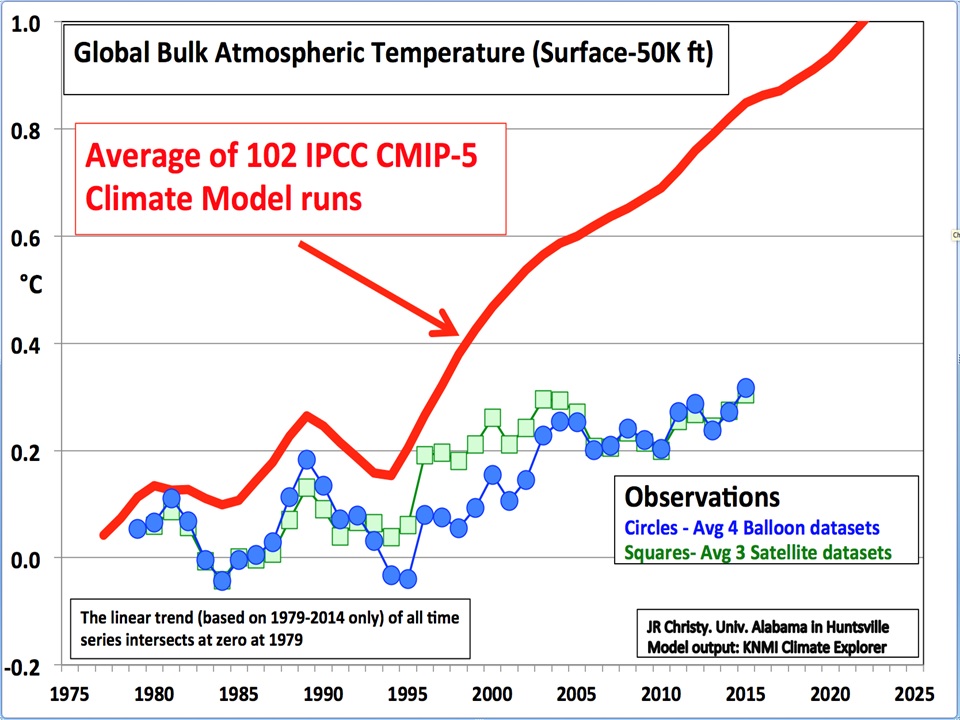

Steve’s Tropospheric Temperature graph shows ALL the models (5% to 95% ) exceed the temperature records over the satellite era which now extends longer than that 30 year period.

John Christy’s graph above shows the climate model mean prediction over the 35 year satellite error is now 400% of the actual satellite tropical tropospheric temperatures.

If that does not constitute “wrong” and “failed” what does?

“The strong El Nino has obviously caused great excitement in the warmist community.”

Before the Team schedules the victory parade, they should consider the old adage that even a broken clock tells the correct time twice a day.

Reblogged this on I Didn't Ask To Be a Blog.

Steve The key take away from your post is ” All of the individual models have trends well above observations, with the CanESM2 model from Andrew Weaver’s group being a wildly overheating performer. There are now over 440 months of data and these discrepancies will not vanish with a few months of El Nino.”

The latest Australian BOM analysis states “A number of El Niño-Southern Oscillation (ENSO) indicators suggest that the 2015-16 El Niño has peaked in recent weeks. Tropical Pacific Ocean temperatures suggest this event is one of the top three strongest El Niño events of the past 50 years. Climate models suggest the 2015-16 El Niño will decline during the coming months, with a return to ENSO neutral likely during the second quarter of 2016. ”

The peak in the natural millennial temperature cycle probably occurred in about 2003 following the peak in the solar driver at about 1991.The current situation is summarized in the following exchange with Dyson.

” E-mail 4/7/15

Dr Norman Page

Houston

Professor Dyson

Saw your Vancouver Sun interview.I agree that CO2 is beneficial. This will be even more so in future because it is more likely than not that the earth has already entered a long term cooling trend following the recent temperature peak in the quasi-millennial solar driven periodicity .

The climate models on which the entire Catastrophic Global Warming delusion rests are built without regard to the natural 60 and more importantly 1000 year periodicities so obvious in the temperature record. The modelers approach is simply a scientific disaster and lacks even average commonsense .It is exactly like taking the temperature trend from say Feb – July and projecting it ahead linearly for 20 years or so. They back tune their models for less than 100 years when the relevant time scale is millennial. This is scientific malfeasance on a grand scale. The temperature projections of the IPCC – UK Met office models and all the impact studies which derive from them have no solid foundation in empirical science being derived from inherently useless and specifically structurally flawed models. They provide no basis for the discussion of future climate trends and represent an enormous waste of time and money. As a foundation for Governmental climate and energy policy their forecasts are already seen to be grossly in error and are therefore worse than useless. A new forecasting paradigm needs to be adopted. For forecasts of the timing and extent of the coming cooling based on the natural solar activity cycles – most importantly the millennial cycle – and using the neutron count and 10Be record as the most useful proxy for solar activity check my blog-post at http://climatesense-norpag.blogspot.com/2014/07/climate-forecasting-methods-and-cooling.html

The most important factor in climate forecasting is where earth is in regard to the quasi- millennial natural solar activity cycle which has a period in the 960 – 1020 year range. For evidence of this cycle see Figs 5-9. From Fig 9 it is obvious that the earth is just approaching ,just at or just past a peak in the millennial cycle. I suggest that more likely than not the general trends from 1000- 2000 seen in Fig 9 will likely generally repeat from 2000-3000 with the depths of the next LIA at about 2650. The best proxy for solar activity is the neutron monitor count and 10 Be data. My view ,based on the Oulu neutron count – Fig 14 is that the solar activity millennial maximum peaked in Cycle 22 in about 1991. There is a varying lag between the change in the in solar activity and the change in the different temperature metrics. There is a 12 year delay between the activity peak and the probable millennial cyclic temperature peak seen in the RSS data in 2003. http://www.woodfortrees.org/plot/rss/from:1980.1/plot/rss/from:1980.1/to:2003.6/trend/plot/rss/from:2003.6/trend

There has been a cooling temperature trend since then (Usually interpreted as a “pause”) There is likely to be a steepening of the cooling trend in 2017- 2018 corresponding to the very important Ap index break below all recent base values in 2005-6. Fig 13.

The Polar excursions of the last few winters in North America are harbingers of even more extreme winters to come more frequently in the near future.

I would be very happy to discuss this with you by E-mail or phone .It is important that you use your position and visibility to influence United States government policy and also change the perceptions of the MSM and U.S public in this matter. If my forecast cooling actually occurs the policy of CO2 emission reduction will add to the increasing stress on global food production caused by a cooling and generally more arid climate.

Best Regards

Norman Page

E-Mail 4/9/15

Dear Norman Page,

Thank you for your message and for the blog. That all makes sense.

I wish I knew how to get important people to listen to you. But there is

not much that I can do. I have zero credibility as an expert on climate.

I am just a theoretical physicist, 91 years old and obviously out of touch

with the real world. I do what I can, writing reviews and giving talks,

but important people are not listening to me. They will listen when the

glaciers start growing in Kentucky, but I will not be around then. With

all good wishes, yours ever, Freeman Dyson.

Email 4/9/15

Professor Dyson Would you have any objection to my posting our email exchange on my blog?

> Best Regards Norman Page

E-Mail 4/9/15

Yes, you are welcome to post this exchange any way you like. Thank you

for asking. Yours, Freeman Dyson.”

Note the exchange was written last April- the cooling trend referred to is now temporarily obscured by the major El Nino but will return by 2017 -18 in the probably following La Nina.

A wonderful interaction Norman, thank you.

Unfortunately, pointing this out gets skeptics nowhere. I once posted a link to the IPCC itself, showing how little confidence it had in their models.

I had absolutely no comeback to the inevitable “Why would scientists use models if they didn’t work, you ignorant, oil-industry shrill liar idiot scumbag troll”. I may have amalgamated some responses for that last part.

I don’t follow the news all that closely, but how much coverage was there of the upcoming El Nino? Obviously, global warming or no global warming, you would think people would know not to make skiing plans in December in an El Nino year.

Steve: Ontario skiing is about a 90-mile drive from Toronto and does not require elaborate advance planning. most Ontario skiers ski throughout the winter, traveling north on the weekends, and a certain amount of unavailability is allowed for. Our family did this when I was a young teenager. The ski areas supplement with man-made snow.

The problem in Canada is that the English media is centered in Toronto. If there is no snow on the ground in Toronto, then there is no snow anywhere on the ground anywhere in Canada – even if you only need to get a few klicks north of the UHI that is the concrete jungle of Toronto to see snow.

I’m from Muskoka (which is just north of Ontario’s main ski area) which is in the so-called “snow belt”, (named because cold winds moving across the warmer waters of Georgian Bay (part of Lake Huron) bring large amounts of snow, often in huge “streamers” of snow squalls.)

This is only the second of my 51 Christmases that have been green (1982 was the other). We did note that going through an ancestor’s diary, there were green Christmases in the 1800’s. But that was before the Big Warming Scare…

I recall 1982 as well. One of my brothers got married in December and it was really mild. As I’ve mentioned on other occasions, one of my sons caught a frog near Collingwood on New Year’s Day.

This el nino has me puzzled. On the east coast of Australia it has been unlike any that I have previously experienced. Lots of rain and only a few hot days. Right now we have had 200 mm in the last 48 hr and still going. If this is el nino I’m not looking forward to the following la nina.

ColinD

Have a look at the 2015 Pacific temperatures at

http://weather.gc.ca/saisons/animation_e.html?id=year&bc=sea

Then check 1957-58

at

http://sabolscience.blogspot.com.au/2015/08/a-deeper-look-at-top-10-el-ninos-since.html

That wasn’t a drought year in my part of western Qld

Ian, the 57-58 looks much weaker than the current one, if I am reading the legend correctly.

ColinD (and Another Ian), I’ve been thinking the same thing. Whatever the reasonable expectations from El/La might be, the cycle has been presented (to Australians at any rate) as broadly El Niño => heat and drought; La Niña => cool and floods.

I was obligingly expecting a hot and dry summer here in south-east Queensland, but it hasn’t worked out that way. The ABC reported that “despite SA recording the hottest October and December on record” the year for Australia was (disappointingly) only the 6th hottest on record. SA and Victoria may have had their moments, but in Qld, things have been pretty damp.

So, as you say, ColinD, if this is an El Niño, watch out for La Niña. Although, of course, she might misbehave as well. Just when you get a nice nice solid hook to hang your predictions on, it falls off the wall.

BOM actually predicted average rainfall early on due to Indian Ocean influences overcoming El Nino effects. When it did not rain down South El Nino was quickly trotted out as the reason.

Here in the Great Pacific Northwest, we’re always told that El Niño brings warmer drier weather west of the Cascades, and La Niña the opposite. But I saw somewhere, can’t remember where, that historic data showed it was pretty much a toss up, either phenomenon could cause either warmer/drier, or colder/wetter, or some combination thereof during any particular occurrence.

The El Nino had a very dramatic impact on Toronto temperatures

I do not follow this. El Ninos effect the positions of the troughs and crests of the jet stream?

Steve: I guess so. In El Nino years, it tends to be warm here. One of my sons caught a frog on New Years Day in the 1983 El Nino (though we didn’t know it was an El Nino then).

Steve,

Thanks for this.

Dear Steve,

Just a question, in the box plots you indicate RCP45 but in the temperature vs time plots you indicate “5-95% envelope of CMIP5 runs”. Okay but also for rcp45 exclusively? Or maybe for all scenarios up to rcp85? Since climate talks usually take rcp85 as business as usual, maybe an explicit comparison for that would be interesting?

I tried that here for 30 year trends (ie every point on the graph represents the trend of the previous 30 years):

https://dl.dropboxusercontent.com/u/22026080/rcpvsground.xlsx

Just an aside RCP8.5 isn’t business as usual. It is an upper bound on the IPCC AR5 scenarios, in general reflecting the 75% upper limit of the scenario drivers. In IPCC-speak I guess that makes it unlikely.

75% upper limit of the scenario drivers or top of the scenario drivers?

but also business as usual.

It is the 50% marker as Business worse than usual which makes up the other 50% is not modeled [I thought].

Consequently any average of models is already missing 50% of predictions on the high side as they were never made.

Not business as usual. Riahi et al “RCP 8.5—A scenario of comparatively high greenhouse gas emissions” Climate Change (2011) describes it as “the upper bound of the RCPs” and “a relatively conservative business as usual case”. It is the latter reference that saw BAU get incorrectly applied to it.

Riahi et al further states “With few exceptions …. RCP8.5 builds … upon the socio-economic and demographic background, resource assumptions and technological base of the A2r scenario.” This A2r scenario is described in Riahi et al “Scenarios of long-term socio-economic and environmental development under climate stabilization” (2007) as aiming “to be positioned above .. the 75th … percentile … of the comparable scenario literature, but without all their salient scenario parameters necessarily always falling within this indicative range.”

Basically treat RCP8.5 middle projections as the upper bound of the likely scenarios.

Andy makes an important point. RCP45 assumes mitigation measures beyond anything visible today. RCP85 is the scenario behind almost every extreme climate forecast, used because of the fallacious labeling of it as the “business as usual” scenario.

Why did you choose RCP45 for this comparison?

Steve: I used RCP for the comparison when I did it previously a couple of years ago. For the period in question, there isn’t much difference between the two and it wouldnt impact the appearance of the graph. Plus I’ve been very sick and, since I had one consistent set of model runs, had zero interest in verifying another set.

Steve,

Thanks for the explanation! Best wishes for a speedy recovery.

Interesting to read this recent article by Michaels and Knappenberger looking at the infilled, homogenized surface temp data compared to the latest Watts et al study results using clean sites across the USA. The normal (?) sites show over 50% more warming than the new clean sites and the 1910 to 1945 warming doesn’t seem to support concerns about co2 enhanced warming either. And the cooling from 1945 to 1976 ( cool PDO ?) is problematic as well.

Of course we’ve been told that the US temp database is the best in the world, so how much more fiddled warming should we expect from the rest of the globe?

http://townhall.com/columnists/patrickjmichaels/2015/12/29/homogenized-us-warming-trend-may-be-grossly-exaggerated-n2097972/page/full

US is probably one of the worst. measurably so.

OT

So Steven, if the USA has some of the worse databases, can you please tell us what DBs are the best and why?

The better data comes from country’s, pick any you like, that did not go through changes like TOB. since there are only a few that did change TOB picking any country should be good.

Steven,

In fact, the real fault of the US temperature datasets is the spurious warming due to faulty siteing, as shown by the Watts et al study. It is a fair assumption that such siteing induced spurious warming is global.

Regarding MSU/AMSU temperature datasets VS surface datasets, these corresponded well enough until a few years ago, when surface datasets started to show a warming not seen in the MSU/AMSU data. What’s the explanation?

“It should also cause any honest skeptic/lukewarmer to re-examine whether observations remain inconsistent with models. ”

Got that right. I’m quite tired of people having entrenched views on a trend. If observations change, they change. In the meantime, global warming (old school term) is about long term accumulation of energy in the atmosphere. The atmosphere of the Earth has a huge energy capacity so looking at a single datapoint to see if it drops outside of a single datapoint CI of a variety of models is hardly a scientific comparison. It is the only thing the warmists have to hold on to but they don’t look very objective when they do so. I’ve made the same comment at tAV many times, the trend is what matters. The trend is the accumulation of energy and as Steve has pointed out here again, a small spike in data won’t shift a 40-ish year trend much.

If that small spike grows to something else, us who are considered skeptics must absolutely adjust our opinion.

If you aren’t willing to be wrong once in a while, you aren’t trying. If you can’t admit to being wrong, you aren’t a scientist. Fortunately for us evil skeptics, we weren’t wrong at all. CO2 appears to be much less effective at warming the planet than was estimated by models. More importantly, damage from CO2 warming is to-date literally undetectable.

Steve McIntyre,

Your wrote: “The El Nino had a very dramatic impact on Toronto temperatures, which set records in December.”

We maybe, but historically there is very little correlation between ENSO and northern winter land temperatures. Most correlation of ENSO with global average temperatures is due to the strong influence of ENSO on temperatures within the Hadley circulation (or roughly from 30S to 30N).

The correlation of November to March land temperatures with the state of the ENSO near 45N is very low. However, large anomalies in winter land temperatures, usually lasting a month or so each, and both positive and negative in sign, are very common near 45N, independent of the state of the ENSO. RomanM showed with a nice graphic how variable high latitude winter land temperatures are on his blog a couple of years back.

(Global precipitation patterns are a different story; ENSO has considerable influence outside the tropics.)

Steve: do you mean that the tree in my backyard isn’t a uniquely accurate thermometer for world temperature. What terrible news.

Chicago isn’t all that far from Toronto, and we’ve been quite warm too. It’s now back down to “normal”.

Of course, it’s not so easy to find good downhill skiing here due to the terrain. There is some kinda-sorta skiing. But really, not so much. I googled and found ice fishing is going on despite the recent warm weather: http://chicago.suntimes.com/sports/7/71/259449/chicago-fishing-ice-fishing . The page does say “Ice fish at your own risk” for an awful lot of locations. (That said, I thought one always ice fished at ones own risk. The hobby seems to involve lots of schnapps while sitting around in a cold hut.)

Maybe changing my ‘Public Display Name’ will help… (this is a test.)

Ah, and wormpuss is so endearing.

Steve: a strange avatar for the charming Lucia, to say the least.

Why haven’t the more extreme scenarios been dropped? How do the suite of individual scenarios go from observations today to their previous endpoints in 2100?

Sorry for being a pain, but I’ve asked this question many places, and never receive a reply. Is there something basic I’m missing?

The scenarios start from 1998. Most are above all the temp graphs from UAH to NOAA2015. If the science is known, and the parameters have been determined, then the energy in the system is represented by the temperatures at any given moment. The endpoints of extreme projections first have to add energy in the system to get from “here” to the point they posit should have already happened, before they can carry on to 2100. No scenario has a cross-cutting increase of system energy to do that.

If I were to create a projection for my Company revenue that said we were already twice as profitable as the CFO said we were, I would be declared incompetent and released to a health care facility. Why aren’t the IPCC modellers treated thus?

There’s a few climate graphs out there used by activists which have been badly in need of updating.

Anyone want to bet against the end of 2015 very rapidly becoming the end point for most climate-to-model comparisons — and remaining that way for many years?

Actually not.. Zeke and kevin Cowtan and some others have added improvements to the methods used to do the comparisons. Zeke presented at AGU on it.

So, sure I’ll bet. you already lost

Mosher,

I don’t think Zeke can be called an “activist”! But even if he could, you can’t win that best until he and Kevin post data including 2016 which hasn’t happened yet. Right now 2015 is the end point and it’s unlikely they have used a later end point yet.

Oh.. that’s me. I created a blog on wordpress to comment here. And I gave it the name “worm puss”. I’ll have to fix that. Lucia

So basically, this guy posted an obnoxiously dismissive, wholly wrong one-liner, was immediately shown to be wholly wrong, and never admitted it. Seems to be a pattern.

“Skeptics obviously have taken issue with the surface record, but it should be kept in mind that the possibility of systemic bias in the satellite data also needs to be kept in mind.”

The “bias” in the TLT record comes from analyst choices. In order to adjust the various records ( from 9 different platforms) the analysts make choices.

Meers has recently unveiled the impact of these choices by doing a RSS ensemble,

much like the hadsst ensembles.. The uncertainty is very large.. The structural uncertainty in TLT dwarfs the measurement uncertainty.

Expect a publication, and howling to follow…

Steve: why has it taken Mears so long to write up the structural uncertainties in splicing the satellites? Isn’t this something that he could have done years ago. Also, IMO, it would also be nice if he analysed the uncertainties analytically using known statistical methods rather than doing a zillion simulations. Also, some of the splicing uncertainties pertain to the early period. For the post 1998-period, the splicing issues are more finite. I’d be surprised if the more downward trend in RSS satellite relative to surface measurements in the post-1998 period will be mainly due to splicing uncertainty. If I were quadruple checking this data for a Challenger flight, I’d be more concerned about leakage of stratosphere into the troposphere estimates and how that was dealt with.

snip

OT. Rules here requiring on-topic are not the same as elsewhere.

I have confidence that the “keepers” of the satellite temperature datasets will correct any “systemic bias” that is convincingly demonstrated. However, I doubt that the “keepers” of the surface temperature datasets will address the faults shown to exist in theirs, such as the warming bias demonstrated in Watts, et al.

Not talking about systematic bias. talking structural uncertainty. or a way of accounting for the uncertainty in the large adjustments made to satellite records.

Funny of course to see skeptics decide which people to trust.

Tell me… when was the last time you saw the satellite guys post

A) the raw series

B) the adjusted – Raw

C) The code to explain the how and why

Well, Steven, UAH v6 was introduced by a post at Roy Denver’s website in a study authored by him, John Christy, and William Braswell, April 28, 2015. They discussed the problems they had to deal with frankly, it seems. They made it clear that improvements and modifications were expected to be an ongoing process. This is as it should be, in science.

On the other hand, what is done to to the surface datasets other than a continual, minute process of steepening a warming trend by modifying past data? I doubt that we will see many other studies of the Watts, et al, performed in other countries.

There will always be a sound basis for doubt in surface datasets until the keepers clean it up.

I think the two satellite temperature datasets are close enough as to give a high degree of confidence in either.

Correction, Roy Spencer, not Denver.

This is why I trust them:

Update 13 July 1999***********************

Recent research has shown that the time series of

t2lt is affected by orbit decay. This effect causes

artificial cooling on the time series when examined

in isolation. (Wentz and Shabel 1998)…

Update 7 Aug 2005 ****************************

An artifact of the diurnal correction applied to LT

has been discovered by Carl Mears and Frank Wentz

(Remote Sensing Systems). This artifact contributed an

error term in certain types of diurnal cycles, most

noteably in the tropics. We have applied a new diurnal

correction based on 3 AMSU instruments…

They acknowledge and correct mistakes, and credit the parties who identify them. No international men of mystery are involved.

Also, their code is available, as you yourself note nearby.

Steve Mosher,

“The uncertainty is very large.. The structural uncertainty in TLT dwarfs the measurement uncertainty.”

This puzzles me a bit. Presumably the same reasoning is used for all of the transitions between the 9 platforms, yet the divergence of the TLT from surface measurements is only apparent in the second half of the satellite record. I look forward to Meers’ paper.

Its more than just splicing..Imagine taking every adjustment you have to make ( oribital decay, diurnal drift, etc ) and instead of just picking a single adjustment number you actually look at the range of values the adjustment could actually be. Instead of just picking a single number you actually look at the range.

In any case it will be hilarious because skeptics will be arguing for LESS uncertainty in the satellite record.

Which is a bit like saying you should not be surprised if you lost all your money on a highly diversified portfolio of mid cap stocks because they can potentially all go bust. Even if the individual adjustment ranges are – by some criteria – “high”, I would expect the error covariances to rein in the larger impacts on the aggregate adjustment error, assuming there is one that is noteworthy.

The main issue surely is the trend (or sub-trend if you like) divergence with the surface series from the turn of the century or so onwards. How are we to interpret a newly-uncovered wider error range? That the satellite series’ relative concordance with pre-2000 surface temperatures was a statistical fluke? Are the adjustments less accurate now than they used to be, and not just becoming increasingly so, but in only one direction? And if so, what is the physical explanation for this? I await the release of Mears’ work with interest.

Igsy.

You should be asking Spencer and Christy for the same work.

That is a full examination of the uncertainty due to adjustments.

After all, the science isnt settled.

Except of course the “science” that suggests there is a problem with satellites and the surface records.. THAT science is sacrosanct to skeptics.. never question

THAT.

Steve Mc: Mosh, since you’re commenting here and not at WUWT, I don’t recall ever regarding any of this stuff as “sacrosanct”, let alone the inviolability of satellite splicing or inversions.

The true model uncertainty dwarfs everything.

I too wondered why Mears took so long and why Spencer and christy havent even done it.

Make no assumptions about where the uncertainty lies.. Its more than just splicing

there are a whole host of adjustments made. if you review UHA code ( available finally) you’ll be amazed.. It would be nice if RSS made code available.

In any case, when Spencer gets around to doing the same thing perhaps he can use some help.. and have a good story why he took longer than Mears

I will be curious to see what they come up with. I’ve always found this to be fairly convincing because the radiosonde used here comprises the trend at the proper altitudes. There are two datasets in agreement with satellite data rather than just one.

http://www.remss.com/measurements/upper-air-temperature/validation

My guess is that Steve McIntyre is right about problems with stratospheric contamination being a greater factor but the differences in trend between sat and ground may be more due to what is actually happening in the atmosphere than we realize. Also, the ground set, which I have also averaged into a global series well before BEST was published, is not terribly clean no matter which country it comes from.

You should look at all the adjustments made to radiosonds.. all sorts of changes.. and VERY sparse data..

But never question that science. radiosonds is settled science

Steve: Mosh, you’re getting carried away. While radiosonde has not been a primary topic of interest at CA, on the two or so occasions that I looked at it, I drew attention to the huge problems of inhomogeneity and adjustments, especially when the size of the adjustments were the same order of magnitude as the putative signal. I do not see any commentary at CA that warrants your jibe or sarcasm on this point.

For example,

The latter article , inter alia, observed:

This still seems fair to me seven years later.

Steve, you are being unfair to me. I have always changed my opinions in the face of new data.

I have spent time with radiosonde data. John Christy is quite experienced with the radiosonde adjustments that are primarily related to instrument changes and I’ve discussed them with him by email. Fortunately the adjustments don’t correlate well with the satellite changes, except in one particular instance…

Anyway, two datasets are the target not just satellites and that is real data we must all address rationally.

Jeff I dont think I am being tooo unfair.

I would just compare the volumes of ink poured onto the TOBS question with the small amount of attention paid to Sonds.

With a few exceptions ( perhaps yourself and Mc) skeptics swallow sonds hook line and sinker. When was the last time you saw a skeptic compare the raw and adjusted sonds? when was the last time you saw a congress person demand the emails of sond adjusters?

What I am saying is very simple. In general skeptics say very little about the adjustments to sonds or to satellites. Little ink is spilled on it. The daily Mail never makes charges of fraud for adjustments..

But in the case of the surface data, where all the dirty laundry is published, you get the loudest cries of fraud. You get 7 years ( my history here ) of people STILL going on about TOBS as an example.

Ask any skeptic to name an adjustment in the surface record and they probably can.

Do you have any idea how many skeptics were shocked to find out that RSS is adjusted by GCM output?

Steve Mc: Mosh, I don’t understand why you’re litigating this complaint at Climate Audit of all places. I have frequently expressed frustration at the preoccupation with station data. I looked at the TOBS issue in 2006 or so and had no particular issue with it. Indeed, I think that my parsing of TOBS was the first blog discussion of this topic. I have some concerns about the use of home-made breakpoint methods and think that it would be prudent to examine the statistical properties of these methods in a more abstract way.

Yes, mc it seems fair

I liked this

“Radiosonde adjusters take adjustment to extremes not contemplated in the surface record – ultimately even changing the sign of the trend. Sort of like Hansen on steroids.”

Steve Mc: hmmm, that was forcefully expressed. So you agree that I haven’t treated radiosonde adjustments as sacred.

Mosh, the big adjustments to radiosonde trends don’t typically happen at the same time as sat overlap.

Be skeptical.

I can’t work it out easily, how did you do it?

Steve Mosher,

I think that maybe my last reply was unfair. I think though that if you want to say satellite data has huge problems, you have to also make the same comment about radiosonde as they both produce very similar answers.

As an exercise, I have mapped the difference by latitude between UAH and RSS and you can see a clear imprint of the land masses of the globe between the two methods. This means to me that contamination by ground might also be a bigger factor with respect to trends than is understood. Another factor is at about 86 (from memory), there is a satellite overlap which is particularly problematic for both satellites and radiosonde. A lot of effort has been put into all of the transitions though.

I wonder though, rather than a black hat, white hat, sat bad, ground good type solution or the reverse, perhaps the problem is with climate models not taking into account atmospheric flow correctly in the bottom 10m of the atmosphere. The models are oversensitive as Steve Mc has shown here no matter what so we have one very glaring problem, the CAM model I read the code of, isn’t well constructed to look at ground clutter effects.. SO, maybe the actual situation is that near ground temps and sat/sonde temp observations are all reasonably accurate. Maybe there should be a differential trend and proper less sensitive climate modeling with some better modeling of the surface layer would bring everything back into alignment.

Finally, I have also taken ground temp data and randomly sub-sampled it. It is an interesting exercise when you realize you can get below 100 stations and represent global trends pretty repeatably. It says that whatever measurement we are making is very repeatable. Is there a larger UHI effect than is generally reported, my opinion is that Anthony has demonstrated that. I did play games by sorting the stations according to various factors and was unable to easily find a differentiation, but his method is vastly better. So WILL that change the temp trends much, NO WAY. The ocean overwhelms land data. The ocean data does stink though as does the loose rationale for the corrections so frankly I don’t trust global ocean surface temp to be the gold standard they are held out to be either.

I don’t see the good guy, bad guy that is being promoted by the various extremes in climate discussions – quite heavily by government funded science IMO but I tend to ignore uninformed people who don’t understand climate science so my opinion may differ from others. I do see oversensitive models and observations of climate that may be inconsistent with each other. Opportunities for improvement exist there and I’m not sure that pointing out uncertainty in the satellite record is going to improve understanding.

Santer’s longstanding strategy has been to argue that one can ignore the discrepancy between models and observations because of uncertainty in observations. Arguing that uncertainty in splicing satellites is merely one more iteration of the Santer meme.

There are 12 CMIP5 climate models with multiple runs and of these 12, five have median trends exceeding 0.3 deg C/decade. This is a very very large gap with observations and I have difficulty believing that there is enough uncertainty in splicing to resolve this discrepancy. While there is undoubtedly some uncertainty associated with the splicing of satellites, there’s a difference between uncertainty and bias. Unless it can be shown that there is bias in the splicing, then the satellite trends will remain the same.

In addition, I presume that the splicing uncertainties are greater in the earlier part of the record. However, if one takes trends from 1995 on or something like that, there is the same or greater discrepancy. Whatever the true cause of the discrepancy between satellite trends and models, I doubt that it will be resolved by saying “uncertainty”.

IMO if one were trying to attack the lower trend in the satellite record, it would make more sense to show that it is somehow incorporating more stratospheric data than assumed in present RSS and UAH algorithms and that that stratospheric data is declining.

Jeff,

I found the sub-sample analysis (comparing RSS/UAH/balloons only where there were balloon measurements are available) quite convincing. There is not much disagreement between them when apples are compared to apples. The implication (as you suggest) is that the Satellite TLT data is not far from right, and that discrepancies with ground data are due to real differences…. and perhaps due to an imperfect understanding of heat transport dynamics in the lower atmosphere.

First, thanks for reading my comment so carefully. Second, we might all be in closer agreement with an insignificantly small difference dT/dh of the atmosphere of near surface temp.

“The strong El Nino has obviously caused great excitement in the

warmist“real” scientists’ community.”Kevin Trenberth was so excited about the prospects last April that he gave an interview in which he predicted:

He could be right that this ushers in a new stair step rather than a peak but I would love to get his reaction in a couple of years if the pause continues. Trenberth continued the interview in his typical humble fashion:

The trouble with Abraham is that he disagrees with almost all climate scientists with his recent paper (co-written with Tamino) which tells them that they are all wrong about a hiatus even existing in the first place but that they were too stupid to realise it.

And since Abraham’s expertise is in fluid mechanics he is no more a climate expert than anyone else. As for ‘second-rate’, several skeptics predicted the hiatus which most scientists – either warmist or coolist, still insist is there despite dogged upwards adjustments and despite Abraham/Tamino or Karl pretending otherwise.

Moreover the blatant cherry-picking of an el nino year sits uneasily with the constant warmist refrain that skeptics prefer to start the hiatus at the el nino year of 1998. In truth we use a trend and that trend is virtually unchanged by 2015 or the expected cool year of 2016.

The latest UAH V 6 data for Dec 2015 is 0.44 C, up about 0.1C from Nov.

http://www.drroyspencer.com/2016/01/uah-v6-global-temperature-update-for-dec-2015-0-44-deg-c/

“About a decade ago, there was considerable controversy over the discrepancy between satellite and surface trends.”

Lots of blog posts but few papers in the p-r literature. And even less in the past few years. Any ideas why this is a low research priority?

US Rep. Lamar Smith is investigating this issue and Karl et al (2015) by way of congressional subpoena of NOAA (and help from the organization Judicial Watch). Yesterday’s article in CNS News quotes Rep Lamar Smith:

http://www.cnsnews.com/commentary/tom-fitton/judicial-watch-comes-rescue-congress-climate-change-scam

That article also give a tip of the hat to our host:

Satellite versus Surface?

One measures Air temp at 2m

the other estimates the temperature of a column of air that is kilometers thick.

First step would probably be to compare the Surface with radiosonds.

Steven,

Yes, that w/b a logical first step. Why has it not been taken? Is reconciling atmospheric databases useful, perhaps s/b a priority?

As McIntyre said, there was a flurry of papers. The last I saw was interesting, but not in a high-impact journal: “Reconciling observations of global temperature change”. Energy and Env, 2013.

Click to access 07-Douglass-Christy-EnE-2.pdf

For the situation in 2007, here is a report by Temperature Trends in the Lower Atmosphere: Steps for Understanding and Reconciling Differences. A Report by the Climate Change Science Program and the Subcommittee on Global Change Research, Washington, DC.

Click to access sap1-1-final-all.pdf

The Chief Editor was the all-purpose Karl.

Not Popper we take it.

Richard,

That points to a powerful question about model predictions — relevant to thinking about reconciling models with observations.

“Confirmations should count only if they are the result of risky predictions; that is to say, if, unenlightened by the theory in question, we should have expected an event which was incompatible with the theory — an event which would have refuted the theory.”

— Karl Popper in Conjectures and Refutations: The Growth of Scientific Knowledge (1963).

The world warmed in the century-plus before 1950 (per IPCC after which antropogenic warming dominated). Is it a “risky” prediction to say that warming will continue at roughly the same rate? Popper might say that a theory’s prediction should differ from that default scenario in order to be significant and testable.

Hey, I was joking! Don’t hold me responsible for any addition to the automatic moderation trigger words 🙂

A paper you and others might find of interest regarding GCM’s.

Click to access UKPC09_Synthese.pdf

Excerpt:

Abstract The United Kingdom Climate Impacts Programme’s UKCP09 project

makes high-resolution projections of the climate out to 2100 by post-processing the

outputs of a large-scale global climate model. The aim of this paper is to describe

and analyse the methodology used and then urge some caution. Given the acknowledged

systematic, shared errors of all current climate models, treating model outputs

as decision-relevant projections can be significantly misleading. In extrapolatory situations,

such as projections of future climate change, there is little reason to expect

that post-processing of model outputs can correct for the consequences of such errors.

Steve,

I have an architecture/engineering background, and I understand that yours is in Engineering as well. Have you ever made a post regarding the profound differences between empirical-based applied sciences models and first-principle climate science/geoengineering models?

Steve,

Thank you for the reminder to watch for bias in both the satellite temperatures and the CMIP5 ones, because both swing around. A one-sided view would remind me of this story –

… Paddy drove his mule along the tow path, under the foot bridge, many times a day. Most times, the mule shied at the bridge and Paddy had to drag it along.

One day, with a bright idea, he took a shovel to the path and took about six inches of gravel out from under the bridge.

His mate Patrick watched him work, then declared that the fix would not work. “Paddy, that mule, it’s his ears is too long, not his legs.” …

I’m still having conceptual problems re the meaning of the mean and variance of an assemblage of model runs. There is a lot of distance between the “mules’ ears” hanging down and pointing up.

I trust that you are well on the path to recovery.

Have the models in the comparison been redone with the updated forcings, as suggested in this paper.

CMIP5 has been used by numerous peer-reviewed papers so this question seems like another red herring.

Models are constantly being updated and modified. Surface temperature anomaly estimates (which, by the way, should always display an error range/confidence interval) are frequently revised as well. The snapshot comparison displayed in this post is useful, nonetheless.

I know the snapshot is useful, but the question of updated forcings is a valid question. As I undertand it, the original CMIP5 runs were done using forcings that we “known” (or that weren’t guesses) up until 2005, and then estimated forcings for the period after 2005. It seems that the actual forcings post-2005 (and some of the pre-2005 forcings) are, in reality, different to what was assumed. Given that the goal of the models is not to predict what the change in forcings will be, but what the response will be to the change in forcings, updating the forcings seems like an important thing to do if you want to do a proper comparison between the models and the observations.

“…updating the forcings seems like an important thing to do if you want to do a proper comparison between the models and the observations.”

This is a relevant point, the CMIP5 gets periodically adjusted, particularly for volcanic aerosol cooling. The 1991-1994 dip in plotted CMIP5 in the first figure at top is surely the adjustment post Mt. Pinatubo. The CMIP5 protocol is not to predict volcanic events. This leaves the projection always at worst case (intentionally?)for the future.

aTTP:

As you point out, CMIP5 is circa 2005. So the proper comparison is between observed temps (well, HADCRUT 4.4 and/or RSS) and post-2005 model projections.

Not by coincidence, that is approximately the period during which models begin to consistently overestimate warming.

The earlier years are just eye-candy for the unwary.

Actually, the sort of problem began much earlier. The first patch was Hansen’s “discovery” of aerosol cooling.

one of the large problems in forcings is trying to locate data on actual forcings (other than CO2) on a consistent basis with forcings in the underlying model. Can you tell me where I can find the aerosol forcing used in (say) a HadGEM run and then the observed aerosols. Also data for observed forcings that are published on a timely basis, and not as part of an ex post reconciliation exercise.

I’ve spent an inordinate amount of time scouring for forcing data. I’m familiar with the obvious dsets, but they are not satisfactory.

Steve McIntyre,

“I’m unconvinced that the “physics” precludes lower sensitivity models.”

Yes, modelers make choices for parameters, consistent with ‘physics’, which influence the models, and there for certain is a lot of room for different choices, as evidenced by the (comically) wide range of sensitivity values diagnosed by different ‘physics based’, ‘state of the art’ GCMs. The problem is that the modelers appear unwilling to incorporate reasonable external constraints on critical factors like aerosol effects and the rate of ocean heat accumulation. Seems to me a couple of very important questions are being neither asked nor answered: Do the individual model’s heat accumulations match reasonably well the measured warming accumulation from Argo? Do the aerosol effects which each model generates align reasonably well with the best estimates of net aerosol effects from ‘aerosol experts’ (say, those who contributed to AR5)? My guess is that were these questions asked and answered, it would be clear why the models project much more warming than has been actually observed… parameter choices which lead to too much sensitivity combined with too high aerosol offsets and/or too much heat accumulation.

Some feet need to be put to the fire… or the models ignored.

Yes SteveF, holding feet to the fire is called for. My informants tell me GCM’s are intensely political and not a career enhancement vehicle. DOE is building a new one by 2017 but have apparently been told in very clear terms to not stray too far from what existing models use. It is depressing and sad. By contrast, turbulence modelers are generally more scientific and open minded.

Ken and Steve, It seems to me that the main argument for constructing low sensitivity models is to understand the effects of the various choices and there are so many in a GCM that the sensitivity to these choices are I believe badly understudied and under reported. That is true of turbulence models too, modelers know these things but they are almost never reported in the literature. A careful and systemic study would be a huge contribution and such a study has been started at NASA. However, large resources will be needed to do a rigorous job.

The real issue is the uncertainty in the models and since all the models are strongly related in terms of methods and data used, the usual 95% confidence interval is surely an underestimate and possibly a bad underestimate. This is what we found for CFD. The models are closely related and yet the variety of answers can be very large. We did study some methodological choices as well. But it turns out that its really difficult to isolate the uncertainty in the underlying turbulence models and methods because there are so many other sources of uncertainty such as grid density, level of convergence, etc. I personally don’t see how it is possible to really rigorously tune parameters in a climate model given the incredibly course grid sizes and the limited time integration times that are achievable on current computers.

Potsdam Institute has a database (actually ATTP gave me the link). Not updated since 2011, apparently.

http://www.pik-potsdam.de/~mmalte/rcps/

I downloaded the concentration and forcing Excels for RCP6. The former says 400ppm CO2eq for 2014, which is 1.9w/m2 (assuming 3.7w/m2 per doubling of CO2). But the forcing Excel disagrees says 2.2w/m2 for 2014. So I wouldn’t trust this stuff very much…

Steve: that is not what I was asking for. I am completely aware of RCP projections. My request was for OBSERVED data in a format consistent with IPCC projections. Giving me back the IPCC projections is not responsive. It is too typical of people like ATTP to give an obtuse and unresponsive answer. Also there is an important difference between EMISSIONS and CONCENTRATION. AR5 seems to have taken a step back from SRES in not providing EMISSION scenarios.

Well shame on me, the Potsdam website has files created in 2011 but the actual concentration data is indeed only for pre-2005; since that year it shows RCPs. So everybody else ignore that link unless you have some fondness for historical methane forcing.

No, the models are attempting to determine what will happen for a given concentration/forcing pathway. If the concentration/forcing pathway turns out to be different to what was initially assumed, then this should be updated in the models before doing the comparison. Essentially the concentration/forcing pathway is conditional: the model output is really saying “if the concentration/forcing pathway is what we assumed, this is what we would predict”. Hence if the concentration/forcing pathway turns out to be different, doing the comparison without updating the forcings is not a like-for-like comparison.

Your herring is growing more red by the minute.

The various concentration/forcing pathways are not the only source of flawed model projections.

Model output is grounded in physics and not adjusted?

“The graph is from the NRC report, and is based on simulations with the U. of Victoria climate/carbon model tuned to yield the mid-range IPCC climate sensitivity.”

http://www.realclimate.org/index.php/archives/2011/11/keystone-xl-game-over/

Models can definitely produce low sensitivity outputs. Older version of one developed by Prinn, known for high-sensitivity models, had parameters you an set for oceans and aerosols and clouds, and certain reasonable levels of these would produce warming close to 1C by 2100.

It is reasonable to evaluate models based on updated emissions scenarios. I have advocated that models should be frozen with code to allow for such evaluations at a later time.

The concentration/forcing pathways aren’t model projections at all, they’re inputs. That’s kind of the point. It’s a bit like saying “I predict that if you drop a cannonball from the 10th floor of a building, it will takes 2.5s to reach the ground” and you claim that the prediction was wrong because it only took 2s when you dropped it from the 7th floor.

Steve: as I understand it, the scenarios are supposed to be relevant and realistic. And rather than CO2 emissions being at the low end of the scenarios, they are right up at the top end of the scenarios from the earlier IPCC reports.

ATTP:‘…the model output is really saying “if the concentration/forcing pathway is what we assumed, this is what we would predict”’.

This is the Gavin Schmidt game of separating projection from prediction. He didn’t invent it; economists did. It is not fair in science to say when predictions are correct that they are validation and when they are wrong that they were qualified projections. That is creates an unfalsifiable argument, which by Karl Popper’s definition is the opposite of science.

Steve: I’m considering putting “Popper” on my list of proscribed words.

Ron,

What? Let’s say I develop a model that is used to understand how some system will respond to some kind of externally imposed change. I then assume something about what that external change will probably be and I run the model. I then report that if the change is X, the model suggests that Y will happen. If, however, in reality the change that is imposed is different to what I assumed would happen, then if I want to check how good the model is, I should redo it with what the actual external change was.

The point is that climate models are not being used to predict what we will do AND what the climate will do. They’re really only being used to understand the climate. That what was assumed about what we would do (the concentration pathway) turns out to be different to what we actualy did, doesn’t mean that the models were somehow wrong.

If observed CO2 emissions have been at the top end of scenarios (as they have been) and observed temperatures have been at the very bottom end of scenarios, it seems reasonable to consider whether the models are parameterized too warm. From a distance, it seems like far more effort is being spent arguing against that possibility than in investigating the properties of lower-sensitivity models.

I didn’t say the pathways were projections, I said they were not the only source of flaws in model projections. Obviously, if the feedbacks and physics are poorly modeled you can project significant warming even with a lower concentration pathway.

Bottom line: If CMIP5 was “good enough” to demand global economic restructuring, I think it’s good enough for the purposes of this post.

opluso,

None of what you say is really an argument against updating the concentration pathway if you know that what actually happened is different to what you initially assumed.

Except climate sensitivity is an emergent property of the models. You can’t simply create a lower sensitivity model if the physics precludes such an outcome. As you have probably heard before, the model spread is intended to represent a region where the observed temperatures will fall 95% of the time. If the observed temperatures track along, or outside, the lower boundary for more than ~5% of the time, there would certainly be a case for removing some of the higher sensitivity models and trying to understand why the models tend to produce sensitivities that are higher than seems reasonable (or trying to construct physically plausible models with lower sensitivity). However, this doesn’t appear to be what is happening and, hence, the case for trying to artificially construct lower sensitivity models seems – IMO – to be weak.

I’m unconvinced that the “physics” precludes lower sensitivity models. In any other walk of like, specialists would be presently exploring their parameterizations to see whether they could produce a model with lower sensitivity that still meets other specifications. The seeming stubbornness of the climate community on this point is really quite remarkable.

there are dozens of parameterizations within the model. There is obviously considerable play withing these parameterizations to produce results of different sensitivity, as evidenced by the spread that includes very “hot” models like Andrew Weaver’s. The very lowest sensitivity IPCC models are still “in ore”. Opposition to investigation of even lower sensitivity parameterizations strike me as more ideological than objective.

Ken Rice says:

Actually, I haven’t heard that before. My understanding is that the models were independently developed and represented an ensemble of opportunity rather than being designed to cover a 5-95% spread. What, if any, is your support for claiming that the model spread is “intended to represent a region where the observed temperatures will fall 95% of the time”? Can you provide a citation to IPCC or academic paper?

Steve: in responding to Rice’s outlandish assertion, I expressed myself poorly above. There is no coordination among developers so that the models cover a space, but it is incorrect to say that they are “independently developed”. There are common elements to most models and systemic bias is a very real possibility, as acknowledged by Tim Palmer.

I didn’t say that they did preclude it, I simply said “if they preclude it”. The problem as I see it is that if we actively start trying to develop models that have low sensitivity then that’s not really any different to actively trying to develop ones that have high sensitivity. Even though there are parametrisations, they are still typically constrained in some way.

What makes you think there’s opposition? Maybe it’s harder than it seems to generate such models and maybe people who work on this don’t think that there is yet a case for actively doing so.

If the extraordinary and systemic overshoot of models in the period 1979-2015 doesn’t constitute a case for re-opening examination of the parameter selections, I don’t know what would be. In other fields e.g. the turbulence example cited by a reader, specialists would simply re-open the file, rather than argue against it.

In fact, I pointed out that in far more important situations (e.g., COP21), CMIP5 projections have been acceptable. Therefore, in the context of this post, there is simply no need to compile a CMIP6 database before examining the existing hypotheses.

I strongly suspect that even if SMc had satisfied your desire for an updated CMIP, you would say he should wait for HadCRUT 5.

Ecs is an emergent property just as boundary layer health is for a turbulence model. Developers of models who I know personally are much smarter than Ken Rice seems to believe. They know how to tweak the parameters or the functional forms in models to change the important emergent properties. For climate models where many of the emergent properties lack skill, one needs to choose the ones you care most about. According to Richard Betts for the Met office model they care most about weather forecast skill. Toy models of planet formation are not the same ballgame at all.

I’ve no idea why you would say this as I’ve said nothing about how smart, or not, model developers might be. All I do know is that no one can be as smart as you seem to think you are.

Steve: this is a needlessly chippy response. The commenter had made a useful substantive point: “They know how to tweak the parameters or the functional forms in models to change the important emergent properties” in response to your assertion that the models were grounded on physics. Do you have a substantive response to this seemingly sensible comment.

Having almost infinitely better understanding of CFD modeling than you Ken is more accurate. Modelers could produce low ECS models if they wanted to do so. I share Steve M’s puzzlement as to why. There are some obvious explanations having to do with things like the terrible job models do with precipitation that may be higher priorities.

Steve: I’d prefer that you and Ken Rice tone down the comparison of shall-we-say manliness.

Have the models had their concentration/forcing pathways updated? Have you considered sampling bias in the surface temperature dataset? Have you considered uncertainties in the observed trends? Have you considered the analysis where only models that have internal variability that is in phase with the observations shows less of a mismatch? Maybe your supposed gotcha isn’t quite as straightforward as you seem to think it is?

Oooh, I wonder who that could be?

I don’t know of anyone who’s specifically arguing against it. All I was suggesting is that it may be that it’s not as straightforward as it may seem. If a group of experts are not doing what you think they should be doing, maybe they have a good reason for not doing so.

perhaps. What is it?

On the other hand, there’s a lot of ideological investment in high-sensitivity models and any backing down would be embarrassing. Had there been less publicity, it would have been easier to report on lower sensitivity models, but unfortunately, this would undoubtedly be felt in human terms as some sort of concession to skeptics.

The boxplot comparisons deal with trends over the 1979-2015 period. This is a long enough period that precise phase issues are not relevant. Further, the comparison in the present post ends on a very large El Nino and is the most favorable endpoint imaginable to the modelers.

I rest my case.

I think there is a great deal of ideological desire for low-climate sensitivity too.

All I’m suggesting is that there are many factors that may be contributing to the mismatch and that it may not be quite as simple as it at first seem. To add to what I already said, there’s also the blending issue highlighted by Cowtan et al.

As for your 95% question that you asked. You’re correct, I think, that the models are intended to be independent, so I wasn’t suggesting that they’re somehow chosen/tuned to give that the observations would stay within the spread 95% of the time (although I do remember having discussions with some – maybe Ed Hawkins – who were suggesting that some models are rejected for various reasons). I was suggesting that if the observations stayed out for more than 5% of the time, then we’d have a much strong case for arguing that the models have an issue (given that the observations are outside the expected range for much longer than would be reasonable).

in responding to your assertion that the models were designed to cover a model space, I did not mean to suggest that the models are “independent” in a statistical sense. For example, I said that the ensemble was one of opportunity. The models are not “independent”, as elements are common to all of them – a point acknowledged by Tim Palmer somewhere. The possibility of systemic bias is entirely real and IMO there is convincing evidence that there is. I’ve added the following note to my earlier comment to clarify:

We recently did an analysis of CFD models for some very simple test cases and discovered that the spread of results was surprisingly large. These models also are all based on the same boundary layer correlations and data. This spread is virtually invisible in the literature. My belief is that GCMs are also all based roughly on common empirical and theoretical relationships. I also suspect that the literature may not give a full range of possible model settings or types and may understate the uncertainty, but this would be impossible to prove without a huge amount of work.

The argument that transient climate response TCR is an emergent property of the models is based on the assumption all the model parameters are constrained by lab-validated physics. “It’s just physics,” as I’ve heard said. What I believe is remarkable is that a scientific body approved a protocol that leaves the mechanics of the physics blind to outside review. The CMIP5 models in fact are such black boxes that TCR does not “emerge” but with the use of multiple linear regressions on the output of multiple realizations. In other words, one run gives a TCR the next run can give a different one. One can manipulate TCR not only by selective input but also by selective choice of output or ensemble mix and its method of analysis. If it were just physics why is there 52 model pairs, each producing unique responses?

“The concentration/forcing pathways aren’t model projections at all, they’re inputs.”

Indeed.

Aren’t the models in CMIP run using several scenarios – RCP8.5, RCP6 RCP4.5 and so on?

A valid comparison might then be: if real emissions between RCP4.5 and RCP6, then let’s compare those model runs to your preferred measurement metric.