In the past few weeks, I’ve been re-examining the long-standing dispute over the discrepancy between models and observations in the tropical troposphere. My interest was prompted in part by Gavin Schmidt’s recent attack on a graphic used by John Christy in numerous presentations (see recent discussion here by Judy Curry).  Schmidt made the sort of offensive allegations that he makes far too often:

Schmidt made the sort of offensive allegations that he makes far too often:

@curryja use of Christy’s misleading graph instead is the sign of partisan not a scientist. YMMV. tweet;

@curryja Hey, if you think it’s fine to hide uncertainties, error bars & exaggerate differences to make political points, go right ahead. tweet.

As a result, Curry decided not to use Christy’s graphic in her recent presentation to a congressional committee. In today’s post, I’ll examine the validity (or lack) of Schmidt’s critique.

Schmidt’s primary dispute, as best as I can understand it, was about Christy’s centering of model and observation data to achieve a common origin in 1979, the start of the satellite period, a technique which (obviously) shows a greater discrepancy at the end of the period than if the data had been centered in the middle of the period. I’ll show support for Christy’s method from his long-time adversary, Carl Mears, whose own comparison of models and observations used a short early centering period (1979-83) “so the changes over time can be more easily seen”. Whereas both Christy and Mears provided rational arguments for their baseline decision, Schmidt’s argument was little more than shouting.

Background

The full history of the controversy over the discrepancy between models and observations in the tropical troposphere is voluminous. While the main protagonists have been Christy, Douglass and Spencer on one side and Santer, Schmidt, Thorne and others on the other side, Ross McKitrick and I have also commented on this topic in the past, and McKitrick et al (2010) was discussed at some length by IPCC AR5, unfortunately, as too often, deceptively on key points.

Starting Points and Reference Periods

Christy and Spencer have produced graphics in a similar style for several years. Roy Spencer (here) in early 2014 showed a similar graphic using 1979-83 centering (shown below). Indeed, it was this earlier version that prompted vicious commentary by Bart Verheggen, commentary that appears to have originated some of the prevalent alarmist memes.

Figure 1. 2014 version of the Christy graphic, from Roy Spencer blog (here). This used 1979-83 centering. This was later criticized by Bart Verheggen here.

Christy’s February 2016 presentation explained this common origin as the most appropriate reference period, using the start of a race as a metaphor:

To this, on the contrary, I say that we have displayed the data in its most meaningful way. The issue here is the rate of warming of the bulk atmosphere, i. e., the trend. This metric tells us how rapidly heat is accumulating in the atmosphere – the fundamental metric of global warming. To depict this visually, I have adjusted all of the datasets so that they have a common origin. Think of this analogy: I have run over 500 races in the past 25 years, and in each one all of the runners start at the same place at the same time for the simple purpose of determining who is fastest and by how much at the finish line. Obviously, the overall relative speed of the runners is most clearly determined by their placement as they cross the finish line – but they must all start together.

The technique used in the 2016 graphic varied somewhat from the earlier style: it took the 1979 value of the 1975-2005 trend as a reference for centering, a value that was very close to the 1979-83 mean.

Carl Mears

Ironically, in RSS’s webpage comparison of models and observations, Christy’s longstanding adversary, Carl Mears, used an almost identical reference period (1979-84) in order that “the changes over time can be more easily seen”. Mears wrote that “If the models, as a whole, were doing an acceptable job of simulating the past, then the observations would mostly lie within the yellow band”, but that “this was not the case”:

The yellow band shows the 5% to 95% envelope for the results of 33 CMIP-5 model simulations (19 different models, many with multiple realizations) that are intended to simulate Earth’s Climate over the 20th Century. For the time period before 2005, the models were forced with historical values of greenhouse gases, volcanic aerosols, and solar output. After 2005, estimated projections of these forcings were used. If the models, as a whole, were doing an acceptable job of simulating the past, then the observations would mostly lie within the yellow band. For the first two plots (Fig. 1 and Fig 2), showing global averages and tropical averages, this is not the case.

Mears illustrated the comparison in the following graphic, the caption to which states the reference period of 1979-84 and the associated explanation.

Figure 2. From RSS here. Original caption: Tropical (30S to 30N) Mean TLT Anomaly plotted as a function of time. The the blue band is the 5% to 95% envelope for the RSS V3.3 MSU/AMSU Temperature uncertainty ensemble. The yellow band is the 5% to 95% range of output from CMIP-5 climate simulations. The mean value of each time series average from 1979-1984 is set to zero so the changes over time can be more easily seen. Again, after 1998, the observations are likely to be below the simulated values, indicating that the simulation as a whole are predicting more warming than has been observed by the satellites.

The very slight closing overlap between the envelope of models and envelope of observations is clear evidence – to anyone with a practiced eye – that there is a statistically significant difference between the ensemble mean and observations using the t-statistic as in Santer et al 2008. (More on this in another post).

Nonetheless, Mears did not agree that the fault lay with the models, instead argued, together with Santer, that the fault lay with errors in forcings, errors in observations and internal variability (see here). Despite these differences in diagnosis, Mears agreed with Christy on the appropriateness of using a common origin for this sort of comparison.

IPCC AR5

IPCC, which, to borrow Schmidt’s words, is not shy about “exaggerat[ing or minimizing] differences to make political points”, selected a reference period in the middle of the satellite interval (1986-2005) for their AR5 Chapter 11 Figure 11.25, which compared a global comparison of CMIP5 models to the average of 4 observational datasets.

Figure 3. IPCC AR5 WG1 Figure 11.25a.

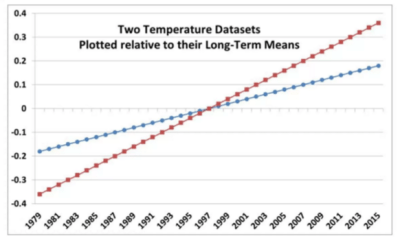

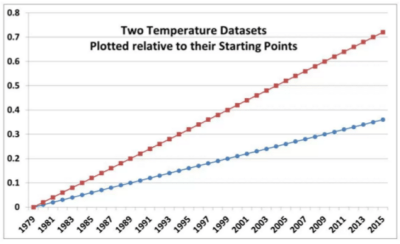

The effective origin in this graphic was therefore 1995, reducing the divergence between models and observations to approximately half of the full divergence over the satellite period. Roy Spencer recently provided the following diagram, illustrating the effect of centering two series with different trends at the middle of the period (top panel below), versus the start of the period (lower panel). If the two trending series are centered in the middle of the period, then the gap at closing is reduced to half of the gap arising from starting both series at a common origin (as in the Christy diagram.)

Figure 4. Roy Spencer’s diagram showing difference between centering at the beginning and in the middle.

Bart Verheggen

The alarmist meme about supposedly inappropriate baselines in Christy’s figure appears to have originated (or at least appeared in an early version) in a 2014 blogpost by Bart Verheggen, which reviled an earlier version of the graphic from Roy Spencer’s blog (here) shown above, which had used 1979-83 centering, a choice that was almost exactly identical to the 1979-84 centering that later used by RSS/Carl Mears (1979-84).

Verheggen labeled such baselining as “particularly flawed” and accused Christy and Spencer of “shifting” the model runs upwards to “increase the discrepancy”:

They shift the modelled temperature anomaly upwards to increase the discrepancy with observations by around 50%.

Verheggen claimed that the graphic began with an 1986-2005 reference period (the period used by IPCC AR5) and that Christy and Spencer had been “re-baseline[d]” to the shorter period of 1979-83 to “maximize the visual appearance of a discrepancy”:

The next step is re-baselining the figure to maximize the visual appearance of a discrepancy: Let’s baseline everything to the 1979-1983 average (way too short of a period and chosen very tactically it seems)… Which looks surprisingly similar to Spencer’s trickery-graph.

Verheggen did not provide a shred of evidence showing that Christy and Spencer had first done the graphic with IPCC’s middle-interval reference period and then “re-baselin[ed]” the graphic to “trick” people. Nor, given that the reference period of “1979-83” was clearly labelled on the y-axis, it hardly required reverse engineering to conclude that Christy and Spencer had used a 1979-83 reference period nor should it have been “surprising” that an emulation using a 1979-83 reference period would look similar. Nor has Verheggen made similar condemnations of Mears’ use of a 1979-84 reference period to enable the changes to be “more easily seen”.

Verheggen’s charges continues to resonate in the alarmist blog community. A few days after Gavin Schmidt challenged Judy Curry, Verheggen’s post was cited at Climate Crocks as the “best analysis so far of John Christy’s go-to magical graph that gets so much traction in the deniosphere”.

The trickery is entirely the other way. Graphical techniques that result in an origin in the middle of the period (~1995) rather than the start (1979) reduce the closing discrepancy by about 50%, thereby, hiding the divergence, so to speak.

Gavin Schmidt

While Schmidt complained that the Christy diagram did not have a “reasonable baseline”, Schmidt did not set out criteria for why one baseline was “reasonable” and another wasn’t, or what was wrong with using a common origin (or reference period at the start of the satellite period) “so the changes over time can be more easily seen” as Mears had done.

In March 2016, Schmidt produced his own graphics, using two different baselines to compare models and observations. Schmidt made other iconographic variations to the graphic (which I intend to analyse separately), but for the analysis today, it is the reference periods that are of interest.

Schmidt’s first graphic (shown in the left panel below – unfortunately truncated on the left and right margins in the Twitter version) was introduced with the following comment:

Hopefully final versions for tropical mid-troposphere model-obs comparison time-series and trends (until 2016!).

This version used 1979-1988 centering, a choice which yields relatively small differences from Christy’s centering. Victor Venema immediately ragged Schmidt about producing anomalies so similar to Christy and wondered about the reference period:

@ClimateOfGavin Are these Christy-anomalies with base period 1983? Or is it a coincidence that the observations fit so well in beginning?

Schmidt quickly re-did the graphic using 1979-1998 centering, thereby lessening the similarity to “Christy anomalies”, announcing the revision (shown on the right below) as follows:

@VariabilityBlog It’s easy enough to change. Here’s the same thing using 1979-1998. Perhaps that’s better…

After Schmidt’s “re-baselining” of the graphic (to borrow Verheggen’s term), the observations were now shown as within the confidence interval throughout the period. It was this second version that Schmidt later proffered to Curry as the result arising from a “more reasonable” baseline.

|

|

Figure 5. Two figures from Gavin Schmidt tweets on March 4, 2016. Left – from March 4 tweet, using 1979-1988 centering. Note that parts of the graphic on the left and right margins appear to have been cut off, so that the graph does not go to 2015. Right- second version using 1979-1998 centering, thereby lowering model frame relative to observations.

The incident is more than a little ironic in the context of Verheggen’s earlier accusations. Verheggen showed a sequence of graphs going from a 1986-2005 baseline to a 1979-1983 baseline and accused Spencer and Christy of “re-baselining” the graphic “to maximize the visual appearance of a discrepancy” – which Verheggen called “trickery”. Verheggen made these accusations without a shred of evidence that Christy and Spencer had started from a 1986-2005 reference period – a highly questionable interval in the first place, if one is trying to show differences over the 1979-2012 period, as Mears had recognized. On the other hand, prompted by Venema, Schmidt actually did “re-baseline” his graphic, reducing the “visual appearance of a discrepancy”.

The Christy Graphic Again

Judy Curry had reservations about whether Schmidt’s “re-baselining” was sufficient to account for the changes from the Christy figure, observing:

My reaction was that these plots look nothing like Christy’s plot, and its not just a baseline issue.

In addition to changing the reference period, Schmidt’s graphic made several other changes:

- Schmidt used annual data, rather than a 5-year average.

- Schmidt showed a grey envelope representing the 5-95% confidence interval, rather than showing the individual spaghetti strands;

- instead of showing 102 runs individually, Christy showed averages for 32 models. Schmidt seems to have used the 102 runs individually, based on his incorrect reference to 102 models(!) in his caption.

I am in the process of trying to replicate Schmidt’s graphic. To isolate the effect of Schmidt’s re-baselining on the Christy graphic, I replicated the Christy graphic as closely as I could, with the resulting graphic (second panel) capturing the essentials in my opinion, and then reproduced the graphic using Schmidt centering.

The third panel isolates the effect of Schmidt’s 1979-1998 centering period. This moves downward both models and observations, models slightly more than observations. However, in my opinion, the visual effect is not materially changed from Christy centering. This seems to confirm Judy Curry’s surmise that the changes in Schmidt’s graphic arise from more than the change in baseline. One possibility was that change in visual appearance arose from Christy’s use of ensemble averages for each model, rather than individual runs. To test this, the fourth panel shows the Christy graphic using runs. Once again, it does not appear to me that this iconographic decision is material to the visual impression. While the spaghetti graph on this scale is not particularly clear, the INM-CM4 model run can be distinguished as the singleton “cold” model in all four panels.

Figure 1. Christy graphic (left panel) and variations. See discussion in text. The blue line shows the average of the UAH 6.0 and RSS 3.3 TLT tropical data.

Conclusion

![]() There is nothing mysterious about using the gap between models and observations at the end of the period as a measure of differing trends. When Secretariat defeated the field in the 1973 Belmont by 25 lengths, even contemporary climate scientists did not dispute that Secretariat ran faster than the other horses.

There is nothing mysterious about using the gap between models and observations at the end of the period as a measure of differing trends. When Secretariat defeated the field in the 1973 Belmont by 25 lengths, even contemporary climate scientists did not dispute that Secretariat ran faster than the other horses.

Even Ben Santer has not tried to challenge whether there was a “statistically significant difference” between Steph Curry’s epic 3-point shooting in 2015-6 and leaders in other seasons.  Last weekend, NYT Sports illustrated the gap between Steph Curry and previous 3-point leaders using a spaghetti graph (see below) that, like the Christy graph, started the comparisons with a common origin. The visual force comes in large measure from the separation at the end.

Last weekend, NYT Sports illustrated the gap between Steph Curry and previous 3-point leaders using a spaghetti graph (see below) that, like the Christy graph, started the comparisons with a common origin. The visual force comes in large measure from the separation at the end.

If NYT Sports had centered the series in the middle of the season (in Bart Verheggen style), then Curry’s separation at the end of the season would be cut in half. If NYT Sports had centered the series on the first half (in the style of Gavin Schmidt’s “reasonable baseline”), Curry’s separation at the end of the season would likewise be reduced. Obviously, such attempts to diminish the separation would be rejected as laughable.

There is a real discrepancy between models and observations in the tropical troposphere. If the point at issue is the difference in trend during the satellite period (1979 on), then, as Carl Mears observed, it is entirely reasonable to use center the data on an early reference period such as the 1979-84 used by Mears or the 1979-83 period used by Christy and Spencer (or the closely related value of the trend in 1979) so that (in Mears’ words) “the changes over time can be more easily seen”.

Varying Schmidt’s words, doing anything else will result in “hiding” and minimizing “differences to make political points”, which, once again in Schmidt’s words, “is the sign of partisan not a scientist.”

There are other issues pertaining to the comparison of models and observations which I intend to comment on and/or re-visit.

282 Comments

The Verheggen and Spencer links go to the same place, Spencer’s.

Steve: fixed.

The Consensus of 97% of the Models is that the Earth is flat out wrong.

or

The Consensus of 97% of the Models is that this is the wrong Earth. 🙂

Of course it’s all political. Statistics allows a person to twist the data to whatever whim you’re chasing. The problem for Gavin Schmidt is that historical context is irrelevant to where we’re going. Looking backwards results in nothing because we can’t change the past (unless you work for NOAA). Divergence, whether or not it’s by 50% as great as some other researchers’ conclusion, is still a divergence.

There are more differences between the left and right panels of figure 5 than a simple baseline change. For example, looking at the model-mean (dark black) curve, on the right panel, years 2005/6/7 form a “v” shape, while on the left, the curve increases over that interval. Or consider 2004, which is the bottom of a small “v” in the satellite series — on the left the model-mean curve shows a corresponding “v”, while the right panel lacks that feature. Etc.

Steve: you’ve got sharp eyes. I noticed this as well and have been trying to reverse engineer. You did well to spot so quickly.

Also, Gavin seems to have mixed up his data labeling. For example, he shows RSS 3.3 as warmer than UAH v5.6 when in fact the reverse is true: RSS has been cooler than UAH v5.6 this century. The whole of Gavin’s plot seems of dubious accuracy. Tweet science.:-)

In the Figure 1 caption is there supposed to be a link in the word “here”. “This was later criticized by Bart Verheggen here.”

It reads that way to me.

Unless you mean Bart Verheggen criticized the graph at Climate Audit?

On balance – seems like a link is missing (to me).

Steve: thanks. fixed.

Lessons from Lysenko; the Russians have the best model, and it is obvious why. But the lesson is not about models, but about narrative.

==============

Advances in climate science: moved on from “hide the decline” to “hide the discrepancy.” Great article, thanks. Can anyone point me to a discussion of the differences among the models? Wondering why the Russian one runs cooler.

Better clouds, better oceans. Mebbe better aerosols and water vapour feedback.

=================

Ron Clutz did an analysis. Bottom line is more ocean thermal inertia, less water vapor feedback.

Thanks, Rud. Ron Clutz was my source but I neither remembered his name nor accurately his findings. See my bias on display?

============

Steve, Any idea why the grey envelope in the two Schmidt charts that you presented are visually different? It looks much more than rebaselining to different time period means should account for.

Steve: Masterful exposition.

I second that! Even I can understand this, and I will now be sure I understand the “baseline” used when I am viewing such comparisons. I was being fooled!

Thank you!

Reblogged this on CraigM350.

I really can’t believe that a scientist with peer-reviewed papers to his name, like Gavin Schmidt, would stoop to trying to hide the gap in such a way. In a race with error-prone observations, the only thing at issue is how to fairly draw a line between the runners at the start of the race. It is not whether the correct time to draw that line is at the start of the race or in the middle of the race.

Rich.

Got this from Gavin Schmidt on Twitter re: this article

Steve: the issue of observational uncertainties was discussed at length in McKitrick et al (2010) pdf and the rejected MM commentaries on Santer et al 2008. pdf pdf. As I noted in my post, I plan to discuss other aspects of the comparison in forthcoming posts.

But it was my understanding that Schmidt held that the Christy baseline was not “reasonable”, while other warmists (Sinclair, Verheggen) accused Christy of “trickery” in connection with the baseline. If Schmidt does not agree with such accusations and believes that the issues lie elsewhere, then it would be helpful if he says so.

Observation Error, not Structural Uncertainty?

Structural uncertainty, aka model inadequacy, model bias, or model discrepancy, which comes from the lack of knowledge of the underlying true physics. It depends on how accurately a mathematical model describes the true system for a real-life situation, considering the fact that models are almost always only approximations to reality. One example is when modeling the process of a falling object using the free-fall model; the model itself is inaccurate since there always exists air friction. In this case, even if there is no unknown parameter in the model, a discrepancy is still expected between the model and true physics.

Experimental uncertainty, aka observation error, which comes from the variability of experimental measurements. The experimental uncertainty is inevitable and can be noticed by repeating a measurement for many times using exactly the same settings for all inputs/variables.

Qualitatively, what the MSU and RAOB measurements indicate is that for the MSU era, the Hot Spot has not appeared. Exactly why and what that means with respect to AGW would appear to be open to conjecture.

One problem with assuming large error with the upper air obs is that

the obs tend to agree qualitatively with each other and with models:

1.) in the lower, mid, and upper stratosphere globally

2.) in the lower troposphere globally

3.) in the lower, mid, and upper troposphere over the Arctic

That the RAOBS and MSU tend to agree everywhere,

and that they tend to agree with the models everywhere but the mid and upper sub-polar troposphere

tends to strengthen the case for the observations.

Radiative forcing is occurring, the earth’s surface is warming.

But are sub-grid scale parameterizations are unlikely to harbor predictive power in GCMs?

more missing the point

??? The real “point” was not missed by Mr. Schmidt previously. For purposes of politics and partisanship and obtaining money, graphic representations are important. Such is what is shown to the politicians and public–not tables of data. It is partisanship and calling it “science” while calling the opponent “partisan” is just part of the game. Schmidt’s no fool, he knows how to keep the money coming. Of course, he has to follow through with the “structural uncertainty in the ‘obs'” line successfully because if he cannot, he has stepped into a trap of his own instigation.

Structural uncertainty

Latest buzzterm of the faithful. Fits well in tweets. Lends itself to a multitude of expressions and is vague enough to gain currency with the AGW crowd. In short, pseudoscience at its best. Mosh loves it.

funny. When I talked about structural uncertainty back in 2007, skeptics thought it was great to talk about.

It’s not that hard to understand. It’s basically model inadequacy. In the data analysis of observations

there are often times where we make adjustments. These adjustments are based on models. Those models themselves are sources of potential bias.

Take RSS for example.

1. Satellites do not sample the earth at uniform times.

2. To “shift” all the observations to a standard time (local noon ) the measurements must be adjusted.

3. To do this adjustment a model is created ( ie, if its 34F at 9AM whats the temp at noon.

4. RSS used a SINGLE GCM to create this model.

5. Given THAT GCM you get one collection of “shifts”

So the question is “Is that model good? or how much does that arbitrary choice of using one GCM impact

the final answer?

To look at that you then do a sensitivity analysis by picking other GCMs..

And BAM! what do you see?

You see that the choice of GCM can change the answer. BIG TIME!

Or Take all the “re calibrations” that have to be done to sat data.

1. The sensors are calibrated in the lab.

2. The calibration gives you two datapoints: One for “cold space” ( say 700 counts) and one for the

hot target ( 3500 counts ).

3. You then generate a non linear calibration curve. Without this curve you cannot transform

sensor counts into temperature.

4. The satillite launches and then you find out… OPPS.. the in field “counts” for cold space are 1800!

Jesus. You have to generate a different non linear calibration curve. That is, you have to create

a new MODEL for transforming counts into temperature.

5. You guessed it! that model itself is a source of uncertainty.

Most skeptics are not skeptical enough.

S.M. – I do not see how your comments about data analysis of observations impeach McIntyre’s comments re: Schmidt’s comments re: reference periods. I.e., one could cavil or find major fault with one or more or all sets of observations, and the same for one or more or all of the model runs. Such is not fundamental to a discussion here about reference periods in these graphical representations.

Mosh, Gavin tweets “structural uncertainty in the ‘obs'”. What do you think, satellite bashing?

Could be. Lot of that going around today. Christy and Spencer seemed to have stuck a harpoon deep in AGW with their graphics. I predict that satellite bashing will increase. Funny that Mears leads the charge, but remember, RSS depends entirely on public funding: grants from NASA, NSF, NOAA. And of course, they stand to lose that if the election goes the wrong. By the way, Santa Rosa is not far from Berkley, right? About 50 miles, I think. How’s ehak?

“S.M. – I do not see how your comments about data analysis of observations impeach McIntyre’s comments re: Schmidt’s comments re: reference periods. I.e., one could cavil or find major fault with one or more or all sets of observations, and the same for one or more or all of the model runs. Such is not fundamental to a discussion here about reference periods in these graphical representations.”

I’m responding to mpainter. Not to Steve’s analysis, which seems spot on.

These are the unaddressed issue with comparing Sat “observations” to model outputs is that few

have described in clear enough detail to know how exactly they actually get and process the “model” data.

1. What source do they use? do they use the data as generated by the models? Or the data AS archived

at the official data archives? or do they download from a source like KNMI which does not

document its process of archiving data?

2. What hPa do they use? How do they reconcile that with satellite data which is a proxy for

the entire column ?

3. How do they treat the time of observation? satellite data is for local noon, what about GCM data?

4. Masking. All the satellite data has gores, at the poles and at various regions ( Tibet and Chile)

do they mask appropriately?

Once folks are clear on the source data and how it is acquired, then you can address the chartsmanship issues.

I dont have any fervid interest in the chartsmanship issues or the baselining issues until the basics are addressed.

first things first. Where did you get the data. when did you get it. how did you get it. how did you process it and THEN how did you present it.

That’s just how I do everything. chartsmanship is always last for me and the most uninteresting, cause its basically politics and rhetoric. Its OK to be interested in that, manyfind it fun. I dont so much.

I agree, there’s a lot that goes into the MSU stew.

But RAOBs and multiple MSU analyses all tend to agree qualitatively, with height, with maxima and minima, and location.

And they tend to confirm the model predictions in areas other than the Hot Spot.

“Mosh, Gavin tweets “structural uncertainty in the ‘obs’”. What do you think, satellite bashing?”

Err no.

When HADSST was redone using an ensemble approach ( multiple adjustmeent approaches ) my sense was this

was a good thing. It wasnt bucket bashing.

When Thorne stressed the importance of structural uncertainty in early drafts of Ar5 I thought it was a good thing.

When Mears published his analysis of structural uncertainty I thought it was a good thing. When I posted

about that here, some folks said “Its about Time!!” its a good thing

Since 2007 a few people have been asking for the code and data especially for satellites. google magicjava.

Now that the code is available ( but hard to find ) you can expect folks to look at it and question

the assumptions of data analysis. That’s a good thing. I dont care about the politics.

Long ago (2012) At berkeley earth we had one guy dedicated to looking at satellite data. So I sat through

a bunch of rocket science presentations. My take away? Both sides were putting waaay too much faith

in the measurements. tons and tons of assumptions.

It’s not an issue of bashing. Its a simple issue of understanding every step and every decision made in the process and then looking at how those choices shape the answer.

you are welcome to defend the “observations” but I’d suggest looking at the sausage factory first.

Steve Mosher: “Once folks are clear on the source data and how it is acquired, then you can address the chartsmanship issues.

I dont have any fervid interest in the chartsmanship issues or the baselining issues until the basics are addressed.

first things first. Where did you get the data. when did you get it. how did you get it. how did you process it and THEN how did you present it.”

I think this subject is O/T for this thread, but I agree with your comments. I have never seen it aggressively argued that the GCM’s may be right because observational uncertainty is so great! Seems like a good way to get hoisted on a petard.

Mosh, far be it from you to bash satellites? You describe them as a

“Sausage factory”.. and you don’t call it satellite bashing to say that?

Would you describe Berkeley earth as a “sausage factory”?

####

Also, if Gavin did not mean satellites, what could he have meant?

###

Also, Spencer, Christy, and Braswell are about to publish.Sit tight and then have at. But Mosh,you surely consider it possible that they know their business better than you do.

### Good to know that your and Gavin’s and Mear’s motivations are unsullied by any base political considerations. I think.

Regarding the sniping against the Spencer, Christy observations vs models graphics, I’m fine with satellite data, which I consider to be more reliable than the surface datasets, even though these depend on satellite data, to some extent, such as infilling. The real discrepancy between satellite and surface data is in the last four years, more or less. When Spencer, Christy, and Braswell publish (soon, hopefully), there will be intense interest. Then Spencer, Christy, and Braswell will have their say. It should be good.

Mosh, your own BEST product has been criticized a lot. I have never seen you address the sitting issues (spurious warming), nor such issues as raised by Willis Eschenbach, nor your assumptions in your algorithms. But the best defense is a good offense. I predict that you will continue to ignore these criticisms and join in with Mears, Schmidt, and the rest in attacking the UAH product.

“Mosh, far be it from you to bash satellites? You describe them as a

“Sausage factory”.. and you don’t call it satellite bashing to say that?

Would you describe Berkeley earth as a “sausage factory”?”

1. and you don’t call it satellite bashing to say that?. Nope. It is a sausage factory.

Look at the code. Judge for your self. It might be tasty sausage. I like tasty sausage.

2. Would you describe Berkeley earth as a “sausage factory”?. Of course. There are many moving

parts. Again, be more skeptical and go look for yourself. List the assumptions and decisions

made in all the approaches. Compare, explain, quantify.

It still amazes me that no one wants to look critically at the adjustment processes of the satellite

data. It’s actually quite stunning. For years I told folks that RSS adjusts their data with a GCM.

did folks care that physics they dont trust (GCM) is used to adjust “raw” data? Nope. That might be

because they like the “observations”. me? every dataset gets the same treatment. Show me the source data.

Show me every step and every decision. It seems weird to suggest that I put my skepticism aside.

“Regarding the sniping against the Spencer, Christy observations vs models graphics, I’m fine with satellite data, which I consider to be more reliable than the surface datasets, even though these depend on satellite data, to some extent, such as infilling. The real discrepancy between satellite and surface data is in the last four years, more or less. When Spencer, Christy, and Braswell publish (soon, hopefully), there will be intense interest. Then Spencer, Christy, and Braswell will have their say. It should be good.”

“I’m fine with satellite data, which I consider to be more reliable than the surface datasets, even though these depend on satellite data, to some extent, such as infilling. The real discrepancy between satellite and surface data is in the last four years, more or less. ”

1. Err No. the “discrepancy” is limited to specific times and regions.

2. During the MSU period, there is little to no discrepancy.

3. During the AMSU period, the vast majority of the discrepancy is over land.

4. the discrepancy over land is focused on Areas of the globe that exhibit temperature inversions

5. the discrepancy over land is focused on areas of the globe where the surface characteristics have

changed.. namely changes that impact emissivity, like snow cover.

6. For RSS the calculation of temperature assumes a constant emissivity of earth.

“Mosh, your own BEST product has been criticized a lot. I have never seen you address the sitting issues (spurious warming),”

1. We published a paper on it.

2. in July 2012 here on Climate audit I asked Anthony for his “newest data” he refused. I argued

That he could just sit on the data for years and that I would sign an NDA to see it.

I was informed that my concerns about delays in releasing the data were unfounded.

That data is still unreleased.

3. The actual siting criteria have never been field tested properly. The only test I know of

( Le Roy co worker) indicated really small biases.. on the order of .1C.

So I guess you could say that no one ever responded to my criticism of SITING RATINGS.

basically, the site rating system (CRN1-5) has never been tested as a valid rating system.

of course, Since we wrote a paper using his system, you can expect that we would have written

to him and asked for the field test data that backed up the rating approach..

Like I said, skeptics need to be MORE skeptical. You’all just swallowed the CRN rating approach

cause NOAA did. you never actually checked its history.

“nor such issues as raised by Willis Eschenbach, nor your assumptions in your algorithms. But the best defense is a good offense. I predict that you will continue to ignore these criticisms and join in with Mears, Schmidt, and the rest in attacking the UAH product.”

1. splitting stations was WILLIS’S idea.

2. the second criticism I am aware of is his criticsm of using 500meter data for UHI studies

a) I’ve re looked at classifications using 300 meter data. No difference.

b) the chinese have just posted 30 meter data. Processing all that will take time.

c) I have a standing offer to anyone who wants to suggest a better metric for urbanity

3. The “sawtooth” critique.

a) Looked for this phenomena. Doesnt exist

b) the critique itself doesnt understand how adjustments are done. Anyone who read the code

can see that.

4. Assumptions in the algorithms. There are tons of assumptions. They are all listed in the Appendix

those that could be tested via sensitivity were tested. As an example, how to do breakpoint

So, I havent ignored the criticisms. What I do find is that when I answer one

“what about micro site?”

answer: read our paper.

the answer is typically ignored.

the best criticisms of the implemetation belong to

A) mwgrant

B) brandon and Carrick

5. Attacking the UAH product? Since when is writing about RSS an attack on UAH?

And since when is looking at uncertainty an attack?

Err, yes, the real discrepancy has occurred in recent years, as I stated. Of course I meant the global anomaly and I think that you knew that.

You repeatedly ignore comments that address perceived faults in BEST methods, practices, etc. This is the impression that you project universally. So your complaints about others have no force, don’t you see?

Please show how land discrepancies are not the result of spurious warming via siting issues, fallacious infilling, and data fiddling of the sort that has been copiously documented. You see, if you wish to compare land datasets with satellite, you are going to have credibility

You never made it clear that you were not including UAH in your criticism of “satellites” (you used the plural repeatedly). So now you say that you meant RSS, not UAH? This discussion has come to this.

Err, yes, the real discrepancy has occurred in recent years, as I stated. Of course I meant the global anomaly and I think that you knew that.”

1. You said “The real discrepancy between satellite and surface data is in the last four years”

2. I said the AMSU period. That starts in 1998. Longer than 4 years.

3. The details in the grid cells tells you more. A real skeptic would push down

to the DETAILS. like I said, be more skeptical

“You repeatedly ignore comments that address perceived faults in BEST methods, practices, etc. This is the impression that you project universally. So your complaints about others have no force, don’t you see?”

1. You cited microsite. We wrote a paper on that. That is not ignoring.

2. Willis had 2 complaints that I recall. UHI 500 meter issues. I addressed that

‘Sawtooth” I could not find anything like that in the data.

3. JeffId had an issue about how we calculated CIs. We did it his way and the CI

got SMALLER.

4. Brandon and carrick had an issue with smoothing. We acknowledged the possibility

in fact we raised the issue ourselves first

5. carrick had an issue with Correlation being different east to west. We had already

noted that in our paper.

6. Rud had an issue with an antarctic station. We had already noted that antartica was

problematic. I think the issues are related to temperature inversions and told Rud

as much.

7. mwgrant has issues with the description of climate as merely a function of latitude

and altitude. That’s a real issue but we’ve found that adding other variables to

the regression didnt help.

I am sure there is no end to objections. One simple answer is this. If you have a problem

we have provided all the tools and data you need to SHOW how changing our assumptions

will change the answer. So, if you think microsite matters, go get the data. remove

all the bad stations and DEMONSTRATE that there is a problem. In short we give

you all the tools to prove how our decisions skew the answer. You think “sawtooth” is

a problem? go find one. Generally the approach is this: A guy says

“what about airports?” I tell him “we looked at removing all airports” we looked at

airports versus non airports” he says.. what about heliports? what about unicorns?

what about every other tuesday? you see the “making work” is endless. Folks know

how this works. This is what was done to Odonnells work on antartica.. where the reviewers

just forced him to do endless work. In any case, you have the code. you have the data.

THOSE are the best tools to actually demonstrate a point. you know, like when SteveM shows

how the answer changes when you make decisions differently than Mann did.

“Please show how land discrepancies are not the result of spurious warming via siting issues, fallacious infilling, and data fiddling of the sort that has been copiously documented. You see, if you wish to compare land datasets with satellite, you are going to have credibility”

1. Spurious warming due to siting has never been demonstrated. the last attempt was 2012.

That “paper” was withdrawn by Anthony. the data remains unavailable.

2. We dont infill.

3. When comparing Land to Satellite in my mind neither gets PRIORITY. I will note

that the various versions of UAH and RSS have dramatic differences. That is structural

uncertainty. lets put it this way. OR..If you accept that CRN stations are a gold standard

then the bad stations match them exactly over the last 15 years.

“You never made it clear that you were not including UAH in your criticism of “satellites” (you used the plural repeatedly). So now you say that you meant RSS, not UAH? This discussion has come to this.”

UAH is subject to the same TYPES of objections that RSS is subject to. Their next gen product differs materially from their last gen product. THAT should tell you something about structural

uncertainty. If tommorrow I said I had a new way of calculating temperatures and my

trend increased by 5% you’d kinda wonder now wouldnt you?. Satellites is PLURAL

because there are Multiple satellites used. For christs sake you didnt even know the switch over to AMSU was in 1998. Again, be more skeptical. More skeptical about siting issues, more skeptical about satellite adjustments, more skeptical about method changes that yeild vastly

different answers.

In the end here is what we have.

1. GCM models: and Statistical models of the data they put out.

2. Models of satellite observations

3. Models of surface observations

4. Models of Sond data.

None of those models ( physical or statistical) are perfect. they are all subject to error and bias. the best place to start is to compare them all with a clear understanding of all the uncertainties. Then you might START the process of figuring out which was most reliable.

Again, you need to be more skeptical.

Mosher, sophistry is your forte.

By the way, Mosh, satellite bashing is the new style with the AGW hard core, as I’m sure you know.

You say Gavin Schmidt was not satellite bashing with his tweet “structural uncertainty in the obs” but you offer no alternative meaning for his tweet. You probably have none. It’s a safe bet that he was sniping at UAH, Christy, Spencer.

Mears is satellite basher #1. That is, when he is not bashing “denialists” (his word).

Funny that. The RSS website still claims that their product is “research quality data”. Should the conscientious citizen compare that claim with what Mears has recently published on MSU/AMSU data reliability? Or, rather, unreliability? Seems to be a big discrepancy. Perhaps the RSS funding sources (NASA, NOAA, NSF) should be alerted. Certainly they would be concerned if public funds were being wasted.

I stick with my prediction that satellite bashing will intensify among the AGW hard core. And that UAH will be the main focus.

As for Mears and RSS, if they do not produce “research quality data”, I do not see how their funding by the public can be justified.

In the end here is what we have.

1. GCM models: and Statistical models of the data they put out.

2. Models of satellite observations

3. Models of surface observations

4. Models of Sond data.

Yes.

And all four tend to agree ( within noise ) everywhere except for the Hot Spot ( which may or may not appear later ).

Confirmation of all, except the GCM Hot Spot?

Mosh and his RSS-GCM obsession appears again.

It’s a valid point…just like criticisms of the adjustments to the surface record, which seem to happen much more frequently and with much greater magnitude.

Steve: please do not coat-rack discussion of the surface record onto the issue of baseline-shifting. I know that there have been prior comments, but enough.

This is probably true in certain quarters. Undoubtedly Monckton will have chosen the data which suited his argument. Though he is smart and correct about many things, he is no more an honest broker then Gavin Schmidt.

Again UAH does not use GCM, and this is a good reason to prefer their extractions.

It was interesting that the warmist RSS showed less warming the sceptical UAH, that was reassuring about the objectivity of both efforts. – snip about motives

Don’t know what WordPress did the markup there. I closed the ‘strong’ tag and inserted a strike out. The last sentence was NOT as strikeout:

Steve: it should have been. blog policies discourage attribution of motives to people, especially base motives. It also discourages reference to public political figures, except in sanctioned threads. I regard both parties named as political figures.

-snip

BTW, Mosh always forgets to mention this in regard to satellites…

=======================================

“The weights applied to the pressure level temperatures are determined by radiation code that has been empirically tested. We’ve tested such results with controlled radiosonde measurements and found them to be virtually identical – this was all published in our papers years ago (see Spencer and Christy 1992a,b, Christy et al. 2003, Table 2 of 1992a and Table 7 of 2003 show regional correlations of 0.95 to 0.98)”

=======================================

Mosher writes

This is a weather adjustment, not a climate adjustment. There will be no accumulated errors in making these adjustments, its a straightforward less than 24 hour calculation which we are quite good at.

Compare that with a GCM projecting out say 100 years where the energy accumulation errors accumulate with every 24 hours of calculation that “passes”. That kind of bias obviously impacts temperature slopes whereas its not so obvious that the errors introduced by a weather calculation will impact the temperature slope.

Gavin is moving the pea again!

what happens when we rebase the global twmp anomaly,1850-2016

and centre it it around -cough- 1934??

1934 eh? That is the problem with all base periods. They are constantly changing as the surface record is constantly being adjusted.

1934 was warmer in 1980 then it is in 2016.

Reblogged this on TheFlippinTruth.

Translation for MSM journalists:

Most (OK, many) people are familiar with statistical deceptions that involve fiddling with the starting point of numbers at the base of a graph (things going upwards or downwards).

Lo, and behold, similar tricks can be done using the axis on the left-hand side of the graph (things going sideways).

When someone (hint?) produces an update of Darrell Huff’s famous 1954 book “How to Lie with Statistics”, Gavin Schmidt will merit a passing reference in the first chapter.

Most of the argument would-be resolved by using actual temperatures, as proper scientists do, instead of this anomaly contrivance so loved by the climate researchers.

But then, worse problems would be revealed.

Is it science or illusion?

Geoff.

1. Satellites dont give you actual temperatures.

2. RSS DOES provide data tables of an estimated temperature. UAH? if you search roys blog

I think he provided some help in a comment somewhere to help folks go from anomaly to temps

3. Working in real temps has some advantages, but you have to articulate them on a case by case basis.

blanket statements about “use real temps” are not very insightful

4. Even when you use ‘real ‘ temps with satellite data there is an element of arbitrariness: see

RSS ATBD and the references to baselining everything to NOAA-10

“1. Satellites dont give you actual temperatures.”

Nor do liquid-in-glass thermometers, they simply allow you to observe two points on a linear scale, which is not a temperature.

And resistive thermometers give you the change in current through a resistor – an even greater abstraction than the liquid-in-glass thermometer.

yes.

I have made this point several times.

However.

1. The scale on the LIG is linear ( within the temperatures we are talking about and for mercury )

2. The transformation of ‘digital counts” to temperature is Non Linear.

3. The assumptions that are required for LIG amount to this: The liquid inside will expand when it warms.

The amount of expansion will be roughly linear within the range of the device.

4. The asssumptions required for satellites? let me list a few

a) the surface emissivity is constant

b) humidity profiles dont change over time

c) Radiative transfer physics is correct.

d) cloud emissivity doesnt change over the POR

Now most interesting is 4c. You realize that the physics required to turn digital counts into brightness temperature IS THE CORE OF AGW theory?

Mosh, you say

“Now most interesting is 4c. You realize that the physics required to turn digital counts into brightness temperature IS THE CORE OF AGW theory?”

###

But in fact, AGW is principally a hypothesis that puts positive feedback of increased atmospheric water (as vapor and clouds) as an amplifier of CO2 forcing which itself would be inconsequential without such amplification. The whole AGW debate is an inverted pyramid with its tip balanced delicately on the assumptions regarding increased atmospheric water (vapor/clouds). Thus, the crux of the AGW issue is not so much radiative physics as you suppose, rather, it is the poorly founded assumptions concerning atmospheric water.

####

Also, “4. The asssumptions required for satellites? let me list a few

a) the surface emissivity is constant

b) humidity profiles dont change over time

c) Radiative transfer physics is correct.

d) cloud emissivity doesnt change over the POR”

Again, you leave to the reader to determine that you do not refer to UAH but only to the RSS methods. A little clarity would greatly improve your commenting style. In this case, you might have made it clear that your “assumptions required for satellites” did not include UAH.

Mosher wrote about Liquid in Glass thermometers:

“3. The assumptions that are required for LIG amount to this: The liquid inside will expand when it warms. The amount of expansion will be roughly linear within the range of the device.”

There are lots of other additional assumptions required for LIG records.

a) The LIG thermonmeter level must be converted by a fallible human into some specific number.

b) The archiving of that number must be done without error.

c) The 10s of thousands of fallible humans that collect the records are all reading the thermometers in the same way.

c) Time of observation errors.

d) Siting errors.

e) Thermometer enclosure errors (aspiration vs no aspiration).

f) Urban heat island errors.

I’m sure readers could think of many others.

Some of these problems may occur more generally than for just LIG thermometers, but they are still embody assumptions that must be made about the LIG records.

I find that Godel’s incompleteness theorem provides useful context here: any non-trivial logic argument requires an INFINITY of postulates. The vast majority of those postulates may be trivial (For instance, that the universe will not vanish over the time period of interest.), but there are always going to be a lot of important assumptions that must be made. This does not undercut the value of science and mathematics, but should be a caution to all that practice it.

Certainly, it does not appear that the exceedingly complex collection and collation of human LIG observations is likely to be less error prone than a small number of closely monitored satellites.

“But in fact, AGW is principally a hypothesis that puts positive feedback of increased atmospheric water (as vapor and clouds) as an amplifier of CO2 forcing which itself would be inconsequential without such amplification. ”

1. first things first. DO you accept that the effect from c02 alone will be 1.2-1.5C?

2. Yes feedbacks play a role in getting responses up to 4.5C

3. Do you deny there is a possiblility of positive feedbacks?

“The whole AGW debate is an inverted pyramid with its tip balanced delicately on the assumptions regarding increased atmospheric water (vapor/clouds). Thus, the crux of the AGW issue is not so much radiative physics as you suppose, rather, it is the poorly founded assumptions concerning atmospheric water.”

1. No. Its not an inverted pyramid.

2. As long as people agree that 1.2-1.5C is the low estimate established by a no feedback

case THAT is enough to have a dialog about feedbacks. You seem to think its settled

science that there cant be positive feedbacks. I’m skeptical. I think they could be positive.

####

Also, “4. The asssumptions required for satellites? let me list a few

a) the surface emissivity is constant

b) humidity profiles dont change over time

c) Radiative transfer physics is correct.

d) cloud emissivity doesnt change over the POR”

Again, you leave to the reader to determine that you do not refer to UAH but only to the RSS methods. A little clarity would greatly improve your commenting style. In this case, you might have made it clear that your “assumptions required for satellites” did not include UAH.

UAH uses radiative transfer codes that they themselves wrote. dont be stuck on stupid.

The weighting functions they use also assume a) and b)

Go read their documentation.

“Mosher wrote about Liquid in Glass thermometers:

“3. The assumptions that are required for LIG amount to this: The liquid inside will expand when it warms. The amount of expansion will be roughly linear within the range of the device.”

There are lots of other additional assumptions required for LIG records.

a) The LIG thermonmeter level must be converted by a fallible human into some specific number.

b) The archiving of that number must be done without error.

c) The 10s of thousands of fallible humans that collect the records are all reading the thermometers in the same way.

c) Time of observation errors.

d) Siting errors.

e) Thermometer enclosure errors (aspiration vs no aspiration).

f) Urban heat island errors.

#####################

First, I am talking about the PHYSICAL THEORY that gets you from the expansion ot liquid to a temperature. Recall, the reader objected that LIG was a PROXY.

a) The LIG thermonmeter level must be converted by a fallible human into some specific number.

Yes, and you can estimate this error. It is tiny.

And the same goes for Any instrument even a satellite

b) The archiving of that number must be done without error.

yes. same goes for a satellite. I find errors all the time.

c) The 10s of thousands of fallible humans that collect the records are all reading the thermometers in the same way.

yes and you can test for consistency same with satelittes

c) Time of observation errors.

Satillites have larger TOB errors. In fact RSS uses a GCM to correct for them.

d) Siting errors.

Satellite gores. Sensor drift. deteriorating sensors

e) Thermometer enclosure errors (aspiration vs no aspiration).

Hot target errors.

f) Urban heat island errors

You can test for this. remove the urban stations. No difference.

For satellites, errors in removing surface reflections from high altitude

parts of the world. Changing water content of the soil. changing snow

changing tree cover. all of those changes impact the return from the surface

This return must be subtracted OUT so that the returns from the atmopshere are

clean. The number of assumptions is huge.

Some of these problems may occur more generally than for just LIG thermometers, but they are still embody assumptions that must be made about the LIG records.

Yes and we can test the assumptions by comparing subsets of records.

We can compare LIG to other methods.

NONE of those assumptions drives the record.

I find that Godel’s incompleteness theorem provides useful context here: any non-trivial logic argument requires an INFINITY of postulates. The vast majority of those postulates may be trivial (For instance, that the universe will not vanish over the time period of interest.), but there are always going to be a lot of important assumptions that must be made. This does not undercut the value of science and mathematics, but should be a caution to all that practice it.

playing the GODEL card in a pragmatic discussion is a losing move.

“Certainly, it does not appear that the exceedingly complex collection and collation of human LIG observations is likely to be less error prone than a small number of closely monitored satellites.”

All you have to do is look at the change in the last two versions of UAH.

or look at RSS ensembles. The uncertainties they admit to are huge.

Your argument from complexity is backwards. Many little mistakes in the LIG record

basically sum to zero.

One mistake in the satellite record gives you huge errors. Just look at past versions.

Mosh: “1.first things first”

###

As you insist. First, in order that the discussion might proceed in accordance with sound scientific principles, please give support to the assumption that measurements obtained in the laboratory can be applied in a straightforward way to the dynamics of the atmosphere.

Steve: Please don’t. editorial policy at this blog encourages comment on the narrow issue of the thread, as otherwise every thread quickly generates the same arguments.

“…yes.

I have made this point several times…”

Except in this case, you pointed it out as if it were only a shortcoming of the satellites.

Nice touch linking to ATTP from here. Good Lord.

Steve: ATTP made a civil comment upthread. I am encouraged by any breaks in the long-standing fatwa against commenting at CA and prefer that readers are polite.

““…yes.

I have made this point several times…”

Except in this case, you pointed it out as if it were only a shortcoming of the satellites.

Nice touch linking to ATTP from here. Good Lord.”

1. Huh? Your esp is off. as if.

2. ATTP? I read everywhere. I also post everywhere. Did somebody appoint you as link nanny?

Steve: Mosh, I’d prefer that you walk by potential food fights.

There is no guarantee they sum to zero. There have been many changing practices and many “corrections” applied. 70% of the surface record is SST, and that’s a whole other kettle fish ( no pun intended ). LIG may be a more direct proxy of the temperature of hte bulb of the thermometer but the problem, sadly, does not end there.

Mosher writes

IMO the problem for a lot of people is that they see the ~1.1C increase from a doubling of CO2 and then consider what happens from there. Obviously a warmer atmosphere holds more water vapour and so the natural thing for far too many people to think is that the feedback is positive.

– snip-

Steve: Blog policies discourage efforts to argue CAGW from first principles in every thread. I realize that Mosher initiated this (and he ought to know that I discourage this sort of discussion in order to maintain readabliity), but I’m picking up the editing this morning here.

I suppose that if the aim is to pinpoint differences in trend rather than in timelines then a cleaner solution would have been to extract an index of trend, such as slope of the best fit line, to each model or ensemble realization. These could be plotted as a histogram or cumulative frequency curve on which the corresponding points from the observations could be marked. It is at least arguable that if you plot the time series then it is the time series as a whole that you seek to compare rather than one property of the time series. One could take the matter a stage further and ask what is the chance that the observations could derive from a population that can throw up a sample such as the histogram incorporating the uncertainties in the observed trend.

Steve: such analyses have been discussed in the past in connection with Santer et al. In addition, I’ve used boxplots in this way. Also, as I said in my post, I have other posts in the works. Gavin Schmidt also presented a histogram, but did not analyse the results. I intend to analyse this.

Wasn’t meaning to teach Grandma how to suck eggs and look forward to your analysis focused on trend or other properties of the data.

Reblogged this on ClimateTheTruth.com.

It’s the liberal way. Let’s not hurt the models’ feelings so let’s have a “handicap” race so they all finish equal.

LOL – that’s one of the best comments I’ve ever seen!

Yeah, tweeted. Thanks to Steve for such a clear exposition of the baselining dispute, with help from Secretariat and Steph Curry. I’m sure I’ll have much to learn from other installments in the series.

Reblogged this on Tallbloke's Talkshop and commented:

.

.

Still hiding the decline. They never learn.

Thanks, I saw the spat on Twitter and it looked trivial – but then again, I suppose you don’t become an alarmist without being able to build up the smallest thing into some mountain of an issue.

However, I really wish people would use 1990 as the start date because in the 2001 IPCC report, the IPCC said that warming would be at least 1.4C from 1990-2100. This would then allow the comparison of models, official prediction and actual temperatures.

“The trickery is entirely the other way. Graphical techniques that result in an origin in the middle of the period (~1995) rather than the start (1979) reduce the closing discrepancy by about 50%, thereby, hiding the divergence, so to speak.”

I think one could argue to display two sets of results with the average of both removed so as to compare gradients. I could argue that the baseline should be an average of the period, but I would need the curve to be averaged over that period — so it couldn’t start before the average and would not run to the end.

1986-2005? What! If you are using that starting point you should use a twenty year average otherwise it is deceitful.

… On reflection I would like to remove my comment that “it looked trivial” and instead I think Gavin ought to apologise for his appalling graph …. there’s no reason for his average …. it’s not a total average, there’s a massive discrepancy between baseline period and the averaging period shown in the graph. In short, he is showing apples with a baseline to cheese.

The 2 figures from the Schmidt tweet shown above can be compared under simple graphics to save the eye doing it.

Method: Copy the right pane, use a colour invert, overlay the invert onto the left pane then change the transparency of the inverted pane to 50% or so.

Black becomes white, other colours become their RGB inverse and it looks like this –

While there are still separate objects, base + overlay, before you compress into one, you can toggle up/down, stretch X or Y axis etc to match the chosen reference points.

Here, I have simply made the year dates match and the horizontal zero lines match (now less visible with white overlain on black to give mid grey like the background.)

There are more cases of difference than match really.

Not good images to compare by eyeball.

Thanks Geoff. Transparency (with or without inversion) is a great method for comparison.

While we are at it, I wonder if model runs can be equated to pilots to give more problems with their average.

http://www.thestar.com/news/insight/2016/01/16/when-us-air-force-discovered-the-flaw-of-averages.html

Geoff.

Verheggen’s criticism is entirely reasonable. If you baseline on short periods, the result depends a lot on what period you choose. That’s undesirable anyway, and of course lends itself to cherry-picking. As he points out, if you baseline from 1986 to 2005, you get a fairly good correspondence throughout, with a peak in about 1979-1983. If you choose the latter baseline, it just moves evrything down, in the historical and the RCP’s. You can see that the blue curve, especially, dives down over the first three years which creates a discrepancy that is maintained through the historical. A bad match. Shifting that base period forward three years would undo this, to considerable effect. And there is no basis for saying that one or the other is right, as a start period. The “start of race” analogy is juvenile.

To me, the more interesting question is what the climate model numbers actually are? He compares them with temperatures in two different places. Lower troposphere and surface land/ocean (SST). Do the CMIP numbers correspond with one or the other of these? Or something else again?

There’s a fallacy in here somewhere. If the race were tortoise and hare, it makes a great deal of difference where the slice you look at is taken. If the models are zeroed to measured at some date, why isn’t that alignment point their start and a reasonable place to start the performance comparison?

“If the models are zeroed to measured at some date”

They certainly aren’t zeroed at 1979. Christy did that. Models are generally initialised at some remote past date. The reason is that it is well acknowledged that they aren’t solving an initial value problem, so it is more important to attenuate artefacts from initialisation than trying to specify a recent initial state.

Steve: you avoided the following: Do you agree with Verheggen’s accusation of “trickery” against Spencer and Christy? If so, on what grounds do you believe that Mears did not also engage in “trickery”? Or do you also believe that Mears engaged in “trickery”?

Okay, Nick, here’s the problem:

There is a discrepancy between observations and the products of the models.

How should the faithful deal with this glaring efficiency of the models?

Simple enough, just divide the discrepancy into two parts, stick one part at the beginning of the time series comparison and the other part at the end.

Now you can argue all kinds of inanities about “comparison of means”, “model initialization”, “baseline error”, etc. Really there is no limit to the smoke and fog one can generate. Such a clever fellow, that Gavin Schmidt.

Roy Spencer calls the whole debate “silly”, and he is right.

Deficiency, not efficiency.

Oh, yes, mpainter. That’s EXACTLY how it looks like. And the fact that Nick Stokes finds it necessary to defend this “silly” action is also revealing

Nick,

Do you really think Spencer/Christy “Forced” the graph to begin at 1979… Or somehow intentionally “Did” it for any reason (what-so-ever) other than the fact that the satellite era started in 1979..?

This seems to smack of the same sort of charges of nefariousness so condemned in the mainline climate community, you know, the one where they claim that every single argument made is no longer valid because the arguer brought up the idea that whatever was done had an ulterior motive rather than some innocuous Occam’s-Razor type explanation.

“Do you really think Spencer/Christy “Forced” the graph to begin at 1979”

They forced, by offsetting, the CMIP runs to all have 1979-1983 averages equal to 0. That was in the version Verheggen was criticising (Fig 1), and is a rather unnatural thing to do to CMIP. You can see in the more recent plot shown small at the top that uses trendline matching, that there is a scatter in the same period. That is a more reasonable approach.

Nick, you continue to dance around. The hard truth is that it is the trends of the models that depart so egregiously from observed trends. But you won’t admit this.

Nick, you state,

=================

“If you offset to force the error to be zero at 1979, then of course it will grow..”

===================

Why “of course”? Are you saying it will grow because more time is given to show the over warming in the models? Is this not desirable if you wish to improve your marksmanship?? If you are making a consistent error in one direction, then more time will clarify that error will it not?

If, over longer periods the models do not predict to much warming, but are just off over short time frames should not more time demonstrate this, and likely correct it? Therefore your “of course” is not of course” at all.

BTW some skeptics say the models are not informative. but actually they are. If they were all over the park, some very much over warm, some very much over cold, then I would say they are not informative.

Because they are all wrong in ONE DIRECTION, they are highly informative, likely of some general errors in C.S. to CO2.

1979 was when the satellite record began. If I wanted to compare the trend from the beginning of satellite readings to how well models trended over that same period, of course I’d “zero” everything to the start date of the satellite record! Why WOULDN’T I??????

Especially if those models were calibrated using the satellite data themselves!

The idea that the models wouldn’t match up with, or be close to, “observations” means by default that the models can’t simulate reality! If those model runs were hind casts that included the actual satellite data that started in 1979, IOW, they were preprogrammed with specific 1979 data, and they STILL didn’t get 1979 “right”, then they are worthless!

Taking a chart Christy (or anyone) made for a specific purpose, data charted to demonstrate a specific comparison, and attacking what you presume or assume the author’s motives were, because YOU personally do not like that chart for some reason is simple ad hominem-logical fallacy, which has NO place in science.

Calvin’s chart shows the models don’t track reality just like Christy’s does. Normal, rational observers wonder why the leader of NASA’s “climate science community” isn’t discussing THAT instead of bickering over chart centering.

“Really there is no limit to the smoke and fog one can generate.”

That’s right. You never see these pseudotechnical criticism toward the graphs generated to support decadal-scale AGW conjectures, Schmidt and Marvel’s Bloomberg graph, for example. They always flow in one direction

The problem is one of compounding annual error in the models. If you start in 1995, rather than 1979, you allot fewer years for the error to grow even though the ultimate trends are the same.

If one assumes the models accurately recreate global climate one might argue that it makes no difference. Yet the evidence does not suggest that is the case (compounding error).

If one is interested in the likelihood that model projections will be accurate 30 or 50 years into the future it seems that the earlier baseline provides a more appropriate graphic.

“you allot fewer years for the error to grow”

No, that’s just playing games. If you offset to force the error to be zero at 1979, then of course it will grow. That’s just a result of that artifice. The fact is, you have a whole lot of curves that claim to represent climate in the historic period, and you want to add offsets so that they will, as best you can get, be on the same basis for projection into the RCP period. That is, the best fit to the knowledge you have, not the best fit restricted to a five year period.

Steve: you avoided the following: Do you agree with Verheggen’s accusation of “trickery” against Spencer and Christy? If so, on what grounds do you believe that Mears did not also engage in “trickery”? Or do you also believe that Mears engaged in “trickery”?

Nick,

You write, “of course [the error] will grow” if one moves the alignment back to 1979. So you’re conceding that there is a difference in slope?

Nick, do you agree that Mears used essentially the same reference period (1979-84) as the Christy-Spencer reference period(1979-1983) criticized by Verheggen? Do you agree with Verheggen’s accusation of “trickery” against Spencer and Christy? If so, on what grounds do you believe that Mears did not also engage in “trickery”? Or do you also believe that Mears engaged in “trickery”?

No, it’s the result of excessive warming in the models. The error is not random, therefore it accumulates over the length of the projection.

You are asserting an offset error when, in fact, the error is inherent in the models. You may prefer to hide this gap but it exists and is not an artifice.

“Nick, do you agree that Mears used essentially the same reference period (1979-84)”

Yes, and I think the use of a narrow base is subject to the same general objection, that the outcome is likely to depend on the period chosen, and creates an opening for cherry-picking. This does not apply so much to the tropical plot shown, as there doesn’t seem to be a pronounced peak in the observations at this time (1979-84). It didn’t turn out to be a cherry. That may be aided by the use of ensemble for RSS.

It does give the same somewhat misleading impression that both the models and the ensemble have increasing error as you go forward from 1984. That is an artefact of the narrow base.

Nick says “It does give the same somewhat misleading impression that both the models and the ensemble have increasing error as you go forward from 1984. That is an artefact of the narrow base.”

###

That is exactly the case, Nick. The discrepancy increases with time. Yet you say no it does not. You are a wonder.

HarroldW,

“So you’re conceding that there is a difference in slope?”

I’m saying that if you force differences to be zero somewhere, then as you move away, they won’t be zero – ie will increase. Trend differences will exist and will be part of that. Nothing special about that point.

Christy’s new way of using trendlines to fix a comparison is in principle good, and clarifies the issue. If you fix at 1979, you get the best picture of differing trends, but when you come to the RCP period, it makes it hard to see what is happening there. If you want to know about the RCP period, you should fix the trends at the starting point of that. I use a similar method here for comparing spatial maps of present temperature anomaly.

I think Nick has a point. You have to go back to what the models are saying and pick through from there. The actual output of the models is a range of absolute average (1961-90) temps that are from IPCC AR5 WG1 fig 9.8 ~14C +/- 1.5. The models have been spun up and forced to track the instrumental period as I recall.

Using the scenarios to describe what the world might look like in 50 to 100 years time in absolute terms has all the basses covered. The models would be almost impossible to falsify. So we aren’t testing actual model output.

What happens next is that IPCC say we can transform the model output to anomalies from each of their 1961-90 average temp. This forces them all back to zero at that time, and any projection and comparison can really only made against that base. The average of 1961-90 being zero is an essential feature of the claimed accuracy of the projections. It is, using the analogy some have used, the starting line in the IPCC universe. No handicapper in sight.

I’ve got to tell you HAS that you make very little sense.

Nick Stokes: “I’m saying that if you force differences to be zero somewhere, then as you move away, they won’t be zero – ie will increase.”

The only way you can bet on *increasing* (as opposed to changing in either direction) as you move away is if there is a trend difference. That’s simple math. Twice now you’ve said that the difference will increase the further back one sets the baseline. A logical inference from your statements is that you accept that the models run hotter than observations.

mpainter I trust it was only my saying that Nick has a point that caused you to instinctive respond that way, but in case you genuinely didn’t understand:

The model produce absolute temps. Any genuine comparison with actuals should be with those (the system is non-linear with temp so arguing that the slope irons out these problems doesn’t wash).

If you do a test with absolute temps the models project a range so wide they are unfalsifiable. (But equally they provide no useful information about the future for policy makers).